Some learning resources

- I've recently become interested in MLsys, so I collected some materials to start learning it.

- https://fazzie-key.cool/2023/02/21/MLsys/

- https://qiankunli.github.io/2023/12/16/llm_inference.html

- https://dlsyscourse.org

- https://github.com/chenzomi12/DeepLearningSystem/tree/main/04Inference

- https://csdiy.wiki/en/%E6%9C%BA%E5%99%A8%E5%AD%A6%E4%B9%A0%E7%B3%BB%E7%BB%9F/AICS/

- I plan to work through all of the above during the holidays.

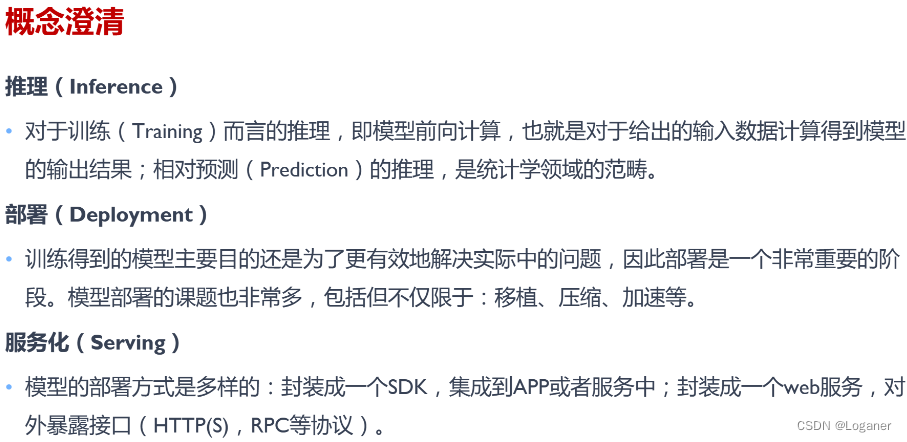

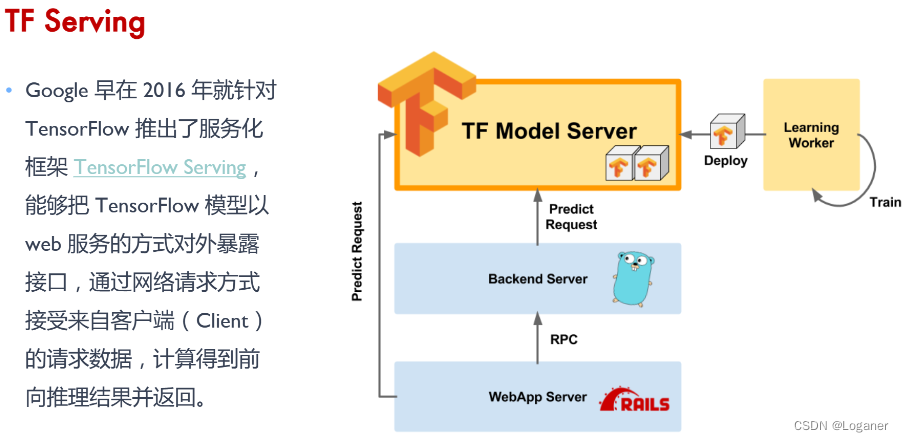

Inference system concepts

https://github.com/chenzomi12/DeepLearningSystem/tree/main/04Inference

Running the Chinese LLaMA-2 (Chinese-LLaMA-Alpaca-2) on a CPU

https://github.com/ymcui/Chinese-LLaMA-Alpaca-2/tree/main

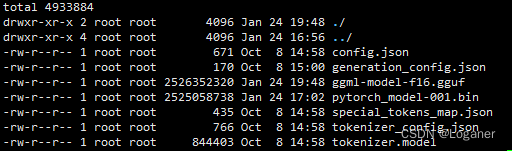

- Download the model

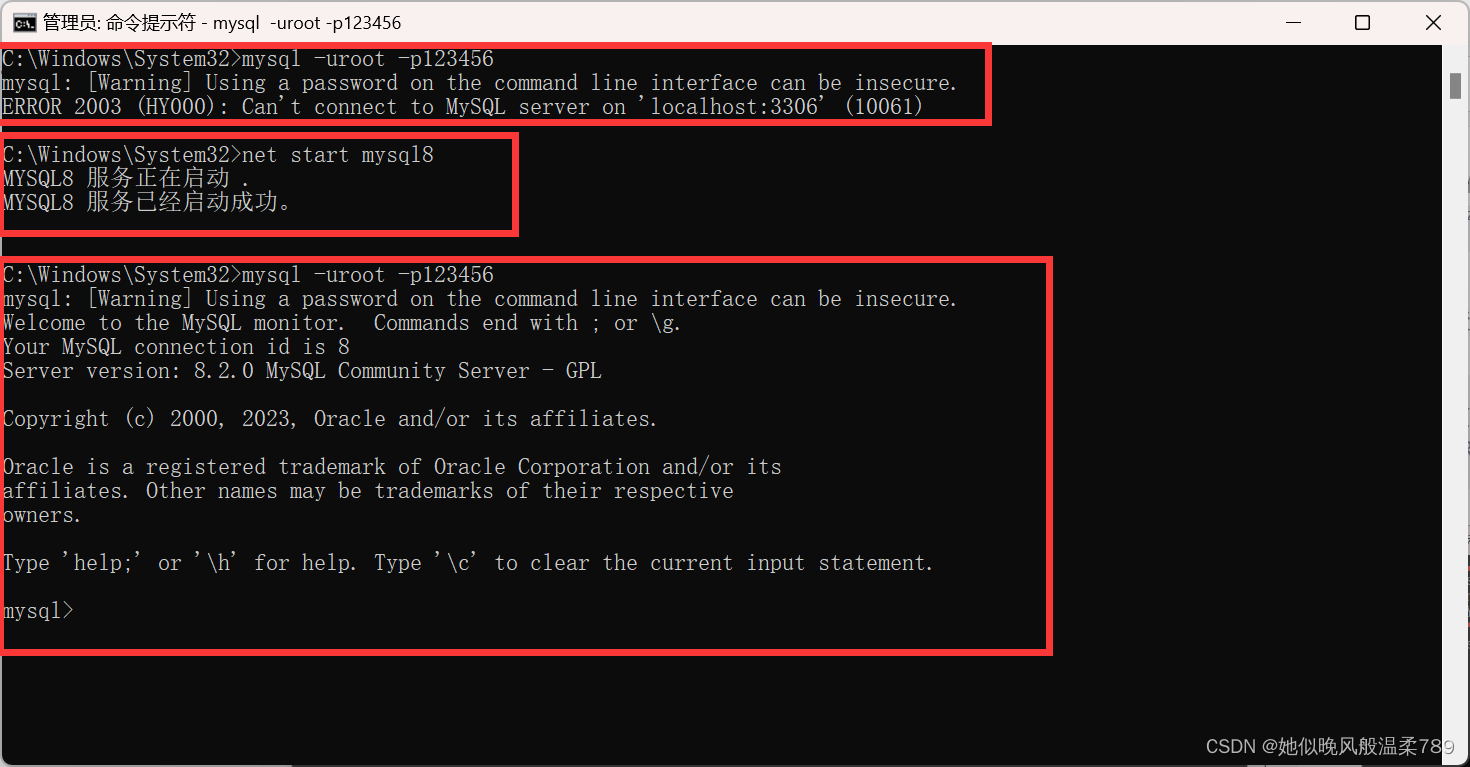

The .gguf file is generated in a later step with llama.cpp, which is also what we use to run inference on the CPU.

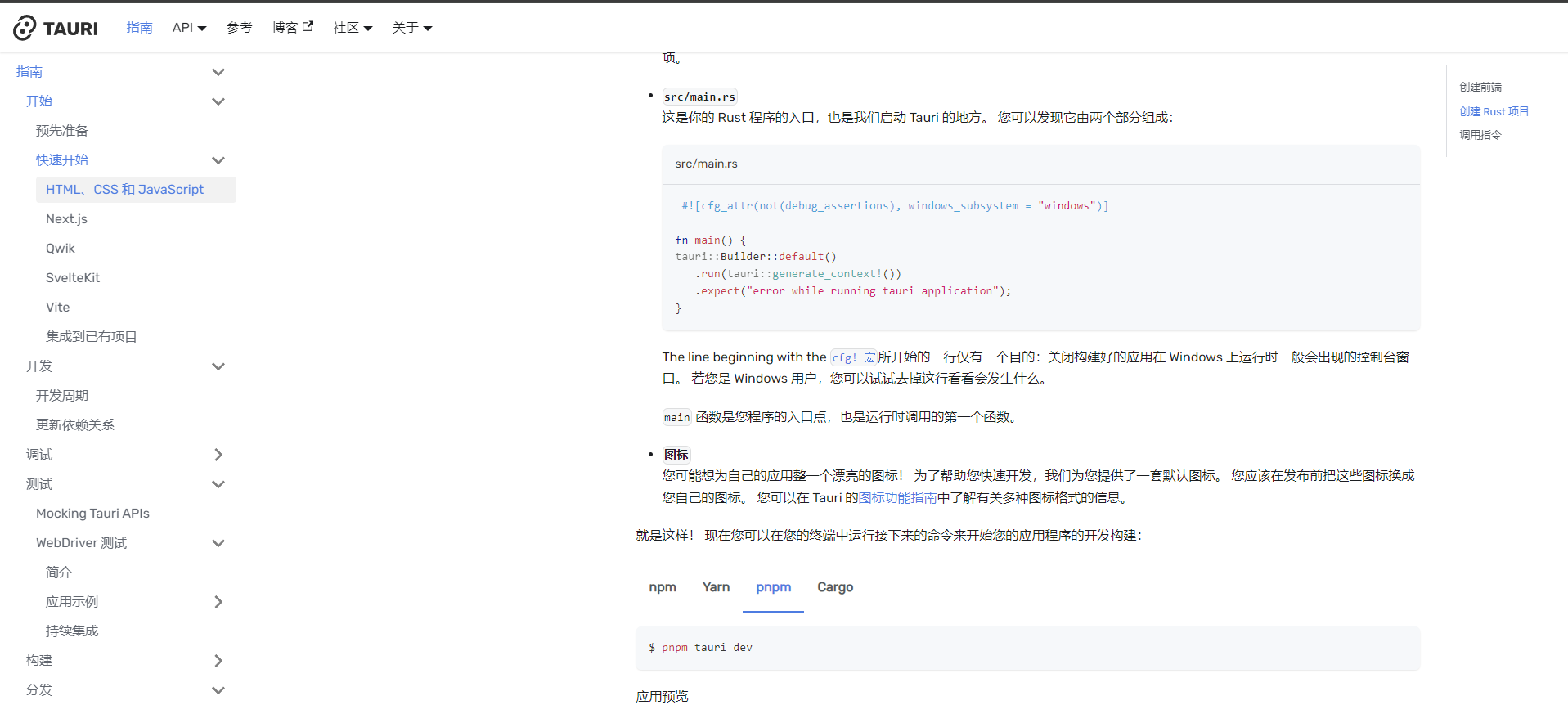
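Once a .gguf file has been produced, its fixed-size header can be read to sanity-check it. The following is a minimal sketch. It assumes GGUF version 2 or later, where the header is the 4-byte magic GGUF, followed by a little-endian uint32 version and then uint64 tensor and metadata key/value counts (version 1 stored those counts as uint32). The function names are hypothetical, and the sketch does not parse the metadata or tensors that follow the header.

```python
import struct

# GGUF header (version >= 2), all little-endian:
#   4-byte magic b"GGUF", uint32 version, uint64 tensor_count, uint64 metadata_kv_count
HEADER_FORMAT = "<4sIQQ"
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)  # 4 + 4 + 8 + 8 = 24 bytes


def parse_gguf_header(data: bytes) -> tuple[int, int, int]:
    """Return (version, tensor_count, metadata_kv_count) from the first bytes of a file."""
    if len(data) < HEADER_SIZE:
        raise ValueError("too short to be a GGUF header")
    magic, version, n_tensors, n_kv = struct.unpack(HEADER_FORMAT, data[:HEADER_SIZE])
    if magic != b"GGUF":
        raise ValueError(f"bad magic {magic!r}, not a GGUF file")
    if version < 2:
        # GGUF v1 stored the two counts as uint32, so this layout would misread them
        raise ValueError(f"GGUF v{version} is not handled by this sketch")
    return version, n_tensors, n_kv


def read_gguf_header(path: str) -> tuple[int, int, int]:
    """Read only the fixed-size header; metadata and tensor data are not parsed."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(HEADER_SIZE))
```

For example, read_gguf_header("/home/llama/alpaca/ggml-model-f16.gguf") should return the format version and the two counts if the conversion succeeded. It raises ValueError if the file is truncated or is not GGUF.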

Official tutorial

Clone the repository locally

On Ubuntu 20:

```bash
git clone https://github.com/ggerganov/llama.cpp
cd llama.cpp
make
```

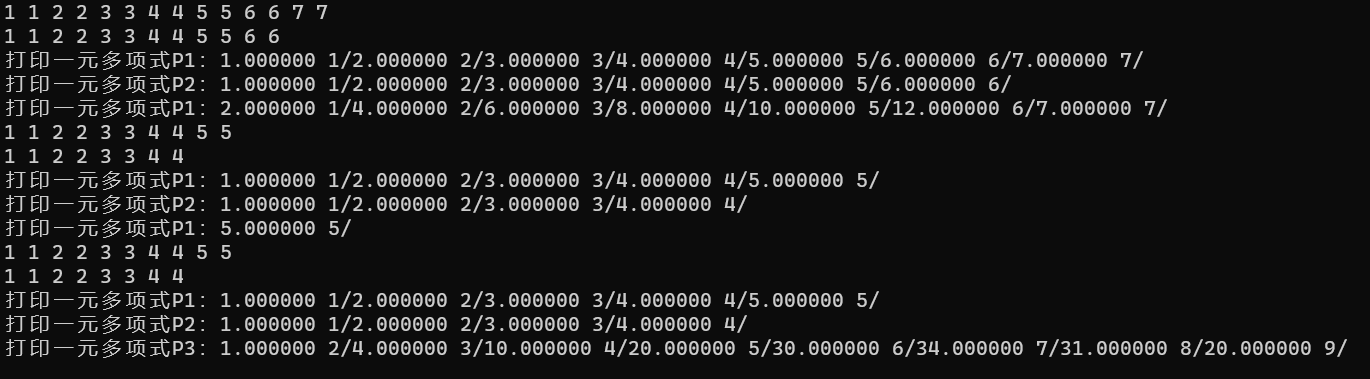

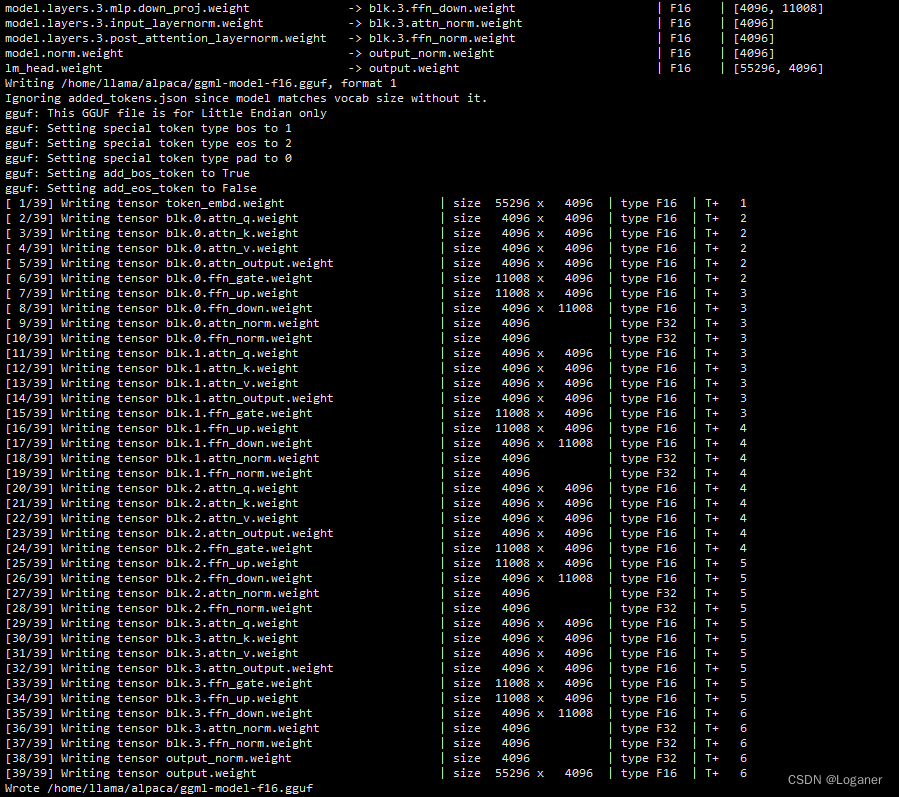

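- Convert the model to GGUF (the file used below, ggml-model-f16.gguf, is the f16 version, i.e. not yet quantized)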

```bash
python3 convert.py /home/llama/alpaca/
```

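Replace the path with your own model directory. There is a small pitfall here: in the load_some_model() function of convert.py, the patterns in the globs list may not match the file names your model actually uses, so they need to be adjusted. I'm not sure why the authors designed it this way.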

```python
# Excerpt from llama.cpp's convert.py; Path, ModelPlus and the helpers
# find_multifile_paths, lazy_load_file and merge_multifile_models are defined there.
def load_some_model(path: Path) -> ModelPlus:
    '''Load a model of any supported format.'''
    # Be extra-friendly and accept either a file or a directory:
    if path.is_dir():
        # Check if it's a set of safetensors files first
        # (these globs are what may need adjusting to match your model's file names)
        globs = ["model-00001-of-*.safetensors", "model.safetensors"]
        files = [file for glob in globs for file in path.glob(glob)]
        if not files:
            # Try the PyTorch patterns too, with lower priority
            globs = ["consolidated.00.pth", "pytorch_model-001*.bin", "*.pt", "pytorch_model.bin"]
            files = [file for glob in globs for file in path.glob(glob)]
        if not files:
            raise Exception(f"Can't find model in directory {path}")
        if len(files) > 1:
            raise Exception(f"Found multiple models in {path}, not sure which to pick: {files}")
        path = files[0]

    paths = find_multifile_paths(path)
    models_plus: list[ModelPlus] = []
    for path in paths:
        print(f"Loading model file {path}")
        models_plus.append(lazy_load_file(path))

    model_plus = merge_multifile_models(models_plus)
    return model_plus
```
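Before editing convert.py, you can test the globs against the file names alone to see which ones actually match a given model directory. Below is a small sketch using Python's fnmatch.fnmatchcase, which is assumed to behave roughly like Path.glob for plain file names inside one directory. The helper names are hypothetical. The file listing is a hypothetical sharded PyTorch checkpoint, not the author's actual directory.

```python
from fnmatch import fnmatchcase

# Patterns copied from load_some_model(), in the same priority order
SAFETENSORS_GLOBS = ["model-00001-of-*.safetensors", "model.safetensors"]
PYTORCH_GLOBS = ["consolidated.00.pth", "pytorch_model-001*.bin", "*.pt", "pytorch_model.bin"]


def files_picked(filenames, globs):
    """Mimic the list comprehension in load_some_model(): every file matched by any glob."""
    return [name for glob in globs for name in filenames if fnmatchcase(name, glob)]


def explain(filenames):
    """Describe what load_some_model() would do with a directory containing these names."""
    files = files_picked(filenames, SAFETENSORS_GLOBS)
    if not files:
        files = files_picked(filenames, PYTORCH_GLOBS)
    if not files:
        return "no match: convert.py would raise \"Can't find model in directory\""
    if len(files) > 1:
        return f"multiple matches: {files}"
    return f"would load {files[0]}"


# Hypothetical listing of a 2-shard PyTorch checkpoint;
# in practice, pass os.listdir() of your model directory instead
listing = ["config.json", "tokenizer.model",
           "pytorch_model-00001-of-00002.bin", "pytorch_model-00002-of-00002.bin"]
print(explain(listing))
```

With this hypothetical listing nothing matches. The pattern pytorch_model-001*.bin expects "001" right after the dash, while the shard is named pytorch_model-00001-... . A pattern such as pytorch_model-00001-of-*.bin would pick the first shard. The original does not say whether this is the exact mismatch the author ran into.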

On success, it looks like this:

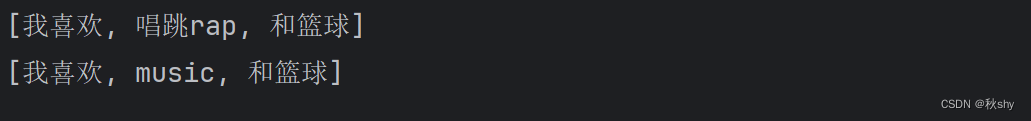

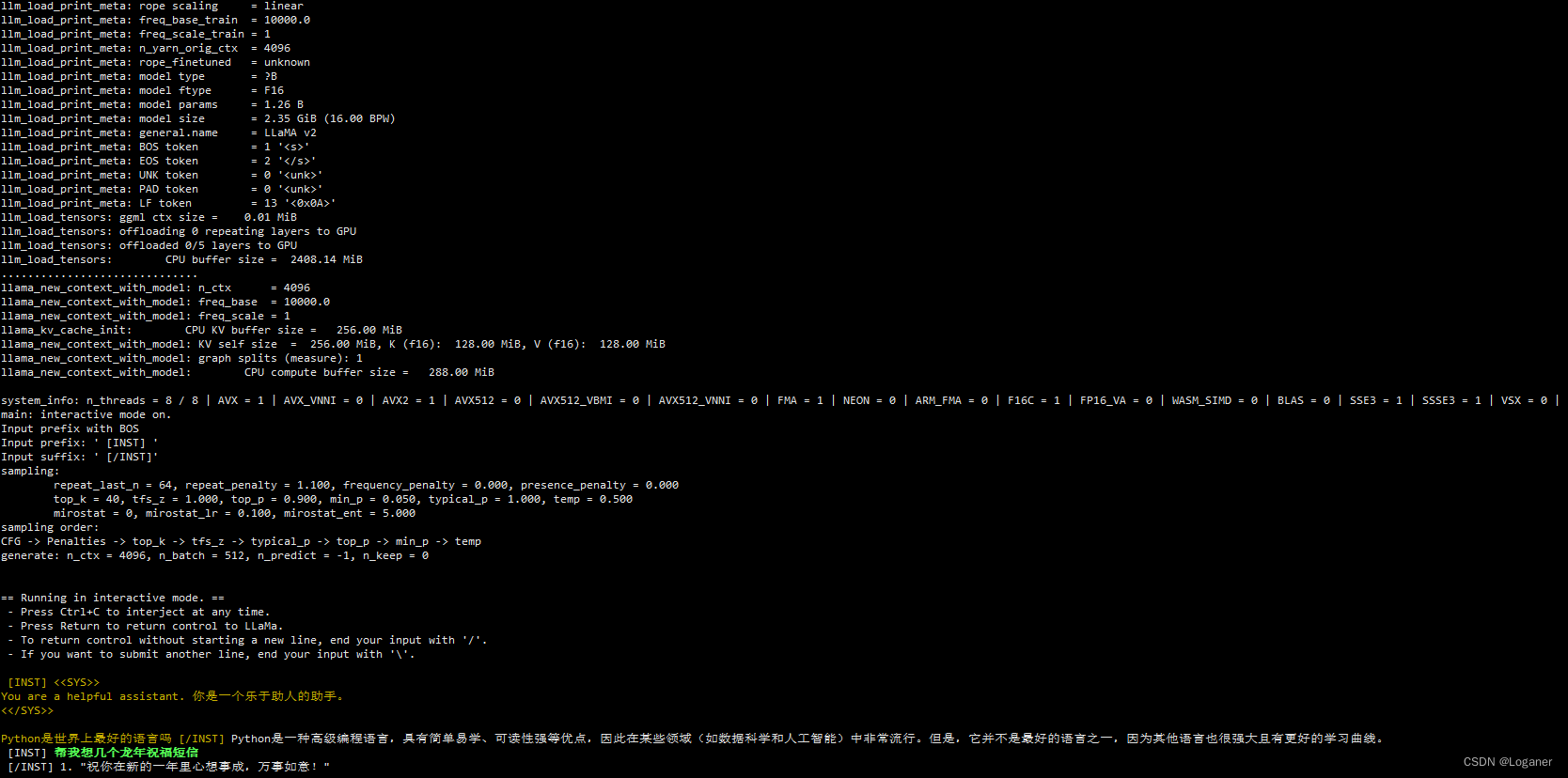
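The model used below is the unquantized f16 file (ggml-model-f16.gguf), so running it on a CPU needs a lot of RAM. Here is a rough back-of-the-envelope estimate under several assumptions:

- the "7B" model has about 7e9 parameters;
- the KV cache uses the LLaMA-2-7B shape (32 layers, hidden size 4096) stored in f16, at the -c 4096 context that chat.sh passes to ./main;
- the 8.5 and 4.5 bits-per-weight figures for llama.cpp's Q8_0 and Q4_0 follow from their block layout of 32 weights plus one f16 scale.

Real files may be somewhat larger because of metadata and tensors kept at higher precision.

```python
# Approximate bits per weight: f16 stores 16 bits; llama.cpp's Q8_0 / Q4_0 pack
# 32 weights per block plus one f16 scale -> (32*8 + 16)/32 and (32*4 + 16)/32
BITS_PER_WEIGHT = {
    "f16": 16.0,
    "q8_0": (32 * 8 + 16) / 32,  # 8.5
    "q4_0": (32 * 4 + 16) / 32,  # 4.5
}


def weights_gb(n_params: float, fmt: str) -> float:
    """Approximate weight storage in GB (1e9 bytes), ignoring metadata."""
    return n_params * BITS_PER_WEIGHT[fmt] / 8 / 1e9


def kv_cache_gib(n_layers: int, n_ctx: int, n_embd: int, bytes_per_elem: int = 2) -> float:
    """K and V caches: 2 tensors x n_layers x n_ctx x n_embd elements (f16 by default), in GiB."""
    return 2 * n_layers * n_ctx * n_embd * bytes_per_elem / 2**30


N_PARAMS = 7e9  # assumption: "7B" taken literally
for fmt in BITS_PER_WEIGHT:
    print(f"{fmt:5s} weights ~ {weights_gb(N_PARAMS, fmt):.1f} GB")

# assumption: LLaMA-2-7B shape (32 layers, hidden size 4096), context length 4096
print(f"KV cache ~ {kv_cache_gib(32, 4096, 4096):.1f} GiB")
```

The estimate shows why quantization matters on a CPU. Going from f16 to a 4-bit format cuts the weight memory to under a third.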

- Load and run the model

Copy scripts/llama-cpp/chat.sh from the Chinese-LLaMA-Alpaca-2 project into the root directory of llama.cpp.

```bash
#!/bin/bash
# temporary script to chat with Chinese Alpaca-2 model
# usage: ./chat.sh alpaca2-ggml-model-path your-first-instruction

# The Chinese half of each system prompt restates "You are a helpful assistant.";
# the longer variant adds "Please give professional, logical, truthful, valuable and detailed replies."
SYSTEM_PROMPT='You are a helpful assistant. 你是一个乐于助人的助手。'
# SYSTEM_PROMPT='You are a helpful assistant. 你是一个乐于助人的助手。请你提供专业、有逻辑、内容真实、有价值的详细回复。' # Try this one, if you prefer longer response.
MODEL_PATH=$1
FIRST_INSTRUCTION=$2

./main -m "$MODEL_PATH" \
--color -i -c 4096 -t 8 --temp 0.5 --top_k 40 --top_p 0.9 --repeat_penalty 1.1 \
--in-prefix-bos --in-prefix ' [INST] ' --in-suffix ' [/INST]' -p \
"[INST] <<SYS>>
$SYSTEM_PROMPT
<</SYS>>

$FIRST_INSTRUCTION [/INST]"
```
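The sampling flags in this script (--temp 0.5 --top_k 40 --top_p 0.9 --repeat_penalty 1.1) control how each next token is drawn from the model's logits. Below is a simplified pure-Python sketch of that pipeline, roughly following the order llama.cpp's main example used at the time: repeat penalty, then top-k, then top-p, then temperature. It omits llama.cpp's other samplers and how many recent tokens the penalty looks at. sample_next_token is a hypothetical name, not a llama.cpp function, and temperature is assumed to be greater than 0.

```python
import math
import random


def sample_next_token(logits, recent_tokens, temp=0.5, top_k=40, top_p=0.9,
                      repeat_penalty=1.1, rng=random):
    """Pick a token id from raw logits; defaults mirror the chat.sh flags (temp > 0 assumed)."""
    logits = list(logits)

    # 1. Repeat penalty: make recently generated tokens less likely
    #    (positive logits are divided by the penalty, negative ones multiplied).
    for tok in set(recent_tokens):
        if logits[tok] > 0:
            logits[tok] /= repeat_penalty
        else:
            logits[tok] *= repeat_penalty

    # 2. Top-k: keep only the k highest-scoring token ids, best first.
    candidates = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]

    # 3. Top-p: keep the smallest prefix whose softmax probability mass reaches top_p.
    m = logits[candidates[0]]
    weights = [math.exp(logits[i] - m) for i in candidates]
    total = sum(weights)
    kept, mass = [], 0.0
    for tok, w in zip(candidates, weights):
        kept.append(tok)
        mass += w / total
        if mass >= top_p:
            break

    # 4. Temperature: rescale the surviving logits, then draw from their softmax.
    m = logits[kept[0]]
    probs = [math.exp((logits[i] - m) / temp) for i in kept]
    return rng.choices(kept, weights=probs, k=1)[0]
```

A lower --temp sharpens the final distribution toward the best remaining token. Before that, top_k and top_p shrink the candidate set, and repeat_penalty discourages the model from looping on recent tokens.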

The shell script takes two arguments: the path to the .gguf file and the first instruction (the question to ask).

```bash
chmod +x chat.sh
# the question means "Is Python the best language in the world?"
./chat.sh /home/llama/alpaca/ggml-model-f16.gguf 'Python是世界上最好的语言吗'
```
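For a given run, it can help to see the exact first prompt the model receives. chat.sh builds it in the Llama-2 chat format, with the system prompt wrapped in <<SYS>> tags inside the first [INST] block. The sketch below rebuilds that -p string in Python. first_turn_prompt is a hypothetical helper. It does not model the BOS token added by --in-prefix-bos, or the ' [INST] ' / ' [/INST]' wrapping that ./main applies to later turns.

```python
# Same default as SYSTEM_PROMPT in chat.sh
DEFAULT_SYSTEM_PROMPT = "You are a helpful assistant. 你是一个乐于助人的助手。"


def first_turn_prompt(first_instruction: str, system_prompt: str = DEFAULT_SYSTEM_PROMPT) -> str:
    """Rebuild the -p string that chat.sh passes to ./main (Llama-2 chat format)."""
    return (
        "[INST] <<SYS>>\n"
        f"{system_prompt}\n"
        "<</SYS>>\n"
        "\n"
        f"{first_instruction} [/INST]"
    )
```

For example, first_turn_prompt('Python是世界上最好的语言吗') gives the same text that the run above passes with -p.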

-------------------- To be continued --------------------------