⭐️ Preface

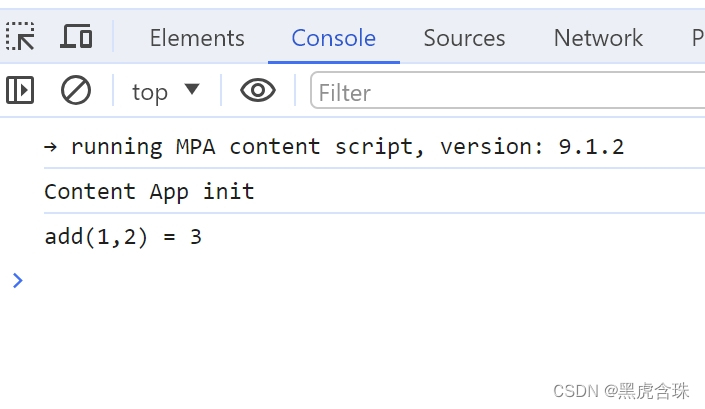

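I asked ChatGPT to write a style transfer example. Please note: the code was run directly, without any changes at all, and this is the output.

Um... this isn't a style-transferred result image.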

⭐️ Basic Principles of Style Transfer

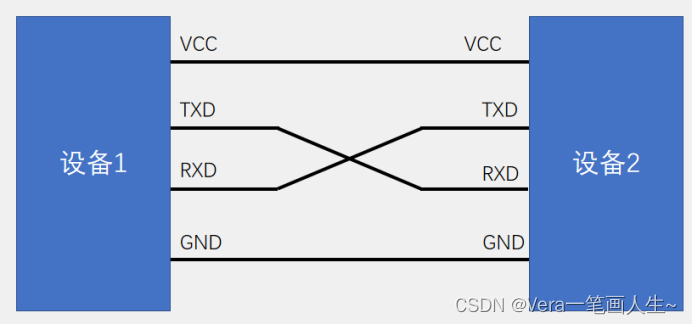

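Style transfer is an image processing technique from computer vision. Its goal is to combine the content of one image with the artistic style of another to create a new image. It relies on deep learning: a convolutional neural network (CNN) extracts features, and loss functions measure how well the generated image matches the desired content and the desired style.

The principle is examined in more detail below: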

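1. Content representation:

Content image: the source of the style transfer; it supplies the content information.

Content representation: a pretrained convolutional neural network is used, typically with one chosen layer, to extract a feature representation of the content image.

2. Style representation:

Style image: the target of the style transfer; it supplies the desired artistic style.

Style representation: the same convolutional neural network is used, but feature representations are taken from several layers in order to capture the style at different scales and levels of abstraction.

5. Optimization process:

The pixel values of the generated image are adjusted to minimize the overall loss function, which yields the final style-transferred image. This is usually done with an optimization algorithm such as gradient descent.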
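The "overall loss function" mentioned above is not spelled out in the text, so here is a sketch of one common way to write it. It is chosen to match the helper functions in the code listing that follows (plain mean-squared errors and an unnormalized Gram matrix). The notation is introduced here for illustration only: $x$ is the generated image, $c$ the content image, $s$ the style image, $F^l(\cdot)$ the feature map of layer $l$ reshaped to $C_l \times H_l W_l$, $N_l = C_l H_l W_l$, and $\mathcal{C}$, $\mathcal{S}$ the sets of content and style layers.

$$
\begin{aligned}
\mathcal{L}_{\text{content}} &= \sum_{l \in \mathcal{C}} \frac{1}{N_l} \left\lVert F^l(x) - F^l(c) \right\rVert_2^2, \\
G^l(x) &= F^l(x)\, F^l(x)^{\top}, \\
\mathcal{L}_{\text{style}} &= \sum_{l \in \mathcal{S}} \frac{1}{C_l^2} \left\lVert G^l(x) - G^l(s) \right\rVert_F^2, \\
\mathcal{L}_{\text{tv}} &= \sum_{i,j} \left| x_{i,j+1} - x_{i,j} \right| + \sum_{i,j} \left| x_{i+1,j} - x_{i,j} \right|, \\
\mathcal{L}_{\text{total}} &= \alpha\, \mathcal{L}_{\text{content}} + \beta\, \mathcal{L}_{\text{style}} + \gamma\, \mathcal{L}_{\text{tv}}.
\end{aligned}
$$

The total variation sums also run over the color channels. In the listing, $\alpha$, $\beta$ and $\gamma$ correspond to `content_weight`, `style_weight` and `tv_weight`, with defaults of 1, 1e6 and 1e-6.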

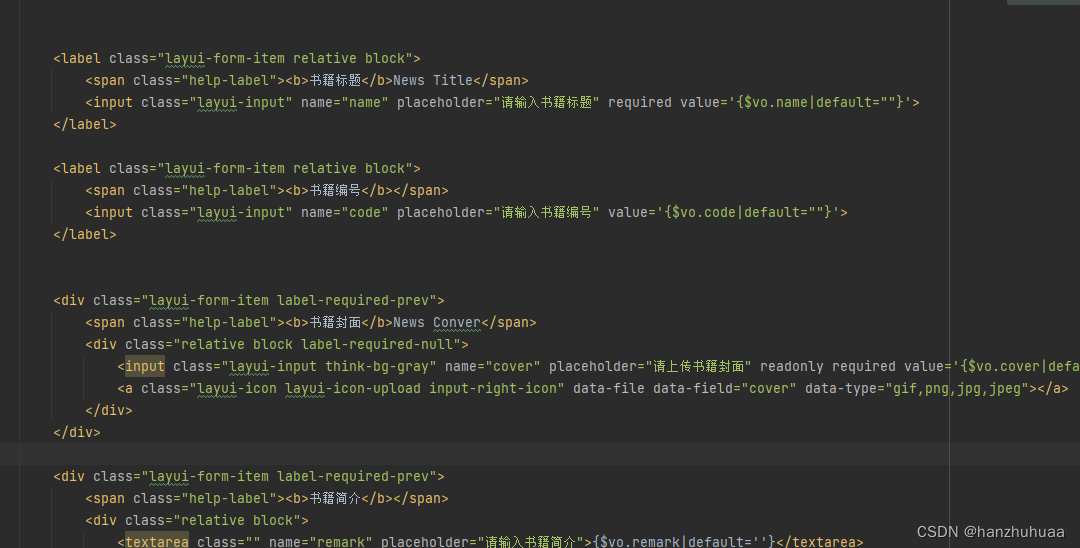

The code is as follows:

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import models, transforms
from PIL import Image
import numpy as np


# Load the pretrained VGG model
def load_vgg_model():
    # Only the convolutional feature extractor is needed; its weights stay frozen.
    # (Recent torchvision releases deprecate `pretrained=True` in favor of `weights=`.)
    vgg = models.vgg19(pretrained=True).features
    for param in vgg.parameters():
        param.requires_grad_(False)
    return vgg


# Image preprocessing
def load_image(image_path, transform=None, max_size=None, shape=None):
    image = Image.open(image_path).convert('RGB')
    if max_size:
        # Scale the image so that its longer side equals max_size
        scale = max_size / max(image.size)
        size = tuple(int(x * scale) for x in image.size)
        image = image.resize(size)
    if shape:
        # PIL's resize expects (width, height)
        image = image.resize(shape)
    if transform:
        image = transform(image).unsqueeze(0)  # add a batch dimension
    return image


# Image postprocessing
def convert_image(tensor):
    image = tensor.to("cpu").clone().detach()
    image = image.numpy().squeeze()
    image = image.transpose(1, 2, 0)  # (C, H, W) -> (H, W, C)
    # Undo the ImageNet normalization applied by `transform`
    image = image * np.array((0.229, 0.224, 0.225)) + np.array((0.485, 0.456, 0.406))
    image = image.clip(0, 1)
    return image


# Define the style transfer network
class StyleTransferNet(nn.Module):
    def __init__(self, content_layers, style_layers):
        super(StyleTransferNet, self).__init__()
        self.vgg = load_vgg_model()
        self.content_layers = content_layers
        self.style_layers = style_layers

    def forward(self, x):
        # Run the input through VGG and collect activations at the chosen layer indices
        content_outputs = []
        style_outputs = []
        for i, layer in enumerate(self.vgg):
            x = layer(x)
            if i in self.content_layers:
                content_outputs.append(x)
            if i in self.style_layers:
                style_outputs.append(x)
        return content_outputs, style_outputs


# Loss functions
def content_loss(target, generated):
    return torch.mean((target - generated)**2)


def gram_matrix(tensor):
    # Channel-by-channel correlations; assumes a batch size of 1 and is not normalized
    _, d, h, w = tensor.size()
    tensor = tensor.view(d, h * w)
    gram = torch.mm(tensor, tensor.t())
    return gram


def style_loss(target, generated):
    target_gram = gram_matrix(target)
    generated_gram = gram_matrix(generated)
    return torch.mean((target_gram - generated_gram)**2)


def total_variation_loss(image):
    # Sum of absolute differences between horizontally and vertically adjacent pixels
    return torch.sum(torch.abs(image[:, :, :, :-1] - image[:, :, :, 1:])) + \
        torch.sum(torch.abs(image[:, :, :-1, :] - image[:, :, 1:, :]))


# Main style transfer function
def style_transfer(content_path, style_path, output_path, num_steps=10000, content_weight=1, style_weight=1e6, tv_weight=1e-6):
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    content_image = load_image(content_path, transform, max_size=400)
    # Note: `shape` is passed as [height, width] here, while PIL's resize reads it as (width, height)
    style_image = load_image(style_path, transform, shape=[content_image.size(2), content_image.size(3)])
    content_image = content_image.to(device)
    style_image = style_image.to(device)
    model = StyleTransferNet(content_layers, style_layers).to(device).eval()

    # Optimizer
    # Note: both images are handed to the optimizer, so the style image is updated as well
    optimizer = optim.Adam([content_image.requires_grad_(), style_image.requires_grad_()], lr=0.01)

    for step in range(num_steps):
        optimizer.zero_grad()
        content_outputs, style_outputs = model(content_image)

        # Note: both sides are features of the same content_image, so this term is always zero
        content_loss_value = 0
        for target, generated in zip(content_outputs, model(content_image)[0]):
            content_loss_value += content_loss(target, generated)

        # Style features of the evolving content image versus those of the style image
        style_loss_value = 0
        for target, generated in zip(style_outputs, model(style_image)[1]):
            style_loss_value += style_loss(target, generated)

        tv_loss_value = total_variation_loss(content_image)
        total_loss = content_weight * content_loss_value + style_weight * style_loss_value + tv_weight * tv_loss_value
        total_loss.backward()
        optimizer.step()

        if step % 50 == 0 or step == num_steps - 1:
            print(f"Step {step}/{num_steps}, Total Loss: {total_loss.item()}")

    # Save the generated image (the optimized content image is the result)
    output_image = convert_image(content_image)
    Image.fromarray((output_image * 255).astype(np.uint8)).save(output_path)


# Main program
content_image_path = "./content.jpg"
style_image_path = "./style.jpg"
output_image_path = "./image.jpg"

transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),
])

# Indices into vgg19().features
content_layers = [21]
style_layers = [0, 5, 10, 19, 28]

style_transfer(content_image_path, style_image_path, output_image_path)
```
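To make the role of `gram_matrix` in the listing above a little more concrete, here is a small dependency-free sketch that computes the same product of a flattened feature map with its transpose, using plain Python lists instead of tensors. The helper names `gram` and `shuffle_positions` and the toy numbers are made up for illustration; they are not part of the original code. The sketch shows two properties. Shuffling the spatial positions leaves the Gram matrix unchanged, which is why it describes texture rather than layout. Without normalization, the entries grow with the number of positions.

```python
import random


def gram(features):
    """Gram matrix of a feature map given as C rows of H*W values each."""
    return [[sum(a * b for a, b in zip(row_i, row_j)) for row_j in features]
            for row_i in features]


def shuffle_positions(features, seed=0):
    """Apply the same random permutation of spatial positions to every channel."""
    order = list(range(len(features[0])))
    random.Random(seed).shuffle(order)
    return [[row[k] for k in order] for row in features]


# Two channels, four spatial positions (a flattened 2x2 feature map)
features = [[1.0, 2.0, 0.0, 1.0],
            [0.0, 1.0, 3.0, 1.0]]

g = gram(features)
g_shuffled = gram(shuffle_positions(features))
print(g)           # [[6.0, 3.0], [3.0, 11.0]]
print(g_shuffled)  # same values: the spatial layout does not matter

# Tiling the map to twice as many positions doubles every entry,
# unless the matrix is divided by the number of positions.
tiled = [row + row for row in features]
g_tiled = gram(tiled)
n, n_tiled = len(features[0]), len(tiled[0])
g_norm = [[v / n for v in row] for row in g]
g_tiled_norm = [[v / n_tiled for v in row] for row in g_tiled]
print(g_tiled)     # [[12.0, 6.0], [6.0, 22.0]]
```

Because the listing's `gram_matrix` is unnormalized, the size of the style term depends on the image size. This is one plausible reason why `style_weight` may need retuning when `max_size` is changed.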

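The input images are these two:

The output image looks like this (after 10,000 iterations):

The style does come through. Adjusting some of the parameters will give different results.

The core idea of style transfer is to use a deep network to model the content and the style of an image mathematically, and then to generate an image with the target style by optimizing a loss function. This is what makes transferring an artistic style possible, and it opens up new options for image processing.

My knowledge is limited, so if anything here is wrong, corrections in the comments are welcome!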
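Beyond the weights, one structural detail in the listing also affects the result: the content features and their "targets" both come from `content_image`, so the content term stays at zero, and the style image is handed to the optimizer as well. A more conventional arrangement, sketched here under assumptions, keeps both input images fixed, computes the targets once, and optimizes a separate copy. The names `run_style_transfer` and `extract` are hypothetical. `extract` stands for any callable that returns `(content_features, style_features)`, as `StyleTransferNet` does. To keep the sketch self-contained, a tiny random convolutional stack replaces VGG, so it demonstrates the mechanics only and will not produce a meaningful picture. The total variation term is left out for brevity.

```python
import torch
import torch.nn as nn


def gram_matrix(feat):
    # Same unnormalized Gram matrix as in the listing; assumes a batch size of 1
    _, c, h, w = feat.size()
    flat = feat.view(c, h * w)
    return flat @ flat.t()


def run_style_transfer(extract, content, style, num_steps=20,
                       content_weight=1.0, style_weight=1e6, lr=0.01):
    """Optimize a copy of `content`; `content` and `style` themselves stay fixed."""
    # Targets are computed once, without tracking gradients.
    with torch.no_grad():
        content_targets, _ = extract(content)
        _, style_feats = extract(style)
        style_targets = [gram_matrix(f) for f in style_feats]

    generated = content.clone().requires_grad_(True)
    optimizer = torch.optim.Adam([generated], lr=lr)

    for _ in range(num_steps):
        optimizer.zero_grad()
        content_feats, style_feats = extract(generated)
        c_loss = sum(torch.mean((f - t) ** 2)
                     for f, t in zip(content_feats, content_targets))
        s_loss = sum(torch.mean((gram_matrix(f) - t) ** 2)
                     for f, t in zip(style_feats, style_targets))
        loss = content_weight * c_loss + style_weight * s_loss
        loss.backward()
        optimizer.step()
    return generated.detach()


# Stand-in feature extractor (an assumption for this sketch, not VGG)
torch.manual_seed(0)
layers = nn.Sequential(nn.Conv2d(3, 4, 3, padding=1), nn.ReLU(),
                       nn.Conv2d(4, 4, 3, padding=1), nn.ReLU())
for p in layers.parameters():
    p.requires_grad_(False)


def extract(x):
    feats = []
    for layer in layers:
        x = layer(x)
        feats.append(x)
    # Content from the last activation, style from both ReLU outputs
    return [feats[3]], [feats[1], feats[3]]


content = torch.rand(1, 3, 16, 16)
style = torch.rand(1, 3, 16, 16)
content_before, style_before = content.clone(), style.clone()
result = run_style_transfer(extract, content, style)
print(result.shape)
```

With the real model, `extract` could simply be the `StyleTransferNet` instance from the listing, and `content` and `style` the tensors returned by `load_image`.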
