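1. Architecture

1.1 Overview

The MGR (single-primary) + VIP architecture runs MySQL Group Replication (MGR) in single-primary mode and adds a virtual IP (VIP) that follows the current primary, to improve availability.

MGR keeps the members' data consistent through its group communication layer and elects a new primary automatically when the current one fails; the VIP gives applications one fixed address, so they keep reaching whichever node is primary after a failover.

Together they provide a highly available, high-performance and scalable database solution.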

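Each MGR instance must run on its own server.

All nodes in a replication group must use the same server port and the same internal (group communication) port.

InnoDB is the only supported storage engine.

The servers must support IPv4 networking.

This deployment uses the READ COMMITTED isolation level. (Group Replication itself supports REPEATABLE READ; SERIALIZABLE is the level that is not supported in multi-primary mode.)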
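A three-node group is used because MGR only keeps accepting transactions while a majority of its members can still communicate. The arithmetic behind that choice, as a small Python sketch; the function names are illustrative and are not part of MySQL or HAIPMGR:

```python
def has_quorum(reachable: int, group_size: int) -> bool:
    """True if `reachable` members form a strict majority of `group_size`."""
    return reachable > group_size // 2


def max_tolerated_failures(group_size: int) -> int:
    """Largest number of members that can fail while a majority remains."""
    return (group_size - 1) // 2


# Print the fault tolerance for a few group sizes
for n in (1, 2, 3, 4, 5):
    print(n, "members -> tolerates", max_tolerated_failures(n), "failure(s)")
```

By this rule a three-node group survives one node failure, a fourth node adds no extra tolerance, and five nodes are needed to survive two failures.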

1.2 Environment

| Architecture | OS | IP | VIP | Hostname | Software | Port | Group communication port | Disk | Memory | CPU |
|---|---|---|---|---|---|---|---|---|---|---|
| MGR | RHEL 7.9 | 192.168.111.30 | 192.168.111.33 | mgrserver01 | mysql-8.0.35, python-3.6.8 (with the py_modes modules), HAIPMGR-master (VIP service) | 3307 | 13307 | 50G | 4G | 8C |
| – | – | 192.168.111.31 | 192.168.111.33 | mgrserver02 | mysql-8.0.35, python-3.6.8 (with the py_modes modules), HAIPMGR-master (VIP service) | 3307 | 13307 | 50G | 4G | 8C |
| – | – | 192.168.111.32 | 192.168.111.33 | mgrserver03 | mysql-8.0.35, python-3.6.8 (with the py_modes modules), HAIPMGR-master (VIP service) | 3307 | 13307 | 50G | 4G | 8C |

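1.3 Accounts

| Username | Purpose |
|---|---|
| root@localhost | Local administrator account |
| admin | Remote administrator account |
| vip_monitor | Monitoring account for the VIP service |
| repl | Data synchronization between MGR nodes |
| backup | Data backups |
| i_dbm | Database management platform |
| sess_monitor | sesswait monitoring |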

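Note: these resources are for testing only; size them according to your workload.

2. Environment preparation

Note: perform these steps on all three nodes.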

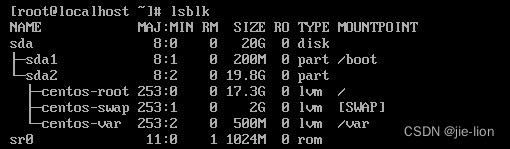

2.1 Partition the disk for /data

[root@mgrserver01 ~]# fdisk /dev/sdb

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0x1549bfc0.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1): 1

First sector (2048-419430399, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-419430399, default 419430399):

Using default value 419430399

Partition 1 of type Linux and of size 200 GiB is set


Command (m for help): t

Selected partition 1

Hex code (type L to list all codes): 8e

Changed type of partition 'Linux' to 'Linux LVM'

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

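Re-read the partition table

# partprobe /dev/sdb

LVM operations

Create the physical volume (PV)

# pvcreate /dev/sdb1

Create the volume group (VG)

# vgcreate vg_data /dev/sdb1

Create the logical volume (LV) using all free space in the VG

# lvcreate -l 100%FREE -n lv_data vg_data

Create the XFS filesystem

# mkfs.xfs /dev/mapper/vg_data-lv_data

Create the mount point and mount the filesystem

# mkdir -p /data

# mount /dev/mapper/vg_data-lv_data /data

Mount it automatically at boot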

# vi /etc/fstab

/dev/mapper/vg_data-lv_data /data xfs defaults 0 0

2.2 Create the database directories under /data

mkdir -p /data/soft

mkdir -p /data/mysql8.0.35/3307

mkdir -p /data/mysql8.0.35/install

mkdir -p /data/mysql8.0.35/tools

mkdir -p /data/mysql8.0.35/tools/HAIPMGR

cd /data/mysql8.0.35/3307

mkdir {data,binlog,logs,conf,relaylog,tmp}

2.3 Download the software packages

## MySQL package

[root@mgrserver01 soft]# cd /data/soft

[root@mgrserver01 soft]# wget https://downloads.mysql.com/archives/get/p/23/file/mysql-8.0.35-linux-glibc2.12-x86_64.tar.xz

--2024-01-25 16:59:42-- https://downloads.mysql.com/archives/get/p/23/file/mysql-8.0.35-linux-glibc2.12-x86_64.tar.xz

Resolving downloads.mysql.com (downloads.mysql.com)... 23.64.178.143, 2600:140b:2:5ad::2e31

Connecting to downloads.mysql.com (downloads.mysql.com)|23.64.178.143|:443... connected.

HTTP request sent, awaiting response... 302 Moved Temporarily

Location: https://cdn.mysql.com/archives/mysql-8.0/mysql-8.0.35-linux-glibc2.12-x86_64.tar.xz [following]

--2024-01-25 16:59:43-- https://cdn.mysql.com/archives/mysql-8.0/mysql-8.0.35-linux-glibc2.12-x86_64.tar.xz

Resolving cdn.mysql.com (cdn.mysql.com)... 96.7.189.131, 2600:140b:2:593::1d68, 2600:140b:2:58f::1d68

Connecting to cdn.mysql.com (cdn.mysql.com)|96.7.189.131|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 617552732 (589M) [text/plain]

Saving to: ‘mysql-8.0.35-linux-glibc2.12-x86_64.tar.xz’

100%[==================================================>] 617,552,732 4.28MB/s in 2m 17s

2024-01-25 17:02:02 (4.30 MB/s) - ‘mysql-8.0.35-linux-glibc2.12-x86_64.tar.xz’ saved [617552732/617552732]

## Python-3.6.8.tar.xz

https://www.python.org/downloads/release/python-368/

##HAIPMGR-master

https://github.com/gaopengcarl/HAIPMGR

2.4 Configure /etc/security/limits.conf, /etc/sysctl.conf and /etc/hosts; disable SELinux and the firewall

[root@mgrserver01 ~]# cat /etc/security/limits.conf

...

mysql soft nproc 16384

mysql hard nproc 16384

mysql soft nofile 65536

mysql hard nofile 65536

mysql soft stack 1024

mysql hard stack 1024

[root@mgrserver01 ~]# cat /etc/sysctl.conf

....

kernel.sysrq = 1

#basic setting

#net.ipv6.conf.all.disable_ipv6 = 1

#net.ipv6.conf.default.disable_ipv6 = 1

kernel.pid_max = 524288

fs.file-max = 6815744

fs.aio-max-nr = 1048576

kernel.sem = 500 256000 250 8192

net.ipv4.ip_local_port_range = 9000 65000

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_tw_reuse = 1

#net.ipv4.tcp_tw_recycle = 1

net.ipv4.tcp_fin_timeout = 30

net.ipv4.tcp_keepalive_time = 1800

net.ipv4.tcp_retries2 = 5

net.core.rmem_default = 262144

net.core.rmem_max = 16777216

net.core.wmem_default = 262144

net.core.wmem_max = 16777216

net.ipv4.tcp_rmem = 4096 87380 16777216

net.ipv4.tcp_wmem = 4096 65536 16777216

net.ipv4.tcp_max_syn_backlog = 8192

net.core.somaxconn = 4096

net.core.netdev_max_backlog = 3000

net.ipv6.conf.eth0.disable_ipv6 = 1

vm.swappiness = 1
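When a key appears more than once in /etc/sysctl.conf, `sysctl -p` applies the lines in order, so the last value silently wins. A hypothetical Python helper (not a system tool) that lists keys set more than once, so such conflicts can be cleaned up before loading the file with `sysctl -p`:

```python
from collections import defaultdict


def duplicate_sysctl_keys(text: str) -> dict:
    """Map each key that is set more than once to its values, in file order."""
    seen = defaultdict(list)
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comments (sysctl.conf accepts '#' and ';')
        if not line or line.startswith(("#", ";")) or "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        seen[key].append(value)
    return {k: v for k, v in seen.items() if len(v) > 1}


sample = """
vm.swappiness = 0
net.ipv4.tcp_syncookies = 1
vm.swappiness = 1
"""
print(duplicate_sysctl_keys(sample))  # {'vm.swappiness': ['0', '1']}
```

To check the real file, pass it the content of /etc/sysctl.conf, e.g. `duplicate_sysctl_keys(open("/etc/sysctl.conf").read())`.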

[root@mgrserver01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.111.30 mgrserver01

192.168.111.31 mgrserver02

192.168.111.32 mgrserver03
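Every node must resolve the three hostnames to the addresses listed in section 1.2, and a copy-paste slip (two hostnames on the same IP) is easy to miss. A hypothetical Python check that compares hosts-file text against the expected mapping; it assumes, as glibc's files lookup does, that the first matching line wins:

```python
EXPECTED = {
    "mgrserver01": "192.168.111.30",
    "mgrserver02": "192.168.111.31",
    "mgrserver03": "192.168.111.32",
}


def hosts_mismatches(hosts_text: str, expected: dict) -> dict:
    """Return {hostname: (found_ip, expected_ip)} for wrong or missing entries."""
    found = {}
    for raw in hosts_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            found.setdefault(name, ip)  # keep the first match only
    return {
        name: (found.get(name), ip)
        for name, ip in expected.items()
        if found.get(name) != ip
    }


hosts = """
192.168.111.30 mgrserver01
192.168.111.31 mgrserver02
192.168.111.31 mgrserver03
"""
print(hosts_mismatches(hosts, EXPECTED))
# {'mgrserver03': ('192.168.111.31', '192.168.111.32')}
```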

getenforce

setenforce 0

sed -i s/SELINUX=enforcing/SELINUX=disabled/ /etc/selinux/config

systemctl stop firewalld

systemctl disable firewalld

2.5 Create the mysql user

groupadd mysql

useradd -g mysql -d /home/mysql mysql

echo "777dba_test" | passwd --stdin mysql

chown -R mysql:mysql /data

chmod -R 775 /data

3. Install the MySQL database

Note: install on all three nodes.

3.1 Install dependencies

## MySQL dependencies

[root@mgrserver01 soft]# yum -y install make cmake libaio numactl.x86_64

## Python build dependencies

[root@mgrserver01 soft]# yum -y install gcc openssl-devel bzip2-devel expat-devel gdbm-devel readline-devel sqlite-devel zlib*

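3.2 Install jemalloc and Python 3.6.8

In day-to-day operations we found that mysqld in a test environment occasionally used a very large amount of memory: the memory used by mysqld, as seen by the operating system, was several times the configured innodb_buffer_pool_size.

When this happened, mysqld had only about 500 connections, most of them in the Sleep state. In other words, the system had allocated far more memory to mysqld than it actually needed; the memory was probably fragmented, and freed memory was not returned to the operating system in time.

We therefore switched mysqld's memory allocator to jemalloc to make memory use more efficient.

jemalloc is a modern memory allocator written by Jason Evans and first introduced in FreeBSD.

It is a general-purpose malloc implementation that focuses on reducing fragmentation and on allocation performance under high concurrency, and is designed as a replacement for the system malloc.

Redis also uses jemalloc as its default memory allocator on Linux.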

[root@mgrserver01]# cd /data/soft

[root@mgrserver01 soft]# wget -O jemalloc-5.3.0.tar.gz https://github.com/jemalloc/jemalloc/archive/refs/tags/5.3.0.tar.gz

[root@mgrserver01 soft]# tar -zvxf jemalloc-5.3.0.tar.gz -C /data/soft/

[root@mgrserver01 soft]# cd jemalloc-5.3.0

[root@mgrserver01 jemalloc-5.3.0]# ls

autogen.sh config.stamp COPYING jemalloc.pc Makefile.in src

bin config.stamp.in doc jemalloc.pc.in msvc test

build-aux config.status doc_internal lib README TUNING.md

ChangeLog configure include m4 run_tests.sh VERSION

config.log configure.ac INSTALL.md Makefile scripts

[root@mgrserver01 jemalloc-5.3.0]# ./autogen.sh

autoconf

./autogen.sh: line 5: autoconf: command not found

Error 0 in autoconf

[root@mgrserver01 jemalloc-5.3.0]# yum -y install autoconf

[root@mgrserver01 jemalloc-5.3.0]# ./autogen.sh

[root@mgrserver01 jemalloc-5.3.0]# ./configure --prefix=/usr/local/jemalloc --libdir=/usr/local/lib

[root@mgrserver01 jemalloc-5.3.0]# make && make install

[root@mgrserver01 jemalloc-5.3.0]# echo "export LD_PRELOAD=/usr/local/lib/libjemalloc.so" >>/root/.bash_profile
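The LD_PRELOAD setting only takes effect in new root login shells (or after sourcing /root/.bash_profile), and mysqld inherits it when started from such a shell through mysqld_safe. To confirm that a running mysqld actually loaded jemalloc, check whether libjemalloc appears in its memory map. A minimal Python sketch, assuming Linux /proc; the helper names are hypothetical:

```python
from pathlib import Path


def maps_use_jemalloc(maps_text: str) -> bool:
    """True if any mapped file in a /proc/<pid>/maps dump is a jemalloc library."""
    for line in maps_text.splitlines():
        fields = line.split()
        # The 6th column, when present, is the path of the mapped file
        if len(fields) >= 6 and "libjemalloc" in Path(fields[5]).name:
            return True
    return False


def process_uses_jemalloc(pid: int) -> bool:
    """Read /proc/<pid>/maps for a live process and check it for jemalloc."""
    return maps_use_jemalloc(Path(f"/proc/{pid}/maps").read_text())
```

Call `process_uses_jemalloc()` with the mysqld PID (for example from `pidof mysqld`); `grep jemalloc /proc/<pid>/maps` is the equivalent shell one-liner.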

[root@mgrserver01 soft]# tar -Jxvf Python-3.6.8.tar.xz

[root@mgrserver01 soft]# cd Python-3.6.8

[root@mgrserver01 Python-3.6.8]# ./configure --prefix=/usr/local/python3

[root@mgrserver01 Python-3.6.8]# make && make install

[root@mgrserver01 Python-3.6.8]# ln -fs /usr/local/python3/bin/python3 /usr/bin/python3

[root@mgrserver01 Python-3.6.8]# ln -fs /usr/local/python3/bin/pip3 /usr/bin/pip3

3.3 Install the psutil and PyMySQL modules (dependencies of the VIP service)

[root@mgrserver01 py_modes_2]# pip3 install psutil==5.7.0

Collecting psutil==5.7.0

Downloading https://files.pythonhosted.org/packages/c4/b8/3512f0e93e0db23a71d82485ba256071ebef99b227351f0f5540f744af41/psutil-5.7.0.tar.gz (449kB)

100% |████████████████████████████████| 450kB 1.1MB/s

Installing collected packages: psutil

Running setup.py install for psutil ... done

Successfully installed psutil-5.7.0

[root@mgrserver01 py_modes_2]# pip3 install PyMySQL==0.9.3

Collecting PyMySQL==0.9.3

Downloading https://files.pythonhosted.org/packages/ed/39/15045ae46f2a123019aa968dfcba0396c161c20f855f11dea6796bcaae95/PyMySQL-0.9.3-py2.py3-none-any.whl (47kB)

100% |████████████████████████████████| 51kB 179kB/s

Installing collected packages: PyMySQL

Successfully installed PyMySQL-0.9.3

[root@mgrserver01 py_modes_2]# pip3 install cryptography==3.3.1

Collecting cryptography==3.3.1

Downloading https://files.pythonhosted.org/packages/7c/b6/1f3dd48a22fcd56f19e6cfa95f74ff0a64b046306354e1bd2b936b7c9ab4/cryptography-3.3.1-cp36-abi3-manylinux1_x86_64.whl (2.7MB)

100% |████████████████████████████████| 2.7MB 40kB/s

Collecting six>=1.4.1 (from cryptography==3.3.1)

Downloading https://files.pythonhosted.org/packages/d9/5a/e7c31adbe875f2abbb91bd84cf2dc52d792b5a01506781dbcf25c91daf11/six-1.16.0-py2.py3-none-any.whl

Collecting cffi>=1.12 (from cryptography==3.3.1)

Downloading https://files.pythonhosted.org/packages/3a/12/d6066828014b9ccb2bbb8e1d9dc28872d20669b65aeb4a86806a0757813f/cffi-1.15.1-cp36-cp36m-manylinux_2_5_x86_64.manylinux1_x86_64.whl (402kB)

100% |████████████████████████████████| 409kB 32kB/s

Collecting pycparser (from cffi>=1.12->cryptography==3.3.1)

Downloading https://files.pythonhosted.org/packages/62/d5/5f610ebe421e85889f2e55e33b7f9a6795bd982198517d912eb1c76e1a53/pycparser-2.21-py2.py3-none-any.whl (118kB)

100% |████████████████████████████████| 122kB 43kB/s

Installing collected packages: six, pycparser, cffi, cryptography

Successfully installed cffi-1.15.1 cryptography-3.3.1 pycparser-2.21 six-1.16.0

3.4 Extract the package

[root@mgrserver01 soft]# tar -Jxvf /data/soft/mysql-8.0.35-linux-glibc2.12-x86_64.tar.xz -C /data/mysql8.0.35/install/

[root@mgrserver01 soft]# cd /data/mysql8.0.35/install/

[root@mgrserver01 install]# ll

total 0

drwxr-xr-x. 9 root root 129 Jan 26 16:50 mysql-8.0.35-linux-glibc2.12-x86_64

[root@mgrserver01 install]# mv mysql-8.0.35-linux-glibc2.12-x86_64/ mysql-8.0.35

[root@mgrserver01 install]# ls

mysql-8.0.35

3.5 Configure my.cnf

For MySQL 8.0.

Note: server-id must be different on each of the three nodes. The parameters below are the ones to adjust per node.

## Node 1 (mgrserver01)

server-id = 303307 # last octet of the IP + port

innodb_buffer_pool_size = 4G # half of physical memory

innodb_thread_concurrency = 4 # number of CPU cores

innodb_write_io_threads = 4 # number of CPU cores

innodb_read_io_threads = 4 # number of CPU cores

loose-group_replication_local_address = 192.168.111.30:13307 # physical IP:group communication port

loose-group_replication_group_seeds = "192.168.111.30:13307,192.168.111.31:13307,192.168.111.32:13307"

loose-group_replication_ip_whitelist = "192.168.111.0/24" # allowlisted subnet

## Node 2 (mgrserver02)

server-id = 313307 # last octet of the IP + port

innodb_buffer_pool_size = 4G # half of physical memory

innodb_thread_concurrency = 4 # number of CPU cores

innodb_write_io_threads = 4 # number of CPU cores

innodb_read_io_threads = 4 # number of CPU cores

loose-group_replication_local_address = 192.168.111.31:13307 # physical IP:group communication port

loose-group_replication_group_seeds = "192.168.111.30:13307,192.168.111.31:13307,192.168.111.32:13307"

loose-group_replication_ip_whitelist = "192.168.111.0/24" # allowlisted subnet

## Node 3 (mgrserver03)

server-id = 323307 # last octet of the IP + port

innodb_buffer_pool_size = 4G # half of physical memory

innodb_thread_concurrency = 4 # number of CPU cores

innodb_write_io_threads = 4 # number of CPU cores

innodb_read_io_threads = 4 # number of CPU cores

loose-group_replication_local_address = 192.168.111.32:13307 # physical IP:group communication port

loose-group_replication_group_seeds = "192.168.111.30:13307,192.168.111.31:13307,192.168.111.32:13307"

loose-group_replication_ip_whitelist = "192.168.111.0/24" # allowlisted subnet
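The server-id values above follow the convention "last octet of the node IP followed by the port" (30 and 3307 give 303307). A Python sketch of that convention; the helper is illustrative, and any scheme works as long as server-id is unique within the group:

```python
import ipaddress


def server_id_for(ip: str, port: int) -> int:
    """Concatenate the last IPv4 octet and the port, e.g. 192.168.111.30:3307 -> 303307."""
    last_octet = ipaddress.IPv4Address(ip).packed[-1]
    return int(f"{last_octet}{port}")


nodes = ["192.168.111.30", "192.168.111.31", "192.168.111.32"]
ids = [server_id_for(ip, 3307) for ip in nodes]
assert len(set(ids)) == len(ids), "server-id must be unique in the group"
print(ids)  # [303307, 313307, 323307]
```

Because all nodes use the same port here, the IDs differ exactly when the last octets differ; with mixed ports, plain concatenation could collide (octet 1 with port 23307 and octet 12 with port 3307 both give 123307).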

3.6 Build the base instance

3.6.1 Initialize the MySQL data directory

/data/mysql8.0.35/install/mysql-8.0.35/bin/mysqld --defaults-file=/data/mysql8.0.35/3307/conf/my.cnf --initialize --user=mysql --log_error_verbosity=3 --explicit_defaults_for_timestamp

3.6.2 Once initialization finishes without errors, start the database

nohup /data/mysql8.0.35/install/mysql-8.0.35/bin/mysqld_safe --defaults-file=/data/mysql8.0.35/3307/conf/my.cnf &

3.6.3 Log in to the database

The temporary root password is written to error.log.

grep password /data/mysql8.0.35/3307/logs/error.log

2024-01-31T13:57:14.481383+08:00 6 [Note] [MY-010454] [Server] A temporary password is generated for root@localhost: r2_#y)mg&OV2

/data/mysql8.0.35/install/mysql-8.0.35/bin/mysql --defaults-file=/data/mysql8.0.35/3307/conf/my.cnf -uroot -p

3.6.4 Change the MySQL root password and create the remote administrator account

alter user 'root'@'localhost' identified by "r2_#y)mg&OV3";

create user 'admin'@'%' identified by 'r2_#y)mg&OV3';

grant all on *.* to admin;

reset master; ## clears the binary logs; run only if needed.

flush privileges;

3.6.5 Shut down the database

/data/mysql8.0.35/install/mysql-8.0.35/bin/mysqladmin --defaults-file=/data/mysql8.0.35/3307/conf/my.cnf -uroot -p shutdown

4. MGR configuration

4.1 Create the replication group

4.1.1 Run on mgrserver01

reset master; # clear the binary logs

SET SQL_LOG_BIN=0; # stop writing this session's statements to the binary log

CREATE USER 'repl'@'%' IDENTIFIED WITH mysql_native_password by 'r2_#y)mg&OV3';

GRANT REPLICATION SLAVE ON *.* TO 'repl'@'%';

SET SQL_LOG_BIN=1; # resume writing this session's statements to the binary log

CHANGE MASTER TO MASTER_USER='repl', MASTER_PASSWORD='r2_#y)mg&OV3' FOR CHANNEL 'group_replication_recovery';

INSTALL PLUGIN group_replication SONAME 'group_replication.so';

show plugins;

SET GLOBAL group_replication_bootstrap_group=ON;

START GROUP_REPLICATION;

SET GLOBAL group_replication_bootstrap_group=OFF;

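The three bootstrap statements above do the following.

SET GLOBAL group_replication_bootstrap_group=ON; tells this node to create (bootstrap) a new group the next time Group Replication starts. Run it on one node only, and only after the Group Replication parameters are configured correctly.

START GROUP_REPLICATION; starts Group Replication. On the bootstrap node it creates the group with this node as its first member and primary; on the other nodes (section 4.1.3) it joins the existing group and communicates with the members to replicate and synchronize data.

SET GLOBAL group_replication_bootstrap_group=OFF; turns bootstrapping off again. Run it once the node has started Group Replication successfully; leaving it ON would make a later restart of Group Replication create a second, independent group instead of rejoining the existing one.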

4.1.2 Check the status

SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+

| group_replication_applier | 2c7bd415-c173-11ee-a4d1-000c293f5404 | mgrserver01 | 3307 | ONLINE | PRIMARY | 8.0.35 | XCom |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+

1 row in set (0.00 sec)

# Add some test data

CREATE DATABASE test;

USE test;

CREATE TABLE t1 (c1 INT PRIMARY KEY, c2 TEXT NOT NULL);

INSERT INTO t1 VALUES (1, 'Luis');

SELECT * FROM t1;

4.1.3 Run on mgrserver02 and mgrserver03

reset master; # clear the binary logs

SET SQL_LOG_BIN=0; # stop writing this session's statements to the binary log

CREATE USER 'repl'@'%' IDENTIFIED WITH mysql_native_password by 'r2_#y)mg&OV3';

GRANT REPLICATION SLAVE ON *.* TO 'repl'@'%';

SET SQL_LOG_BIN=1; # resume writing this session's statements to the binary log

CHANGE MASTER TO MASTER_USER='repl', MASTER_PASSWORD='r2_#y)mg&OV3' FOR CHANNEL 'group_replication_recovery';

INSTALL PLUGIN group_replication SONAME 'group_replication.so';

START GROUP_REPLICATION;

4.1.4 Check the status

SELECT * FROM performance_schema.replication_group_members;

root@localhost: 18:37: [(none)]> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+

| group_replication_applier | 2c7bd415-c173-11ee-a4d1-000c293f5404 | mgrserver01 | 3307 | ONLINE | PRIMARY | 8.0.35 | XCom |

| group_replication_applier | 33e4f69b-c173-11ee-9a5d-000c29f132d9 | mgrserver03 | 3307 | ONLINE | SECONDARY | 8.0.35 | XCom |

| group_replication_applier | 3fc65174-c173-11ee-94aa-000c29c1073f | mgrserver02 | 3307 | ONLINE | SECONDARY | 8.0.35 | XCom |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+

3 rows in set (0.00 sec)

4.1.5 On mgrserver02 and mgrserver03, check that the data written on mgrserver01 has been replicated

SHOW DATABASES LIKE 'test';

SELECT * FROM test.t1;

root@localhost: 11:25: [(none)]> SHOW DATABASES LIKE 'test';

+-----------------+

| Database (test) |

+-----------------+

| test |

+-----------------+

1 row in set (0.00 sec)

root@localhost: 11:25: [(none)]> SELECT * FROM test.t1;

+----+------+

| c1 | c2 |

+----+------+

| 1 | Luis |

+----+------+

1 row in set (0.00 sec)

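4.2 Check the MGR cluster status

The query below derives each member's role from the group_replication_primary_member status variable, so it also works on MySQL 5.7, where replication_group_members has no MEMBER_ROLE column.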

SELECT

MEMBER_ID,

MEMBER_HOST,

MEMBER_PORT,

MEMBER_STATE,

IF(global_status.VARIABLE_NAME IS NOT NULL,

'PRIMARY',

'SECONDARY') AS MEMBER_ROLE

FROM

performance_schema.replication_group_members

LEFT JOIN

performance_schema.global_status ON global_status.VARIABLE_NAME = 'group_replication_primary_member'

AND global_status.VARIABLE_VALUE = replication_group_members.MEMBER_ID;

root@localhost: 11:23: [test]> SELECT

-> MEMBER_ID,

-> MEMBER_HOST,

-> MEMBER_PORT,

-> MEMBER_STATE,

-> IF(global_status.VARIABLE_NAME IS NOT NULL,

-> 'PRIMARY',

-> 'SECONDARY') AS MEMBER_ROLE

-> FROM

-> performance_schema.replication_group_members

-> LEFT JOIN

-> performance_schema.global_status ON global_status.VARIABLE_NAME = 'group_replication_primary_member'

-> AND global_status.VARIABLE_VALUE = replication_group_members.MEMBER_ID;

+--------------------------------------+-------------+-------------+--------------+-------------+

| MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE |

+--------------------------------------+-------------+-------------+--------------+-------------+

| 2c7bd415-c173-11ee-a4d1-000c293f5404 | mgrserver01 | 3307 | ONLINE | PRIMARY |

| 33e4f69b-c173-11ee-9a5d-000c29f132d9 | mgrserver03 | 3307 | ONLINE | SECONDARY |

| 3fc65174-c173-11ee-94aa-000c29c1073f | mgrserver02 | 3307 | ONLINE | SECONDARY |

+--------------------------------------+-------------+-------------+--------------+-------------+

3 rows in set (0.00 sec)

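4.3 Create the monitoring view

Run this on the primary only; the objects are replicated to the other members.

4.3.1 MySQL 5.7: create the objects with the following statements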

USE sys;

DELIMITER $$

CREATE FUNCTION IFZERO(a INT, b INT)

RETURNS INT

DETERMINISTIC

RETURN IF(a = 0, b, a)$$

CREATE FUNCTION LOCATE2(needle TEXT(10000), haystack TEXT(10000), offset INT)

RETURNS INT

DETERMINISTIC

RETURN IFZERO(LOCATE(needle, haystack, offset), LENGTH(haystack) + 1)$$

CREATE FUNCTION GTID_NORMALIZE(g TEXT(10000))

RETURNS TEXT(10000)

DETERMINISTIC

RETURN GTID_SUBTRACT(g, '')$$

CREATE FUNCTION GTID_COUNT(gtid_set TEXT(10000))

RETURNS INT

DETERMINISTIC

BEGIN

DECLARE result BIGINT DEFAULT 0;

DECLARE colon_pos INT;

DECLARE next_dash_pos INT;

DECLARE next_colon_pos INT;

DECLARE next_comma_pos INT;

SET gtid_set = GTID_NORMALIZE(gtid_set);

SET colon_pos = LOCATE2(':', gtid_set, 1);

WHILE colon_pos != LENGTH(gtid_set) + 1 DO

SET next_dash_pos = LOCATE2('-', gtid_set, colon_pos + 1);

SET next_colon_pos = LOCATE2(':', gtid_set, colon_pos + 1);

SET next_comma_pos = LOCATE2(',', gtid_set, colon_pos + 1);

IF next_dash_pos < next_colon_pos AND next_dash_pos < next_comma_pos THEN

SET result = result +

SUBSTR(gtid_set, next_dash_pos + 1,

LEAST(next_colon_pos, next_comma_pos) - (next_dash_pos + 1)) -

SUBSTR(gtid_set, colon_pos + 1, next_dash_pos - (colon_pos + 1)) + 1;

ELSE

SET result = result + 1;

END IF;

SET colon_pos = next_colon_pos;

END WHILE;

RETURN result;

END$$

CREATE FUNCTION gr_applier_queue_length()

RETURNS INT

DETERMINISTIC

BEGIN

RETURN (SELECT sys.gtid_count( GTID_SUBTRACT( (SELECT

Received_transaction_set FROM performance_schema.replication_connection_status

WHERE Channel_name = 'group_replication_applier' ), (SELECT

@@global.GTID_EXECUTED) )));

END$$

CREATE FUNCTION gr_member_in_primary_partition()

RETURNS VARCHAR(3)

DETERMINISTIC

BEGIN

RETURN (SELECT IF( MEMBER_STATE='ONLINE' AND ((SELECT COUNT(*) FROM

performance_schema.replication_group_members WHERE MEMBER_STATE != 'ONLINE') >=

((SELECT COUNT(*) FROM performance_schema.replication_group_members)/2) = 0),

'YES', 'NO' ) FROM performance_schema.replication_group_members JOIN

performance_schema.replication_group_member_stats USING(member_id));

END$$

CREATE VIEW gr_member_routing_candidate_status AS SELECT

sys.gr_member_in_primary_partition() as viable_candidate,

IF( (SELECT (SELECT GROUP_CONCAT(variable_value) FROM

performance_schema.global_variables WHERE variable_name IN ('read_only',

'super_read_only')) != 'OFF,OFF'), 'YES', 'NO') as read_only,

sys.gr_applier_queue_length() as transactions_behind, Count_Transactions_in_queue as 'transactions_to_cert' from performance_schema.replication_group_member_stats;$$

DELIMITER ;
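The sys.GTID_COUNT function defined above walks a GTID set such as `uuid:1-5:7,uuid2:1-3` and adds up the size of every interval (here 5 + 1 + 3 = 9). The same counting rule in Python, as a sketch for checking values by hand; it assumes well-formed input and does not merge overlapping intervals the way GTID_SUBTRACT(g, '') normalizes them:

```python
def gtid_count(gtid_set: str) -> int:
    """Count the transactions in a GTID set like 'uuid:1-5:7,uuid2:1-3'."""
    total = 0
    # @@GTID_EXECUTED output may contain newlines after the commas
    for member in gtid_set.replace("\n", "").split(","):
        member = member.strip()
        if not member:
            continue
        # First field is the source UUID; the rest are intervals 'a-b' or single 'n'
        _uuid, *intervals = member.split(":")
        for interval in intervals:
            start, _, end = interval.partition("-")
            total += int(end or start) - int(start) + 1
    return total


print(gtid_count("3E11FA47-71CA-11E1-9E33-C80AA9429562:1-5:7,"
                 "2c7bd415-c173-11ee-a4d1-000c293f5404:1-3"))  # 9
```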

4.3.2 MySQL 8.0: create the objects with the following statements

USE sys;

DELIMITER $$

CREATE FUNCTION my_id() RETURNS TEXT(36) DETERMINISTIC NO SQL RETURN (SELECT @@global.server_uuid as my_id);$$

CREATE FUNCTION gr_member_in_primary_partition()

RETURNS VARCHAR(3)

DETERMINISTIC

BEGIN

RETURN (SELECT IF( MEMBER_STATE='ONLINE' AND ((SELECT COUNT(*) FROM

performance_schema.replication_group_members WHERE MEMBER_STATE NOT IN ('ONLINE', 'RECOVERING')) >=

((SELECT COUNT(*) FROM performance_schema.replication_group_members)/2) = 0),

'YES', 'NO' ) FROM performance_schema.replication_group_members JOIN

performance_schema.replication_group_member_stats USING(member_id) where member_id=my_id());

END$$

CREATE VIEW gr_member_routing_candidate_status AS SELECT

sys.gr_member_in_primary_partition() as viable_candidate,

IF( (SELECT (SELECT GROUP_CONCAT(variable_value) FROM

performance_schema.global_variables WHERE variable_name IN ('read_only',

'super_read_only')) != 'OFF,OFF'), 'YES', 'NO') as read_only,

Count_Transactions_Remote_In_Applier_Queue as transactions_behind, Count_Transactions_in_queue as 'transactions_to_cert'

from performance_schema.replication_group_member_stats where member_id=my_id();$$

DELIMITER ;
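In the 8.0 version, gr_member_in_primary_partition() returns YES only when the local member is ONLINE and fewer than half of all members are in a state other than ONLINE or RECOVERING. In SQL, `X >= Y = 0` parses as `(X >= Y) = 0`, i.e. `X < Y`. The predicate restated in Python; this is a hypothetical restatement for reasoning about failure cases, not something the view calls:

```python
def in_primary_partition(local_state: str, member_states: list) -> str:
    """Sketch of sys.gr_member_in_primary_partition() (MySQL 8.0 variant)."""
    unhealthy = sum(1 for s in member_states if s not in ("ONLINE", "RECOVERING"))
    # SQL: (unhealthy >= total/2) = 0  ->  unhealthy < total / 2
    healthy_majority = unhealthy < len(member_states) / 2
    return "YES" if local_state == "ONLINE" and healthy_majority else "NO"


print(in_primary_partition("ONLINE", ["ONLINE", "ONLINE", "UNREACHABLE"]))       # YES
print(in_primary_partition("ONLINE", ["ONLINE", "UNREACHABLE", "UNREACHABLE"]))  # NO
```

With three members, one UNREACHABLE node still leaves the survivors as viable candidates; with two unreachable, the remaining member reports NO.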

4.4 Install the VIP service

Deploy on all three nodes.

unzip /data/soft/HAIPMGR-master.zip -d /data/mysql8.0.35/tools/HAIPMGR/

chown -R mysql.mysql /data/mysql8.0.35/tools/HAIPMGR/

4.4.1 Edit the configuration file

vi /data/mysql8.0.35/tools/HAIPMGR/HAIPMGR-master/parameter.py

par_var={"vip":"192.168.111.33","cluser_ip":{"192.168.111.30":"eth0","192.168.111.31":"eth0","192.168.111.32":"eth0"},"ip_gateway":"192.168.111.2","mysql_port":"3307","inter_port":"13307","passwd":"Monitor@dba1","user":"vip_monitor","platfrom":"linux","sleeptime":7,"install_path":"/data/mysql8.0.35/tools/HAIPMGR/HAIPMGR-master/"}
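parameter.py is plain Python, so a typo in the VIP, the gateway or a node IP only shows up once HAIPMGR runs. A hypothetical pre-flight check (not part of HAIPMGR) that the node IPs and the gateway sit in the same network as the VIP, that the VIP is not one of the node IPs, and that the ports are numeric. The /24 prefix is an assumption taken from the 192.168.111.0/24 allowlist used in my.cnf; the key names are spelled exactly as in parameter.py:

```python
import ipaddress


def check_par_var(par_var: dict, prefix: int = 24) -> list:
    """Return a list of problems found in a HAIPMGR-style par_var dict (empty if OK)."""
    problems = []
    vip = ipaddress.ip_address(par_var["vip"])
    net = ipaddress.ip_network(f"{vip}/{prefix}", strict=False)
    nodes = par_var["cluser_ip"]  # key spelled as in parameter.py
    for ip in list(nodes) + [par_var["ip_gateway"]]:
        if ipaddress.ip_address(ip) not in net:
            problems.append(f"{ip} is outside {net}")
    if par_var["vip"] in nodes:
        problems.append("vip must not be one of the node IPs")
    for key in ("mysql_port", "inter_port"):
        if not str(par_var[key]).isdigit():
            problems.append(f"{key} is not numeric")
    return problems


par_var = {"vip": "192.168.111.33",
           "cluser_ip": {"192.168.111.30": "eth0", "192.168.111.31": "eth0",
                         "192.168.111.32": "eth0"},
           "ip_gateway": "192.168.111.2", "mysql_port": "3307", "inter_port": "13307"}
print(check_par_var(par_var))  # []
```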

4.4.2 Create the monitoring user

Run the following on the primary:

create user vip_monitor@'%' IDENTIFIED WITH mysql_native_password by 'Monitor@dba1';

grant select on performance_schema.* to vip_monitor@'%';

4.4.3 Start the VIP service

nohup python3 /data/mysql8.0.35/tools/HAIPMGR/HAIPMGR-master/HAIPMGR.py &

4.4.4 Check that the VIP is bound on the primary node

ip addr

[root@mgrserver01 HAIPMGR-master]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:3f:54:04 brd ff:ff:ff:ff:ff:ff

inet 192.168.111.30/24 brd 192.168.111.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet 192.168.111.33/24 brd 192.168.111.255 scope global secondary eth0:3307

valid_lft forever preferred_lft forever

4.4.5 Check the log output

tail -f /data/mysql8.0.35/tools/HAIPMGR/HAIPMGR-master/HAIPMGR3307.log

***********************************HAIPMGR:One loop begin:*********************************** file:HAIPMGR.py line:75 fun:main

2024-02-06 16:24:22,153 - logger.Fun_log_create - INFO - all_vip.check_stat:[STAGE1]------------------------------------- file:all_vip.py line:72 fun:check_stat

2024-02-06 16:24:22,154 - logger.Fun_log_create - INFO - fun:getl_ip_isincluter:local ip addr is [('192.168.111.30', 'eth0'), ('192.168.111.33', 'eth0:3307')] file:tool.py line:139 fun:getl_ip_isincluter

2024-02-06 16:24:22,155 - logger.Fun_log_create - INFO - fun:getl_ip_isincluter:local ip 192.168.111.30 addr is in cluster dict_items([('192.168.111.30', 'eth0'), ('192.168.111.31', 'eth0'), ('192.168.111.32', 'eth0')]) file:tool.py line:145 fun:getl_ip_isincluter

2024-02-06 16:24:22,155 - logger.Fun_log_create - INFO - all_vip.check_stat:[STAGE1] check scuess: local ip 192.168.111.30 is in cluster {'192.168.111.30': 'eth0', '192.168.111.31': 'eth0', '192.168.111.32': 'eth0'} file:all_vip.py line:76 fun:check_stat

2024-02-06 16:24:22,155 - logger.Fun_log_create - INFO - all_vip.check_stat:[STAGE2]------------------------------------- file:all_vip.py line:87 fun:check_stat

2024-02-06 16:24:22,158 - logger.Fun_log_create - INFO - fun:is_mysqld_up:192.168.111.30 3307 vip_monitor connect mysqld sucess file:tool.py line:187 fun:is_mysqld_up

2024-02-06 16:24:22,159 - logger.Fun_log_create - INFO - all_vip.check_stat:[STAGE2] mysqld ip:192.168.111.30 port:3307 connect scuess file:all_vip.py line:91 fun:check_stat

2024-02-06 16:24:22,159 - logger.Fun_log_create - INFO - all_vip.check_stat:[STAGE3]------------------------------------- file:all_vip.py line:103 fun:check_stat

2024-02-06 16:24:22,159 - logger.Fun_log_create - INFO - fun:is_mgrok_master: connect mysqld sucess file:tool.py line:240 fun:is_mgrok_master

2024-02-06 16:24:22,160 - logger.Fun_log_create - INFO - fun:is_mgrok_master: current host mgrserver01 is MGR online node mgrserver01 file:tool.py line:283 fun:is_mgrok_master

2024-02-06 16:24:22,160 - logger.Fun_log_create - INFO - fun:is_mgrok_master: current host mgrserver01 is MGR master node mgrserver01 file:tool.py line:291 fun:is_mgrok_master

2024-02-06 16:24:22,160 - logger.Fun_log_create - INFO - all_vip.check_stat:[STAGE3] check scuess: mysqld ip:192.168.111.30 is master node file:all_vip.py line:107 fun:check_stat

2024-02-06 16:24:22,160 - logger.Fun_log_create - INFO - all_vip.check_stat:[STAGE4]------------------------------------- file:all_vip.py line:120 fun:check_stat

2024-02-06 16:24:24,178 - logger.Fun_log_create - INFO - fun:is_connect_ip: ping reslut is (0, 'PING 192.168.111.2 (192.168.111.2) 56(84) bytes of data.\n64 bytes from 192.168.111.2: icmp_seq=1 ttl=128 time=0.137 ms\n64 bytes from 192.168.111.2: icmp_seq=2 ttl=128 time=0.491 ms\n64 bytes from 192.168.111.2: icmp_seq=3 ttl=128 time=1.44 ms\n\n--- 192.168.111.2 ping statistics ---\n3 packets transmitted, 3 received, 0% packet loss, time 2011ms\nrtt min/avg/max/mdev = 0.137/0.692/1.448/0.553 ms') file:tool.py line:63 fun:is_connect_ip

2024-02-06 16:24:24,179 - logger.Fun_log_create - INFO - all_vip.check_stat:[STAGE4] check sucess: gateway 192.168.111.2 is connect sucess file:all_vip.py line:124 fun:check_stat

2024-02-06 16:24:24,179 - logger.Fun_log_create - INFO - all_vip.check_vip:[STAGE5]------------------------------------- file:all_vip.py line:139 fun:check_vip

2024-02-06 16:24:24,179 - logger.Fun_log_create - INFO - fun:is_vip_local: check vip 192.168.111.33 is hit local ip ('192.168.111.33', 'eth0:3307') file:tool.py line:322 fun:is_vip_local

2024-02-06 16:24:24,180 - logger.Fun_log_create - INFO - all_vip.check_vip:Vip 192.168.111.33 must start on this node file:all_vip.py line:145 fun:check_vip

2024-02-06 16:24:24,180 - logger.Fun_log_create - INFO - all_vip.check_vip:Vip 192.168.111.33 is on this node keep it file:all_vip.py line:148 fun:check_vip

2024-02-06 16:24:24,180 - logger.Fun_log_create - INFO - all_vip.oper_vip:[STAGE6]------------------------------------- file:all_vip.py line:194 fun:oper_vip

2024-02-06 16:24:24,180 - logger.Fun_log_create - INFO - all_vip.oper_vip:Vip opertion keep on file:all_vip.py line:209 fun:oper_vip
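Each loop in the log runs through six stages: the local IP belongs to the cluster (STAGE1), mysqld is reachable (STAGE2), this node is the MGR primary (STAGE3), the gateway answers ping (STAGE4), whether the VIP is already on this node (STAGE5), and the resulting VIP operation (STAGE6). The decision can be summarized by the hypothetical Python function below. It is inferred from the log messages only and is not HAIPMGR's actual code, so details such as the exact handling of a failed stage are assumptions:

```python
def vip_action(in_cluster: bool, mysqld_up: bool, is_primary: bool,
               gateway_ok: bool, vip_is_local: bool) -> str:
    """Return 'keep', 'start', 'stop' or 'none' for the VIP on this node (sketch)."""
    # STAGE1-4: this node should hold the VIP only if every check passes
    should_hold_vip = in_cluster and mysqld_up and is_primary and gateway_ok
    # STAGE5-6: compare with where the VIP currently is
    if should_hold_vip:
        return "keep" if vip_is_local else "start"
    return "stop" if vip_is_local else "none"
```

The log above corresponds to `vip_action(True, True, True, True, True)`, which returns "keep" ("Vip opertion keep on").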

# Check the process

ps aux|grep -i mgr

[root@mgrserver01 HAIPMGR-master]# ps aux | grep -i mgr

postfix 1231 0.0 0.1 89984 4284 ? S 01:09 0:00 qmgr -l -t unix -u

root 4860 0.1 0.4 223584 17052 pts/0 S+ 15:37 0:03 python3 HAIPMGR.py

root 5253 0.0 0.0 128172 1236 pts/1 S+ 16:24 0:00 tail -f HAIPMGR3307.log

root 5262 0.0 0.0 130752 1568 pts/2 S+ 16:25 0:00 grep --color=auto -i mgr

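4.5 Deploy sesswait

4.5.1 Create the monitoring account

Create the sesswait monitoring account on the primary. The performance_schema grant lets the account read lock information (data_locks, data_lock_waits).

CREATE USER 'sess_monitor'@'%' IDENTIFIED BY 'Session_monit77';

GRANT PROCESS ON *.* TO 'sess_monitor'@'%';

GRANT SELECT ON sys.* TO 'sess_monitor'@'%';

GRANT SELECT ON performance_schema.* TO 'sess_monitor'@'%';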

4.5.2 Configure secure login credentials

Deploy sesswait on all three nodes:

/data/mysql8.0.35/install/mysql-8.0.35/bin/mysql_config_editor set -S /data/mysql8.0.35/3307/tmp/mysql.sock --login-path=sesswait -usess_monitor -p

4.5.3 Verify the stored login path

[root@mgrserver01 HAIPMGR-master]# /data/mysql8.0.35/install/mysql-8.0.35/bin/mysql_config_editor print --all

[sesswait]

user = "sess_monitor"

password = *****

socket = "/data/mysql8.0.35/3307/tmp/mysql.sock"

4.5.4 Test logging in as sess_monitor

/data/mysql8.0.35/install/mysql-8.0.35/bin/mysql --login-path=sesswait

4.5.5 Create the monitoring script

All automation scripts are kept under /home/mysql/DBmanagerment.

mkdir -p /home/mysql/DBmanagerment/sesswait

chown -R mysql.mysql /home/mysql/DBmanagerment/

cat /home/mysql/DBmanagerment/sesswait.sh

#!/bin/bash

USEROPTIONS="--login-path=sesswait"

MYSQL="/data/mysql8.0.35/install/mysql-8.0.35/bin/mysql"

i=0

export LANG="en_US.UTF-8"

while (($i<2881))

do

echo "############# Current time #############"

$MYSQL $USEROPTIONS -e "select now();"

echo "############# Active sessions #############"

$MYSQL $USEROPTIONS -e "select user,count(*) from information_schema.PROCESSLIST where COMMAND not in ('Sleep') group by user;"

echo "############# Connected sessions #############"

$MYSQL $USEROPTIONS -e "select user,count(*) from information_schema.PROCESSLIST group by user;"

echo "############# Locks and lock waits #############"

# INFORMATION_SCHEMA.INNODB_LOCKS and INNODB_LOCK_WAITS were removed in MySQL 8.0; read the performance_schema tables instead

$MYSQL $USEROPTIONS -e "SELECT ENGINE_LOCK_ID,ENGINE_TRANSACTION_ID,LOCK_MODE,LOCK_STATUS,OBJECT_SCHEMA,OBJECT_NAME,INDEX_NAME,LOCK_DATA FROM performance_schema.data_locks;"

$MYSQL $USEROPTIONS -e "SELECT * FROM performance_schema.data_lock_waits;"

echo "############# Statements running longer than 5 seconds #############"

$MYSQL $USEROPTIONS -e "select ID,USER,COMMAND,TIME,STATE,INFO from information_schema.PROCESSLIST where TIME>5 and COMMAND not in ('Sleep');"

echo "############# Statements running longer than 60 seconds #############"

$MYSQL $USEROPTIONS -e "select ID,USER,COMMAND,TIME,STATE,INFO from information_schema.PROCESSLIST where TIME>60 and COMMAND not in ('Sleep');"

echo "############# Statements running longer than 600 seconds #############"

$MYSQL $USEROPTIONS -e "select ID,USER,COMMAND,TIME,STATE,INFO from information_schema.PROCESSLIST where TIME>600 and COMMAND not in ('Sleep');"

echo "############# Running SQL #############"

$MYSQL $USEROPTIONS -e "select ID,USER,COMMAND,TIME,STATE,INFO from information_schema.PROCESSLIST where COMMAND not in ('Sleep');"

echo "############# Replication lag #############"

$MYSQL $USEROPTIONS -e "select * from sys.gr_member_routing_candidate_status"

echo "############################Will Sleep 30 Seconds#############################"

let i++

sleep 30

done

echo "###################################!Clean 10 day!####################################"

find /home/mysql/DBmanagerment/sesswait/sesswait_* -type f -mtime +10 -exec rm {} \;
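The loop runs `i` from 0 to 2880, i.e. 2881 iterations with a 30-second sleep each, so one run of the script lasts about a day; that is why it is started once per day from cron. The arithmetic, ignoring the time the queries themselves take:

```python
ITERATIONS = 2881     # while (($i<2881)) with i starting at 0
SLEEP_SECONDS = 30    # sleep 30 at the end of each iteration

total = ITERATIONS * SLEEP_SECONDS
print(total, "seconds =", round(total / 3600, 2), "hours")  # 86430 seconds = 24.01 hours
```

Since the queries add a little time to every iteration, each run ends slightly after the next daily run has started, so consecutive log files overlap briefly rather than leaving a gap.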

4.5.6 Add a cron job

A new log file is generated every night at 00:01.

crontab -e

1 0 * * * sh /home/mysql/DBmanagerment/sess.sh

vi /home/mysql/DBmanagerment/sess.sh

sh /home/mysql/DBmanagerment/sesswait.sh > /home/mysql/DBmanagerment/sesswait/sesswait_`date +%F`.log 2>&1 &

Set ownership and permissions

chown -R mysql.mysql /home/mysql/DBmanagerment/

chmod -R 755 /home/mysql/DBmanagerment/