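Preface

Previously we trained a YOLO model and ran it through the PaddleDetection library, but only by invoking it from the command line. A setup like that cannot be deployed to a customer's machine, and it is also awkward to use. So can we build Paddle's inference code into a DLL and ship it with our own software? Yes, and this article walks through the whole process.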

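Workflow

The deployment process mainly follows these documents:

Visual Studio 2019 Community CMake Build Guide

Deploying PaddleDetection in C++ and testing on images and video (Win10 + VS2017)

The main steps are:

- Build OpenCV

- Download the CUDA libraries (skip this if cuDNN and the CUDA Toolkit are already installed; this article demonstrates the CPU-only setup)

- Download the PaddlePaddle C++ inference library, paddle_inference

- Build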

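Details

Building OpenCV

I'll skip this step. OpenCV was already installed and configured in an earlier article of this series, "End-to-End Machine Vision Engineering (3): Task Preview, from installing and building OpenCV to image enhancement and segmentation", so there is nothing more to add here.

Downloading the CUDA libraries

This article uses the CPU version of the inference library, so this step is skipped as well.

Downloading the inference library paddle_inference

Download and install the inference library

After downloading, extract the archive and keep it somewhere handy for later.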

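Build

To build, go to the \PaddleDetection\deploy\cpp directory inside the PaddleDetection repository.

Open this folder in the CMake GUI. Many entries will be highlighted in red; fill them in as the documentation describes.

Since I am not using CUDA here, only OPENCV_DIR and PADDLE_DIR need to be filled in. If you don't want to enter them every time, you can edit CMakeLists.txt in the cpp folder directly.

Change lines 11-17 to the values you need, for example: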

option(WITH_MKL "Compile demo with MKL/OpenBlas support, default use MKL." ON)

# The GPU is not used here, so this is set to OFF

option(WITH_GPU "Compile demo with GPU/CPU, default use CPU." OFF)

option(WITH_TENSORRT "Compile demo with TensorRT." OFF)

option(WITH_KEYPOINT "Whether to Compile KeyPoint detector" OFF)

option(WITH_MOT "Whether to Compile MOT detector" OFF)

#SET(PADDLE_DIR "" CACHE PATH "Location of libraries")

#SET(PADDLE_LIB_NAME "" CACHE STRING "libpaddle_inference")

#SET(OPENCV_DIR "" CACHE PATH "Location of libraries")

#SET(CUDA_LIB "" CACHE PATH "Location of libraries")

#SET(CUDNN_LIB "" CACHE PATH "Location of libraries")

#SET(TENSORRT_INC_DIR "" CACHE PATH "Compile demo with TensorRT")

#SET(TENSORRT_LIB_DIR "" CACHE PATH "Compile demo with TensorRT")

SET(PADDLE_DIR "D:\\WorkShop\\Python\\paddle_inference")

SET(PADDLE_LIB_NAME "paddle_inference")

SET(OPENCV_DIR "C:\\Program Files (x86)\\opencv")

include(cmake/yaml-cpp.cmake)

include_directories("${CMAKE_SOURCE_DIR}/")

include_directories("${CMAKE_CURRENT_BINARY_DIR}/ext/yaml-cpp/src/ext-yaml-cpp/include")

link_directories("${CMAKE_CURRENT_BINARY_DIR}/ext/yaml-cpp/lib")

Run Configure again in the GUI so that the new values are picked up.

Click Generate, and the project is generated in the build folder.

Now just build the whole solution.

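Common errors

Undefined identifier CV_xxxx_xxxx

Newer OpenCV versions renamed these identifiers. Change each undefined identifier from CV_xxx_xxx to cv::xxx_xxx; for example, CV_IMWRITE_JPEG_QUALITY becomes cv::IMWRITE_JPEG_QUALITY.

Fix every place that reports this error.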
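The renames this build runs into can be summarized as a small lookup table. The sketch below is only illustrative: ModernOpenCvName is a hypothetical helper (it is not part of OpenCV or PaddleDetection), and the table covers only the legacy names that appear in this article's code, not the whole OpenCV API.

```cpp
#include <string>
#include <unordered_map>

// Hypothetical helper: maps a legacy OpenCV C-API name to the C++ name that
// replaced it. Only the names used in this article's code are listed.
std::string ModernOpenCvName(const std::string& legacy) {
  static const std::unordered_map<std::string, std::string> kRenames = {
      {"CV_CAP_PROP_FRAME_WIDTH", "cv::CAP_PROP_FRAME_WIDTH"},
      {"CV_CAP_PROP_FRAME_HEIGHT", "cv::CAP_PROP_FRAME_HEIGHT"},
      {"CV_CAP_PROP_FPS", "cv::CAP_PROP_FPS"},
      {"CV_IMWRITE_JPEG_QUALITY", "cv::IMWRITE_JPEG_QUALITY"},
      {"CV_FOURCC", "cv::VideoWriter::fourcc"},
  };
  auto it = kRenames.find(legacy);
  // Unknown names are returned unchanged so the caller can spot them.
  return it == kRenames.end() ? legacy : it->second;
}
```

Note that CV_FOURCC is the odd one out: it was a macro, and its replacement is the static function cv::VideoWriter::fourcc rather than a renamed constant.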

Cannot open source file "yaml-cpp/yaml.h"

This is caused by the CMakeLists.txt of the main project. Modify it around line 25 as follows:

# Prefer CMAKE_BINARY_DIR over CMAKE_CURRENT_BINARY_DIR

#include_directories("${CMAKE_CURRENT_BINARY_DIR}/ext/yaml-cpp/src/ext-yaml-cpp/include")

message(STATUS "yaml-cpp include dir: ${CMAKE_BINARY_DIR}/ext/yaml-cpp/src/ext-yaml-cpp/include")

include_directories(${CMAKE_BINARY_DIR}/ext/yaml-cpp/src/ext-yaml-cpp/include)

#link_directories("${CMAKE_CURRENT_BINARY_DIR}/ext/yaml-cpp/lib")

link_directories(${CMAKE_BINARY_DIR}/ext/yaml-cpp/lib)

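Cannot find libyaml-cppmt.lib

Build in Release mode. This project does not seem to support Debug builds, apparently because it never references the debug library libyaml-cppmtd.lib.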

Modifications

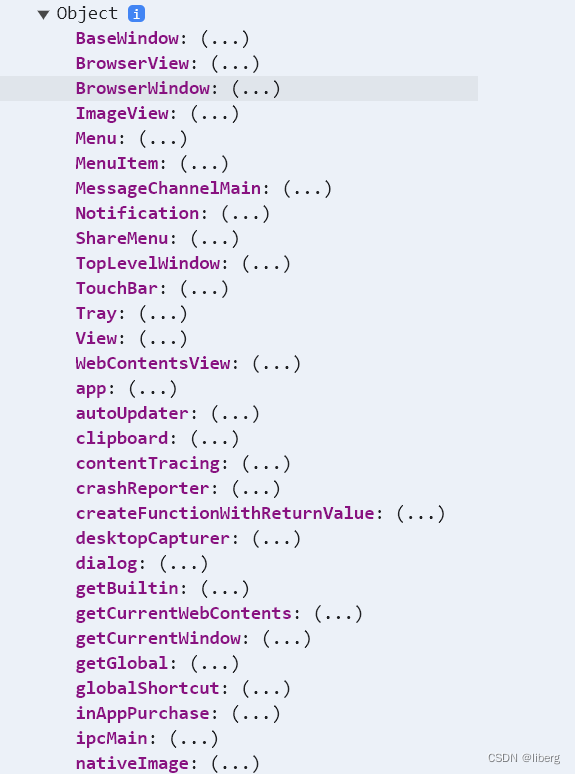

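The library now builds, but there is still a problem: the output is an exe. I don't need an exe; I want a DLL so that it can be integrated into other projects.

A side note: the library I build here is not consumed through CMake. The interface is simple, so I export it the usual Windows way, as a .lib plus a .dll. Letting CMake manage the reference is more trouble and tends to cause unnecessary problems. With the import library you simply link against it and call the interface; as long as the header contains nothing from Paddle, the caller doesn't need to care what is inside.

I added a header file, Leventure_ModelInfer.h, for the main source file: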
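The idea of keeping Paddle out of the public header can be sketched with an opaque handle behind a C interface. Everything in this sketch is hypothetical: LEV_API, LevDetector, lev_create, lev_model_dir and lev_destroy are illustrative names, not the code used in this article. In a real build the struct would also own the PaddleDetection detector.

```cpp
#include <string>

// Export macro: __declspec(dllexport) applies only to Windows builds; on
// other platforms it expands to nothing so the sketch still compiles.
#ifdef _WIN32
#define LEV_API __declspec(dllexport)
#else
#define LEV_API
#endif

// ---- What the public header would contain: no Paddle types at all ----
extern "C" {
struct LevDetector;  // opaque to the caller
LEV_API LevDetector* lev_create(const char* model_dir);
LEV_API const char* lev_model_dir(const LevDetector* det);
LEV_API void lev_destroy(LevDetector* det);
}

// ---- What stays inside the DLL's .cpp file ----
struct LevDetector {
  std::string model_dir;  // a real build would also hold the detector here
};

LevDetector* lev_create(const char* model_dir) {
  if (model_dir == nullptr) return nullptr;  // reject a missing path
  return new LevDetector{model_dir};
}

const char* lev_model_dir(const LevDetector* det) {
  return det ? det->model_dir.c_str() : "";
}

// Memory allocated inside the DLL is also released inside the DLL.
void lev_destroy(LevDetector* det) { delete det; }
```

A caller only ever sees a LevDetector pointer and plain C types, so it can be compiled without the Paddle or OpenCV headers. Passing std::string or std::vector across the DLL boundary, as the exported class in this article does, works too, but it generally assumes both sides are built with the same compiler and runtime settings.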

#pragma once

//extern "C" __declspec(dllexport) int main_test();

#include <iostream>

#include <string>

#include <vector>

extern "C" __declspec(dllexport) int add(int a, int b);

int add(int a, int b) {

return a + b;

}

struct ObjDetector {

std::string model_dir;

const std::string device = "CPU";

bool use_mkldnn = false; int cpu_threads = 1;

std::string run_mode = std::string("paddle");

int batch_size = 1; int gpu_id = 0;

int trt_min_shape = 1;

int trt_max_shape = 1280;

int trt_opt_shape = 640;

bool trt_calib_mode = false;

};

using namespace std;

class __declspec(dllexport) Lev_ModelInfer

{

public:

void PrintBenchmarkLog(std::vector<double> det_time, int img_num);

static std::string DirName(const std::string& filepath);

static bool PathExists(const std::string& path);

static void MkDir(const std::string& path);

static void MkDirs(const std::string& path);

void GetAllFiles(const char* dir_name, std::vector<std::string>& all_inputs);

//void PredictVideo(const std::string& video_path, PaddleDetection::ObjectDetector* det);

void PredictImage_(const std::vector<std::string> all_img_paths,

const int batch_size,

const double threshold,

const bool run_benchmark,

ObjDetector det,

const std::string& output_dir = "output");

private:

};

I also modified the main source file as follows:

// Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.

//

// Licensed under the Apache License, Version 2.0 (the "License");

// you may not use this file except in compliance with the License.

// You may obtain a copy of the License at

//

// http://www.apache.org/licenses/LICENSE-2.0

//

// Unless required by applicable law or agreed to in writing, software

// distributed under the License is distributed on an "AS IS" BASIS,

// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

// See the License for the specific language governing permissions and

// limitations under the License.

#include "Leventure_ModelInfer.h"

#include "include/object_detector.h"

#include <glog/logging.h>

#include <dirent.h>

#include <iostream>

#include <string>

#include <vector>

#include <numeric>

#include <sys/types.h>

#include <sys/stat.h>

#include <math.h>

#include <filesystem>

#ifdef _WIN32

#include <direct.h>

#include <io.h>

#elif LINUX

#include <stdarg.h>

#include <sys/stat.h>

#endif

#include <gflags/gflags.h>

void PrintBenchmarkLog(std::vector<double> det_time, int img_num) {

}

void Lev_ModelInfer::PrintBenchmarkLog(std::vector<double> det_time, int img_num) {

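// Call the free function above; an unqualified call here would resolve to this member and recurse forever.

::PrintBenchmarkLog(det_time, img_num);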

}

std::string Lev_ModelInfer::DirName(const std::string& filepath) {

auto pos = filepath.rfind(OS_PATH_SEP);

if (pos == std::string::npos) {

return "";

}

return filepath.substr(0, pos);

}

bool Lev_ModelInfer::PathExists(const std::string& path) {

#ifdef _WIN32

struct _stat buffer;

return (_stat(path.c_str(), &buffer) == 0);

#else

struct stat buffer;

return (stat(path.c_str(), &buffer) == 0);

#endif // !_WIN32

}

void Lev_ModelInfer::MkDir(const std::string& path) {

if (PathExists(path)) return;

int ret = 0;

#ifdef _WIN32

ret = _mkdir(path.c_str());

#else

ret = mkdir(path.c_str(), 0755);

#endif // !_WIN32

if (ret != 0) {

std::string path_error(path);

path_error += " mkdir failed!";

throw std::runtime_error(path_error);

}

}

void Lev_ModelInfer::MkDirs(const std::string& path) {

if (path.empty()) return;

if (PathExists(path)) return;

MkDirs(DirName(path));

MkDir(path);

}

void Lev_ModelInfer::GetAllFiles(const char* dir_name,

std::vector<std::string>& all_inputs) {

if (NULL == dir_name) {

std::cout << " dir_name is null ! " << std::endl;

return;

}

struct stat s;

stat(dir_name, &s);

if (!S_ISDIR(s.st_mode)) {

std::cout << "dir_name is not a valid directory !" << std::endl;

all_inputs.push_back(dir_name);

return;

}

else {

struct dirent* filename; // return value for readdir()

DIR* dir; // return value for opendir()

dir = opendir(dir_name);

if (NULL == dir) {

std::cout << "Can not open dir " << dir_name << std::endl;

return;

}

std::cout << "Successfully opened the dir !" << std::endl;

while ((filename = readdir(dir)) != NULL) {

if (strcmp(filename->d_name, ".") == 0 ||

strcmp(filename->d_name, "..") == 0)

continue;

all_inputs.push_back(dir_name + std::string("/") +

std::string(filename->d_name));

}

}

}

//void Lev_ModelInfer::PredictVideo(const std::string& video_path,

// PaddleDetection::ObjectDetector* det) {

// // Open video

// cv::VideoCapture capture;

// if (camera_id != -1) {

// capture.open(camera_id);

// }

// else {

// capture.open(video_path.c_str());

// }

// if (!capture.isOpened()) {

// printf("can not open video : %s\n", video_path.c_str());

// return;

// }

//

// // Get Video info : resolution, fps

// int video_width = static_cast<int>(capture.get(CV_CAP_PROP_FRAME_WIDTH));

// int video_height = static_cast<int>(capture.get(CV_CAP_PROP_FRAME_HEIGHT));

// int video_fps = static_cast<int>(capture.get(CV_CAP_PROP_FPS));

//

// // Create VideoWriter for output

// cv::VideoWriter video_out;

// std::string video_out_path = "output.avi";

// video_out.open(video_out_path.c_str(),

// CV_FOURCC('D', 'I', 'V', 'X'),

// video_fps,

// cv::Size(video_width, video_height),

// true);

// std::cout << video_out.isOpened();

// if (!video_out.isOpened()) {

// printf("create video writer failed!\n");

// return;

// }

//

// std::vector<PaddleDetection::ObjectResult> result;

// std::vector<int> bbox_num;

// std::vector<double> det_times;

// auto labels = det->GetLabelList();

// auto colormap = PaddleDetection::GenerateColorMap(labels.size());

// // Capture all frames and do inference

// cv::Mat frame;

// int frame_id = 0;

// bool is_rbox = false;

// int icu = 0;

// while (capture.read(frame)) {

// icu += 1;

// std::cout << icu << "frame" << std::endl;

// if (frame.empty()) {

// break;

// }

// std::vector<cv::Mat> imgs;

// imgs.push_back(frame);

// det->Predict(imgs, 0.5, 0, 1, &result, &bbox_num, &det_times);

// for (const auto& item : result) {

// if (item.rect.size() > 6) {

// is_rbox = true;

// printf("class=%d confidence=%.4f rect=[%d %d %d %d %d %d %d %d]\n",

// item.class_id,

// item.confidence,

// item.rect[0],

// item.rect[1],

// item.rect[2],

// item.rect[3],

// item.rect[4],

// item.rect[5],

// item.rect[6],

// item.rect[7]);

// }

// else {

// printf("class=%d confidence=%.4f rect=[%d %d %d %d]\n",

// item.class_id,

// item.confidence,

// item.rect[0],

// item.rect[1],

// item.rect[2],

// item.rect[3]);

// }

// }

//

// cv::Mat out_im = PaddleDetection::VisualizeResult(

// frame, result, labels, colormap, is_rbox);

//

// video_out.write(out_im);

// frame_id += 1;

// }

// capture.release();

// video_out.release();

//}

void PredictImage(const std::vector<std::string> all_img_paths,

const int batch_size,

const double threshold,

const bool run_benchmark,

PaddleDetection::ObjectDetector* det,

const std::string& output_dir) {

std::vector<double> det_t = { 0, 0, 0 };

int steps = ceil(float(all_img_paths.size()) / batch_size);

printf("total images = %zu, batch_size = %d, total steps = %d\n",

all_img_paths.size(), batch_size, steps);

for (int idx = 0; idx < steps; idx++) {

std::vector<cv::Mat> batch_imgs;

int left_image_cnt = all_img_paths.size() - idx * batch_size;

if (left_image_cnt > batch_size) {

left_image_cnt = batch_size;

}

for (int bs = 0; bs < left_image_cnt; bs++) {

std::string image_file_path = all_img_paths.at(idx * batch_size + bs);

cv::Mat im = cv::imread(image_file_path, 1);

batch_imgs.insert(batch_imgs.end(), im);

}

// Store all detected result

std::vector<PaddleDetection::ObjectResult> result;

std::vector<int> bbox_num;

std::vector<double> det_times;

bool is_rbox = false;

if (run_benchmark) {

det->Predict(batch_imgs, threshold, 10, 10, &result, &bbox_num, &det_times);

}

else {

det->Predict(batch_imgs, threshold, 0, 1, &result, &bbox_num, &det_times);

// get labels and colormap

auto labels = det->GetLabelList();

auto colormap = PaddleDetection::GenerateColorMap(labels.size());

int item_start_idx = 0;

for (int i = 0; i < left_image_cnt; i++) {

std::cout << all_img_paths.at(idx * batch_size + i) << "result" << std::endl;

if (bbox_num[i] <= 1) {

continue;

}

for (int j = 0; j < bbox_num[i]; j++) {

PaddleDetection::ObjectResult item = result[item_start_idx + j];

if (item.rect.size() > 6) {

is_rbox = true;

printf("class=%d confidence=%.4f rect=[%d %d %d %d %d %d %d %d]\n",

item.class_id,

item.confidence,

item.rect[0],

item.rect[1],

item.rect[2],

item.rect[3],

item.rect[4],

item.rect[5],

item.rect[6],

item.rect[7]);

}

else {

printf("class=%d confidence=%.4f rect=[%d %d %d %d]\n",

item.class_id,

item.confidence,

item.rect[0],

item.rect[1],

item.rect[2],

item.rect[3]);

}

}

item_start_idx = item_start_idx + bbox_num[i];

}

// Visualization result

int bbox_idx = 0;

for (int bs = 0; bs < batch_imgs.size(); bs++) {

if (bbox_num[bs] <= 1) {

continue;

}

cv::Mat im = batch_imgs[bs];

std::vector<PaddleDetection::ObjectResult> im_result;

for (int k = 0; k < bbox_num[bs]; k++) {

im_result.push_back(result[bbox_idx + k]);

}

bbox_idx += bbox_num[bs];

cv::Mat vis_img = PaddleDetection::VisualizeResult(

im, im_result, labels, colormap, is_rbox);

std::vector<int> compression_params;

compression_params.push_back(cv::IMWRITE_JPEG_QUALITY);

compression_params.push_back(95);

std::string output_path = output_dir + "\\";

std::string image_file_path = all_img_paths.at(idx * batch_size + bs);

output_path += std::filesystem::path(image_file_path).filename().string();

cv::imwrite(output_path, vis_img, compression_params);

printf("Visualized output saved as %s\n", output_path.c_str());

//std::string output_path(output_dir);

//if (output_dir.rfind(OS_PATH_SEP) != output_dir.size() - 1) {

// output_path += OS_PATH_SEP;

//}

//std::string image_file_path = all_img_paths.at(idx * batch_size + bs);

//output_path += image_file_path.substr(image_file_path.find_last_of('/') + 1);

//cv::imwrite(output_path, vis_img, compression_params);

//printf("Visualized output saved as %s\n", output_path.c_str());

}

}

det_t[0] += det_times[0];

det_t[1] += det_times[1];

det_t[2] += det_times[2];

}

//PrintBenchmarkLog(det_t, all_img_paths.size());

}

void Lev_ModelInfer::PredictImage_(const std::vector<std::string> all_img_paths,

const int batch_size,

const double threshold,

const bool run_benchmark,

ObjDetector det,

const std::string& output_dir) {

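// Construct the detector on the stack so it is released when this call returns

// (the earlier heap allocation was never deleted). det.gpu_id is passed explicitly,

// assuming the upstream constructor order (..., batch_size, gpu_id, trt_min_shape, ...).

PaddleDetection::ObjectDetector model(

det.model_dir, det.device, det.use_mkldnn, det.cpu_threads, det.run_mode, det.batch_size, det.gpu_id,

det.trt_min_shape, det.trt_max_shape, det.trt_opt_shape, det.trt_calib_mode

);

PredictImage(all_img_paths, batch_size, threshold, run_benchmark, &model, output_dir);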

}

//std::string model_dir;

//std::string image_file;

//std::string video_file;

//std::string image_dir;

//int batch_size = 1;

//bool use_gpu = true;

//int camera_id = -1;

//double threshold = 0.1;

//std::string output_dir = "output";

//std::string run_mode = "fluid";

//int gpu_id = 0;

//bool run_benchmark = false;

//bool use_mkldnn = false;

//double cpu_threads = 0.9;

//bool use_dynamic_shape = false;

//int trt_min_shape = 1;

//int trt_max_shape = 1280;

//int trt_opt_shape = 640;

//bool trt_calib_mode = false;

//

//int main_test() {

// model_dir = "D:/projects/PaddleDetection/deploy/cpp/out/Release/models";

// image_file = "D:/projects/PaddleDetection/deploy/cpp/out/Release/images/1.jpg";

// //video_file = "bb.mp4";

// //image_dir = "";

// // Parsing command-line

// //google::ParseCommandLineFlags(&argc, &argv, true);

//

// if (model_dir.empty()

// || (image_file.empty() && image_dir.empty() && video_file.empty())) {

// std::cout << "Usage: ./main --model_dir=/PATH/TO/INFERENCE_MODEL/ "

// << "--image_file=/PATH/TO/INPUT/IMAGE/" << std::endl;

// }

// if (!(run_mode == "fluid" || run_mode == "trt_fp32"

// || run_mode == "trt_fp16" || run_mode == "trt_int8")) {

// std::cout << "run_mode should be 'fluid', 'trt_fp32', 'trt_fp16' or 'trt_int8'.";

// return -1;

// }

// // Load model and create a object detector

// PaddleDetection::ObjectDetector det(model_dir, use_gpu, use_mkldnn,

// threshold, run_mode, batch_size, gpu_id, use_dynamic_shape,

// trt_min_shape, trt_max_shape, trt_opt_shape, trt_calib_mode);

// // Do inference on input video or image

// MyClass predictvideo;

// if (!video_file.empty() || camera_id != -1) {

// predictvideo.PredictVideo(video_file, &det);

// }

// else if (!image_file.empty() || !image_dir.empty()) {

// if (!predictvideo.PathExists(output_dir)) {

// predictvideo.MkDirs(output_dir);

// }

// std::vector<std::string> all_imgs;

// if (!image_file.empty()) {

// all_imgs.push_back(image_file);

// if (batch_size > 1) {

// std::cout << "batch_size should be 1, when image_file is not None" << std::endl;

// batch_size = 1;

// }

// }

// else {

// predictvideo.GetAllFiles((char*)image_dir.c_str(), all_imgs);

// }

// predictvideo.PredictImage(all_imgs, batch_size, threshold, run_benchmark, &det, output_dir);

// }

// return 0;

//}

int main(int argc, char** argv) {

}

See my GitHub repository for the full details.
