1 Why enable dynamic resource allocation

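When a user submits a Spark application to YARN, the number of executors can be set explicitly with the --num-executors option of spark-submit. The ApplicationMaster then requests resources for those executors, and each executor runs as a container on YARN. The Spark scheduler assigns tasks to the executors according to a suitable policy. Once all tasks have finished, the executors are killed and the application ends. While the job is running, every executor holds on to its resources whether or not it has been given any tasks. This clearly wastes resources when the workload is small but a large number of executors has been requested explicitly.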

2 Enabling dynamic resource allocation

2.1 spark-defaults.conf configuration

# Enable the External Shuffle Service
spark.shuffle.service.enabled true

# Shuffle Service port; must match the value in yarn-site
spark.shuffle.service.port 7337

# Enable dynamic resource allocation
spark.dynamicAllocation.enabled true

# Minimum number of executors allocated to each application
spark.dynamicAllocation.minExecutors 1

# Maximum number of executors allocated concurrently to each application
spark.dynamicAllocation.maxExecutors 30

spark.dynamicAllocation.schedulerBacklogTimeout 1s

spark.dynamicAllocation.sustainedSchedulerBacklogTimeout 5s

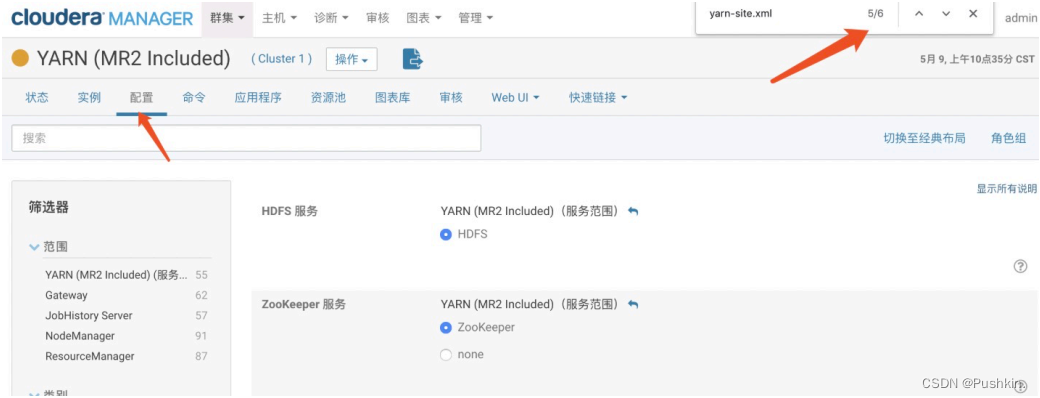
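The two backlog timeouts above control how quickly executors are requested. Spark issues the first request once tasks have been pending for schedulerBacklogTimeout, repeats the request every sustainedSchedulerBacklogTimeout while the backlog persists, and grows the number of executors added per round exponentially (1, 2, 4, 8, ...). The sketch below is a simplified model of that ramp-up, not Spark's actual code: the function name simulate_ramp_up is made up for illustration, and it assumes the application starts at minExecutors and that tasks stay backlogged the whole time (real Spark also limits the target to what the pending tasks actually need).

```shell
#!/bin/sh
# Simplified model of the dynamic-allocation ramp-up (illustrative only).
# Arguments: minExecutors maxExecutors schedulerBacklogTimeout sustainedSchedulerBacklogTimeout
# (timeouts in whole seconds). Assumes a permanent task backlog.
simulate_ramp_up() {
  min=$1; max=$2; backlog=$3; sustained=$4
  total=$min   # executor target so far (assumed to start at minExecutors)
  add=1        # number of executors added in the next round
  t=$backlog   # the first round fires after schedulerBacklogTimeout
  while [ "$total" -lt "$max" ]; do
    total=$((total + add))
    if [ "$total" -gt "$max" ]; then
      total=$max                 # never exceed maxExecutors
    fi
    echo "t=${t}s target=${total}"
    add=$((add * 2))             # the increment doubles each round
    t=$((t + sustained))         # later rounds fire every sustained timeout
  done
}

# Values taken from the configuration in this section
simulate_ramp_up 1 30 1 5
```

Under these assumptions, the model reaches the cap of 30 executors in the fifth round, 21 seconds after the backlog begins.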

2.2 yarn-site.xml configuration

Open the YARN configuration page and search for yarn-site.xml.

Find the NodeManager Advanced Configuration Snippet (Safety Valve) for yarn-site.xml and add the following:

<property>

<name>yarn.nodemanager.aux-services</name>

<value>spark_shuffle,mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.spark_shuffle.class</name>

<value>org.apache.spark.network.yarn.YarnShuffleService</value>

</property>

<property>

<name>spark.shuffle.service.port</name>

<value>7337</value>

</property>

2.3 Copying the spark_shuffle jar

Copy the spark_shuffle jar into the YARN lib directory (run this on every Hadoop node).

# First move the original shuffle jar out of the way (it is kept as a .bak backup)

sudo mv /opt/cloudera/parcels/CDH/lib/hadoop-yarn/lib/spark-2.4.0-cdh6.3.2-yarn-shuffle.jar /opt/cloudera/parcels/CDH/lib/hadoop-yarn/lib/spark-2.4.0-cdh6.3.2-yarn-shuffle.jar.bak

# Replace it with the Spark 3 shuffle jar

sudo cp /opt/cloudera/parcels/CDH/lib/spark3/yarn/spark-3.3.1-yarn-shuffle.jar /opt/cloudera/parcels/CDH/lib/hadoop-yarn/lib

sudo chown root:root /opt/cloudera/parcels/CDH/lib/hadoop-yarn/lib/spark-3.3.1-yarn-shuffle.jar

sudo chmod 777 /opt/cloudera/parcels/CDH/lib/hadoop-yarn/lib/spark-3.3.1-yarn-shuffle.jar
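Because these steps have to be repeated on every Hadoop node, the backup and copy can be wrapped in a small helper. The following is a minimal sketch and not part of the original procedure: the function name replace_shuffle_jar and its three parameters are hypothetical, and it assumes the caller has enough privileges (for example via sudo) to write to the target directory. Ownership and permissions still have to be set separately.

```shell
#!/bin/sh
# Hypothetical helper: back up the old shuffle jar (if present) and copy in the new one.
# Arguments: <old jar file name> <path to new jar> <destination lib directory>
replace_shuffle_jar() {
  old_jar=$1; new_jar=$2; dest_dir=$3
  # Keep the old jar as a .bak file instead of deleting it; skip if already moved
  if [ -f "$dest_dir/$old_jar" ]; then
    mv "$dest_dir/$old_jar" "$dest_dir/$old_jar.bak" || return 1
  fi
  # Copy the new shuffle jar into the destination directory
  cp "$new_jar" "$dest_dir/" || return 1
}

# Example call with the paths used in this section (run as root):
# replace_shuffle_jar spark-2.4.0-cdh6.3.2-yarn-shuffle.jar \
#   /opt/cloudera/parcels/CDH/lib/spark3/yarn/spark-3.3.1-yarn-shuffle.jar \
#   /opt/cloudera/parcels/CDH/lib/hadoop-yarn/lib
```

Skipping the backup when the old jar is already gone makes the helper safe to run a second time on the same node.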

Note: after the jar is replaced, Spark 3 and CDH 6.3.2 conflict with each other...

Then restart YARN.