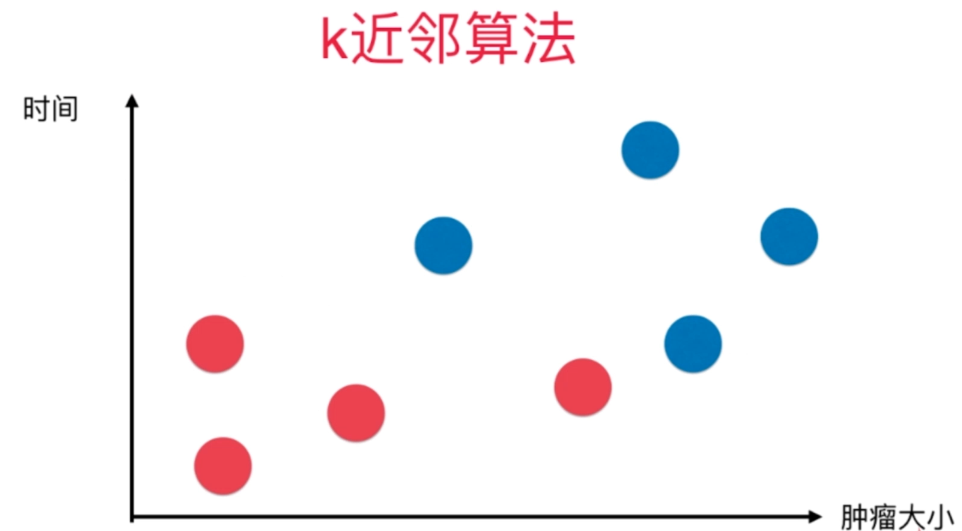

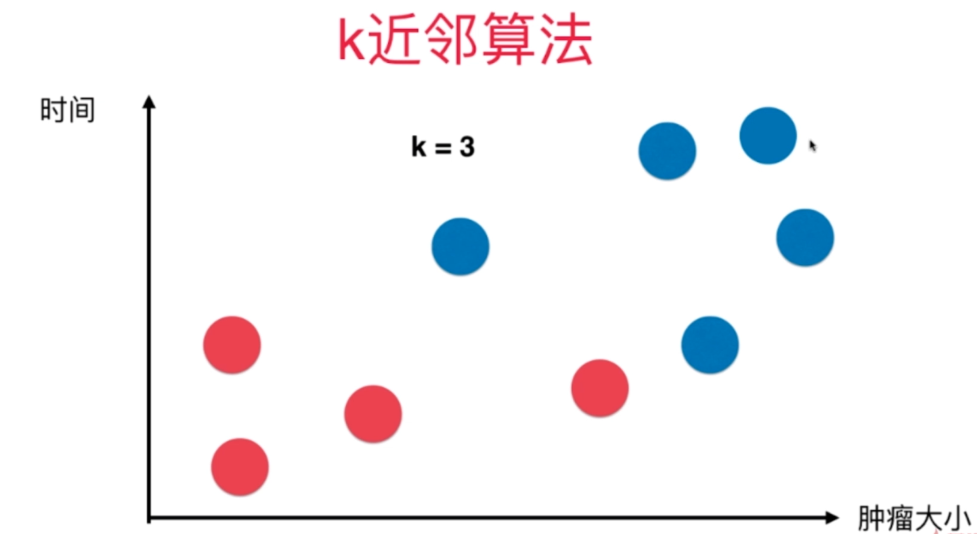

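kNN: the k-nearest neighbors algorithm

k-Nearest Neighbors

- The idea is extremely simple

- It requires very little mathematical background

- It works well in practice

- It can illustrate many of the detailed issues that come up when using machine learning algorithms

- It gives a fairly complete picture of the machine learning workflow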

Create a simple test case

import numpy as np

import matplotlib.pyplot as plt

raw_data_X = [[3.393533211, 2.331273381],

[3.110073483, 1.781539638],

[1.343808831, 3.368360954],

[3.582294042, 4.679179110],

[2.280362439, 2.866990263],

[7.423436942, 4.696522875],

[5.745051997, 3.533989803],

[9.172168622, 2.511101045],

[7.792783481, 3.424088941],

[7.939820817, 0.791637231]

]

raw_data_y = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

X_train = np.array(raw_data_X)

y_train = np.array(raw_data_y)

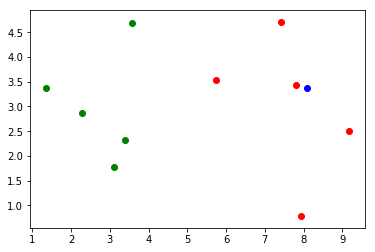

plt.scatter(X_train[y_train==0,0], X_train[y_train==0,1], color='g')

plt.scatter(X_train[y_train==1,0], X_train[y_train==1,1], color='r')

plt.show()

Prediction

x = np.array([8.093607318, 3.365731514])

plt.scatter(X_train[y_train==0,0], X_train[y_train==0,1], color='g')

plt.scatter(X_train[y_train==1,0], X_train[y_train==1,1], color='r')

plt.scatter(x[0], x[1], color='b')

plt.show()

The kNN procedure

from math import sqrt

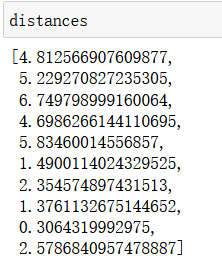

# Compute the Euclidean distance from x to every training point

distances = []

for x_train in X_train:

    d = sqrt(np.sum((x_train - x)**2))

    distances.append(d)

# Equivalent list comprehension

distances = [sqrt(np.sum((x_train - x)**2))

             for x_train in X_train]

Sort the array and return the indices

np.argsort(distances)

nearest = np.argsort(distances)

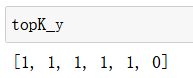

Labels (y values) of the k nearest points

k = 6

topK_y = [y_train[neighbor] for neighbor in nearest[:k]]

topK_y

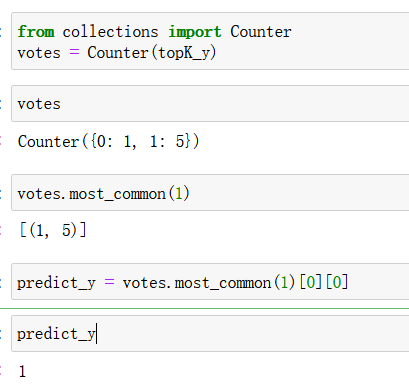

Count the votes

from collections import Counter

votes = Counter(topK_y)

votes.most_common(1)

predict_y = votes.most_common(1)[0][0]

Wrap it in a function

import numpy as np

from math import sqrt

from collections import Counter

def kNN_classify(k, X_train, y_train, x):

    assert 1 <= k <= X_train.shape[0], "k must be valid"

    assert X_train.shape[0] == y_train.shape[0], \

        "the size of X_train must be equal to the size of y_train"

    assert X_train.shape[1] == x.shape[0], \

        "the feature number of x must be equal to X_train"

    # Euclidean distance from x to every training point

    distances = [sqrt(np.sum((x_train - x)**2)) for x_train in X_train]

    # Indices of the training points, sorted from nearest to farthest

    nearest = np.argsort(distances)

    topK_y = [y_train[i] for i in nearest[:k]]

    # Majority vote among the k nearest labels

    votes = Counter(topK_y)

    return votes.most_common(1)[0][0]
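The loop over training points can also be written with NumPy broadcasting. The sketch below is one possible variant, not part of the original notes: the name `knn_classify_vectorized` is hypothetical, and it reuses the sample data and the query point from earlier in these notes.

```python
import numpy as np
from collections import Counter


def knn_classify_vectorized(k, X_train, y_train, x):
    """Hypothetical vectorized variant of kNN_classify (same voting rule)."""
    assert 1 <= k <= X_train.shape[0], "k must be valid"
    # Broadcasting subtracts x from every row; the norm is taken per row.
    distances = np.linalg.norm(X_train - x, axis=1)
    # Indices of the k nearest training points.
    nearest = np.argsort(distances)[:k]
    # Majority vote among the labels of those points.
    votes = Counter(y_train[nearest].tolist())
    return votes.most_common(1)[0][0]


X_train = np.array([
    [3.393533211, 2.331273381],
    [3.110073483, 1.781539638],
    [1.343808831, 3.368360954],
    [3.582294042, 4.679179110],
    [2.280362439, 2.866990263],
    [7.423436942, 4.696522875],
    [5.745051997, 3.533989803],
    [9.172168622, 2.511101045],
    [7.792783481, 3.424088941],
    [7.939820817, 0.791637231],
])
y_train = np.array([0, 0, 0, 0, 0, 1, 1, 1, 1, 1])
x = np.array([8.093607318, 3.365731514])

predicted = knn_classify_vectorized(6, X_train, y_train, x)
print(predicted)  # five of the six nearest points carry label 1
```

With k = 6, the six nearest neighbors are the five points of class 1 plus one point of class 0, so the vote goes to class 1.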

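- kNN is a rather special algorithm: it can be regarded as an algorithm without a model

- To stay consistent with other algorithms, the training dataset itself can be regarded as the model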

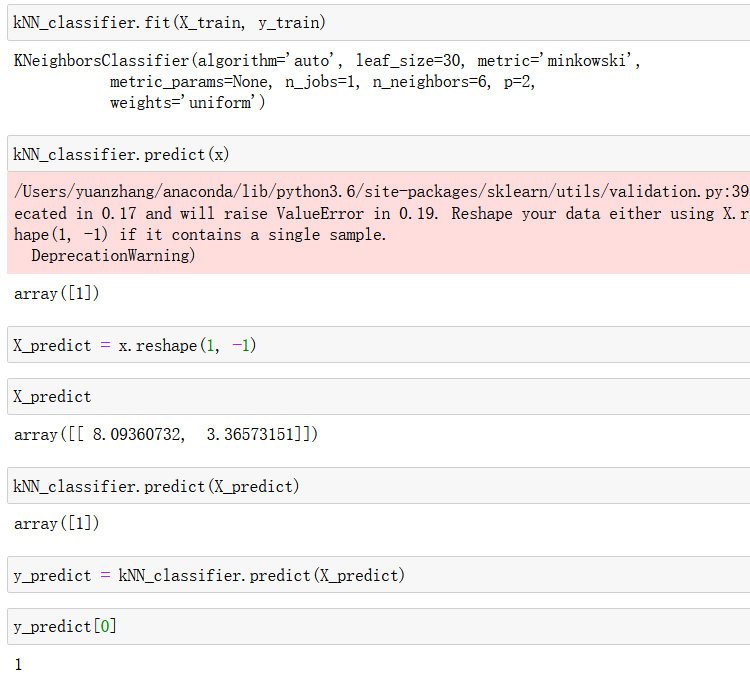

Using kNN from scikit-learn

raw_data_X = [[3.393533211, 2.331273381],

[3.110073483, 1.781539638],

[1.343808831, 3.368360954],

[3.582294042, 4.679179110],

[2.280362439, 2.866990263],

[7.423436942, 4.696522875],

[5.745051997, 3.533989803],

[9.172168622, 2.511101045],

[7.792783481, 3.424088941],

[7.939820817, 0.791637231]

]

raw_data_y = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

X_train = np.array(raw_data_X)

y_train = np.array(raw_data_y)

x = np.array([8.093607318, 3.365731514])

from sklearn.neighbors import KNeighborsClassifier

kNN_classifier = KNeighborsClassifier(n_neighbors=6)

kNN_classifier.fit(X_train, y_train)

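# Predict. Recent scikit-learn versions raise an error for a 1-D input, because predict expects a 2-D array with one row per sample

# kNN_classifier.predict(x)

# Reshape x into a matrix with a single row

X_predict = x.reshape(1, -1)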

kNN_classifier.predict(X_predict)

y_predict = kNN_classifier.predict(X_predict)

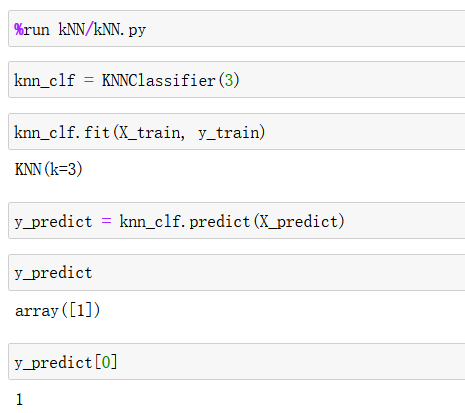

An improved, class-based kNN

import numpy as np

from math import sqrt

from collections import Counter

class KNNClassifier:

    def __init__(self, k):

        """Initialize the kNN classifier"""

        assert k >= 1, "k must be valid"

        self.k = k

        self._X_train = None

        self._y_train = None

    def fit(self, X_train, y_train):

        """Train the kNN classifier on the training set X_train and y_train"""

        assert X_train.shape[0] == y_train.shape[0], \

            "the size of X_train must be equal to the size of y_train"

        assert self.k <= X_train.shape[0], \

            "the size of X_train must be at least k."

        self._X_train = X_train

        self._y_train = y_train

        return self

    def predict(self, X_predict):

        """Given the dataset X_predict, return the vector of predicted labels"""

        assert self._X_train is not None and self._y_train is not None, \

            "must fit before predict!"

        assert X_predict.shape[1] == self._X_train.shape[1], \

            "the feature number of X_predict must be equal to X_train"

        y_predict = [self._predict(x) for x in X_predict]

        return np.array(y_predict)

    def _predict(self, x):

        """Given a single sample x, return its predicted label"""

        assert x.shape[0] == self._X_train.shape[1], \

            "the feature number of x must be equal to X_train"

        distances = [sqrt(np.sum((x_train - x) ** 2))

                     for x_train in self._X_train]

        nearest = np.argsort(distances)

        topK_y = [self._y_train[i] for i in nearest[:self.k]]

        votes = Counter(topK_y)

        return votes.most_common(1)[0][0]

    def __repr__(self):

        return "KNN(k=%d)" % self.k

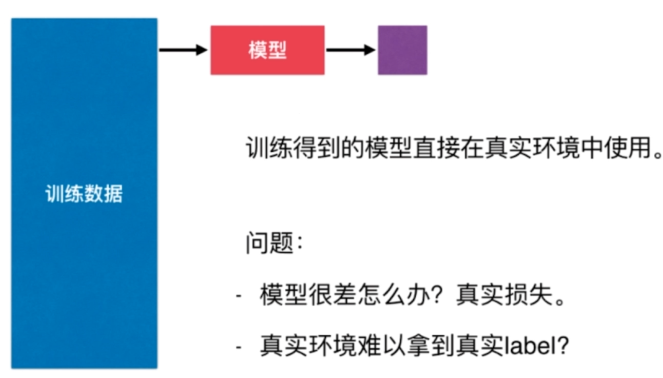

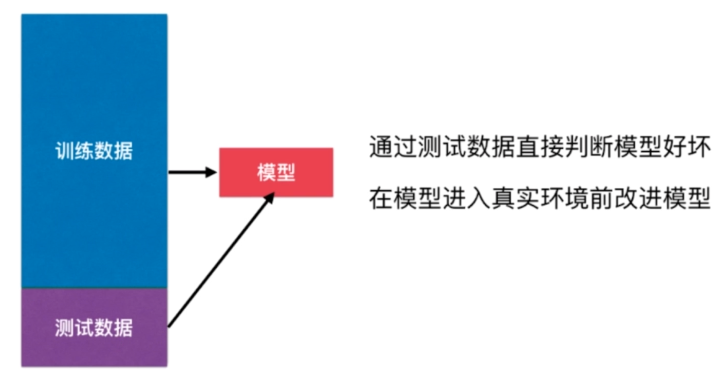

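Evaluating a machine learning algorithm's performance: splitting the data into training and test sets

Set aside part of the data as the test set

train test split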

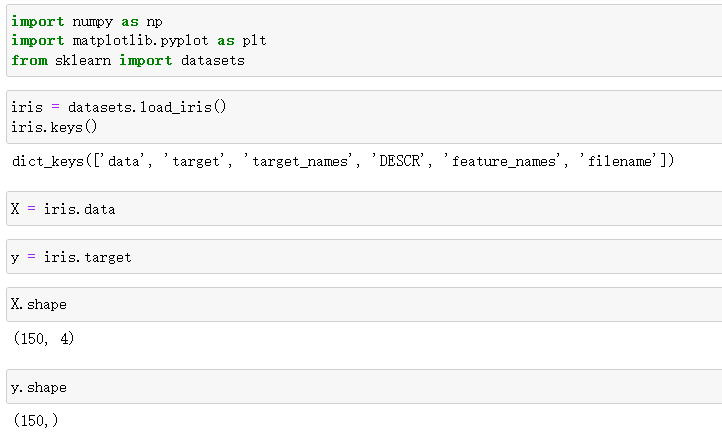

Load the data

import numpy as np

import matplotlib.pyplot as plt

from sklearn import datasets

iris = datasets.load_iris()

iris.keys()

X = iris.data

y = iris.target

X.shape

y.shape

**Split off part of the data for training and the rest for testing**

Randomly permute the indices

shuffled_indexes = np.random.permutation(len(X))

shuffled_indexes

Set the proportion of test data

Get the test data

test_ratio = 0.2

test_size = int(len(X) * test_ratio)

test_indexes = shuffled_indexes[:test_size]

train_indexes = shuffled_indexes[test_size:]

X_train = X[train_indexes]

y_train = y[train_indexes]

X_test = X[test_indexes]

y_test = y[test_indexes]

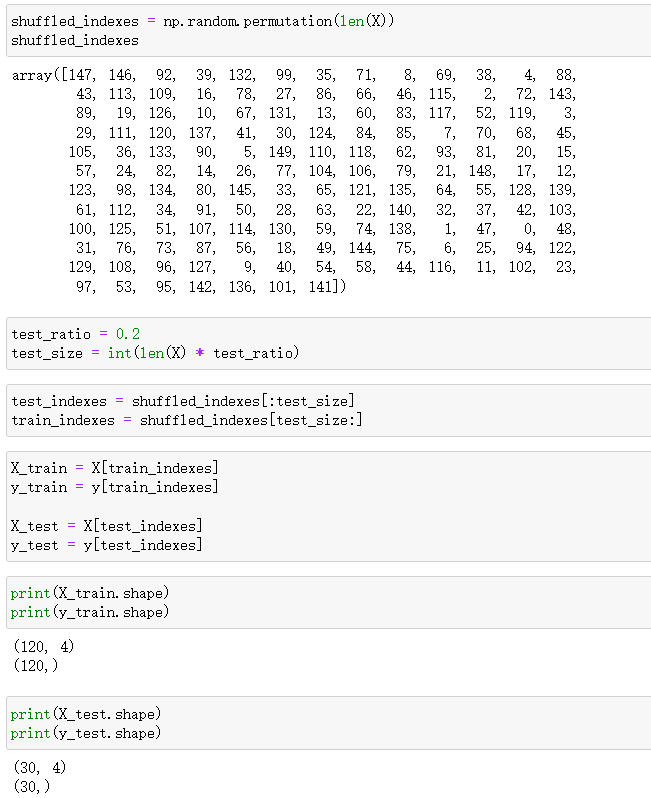

Wrap it in a function

import numpy as np

def train_test_split(X, y, test_ratio=0.2, seed=None):

    """Split X and y into X_train, X_test, y_train, y_test according to test_ratio"""

    assert X.shape[0] == y.shape[0], \

        "the size of X must be equal to the size of y"

    assert 0.0 <= test_ratio <= 1.0, \

        "test_ratio must be valid"

    # Compare against None so that seed=0 is also honored

    if seed is not None:

        np.random.seed(seed)

    shuffled_indexes = np.random.permutation(len(X))

    test_size = int(len(X) * test_ratio)

    test_indexes = shuffled_indexes[:test_size]

    train_indexes = shuffled_indexes[test_size:]

    X_train = X[train_indexes]

    y_train = y[train_indexes]

    X_test = X[test_indexes]

    y_test = y[test_indexes]

    return X_train, X_test, y_train, y_test

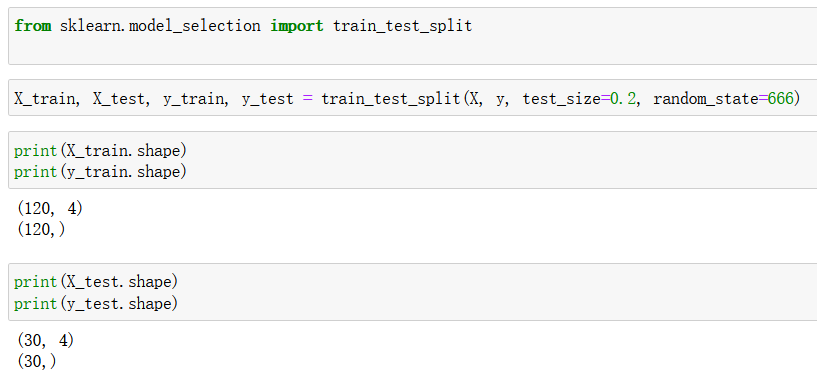

train_test_split in sklearn
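A minimal sketch of the scikit-learn version, for comparison with the hand-written function. Note that scikit-learn names the parameters `test_size` and `random_state` rather than `test_ratio` and `seed`. The small synthetic arrays here are an assumption made only to keep the example self-contained.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Small synthetic dataset: 20 samples, 2 features, 2 classes.
X = np.arange(40, dtype=float).reshape(20, 2)
y = np.array([0, 1] * 10)

# test_size=0.2 keeps 20% of the samples for testing;
# random_state fixes the shuffle so the split is repeatable.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=666
)

print(X_train.shape, X_test.shape)  # (16, 2) (4, 2)
```

The return order is X_train, X_test, y_train, y_test, the same as in the function written above.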

Classification accuracy

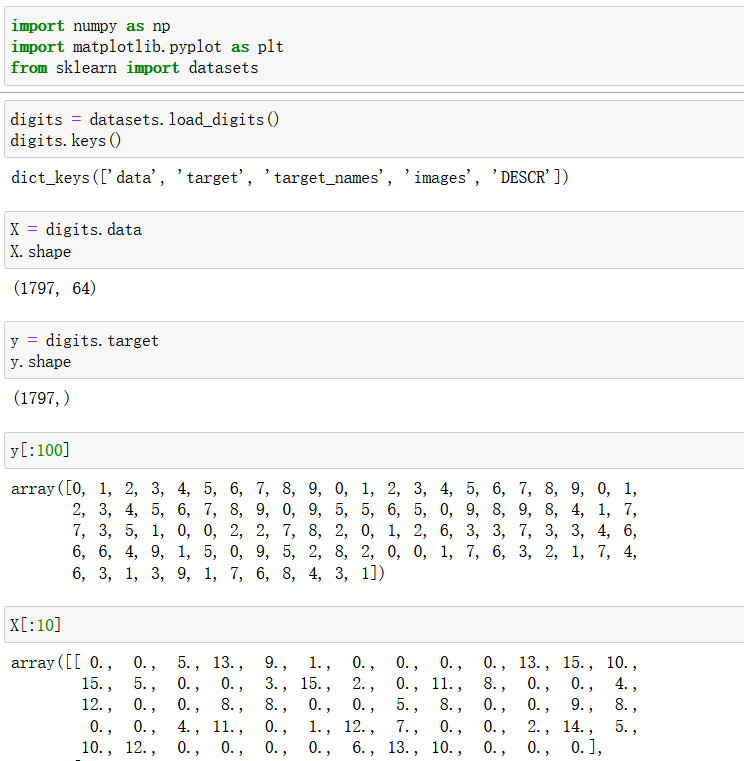

Load the handwritten digits dataset

import numpy as np

import matplotlib.pyplot as plt

from sklearn import datasets

digits = datasets.load_digits()

digits.keys()

X = digits.data

X.shape

y = digits.target

y.shape

Inspect the data

some_digit = X[666]

some_digit_image = some_digit.reshape(8, 8)

import matplotlib

import matplotlib.pyplot as plt

plt.imshow(some_digit_image, cmap = matplotlib.cm.binary)

plt.show()

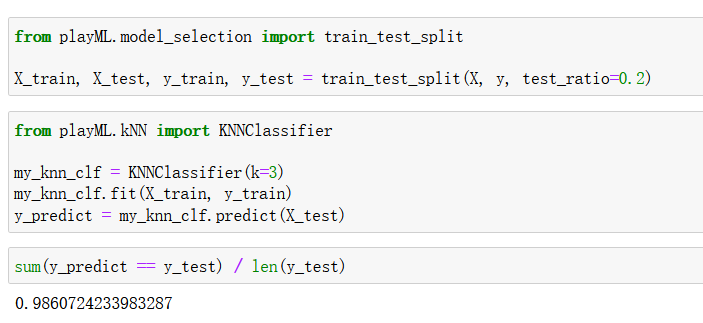

Prediction accuracy

from playML.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_ratio=0.2)

from playML.kNN import KNNClassifier

my_knn_clf = KNNClassifier(k=3)

my_knn_clf.fit(X_train, y_train)

y_predict = my_knn_clf.predict(X_test)

sum(y_predict == y_test) / len(y_test)

Wrapping our own accuracy_score

import numpy as np

def accuracy_score(y_true, y_predict):

    '''Compute the accuracy of y_predict with respect to y_true'''

    assert y_true.shape[0] == y_predict.shape[0], \

        "the size of y_true must be equal to the size of y_predict"

    return sum(y_true == y_predict) / len(y_true)

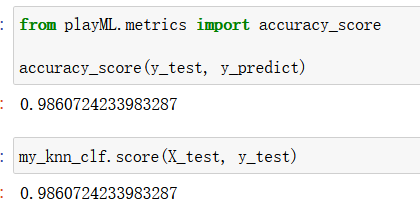

accuracy_score in scikit-learn

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=666)

from sklearn.neighbors import KNeighborsClassifier

knn_clf = KNeighborsClassifier(n_neighbors=3)

knn_clf.fit(X_train, y_train)

y_predict = knn_clf.predict(X_test)

from sklearn.metrics import accuracy_score

accuracy_score(y_test, y_predict)

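Hyperparameters and model parameters

- Hyperparameter: a parameter that must be decided before the algorithm runs

- Model parameter: a parameter learned while the algorithm runs

kNN has no model parameters

The k in kNN is a typical hyperparameter

Finding good hyperparameters

- Domain knowledge

- Empirical values

- Experimental search

Load the handwritten digits dataset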

import numpy as np

from sklearn import datasets

digits = datasets.load_digits()

X = digits.data

y = digits.target

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=666)

from sklearn.neighbors import KNeighborsClassifier

knn_clf = KNeighborsClassifier(n_neighbors=3)

knn_clf.fit(X_train, y_train)

knn_clf.score(X_test, y_test)

Finding the best k

best_score = 0.0

best_k = -1

for k in range(1, 11):

    knn_clf = KNeighborsClassifier(n_neighbors=k)

    knn_clf.fit(X_train, y_train)

    score = knn_clf.score(X_test, y_test)

    if score > best_score:

        best_k = k

        best_score = score

print("best_k =", best_k)

print("best_score =", best_score)

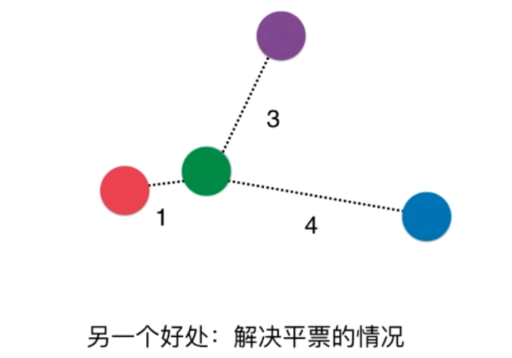

Deciding whether to weight votes by distance

best_score = 0.0

best_k = -1

best_method = ""

for method in ["uniform", "distance"]:

    for k in range(1, 11):

        knn_clf = KNeighborsClassifier(n_neighbors=k, weights=method)

        knn_clf.fit(X_train, y_train)

        score = knn_clf.score(X_test, y_test)

        if score > best_score:

            best_k = k

            best_score = score

            best_method = method

print("best_method =", best_method)

print("best_k =", best_k)

print("best_score =", best_score)

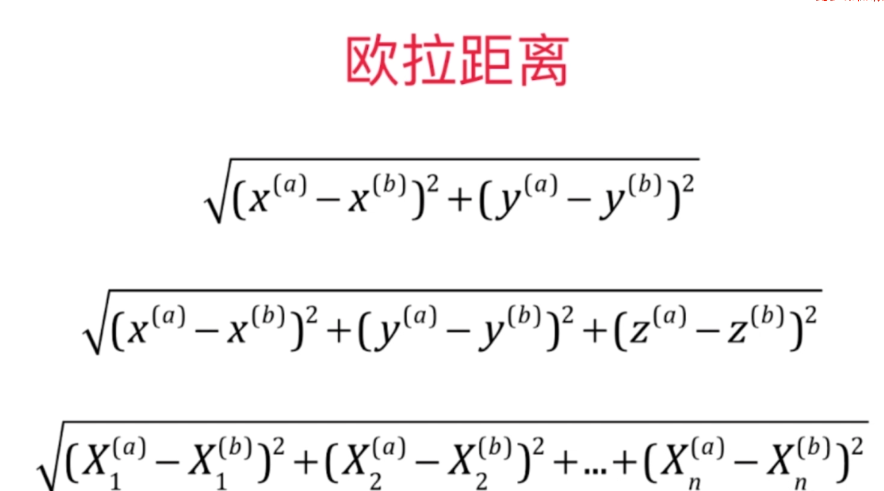
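With weights="distance", scikit-learn weights each neighbor by the inverse of its distance, so a close neighbor can outvote several distant ones. The sketch below illustrates that idea on made-up numbers; the helper `weighted_vote`, the labels, and the distances are all hypothetical.

```python
from collections import Counter, defaultdict


def weighted_vote(labels, distances):
    """Hypothetical helper: each neighbor votes with weight 1 / distance."""
    scores = defaultdict(float)
    for label, d in zip(labels, distances):
        # Assumes d > 0, i.e. no neighbor coincides with the query point.
        scores[label] += 1.0 / d
    return max(scores, key=scores.get)


# Three nearest neighbors: one close "red", two farther "blue".
labels = ["red", "blue", "blue"]
distances = [1.0, 3.0, 4.0]

uniform_winner = Counter(labels).most_common(1)[0][0]
weighted_winner = weighted_vote(labels, distances)

print(uniform_winner)   # blue: 2 votes against 1
print(weighted_winner)  # red: 1/1 = 1.0 against 1/3 + 1/4
```

Distance weighting also makes ties between classes far less likely than with plain counting.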

Minkowski distance

best_score = 0.0

best_k = -1

best_p = -1

for k in range(1, 11):

    for p in range(1, 6):

        knn_clf = KNeighborsClassifier(n_neighbors=k, weights="distance", p=p)

        knn_clf.fit(X_train, y_train)

        score = knn_clf.score(X_test, y_test)

        if score > best_score:

            best_k = k

            best_p = p

            best_score = score

print("best_k =", best_k)

print("best_p =", best_p)

print("best_score =", best_score)

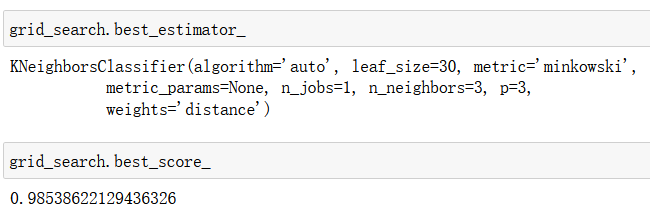
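The parameter p searched above is the order of the Minkowski distance: p = 1 gives the Manhattan distance and p = 2 gives the Euclidean distance. A minimal sketch of the formula, where the helper name `minkowski_distance` and the sample points are assumptions for illustration:

```python
import numpy as np


def minkowski_distance(a, b, p):
    """Hypothetical helper: Minkowski distance of order p between a and b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    # (sum_i |a_i - b_i|^p)^(1/p)
    return float(np.sum(np.abs(a - b) ** p) ** (1.0 / p))


a, b = [0.0, 0.0], [3.0, 4.0]

manhattan = minkowski_distance(a, b, 1)  # |3| + |4|
euclidean = minkowski_distance(a, b, 2)  # sqrt(3^2 + 4^2)

print(manhattan, euclidean)
```

Because p changes how distances are measured, it is a hyperparameter just like k.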

Grid Search

Define the search parameters

param_grid = [

{

'weights': ['uniform'],

'n_neighbors': [i for i in range(1, 11)]

},

{

'weights': ['distance'],

'n_neighbors': [i for i in range(1, 11)],

'p': [i for i in range(1, 6)]

}

]

knn_clf = KNeighborsClassifier()

from sklearn.model_selection import GridSearchCV

grid_search = GridSearchCV(knn_clf, param_grid)

grid_search.fit(X_train, y_train)

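Inspect the best classifier's parameters and its score (best_score_ is the mean cross-validated score, not the accuracy on the test set)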

grid_search.best_estimator_

grid_search.best_score_

More distance definitions

- Cosine Similarity (in vector space)

- Adjusted Cosine Similarity

- Pearson Correlation Coefficient

- Jaccard Similarity Coefficient
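As a rough illustration of two of the measures listed above, the sketch below computes cosine similarity for vectors and the Jaccard coefficient for sets. The function names and inputs are hypothetical; both are similarity scores, so larger values mean "closer", the opposite of a distance.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def jaccard_coefficient(s, t):
    """Size of the intersection divided by size of the union of two sets."""
    s, t = set(s), set(t)
    return len(s & t) / len(s | t)


same_direction = cosine_similarity([1, 2], [2, 4])  # parallel vectors
orthogonal = cosine_similarity([1, 0], [0, 1])      # perpendicular vectors
overlap = jaccard_coefficient({1, 2, 3}, {2, 3, 4})  # 2 shared out of 4 total

print(same_direction, orthogonal, overlap)
```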

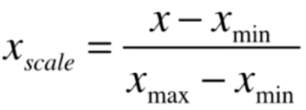

Feature Scaling

Map all the data onto the same scale

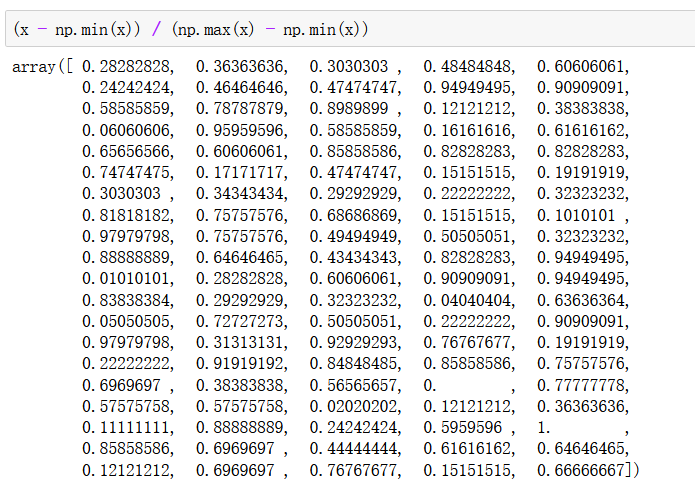

Min-max normalization: map all the data into the range 0 to 1

Suitable when the distribution has clear bounds; strongly affected by outliers

x = np.random.randint(0, 100, 100)

(x - np.min(x)) / (np.max(x) - np.min(x))

X = np.random.randint(0, 100, (50, 2))

X = np.array(X, dtype=float)

X[:,0] = (X[:,0] - np.min(X[:,0])) / (np.max(X[:,0]) - np.min(X[:,0]))

X[:,1] = (X[:,1] - np.min(X[:,1])) / (np.max(X[:,1]) - np.min(X[:,1]))

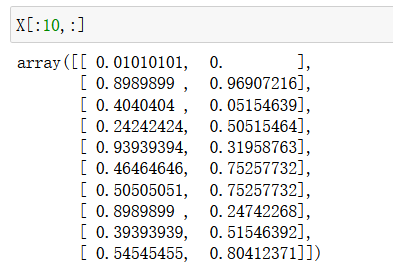

X[:10,:]

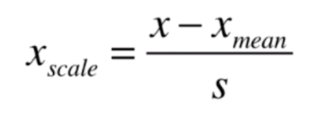

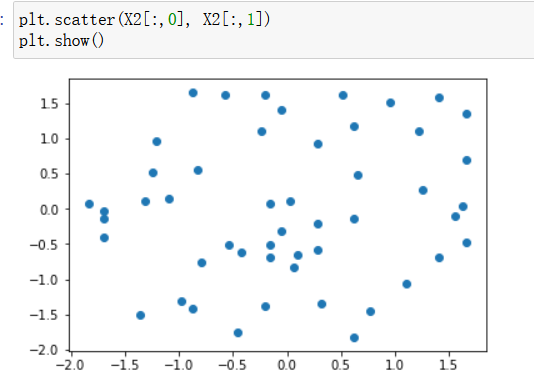

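Mean-variance standardization

Suitable when the data distribution has no clear bounds and may contain extreme values

Standardization: rescale all the data to a distribution with mean 0 and variance 1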

X2 = np.random.randint(0, 100, (50, 2))

X2 = np.array(X2, dtype=float)

X2[:,0] = (X2[:,0] - np.mean(X2[:,0])) / np.std(X2[:,0])

X2[:,1] = (X2[:,1] - np.mean(X2[:,1])) / np.std(X2[:,1])
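The per-column statements used for both kinds of scaling can be written once for all columns by reducing along axis=0. This is only a sketch: the variable names are hypothetical, and the random generator with a fixed seed is an assumption made so that the result is repeatable.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed only so the sketch is repeatable
X = rng.integers(0, 100, size=(50, 2)).astype(float)

# Min-max normalization: every column is mapped into [0, 1].
X_minmax = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))

# Standardization: every column ends up with mean 0 and standard deviation 1.
X_standard = (X - X.mean(axis=0)) / X.std(axis=0)

print(X_minmax.min(axis=0), X_minmax.max(axis=0))
print(X_standard.mean(axis=0), X_standard.std(axis=0))
```

Both expressions assume that no column is constant; otherwise the denominator would be zero.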

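Scaling the test dataset

The test data simulates the real environment

- In a real environment it is often impossible to obtain the mean and variance of all the test data

- Scaling the data is itself part of the algorithm

So the test data is scaled with the mean and standard deviation of the training data:

(x_test - mean_train) / std_train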

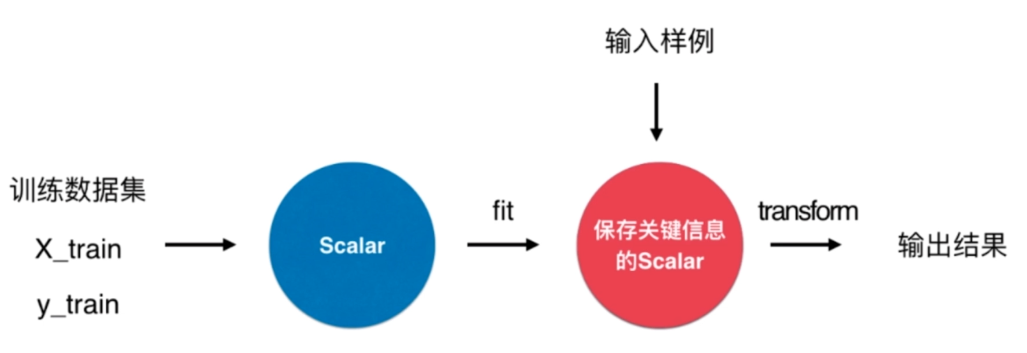
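A small numeric sketch of this rule, with made-up arrays: the mean and standard deviation come from the training set only and are then reused, unchanged, for the test set.

```python
import numpy as np

# Hypothetical toy data: 3 training samples and 1 test sample, 2 features.
X_train = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])
X_test = np.array([[2.0, 40.0]])

# Statistics are computed on the training set only.
mean_train = X_train.mean(axis=0)
std_train = X_train.std(axis=0)

X_train_std = (X_train - mean_train) / std_train
# The test set reuses the training statistics.
X_test_std = (X_test - mean_train) / std_train

print(X_test_std)
```

The second feature of the test sample lies outside the training range, so its scaled value is larger than any scaled training value. That is expected and is exactly what a model would see in production.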

Scaler in scikit-learn

Load the data

import numpy as np

from sklearn import datasets

iris = datasets.load_iris()

X = iris.data

y = iris.target

Split the data

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(iris.data, iris.target, test_size=0.2, random_state=666)

Scale the data

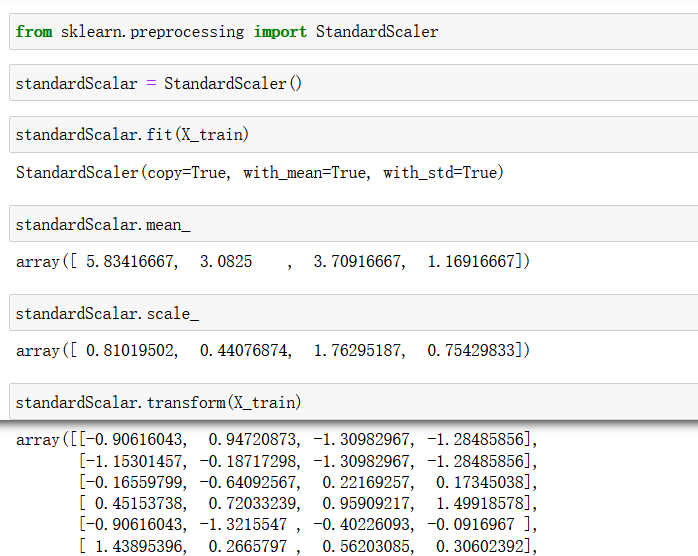

from sklearn.preprocessing import StandardScaler

standardScalar = StandardScaler()

standardScalar.fit(X_train)

standardScalar.transform(X_train)  # standardize; returns a new array and leaves X_train unchanged

Assign the scaled results

X_train = standardScalar.transform(X_train)

X_test_standard = standardScalar.transform(X_test)

Classify with kNN on the scaled data

from sklearn.neighbors import KNeighborsClassifier

knn_clf = KNeighborsClassifier(n_neighbors=3)

knn_clf.fit(X_train, y_train)

knn_clf.score(X_test_standard, y_test)

Implementing our own StandardScaler

import numpy as np

class StandardScaler:

    def __init__(self):

        self.mean_ = None

        self.scale_ = None

    def fit(self, X):

        """Compute the mean and standard deviation of each feature from the training set X"""

        assert X.ndim == 2, "The dimension of X must be 2"

        self.mean_ = np.array([np.mean(X[:,i]) for i in range(X.shape[1])])

        self.scale_ = np.array([np.std(X[:,i]) for i in range(X.shape[1])])

        return self

    def transform(self, X):

        """Standardize X using the mean and standard deviation stored in this StandardScaler"""

        assert X.ndim == 2, "The dimension of X must be 2"

        assert self.mean_ is not None and self.scale_ is not None, \

            "must fit before transform!"

        assert X.shape[1] == len(self.mean_), \

            "the feature number of X must be equal to mean_ and scale_"

        resX = np.empty(shape=X.shape, dtype=float)

        for col in range(X.shape[1]):

            resX[:,col] = (X[:,col] - self.mean_[col]) / self.scale_[col]

        return resX

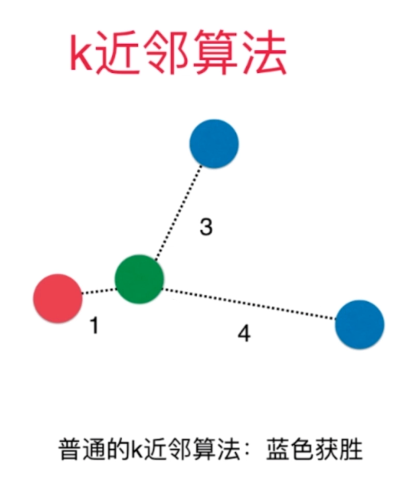

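More about the k-nearest neighbors algorithm

Solves classification problems

Naturally handles multi-class classification

Simple idea, strong results

Biggest drawback: low efficiency

If the training set has m samples and n features, predicting each new sample costs O(m*n)

Optimization: use tree structures such as KD-Tree and Ball-Tree

Drawback 2: highly dependent on the data

Drawback 3: the predictions are not interpretable

Curse of dimensionality

As the number of dimensions grows, the distance between two points that "seem close" becomes larger and larger

Remedy: dimensionality reduction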
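One simple way to see the curse of dimensionality is to measure the distance between the origin (0, ..., 0) and the point (1, ..., 1): every coordinate differs by only 1, yet the Euclidean distance grows as the square root of the number of dimensions. The helper name `corner_distance` below is hypothetical.

```python
from math import sqrt


def corner_distance(dim):
    """Distance between (0, ..., 0) and (1, ..., 1) in `dim` dimensions."""
    return sqrt(sum((1 - 0) ** 2 for _ in range(dim)))


for dim in (1, 2, 3, 64, 10000):
    print(dim, corner_distance(dim))
```

The 64-dimensional case corresponds to the 8 x 8 digit images used earlier: two images that differ by 1 in every pixel are already 8 units apart.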