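I. Goals

- Use DPVS to deploy a multi-active, highly available test environment, based on OSPF/ECMP, that serves HTTP.

- This deployment only verifies functionality; it does not verify performance.

- Configure two DPVS nodes as a cluster and two REAL SERVERs that provide the actual HTTP service.

Note: in the virtual environment, an OSPF-capable router is simulated by installing FRRouting on a virtual server.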

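II. Network Architecture

Roles and configuration:

CLIENT: accesses the services provided by the REAL SERVERs through the DPVS cluster.

CLIENT1 configuration:

NIC name: enp0s8

IP address: 192.168.2.100/24

Static route: ip route add 192.168.0.0/16 via 192.168.2.254 dev enp0s8

This sends all traffic destined for 192.168.0.0/16 to the router.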

CLIENT2 configuration:

NIC name: enp0s8

IP address: 192.168.2.101/24

Static route: ip route add 192.168.0.0/16 via 192.168.2.254 dev enp0s8

This sends all traffic destined for 192.168.0.0/16 to the router.
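The single /16 static route on each client covers the VIP network, the DPVS/router link, and the real-server network. The sketch below checks this with plain shell arithmetic; the helper names `ip_to_int` and `in_cidr` are illustrative and not part of any tool used in this guide.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1            # split the address on dots into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Return 0 if address $1 lies inside CIDR block $2 (for example 192.168.0.0/16).
in_cidr() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    mask=$(( (4294967295 << (32 - $bits)) & 4294967295 ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# VIP, a real server, and a DPVS OSPF address from this guide.
for ip in 192.168.0.1 192.168.1.100 192.168.3.1; do
    in_cidr "$ip" 192.168.0.0/16 && echo "$ip is covered by the static route"
done
```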

ROUTER: a router that provides OSPF/ECMP (simulated here with FRRouting).

NIC 1 name: enp0s8

NIC 1 IP address: 192.168.2.254/24

NIC 2 name: enp0s9

NIC 2 IP address: 192.168.3.254/24

DPVS: the dpvs SLB cluster.

DPVS1 server configuration:

NIC 1 name: enp0s8 -> dpdk0 dpdk0.kni

NIC 1 IP addresses: 192.168.3.1/24

192.168.0.1/24 (VIP)

NIC 2 name: enp0s9 -> dpdk1 dpdk1.kni

NIC 2 IP address: 192.168.1.1/24

DPVS2 server configuration:

NIC 1 name: enp0s8 -> dpdk0 dpdk0.kni

NIC 1 IP addresses: 192.168.3.2/24

192.168.0.1/24 (VIP)

NIC 2 name: enp0s9 -> dpdk1 dpdk1.kni

NIC 2 IP address: 192.168.1.2/24

REAL SERVER: provides the HTTP service.

real server1 configuration:

NIC name: enp0s8

IP address: 192.168.1.100/24

real server2 configuration:

NIC name: enp0s8

IP address: 192.168.1.101/24

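III. Deployment Procedure

The steps below use dpvs1 as the example; dpvs2 is configured in the same way.

1. Deploying the ROUTER

1.1 Install the FRRouting service

On CentOS 7, run the following commands:

# FRRVER selects the FRR release repository (frr-stable is the latest stable release)

FRRVER="frr-stable"

curl -O https://rpm.frrouting.org/repo/$FRRVER-repo-1-0.el7.noarch.rpm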

sudo yum install ./$FRRVER*

# install FRR

sudo yum install frr frr-pythontools

On CentOS 8, run the following commands:

curl -O https://rpm.frrouting.org/repo/$FRRVER-repo-1-0.el8.noarch.rpm

sudo yum install ./$FRRVER*

# install FRR

sudo yum install frr frr-pythontools

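1.2 Configure the FRR service

1.2.1 Enable the OSPF service

Before starting FRR, enable IP forwarding on the Linux server (append with >> so that existing settings in the file are kept):

echo "net.ipv4.ip_forward=1" >> /etc/sysctl.conf

sysctl -p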
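If this step is run more than once, or if the key is already present with another value, /etc/sysctl.conf can end up with conflicting entries. A minimal sketch of an idempotent variant follows; `set_sysctl_key` is a hypothetical helper name, and it assumes simple `key=value` lines.

```shell
#!/bin/sh
# Set KEY=VALUE in a sysctl-style file without discarding other settings.
# $1 = file, $2 = key, $3 = value
set_sysctl_key() {
    file=$1; key=$2; value=$3
    # Escape the dots in the key so that they match literally in the regex.
    key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
    if grep -q "^[[:space:]]*${key_re}[[:space:]]*=" "$file" 2>/dev/null; then
        # Key already present: rewrite that line in place.
        tmp=$(mktemp)
        sed "s|^[[:space:]]*${key_re}[[:space:]]*=.*|${key}=${value}|" "$file" > "$tmp" \
            && cat "$tmp" > "$file"
        rm -f "$tmp"
    else
        # Key absent: append instead of overwriting the file.
        echo "${key}=${value}" >> "$file"
    fi
}

# Usage on the router (as root), followed by a reload:
#   set_sysctl_key /etc/sysctl.conf net.ipv4.ip_forward 1 && sysctl -p
```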

Enable the following daemons in /etc/frr/daemons:

ospfd=yes (for IPv4)

ospf6d=yes (for IPv6)

cd /etc/frr

cat daemons

----------------------

# This file tells the frr package which daemons to start.

#

# Sample configurations for these daemons can be found in

# /usr/share/doc/frr/examples/.

#

# ATTENTION:

#

# When activating a daemon for the first time, a config file, even if it is

# empty, has to be present *and* be owned by the user and group "frr", else

# the daemon will not be started by /etc/init.d/frr. The permissions should

# be u=rw,g=r,o=.

# When using "vtysh" such a config file is also needed. It should be owned by

# group "frrvty" and set to ug=rw,o= though. Check /etc/pam.d/frr, too.

#

# The watchfrr, zebra and staticd daemons are always started.

#

bgpd=no

ospfd=yes

ospf6d=yes

ripd=no

ripngd=no

isisd=no

pimd=no

ldpd=no

nhrpd=no

eigrpd=no

babeld=no

sharpd=no

pbrd=no

bfdd=no

fabricd=no

vrrpd=no

pathd=no
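A quick way to confirm which daemons the file enables is to filter it for `name=yes` lines. This is a small sketch; `enabled_frr_daemons` is an illustrative helper name.

```shell
#!/bin/sh
# Print the names of all daemons set to "yes" in an FRR daemons file.
# $1 = path to the daemons file
enabled_frr_daemons() {
    # Comment lines start with '#', so they never match name=yes.
    awk -F= '/^[a-z0-9]+=yes$/ { print $1 }' "$1"
}

# Usage:
#   enabled_frr_daemons /etc/frr/daemons
```

With the file shown above, the helper should list only ospfd and ospf6d.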

1.2.2 Configure the OSPF service

cd /etc/frr

cat frr.conf

----------------------

frr version 8.1

frr defaults traditional

hostname router

log syslog informational

service integrated-vtysh-config

!

interface enp0s8

ip address 192.168.2.254/24

exit

!

interface enp0s9

ip address 192.168.3.254/24

ip ospf 100 area 0

ip ospf dead-interval 40

exit

!

router ospf 100

ospf router-id 192.168.2.254

exit

!

1.3 Start the FRR service

service frr start

Use ps -ef | grep frr to check that the FRR processes, including ospfd and zebra, have started:

ps -ef|grep frr

-----------------

root 3635 1 0 18:11 ? 00:00:01 /usr/lib/frr/watchfrr -d -F traditional zebra ospfd-100 ospfd-200 ospfd-300 staticd

frr 3657 1 0 18:11 ? 00:00:00 /usr/lib/frr/zebra -d -F traditional --daemon -A 127.0.0.1 -s 90000000

frr 3662 1 0 18:11 ? 00:00:02 /usr/lib/frr/ospfd -d -F traditional -n 100 --daemon -A 127.0.0.1

frr 3665 1 0 18:11 ? 00:00:00 /usr/lib/frr/ospfd -d -F traditional -n 200 --daemon -A 127.0.0.1

frr 3668 1 0 18:11 ? 00:00:00 /usr/lib/frr/ospfd -d -F traditional -n 300 --daemon -A 127.0.0.1

frr 3671 1 0 18:11 ? 00:00:00 /usr/lib/frr/staticd -d -F traditional --daemon -A 127.0.0.1
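A plain `grep ospfd` also matches the watchfrr command line, which lists the daemons it supervises as arguments. The sketch below matches on the binary path instead; `missing_frr_daemons` is a hypothetical helper, and the `/frr/` path component is taken from the output above.

```shell
#!/bin/sh
# Read `ps -ef` output on stdin and print each required FRR daemon that is
# not running; prints nothing when all of them are present.
missing_frr_daemons() {
    ps_out=$(cat)
    for d in watchfrr zebra ospfd; do
        # Match the executable path, not the daemon names in watchfrr's arguments.
        printf '%s\n' "$ps_out" | grep -q "/frr/$d " || echo "$d"
    done
}

# Usage:
#   ps -ef | missing_frr_daemons
```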

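1.4 Common FRR commands

# List OSPF neighbors (the do prefix is only needed in configuration mode)

vtysh

do show ip ospf neighbor

# Show the routing table

vtysh

show ip route

# Save the running configuration

do write

do write mem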
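The neighbor check can also be scripted, because `vtysh -c` runs a single command non-interactively. The sketch below counts neighbors whose state column starts with `Full/`; `count_full_neighbors` is an illustrative helper, and it assumes the usual column layout of `show ip ospf neighbor` (neighbor ID, priority, state).

```shell
#!/bin/sh
# Count OSPF neighbors in the Full state from `show ip ospf neighbor`
# output on stdin. The third column holds the state, e.g. Full/DR.
count_full_neighbors() {
    awk '$3 ~ /^Full\// { n++ } END { print n + 0 }'
}

# Usage on the ROUTER:
#   vtysh -c 'show ip ospf neighbor' | count_full_neighbors
```

With both DPVS nodes up and adjacent, the router should report two Full neighbors.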

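2. Deploying DPVS

2.1 Install the FRRouting service

2.1.1 On CentOS 7, run the following commands

# FRRVER selects the FRR release repository (frr-stable is the latest stable release)

FRRVER="frr-stable"

curl -O https://rpm.frrouting.org/repo/$FRRVER-repo-1-0.el7.noarch.rpm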

sudo yum install ./$FRRVER*

# install FRR

sudo yum install frr frr-pythontools

On CentOS 8, run the following commands:

curl -O https://rpm.frrouting.org/repo/$FRRVER-repo-1-0.el8.noarch.rpm

sudo yum install ./$FRRVER*

# install FRR

sudo yum install frr frr-pythontools

2.1.2 Configure the FRR service

2.1.2.1 Enable the OSPF service

Enable the following daemons in /etc/frr/daemons:

ospfd=yes (for IPv4)

ospf6d=yes (for IPv6)

cd /etc/frr

cat daemons

----------------------

# This file tells the frr package which daemons to start.

#

# Sample configurations for these daemons can be found in

# /usr/share/doc/frr/examples/.

#

# ATTENTION:

#

# When activating a daemon for the first time, a config file, even if it is

# empty, has to be present *and* be owned by the user and group "frr", else

# the daemon will not be started by /etc/init.d/frr. The permissions should

# be u=rw,g=r,o=.

# When using "vtysh" such a config file is also needed. It should be owned by

# group "frrvty" and set to ug=rw,o= though. Check /etc/pam.d/frr, too.

#

# The watchfrr, zebra and staticd daemons are always started.

#

bgpd=no

ospfd=yes

ospf6d=yes

ripd=no

ripngd=no

isisd=no

pimd=no

ldpd=no

nhrpd=no

eigrpd=no

babeld=no

sharpd=no

pbrd=no

bfdd=no

fabricd=no

vrrpd=no

pathd=no

2.1.2.2 Configure the OSPF service

cd /etc/frr

cat frr.conf

----------------------

frr version 8.2.2

frr defaults traditional

hostname localhost.localdomain

log syslog informational

no ip forwarding

no ipv6 forwarding

!

interface dpdk0.kni

ip ospf hello-interval 10

ip ospf dead-interval 40

exit

!

router ospf

ospf router-id 192.168.3.1 # for dpvs2, change this to 192.168.3.2

log-adjacency-changes

auto-cost reference-bandwidth 1000

network 192.168.0.0/24 area 0

network 192.168.3.0/24 area 0

exit

!
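Only the router-id differs between the two DPVS nodes, so the file can be generated from one template. The following is a sketch under that assumption; `render_dpvs_frr_conf` is a hypothetical helper, and the template keeps only the 40-second dead interval that matches the ROUTER side.

```shell
#!/bin/sh
# Print an frr.conf for one DPVS node. $1 = router-id (the node's OSPF IP).
render_dpvs_frr_conf() {
    cat <<EOF
frr defaults traditional
hostname localhost.localdomain
log syslog informational
no ip forwarding
no ipv6 forwarding
!
interface dpdk0.kni
 ip ospf dead-interval 40
exit
!
router ospf
 ospf router-id $1
 log-adjacency-changes
 auto-cost reference-bandwidth 1000
 network 192.168.0.0/24 area 0
 network 192.168.3.0/24 area 0
exit
!
EOF
}

# Usage on dpvs2 (as root):
#   render_dpvs_frr_conf 192.168.3.2 > /etc/frr/frr.conf
```

Both nodes advertise 192.168.0.0/24, which is what lets the router install one equal-cost next hop per node.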

2.1.3 Start the FRR service

service frr start

Use ps -ef | grep frr to check that the FRR processes, including ospfd and zebra, have started:

ps -ef|grep frr

-----------------

root 3635 1 0 18:11 ? 00:00:01 /usr/lib/frr/watchfrr -d -F traditional zebra ospfd-100 ospfd-200 ospfd-300 staticd

frr 3657 1 0 18:11 ? 00:00:00 /usr/lib/frr/zebra -d -F traditional --daemon -A 127.0.0.1 -s 90000000

frr 3662 1 0 18:11 ? 00:00:02 /usr/lib/frr/ospfd -d -F traditional -n 100 --daemon -A 127.0.0.1

frr 3665 1 0 18:11 ? 00:00:00 /usr/lib/frr/ospfd -d -F traditional -n 200 --daemon -A 127.0.0.1

frr 3668 1 0 18:11 ? 00:00:00 /usr/lib/frr/ospfd -d -F traditional -n 300 --daemon -A 127.0.0.1

frr 3671 1 0 18:11 ? 00:00:00 /usr/lib/frr/staticd -d -F traditional --daemon -A 127.0.0.1

2.2 Deploy the DPVS service

2.2.1 Build DPVS from source

# Fetch the dpvs and dpdk sources

git clone http://10.0.171.10/networkService/l4slb-dpvs-1.9.git

cd l4slb-dpvs-1.9

git checkout feature_ospf

# Build dpdk 20.11.1

cd dpdk-stable-20.11.1

meson -Denable_kmods=true -Dexamples=l2fwd,l3fwd -Ddisable_drivers=net/af_xdp,event/dpaa,event/dpaa2 -Dprefix=`pwd`/dpdklib ./build

ninja -C build

ninja -C build install

# Return to the source root so that `pwd` matches the path used below

cd ..

export PKG_CONFIG_PATH=`pwd`/dpdk-stable-20.11.1/dpdklib/lib64/pkgconfig

# Build dpvs

make -j8

# To build the DEBUG version instead

make DEBUG=1 -j8

2.2.2 Initialize the DPDK runtime environment

# Configure hugepages

echo 1024 > /sys/devices/system/node/node0/hugepages/hugepages-2048kB/nr_hugepages

[ -d /mnt/huge ] || mkdir /mnt/huge

mount -t hugetlbfs nodev /mnt/huge

# Load the uio kernel module

modprobe uio_pci_generic

# Load the rte_kni kernel module

insmod dpdk-stable-20.11.1/build/kernel/linux/kni/rte_kni.ko

# Bind the NICs used by dpvs to the uio driver

dpdk-stable-20.11.1/usertools/dpdk-devbind.py -b uio_pci_generic enp0s8

dpdk-stable-20.11.1/usertools/dpdk-devbind.py -b uio_pci_generic enp0s9
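The hugepage reservation above is 1024 pages of 2048 kB each on NUMA node 0. As a quick worked calculation (the helper name `hugepages_mib` is illustrative):

```shell
#!/bin/sh
# Total hugepage memory in MiB. $1 = number of pages, $2 = page size in kB.
hugepages_mib() {
    echo $(( $1 * $2 / 1024 ))
}

hugepages_mib 1024 2048   # prints 2048, i.e. 2 GiB reserved on node0
```

The virtual machine therefore needs noticeably more than 2 GiB of RAM; the reservation that actually succeeded can be read back with `grep Huge /proc/meminfo`.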

2.2.3 Configure dpvs:

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

! This is dpvs default configuration file.

!

! The attribute "<init>" denotes the configuration item at initialization stage. Item of

! this type is configured oneshoot and not reloadable. If invalid value configured in the

! file, dpvs would use its default value.

!

! Note that dpvs configuration file supports the following comment type:

! * line comment: using '#" or '!'

! * inline range comment: using '<' and '>', put comment in between

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

! global config

global_defs {

log_level INFO

! log_file /var/log/dpvs.log

! log_async_mode on

! pdump off

}

! netif config

netif_defs {

<init> pktpool_size 65536

<init> pktpool_cache 256

<init> fdir_mode perfect

<init> device dpdk0 {

rx {

queue_number 1

descriptor_number 1024

rss all

}

tx {

queue_number 1

descriptor_number 1024

}

mtu 1500

!promisc_mode

kni_name dpdk0.kni

}

<init> device dpdk1 {

rx {

queue_number 1

descriptor_number 1024

rss all

}

tx {

queue_number 1

descriptor_number 1024

}

mtu 1500

!promisc_mode

kni_name dpdk1.kni

}

}

! worker config (lcores)

! notes:

! 1. rx(tx) queue ids MUST start from 0 and continous

! 2. cpu ids and rx(tx) queue ids MUST be unique, repeated ids is forbidden

! 3. cpu ids identify dpvs workers only, and not correspond to physical cpu cores.

! If you are to specify cpu cores on which to run dpvs, please use dpdk eal options,

! such as "-c", "-l", "--lcores". Use "dpvs -- --help" for supported eal options.

worker_defs {

<init> worker cpu0 {

type master

cpu_id 0

}

<init> worker cpu1 {

type slave

cpu_id 1

port dpdk0 {

rx_queue_ids 0

tx_queue_ids 0

! isol_rx_cpu_ids 9

! isol_rxq_ring_sz 1048576

}

port dpdk1 {

rx_queue_ids 0

tx_queue_ids 0

! isol_rx_cpu_ids 9

! isol_rxq_ring_sz 1048576

}

}

}

! timer config

timer_defs {

# cpu job loops to schedule dpdk timer management

schedule_interval 500

}

! dpvs neighbor config

neigh_defs {

<init> unres_queue_length 128

timeout 60

}

! dpvs ipset config

ipset_defs {

<init> ipset_hash_pool_size 131072

}

! dpvs ipv4 config

ipv4_defs {

forwarding off

<init> default_ttl 64

fragment {

<init> bucket_number 4096

<init> bucket_entries 16

<init> max_entries 4096

<init> ttl 1

}

}

! dpvs ipv6 config

ipv6_defs {

disable off

forwarding off

route6 {

<init> method hlist

recycle_time 10

}

}

! control plane config

ctrl_defs {

lcore_msg {

<init> ring_size 4096

sync_msg_timeout_us 20000

priority_level low

}

ipc_msg {

<init> unix_domain /var/run/dpvs_ctrl

}

}

! ipvs config

ipvs_defs {

conn {

<init> conn_pool_size 65536

<init> conn_pool_cache 256

conn_init_timeout 3

! expire_quiescent_template

! fast_xmit_close

! <init> redirect off

}

udp {

! defence_udp_drop

uoa_mode ipo <opp|ipo>

uoa_max_trail 3

timeout {

normal 300

last 3

}

}

tcp {

! defence_tcp_drop

timeout {

none 2

established 90

syn_sent 3

syn_recv 30

fin_wait 7

time_wait 7

close 3

close_wait 7

last_ack 7

listen 120

synack 30

last 2

}

synproxy {

synack_options {

mss 1452

ttl 63

sack

! wscale

! timestamp

}

close_client_window

! defer_rs_syn

rs_syn_max_retry 3

ack_storm_thresh 10

max_ack_saved 3

conn_reuse_state {

close

time_wait

! fin_wait

! close_wait

! last_ack

}

}

}

}

! sa_pool config

sa_pool {

pool_hash_size 16

flow_enable off

}

2.2.4 Start dpvs:

bin/dpvs

2.2.5 Initialize the dpvs service configuration and create an HTTP load-balancing service on port 8080:

VIP=192.168.0.1 # dpvs virtual IP

OSPFIP=192.168.3.1 # OSPF heartbeat IP of dpvs; for dpvs2, change to 192.168.3.2

GATEWAY=192.168.3.254 # egress gateway of dpvs (points to ROUTER)

VIP_PREFIX=192.168.0.0 # network prefix of the VIP

LIP_PREFIX=192.168.1.0 # network prefix of the LOCAL IPs

LIP=("192.168.1.1") # list of LOCAL IP addresses; for dpvs2, change to 192.168.1.2

SERVICE=$VIP:8080 # service to enable

# List of REAL SERVERs

RS_SERVER=("192.168.1.100:8080" "192.168.1.101:8080")

# Configure the IP addresses

./tools/dpip/build/dpip addr add $VIP/24 dev dpdk0

./tools/dpip/build/dpip addr add $OSPFIP/24 dev dpdk0

# Set the default egress gateway to ROUTER

./tools/dpip/build/dpip route add default via $GATEWAY dev dpdk0

# Configure the IP addresses and routes of the kni virtual NICs (matching the dpdk NIC configuration)

ifconfig dpdk0.kni $VIP netmask 255.255.255.0

ip addr add $OSPFIP/24 dev dpdk0.kni

ip route add default via $GATEWAY dev dpdk0.kni

ifconfig dpdk1.kni ${LIP[0]} netmask 255.255.255.0

# Add the route to the back-end network

./tools/dpip/build/dpip route add $LIP_PREFIX/24 dev dpdk1

# Add the service

./tools/ipvsadm/ipvsadm -A -t $SERVICE -s rr

# Add the real servers

for rs in ${RS_SERVER[*]}

do

./tools/ipvsadm/ipvsadm -a -t $SERVICE -r $rs -b

done

# Configure the DPVS local IPs used by the VIP listener

for lip in ${LIP[*]}

do

./tools/ipvsadm/ipvsadm -P -z $lip -t $SERVICE -F dpdk1

done
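After the service is created, the listing from `ipvsadm -L -n` should show both real servers under the VIP. The sketch below counts them; `count_real_servers` is a hypothetical helper, and it assumes the standard ipvsadm layout in which each real server line starts with `->` followed by `address:port`. It counts across all services, which is sufficient here because only one service is defined.

```shell
#!/bin/sh
# Count real-server entries in `ipvsadm -L -n` output read from stdin.
# The header line "-> RemoteAddress:Port" is skipped by the address pattern.
count_real_servers() {
    awk '$1 == "->" && $2 ~ /^[0-9.]+:[0-9]+$/ { n++ } END { print n + 0 }'
}

# Usage on a DPVS node:
#   ./tools/ipvsadm/ipvsadm -L -n | count_real_servers
```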

2.2.6 Deploy the real server health check

#!/bin/bash

#

VIP=192.168.0.1 # DPVS VIP

CPORT=8080 # DPVS service port

RS=("192.168.1.100" "192.168.1.101") # list of REAL SERVERs

declare -a RSSTATUS # state of each REAL SERVER

RW=("2" "1") # weights of the REAL SERVERs

RPORT=8080 # HTTP port of the REAL SERVERs

RSURL="/index.html" # URL path probed on the REAL SERVERs

TYPE=b # FULLNAT mode

CHKLOOP=3 # number of probe attempts before a server is treated as down

LOG=/var/log/ipvsmonitor.log # log file path

# Add a real server

addrs() {

ipvsadm -a -t $VIP:$CPORT -r $1:$RPORT -$TYPE -w $2

[ $? -eq 0 ] && return 0 || return 1

}

# Delete a real server

delrs() {

ipvsadm -d -t $VIP:$CPORT -r $1:$RPORT

[ $? -eq 0 ] && return 0 || return 1

}

# Probe the state of a real server over HTTP

checkrs() {

local I=1

while [ $I -le $CHKLOOP ]; do

if curl --connect-timeout 1 http://${1}:${RPORT}${RSURL} &> /dev/null; then

return 0

fi

let I++

done

return 1

}

# Initialize the state from the current ipvsadm table

initstatus() {

local I

local COUNT=0;

for I in ${RS[*]}; do

if ipvsadm -L -n | grep "$I:$RPORT" &> /dev/null ; then

RSSTATUS[$COUNT]=1

else

RSSTATUS[$COUNT]=0

fi

let COUNT++

done

}

initstatus

# Health-check loop

while :; do

let COUNT=0

for I in ${RS[*]}; do

if checkrs $I; then

if [ ${RSSTATUS[$COUNT]} -eq 0 ]; then

addrs $I ${RW[$COUNT]}

[ $? -eq 0 ] && RSSTATUS[$COUNT]=1 && echo "`date +'%F %H:%M:%S'`, $I is back." >> $LOG

fi

else if [ ${RSSTATUS[$COUNT]} -eq 1 ]; then

delrs $I

[ $? -eq 0 ] && RSSTATUS[$COUNT]=0 && echo "`date +'%F %H:%M:%S'`, $I is gone." >> $LOG

fi

fi

let COUNT++

done

sleep 5

done

3. Deploying the REAL SERVERs

3.1 Deploy the nginx service

3.1.1 Build nginx

# Download, build, and install nginx

wget https://nginx.org/download/nginx-1.22.1.tar.gz

tar xzvf nginx-1.22.1.tar.gz

cd nginx-1.22.1

./configure --prefix=/opt/nginx

make -j4

make install

3.1.2 Configure the nginx service

Edit /opt/nginx/conf/nginx.conf so that the server listens on port 8080, the real-server port used by the DPVS service above (the default configuration listens on port 80).
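The DPVS service and the health check both address the real servers on port 8080, while a freshly installed nginx listens on port 80. One way to change the port is sketched below; `set_nginx_listen_port` is a hypothetical helper, and it assumes the stock configuration with a plain `listen <port>;` directive.

```shell
#!/bin/sh
# Rewrite every active "listen <port>;" directive in an nginx config file.
# $1 = config file, $2 = new port. Commented-out directives are left alone.
set_nginx_listen_port() {
    tmp=$(mktemp)
    sed -E "s/^([[:space:]]*listen[[:space:]]+)[0-9]+;/\1$2;/" "$1" > "$tmp" \
        && cat "$tmp" > "$1"
    rm -f "$tmp"
}

# Usage on each real server:
#   set_nginx_listen_port /opt/nginx/conf/nginx.conf 8080
#   /opt/nginx/sbin/nginx -t    # check the syntax before starting nginx
```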

3.1.3 Start the nginx service

cd /opt/nginx/

./sbin/nginx

四、测试验证

-

连通性测试

# 在client服务器上面ping VIP是否通 ping 192.168.0.1 # 在dpvs服务器上面ping client是否通 ping 192.168.2.100 ping 192.168.2.101 # 在client服务器上面测试8080 tcp端口是否通 telnet 192.168.0.1 8080 -

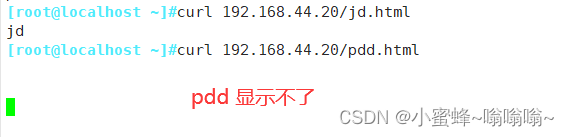

http请求测试

# 在client服务器上面请求HTTP curl "http://192.168.0.1:8080/index.html" -

模拟单dpvs宕机测试

随机选择一台dpvs,譬如dvps1,将dpvs进程kill 查看http请求测试依旧可以成功 -

模拟单dpvs宕机后恢复测试

将以上kill的dpvs进程重新启动 通过查看real server上的访问日志,查看http请求测试是否可以重新从这台dpvs服务器恢复。 -

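- Simulated failure of a single real server

Stop the nginx process on one real server.

Check that the HTTP request test still succeeds and that the health check log (/var/log/ipvsmonitor.log) records the server as gone.

- Simulated recovery of the failed real server

Restart the nginx process that was stopped above.

Check the access log on that real server to confirm that HTTP requests reach it again.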
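During the dpvs failure and recovery tests, the number of next hops that the ROUTER holds for the VIP network 192.168.0.0/24 shows whether ECMP is in effect. The sketch below counts them from `ip route show` output; `count_nexthops` is an illustrative helper, and it assumes the iproute2 layout in which a multipath route prints one `nexthop via` line per path and a single-path route prints `via` on the prefix line.

```shell
#!/bin/sh
# Count the next hops of a route from `ip route show <prefix>` output on stdin.
count_nexthops() {
    awk '/nexthop via/ { multi++ }
         / via / && !/nexthop/ { single++ }
         END { print (multi ? multi : single + 0) }'
}

# Usage on the ROUTER:
#   ip route show 192.168.0.0/24 | count_nexthops
```

With both dpvs nodes running, the count should be 2; after one dpvs process is killed and its OSPF adjacency expires, it should drop to 1.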