1. Affinity and Anti-Affinity

- Node affinity and anti-affinity

- Pod affinity and anti-affinity

1.1 Node affinity and anti-affinity

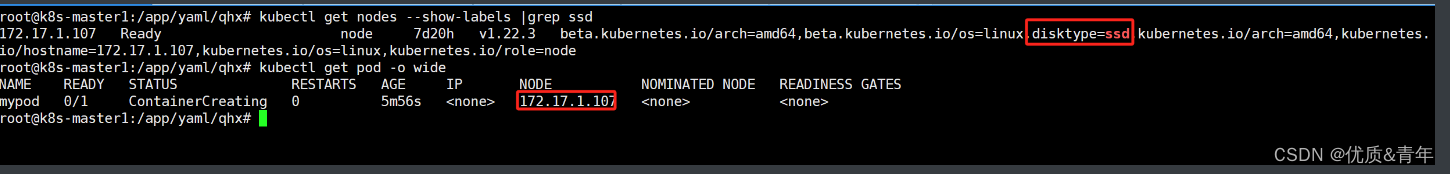

1.1.1 nodeSelector (node label affinity)

# List the node labels

root@k8s-master1:~# kubectl get nodes --show-labels

# Add a label to a node

root@k8s-master1:~# kubectl label nodes 172.17.1.107 disktype=ssd

node/172.17.1.107 labeled

root@k8s-master1:~# kubectl get nodes --show-labels |grep ssd

172.17.1.107 Ready node 7d19h v1.22.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=172.17.1.107,kubernetes.io/os=linux,kubernetes.io/role=node

root@k8s-master1:/app/yaml/qhx# cat nginx-nodeSelector.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: mypod
    spec:
      containers:
      - name: nginx-pod
        image: nginx
      nodeSelector:
        disktype: ssd

The pod will now be scheduled only onto nodes that carry the disktype=ssd label.
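
nodeSelector is a plain key/value map, and a node must carry every listed pair to be eligible. The following is a minimal sketch of that case, not a manifest from the original notes: the pod name mypod-multi is hypothetical, and the second label project=Linux is assumed to have been added to the node with kubectl label beforehand.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod-multi          # hypothetical name, for illustration only
spec:
  containers:
  - name: nginx-pod
    image: nginx
  nodeSelector:              # all pairs must match the node's labels (logical AND)
    disktype: ssd
    project: Linux           # assumed extra label; a node with only disktype=ssd is not eligible
```

If no node carries both labels, the pod stays Pending until one does.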

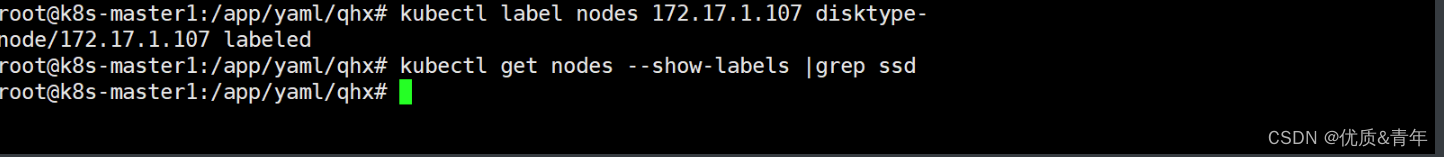

Remove the label

root@k8s-master1:/app/yaml/qhx# kubectl label nodes 172.17.1.107 disktype-

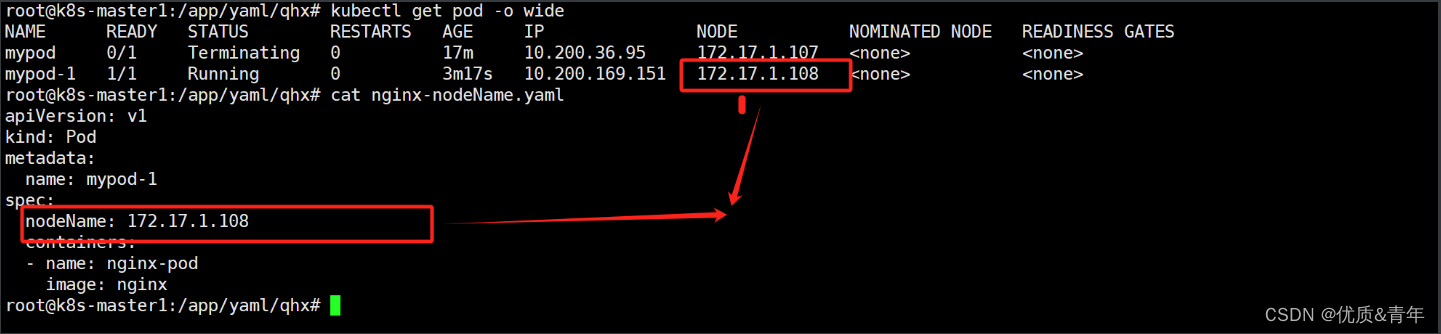

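1.1.2 nodeName affinity

Setting nodeName in the pod spec (or in the spec of a controller's pod template) also runs the pod on a specific node. The pod is bound directly to that node without going through the scheduler.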

root@k8s-master1:/app/yaml/qhx# cat nginx-nodeName.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: mypod-1
    spec:
      nodeName: 172.17.1.108
      containers:
      - name: nginx-pod
        image: nginx

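1.1.3 Affinity

Like nodeSelector, node affinity lets you constrain which nodes a pod can be scheduled onto. It supports two modes:

requiredDuringSchedulingIgnoredDuringExecution: hard requirement. The pod is scheduled only onto a node that satisfies the rule; if no node does, the pod is not scheduled.

preferredDuringSchedulingIgnoredDuringExecution: soft preference. The scheduler prefers matching nodes, but if none matches, the pod can still be scheduled onto a node that does not satisfy the rule.

IgnoredDuringExecution: if the labels change after the pod is already running, the running pod is not affected.

Affinity and anti-affinity (for nodes, anti-affinity is expressed with operators such as NotIn and DoesNotExist) are more powerful than nodeSelector:

- Label matching supports AND, plus the operators In, NotIn, Exists, DoesNotExist, Gt, and Lt

- Rules can be hard or soft; with a soft rule, if the scheduler cannot find a matching node, it still schedules the pod onto a non-matching node

- Rules can also be defined between pods, for example which pods may or may not be scheduled onto the same node

- In: the label value is in the given list

- NotIn: the label value is not in the given list

- Gt: the label value is greater than the given value (the value is written as a string but compared as an integer)

- Lt: the label value is less than the given value (compared the same way)

- Exists: the given label key exists

- DoesNotExist: the given label key does not exist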
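
The operators that do not take a plain value list are easy to get wrong, so here is a small sketch of Exists and Gt in one rule. It is an illustration under assumptions, not part of the original walkthrough: the pod name mypod-operators and the cpu-cores node label are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod-operators      # hypothetical name
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: disktype
            operator: Exists # only the key has to exist; no values list is given
          - key: cpu-cores   # hypothetical label with a numeric value, e.g. cpu-cores=16
            operator: Gt
            values:
            - "8"            # exactly one value, quoted as a string, compared as an integer
  containers:
  - name: nginx-pod
    image: nginx
```

Both expressions sit in the same matchExpressions entry, so a node must have a disktype label of any value and a cpu-cores label greater than 8.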

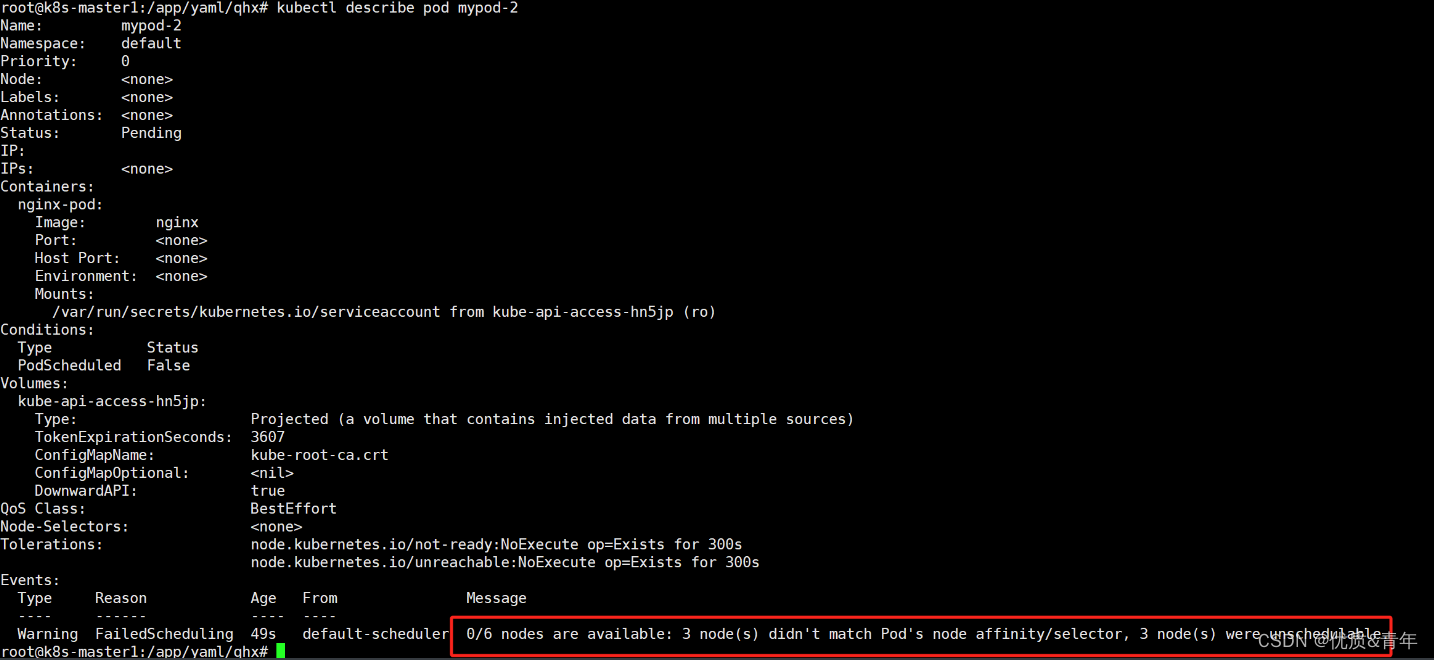

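1.1.3.1 Hard policy - requiredDuringSchedulingIgnoredDuringExecution

Note: if no node matches, the pod is not scheduled.

Example 1: when nodeSelectorTerms contains several matchExpressions entries, each with a single key, a node that satisfies any one entry is eligible, and within an entry any one of the listed values is enough (the entries are ORed).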

root@k8s-master1:/app/yaml/qhx# cat pod-1.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: mypod-2
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions: # term 1: any one of the listed values is enough
              - key: disktype
                operator: In
                values:
                - ssd
                - hdd
            - matchExpressions: # term 2: terms are ORed, so a node matching any one value of any one term is eligible
              - key: project
                operator: In
                values:
                - Linux
                - Python
      containers:
      - name: nginx-pod
        image: nginx

root@k8s-master1:/app/yaml/qhx# kubectl label nodes 172.17.1.108 disktype=ssd

node/172.17.1.108 labeled

root@k8s-master1:/app/yaml/qhx# kubectl get nodes --show-labels |grep ssd

172.17.1.108 Ready node 9d v1.22.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=172.17.1.108,kubernetes.io/os=linux,kubernetes.io/role=node

# 172.17.1.108 now carries the disktype=ssd label, so the pod can be scheduled onto it

root@k8s-master1:/app/yaml/qhx# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mypod-1 1/1 Running 1 (45h ago) 46h 10.200.169.153 172.17.1.108 <none> <none>

mypod-2 1/1 Running 0 4m54s 10.200.169.154 172.17.1.108 <none> <none>

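Example 2: when a single matchExpressions entry lists multiple keys, every key must be satisfied before the pod is scheduled. Within one key, matching any one of its values is enough.

In the manifest below:

- ssd and hdd are ORed: either value satisfies the disktype key

- the project key must also be satisfied

- disktype and project are ANDed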

root@k8s-master1:/app/yaml/qhx# cat pod-2.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: mypod-3
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions: # a single term with two keys
              - key: disktype
                operator: In
                values:
                - ssd
                - hdd # for one key, matching any one value is enough
              - key: project # keys in the same matchExpressions entry are ANDed: all must match
                operator: In
                values:
                - Linux
                - Python
      containers:
      - name: nginx-pod
        image: nginx

root@k8s-master1:/app/yaml/qhx# kubectl apply -f pod-2.yaml

pod/mypod-3 created

root@k8s-master1:/app/yaml/qhx# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mypod-1 1/1 Running 1 (45h ago) 46h 10.200.169.153 172.17.1.108 <none> <none>

mypod-2 1/1 Running 0 19m 10.200.169.154 172.17.1.108 <none> <none>

mypod-3 0/1 Pending 0 7s <none> <none> <none> <none>

root@k8s-master1:/app/yaml/qhx# kubectl describe pod mypod-3

Name: mypod-3

Namespace: default

Priority: 0

Node: <none>

Labels: <none>

Annotations: <none>

Status: Pending

IP:

IPs: <none>

Containers:

nginx-pod:

Image: nginx

Port: <none>

Host Port: <none>

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-pfjf5 (ro)

Conditions:

Type Status

PodScheduled False

Volumes:

kube-api-access-pfjf5:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 23s default-scheduler 0/6 nodes are available: 3 node(s) didn't match Pod's node affinity/selector, 3 node(s) were unschedulable.

# 172.17.1.108 satisfies only one of the two keys, so the pod cannot be scheduled onto it

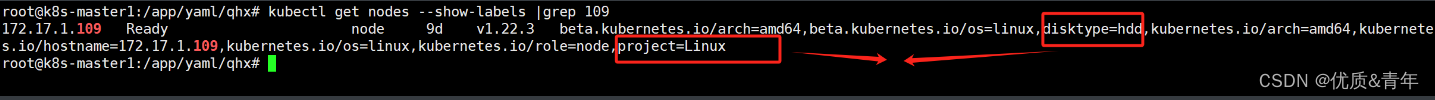

root@k8s-master1:/app/yaml/qhx# kubectl label nodes 172.17.1.109 disktype=hdd project=Linux

node/172.17.1.109 labeled

root@k8s-master1:/app/yaml/qhx# kubectl get nodes --show-labels |grep 109

172.17.1.109 Ready node 9d v1.22.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=hdd,kubernetes.io/arch=amd64,kubernetes.io/hostname=172.17.1.109,kubernetes.io/os=linux,kubernetes.io/role=node,project=Linux

root@k8s-master1:/app/yaml/qhx# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mypod-1 1/1 Running 1 (46h ago) 46h 10.200.169.153 172.17.1.108 <none> <none>

mypod-2 1/1 Running 0 29m 10.200.169.154 172.17.1.108 <none> <none>

mypod-3 1/1 Running 0 13s 10.200.107.239 172.17.1.109 <none> <none>

root@k8s-master1:/app/yaml/qhx# kubectl describe pod mypod-3

Name: mypod-3

Namespace: default

Priority: 0

Node: 172.17.1.109/172.17.1.109

Start Time: Wed, 31 Jan 2024 15:49:21 +0800

Labels: <none>

Annotations: <none>

Status: Running

IP: 10.200.107.239

IPs:

IP: 10.200.107.239

Containers:

nginx-pod:

Container ID: docker://19a130c06ea78cd4469fe724096f0bb066896e10c035c30c3553aafd580bf504

Image: nginx

Image ID: docker-pullable://nginx@sha256:4c0fdaa8b6341bfdeca5f18f7837462c80cff90527ee35ef185571e1c327beac

Port: <none>

Host Port: <none>

State: Running

Started: Wed, 31 Jan 2024 15:49:27 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-544dq (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-544dq:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 22s default-scheduler Successfully assigned default/mypod-3 to 172.17.1.109

Normal Pulling 19s kubelet Pulling image "nginx"

Normal Pulled 16s kubelet Successfully pulled image "nginx" in 2.972306097s

Normal Created 16s kubelet Created container nginx-pod

Normal Started 16s kubelet Started container nginx-pod

1.1.3.2 Soft policy - preferredDuringSchedulingIgnoredDuringExecution

If a node matches, the pod is preferentially scheduled onto it; if no node matches, the pod is still scheduled.

Example:

root@k8s-master1:/app/yaml/qhx# cat pod-3.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: mypod-4
    spec:
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 80 # weight range: 1-100; the higher the weight, the stronger the preference
            preference:
              matchExpressions:
              - key: project
                operator: In
                values:
                - Java
      containers:
      - name: nginx-pod
        image: nginx

root@k8s-master1:/app/yaml/qhx# kubectl get nodes --show-labels |grep Java

root@k8s-master1:/app/yaml/qhx# kubectl apply -f pod-3.yaml

pod/mypod-4 created

root@k8s-master1:/app/yaml/qhx# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mypod-1 1/1 Running 1 (46h ago) 46h 10.200.169.153 172.17.1.108 <none> <none>

mypod-2 1/1 Running 0 49m 10.200.169.154 172.17.1.108 <none> <none>

mypod-3 1/1 Running 0 19m 10.200.107.239 172.17.1.109 <none> <none>

mypod-4 1/1 Running 0 13s 10.200.36.96 172.17.1.107 <none> <none>
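
When several preferences are listed, the scheduler adds up the weights of the preferences a node satisfies and folds that sum into the node's overall score. A minimal sketch of two weighted preferences follows. The pod name mypod-weighted and the 80/20 split are assumptions chosen for illustration; the labels are the ones used earlier in this section.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod-weighted       # hypothetical name
spec:
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 80           # stronger preference: nodes labeled project=Linux
        preference:
          matchExpressions:
          - key: project
            operator: In
            values:
            - Linux
      - weight: 20           # weaker preference: nodes labeled disktype=ssd
        preference:
          matchExpressions:
          - key: disktype
            operator: In
            values:
            - ssd
  containers:
  - name: nginx-pod
    image: nginx
```

A node with both labels collects 100 from these rules, a node with only project=Linux collects 80, and a node with neither collects 0 but is still eligible, because the rules are preferences rather than requirements.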

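1.1.3.3 Combining hard and soft node policies

The hard policy uses NotIn (anti-affinity): do not schedule onto master nodes.

The soft policy uses In (affinity): prefer nodes that carry the given label; if no node carries it, the scheduler falls back to its normal scoring and picks another node.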

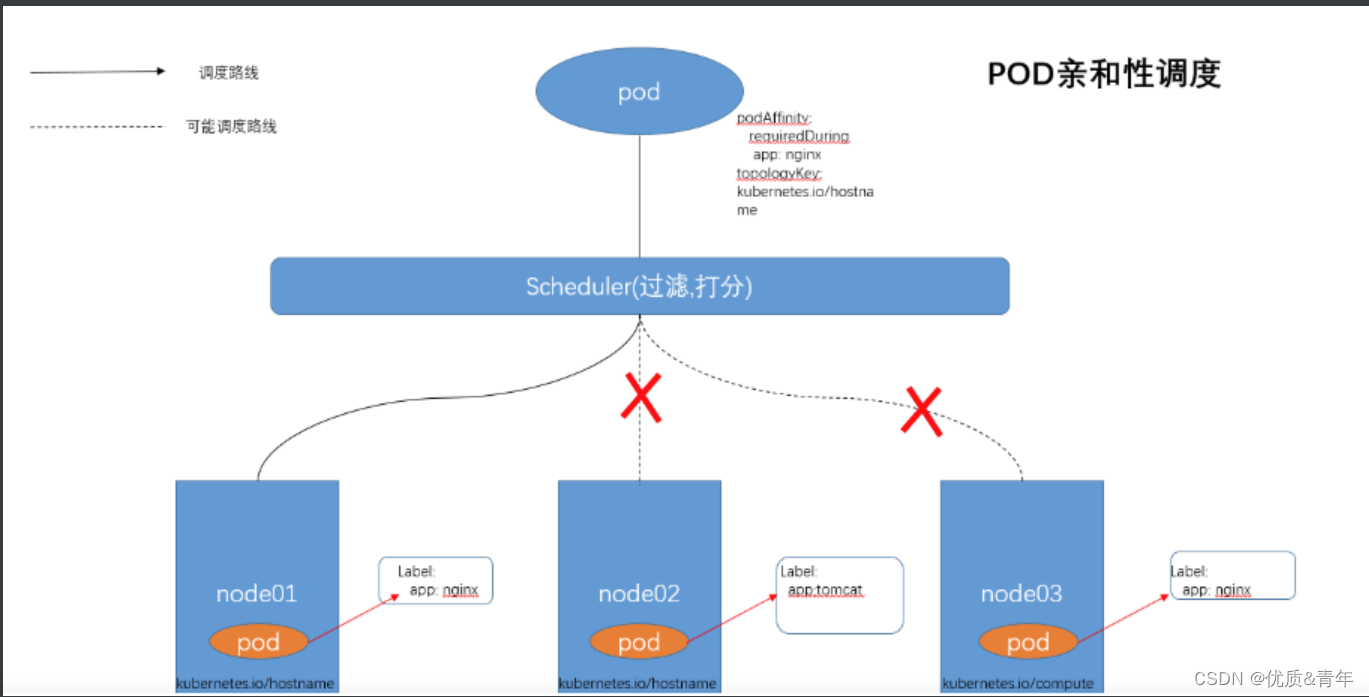
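
The combination described above can be sketched as a manifest like the following. This is a reconstruction under assumptions rather than the exact manifest from the screenshot: the pod name mypod-5 is hypothetical, master nodes are assumed to carry kubernetes.io/role=master (the worker nodes in this cluster show kubernetes.io/role=node), and project=Linux is used as the preferred label.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod-5              # hypothetical name
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:    # hard rule: filter nodes
        nodeSelectorTerms:
        - matchExpressions:
          - key: kubernetes.io/role
            operator: NotIn  # anti-affinity: exclude master nodes
            values:
            - master         # assumed label value for master nodes
      preferredDuringSchedulingIgnoredDuringExecution:   # soft rule: rank the remaining nodes
      - weight: 80
        preference:
          matchExpressions:
          - key: project
            operator: In     # affinity: prefer nodes labeled project=Linux
            values:
            - Linux
  containers:
  - name: nginx-pod
    image: nginx
```

The hard rule is applied first and removes nodes from consideration; the soft rule only influences the ranking of the nodes that remain.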

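1.2 Pod affinity

Pod affinity and anti-affinity match against the labels of pods already running on the nodes. The label selector is evaluated in the namespaces listed under namespaces; if that field is omitted, the namespace of the pod being scheduled is used.

Affinity: schedule the new pod into the same topology domain (for example, the same node) as pods that carry the given labels, which can reduce network overhead.

Anti-affinity: avoid scheduling the new pod into a topology domain that already runs pods with the given labels, which is useful for separating project resources and for high availability.

Valid operators for pod affinity and anti-affinity are In, NotIn, Exists, and DoesNotExist.

1.2.1 Affinity between pods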

root@k8s-master1:/app/yaml/qhx# kubectl get nodes --show-labels |grep Linux

172.17.1.107 Ready node 9d v1.22.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=172.17.1.107,kubernetes.io/os=linux,kubernetes.io/role=node,project=Linux

root@k8s-master1:/app/yaml/qhx# cat deply-pod1.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      namespace: webwork
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
          project: Linux
      template:
        metadata:
          labels:
            app: nginx
            project: Linux
        spec:
          containers:
          - name: nginx
            image: nginx:latest
            ports:
            - containerPort: 80

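# The pod is now running on 172.17.1.107, the node labeled project=Linux. The manifest sets no node constraint, so this placement was the scheduler's choice; the pod's own project=Linux label is what the pod affinity rules in the following sections match against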

root@k8s-master1:/app/yaml/qhx# kubectl get pod -n webwork -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-55b448df4c-94bbl 1/1 Running 0 16s 10.200.36.99 172.17.1.107 <none> <none>

redis-deploy-79bb95b948-hhjtc 1/1 Running 5 (46h ago) 6d20h 10.200.107.237 172.17.1.109 <none> <none>

1.2.2 Soft pod affinity - preferredDuringSchedulingIgnoredDuringExecution

Example: prefer to place the nginx pods on the same node as pods in the webwork namespace that carry the label project=Linux

root@k8s-master1:/app/yaml/qhx# cat deply-pod3.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
      namespace: webwork
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          affinity:
            podAffinity:
              preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 100
                podAffinityTerm:
                  labelSelector:
                    matchExpressions:
                    - key: project
                      operator: In
                      values:
                      - Linux
                  topologyKey: "kubernetes.io/hostname"
                  namespaces:
                  - webwork
          containers:
          - name: nginx
            image: nginx:latest
            ports:
            - containerPort: 80

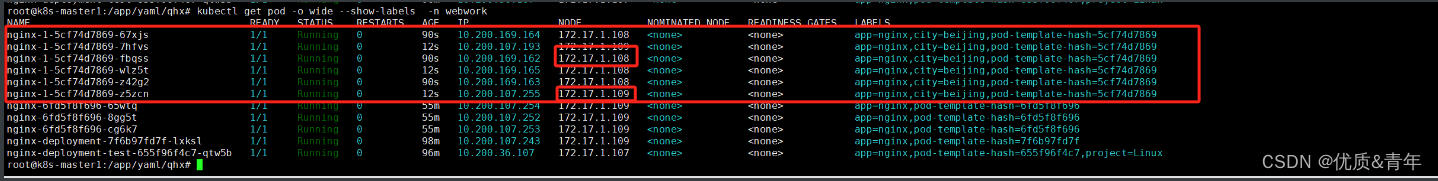

root@k8s-master1:/app/yaml/qhx# kubectl get pod -o wide -n webwork --show-labels

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES LABELS

nginx-6fd5f8f696-65wtq 1/1 Running 0 9m41s 10.200.107.254 172.17.1.109 <none> <none> app=nginx,pod-template-hash=6fd5f8f696

nginx-6fd5f8f696-8gg5t 1/1 Running 0 9m41s 10.200.107.252 172.17.1.109 <none> <none> app=nginx,pod-template-hash=6fd5f8f696

nginx-6fd5f8f696-cg6k7 1/1 Running 0 9m41s 10.200.107.253 172.17.1.109 <none> <none> app=nginx,pod-template-hash=6fd5f8f696

nginx-deployment-7f6b97fd7f-lxksl 1/1 Running 0 53m 10.200.107.243 172.17.1.109 <none> <none> app=nginx,pod-template-hash=7f6b97fd7f,project=Linux

nginx-deployment-test-655f96f4c7-qtw5b 1/1 Running 0 51m 10.200.36.107 172.17.1.107 <none> <none> app=nginx,pod-template-hash=655f96f4c7

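1.2.3 Hard pod affinity - requiredDuringSchedulingIgnoredDuringExecution

Place the nginx pods on the same node as a pod in the webwork namespace labeled project=Linux. If no such pod matches, or the matching node lacks resources, the pods cannot be scheduled and stay Pending.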

root@k8s-master1:/app/yaml/qhx# cat deply-pod4.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-1
      namespace: webwork
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
            city: beijing
        spec:
          affinity:
            podAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: project
                    operator: In
                    values:
                    - Linux
                topologyKey: "kubernetes.io/hostname"
                namespaces:
                - webwork
          containers:
          - name: nginx
            image: nginx:latest
            ports:
            - containerPort: 80

# Result: the pod cannot be scheduled because of the hard pod affinity rule

root@k8s-master1:/app/yaml/qhx# kubectl describe pod nginx-1-f7ffc7d7-7x97n -n webwork

Name: nginx-1-f7ffc7d7-7x97n

Namespace: webwork

Priority: 0

Node: <none>

Labels: app=nginx

city=beijing

pod-template-hash=f7ffc7d7

Annotations: <none>

Status: Pending

IP:

IPs: <none>

Controlled By: ReplicaSet/nginx-1-f7ffc7d7

Containers:

nginx:

Image: nginx:latest

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-vtl74 (ro)

Conditions:

Type Status

PodScheduled False

Volumes:

kube-api-access-vtl74:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 57s default-scheduler 0/6 nodes are available: 3 node(s) didn't match pod affinity rules, 3 node(s) were unschedulable.

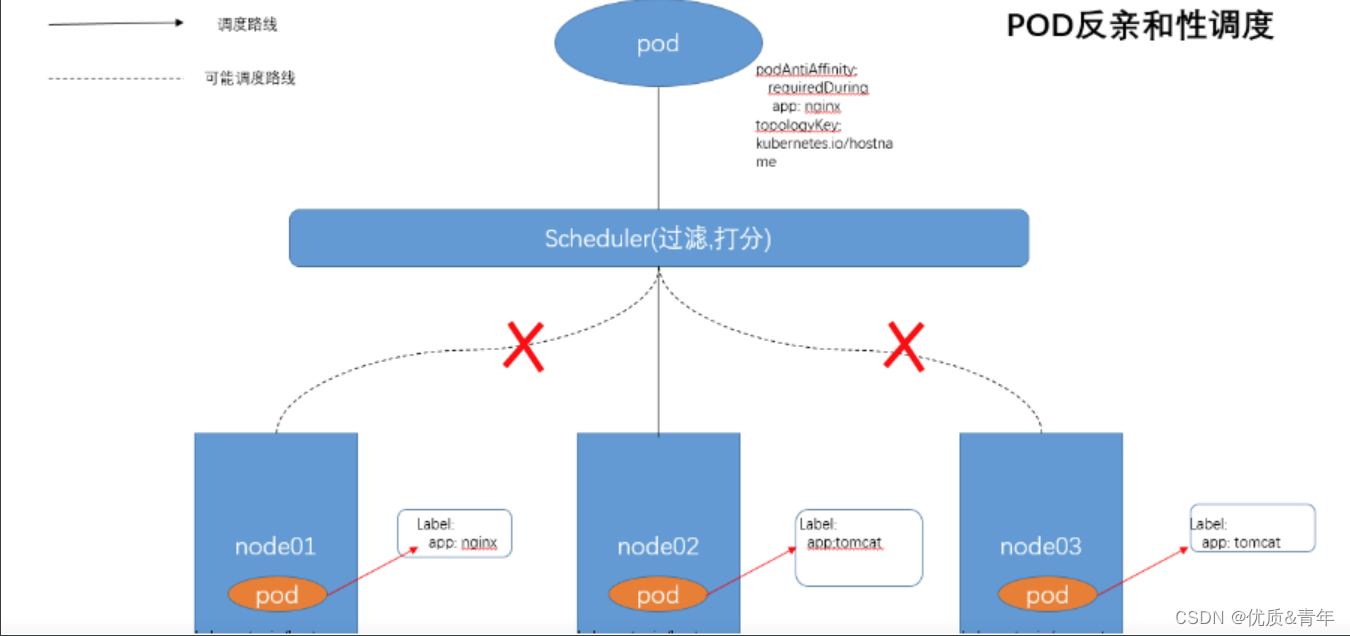
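
The manifests in this section use kubernetes.io/hostname as topologyKey, so "together" means "on the same node". topologyKey can name any node label, and nodes that share a value for that label form one topology domain. The sketch below widens the domain to a zone. It assumes the nodes carry the well-known topology.kubernetes.io/zone label, which the cluster in these notes does not show, and the Deployment name nginx-zone is hypothetical.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-zone           # hypothetical name
  namespace: webwork
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-zone
  template:
    metadata:
      labels:
        app: nginx-zone
    spec:
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: project
                operator: In
                values:
                - Linux
            # Same zone as a matching pod is enough; it need not be the same node.
            topologyKey: "topology.kubernetes.io/zone"   # assumes nodes are labeled with a zone
            namespaces:
            - webwork
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
```

Nodes that lack the topologyKey label belong to no domain, so with a hard rule they are never eligible.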

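1.3 Pod anti-affinity

1.3.1 Hard limit - requiredDuringSchedulingIgnoredDuringExecution

Example: keep the nginx pods off any node that runs a pod in the webwork namespace labeled project=Linux. If no node satisfies the rule or has enough resources, the pods cannot be scheduled.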

root@k8s-master1:/app/yaml/qhx# cat deply-pod5.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-1
      namespace: webwork
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
            city: beijing
        spec:
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: project
                    operator: In
                    values:
                    - Linux
                topologyKey: "kubernetes.io/hostname"
                namespaces:
                - webwork
          containers:
          - name: nginx
            image: nginx:latest
            ports:
            - containerPort: 80
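
A common high-availability use of hard anti-affinity is to make a Deployment repel its own replicas so that no two land on the same node. The following is a sketch under assumptions, not a manifest from the original notes: the name nginx-spread and the label app=nginx-spread are hypothetical, chosen so the selector does not collide with the app=nginx pods already running in webwork.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-spread         # hypothetical name
  namespace: webwork
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-spread
  template:
    metadata:
      labels:
        app: nginx-spread    # the anti-affinity rule below matches this same label
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - nginx-spread
            topologyKey: "kubernetes.io/hostname"   # at most one replica per node
            # namespaces is omitted, so the pod's own namespace (webwork) is used
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
```

Because the rule is hard, replicas beyond the number of schedulable nodes stay Pending; the soft variant trades that guarantee for always being schedulable.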

1.3.2 Soft limit - preferredDuringSchedulingIgnoredDuringExecution

root@k8s-master1:/app/yaml/qhx# cat deply-pod6.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
      namespace: webwork
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          affinity:
            podAntiAffinity:
              preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 100
                podAffinityTerm:
                  labelSelector:
                    matchExpressions:
                    - key: project
                      operator: In
                      values:
                      - Linux
                  topologyKey: "kubernetes.io/hostname"
                  namespaces:
                  - webwork
          containers:
          - name: nginx
            image: nginx:latest
            ports:
            - containerPort: 80