Table of Contents

- 1. Zone types

- 2. The zone structure

- 3. Zone initialization flow

1. Zone types

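In a NUMA system, each CPU is directly attached to its own local memory, and the processors are connected to one another through an interconnect bus. Any CPU can therefore access its local memory faster than remote memory. To support NUMA, Linux groups physical memory into nodes according to the CPU it is attached to: each CPU node has its local memory described by a pg_data_t.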

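This already describes physical memory well. In an ideal computer system, a page frame would be a unit of memory allocation that can be used for anything: kernel data, user data, buffered disk data, and so on. Any kind of data page could be stored in any page frame, without restriction.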

Yet the Linux kernel further divides each physical memory node into several management areas called zones. Why?

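Because real computer architectures have hardware limitations that restrict how page frames can be used. In particular, the Linux kernel has to deal with two hardware constraints.

DMA controllers on the ISA bus have a strict limitation: they can only address the first 16 MB of RAM.

On modern 32-bit computers with large amounts of RAM, the CPU cannot directly access all physical addresses: the linear address space is too small for the kernel to map all of physical memory into it directly.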
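To make the two constraints concrete, here is a minimal sketch that assigns a physical address to a zone using the classic x86-32 boundaries: 16 MiB for ISA DMA and 896 MiB for the end of the kernel's direct mapping (the kernel comment further below rounds this to "900MB"). The enum and the function `zone_of_paddr()` are hypothetical names for illustration; the real kernel computes zone boundaries per architecture at boot.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed limits modelled on classic x86-32 (3G/1G split, no PAE). */
#define DMA_LIMIT    (16ULL << 20)   /* ISA DMA can only reach the first 16 MiB */
#define NORMAL_LIMIT (896ULL << 20)  /* end of the kernel's direct (linear) mapping */

/* Hypothetical zone labels for this sketch only. */
enum sketch_zone { SKETCH_DMA, SKETCH_NORMAL, SKETCH_HIGHMEM };

/* Return the zone a physical address would fall into under the limits above. */
static enum sketch_zone zone_of_paddr(uint64_t paddr)
{
    if (paddr < DMA_LIMIT)
        return SKETCH_DMA;      /* usable by ISA DMA */
    if (paddr < NORMAL_LIMIT)
        return SKETCH_NORMAL;   /* permanently mapped by the kernel */
    return SKETCH_HIGHMEM;      /* needs temporary mappings to be accessed */
}
```

For example, `zone_of_paddr(16ULL << 20)` lands just past the DMA limit and is classified as ZONE_NORMAL, while anything from 896 MiB upward is high memory.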

Within each node, Linux divides memory into several zones, defined by the enum zone_type in mmzone.h:

```c
enum zone_type {
	/*
	 * ZONE_DMA and ZONE_DMA32 are used when there are peripherals not able
	 * to DMA to all of the addressable memory (ZONE_NORMAL).
	 * On architectures where this area covers the whole 32 bit address
	 * space ZONE_DMA32 is used. ZONE_DMA is left for the ones with smaller
	 * DMA addressing constraints. This distinction is important as a 32bit
	 * DMA mask is assumed when ZONE_DMA32 is defined. Some 64-bit
	 * platforms may need both zones as they support peripherals with
	 * different DMA addressing limitations.
	 */
#ifdef CONFIG_ZONE_DMA
	ZONE_DMA,
#endif
#ifdef CONFIG_ZONE_DMA32
	ZONE_DMA32,
#endif
	/*
	 * Normal addressable memory is in ZONE_NORMAL. DMA operations can be
	 * performed on pages in ZONE_NORMAL if the DMA devices support
	 * transfers to all addressable memory.
	 */
	ZONE_NORMAL,
#ifdef CONFIG_HIGHMEM
	/*
	 * A memory area that is only addressable by the kernel through
	 * mapping portions into its own address space. This is for example
	 * used by i386 to allow the kernel to address the memory beyond
	 * 900MB. The kernel will set up special mappings (page
	 * table entries on i386) for each page that the kernel needs to
	 * access.
	 */
	ZONE_HIGHMEM,
#endif
	/*
	 * ZONE_MOVABLE is similar to ZONE_NORMAL, except that it contains
	 * movable pages with few exceptional cases described below. Main use
	 * cases for ZONE_MOVABLE are to make memory offlining/unplug more
	 * likely to succeed, and to locally limit unmovable allocations - e.g.,
	 * to increase the number of THP/huge pages. Notable special cases are:
	 *
	 * 1. Pinned pages: (long-term) pinning of movable pages might
	 *    essentially turn such pages unmovable. Therefore, we do not allow
	 *    pinning long-term pages in ZONE_MOVABLE. When pages are pinned and
	 *    faulted, they come from the right zone right away. However, it is
	 *    still possible that address space already has pages in
	 *    ZONE_MOVABLE at the time when pages are pinned (i.e. user has
	 *    touches that memory before pinning). In such case we migrate them
	 *    to a different zone. When migration fails - pinning fails.
	 * 2. memblock allocations: kernelcore/movablecore setups might create
	 *    situations where ZONE_MOVABLE contains unmovable allocations
	 *    after boot. Memory offlining and allocations fail early.
	 * 3. Memory holes: kernelcore/movablecore setups might create very rare
	 *    situations where ZONE_MOVABLE contains memory holes after boot,
	 *    for example, if we have sections that are only partially
	 *    populated. Memory offlining and allocations fail early.
	 * 4. PG_hwpoison pages: while poisoned pages can be skipped during
	 *    memory offlining, such pages cannot be allocated.
	 * 5. Unmovable PG_offline pages: in paravirtualized environments,
	 *    hotplugged memory blocks might only partially be managed by the
	 *    buddy (e.g., via XEN-balloon, Hyper-V balloon, virtio-mem). The
	 *    parts not manged by the buddy are unmovable PG_offline pages. In
	 *    some cases (virtio-mem), such pages can be skipped during
	 *    memory offlining, however, cannot be moved/allocated. These
	 *    techniques might use alloc_contig_range() to hide previously
	 *    exposed pages from the buddy again (e.g., to implement some sort
	 *    of memory unplug in virtio-mem).
	 * 6. ZERO_PAGE(0), kernelcore/movablecore setups might create
	 *    situations where ZERO_PAGE(0) which is allocated differently
	 *    on different platforms may end up in a movable zone. ZERO_PAGE(0)
	 *    cannot be migrated.
	 * 7. Memory-hotplug: when using memmap_on_memory and onlining the
	 *    memory to the MOVABLE zone, the vmemmap pages are also placed in
	 *    such zone. Such pages cannot be really moved around as they are
	 *    self-stored in the range, but they are treated as movable when
	 *    the range they describe is about to be offlined.
	 *
	 * In general, no unmovable allocations that degrade memory offlining
	 * should end up in ZONE_MOVABLE. Allocators (like alloc_contig_range())
	 * have to expect that migrating pages in ZONE_MOVABLE can fail (even
	 * if has_unmovable_pages() states that there are no unmovable pages,
	 * there can be false negatives).
	 */
	ZONE_MOVABLE,
#ifdef CONFIG_ZONE_DEVICE
	ZONE_DEVICE,
#endif
	__MAX_NR_ZONES
};
```

Note that some of the zones above are defined like this:

```c
#ifdef CONFIG_HIGHMEM
	/*
	 * A memory area that is only addressable by the kernel through
	 * mapping portions into its own address space. This is for example
	 * used by i386 to allow the kernel to address the memory beyond
	 * 900MB. The kernel will set up special mappings (page
	 * table entries on i386) for each page that the kernel needs to
	 * access.
	 */
	ZONE_HIGHMEM,
#endif
```

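This shows that these zones are configurable and do not necessarily exist. For example, a 64-bit system does not need high memory. 64-bit Linux supports at most 64 TB of physical memory (x86-64 with 4-level page tables), and the virtual address space is split so that the 128 TB range 0x0000,0000,0000,0000 – 0x0000,7fff,ffff,f000 is used for user space, while the 128 TB above 0xffff,8000,0000,0000 is kernel space. This is far larger than the memory installed in today's systems, so all physical memory can be mapped directly into the virtual address space and the special high-memory mappings are unnecessary.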
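A quick sanity check of the arithmetic in the paragraph above, assuming the x86-64 4-level paging layout: each canonical half of the address space is 2^47 bytes (128 TiB), and 64 TiB of physical memory (2^46 bytes) fits comfortably inside the kernel half. The macro and function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define TiB (1ULL << 40)

/* Assumed x86-64 4-level paging layout: 47-bit virtual addresses per half. */
#define USER_END     0x00007fffffffffffULL  /* last address of the lower canonical half */
#define KERNEL_START 0xffff800000000000ULL  /* first address of the upper canonical half */

/* Size of [0, USER_END], the half used by user space. */
static uint64_t user_half_bytes(void)
{
    return USER_END + 1;
}

/* Size of [KERNEL_START, 2^64 - 1]; unsigned wrap-around makes
 * 0 - KERNEL_START equal to 2^64 - KERNEL_START. */
static uint64_t kernel_half_bytes(void)
{
    return (uint64_t)0 - KERNEL_START;
}
```

Both functions return 128 TiB, which is why the whole 64 TiB physical range can be given a permanent kernel mapping and ZONE_HIGHMEM is not needed.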

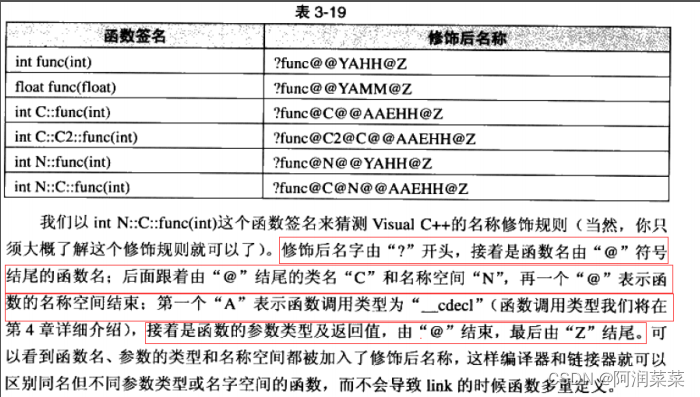

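Zone types are represented by zone_type and are as follows:

| Zone | Description |
|---|---|
| ZONE_DMA | For devices that can only DMA to a limited range of physical memory (on x86, the first 16 MB used by ISA devices). DMA pages are managed separately because: 1. DMA accesses memory by physical address, without going through the MMU; 2. DMA needs physically contiguous buffers. To provide such buffers, a dedicated region of the physical address space is set aside for DMA. |
| ZONE_DMA32 | For devices that can only DMA within the lower 4 GB (32-bit addresses); used on 64-bit platforms. |
| ZONE_NORMAL | Ordinary memory that the kernel maps directly (linearly), e.g. for kernel code, global variables and heap memory obtained with kmalloc. Memory obtained from here is usually physically contiguous, but allocations cannot be very large. |
| ZONE_HIGHMEM | Physical memory beyond the kernel's virtual address space, which therefore cannot be mapped directly by the kernel; it exists to solve the insufficient-mapping problem mentioned above. This area is fairly complex and can be subdivided into three parts. 64-bit CPUs do not run short of virtual addresses, so they have no high-memory zone, and since arm32 is fading away we will not discuss high memory further. |
| ZONE_MOVABLE | A pseudo zone used by the anti-fragmentation mechanism (memory migration). It holds movable pages, so pages can be migrated to reduce physical memory fragmentation and to make memory offlining/unplug more likely to succeed. |
| ZONE_DEVICE | Device memory, for example non-volatile (persistent) memory, added through memory hotplug to support hot-pluggable devices. |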

2. The zone structure

Each zone is described by struct zone, defined in linux/mmzone.h.

```c
struct zone {
	/* Read-mostly fields */

	/* zone watermarks, access with *_wmark_pages(zone) macros */
	unsigned long _watermark[NR_WMARK];
	unsigned long watermark_boost;

	unsigned long nr_reserved_highatomic;

	/*
	 * We don't know if the memory that we're going to allocate will be
	 * freeable or/and it will be released eventually, so to avoid totally
	 * wasting several GB of ram we must reserve some of the lower zone
	 * memory (otherwise we risk to run OOM on the lower zones despite
	 * there being tons of freeable ram on the higher zones). This array is
	 * recalculated at runtime if the sysctl_lowmem_reserve_ratio sysctl
	 * changes.
	 */
	long lowmem_reserve[MAX_NR_ZONES];
	/*
	 * Memory reserved in this zone: some code must allocate from low
	 * memory, so part of the low memory is held back in advance from
	 * allocations that could also be served by higher zones.
	 */

#ifdef CONFIG_NUMA
	int node;	/* with NUMA there are several nodes, so record which node this zone belongs to */
#endif
	struct pglist_data	*zone_pgdat;	/* the memory node this zone belongs to */
	struct per_cpu_pages	__percpu *per_cpu_pageset;
	struct per_cpu_zonestat	__percpu *per_cpu_zonestats;
	/*
	 * the high and batch values are copied to individual pagesets for
	 * faster access
	 */
	int pageset_high;
	int pageset_batch;

#ifndef CONFIG_SPARSEMEM
	/*
	 * Flags for a pageblock_nr_pages block. See pageblock-flags.h.
	 * In SPARSEMEM, this map is stored in struct mem_section
	 */
	unsigned long		*pageblock_flags;
#endif /* CONFIG_SPARSEMEM */

	/* zone_start_pfn == zone_start_paddr >> PAGE_SHIFT */
	unsigned long		zone_start_pfn;	/* first page frame number of the zone */

	/*
	 * spanned_pages is the total pages spanned by the zone, including
	 * holes, which is calculated as:
	 * 	spanned_pages = zone_end_pfn - zone_start_pfn;
	 *
	 * present_pages is physical pages existing within the zone, which
	 * is calculated as:
	 *	present_pages = spanned_pages - absent_pages(pages in holes);
	 *
	 * present_early_pages is present pages existing within the zone
	 * located on memory available since early boot, excluding hotplugged
	 * memory.
	 *
	 * managed_pages is present pages managed by the buddy system, which
	 * is calculated as (reserved_pages includes pages allocated by the
	 * bootmem allocator):
	 *	managed_pages = present_pages - reserved_pages;
	 *
	 * cma pages is present pages that are assigned for CMA use
	 * (MIGRATE_CMA).
	 *
	 * So present_pages may be used by memory hotplug or memory power
	 * management logic to figure out unmanaged pages by checking
	 * (present_pages - managed_pages). And managed_pages should be used
	 * by page allocator and vm scanner to calculate all kinds of watermarks
	 * and thresholds.
	 *
	 * Locking rules:
	 *
	 * zone_start_pfn and spanned_pages are protected by span_seqlock.
	 * It is a seqlock because it has to be read outside of zone->lock,
	 * and it is done in the main allocator path. But, it is written
	 * quite infrequently.
	 *
	 * The span_seq lock is declared along with zone->lock because it is
	 * frequently read in proximity to zone->lock. It's good to
	 * give them a chance of being in the same cacheline.
	 *
	 * Write access to present_pages at runtime should be protected by
	 * mem_hotplug_begin/done(). Any reader who can't tolerant drift of
	 * present_pages should use get_online_mems() to get a stable value.
	 */
	atomic_long_t		managed_pages;	/* present pages managed by the buddy system */
	unsigned long		spanned_pages;	/* total pages spanned, including holes */
	unsigned long		present_pages;	/* pages physically present, excluding holes */
#if defined(CONFIG_MEMORY_HOTPLUG)
	unsigned long		present_early_pages;
#endif
#ifdef CONFIG_CMA
	unsigned long		cma_pages;
#endif

	const char		*name;

#ifdef CONFIG_MEMORY_ISOLATION
	/*
	 * Number of isolated pageblock. It is used to solve incorrect
	 * freepage counting problem due to racy retrieving migratetype
	 * of pageblock. Protected by zone->lock.
	 */
	unsigned long		nr_isolate_pageblock;
#endif

#ifdef CONFIG_MEMORY_HOTPLUG
	/* see spanned/present_pages for more description */
	seqlock_t		span_seqlock;
#endif

	int initialized;

	/* Write-intensive fields used from the page allocator */
	CACHELINE_PADDING(_pad1_);

	/* free areas of different sizes */
	struct free_area	free_area[MAX_ORDER];	/* buddy system: the zone's memory is split into blocks of 2^n pages in 11 sizes; blocks of the same size are linked in lists */

	/* zone flags, see below */
	unsigned long		flags;
	/*
	 * Bits used in flags:
	 *
	 * enum zone_flags {
	 *	ZONE_BOOSTED_WATERMARK,	(zone recently boosted watermarks;
	 *				 cleared when kswapd is woken)
	 *	ZONE_RECLAIM_ACTIVE,	(kswapd may be scanning the zone)
	 * };
	 */

	/* Primarily protects free_area */
	spinlock_t		lock;

	/* Write-intensive fields used by compaction and vmstats. */
	CACHELINE_PADDING(_pad2_);

	/*
	 * When free pages are below this point, additional steps are taken
	 * when reading the number of free pages to avoid per-cpu counter
	 * drift allowing watermarks to be breached
	 */
	unsigned long percpu_drift_mark;

#if defined CONFIG_COMPACTION || defined CONFIG_CMA
	/* pfn where compaction free scanner should start */
	unsigned long		compact_cached_free_pfn;
	/* pfn where compaction migration scanner should start */
	unsigned long		compact_cached_migrate_pfn[ASYNC_AND_SYNC];
	unsigned long		compact_init_migrate_pfn;
	unsigned long		compact_init_free_pfn;
#endif

#ifdef CONFIG_COMPACTION
	/*
	 * On compaction failure, 1<<compact_defer_shift compactions
	 * are skipped before trying again. The number attempted since
	 * last failure is tracked with compact_considered.
	 * compact_order_failed is the minimum compaction failed order.
	 */
	unsigned int		compact_considered;
	unsigned int		compact_defer_shift;
	int			compact_order_failed;
#endif

#if defined CONFIG_COMPACTION || defined CONFIG_CMA
	/* Set to true when the PG_migrate_skip bits should be cleared */
	bool			compact_blockskip_flush;
#endif

	bool			contiguous;

	CACHELINE_PADDING(_pad3_);
	/* Zone statistics */
	atomic_long_t		vm_stat[NR_VM_ZONE_STAT_ITEMS];
	atomic_long_t		vm_numa_event[NR_VM_NUMA_EVENT_ITEMS];
} ____cacheline_internodealigned_in_smp;
```
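The comment in struct zone defines three page counts. The following sketch simply applies those three formulas (spanned, present, managed) to a zone with a list of holes. The types `struct pfn_range` and `struct zone_counts` and the function `compute_zone_counts()` are hypothetical; in the kernel these values are filled in during free_area_init() rather than by a single helper.

```c
#include <assert.h>

/* A hypothetical hole inside a zone, as a PFN range [start, end). */
struct pfn_range { unsigned long start, end; };

struct zone_counts {
    unsigned long spanned;  /* zone_end_pfn - zone_start_pfn, holes included */
    unsigned long present;  /* spanned minus pages in holes */
    unsigned long managed;  /* present minus reserved pages (not given to the buddy allocator) */
};

/* Holes are assumed to lie inside [start_pfn, end_pfn) and not to overlap. */
static struct zone_counts compute_zone_counts(unsigned long start_pfn, unsigned long end_pfn,
                                              const struct pfn_range *holes, int nr_holes,
                                              unsigned long reserved_pages)
{
    struct zone_counts c;
    unsigned long absent = 0;

    assert(start_pfn <= end_pfn);
    for (int i = 0; i < nr_holes; i++) {
        assert(holes[i].start <= holes[i].end);
        assert(holes[i].start >= start_pfn && holes[i].end <= end_pfn);
        absent += holes[i].end - holes[i].start;
    }
    c.spanned = end_pfn - start_pfn;          /* spanned_pages */
    c.present = c.spanned - absent;           /* present_pages */
    assert(reserved_pages <= c.present);
    c.managed = c.present - reserved_pages;   /* managed_pages */
    return c;
}
```

For a zone spanning PFNs 0x0 to 0x1000 with one 0x80-page hole and 0x40 reserved pages, this gives spanned = 0x1000, present = 0xf80 and managed = 0xf40; the difference present − managed is exactly what the comment says hotplug code uses to find unmanaged pages.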

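At boot, each zone computes its watermarks WMARK_MIN, WMARK_LOW and WMARK_HIGH, which are used by the page allocator and by kswapd page reclaim. The enum below, taken from a recent kernel, also contains WMARK_PROMO, which is used when promoting pages between memory tiers.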

```c
enum zone_watermarks {
	WMARK_MIN,
	WMARK_LOW,
	WMARK_HIGH,
	WMARK_PROMO,
	NR_WMARK
};
```
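As a rough illustration of where these values come from, the sketch below is a simplified model of the per-zone calculation in recent kernels (`__setup_per_zone_wmarks()` in mm/page_alloc.c): min_free_kbytes is converted to pages and shared among the low-memory zones in proportion to their managed pages, and LOW, HIGH and PROMO each add one further step. Highmem special cases and watermark_boost are ignored; the 4 KiB page size, the struct `wmarks` and the function `compute_wmarks()` are assumptions of this sketch.

```c
#include <assert.h>
#include <stdint.h>

#define SKETCH_PAGE_SHIFT 12  /* assumption: 4 KiB pages */

struct wmarks { uint64_t min, low, high, promo; };

/* min_free_kbytes and watermark_scale_factor mirror the vm sysctls of the same names. */
static struct wmarks compute_wmarks(uint64_t min_free_kbytes,
                                    uint64_t zone_managed,
                                    uint64_t lowmem_managed,
                                    uint64_t watermark_scale_factor)
{
    struct wmarks w;
    uint64_t pages_min = min_free_kbytes >> (SKETCH_PAGE_SHIFT - 10);
    uint64_t share, scaled, step;

    assert(lowmem_managed > 0 && zone_managed <= lowmem_managed);
    /* This zone's share of the global minimum, proportional to its size. */
    share = pages_min * zone_managed / lowmem_managed;
    w.min = share;
    /* Distance between marks: the larger of min/4 and
     * zone_managed * watermark_scale_factor / 10000. */
    scaled = zone_managed * watermark_scale_factor / 10000;
    step = (share >> 2) > scaled ? (share >> 2) : scaled;
    w.low = w.min + step;
    w.high = w.low + step;
    w.promo = w.high + step;
    return w;
}
```

With min_free_kbytes = 16384, a single zone of 1,000,000 managed pages and the default scale factor of 10, this gives min = 4096, low = 5120 and high = 6144 pages. The scale factor lets the LOW/HIGH gap grow with zone size instead of staying fixed relative to WMARK_MIN.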

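When available memory runs low, the kswapd kernel thread is woken up and starts reclaiming pages; the watermarks (WMARK_MIN, WMARK_LOW, WMARK_HIGH) govern this behavior.

Each zone has three levels, watermark[WMARK_MIN], watermark[WMARK_LOW] and watermark[WMARK_HIGH] (stored in _watermark[] and read through the *_wmark_pages(zone) macros), which indicate how much allocation pressure the zone is under:

| Watermark | Description |
|---|---|
| watermark[WMARK_MIN] | When the number of free pages drops to WMARK_MIN, pages are critically short: the allocating task has to reclaim pages itself (direct reclaim), in parallel with kswapd. In recent kernels this value is computed by __setup_per_zone_wmarks() from min_free_kbytes, which is shared among the zones in proportion to their managed pages. |
| watermark[WMARK_LOW] | When the number of free pages drops to WMARK_LOW, memory is starting to get tight: kswapd is woken up and starts reclaiming pages. |
| watermark[WMARK_HIGH] | When the number of free pages reaches WMARK_HIGH, memory is sufficient and no reclaim is needed, so kswapd goes back to sleep. In recent kernels WMARK_LOW and WMARK_HIGH are WMARK_MIN plus one and two steps respectively, where a step is the larger of WMARK_MIN/4 and managed_pages × watermark_scale_factor / 10000. |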
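The table can be read as a simple decision function. This sketch classifies a zone by its free page count and also shows the core test of the kernel's `__zone_watermark_ok()`, where free pages must exceed the mark plus the lowmem_reserve entry for the request's highest usable zone. The order-based checks and allocation-flag adjustments of the real function are deliberately left out, and the enum `zone_pressure` and functions `wmark_ok()`/`classify()` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical labels for the states described in the watermark table. */
enum zone_pressure {
    PRESSURE_DIRECT_RECLAIM,  /* free <= min: allocator must reclaim itself */
    PRESSURE_WAKE_KSWAPD,     /* min < free <= low: kswapd should be woken */
    PRESSURE_RECLAIMING,      /* low < free <= high: an awake kswapd keeps going */
    PRESSURE_BALANCED         /* free > high: kswapd can sleep */
};

/* Core comparison of __zone_watermark_ok(), simplified: the check fails
 * unless free pages exceed the mark plus the lowmem reserve. */
static bool wmark_ok(long free_pages, long mark, long lowmem_reserve)
{
    return free_pages > mark + lowmem_reserve;
}

static enum zone_pressure classify(long free_pages, long min, long low, long high)
{
    assert(min <= low && low <= high);
    if (!wmark_ok(free_pages, min, 0))
        return PRESSURE_DIRECT_RECLAIM;
    if (!wmark_ok(free_pages, low, 0))
        return PRESSURE_WAKE_KSWAPD;
    if (!wmark_ok(free_pages, high, 0))
        return PRESSURE_RECLAIMING;
    return PRESSURE_BALANCED;
}
```

Note how lowmem_reserve interacts with the watermarks: a lower zone with 1000 free pages and a min mark of 128 still refuses a fallback allocation when its reserve for the requesting zone is 900, because 1000 does not exceed 128 + 900.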

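Because page frames are allocated and freed very frequently, the kernel keeps a number of pre-reserved page frames in each zone. These page frames can only be used by requests from the local CPU and are kept in per_cpu_pages.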

```c
/* Fields and list protected by pagesets local_lock in page_alloc.c */
struct per_cpu_pages {
	spinlock_t lock;	/* Protects lists field */
	int count;		/* number of pages in the list */
	int high;		/* high watermark, emptying needed */
	int batch;		/* chunk size for buddy add/remove */
	short free_factor;	/* batch scaling factor during free */
#ifdef CONFIG_NUMA
	short expire;		/* When 0, remote pagesets are drained */
#endif

	/* Lists of pages, one per migrate type stored on the pcp-lists */
	struct list_head lists[NR_PCP_LISTS];
} ____cacheline_aligned_in_smp;
```

When the number of pages in per_cpu_pages exceeds high, batch pages are returned to the zone's buddy system. When the pcp list has no free pages left, batch pages are taken from the zone in one go.
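The high/batch behavior described above can be modelled with a counter. This is a toy model, not the kernel's `struct per_cpu_pages`: it tracks only page counts, `buddy_free` stands in for the zone's free pages, and the names `struct pcp_sketch`, `pcp_free_page()` and `pcp_alloc_page()` are hypothetical. The real kernel also adjusts how much it drains (free_factor, per-order lists), which this sketch ignores.

```c
#include <assert.h>

/* Toy per-CPU page cache: only counts are tracked. */
struct pcp_sketch {
    int count;  /* pages currently cached for this CPU */
    int high;   /* drain threshold */
    int batch;  /* pages moved to/from the buddy allocator at a time */
};

/* Free one page into the pcp list; once count exceeds high,
 * hand batch pages back to the buddy allocator. */
static void pcp_free_page(struct pcp_sketch *pcp, long *buddy_free)
{
    assert(pcp->batch <= pcp->high);
    pcp->count++;
    if (pcp->count > pcp->high) {
        pcp->count -= pcp->batch;
        *buddy_free += pcp->batch;
    }
}

/* Allocate one page; when the list is empty, refill up to batch pages
 * from the buddy allocator. Returns 0 on success, -1 if nothing is left. */
static int pcp_alloc_page(struct pcp_sketch *pcp, long *buddy_free)
{
    if (pcp->count == 0) {
        long take = *buddy_free < pcp->batch ? *buddy_free : pcp->batch;

        if (take == 0)
            return -1;
        *buddy_free -= take;
        pcp->count += (int)take;
    }
    pcp->count--;
    return 0;
}
```

Moving pages in batches amortizes the cost of taking zone->lock: most allocations and frees touch only the local CPU's list, and the shared buddy lists are locked once per batch.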

The pcp mechanism will be covered in more depth later.

```c
struct free_area free_area[MAX_ORDER];
```

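Buddy system: the zone's memory is divided into blocks of 2^n pages in 11 sizes (orders 0 to 10 with the default MAX_ORDER of 11), and blocks of the same size are linked together in lists. Each free_area keeps one free list per migrate type plus the number of free blocks of that order.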

```c
struct free_area {
	struct list_head	free_list[MIGRATE_TYPES];
	unsigned long		nr_free;
};
```

```c
enum migratetype {
	MIGRATE_UNMOVABLE,
	MIGRATE_MOVABLE,
	MIGRATE_RECLAIMABLE,
	MIGRATE_PCPTYPES,	/* the number of types on the pcp lists */
	MIGRATE_HIGHATOMIC = MIGRATE_PCPTYPES,
#ifdef CONFIG_CMA
	/*
	 * MIGRATE_CMA migration type is designed to mimic the way
	 * ZONE_MOVABLE works. Only movable pages can be allocated
	 * from MIGRATE_CMA pageblocks and page allocator never
	 * implicitly change migration type of MIGRATE_CMA pageblock.
	 *
	 * The way to use it is to change migratetype of a range of
	 * pageblocks to MIGRATE_CMA which can be done by
	 * __free_pageblock_cma() function.
	 */
	MIGRATE_CMA,
#endif
#ifdef CONFIG_MEMORY_ISOLATION
	MIGRATE_ISOLATE,	/* can't allocate from here */
#endif
	MIGRATE_TYPES
};
```
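The arithmetic behind the "2^n blocks" in free_area is compact: an order-n block starting at an aligned PFN has a buddy that differs only in bit n, and the two merge into an order-(n+1) block starting at the lower PFN. The sketch below shows that arithmetic, following the same idea as the kernel's `__find_buddy_pfn()` helper; `SKETCH_MAX_ORDER` and the function names are hypothetical, and the value 11 matches the `free_area[MAX_ORDER]` declaration above (newer kernels changed the meaning of MAX_ORDER).

```c
#include <assert.h>

#define SKETCH_MAX_ORDER 11  /* orders 0..10, as in free_area[MAX_ORDER] above */

/* Number of pages in a block of the given order: 2^order. */
static unsigned long order_pages(unsigned int order)
{
    assert(order < SKETCH_MAX_ORDER);
    return 1UL << order;
}

/* PFN of the buddy of the order-`order` block starting at `pfn`:
 * the two buddies differ only in bit `order`. */
static unsigned long buddy_pfn(unsigned long pfn, unsigned int order)
{
    assert(order < SKETCH_MAX_ORDER);
    assert((pfn & (order_pages(order) - 1)) == 0);  /* block must be naturally aligned */
    return pfn ^ (1UL << order);
}

/* Start PFN of the order+1 block formed when the two buddies merge. */
static unsigned long combined_pfn(unsigned long pfn, unsigned int order)
{
    return buddy_pfn(pfn, order) & pfn;
}
```

For example, the order-2 block at PFN 12 has its buddy at PFN 8, and when both are free they merge into the order-3 block at PFN 8, which then moves from free_area[2] to free_area[3].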


3. Zone initialization flow

```
setup_arch
  ---> bootmem_init
         ---> sparse_init
         ---> zone_sizes_init
                ---> free_area_init
                       ---> free_area_init_node
```

```c
void __init bootmem_init(void)
{
	unsigned long min, max;

	min = PFN_UP(memblock_start_of_DRAM());
	max = PFN_DOWN(memblock_end_of_DRAM());

	early_memtest(min << PAGE_SHIFT, max << PAGE_SHIFT);

	max_pfn = max_low_pfn = max;
	min_low_pfn = min;
	...
```

bootmem_init computes the lowest and highest PFNs of the physical memory managed by memblock; these values are used to initialize the zones.
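PFN_UP() and PFN_DOWN() round in opposite directions so that only whole pages inside the DRAM range are counted: the start address is rounded up to the next page boundary and the end address is rounded down. The sketch below reproduces that arithmetic from include/linux/pfn.h as plain functions; the 4 KiB page size and the example DRAM addresses are assumptions.

```c
#include <assert.h>
#include <stdint.h>

#define SKETCH_PAGE_SHIFT 12                          /* assumption: 4 KiB pages */
#define SKETCH_PAGE_SIZE  (1ULL << SKETCH_PAGE_SHIFT)

/* Same arithmetic as PFN_UP(): first PFN whose page starts at or after addr. */
static uint64_t pfn_up(uint64_t addr)
{
    return (addr + SKETCH_PAGE_SIZE - 1) >> SKETCH_PAGE_SHIFT;
}

/* Same arithmetic as PFN_DOWN(): PFN of the page containing addr. */
static uint64_t pfn_down(uint64_t addr)
{
    return addr >> SKETCH_PAGE_SHIFT;
}
```

With DRAM at 0x40000000 to 0x80000000, min = 0x40000 and max = 0x80000; a start address of 0x40000001 would instead round up to PFN 0x40001, skipping the partial first page.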

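free_area_init initializes the pg_data_t structure of every node and the zones of each node. Node initialization was covered in a previous article; in short, the number of nodes and the memory range of each node are parsed from the device tree.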

It then calls free_area_init_node for each node:

```c
static void __init free_area_init_node(int nid)
{
	pg_data_t *pgdat = NODE_DATA(nid);
	unsigned long start_pfn = 0;
	unsigned long end_pfn = 0;

	/* pg_data_t should be reset to zero when it's allocated */
	WARN_ON(pgdat->nr_zones || pgdat->kswapd_highest_zoneidx);

	get_pfn_range_for_nid(nid, &start_pfn, &end_pfn);

	pgdat->node_id = nid;
	pgdat->node_start_pfn = start_pfn;
	pgdat->per_cpu_nodestats = NULL;

	if (start_pfn != end_pfn) {
		pr_info("Initmem setup node %d [mem %#018Lx-%#018Lx]\n", nid,
			(u64)start_pfn << PAGE_SHIFT,
			end_pfn ? ((u64)end_pfn << PAGE_SHIFT) - 1 : 0);
	} else {
		pr_info("Initmem setup node %d as memoryless\n", nid);
	}

	calculate_node_totalpages(pgdat, start_pfn, end_pfn);

	alloc_node_mem_map(pgdat);
	pgdat_set_deferred_range(pgdat);

	free_area_init_core(pgdat);
}
```

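This initializes part of pg_data_t. It mainly computes the number of memory pages of each node (each node's memory ranges are kept in memblock and are used to set up the node's range) and the number of pages in holes, and for each zone its start PFN, usable pages (excluding the part used to store mem_map), spanned pages (including holes), present pages (the physical pages actually present), the free_area lists, and so on.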
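The first step, get_pfn_range_for_nid(), takes the lowest start and the highest end over all memblock regions of a node; pages inside that span but not covered by any region are holes. The sketch below models this over a plain array; `struct region`, `node_pfn_range()` and `node_absent_pages()` are hypothetical stand-ins for memblock and the kernel's hole accounting, and regions are assumed not to overlap.

```c
#include <assert.h>
#include <limits.h>

/* Hypothetical stand-in for a memblock memory region, already in PFNs. */
struct region { int nid; unsigned long start_pfn, end_pfn; };

/* Like get_pfn_range_for_nid(): the node spans from the lowest region
 * start to the highest region end. Returns 0 for a memoryless node,
 * leaving *start == *end == 0. */
static int node_pfn_range(const struct region *r, int n, int nid,
                          unsigned long *start, unsigned long *end)
{
    *start = ULONG_MAX;
    *end = 0;
    for (int i = 0; i < n; i++) {
        if (r[i].nid != nid)
            continue;
        if (r[i].start_pfn < *start)
            *start = r[i].start_pfn;
        if (r[i].end_pfn > *end)
            *end = r[i].end_pfn;
    }
    if (*start == ULONG_MAX) {
        *start = 0;
        return 0;
    }
    return 1;
}

/* Pages inside the node's span that no region covers (holes). */
static unsigned long node_absent_pages(const struct region *r, int n, int nid)
{
    unsigned long start, end, present = 0;

    if (!node_pfn_range(r, n, nid, &start, &end))
        return 0;
    for (int i = 0; i < n; i++)
        if (r[i].nid == nid)
            present += r[i].end_pfn - r[i].start_pfn;
    assert(present <= end - start);  /* regions are assumed not to overlap */
    return (end - start) - present;
}
```

A node whose regions are 0x40000–0x50000 and 0x60000–0x80000 spans 0x40000 pages, of which 0x10000 are a hole; a node with no regions takes the "memoryless" branch seen in free_area_init_node.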

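With the flat memory model (FLATMEM), alloc_node_mem_map allocates space for the node_mem_map of pg_data_t.

Otherwise alloc_node_mem_map is an empty function, because the sparse memory model (SPARSEMEM) does not keep the struct pages of physical memory in pg_data_t's node_mem_map.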
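Under FLATMEM the PFN-to-page lookup is a single array index; the generic memory model computes `mem_map + (pfn - ARCH_PFN_OFFSET)`. The sketch below models that with a tiny stand-in for struct page; `struct page_sketch`, `struct flat_memmap` and the two functions are hypothetical. Because the array needs one entry for every PFN in the span, holes included, large sparse address spaces waste memory this way, which is what SPARSEMEM avoids.

```c
#include <assert.h>

/* Tiny stand-in for struct page; the real structure is much larger. */
struct page_sketch { unsigned long flags; };

struct flat_memmap {
    struct page_sketch *mem_map;  /* one entry per PFN in the span, holes included */
    unsigned long first_pfn;      /* plays the role of ARCH_PFN_OFFSET */
    unsigned long nr_pages;       /* length of mem_map */
};

/* FLATMEM-style pfn_to_page: index the array by the offset from first_pfn. */
static struct page_sketch *flat_pfn_to_page(const struct flat_memmap *m, unsigned long pfn)
{
    assert(pfn >= m->first_pfn && pfn - m->first_pfn < m->nr_pages);
    return m->mem_map + (pfn - m->first_pfn);
}

/* The inverse: pointer difference from the start of mem_map, plus the offset. */
static unsigned long flat_page_to_pfn(const struct flat_memmap *m, const struct page_sketch *page)
{
    return (unsigned long)(page - m->mem_map) + m->first_pfn;
}
```

Both directions are O(1) pointer arithmetic, which is the main attraction of the flat model on machines with one contiguous block of RAM.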

This diagram nicely illustrates the relationships between the different levels of memory management:

![Relationships between the levels of memory management](https://img-blog.csdnimg.cn/c3072285eb4c4142b81c6cf33e3a29c0.png)

The next section introduces struct page.