import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader

# CIFAR-10 test split (10,000 images), used here only as a small demo set;
# ToTensor() turns each 32x32 RGB image into a float tensor of shape (3, 32, 32)
dataset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True,
                                       transform=torchvision.transforms.ToTensor())

dataloader = DataLoader(dataset, batch_size=64, shuffle=True)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model1 = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, padding=2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(1024, 64),  # 64 channels * 4 * 4 spatial positions = 1024
            nn.Linear(64, 10)     # one logit per CIFAR-10 class
        )

    def forward(self, x):
        x = self.model1(x)
        return x


tudui = Tudui()
# Cross-entropy expects raw logits of shape (N, 10) and integer class targets of shape (N,)
loss = nn.CrossEntropyLoss()
# Plain stochastic gradient descent with learning rate 0.01
optim = torch.optim.SGD(tudui.parameters(), lr=0.01)
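Why the first `nn.Linear` takes 1024 inputs can be checked by hand. The sketch below applies the per-dimension output-size formula from the PyTorch `nn.Conv2d` and `nn.MaxPool2d` documentation (assuming the defaults used above: dilation 1, `ceil_mode=False`, pooling stride equal to the kernel size) to a 32x32 CIFAR-10 image. The helpers `conv2d_out` and `pool2d_out` are hypothetical names introduced only for this illustration.

```python
def conv2d_out(size, kernel_size, stride=1, padding=0, dilation=1):
    """Spatial output size of nn.Conv2d along one dimension."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def pool2d_out(size, kernel_size, stride=None, padding=0, dilation=1):
    """Spatial output size of nn.MaxPool2d (ceil_mode=False); stride defaults to kernel_size."""
    if stride is None:
        stride = kernel_size
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


size = 32  # CIFAR-10 images are 32x32
size = pool2d_out(conv2d_out(size, kernel_size=5, padding=2), kernel_size=2)  # 32 -> 32 -> 16
size = pool2d_out(conv2d_out(size, kernel_size=5, padding=2), kernel_size=2)  # 16 -> 16 -> 8
size = pool2d_out(conv2d_out(size, kernel_size=5, padding=2), kernel_size=2)  # 8 -> 8 -> 4

channels = 64  # out_channels of the last Conv2d
flatten_features = channels * size * size
print(size, flatten_features)  # 4 1024
```

Each `Conv2d(kernel_size=5, padding=2)` keeps the spatial size and each `MaxPool2d(2)` halves it, so any change to the input resolution or padding changes this number and the first `Linear` layer's `in_features` must be updated to match.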

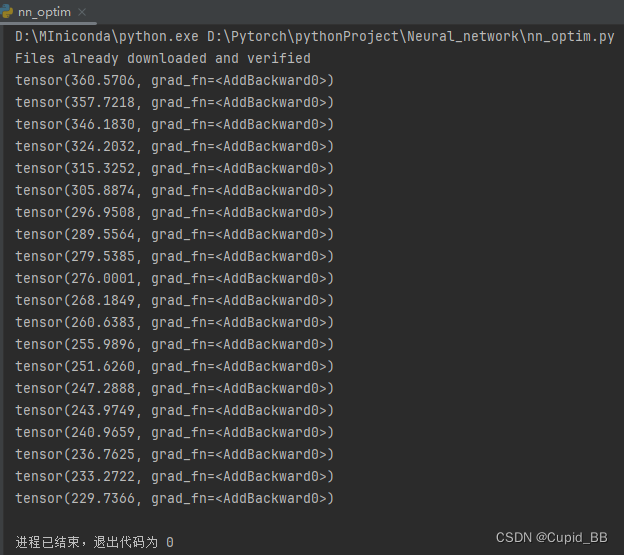
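`nn.CrossEntropyLoss` receives the raw logits from the network and the integer class indices in `targets`. With the default `reduction='mean'` and no class weights, it takes the negative log of the softmax probability assigned to the correct class and averages that over the batch. The pure-Python sketch below spells this out for a toy batch; `cross_entropy` is a hypothetical helper (not part of PyTorch) and the logits are made-up numbers.

```python
import math


def cross_entropy(logits_batch, targets):
    """Mean of -log softmax(logits)[target] over the batch (class-index targets)."""
    total = 0.0
    for logits, target in zip(logits_batch, targets):
        # log-sum-exp, shifted by the max logit for numerical stability
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        # -log softmax(logits)[target] = log_sum_exp - logits[target]
        total += log_sum_exp - logits[target]
    return total / len(targets)


# Toy batch: 2 samples, 3 classes
logits_batch = [[0.1, 0.2, 0.3],
                [2.0, 0.0, 0.0]]
targets = [1, 0]
print(cross_entropy(logits_batch, targets))
```

This is why the network can end with a plain `nn.Linear(64, 10)` and no softmax layer: `nn.CrossEntropyLoss` already combines the log-softmax and the negative log-likelihood step.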

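for epoch in range(20):
    running_loss = 0.0
    for data in dataloader:
        imgs, targets = data
        outputs = tudui(imgs)
        result_loss = loss(outputs, targets)
        optim.zero_grad()        # clear gradients left over from the previous step
        result_loss.backward()   # compute gradients of the loss w.r.t. every parameter
        optim.step()             # update the parameters with SGD
        # .item() extracts a Python float; adding the tensor itself would
        # accumulate autograd history across the whole epoch
        running_loss += result_loss.item()
    print(running_loss)  # sum of the per-batch mean losses for this epoch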
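With only `lr` set, `torch.optim.SGD` applies the plain update `param <- param - lr * grad` to every parameter on each `optim.step()`. Gradients computed by `backward()` are added to whatever is already stored in `.grad`, which is why `optim.zero_grad()` runs before each backward pass. The pure-Python sketch below mimics those three calls on a single scalar parameter minimizing the hypothetical loss `(w - 3) ** 2`; the `Param` class, the helper functions, and the loss are illustrative assumptions, not PyTorch code.

```python
class Param:
    """Hypothetical stand-in for a tensor with a .grad slot."""

    def __init__(self, value):
        self.value = value
        self.grad = 0.0


def backward(p):
    # d/dw (w - 3)^2 = 2 * (w - 3); added into .grad, mirroring autograd accumulation
    p.grad += 2 * (p.value - 3.0)


def zero_grad(p):
    p.grad = 0.0


def sgd_step(p, lr):
    # Vanilla SGD (no momentum, no weight decay): w <- w - lr * grad
    p.value -= lr * p.grad


w = Param(0.0)
lr = 0.1
for step in range(50):
    zero_grad(w)
    backward(w)
    sgd_step(w, lr)
print(w.value)  # close to the minimum at 3.0

# Skipping zero_grad() lets gradients pile up across calls:
q = Param(0.0)
backward(q)
backward(q)
print(q.grad)  # -12.0, twice the single-call gradient of -6.0
```

In the training loop above, this update happens once per batch: with 10,000 images and `batch_size=64`, that is 157 steps per epoch (the last batch is partial).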