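For the past three weeks or so, I've been following the crazy pace of development around locally run large language models (LLMs), starting with llama.cpp, then alpaca and, most recently (?!), gpt4all.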

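During that time, my laptop (a mid-2015 16GB MacBook Pro) spent more than a week in the repair shop, so only now have I had a chance for a quick play. I knew ten days ago what sort of thing I wanted to try, but it has only really become possible in the last couple of days.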

The following script can be downloaded as a Jupyter notebook from this gist.

GPT4All Langchain Demo

Example of locally running GPT4All, a 4GB, llama.cpp-based large language model (LLM), under [langchain](https://github.com/langchain-ai/langchain), in a Jupyter notebook running a Python 3.10 kernel.

Tested on a mid-2015 16GB MacBook Pro, concurrently running Docker (a single container running a separate Jupyter server) and Chrome (with approx. 40 open tabs).

Model preparation

- download `gpt4all` model: https://the-eye.eu/public/AI/models/nomic-ai/gpt4all/gpt4all-lora-quantized.bin

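- download `llama.cpp` 7B model (its `llama/tokenizer.model` file is needed for the conversion step):

```python
# Uncomment to install pyllama and fetch the 7B weights into llama/
#%pip install pyllama
#!python3.10 -m llama.download --model_size 7B --folder llama/
```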

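- transform `gpt4all` model:

```python
# Uncomment to install pyllamacpp and convert the downloaded gpt4all model,
# using the LLaMA tokenizer, into a file llama.cpp can load
#%pip install pyllamacpp
#!pyllamacpp-convert-gpt4all ./gpt4all-main/chat/gpt4all-lora-quantized.bin llama/tokenizer.model ./gpt4all-main/chat/gpt4all-lora-q-converted.bin

GPT4ALL_MODEL_PATH = "./gpt4all-main/chat/gpt4all-lora-q-converted.bin"
```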
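Before loading the converted model, it can save some confusion to check that the file exists and is roughly the expected size (the load log below reports a model size of about 4GB). The `check_model_file` helper here is a hypothetical convenience, not part of pyllamacpp or langchain, and its 1000 MB threshold is an arbitrary assumption.

```python
from pathlib import Path

# Same path as in the cell above; adjust if your checkout lives elsewhere.
GPT4ALL_MODEL_PATH = "./gpt4all-main/chat/gpt4all-lora-q-converted.bin"


def check_model_file(path: str, min_mb: float = 1000.0) -> float:
    """Return the model file size in MB, raising if it is missing or suspiciously small.

    min_mb is a hypothetical sanity threshold: the converted 7B model is around 4GB,
    so a much smaller file probably means an interrupted download or conversion.
    """
    p = Path(path)
    if not p.is_file():
        raise FileNotFoundError(f"model file not found: {p}")
    size_mb = p.stat().st_size / (1024 * 1024)
    if size_mb < min_mb:
        raise ValueError(f"{p} is only {size_mb:.1f} MB; expected a multi-GB model file")
    return size_mb
```

In the notebook, `check_model_file(GPT4ALL_MODEL_PATH)` would be called just before creating the `LlamaCpp` object.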

langchain Demo

Example of running a prompt using langchain.

```python
#https://python.langchain.com/en/latest/ecosystem/llamacpp.html
#%pip uninstall -y langchain
#%pip install --upgrade git+https://github.com/hwchase17/langchain.git

from langchain.llms import LlamaCpp
from langchain import PromptTemplate, LLMChain
```

- set up prompt template:

```python
template = """
Question: {question}

Answer: Let's think step by step.

"""

prompt = PromptTemplate(template=template, input_variables=["question"])
```
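With langchain's default f-string template format (an assumption about the version used here), `PromptTemplate` fills the `{question}` placeholder much as Python's own `str.format` does. The sketch below uses plain `str.format`, via a hypothetical `render` helper, to preview the text the model actually receives; it approximates `prompt.format(question=...)` rather than reproducing langchain's code.

```python
# Same template text as the notebook cell above.
template = """
Question: {question}

Answer: Let's think step by step.

"""


def render(template: str, **variables: str) -> str:
    """Approximate PromptTemplate.format() for f-string style templates."""
    return template.format(**variables)


print(render(template, question="What is ACID?"))
```

Seeing the rendered prompt makes it clear that the "Let's think step by step." nudge is part of every question sent through this template.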

- load model:

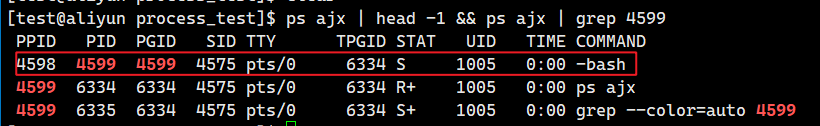

```python
%%time
llm = LlamaCpp(model_path=GPT4ALL_MODEL_PATH)
```

```
llama_model_load: loading model from './gpt4all-main/chat/gpt4all-lora-q-converted.bin' - please wait ...
llama_model_load: n_vocab = 32001
llama_model_load: n_ctx = 512
llama_model_load: n_embd = 4096
llama_model_load: n_mult = 256
llama_model_load: n_head = 32
llama_model_load: n_layer = 32
llama_model_load: n_rot = 128
llama_model_load: f16 = 2
llama_model_load: n_ff = 11008
llama_model_load: n_parts = 1
llama_model_load: type = 1
llama_model_load: ggml map size = 4017.70 MB
llama_model_load: ggml ctx size = 81.25 KB
llama_model_load: mem required = 5809.78 MB (+ 2052.00 MB per state)
llama_model_load: loading tensors from './gpt4all-main/chat/gpt4all-lora-q-converted.bin'
llama_model_load: model size = 4017.27 MB / num tensors = 291
llama_init_from_file: kv self size = 512.00 MB

CPU times: user 572 ms, sys: 711 ms, total: 1.28 s
Wall time: 1.42 s
```
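The load log lists the model's hyperparameters. As a sanity check on how they fit together, the sketch below parses a few of those lines (the `parse_hparams` helper is hypothetical, not part of llama.cpp or langchain) and recomputes two derived values: the per-head dimension `n_embd / n_head`, and the feed-forward width `n_ff`, assuming the integer rounding formula llama.cpp used for LLaMA models at the time.

```python
import re

# A few lines copied from the llama_model_load output above.
LOG = """
llama_model_load: n_vocab = 32001
llama_model_load: n_ctx = 512
llama_model_load: n_embd = 4096
llama_model_load: n_mult = 256
llama_model_load: n_head = 32
llama_model_load: n_layer = 32
llama_model_load: n_rot = 128
llama_model_load: n_ff = 11008
"""


def parse_hparams(log: str) -> dict[str, int]:
    """Collect 'llama_model_load: name = <int>' lines into a dict."""
    pattern = re.compile(r"llama_model_load:\s+(\w+)\s*=\s*(\d+)\s*$", re.MULTILINE)
    return {name: int(value) for name, value in pattern.findall(log)}


hp = parse_hparams(LOG)

# Each attention head gets n_embd / n_head dimensions.
head_dim = hp["n_embd"] // hp["n_head"]

# Assumed llama.cpp formula: take 2/3 of 4 * n_embd (integer division),
# then round up to the next multiple of n_mult.
n_ff = ((2 * (4 * hp["n_embd"]) // 3 + hp["n_mult"] - 1) // hp["n_mult"]) * hp["n_mult"]

print(head_dim, n_ff)
```

With the logged values, the head dimension is 4096 / 32 = 128, matching `n_rot`, and the rounded feed-forward width is 11008, matching `n_ff`.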

- create language chain using prompt template and loaded model:

```python
llm_chain = LLMChain(prompt=prompt, llm=llm)
```
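Conceptually, running an `LLMChain` renders the prompt template with the supplied input and passes the resulting string to the LLM. The sketch below mimics that flow with a hypothetical `MiniChain` class and a stub "model" (a plain function), so it runs without any weights; it is a simplification, not langchain's implementation, which also deals with callbacks, output keys and multiple inputs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class MiniChain:
    """Hypothetical stand-in for LLMChain: format the template, then call the model."""

    template: str
    llm: Callable[[str], str]

    def run(self, question: str) -> str:
        prompt_text = self.template.format(question=question)
        return self.llm(prompt_text)


def echo_llm(prompt_text: str) -> str:
    """Stub 'model' that only reports how long its prompt was."""
    return f"received {len(prompt_text)} characters"


chain = MiniChain(template="Question: {question}\n\nAnswer:", llm=echo_llm)
print(chain.run("What is ACID?"))
```

Swapping `echo_llm` for a real model call is, roughly, what passing `llm=llm` to `LLMChain` does.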

- run prompt:

```python
%%time
question = "What NFL team won the Super Bowl in the year Justin Bieber was born?"
llm_chain.run(question)
```

```
CPU times: user 5min 2s, sys: 4.17 s, total: 5min 6s
Wall time: 43.7 s

'1) The year Justin Bieber was born (2005):\n2) Justin Bieber was born on March 1, 1994:\n3) The Buffalo Bills won Super Bowl XXVIII over the Dallas Cowboys in 1994:\nTherefore, the NFL team that won the Super Bowl in the year Justin Bieber was born is the Buffalo Bills.'
```
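The `%%time` figures show CPU time running well ahead of wall-clock time (5min 6s of CPU in 43.7 s). That is consistent with llama.cpp spreading the work over several threads, and dividing one by the other gives a rough figure for how many cores were busy on average. A small sketch, where `to_seconds` and `parallelism` are hypothetical helpers that parse the IPython time strings shown above:

```python
import re

# Units that appear in IPython's %%time output.
_UNITS = {"min": 60.0, "s": 1.0, "ms": 1e-3, "µs": 1e-6, "ns": 1e-9}


def to_seconds(text: str) -> float:
    """Convert strings such as '5min 6s', '43.7 s' or '572 ms' to seconds."""
    total = 0.0
    # 'min' and 'ms' are tried before the bare 's' so they are not split.
    for value, unit in re.findall(r"([\d.]+)\s*(min|ms|µs|ns|s)", text):
        total += float(value) * _UNITS[unit]
    return total


def parallelism(cpu_total: str, wall: str) -> float:
    """Average number of cores kept busy: total CPU time / wall-clock time."""
    return to_seconds(cpu_total) / to_seconds(wall)


print(round(parallelism("5min 6s", "43.7 s"), 1))
```

For this run the ratio is about 7.0; the next example (14min 42s of CPU in 2min 4s) gives about 7.1.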

Another example…

```python
template2 = """
Question: {question}

Answer:

"""

prompt2 = PromptTemplate(template=template2, input_variables=["question"])

# Build the second chain from the new, simpler prompt2 template
llm_chain2 = LLMChain(prompt=prompt2, llm=llm)
```

```python
%%time
question2 = "What is a relational database and what is ACID in that context?"
llm_chain2.run(question2)
```

```
CPU times: user 14min 37s, sys: 5.56 s, total: 14min 42s
Wall time: 2min 4s

"A relational database is a type of database management system (DBMS) that stores data in tables where each row represents one entity or object (e.g., customer, order, or product), and each column represents a property or attribute of the entity (e.g., first name, last name, email address, or shipping address).\n\nACID stands for Atomicity, Consistency, Isolation, Durability:\n\nAtomicity: The transaction's effects are either all applied or none at all; it cannot be partially applied. For example, if a customer payment is made but not authorized by the bank, then the entire transaction should fail and no changes should be committed to the database.\nConsistency: Once a transaction has been committed, its effects should be durable (i.e., not lost), and no two transactions can access data in an inconsistent state. For example, if one transaction is in progress while another transaction attempts to update the same data, both transactions should fail.\nIsolation: Each transaction should execute without interference from other concurrently executing transactions, thereby ensuring its properties are applied atomically and consistently. For example, two transactions cannot affect each other's data"
```

Generating embeddings

We can also use the llama.cpp model to generate embeddings.

```python
#https://abetlen.github.io/llama-cpp-python/
#%pip uninstall -y llama-cpp-python
#%pip install --upgrade llama-cpp-python

from langchain.embeddings import LlamaCppEmbeddings

llama = LlamaCppEmbeddings(model_path=GPT4ALL_MODEL_PATH)
```

```
llama_model_load: loading model from './gpt4all-main/chat/gpt4all-lora-q-converted.bin' - please wait ...
llama_model_load: n_vocab = 32001
llama_model_load: n_ctx = 512
llama_model_load: n_embd = 4096
llama_model_load: n_mult = 256
llama_model_load: n_head = 32
llama_model_load: n_layer = 32
llama_model_load: n_rot = 128
llama_model_load: f16 = 2
llama_model_load: n_ff = 11008
llama_model_load: n_parts = 1
llama_model_load: type = 1
llama_model_load: ggml map size = 4017.70 MB
llama_model_load: ggml ctx size = 81.25 KB
llama_model_load: mem required = 5809.78 MB (+ 2052.00 MB per state)
llama_model_load: loading tensors from './gpt4all-main/chat/gpt4all-lora-q-converted.bin'
llama_model_load: model size = 4017.27 MB / num tensors = 291
llama_init_from_file: kv self size = 512.00 MB
```

```python
%%time
text = "This is a test document."
query_result = llama.embed_query(text)
```

```
CPU times: user 12.9 s, sys: 1.57 s, total: 14.5 s
Wall time: 2.13 s
```

```python
%%time
doc_result = llama.embed_documents([text])
```

```
CPU times: user 10.4 s, sys: 59.7 ms, total: 10.4 s
Wall time: 1.47 s
```
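`embed_query` returns a single vector (a list of floats), whereas `embed_documents` returns one vector per input text, so `doc_result[0]` is the vector for `text`. Cosine similarity is a common way to compare such vectors; since the same sentence was embedded twice, the two results should presumably score at or very close to 1.0. A plain-Python sketch, with toy vectors standing in for the real embeddings (whose length is presumably 4096, matching `n_embd` in the load log):

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# In the notebook you would compare the real vectors instead, e.g.
#   cosine_similarity(query_result, doc_result[0])
print(cosine_similarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]))
```

Parallel vectors score 1.0, orthogonal ones 0.0 and opposite ones -1.0, regardless of vector length.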

Next, I'll try using the llama embeddings to create a simple database, and then try running a Q&A prompt against the source documents…
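As a rough sketch of where that could go (not what the author went on to build): store each document's text alongside its embedding, rank documents by cosine similarity to the query embedding, and paste the best matches into a Q&A prompt. `SimpleVectorStore` and `build_qa_prompt` are hypothetical names, the prompt wording is made up, and the toy 3-dimensional vectors stand in for what `llama.embed_documents` and `llama.embed_query` would return.

```python
import math
from typing import List, Sequence, Tuple


def _normalise(v: Sequence[float]) -> List[float]:
    """Scale a non-zero vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


class SimpleVectorStore:
    """Hypothetical in-memory store: brute-force cosine search over unit vectors."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, List[float]]] = []

    def add(self, text: str, embedding: Sequence[float]) -> None:
        self._items.append((text, _normalise(embedding)))

    def search(self, query_embedding: Sequence[float], k: int = 2) -> List[str]:
        q = _normalise(query_embedding)
        scored = [(sum(a * b for a, b in zip(q, vec)), text) for text, vec in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


def build_qa_prompt(question: str, context: List[str]) -> str:
    """Stuff the retrieved passages into a simple question-answering prompt."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Use the context to answer.\n\nContext:\n{joined}\n\nQuestion: {question}\nAnswer:"


store = SimpleVectorStore()
store.add("Cats are small domesticated mammals.", [0.9, 0.1, 0.0])
store.add("ACID describes database transaction guarantees.", [0.0, 0.2, 0.95])
store.add("Dogs are loyal companions.", [0.8, 0.3, 0.1])

top = store.search([0.05, 0.1, 0.9], k=1)
print(build_qa_prompt("What does ACID mean?", top))
```

Brute-force search is fine for a handful of documents; the resulting prompt string could then be passed to the `llm` object loaded earlier.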

This article: Running GPT4All on a Mac Using Python langchain in a Jupyter Notebook | 开发者开聊

