Chain a grounding object detection model with SAM to perform instance segmentation. Grounding DINO and GLIP are currently supported.

Reference tutorial:

MMDetection-SAM
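Both installation paths below compile CUDA ops, so PyTorch, GCC and NVCC must be compatible, and GLIP is noted as not yet supporting PyTorch 1.11+. The script below is a minimal sketch for checking this before building. It only reports what is installed. The `< 1.11` cut-off encodes that note and nothing more, and the helper names (`glip_supports_torch`, `tool_output`, `torch_info`) are hypothetical. The exact compatible version matrix should be taken from each project's own README.

```python
import re
import shutil
import subprocess
from typing import Dict, Optional


def glip_supports_torch(torch_version: str) -> bool:
    """True if the PyTorch version is below 1.11 (local suffixes like '+cu113' are ignored)."""
    match = re.match(r"(\d+)\.(\d+)", torch_version)
    if match is None:
        raise ValueError(f"unrecognised version string: {torch_version!r}")
    return (int(match.group(1)), int(match.group(2))) < (1, 11)


def tool_output(cmd: str) -> Optional[str]:
    """Return what `<cmd> --version` prints, or None if cmd is not on PATH."""
    if shutil.which(cmd) is None:
        return None
    result = subprocess.run([cmd, "--version"], capture_output=True, text=True)
    return result.stdout or result.stderr


def torch_info() -> Optional[Dict[str, Optional[str]]]:
    """PyTorch version and the CUDA version it was built with, or None if not installed."""
    try:
        import torch
    except ImportError:
        return None
    return {"torch": torch.__version__, "cuda": torch.version.cuda}


if __name__ == "__main__":
    info = torch_info()
    gcc = tool_output("gcc")
    nvcc = tool_output("nvcc")
    # nvcc prints e.g. "Cuda compilation tools, release 11.3, V11.3.109"
    nvcc_release = re.search(r"release (\d+\.\d+)", nvcc or "")
    print("PyTorch:", info)
    print("gcc    :", gcc.splitlines()[0] if gcc else "not found")
    print("nvcc   :", nvcc_release.group(1) if nvcc_release else "not found")
    if info is not None:
        print("PyTorch < 1.11 (GLIP):", glip_supports_torch(info["torch"]))
```

Comparing the `cuda` value reported by PyTorch with the nvcc release is the main thing to look at. A mismatch there is a common cause of CUDA op build failures.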

For Grounding DINO, install the following dependencies:

cd playground

pip install git+https://github.com/facebookresearch/segment-anything.git

pip install git+https://github.com/IDEA-Research/GroundingDINO.git # compiles CUDA ops; make sure your PyTorch, GCC and NVCC versions are compatible

For GLIP, install the following dependencies instead:

cd playground

pip install git+https://github.com/facebookresearch/segment-anything.git

pip install einops shapely timm yacs tensorboardX ftfy prettytable pymongo transformers nltk inflect scipy pycocotools opencv-python matplotlib

git clone https://github.com/microsoft/GLIP.git

cd GLIP; python setup.py build develop --user # compiles CUDA ops; make sure your PyTorch, GCC and NVCC versions are compatible. PyTorch 1.11+ is not supported yet

Running the demo script fails with an error:

(mmdet-sam) hadoop@server:~/jupyter/mmdet-sam/playground/mmdet_sam$ python detector_sam_demo.py ../images/cat_remote.jpg configs/GroundingDINO_SwinT_OGC.py ../models/groundingdino_swint_ogc.pth -t "cat . remote" --sam-device cpu

[nltk_data] Downloading package punkt to /home/hadoop/nltk_data...

[nltk_data] Package punkt is already up-to-date!

[nltk_data] Downloading package averaged_perceptron_tagger to /home/hadoop/nltk_data...

[nltk_data] Package averaged_perceptron_tagger is already up-to-date!

final text_encoder_type: bert-base-uncased

pytorch_model.bin: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████| 440M/440M [00:25<00:00, 17.2MB/s]

Some weights of the model checkpoint at bert-base-uncased were not used when initializing BertModel: ['cls.predictions.transform.dense.bias', 'cls.predictions.decoder.weight', 'cls.predictions.transform.dense.weight', 'cls.predictions.transform.LayerNorm.bias', 'cls.seq_relationship.bias', 'cls.seq_relationship.weight', 'cls.predictions.transform.LayerNorm.weight', 'cls.predictions.bias']

- This IS expected if you are initializing BertModel from the checkpoint of a model trained on another task or with another architecture (e.g. initializing a BertForSequenceClassification model from a BertForPreTraining model).

- This IS NOT expected if you are initializing BertModel from the checkpoint of a model that you expect to be exactly identical (initializing a BertForSequenceClassification model from a BertForSequenceClassification model).

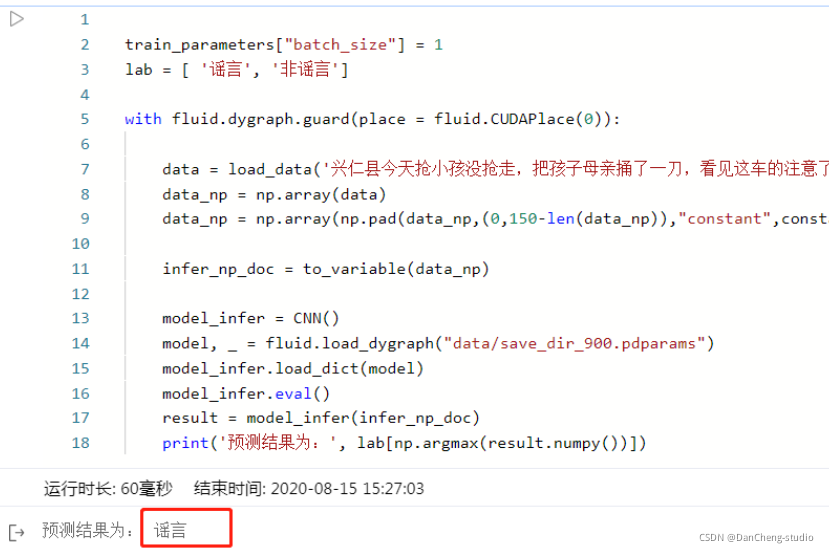

Traceback (most recent call last):

File "detector_sam_demo.py", line 511, in <module>

main()

File "detector_sam_demo.py", line 438, in main

det_model = build_detecter(args)

File "detector_sam_demo.py", line 160, in build_detecter

detecter = __build_grounding_dino_model(args)

File "detector_sam_demo.py", line 117, in __build_grounding_dino_model

checkpoint = torch.load(args.det_weight, map_location='cpu')

File "/home/hadoop/anaconda3/envs/mmdet-sam/lib/python3.8/site-packages/torch/serialization.py", line 600, in load

with _open_zipfile_reader(opened_file) as opened_zipfile:

File "/home/hadoop/anaconda3/envs/mmdet-sam/lib/python3.8/site-packages/torch/serialization.py", line 242, in __init__

super(_open_zipfile_reader, self).__init__(torch._C.PyTorchFileReader(name_or_buffer))

OSError: [Errno 22] Invalid argument

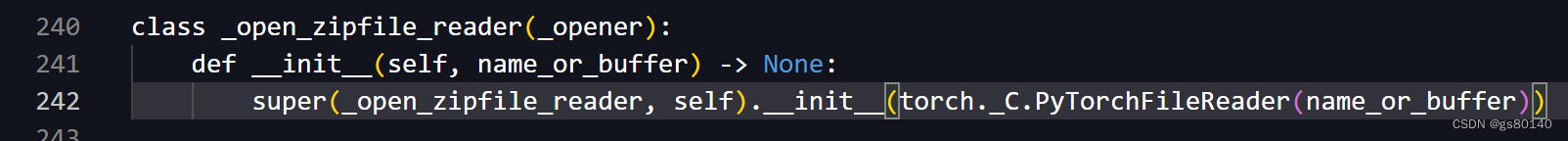

Line 242 of torch/serialization.py, the last frame before the error, looks like this:

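This statement opens the file that was passed in, so the thing to check is the input argument, i.e. the checkpoint path.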

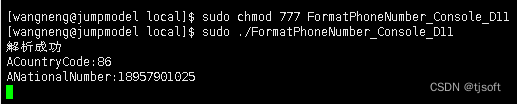

Model path: ../models/groundingdino_swint_ogc.pth

The model file turned out to be only 44K, which cannot be right. I re-downloaded it (the complete file is 662M) and ran the demo again.
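A broken checkpoint like this can be caught before `torch.load` is ever called. The sketch below assumes the checkpoint was saved in PyTorch's default zip-based format (the format `torch.save` has used since PyTorch 1.6). It checks the file size against a minimum and verifies the archive with Python's standard `zipfile` module. A legacy, non-zip checkpoint would be flagged falsely. The function name `check_checkpoint` and the 600M threshold are hypothetical choices, the threshold being derived only from the 662M size mentioned above.

```python
import os
import zipfile
from typing import List


def check_checkpoint(path: str, min_bytes: int = 0) -> List[str]:
    """Return a list of problems with a checkpoint file; an empty list means none were found."""
    if not os.path.isfile(path):
        return [f"{path}: file not found"]
    problems = []
    size = os.path.getsize(path)
    if size < min_bytes:
        problems.append(f"{path}: {size} bytes, expected at least {min_bytes}")
    # A truncated download loses the end-of-archive record that is_zipfile() looks for.
    if not zipfile.is_zipfile(path):
        problems.append(f"{path}: not a complete zip archive")
        return problems
    try:
        with zipfile.ZipFile(path) as zf:
            bad_member = zf.testzip()  # name of the first member with a bad CRC, or None
    except zipfile.BadZipFile as exc:
        problems.append(f"{path}: unreadable zip archive ({exc})")
        return problems
    if bad_member is not None:
        problems.append(f"{path}: corrupted member {bad_member}")
    return problems


if __name__ == "__main__":
    # Hypothetical threshold, set somewhat below the ~662M size of the correct file.
    for msg in check_checkpoint("../models/groundingdino_swint_ogc.pth",
                                min_bytes=600 * 1024 * 1024):
        print(msg)
```

`testzip()` reads every member, so on a file of several hundred megabytes it takes a few seconds. The size check alone would already have flagged the 44K file.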

Searching online for this error did not turn up an answer; what actually solved it was carefully checking my own input arguments.
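One way to make that check routine is to print the size of every file argument before launching the demo, in the style of `ls -lh`, so a 44K "model" stands out immediately. This is a minimal sketch. The paths are the three positional arguments from the demo command above, and the labels `image`, `config` and `checkpoint` and the helper names `human_size` and `preflight` are hypothetical and used only for display.

```python
import os
from typing import Dict, Optional


def human_size(num_bytes: int) -> str:
    """Format a byte count with 1024-based units (B, K, M, G), like `ls -lh`."""
    size = float(num_bytes)
    for unit in ("B", "K", "M"):
        if size < 1024:
            return f"{size:.1f}{unit}"
        size /= 1024
    return f"{size:.1f}G"


def preflight(paths: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Map each argument label to its file size, or None if the path is not a file."""
    return {
        name: human_size(os.path.getsize(path)) if os.path.isfile(path) else None
        for name, path in paths.items()
    }


if __name__ == "__main__":
    # Positional arguments of the demo command, relative to playground/mmdet_sam.
    args = {
        "image": "../images/cat_remote.jpg",
        "config": "configs/GroundingDINO_SwinT_OGC.py",
        "checkpoint": "../models/groundingdino_swint_ogc.pth",
    }
    for name, size in preflight(args).items():
        print(f"{name:<10} {args[name]}: {size or 'MISSING'}")
```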