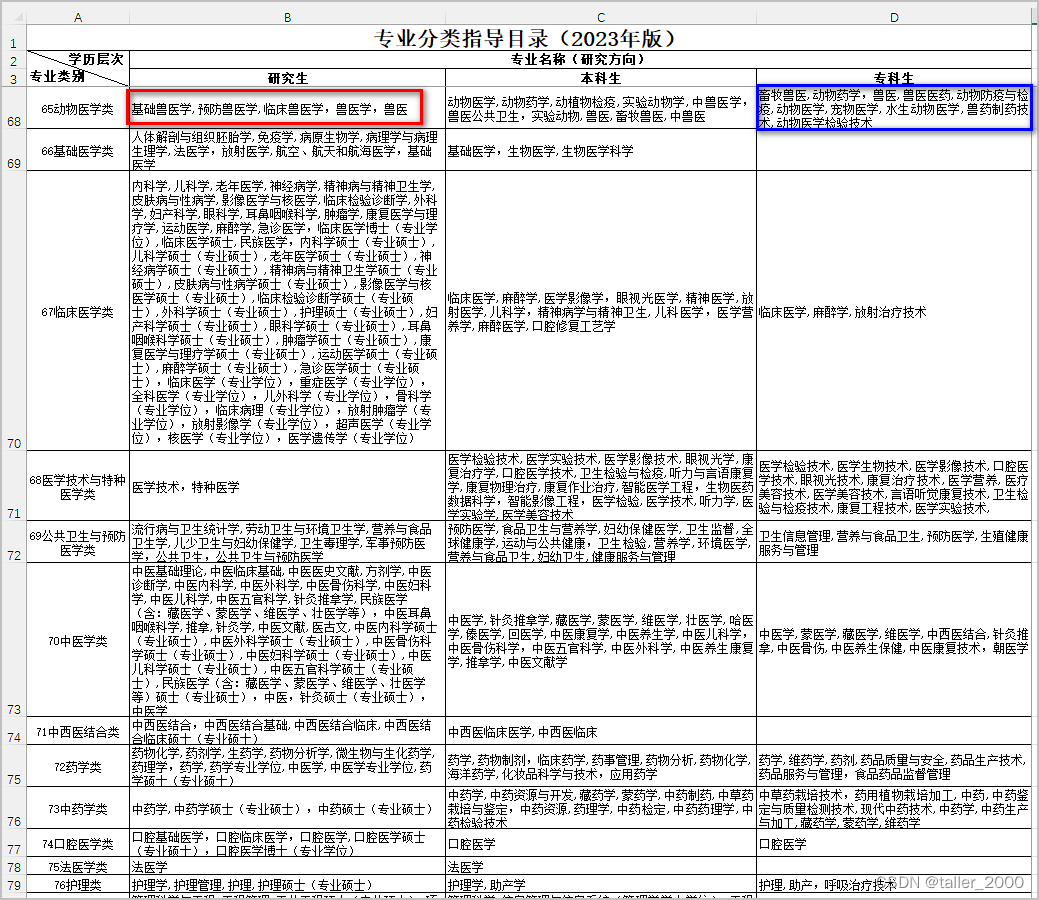

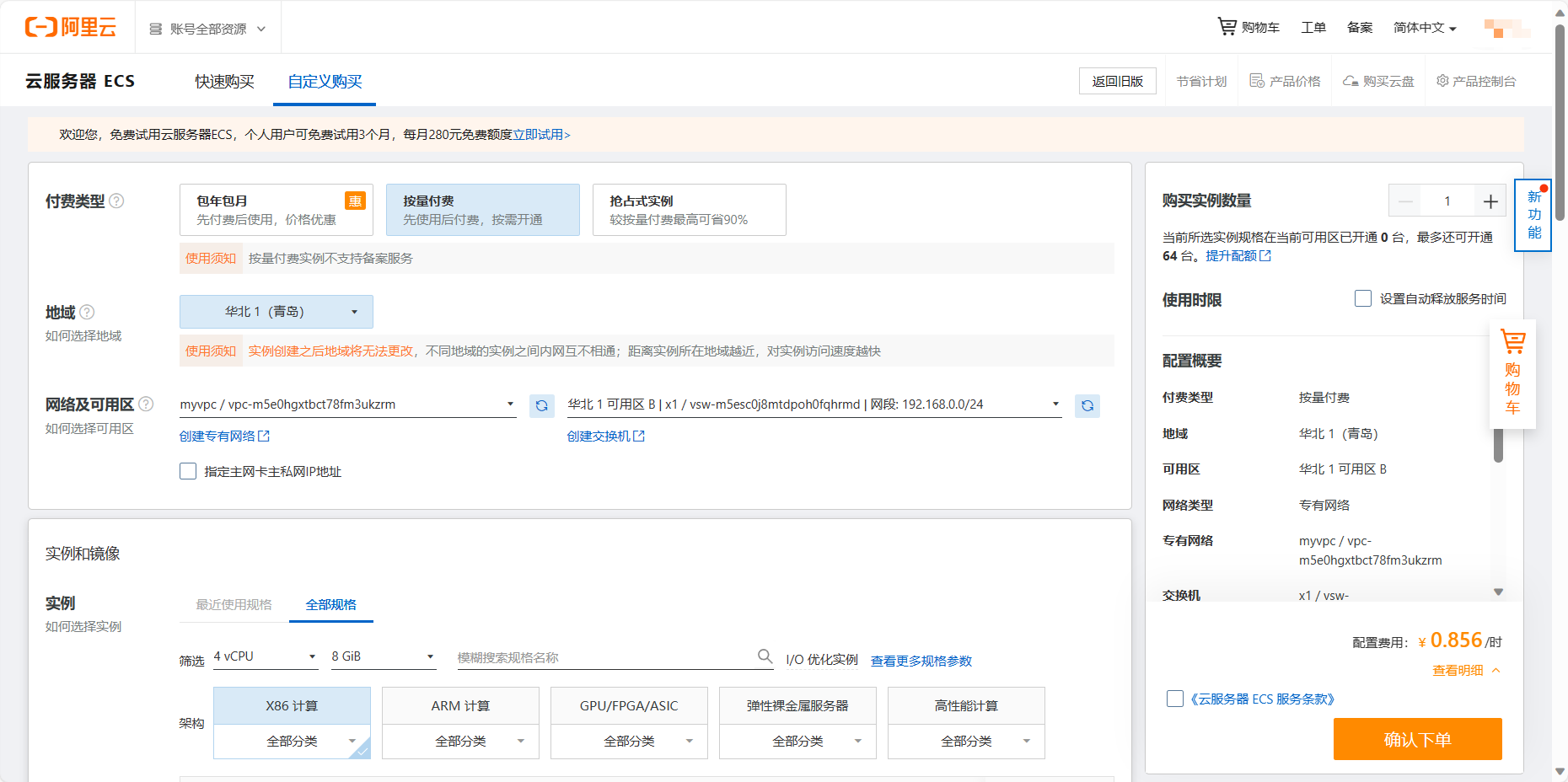

Alibaba Cloud ECS

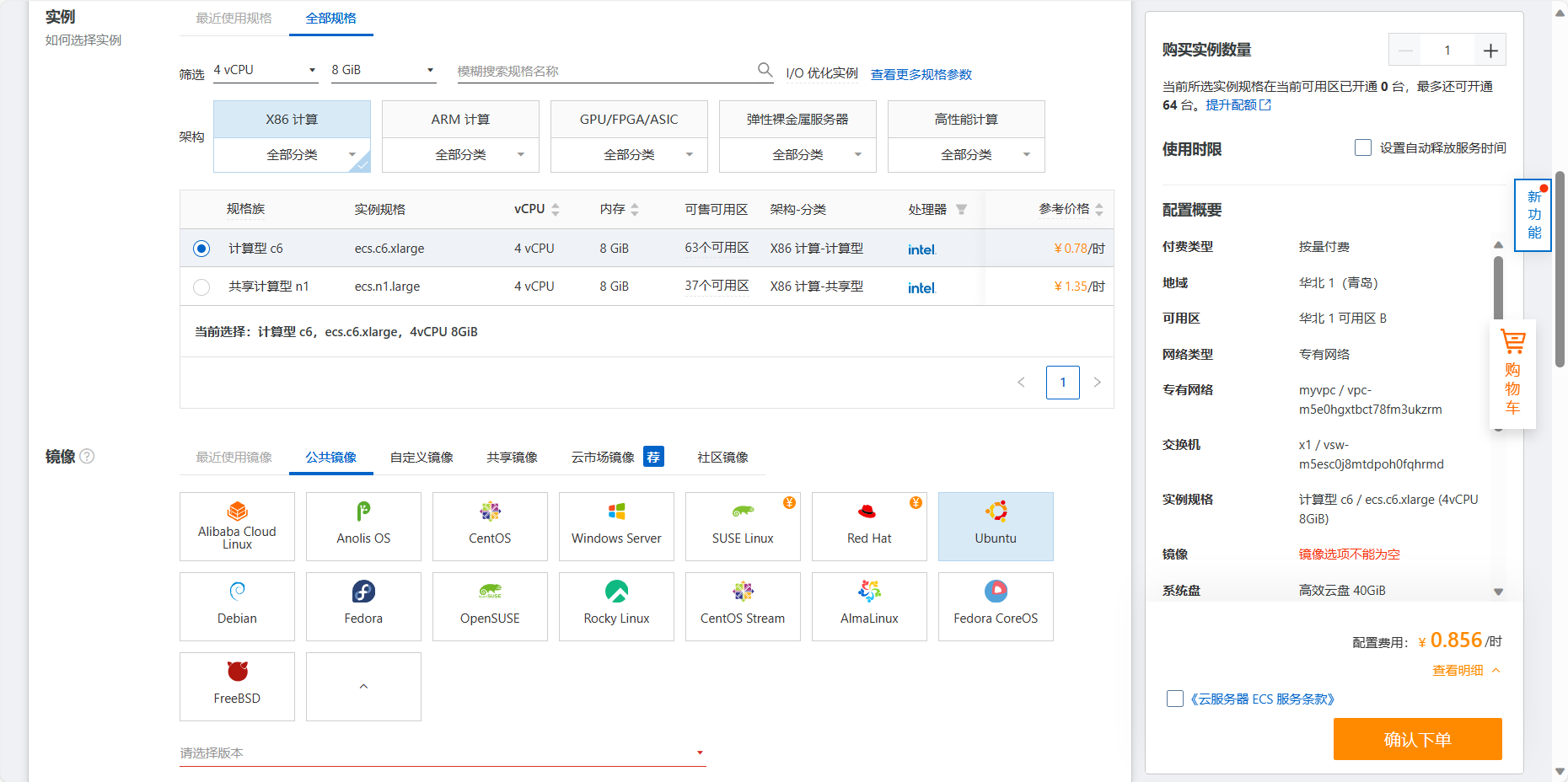

Create an instance

Just fill in the password.

On the cloud, firewall settings are handled through security groups.

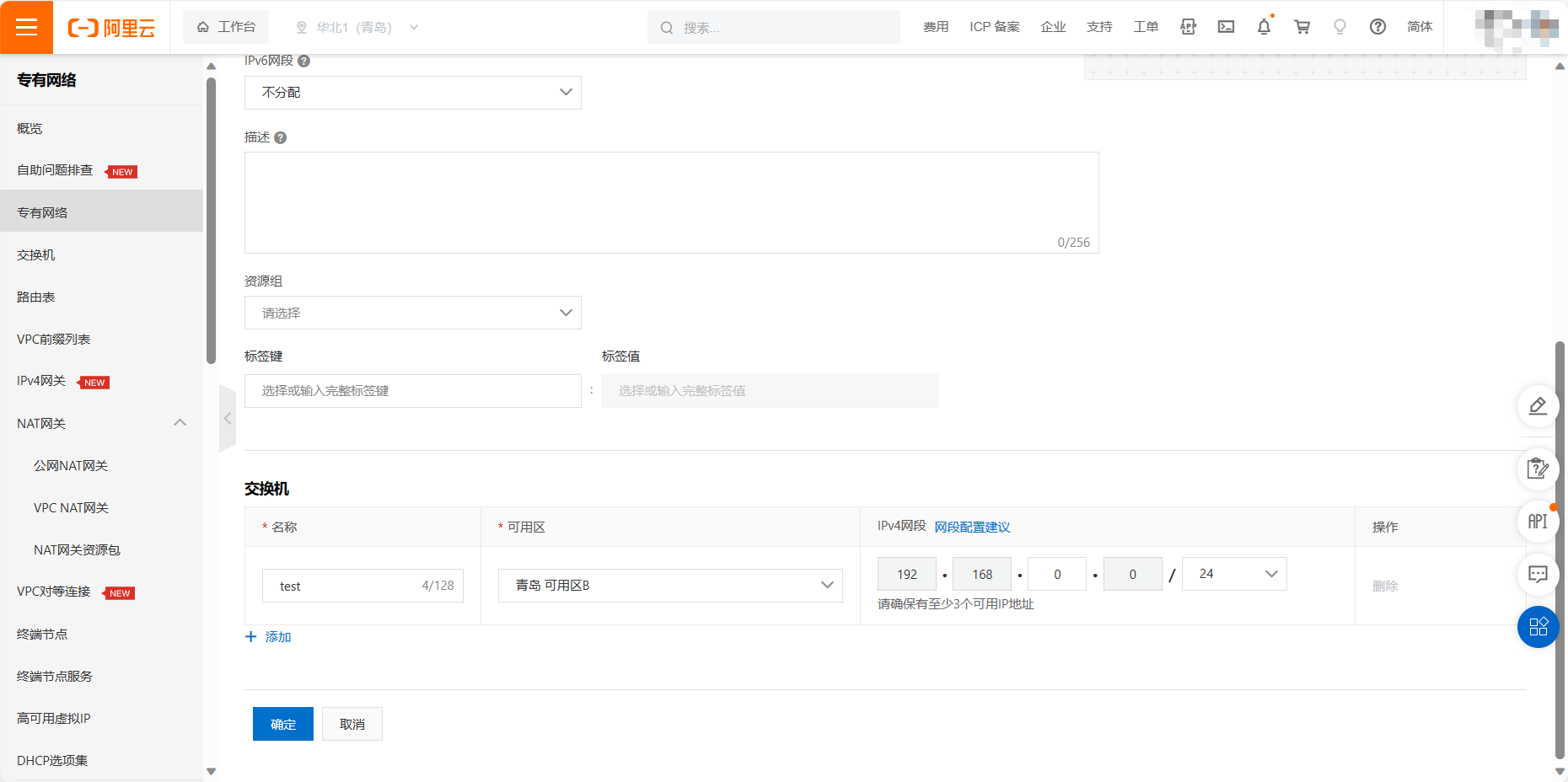

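VPC (Virtual Private Cloud): carves private IP space into subnets.

A VPC is an isolated network domain. Instances in different VPCs cannot reach each other, even when they use the same subnet range.

Use vSwitches to divide a VPC further into subnets.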
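The subnet division described above can be sketched with Python's standard `ipaddress` module. The VPC range `172.16.0.0/16` and the `/24` vSwitch size are illustrative assumptions, not values taken from the console.

```python
import ipaddress

# Hypothetical VPC CIDR block; use whatever private range your VPC has.
vpc = ipaddress.ip_network("172.16.0.0/16")

# Carve the VPC into /24 subnets, one per vSwitch (illustrative size).
vswitch_subnets = list(vpc.subnets(new_prefix=24))

print(len(vswitch_subnets))   # how many /24 subnets fit in the VPC
print(vswitch_subnets[0])     # first vSwitch subnet
print(vswitch_subnets[1])     # second vSwitch subnet

# A private IP inside the VPC belongs to exactly one vSwitch subnet.
host = ipaddress.ip_address("172.16.0.2")
owner = next(s for s in vswitch_subnets if host in s)
print(owner)
```

Two VPCs can both contain `172.16.0.0/24`; the ranges are identical, but the networks stay isolated because each VPC is its own domain.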

Once an instance is stopped and released, it is no longer billed.

k8s

Servers: 1 × 4-core 8 GB + 2 × 8-core 16 GB

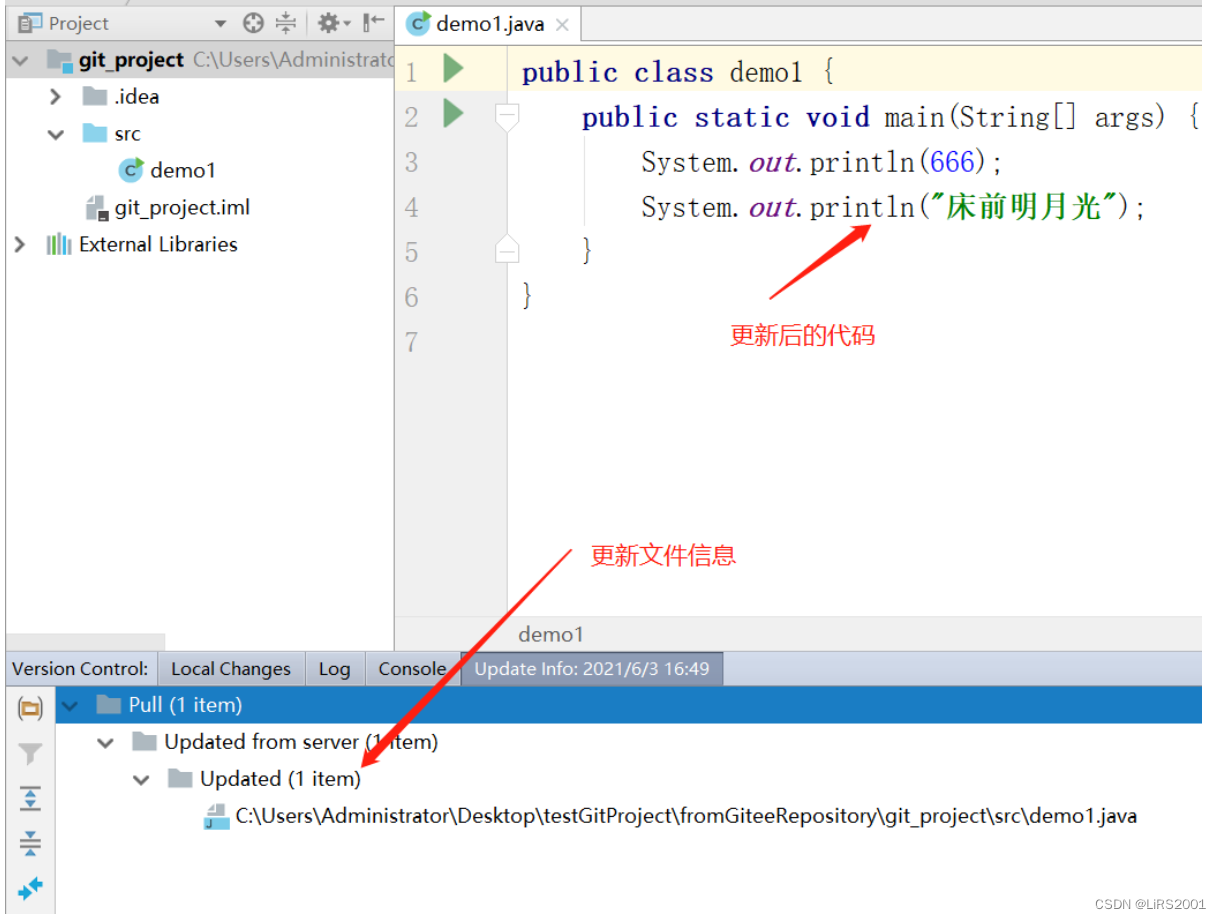

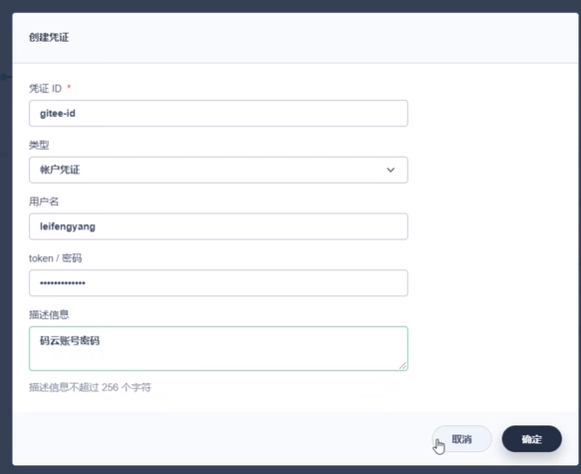

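Git: create a credential

Pipeline: send email (p124)

To send email from k8s, log in with the admin account and go to Platform > Platform Settings > Notification Management > Mail.

As admin: Workloads > jenkinsfile > edit the configuration file > configure the mailbox.

Both addresses need to be configured.

Pipeline: push the image

Create a credential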

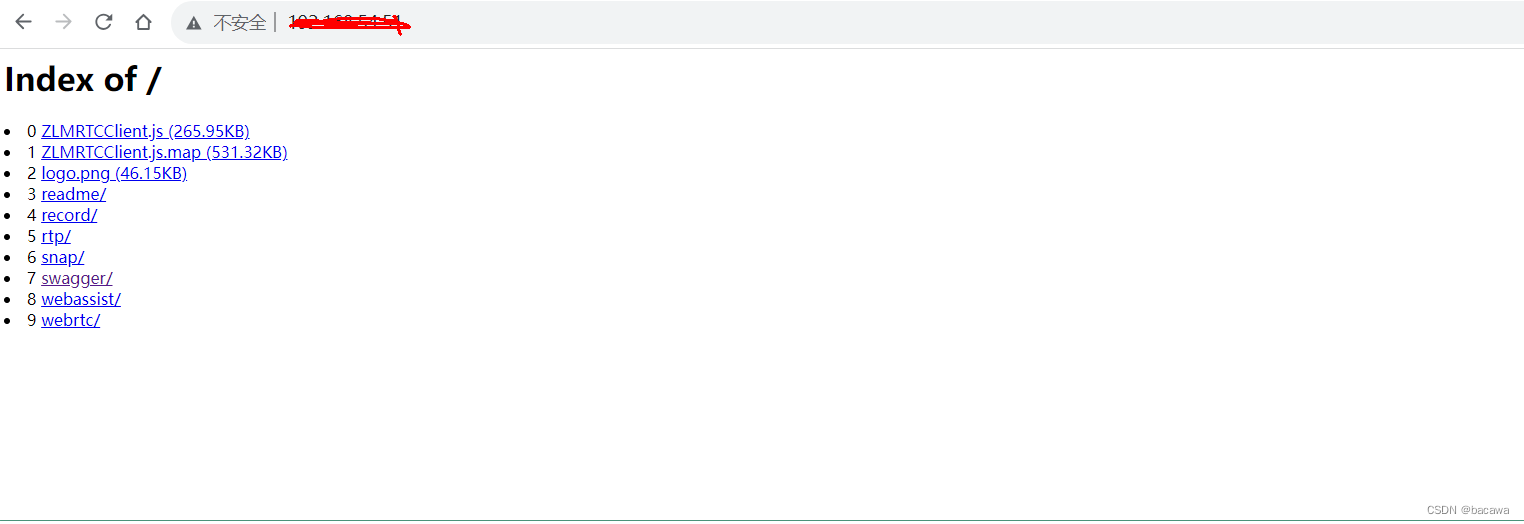

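Container Registry: the Personal Edition is enough. Create a repository and a namespace, then find the registry address in the basic information of any repository.

For example: registry.cn-hangzhou.aliyuncs.com

Find the namespace, for example: jhj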
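How the registry address and namespace combine into the image name that the pipeline pushes can be sketched as follows. The repository name `demo-app` and tag `v1` are hypothetical placeholders; only the registry address and namespace come from the notes.

```python
def image_ref(registry, namespace, repo, tag="latest"):
    """Join registry, namespace, repository and tag into one image reference."""
    parts = [p.strip("/") for p in (registry, namespace, repo)]
    if not all(parts) or not tag:
        raise ValueError("registry, namespace, repo and tag must be non-empty")
    return "/".join(parts) + ":" + tag


# "demo-app" and "v1" are made-up values for illustration.
ref = image_ref("registry.cn-hangzhou.aliyuncs.com", "jhj", "demo-app", "v1")
print(ref)
```

The resulting string is what would go into the push step and into the image field of the deployment manifest.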

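Deploy

Create a kubeconfig

Pulling images: because the images are hosted on Alibaba Cloud, the pull has to authenticate against the Alibaba Cloud registry.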

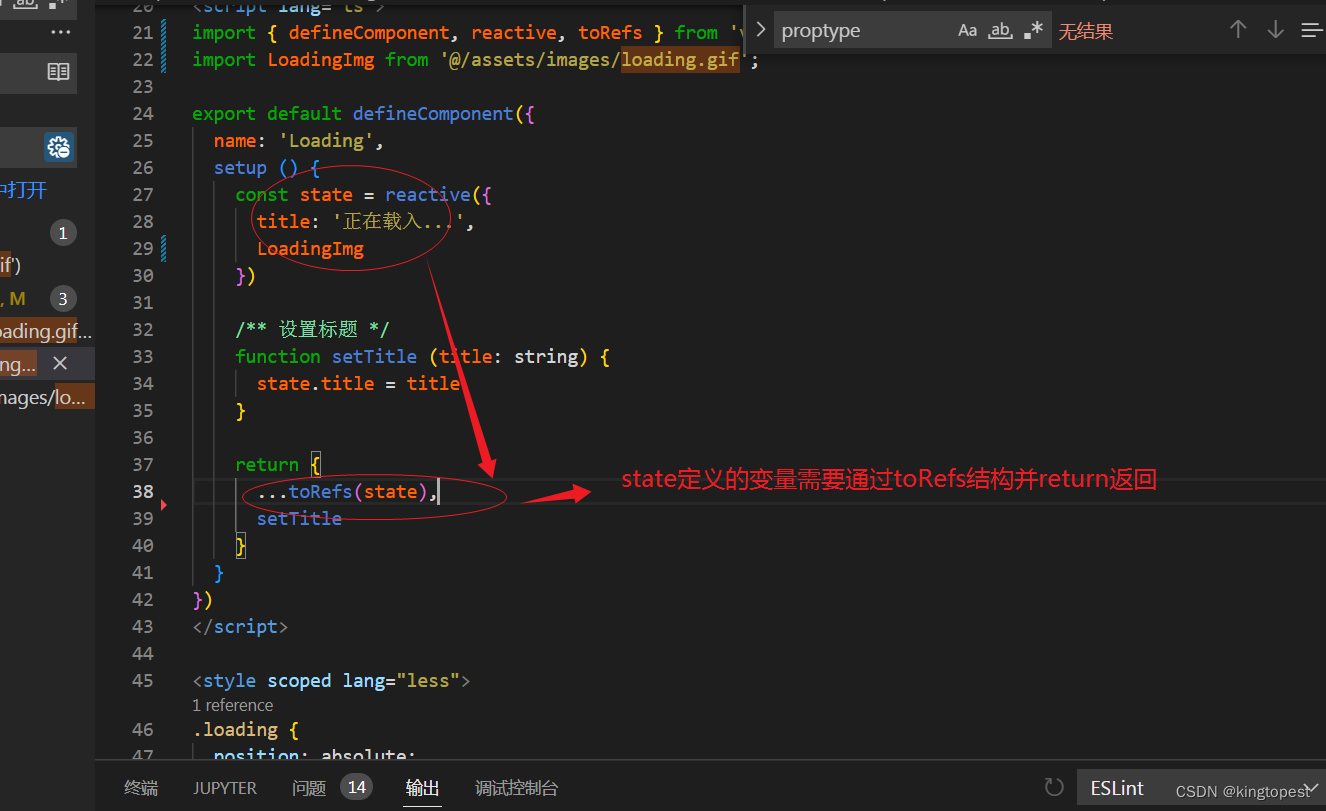

Also configure it under Secrets in the project's Configuration section.

Secret type
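As a rough sketch of what such a secret holds, assuming it is an image registry secret: Kubernetes stores registry credentials in a Secret of type `kubernetes.io/dockerconfigjson`. The username, password, secret name and project namespace below are placeholders.

```python
import base64
import json


def docker_config_json(registry, username, password):
    """Build the .dockerconfigjson payload for a single registry."""
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    config = {
        "auths": {
            registry: {"username": username, "password": password, "auth": auth}
        }
    }
    return json.dumps(config)


# Placeholder credentials; never commit real ones.
payload = docker_config_json(
    "registry.cn-hangzhou.aliyuncs.com", "example-user", "example-password"
)

# Secret manifest as a plain dict; name and namespace are hypothetical.
secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "aliyun-registry", "namespace": "demo-project"},
    "type": "kubernetes.io/dockerconfigjson",
    "data": {".dockerconfigjson": base64.b64encode(payload.encode()).decode()},
}
print(json.dumps(secret, indent=2))
```

A workload would then reference the secret by name under `imagePullSecrets` so that the kubelet can pull from the private registry.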

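Deploying k8s with KubeKey

Delete the cluster previously deployed with kk

./kk delete cluster -f config-sample.yaml

Download kk

export KKZONE=cn

curl -sfL https://get-kk.kubesphere.io | VERSION=v2.2.2 sh -

Generate the configuration file

./kk create config --name kk-k8s

Deploy using the internal load balancer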

    apiVersion: kubekey.kubesphere.io/v1alpha2
    kind: Cluster
    metadata:
      name: kk-k8s
    spec:
      hosts:
      - {name: node1, address: 172.16.0.2, internalAddress: 172.16.0.2, user: ubuntu, password: "Qcloud@123"}
      - {name: node2, address: 172.16.0.3, internalAddress: 172.16.0.3, user: ubuntu, password: "Qcloud@123"}
      roleGroups:
        etcd:
        - node1
        control-plane:
        - node1
        worker:
        - node1
        - node2
      controlPlaneEndpoint:
        ## Internal loadbalancer for apiservers
        internalLoadbalancer: haproxy
        domain: lb.kubesphere.local
        address: ""
        port: 6443
      kubernetes:
        version: v1.23.8
        clusterName: cluster.local
        autoRenewCerts: true
        containerManager: docker
      etcd:
        type: kubekey
      network:
        plugin: calico
        kubePodsCIDR: 10.233.64.0/18
        kubeServiceCIDR: 10.233.0.0/18
        ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
        multusCNI:
          enabled: false
      registry:
        privateRegistry: ""
        namespaceOverride: ""
        registryMirrors: []
        insecureRegistries: []
      addons: []
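The Pod and Service CIDRs in the configuration above should not overlap each other or contain the node addresses. This is a small sanity check using the standard `ipaddress` module; it is a sketch, not a check that kk itself is known to perform.

```python
import ipaddress
from itertools import combinations

# Values copied from the configuration above.
networks = {
    "kubePodsCIDR": ipaddress.ip_network("10.233.64.0/18"),
    "kubeServiceCIDR": ipaddress.ip_network("10.233.0.0/18"),
}
node_ips = [ipaddress.ip_address("172.16.0.2"), ipaddress.ip_address("172.16.0.3")]

# Pairs of cluster networks that overlap each other.
overlaps = [
    (a, b)
    for (a, net_a), (b, net_b) in combinations(networks.items(), 2)
    if net_a.overlaps(net_b)
]

# Node addresses that fall inside a cluster network.
nodes_inside = [
    (str(ip), name)
    for ip in node_ips
    for name, net in networks.items()
    if ip in net
]

print(overlaps)      # empty list means the CIDRs are disjoint
print(nodes_inside)  # empty list means no node IP collides
```

With the values shown, both lists come back empty: `10.233.0.0/18` ends at `10.233.63.255`, just before `10.233.64.0/18` begins.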

Deploy

./kk create cluster -f kk-k8s.yaml --with-kubesphere v3.2.0

To deploy KubeSphere at the same time, just add --with-kubesphere v3.2.0.

Check the cluster status

kubectl get node -o wide

The default KubeSphere account is admin, and the default password is P@88w0rd.

Configuration file explained

    apiVersion: kubekey.kubesphere.io/v1alpha2
    kind: Cluster
    metadata:
      name: kk-k8s
    spec:
      hosts:
      # address: public IP; internalAddress: private IP; user: account name; password: password
      - {name: node1, address: 172.16.0.2, internalAddress: 172.16.0.2, user: ubuntu, password: "Qcloud@123"}
      - {name: node2, address: 172.16.0.3, internalAddress: 172.16.0.3, user: ubuntu, password: "Qcloud@123"}
      roleGroups:
        # For high availability, three nodes can all serve as etcd members.
        # In production, etcd usually runs on dedicated nodes, separate from the control-plane and worker nodes.
        etcd:
        - node1
        control-plane:
        - node1
        worker:
        - node1
        - node2
      controlPlaneEndpoint:
        ## Internal loadbalancer for apiservers
        internalLoadbalancer: haproxy # internal load balancer, proxying for the worker nodes
        domain: lb.kubesphere.local
        address: "" # when using an external load balancer, set its address here
        port: 6443
      kubernetes:
        version: v1.23.8 # Kubernetes version
        clusterName: cluster.local # cluster name
        autoRenewCerts: true
        containerManager: docker
      etcd:
        type: kubekey
      network:
        plugin: calico # network plugin
        kubePodsCIDR: 10.233.64.0/18 # Pod subnet
        kubeServiceCIDR: 10.233.0.0/18
        ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
        multusCNI:
          enabled: false
      registry:
        privateRegistry: "" # image registry; leave empty for the default
        namespaceOverride: ""
        registryMirrors: []
        insecureRegistries: []
      addons: [] # add-ons
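The etcd comment in the configuration above mentions three members for high availability. The reason is etcd's majority quorum: a cluster of n members needs floor(n/2) + 1 of them alive. The sketch below only illustrates that arithmetic.

```python
def etcd_fault_tolerance(members: int) -> int:
    """Number of etcd members that can fail while a majority quorum survives."""
    if members < 1:
        raise ValueError("an etcd cluster needs at least one member")
    quorum = members // 2 + 1
    return members - quorum


for n in (1, 2, 3, 5):
    print(n, "members tolerate", etcd_fault_tolerance(n), "failure(s)")
```

A single etcd member (as in the two-node example) tolerates no failure, and two members are no better than one, which is why odd sizes such as three or five are the usual choice.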

To generate a configuration file that already includes KubeSphere:

./kk create config --with-kubesphere v3.2.0 -f test.yaml

    apiVersion: kubekey.kubesphere.io/v1alpha2
    kind: Cluster
    metadata:
      name: sample
    spec:
      hosts:
      - {name: node1, address: 172.16.0.2, internalAddress: 172.16.0.2, user: ubuntu, password: "Qcloud@123"}
      - {name: node2, address: 172.16.0.3, internalAddress: 172.16.0.3, user: ubuntu, password: "Qcloud@123"}
      roleGroups:
        etcd:
        - node1
        control-plane:
        - node1
        worker:
        - node1
        - node2
      controlPlaneEndpoint:
        ## Internal loadbalancer for apiservers
        # internalLoadbalancer: haproxy
        domain: lb.kubesphere.local
        address: ""
        port: 6443
      kubernetes:
        version: v1.23.8
        clusterName: cluster.local
        autoRenewCerts: true
        containerManager: docker
      etcd:
        type: kubekey
      network:
        plugin: calico
        kubePodsCIDR: 10.233.64.0/18
        kubeServiceCIDR: 10.233.0.0/18
        ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
        multusCNI:
          enabled: false
      registry:
        privateRegistry: ""
        namespaceOverride: ""
        registryMirrors: []
        insecureRegistries: []
      addons: []

    ---
    apiVersion: installer.kubesphere.io/v1alpha1
    kind: ClusterConfiguration
    metadata:
      name: ks-installer
      namespace: kubesphere-system
      labels:
        version: v3.2.0
    spec:
      persistence:
        storageClass: ""
      authentication:
        jwtSecret: ""
      zone: ""
      local_registry: ""
      # dev_tag: ""
      etcd:
        monitoring: false
        endpointIps: localhost
        port: 2379
        tlsEnable: true
      common:
        core:
          console:
            enableMultiLogin: true
            port: 30880
            type: NodePort
        # apiserver:
        #   resources: {}
        # controllerManager:
        #   resources: {}
        redis:
          enabled: false
          volumeSize: 2Gi
        openldap:
          enabled: false
          volumeSize: 2Gi
        minio:
          volumeSize: 20Gi
        monitoring:
          # type: external
          endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
          GPUMonitoring:
            enabled: false
        gpu:
          kinds:
          - resourceName: "nvidia.com/gpu"
            resourceType: "GPU"
            default: true
        es:
          # master:
          #   volumeSize: 4Gi
          #   replicas: 1
          #   resources: {}
          # data:
          #   volumeSize: 20Gi
          #   replicas: 1
          #   resources: {}
          logMaxAge: 7
          elkPrefix: logstash
          basicAuth:
            enabled: false
            username: ""
            password: ""
          externalElasticsearchUrl: ""
          externalElasticsearchPort: ""
      alerting:
        enabled: false
        # thanosruler:
        #   replicas: 1
        #   resources: {}
      auditing:
        enabled: false
        # operator:
        #   resources: {}
        # webhook:
        #   resources: {}
      devops:
        enabled: false # set to true to use DevOps
        jenkinsMemoryLim: 2Gi
        jenkinsMemoryReq: 1500Mi
        jenkinsVolumeSize: 8Gi
        jenkinsJavaOpts_Xms: 512m
        jenkinsJavaOpts_Xmx: 512m
        jenkinsJavaOpts_MaxRAM: 2g
      events:
        enabled: false
        # operator:
        #   resources: {}
        # exporter:
        #   resources: {}
        # ruler:
        #   enabled: true
        #   replicas: 2
        #   resources: {}
      logging:
        enabled: false
        containerruntime: docker
        logsidecar:
          enabled: true
          replicas: 2
          # resources: {}
      metrics_server:
        enabled: false
      monitoring:
        storageClass: ""
        # kube_rbac_proxy:
        #   resources: {}
        # kube_state_metrics:
        #   resources: {}
        # prometheus:
        #   replicas: 1
        #   volumeSize: 20Gi
        #   resources: {}
        #   operator:
        #     resources: {}
        #   adapter:
        #     resources: {}
        # node_exporter:
        #   resources: {}
        # alertmanager:
        #   replicas: 1
        #   resources: {}
        # notification_manager:
        #   resources: {}
        #   operator:
        #     resources: {}
        #   proxy:
        #     resources: {}
        gpu:
          nvidia_dcgm_exporter:
            enabled: false
            # resources: {}
      multicluster:
        clusterRole: none
      network:
        networkpolicy:
          enabled: false
        ippool:
          type: none
        topology:
          type: none
      openpitrix:
        store:
          enabled: false
      servicemesh:
        enabled: false
      kubeedge:
        enabled: false
        cloudCore:
          nodeSelector: {"node-role.kubernetes.io/worker": ""}
          tolerations: []
          cloudhubPort: "10000"
          cloudhubQuicPort: "10001"
          cloudhubHttpsPort: "10002"
          cloudstreamPort: "10003"
          tunnelPort: "10004"
          cloudHub:
            advertiseAddress:
              - ""
            nodeLimit: "100"
          service:
            cloudhubNodePort: "30000"
            cloudhubQuicNodePort: "30001"
            cloudhubHttpsNodePort: "30002"
            cloudstreamNodePort: "30003"
            tunnelNodePort: "30004"
        edgeWatcher:
          nodeSelector: {"node-role.kubernetes.io/worker": ""}
          tolerations: []
          edgeWatcherAgent:
            nodeSelector: {"node-role.kubernetes.io/worker": ""}
            tolerations: []

Detailed reference from the official KubeKey repository:

https://github.com/kubesphere/kubekey/blob/master/docs/config-example.md

The KubeSphere part of the file is really just the ks-installer configuration:

https://github.com/kubesphere/ks-installer/blob/master/deploy/cluster-configuration.yaml

To enable other options, look up the available settings in the API definitions:

https://github.com/kubesphere/kubekey/tree/master/cmd/kk/apis/kubekey/v1alpha2

Adding nodes with kk

Edit config.yaml

Run

./kk add nodes -f config.yaml
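The edit to config.yaml before running `add nodes` amounts to adding a host entry and listing the new node under a role group. Below is a sketch of that change on the `spec` section represented as a plain dict. The node name `node3`, its address and the placeholder password are hypothetical.

```python
import copy


def add_worker(spec, name, address, user, password):
    """Return a copy of the cluster spec with one extra worker node."""
    new_spec = copy.deepcopy(spec)
    if any(h["name"] == name for h in new_spec["hosts"]):
        raise ValueError(f"host {name!r} already exists")
    new_spec["hosts"].append(
        {
            "name": name,
            "address": address,
            "internalAddress": address,
            "user": user,
            "password": password,
        }
    )
    new_spec["roleGroups"]["worker"].append(name)
    return new_spec


# Trimmed-down spec mirroring the structure of the kk configuration file.
spec = {
    "hosts": [{"name": "node1"}, {"name": "node2"}],
    "roleGroups": {
        "etcd": ["node1"],
        "control-plane": ["node1"],
        "worker": ["node1", "node2"],
    },
}

# node3 and 172.16.0.4 are made-up values for illustration.
updated = add_worker(spec, "node3", "172.16.0.4", "ubuntu", "example-password")
print(updated["roleGroups"]["worker"])
```

Both places matter: a node that appears under `hosts` but in no role group has no role to be installed with.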

Deleting a node with kk

Run

./kk delete node node3 -f config.yaml

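Cluster certificate management

Check the expiration dates

./kk certs check-expiration -f config.yaml

Renew the cluster certificates

Certificates are stored in /etc/kubernetes/pki by default.

Run this command to renew them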

./kk certs renew -f config.yaml
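To judge when a renewal is due, the remaining lifetime can be computed from a certificate's `notAfter` value. The sketch assumes the date string is in the format that `openssl x509 -enddate` prints. The sample date and the fixed "now" are made up so the result is reproducible.

```python
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after: str, now: datetime) -> int:
    """Whole days between `now` and a certificate's notAfter timestamp."""
    expires = datetime.fromtimestamp(
        ssl.cert_time_to_seconds(not_after), tz=timezone.utc
    )
    return (expires - now).days


# Fixed reference time and sample expiry date, for illustration only.
now = datetime(2024, 1, 1, tzinfo=timezone.utc)
remaining = days_until_expiry("Jan 31 00:00:00 2024 GMT", now)
print(remaining)
```

In practice `now` would be `datetime.now(timezone.utc)`, and the date string would come from a certificate under /etc/kubernetes/pki.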

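1.2 / 2.0: add-ons are installed and updated automatically

App Store

To enable a component after installation, change its configuration item to true in the CRD.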

https://kubesphere.io/zh/docs/v3.4/pluggable-components/app-store/
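Switching a pluggable component on comes down to flipping one `enabled` field in the ClusterConfiguration spec. The sketch below shows that change on a trimmed-down dict. It only illustrates the shape of the edit and does not talk to a cluster.

```python
import copy


def enable_component(cluster_config, path):
    """Return a copy of the config with spec.<path>.enabled set to True."""
    cfg = copy.deepcopy(cluster_config)
    node = cfg["spec"]
    for key in path:
        node = node[key]  # raises KeyError if the component path is wrong
    node["enabled"] = True
    return cfg


# Trimmed-down ClusterConfiguration; only the fields needed here.
cluster_config = {
    "spec": {
        "openpitrix": {"store": {"enabled": False}},
        "devops": {"enabled": False},
    }
}

updated = enable_component(cluster_config, ["openpitrix", "store"])
print(updated["spec"]["openpitrix"]["store"]["enabled"])
```

The App Store corresponds to `openpitrix.store.enabled`; other components such as `devops` follow the same pattern with a one-element path.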