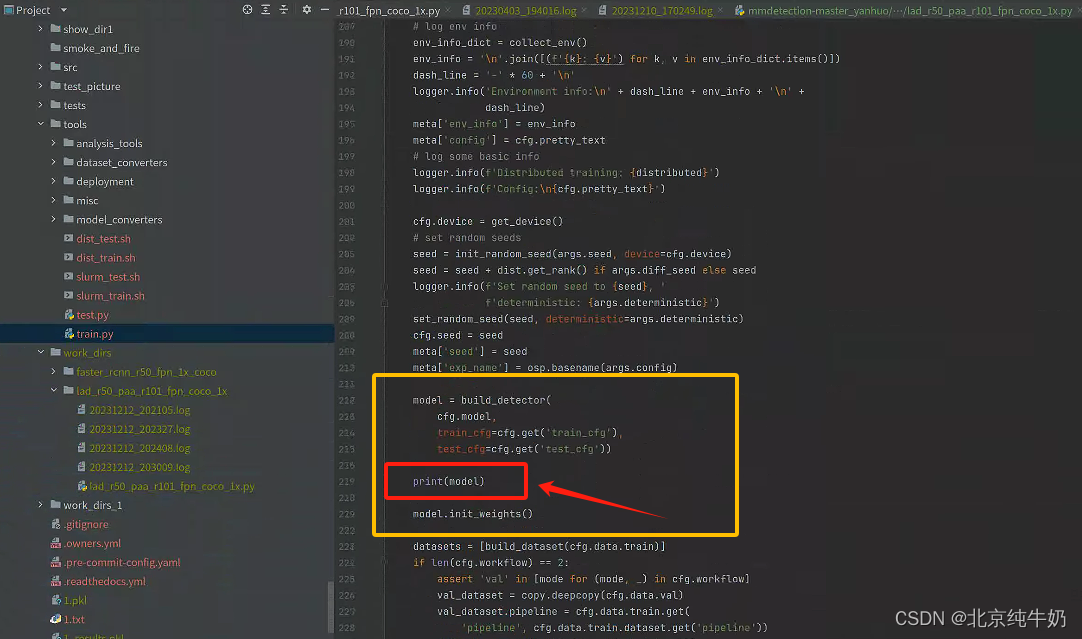

Console input: python tools/train.py /home/yuan3080/桌面/detection_paper_6/mmdetection-master1/mmdetection-master_yanhuo/work_dirs/lad_r50_paa_r101_fpn_coco_1x/lad_r50_a_r101_fpn_coco_1x.py

This is the config from the output (under work_dirs), not that of the original method.

As shown, adding a single print(model) call is enough. Then run the console command given above.
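If the goal is only to inspect the structure, the model can also be built and printed without going through the training script. The following is a minimal sketch, assuming MMDetection 2.x (the `master` branch), where `mmcv.Config` and `mmdet.models.build_detector` are available; the function name `print_detector` and the example path are hypothetical. Building the model may still read files that the config points to, so treat this as a starting point rather than a drop-in tool.

```python
def print_detector(config_path):
    """Build the detector described by an MMDetection 2.x config and print it."""
    # Imported lazily so the function can be defined without MMDetection installed.
    from mmcv import Config
    from mmdet.models import build_detector

    cfg = Config.fromfile(config_path)
    # Mirrors how tools/train.py constructs the model in MMDetection 2.x.
    model = build_detector(
        cfg.model,
        train_cfg=cfg.get("train_cfg"),
        test_cfg=cfg.get("test_cfg"),
    )
    # nn.Module.__repr__ lists every submodule, so this prints the full structure.
    print(model)
    return model


# Hypothetical usage; substitute your own config file:
# print_detector("work_dirs/my_experiment/my_config.py")
```

Returning the model makes it possible to inspect individual parts afterwards, for example `model.backbone` or `model.bbox_head`.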

The model structure is then printed, as shown below:

LAD(

(backbone): Res2Net(

(stem): Sequential(

(0): Conv2d(3, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(4): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(5): ReLU(inplace=True)

(6): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(7): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(8): ReLU(inplace=True)

)

(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(layer1): Res2Layer(

(0): Bottle2neck(

(conv1): Conv2d(64, 104, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(104, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): AvgPool2d(kernel_size=1, stride=1, padding=0)

(1): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(convs): ModuleList(

(0): Conv2d(26, 26, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): Conv2d(26, 26, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(2): Conv2d(26, 26, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(26, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(26, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(26, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottle2neck(

(conv1): Conv2d(256, 104, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(104, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(convs): ModuleList(

(0): Conv2d(26, 26, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): Conv2d(26, 26, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(2): Conv2d(26, 26, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(26, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(26, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(26, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(2): Bottle2neck(

(conv1): Conv2d(256, 104, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(104, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(convs): ModuleList(

(0): Conv2d(26, 26, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): Conv2d(26, 26, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(2): Conv2d(26, 26, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(26, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(26, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(26, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

)

(layer2): Res2Layer(

(0): Bottle2neck(

(conv1): Conv2d(256, 208, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(208, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(208, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): AvgPool2d(kernel_size=2, stride=2, padding=0)

(1): Conv2d(256, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(pool): AvgPool2d(kernel_size=3, stride=2, padding=1)

(convs): ModuleList(

(0): Conv2d(52, 52, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): Conv2d(52, 52, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(2): Conv2d(52, 52, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(52, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(52, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(52, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottle2neck(

(conv1): Conv2d(512, 208, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(208, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(208, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(convs): ModuleList(

(0): Conv2d(52, 52, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): Conv2d(52, 52, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(2): Conv2d(52, 52, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(52, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(52, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(52, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(2): Bottle2neck(

(conv1): Conv2d(512, 208, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(208, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(208, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(convs): ModuleList(

(0): Conv2d(52, 52, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): Conv2d(52, 52, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(2): Conv2d(52, 52, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(52, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(52, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(52, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(3): Bottle2neck(

(conv1): Conv2d(512, 208, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(208, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(208, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(convs): ModuleList(

(0): Conv2d(52, 52, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): Conv2d(52, 52, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(2): Conv2d(52, 52, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(52, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(52, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(52, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

)

(layer3): Res2Layer(

(0): Bottle2neck(

(conv1): Conv2d(512, 416, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(416, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(416, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): AvgPool2d(kernel_size=2, stride=2, padding=0)

(1): Conv2d(512, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(2): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(pool): AvgPool2d(kernel_size=3, stride=2, padding=1)

(convs): ModuleList(

(0): Conv2d(104, 104, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): Conv2d(104, 104, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(2): Conv2d(104, 104, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottle2neck(

(conv1): Conv2d(1024, 416, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(416, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(416, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(convs): ModuleList(

(0): Conv2d(104, 104, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): Conv2d(104, 104, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(2): Conv2d(104, 104, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(2): Bottle2neck(

(conv1): Conv2d(1024, 416, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(416, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(416, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(convs): ModuleList(

(0): Conv2d(104, 104, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): Conv2d(104, 104, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(2): Conv2d(104, 104, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(3): Bottle2neck(

(conv1): Conv2d(1024, 416, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(416, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(416, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(convs): ModuleList(

(0): Conv2d(104, 104, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): Conv2d(104, 104, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(2): Conv2d(104, 104, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(4): Bottle2neck(

(conv1): Conv2d(1024, 416, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(416, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(416, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(convs): ModuleList(

(0): Conv2d(104, 104, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): Conv2d(104, 104, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(2): Conv2d(104, 104, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(5): Bottle2neck(

(conv1): Conv2d(1024, 416, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(416, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(416, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(convs): ModuleList(

(0): Conv2d(104, 104, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): Conv2d(104, 104, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(2): Conv2d(104, 104, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(104, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

)

(layer4): Res2Layer(

(0): Bottle2neck(

(conv1): Conv2d(1024, 832, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(832, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(832, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): AvgPool2d(kernel_size=2, stride=2, padding=0)

(1): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(2): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(pool): AvgPool2d(kernel_size=3, stride=2, padding=1)

(convs): ModuleList(

(0): Conv2d(208, 208, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): Conv2d(208, 208, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(2): Conv2d(208, 208, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(208, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(208, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(208, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottle2neck(

(conv1): Conv2d(2048, 832, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(832, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(832, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(convs): ModuleList(

(0): Conv2d(208, 208, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): Conv2d(208, 208, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(2): Conv2d(208, 208, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(208, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(208, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(208, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(2): Bottle2neck(

(conv1): Conv2d(2048, 832, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(832, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(832, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(convs): ModuleList(

(0): Conv2d(208, 208, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): Conv2d(208, 208, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(2): Conv2d(208, 208, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(bns): ModuleList(

(0): BatchNorm2d(208, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm2d(208, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): BatchNorm2d(208, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

)

)

init_cfg={'type': 'Pretrained', 'checkpoint': 'torchvision://resnet50'}

(neck): FPN(

(lateral_convs): ModuleList(

(0): ConvModule(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1))

)

(1): ConvModule(

(conv): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1))

)

(2): ConvModule(

(conv): Conv2d(2048, 256, kernel_size=(1, 1), stride=(1, 1))

)

)

(fpn_convs): ModuleList(

(0): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(1): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(2): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(3): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))

)

(4): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))

)

)

)

init_cfg={'type': 'Xavier', 'layer': 'Conv2d', 'distribution': 'uniform'}

(bbox_head): LADHead(

(loss_cls): FocalLoss()

(loss_bbox): GIoULoss()

(relu): ReLU(inplace=True)

(cls_convs): ModuleList(

(0): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(gn): GroupNorm(32, 256, eps=1e-05, affine=True)

(activate): ReLU(inplace=True)

)

(1): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(gn): GroupNorm(32, 256, eps=1e-05, affine=True)

(activate): ReLU(inplace=True)

)

(2): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(gn): GroupNorm(32, 256, eps=1e-05, affine=True)

(activate): ReLU(inplace=True)

)

(3): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(gn): GroupNorm(32, 256, eps=1e-05, affine=True)

(activate): ReLU(inplace=True)

)

)

(reg_convs): ModuleList(

(0): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(gn): GroupNorm(32, 256, eps=1e-05, affine=True)

(activate): ReLU(inplace=True)

)

(1): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(gn): GroupNorm(32, 256, eps=1e-05, affine=True)

(activate): ReLU(inplace=True)

)

(2): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(gn): GroupNorm(32, 256, eps=1e-05, affine=True)

(activate): ReLU(inplace=True)

)

(3): ConvModule(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(gn): GroupNorm(32, 256, eps=1e-05, affine=True)

(activate): ReLU(inplace=True)

)

)

(atss_cls): Conv2d(256, 2, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(atss_reg): Conv2d(256, 4, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(atss_centerness): Conv2d(256, 1, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(scales): ModuleList(

(0): Scale()

(1): Scale()

(2): Scale()

(3): Scale()

(4): Scale()

)

(loss_centerness): CrossEntropyLoss(avg_non_ignore=False)

)