1. 背景:

项目中使用到了纹理进行插值的加速,因此记录一些自己在学习tex2D的一些过程

2. 代码:

#include "cuda_runtime.h"

#include "device_launch_parameters.h"

#include <assert.h>

#include <stdio.h>

#include <iostream>

#include <cuda_fp16.h>

#include <vector>

void Data2Half(half* pDst, const int16_t* pSrc, const int Ndots);

static __global__ void Tex2DTest(cudaTextureObject_t p_rf_data, float* pfRes1, float* pfRes2);

static __global__ void data2half(half* pDst, const int16_t* pSrc, const int Ndots)

{

const int tid = blockIdx.x * blockDim.x + threadIdx.x;

if (tid >= Ndots)

return;

pDst[tid] = __short2half_rn(pSrc[tid]);

}

cudaTextureObject_t m_tex = 0;

cudaArray* m_pRFData = nullptr;

int16_t* m_i16RFDataBuffer = nullptr; // 设备端的RF数据

half* m_pHalfRFDataCache = nullptr; // 转换为半浮点型的RF数据缓存,用于将SHORT类型转换为FLOAT类型

int main()

{

const int nRx = 2;

const int Nsample = 2;

const int IQ = 1;

cudaError_t error;

cudaChannelFormatDesc channelDesc = cudaCreateChannelDescHalf();

error = cudaMallocArray(&m_pRFData, &channelDesc, nRx * IQ, Nsample, cudaArrayTextureGather);

assert(m_pRFData);

cudaResourceDesc texRes;

memset(&texRes, 0, sizeof(cudaResourceDesc));

texRes.resType = cudaResourceTypeArray;

texRes.res.array.array = m_pRFData;

cudaTextureDesc texDescr;

memset(&texDescr, 0, sizeof(cudaTextureDesc));

texDescr.normalizedCoords = false;

texDescr.filterMode = cudaFilterModeLinear; // 这里很重要

texDescr.addressMode[0] = cudaAddressModeBorder;

texDescr.addressMode[1] = cudaAddressModeBorder;

error = cudaCreateTextureObject(&m_tex, &texRes, &texDescr, NULL);

//int16_t pi16Src[nRx * Nsample * IQ] = {1, 11, 2, 22,

// 3, 33, 4, 44,

// 5, 55, 6, 66,

// 7, 77, 8, 88};

//int16_t pi16Src[nRx * Nsample * IQ] = { 1, 11, 2, 22,

// 3, 33, 4, 44};

int16_t pi16Src[nRx * Nsample * IQ] = { 1,2,

3,4 };

error = cudaMalloc(&m_i16RFDataBuffer, sizeof(int16_t) * nRx * IQ * Nsample);

error = cudaMemcpy(m_i16RFDataBuffer, pi16Src, sizeof(int16_t) * nRx * IQ * Nsample, cudaMemcpyHostToDevice);

error = cudaMalloc(&m_pHalfRFDataCache, sizeof(half) * nRx * IQ * Nsample);

Data2Half(m_pHalfRFDataCache, m_i16RFDataBuffer, nRx * IQ * Nsample);

error = cudaMemcpy2DToArray(m_pRFData, 0, 0, m_pHalfRFDataCache, sizeof(half) * nRx * IQ, sizeof(half) * nRx * IQ, Nsample, cudaMemcpyDeviceToDevice);

float* pf_res1 = nullptr;

float* pf_res2 = nullptr;

error = cudaMalloc(&pf_res1, nRx * Nsample * sizeof(float)); cudaMemset(pf_res1, 0, nRx * Nsample * sizeof(float));

error = cudaMalloc(&pf_res2, nRx * Nsample * sizeof(float)); cudaMemset(pf_res2, 0, nRx * Nsample * sizeof(float));

error = cudaGetLastError();

dim3 block_dim = dim3(1, 1);

dim3 grid_dim = dim3(1, 1);

Tex2DTest << <grid_dim, block_dim >> > (m_tex, pf_res1, pf_res2);

cudaDeviceSynchronize();

std::vector<float> vf_res_1(nRx * Nsample, 0);

std::vector<float> vf_res_2(nRx * Nsample, 0);

cudaMemcpy(vf_res_1.data(), pf_res1, sizeof(float) * vf_res_1.size(), cudaMemcpyDeviceToHost);

cudaMemcpy(vf_res_2.data(), pf_res2, sizeof(float) * vf_res_2.size(), cudaMemcpyDeviceToHost);

return 0;

}

void Data2Half(half* pDst, const int16_t* pSrc, const int Ndots)

{

dim3 block = dim3(512, 1);

dim3 grid = dim3((Ndots - 1) / block.x + 1, 1);

data2half << < grid, block >> > (pDst, pSrc, Ndots);

}

static __global__ void Tex2DTest(cudaTextureObject_t p_rf_data, float *pfRes1, float *pfRes2)

{

for (size_t y = 0; y < 2; ++y)

{

for (size_t x = 0; x < 2; ++x)

{

float value = tex2D<float>(p_rf_data, x, y);

//pfRes1[y * 4 + y] =

printf("x: %f\n", value);

}

}

}

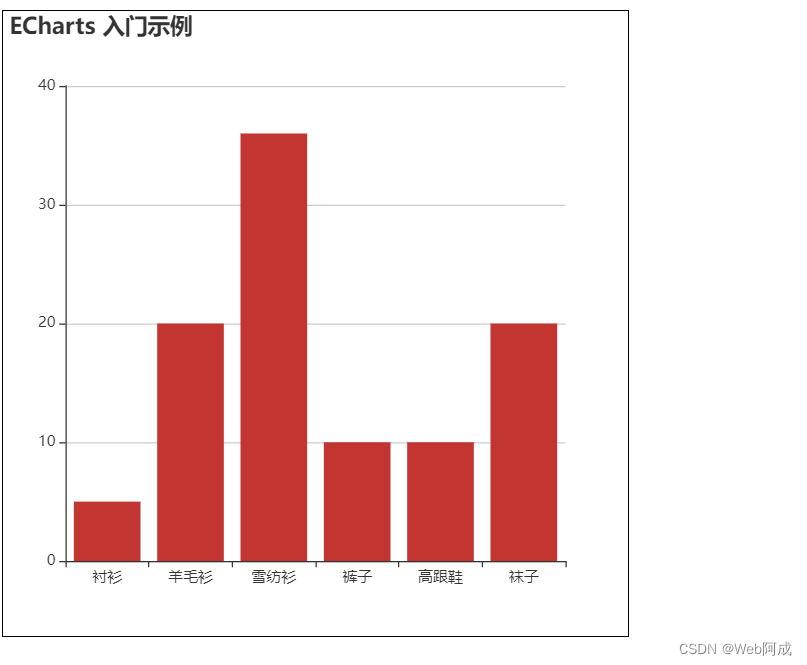

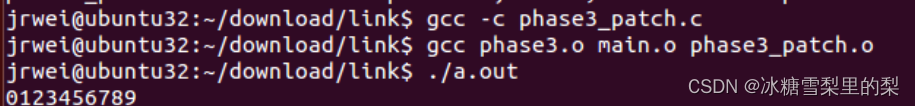

3. 输出分析:

可以看到执行结果是

为什么呢?

原因是因为tex2D插值导致的,上面测试数据是

1 2

3 4

那在进行插值的时候会变成

0 0 0 0

0 1 2 0

0 3 4 0

每个点的输出都是当前前和左上角3个点进行平均计算出来的

比如第一个输出计算为:(1 + 0 + 0 + 0)/4 = 0.25

最后一个输出的计算为:(1 + 2 + 3 + 4) / 4 = 2.5

4. 问题

上面只是单独数据实数点的计算,如果我的数据集合是复数怎么办?

比如一组2 * 2大小的数据对

(1, 2, 3, 4;

5, 6, 7, 8)

数据实际表示含义是

(1 + j * 2, 3 + j * 4;

5 + j * 6, 7 + j * 8)

这种情况下怎么做到正确插值呢,比如第一个实数点的输出结果应该是

(1 + 0 + 0 + 0)/ 4

最后一个实数点的输出应该是:

(1 + 3 + 5 + 7) / 4

同理,最后一个虚数点的输出应该是:

(2 + 4 + 6 + 8)/ 4

5. 解决

#include "cuda_runtime.h"

#include "device_launch_parameters.h"

#include <assert.h>

#include <stdio.h>

#include <iostream>

#include <cuda_fp16.h>

#include <vector>

void Data2Half(half* pDst, const int16_t* pSrc, const int Ndots);

static __global__ void Tex2DTest(cudaTextureObject_t p_rf_data, float* pfRes1, float* pfRes2);

static __global__ void data2half(half* pDst, const int16_t* pSrc, const int Ndots)

{

const int tid = blockIdx.x * blockDim.x + threadIdx.x;

if (tid >= Ndots)

return;

pDst[tid] = __short2half_rn(pSrc[tid]);

}

cudaTextureObject_t m_tex = 0;

cudaArray* m_pRFData = nullptr;

int16_t* m_i16RFDataBuffer = nullptr; // 设备端的RF数据

half* m_pHalfRFDataCache = nullptr; // 转换为半浮点型的RF数据缓存,用于将SHORT类型转换为FLOAT类型

using namespace std;

int main()

{

const int nRx = 2;

const int Nsample = 2;

const int IQ = 2;

cudaError_t error;

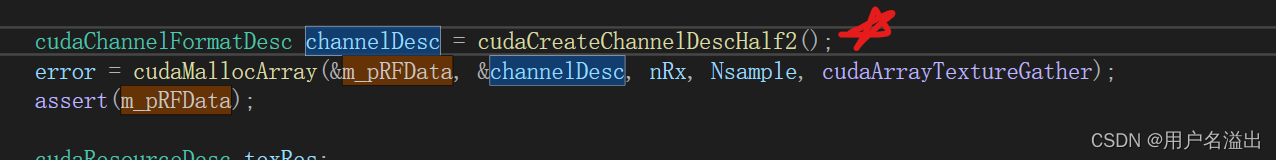

cudaChannelFormatDesc channelDesc = cudaCreateChannelDescHalf2();

error = cudaMallocArray(&m_pRFData, &channelDesc, nRx, Nsample, cudaArrayTextureGather);

assert(m_pRFData);

cudaResourceDesc texRes;

memset(&texRes, 0, sizeof(cudaResourceDesc));

texRes.resType = cudaResourceTypeArray;

texRes.res.array.array = m_pRFData;

cudaTextureDesc texDescr;

memset(&texDescr, 0, sizeof(cudaTextureDesc));

texDescr.normalizedCoords = false;

texDescr.filterMode = cudaFilterModeLinear; // 这里很重要

texDescr.addressMode[0] = cudaAddressModeBorder;

texDescr.addressMode[1] = cudaAddressModeBorder;

error = cudaCreateTextureObject(&m_tex, &texRes, &texDescr, NULL);

//int16_t pi16Src[nRx * Nsample * IQ] = {1, 11, 2, 22,

// 3, 33, 4, 44,

// 5, 55, 6, 66,

// 7, 77, 8, 88};

//int16_t pi16Src[nRx * Nsample * IQ] = { 1, 11, 2, 22,

// 3, 33, 4, 44};

int16_t pi16Src[nRx * Nsample * IQ] = { 1, 2, 3, 4,

5, 6, 7, 8 };

error = cudaMalloc(&m_i16RFDataBuffer, sizeof(int16_t) * nRx * IQ * Nsample);

error = cudaMemcpy(m_i16RFDataBuffer, pi16Src, sizeof(int16_t) * nRx * IQ * Nsample, cudaMemcpyHostToDevice);

error = cudaMalloc(&m_pHalfRFDataCache, sizeof(half) * nRx * IQ * Nsample);

Data2Half(m_pHalfRFDataCache, m_i16RFDataBuffer, nRx * IQ * Nsample);

error = cudaMemcpy2DToArray(m_pRFData, 0, 0, m_pHalfRFDataCache, sizeof(half2) * nRx, sizeof(half2) * nRx, Nsample, cudaMemcpyDeviceToDevice);

float* pf_res1 = nullptr;

float* pf_res2 = nullptr;

error = cudaMalloc(&pf_res1, nRx * Nsample * sizeof(float)); cudaMemset(pf_res1, 0, nRx * Nsample * sizeof(float));

error = cudaMalloc(&pf_res2, nRx * Nsample * sizeof(float)); cudaMemset(pf_res2, 0, nRx * Nsample * sizeof(float));

error = cudaGetLastError();

dim3 block_dim = dim3(1, 1);

dim3 grid_dim = dim3(1, 1);

Tex2DTest << <grid_dim, block_dim >> > (m_tex, pf_res1, pf_res2);

cudaDeviceSynchronize();

std::vector<float> vf_res_1(nRx * Nsample, 0);

std::vector<float> vf_res_2(nRx * Nsample, 0);

cudaMemcpy(vf_res_1.data(), pf_res1, sizeof(float) * vf_res_1.size(), cudaMemcpyDeviceToHost);

cudaMemcpy(vf_res_2.data(), pf_res2, sizeof(float) * vf_res_2.size(), cudaMemcpyDeviceToHost);

return 0;

}

void Data2Half(half* pDst, const int16_t* pSrc, const int Ndots)

{

dim3 block = dim3(512, 1);

dim3 grid = dim3((Ndots - 1) / block.x + 1, 1);

data2half << < grid, block >> > (pDst, pSrc, Ndots);

}

static __global__ void Tex2DTest(cudaTextureObject_t p_rf_data, float* pfRes1, float* pfRes2)

{

for (size_t y = 0; y < 2; ++y)

{

for (size_t x = 0; x < 2; ++x)

{

float2 value = tex2D<float2>(p_rf_data, x, y);

//pfRes1[y * 4 + y] =

printf("x: %f, y: %f", value.x, value.y);

// printf("x: %f, y: %f\n", value.x, value.y);

}

printf("\n");

}

}

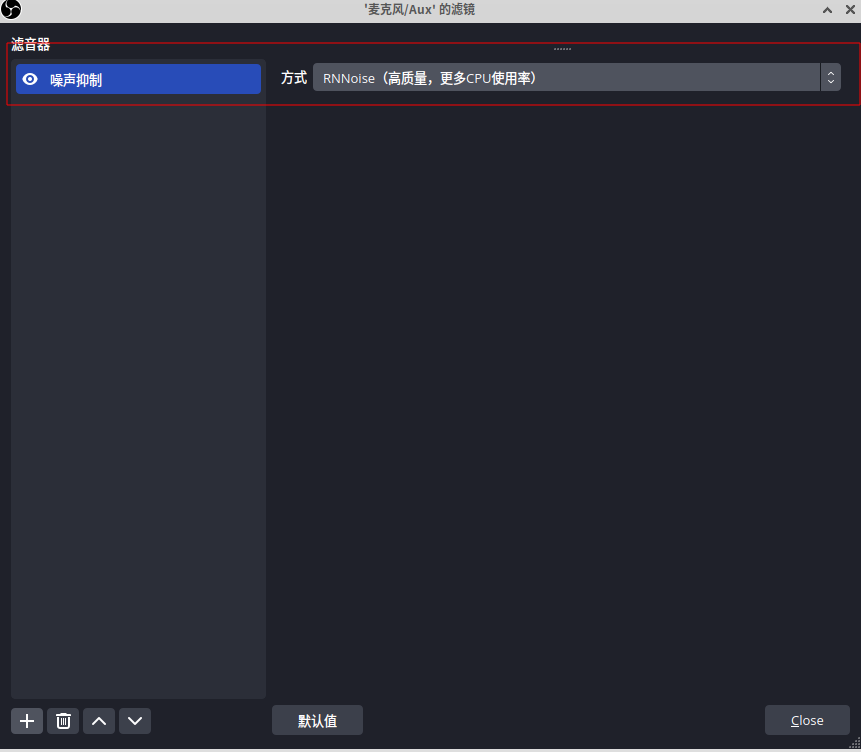

其实关键是在tex2D的构造

然后按照half2的方式进行排布就好了