Building a Kubernetes Cluster with kubeadm

Environment

| Role | IP | OS | Components |
| --- | --- | --- | --- |
| master | 192.168.226.10 | CentOS 8 | docker, kubectl, kubeadm, kubelet |
| node1 | 192.168.226.20 | CentOS 8 | docker, kubectl, kubeadm, kubelet |
| node2 | 192.168.226.30 | CentOS 8 | docker, kubectl, kubeadm, kubelet |

Configure hostname resolution (/etc/hosts) on every host

[root@master ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.226.10 master.example.com master

192.168.226.20 node1.example.com node1

192.168.226.30 node2.example.com node2

[root@master ~]# scp /etc/hosts root@node1:/etc/hosts

[root@master ~]# scp /etc/hosts root@node2:/etc/hosts
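
The same hosts entries could also be added without opening an editor. The following is a minimal sketch, not part of the original steps: `add_host_entry` is a hypothetical helper that appends a line only when no entry for that IP exists yet, so it can be re-run safely. The file path is a parameter so it can be tried on a copy first.

```shell
# Hypothetical helper: append "IP NAME..." to a hosts file unless a line
# whose first field is that IP is already present.
add_host_entry() {
  local file="$1" ip="$2"
  shift 2
  # awk exits 0 only if some line already has this IP as its first field.
  if ! awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$*" >> "$file"
  fi
}
```

For example, `add_host_entry /etc/hosts 192.168.226.20 node1.example.com node1` would add the node1 line once and do nothing on later runs.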

Set up time synchronization

master

[root@master ~]# vim /etc/chrony.conf

local stratum 10

allow 192.168.226.0/24

[root@master ~]# systemctl restart chronyd

[root@master ~]# systemctl enable chronyd

[root@master ~]# hwclock -w

node1 and node2

[root@node1 ~]# vim /etc/chrony.conf

#pool 2.centos.pool.ntp.org iburst

server master.example.com iburst

[root@node1 ~]# systemctl restart chronyd

[root@node1 ~]# systemctl enable chronyd

[root@node1 ~]# hwclock -w

[root@node2 ~]# vim /etc/chrony.conf

#pool 2.centos.pool.ntp.org iburst

server master.example.com iburst

[root@node2 ~]# systemctl restart chronyd

[root@node2 ~]# systemctl enable chronyd

[root@node2 ~]# hwclock -w
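
The edit made to chrony.conf on the worker nodes can be scripted. This is a sketch under assumptions: `use_chrony_server` is a hypothetical helper name, and it relies on GNU `sed -i` as shipped with CentOS. It comments out the existing `pool` lines and appends the `server` line only once.

```shell
# Hypothetical helper: point a chrony.conf at a single NTP server.
use_chrony_server() {
  local conf="$1" server="$2"
  # Disable the default public pool entries.
  sed -i 's/^pool /#pool /' "$conf"
  # Append the server line only if that exact line is not there yet.
  grep -qxF "server ${server} iburst" "$conf" \
    || echo "server ${server} iburst" >> "$conf"
}
```

On a node this might be called as `use_chrony_server /etc/chrony.conf master.example.com`, followed by the `systemctl restart chronyd` shown above. Running `chronyc sources` afterwards lists the time sources chronyd is actually using.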

Disable firewalld, SELinux, and postfix (on all three hosts)

systemctl stop firewalld

systemctl disable firewalld

vim /etc/selinux/config

SELINUX=disabled

setenforce 0

systemctl stop postfix

systemctl disable postfix

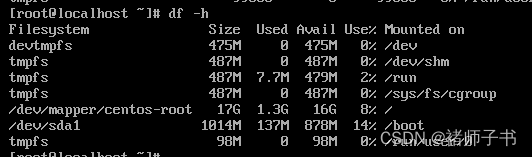
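
The SELinux setting can be changed without an editor, which helps when the same step is repeated on three hosts. A minimal sketch, assuming GNU `sed`; the helper name `set_selinux_disabled` is made up for this example.

```shell
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
# Only the SELINUX= line is rewritten; SELINUXTYPE= is left alone.
set_selinux_disabled() {
  local conf="$1"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}
```

Calling `set_selinux_disabled /etc/selinux/config` makes the change permanent after the next reboot, while `setenforce 0` switches the running system to permissive mode right away.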

Disable the swap partition (on all three hosts)

vim /etc/fstab

# /dev/mapper/cs-swap none swap defaults 0 0

swapoff -a
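
Commenting out the swap entry can also be done with one `sed` expression. This is a sketch only: `comment_out_swap` is a hypothetical helper, and it assumes swap entries have `swap` as a whitespace-delimited field, as in the line above.

```shell
# Hypothetical helper: prefix every active fstab line that has a
# whitespace-delimited "swap" field with "#". Lines already commented
# out are not touched, so the function can be run more than once.
comment_out_swap() {
  local fstab="$1"
  sed -i -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}
```

It is worth trying this on a copy of /etc/fstab first, since a damaged fstab can prevent the host from booting.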

Enable IP forwarding and adjust kernel parameters (on all three nodes)

master

[root@master ~]# vim /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@master ~]# modprobe br_netfilter

[root@master ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

node1

[root@node1 ~]# vim /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@node1 ~]# modprobe br_netfilter

[root@node1 ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

node2

[root@node2 ~]# vim /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@node2 ~]# modprobe br_netfilter

[root@node2 ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1
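
Because the same file is written on all three nodes, the step lends itself to a small function. The sketch below is an illustration rather than the original procedure: `write_k8s_kernel_config` is a hypothetical name, and it goes one step further than the transcript by also listing `br_netfilter` under /etc/modules-load.d, which systemd reads at boot, so that the module is loaded again after a reboot.

```shell
# Hypothetical helper: write the sysctl settings used above plus a
# modules-load.d entry. An optional root prefix allows a dry run into
# a scratch directory instead of the real /etc.
write_k8s_kernel_config() {
  local root="${1:-}"
  mkdir -p "${root}/etc/sysctl.d" "${root}/etc/modules-load.d"
  cat > "${root}/etc/sysctl.d/k8s.conf" <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
  # Load br_netfilter at every boot; the bridge sysctls need it.
  echo br_netfilter > "${root}/etc/modules-load.d/k8s.conf"
}
```

On a real node the call would be `write_k8s_kernel_config` with no argument, followed by `modprobe br_netfilter` and `sysctl -p /etc/sysctl.d/k8s.conf` as shown above.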

Configure IPVS (on all three nodes)

master

[root@master ~]# vim /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

[root@master ~]# chmod +x /etc/sysconfig/modules/ipvs.modules

[root@master ~]# bash /etc/sysconfig/modules/ipvs.modules

[root@master ~]# lsmod | grep -e ip_vs

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 172032 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 172032 2 nf_nat,ip_vs

nf_defrag_ipv6 20480 2 nf_conntrack,ip_vs

libcrc32c 16384 5 nf_conntrack,nf_nat,nf_tables,xfs,ip_vs

[root@master ~]# reboot

node1

[root@node1 ~]# vim /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

[root@node1 ~]# chmod +x /etc/sysconfig/modules/ipvs.modules

[root@node1 ~]# bash /etc/sysconfig/modules/ipvs.modules

[root@node1 ~]# lsmod | grep -e ip_vs

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 172032 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 172032 2 nf_nat,ip_vs

nf_defrag_ipv6 20480 2 nf_conntrack,ip_vs

libcrc32c 16384 5 nf_conntrack,nf_nat,nf_tables,xfs,ip_vs

[root@node1 ~]# reboot

node2

[root@node2 ~]# vim /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

[root@node2 ~]# chmod +x /etc/sysconfig/modules/ipvs.modules

[root@node2 ~]# bash /etc/sysconfig/modules/ipvs.modules

[root@node2 ~]# lsmod | grep -e ip_vs

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 172032 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 172032 2 nf_nat,ip_vs

nf_defrag_ipv6 20480 2 nf_conntrack,ip_vs

libcrc32c 16384 5 nf_conntrack,nf_nat,nf_tables,xfs,ip_vs

[root@node2 ~]# reboot
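
After the reboot it is worth confirming that the four modules are still loaded. A minimal sketch, assuming `lsmod`-style input on stdin; `missing_ipvs_modules` is a hypothetical helper that prints the name of each expected module that is absent.

```shell
# Hypothetical helper: read lsmod output on stdin and print every
# expected IPVS module that is not in the first column.
missing_ipvs_modules() {
  local loaded mod
  loaded="$(awk '{ print $1 }')"
  for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh; do
    printf '%s\n' "$loaded" | grep -qx "$mod" || echo "$mod"
  done
}
```

Usage would be `lsmod | missing_ipvs_modules`; no output means all four are present. If any are listed after a reboot, one option is to name them in a file under /etc/modules-load.d/, which systemd loads at boot.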

Install Docker

Configure the package repositories

[root@master yum.repos.d]# wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

[root@master yum.repos.d]# yum install -y https://mirrors.aliyun.com/epel/epel-release-latest-8.noarch.rpm

[root@master yum.repos.d]# sed -i 's|^#baseurl=https://download.example/pub|baseurl=https://mirrors.aliyun.com|' /etc/yum.repos.d/epel*

[root@master yum.repos.d]# sed -i 's|^metalink|#metalink|' /etc/yum.repos.d/epel*

[root@master yum.repos.d]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@master yum.repos.d]# scp /etc/yum.repos.d/* root@node1:/etc/yum.repos.d/

root@node1's password:

CentOS-Base.repo 100% 2495 3.3MB/s 00:00

docker-ce.repo 100% 2081 2.9MB/s 00:00

epel-modular.repo 100% 1692 2.3MB/s 00:00

epel.repo 100% 1326 1.0MB/s 00:00

epel-testing-modular.repo 100% 1791 1.1MB/s 00:00

epel-testing.repo 100% 1425 1.3MB/s 00:00

[root@master yum.repos.d]# scp /etc/yum.repos.d/* root@node2:/etc/yum.repos.d/

root@node2's password:

CentOS-Base.repo 100% 2495 3.1MB/s 00:00

docker-ce.repo 100% 2081 2.9MB/s 00:00

epel-modular.repo 100% 1692 2.4MB/s 00:00

epel.repo 100% 1326 1.1MB/s 00:00

epel-testing-modular.repo 100% 1791 2.6MB/s 00:00

epel-testing.repo 100% 1425 2.0MB/s 00:00

Install and configure Docker

master

[root@master yum.repos.d]# dnf -y install docker-ce --allowerasing

[root@master yum.repos.d]# systemctl restart docker

[root@master yum.repos.d]# systemctl enable docker

[root@master ~]# vim /etc/docker/daemon.json

[root@master ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://wlfs9l74.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl restart docker

node1

[root@node1 yum.repos.d]# dnf -y install docker-ce --allowerasing

[root@node1 yum.repos.d]# systemctl restart docker

[root@node1 yum.repos.d]# systemctl enable docker

[root@node1 ~]# vim /etc/docker/daemon.json

[root@node1 ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://wlfs9l74.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

[root@node1 ~]# systemctl daemon-reload

[root@node1 ~]# systemctl restart docker

node2

[root@node2 yum.repos.d]# dnf -y install docker-ce --allowerasing

[root@node2 yum.repos.d]# systemctl restart docker

[root@node2 yum.repos.d]# systemctl enable docker

[root@node2 ~]# vim /etc/docker/daemon.json

[root@node2 ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://wlfs9l74.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

[root@node2 ~]# systemctl daemon-reload

[root@node2 ~]# systemctl restart docker
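
The `native.cgroupdriver=systemd` option only takes effect once Docker has restarted with the new daemon.json, so a quick check on each node can catch a typo in the file. The sketch below assumes a hypothetical helper `check_cgroup_driver`; the driver string is passed in as an argument so the comparison itself does not depend on Docker being installed.

```shell
# Hypothetical helper: compare the reported cgroup driver with the
# expected one (systemd by default) and fail loudly on a mismatch.
check_cgroup_driver() {
  local actual="$1" expected="${2:-systemd}"
  if [ "$actual" = "$expected" ]; then
    echo "ok: cgroup driver is ${actual}"
  else
    echo "mismatch: expected ${expected}, got ${actual}" >&2
    return 1
  fi
}
```

On a node, the value can be taken from Docker itself: `check_cgroup_driver "$(docker info --format '{{.CgroupDriver}}')"`.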

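Install the Kubernetes components (same steps on all three hosts)

Configure the repository. The upstream Kubernetes packages are hosted outside China, so switch to a domestic mirror here.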

[root@master ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

Install the kubeadm, kubelet, and kubectl tools (same steps on all three hosts)

[root@master ~]# systemctl restart kubelet.service

[root@master ~]# systemctl enable kubelet.service
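
The transcript shows kubelet being restarted and enabled but not the install command itself. The following is a sketch of how the packages might be installed, on the assumption that they are pinned to the same 1.25.4 release that `kubeadm init` targets later. The helper name `k8s_packages` is hypothetical, and the pinning is a guess rather than something the original states.

```shell
# Hypothetical helper: print the three package names pinned to one
# version, in the name-version form that dnf accepts.
k8s_packages() {
  local version="$1"
  echo "kubelet-${version} kubeadm-${version} kubectl-${version}"
}
```

It would be used as `dnf -y install $(k8s_packages 1.25.4)` on each host. Keeping all three packages on one version avoids a skew between kubeadm and the kubelet it configures.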

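To make sure that cluster initialization and node joins succeed later, edit the containerd configuration file /etc/containerd/config.toml. Do this on every node.

In /etc/containerd/config.toml, change the Kubernetes image registry to registry.aliyuncs.com/google_containers: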

[root@node2 ~]# containerd config default > /etc/containerd/config.toml

[root@node2 ~]# vim /etc/containerd/config.toml

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"

[root@master ~]# systemctl restart containerd.service

[root@master ~]# systemctl enable containerd
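
Since this edit has to be repeated on every node, it can be scripted instead of made in vim. A minimal sketch, assuming GNU `sed` and a config.toml generated by `containerd config default`; `set_sandbox_image` is a hypothetical helper name.

```shell
# Hypothetical helper: rewrite the sandbox_image value in a containerd
# config.toml, keeping the line's original indentation.
set_sandbox_image() {
  local conf="$1" image="$2"
  sed -i -E \
    "s#^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*).*#\1\"${image}\"#" \
    "$conf"
}
```

For the setup described here the call would be `set_sandbox_image /etc/containerd/config.toml registry.aliyuncs.com/google_containers/pause:3.6`, followed by `systemctl restart containerd`.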

Deploy the Kubernetes master node

[root@master ~]# kubeadm init \

> --apiserver-advertise-address=192.168.226.10 \

> --image-repository registry.aliyuncs.com/google_containers \

> --kubernetes-version v1.25.4 \

> --service-cidr=10.96.0.0/12 \

> --pod-network-cidr=10.244.0.0/16

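Save the initialization output somewhere safe. The kubeadm join command it prints is needed later to add the worker nodes.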
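
One way to keep the output is to pipe `kubeadm init` through `tee` into a log file and pull the join command back out when it is needed. The sketch below assumes the log contains the join command in the usual form, a `kubeadm join` line continued with a trailing backslash; `extract_join_command` and the log file name are hypothetical.

```shell
# Hypothetical helper: print the kubeadm join command from a saved
# init log as a single line, joining backslash-continued lines.
extract_join_command() {
  awk '
    /^[ \t]*kubeadm join/ { found = 1 }
    found {
      cont = sub(/[ \t]*\\$/, "")   # strip the continuation backslash
      sub(/^[ \t]+/, "")            # strip leading indentation
      printf "%s%s", sep, $0
      sep = " "
      if (!cont) { print ""; exit } # last line of the command
    }
  ' "$1"
}
```

With the log saved as, say, kubeadm-init.log, `extract_join_command kubeadm-init.log` prints the command to run on each worker. If the token has expired in the meantime, `kubeadm token create --print-join-command` on the master generates a fresh one.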

[root@master ~]# vim /etc/profile.d/k8s.sh

[root@master ~]# cat /etc/profile.d/k8s.sh

export KUBECONFIG=/etc/kubernetes/admin.conf

[root@master ~]# source /etc/profile.d/k8s.sh

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# chown $(id -u):$(id -g) $HOME/.kube/config

Install the pod network add-on

[root@master ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@master ~]# kubectl apply -f kube-flannel.yml

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master.example.com Ready control-plane 4m56s v1.25.4

Join the worker nodes to the cluster

node1

[root@node1 ~]# kubeadm join 192.168.226.10:6443 --token dqghdl.zvhwusmbjhsw6jmu \

> --discovery-token-ca-cert-hash sha256:059bc62aa0351a194a78ce77f78e1449435443e0764f3623de79870b4a9a541b

node2

[root@node2 ~]# kubeadm join 192.168.226.10:6443 --token dqghdl.zvhwusmbjhsw6jmu \

> --discovery-token-ca-cert-hash sha256:059bc62aa0351a194a78ce77f78e1449435443e0764f3623de79870b4a9a541b

Verify

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master.example.com Ready control-plane 4m12s v1.25.4

node1.example.com Ready <none> 97s v1.25.4

node2.example.com Ready <none> 93s v1.25.4

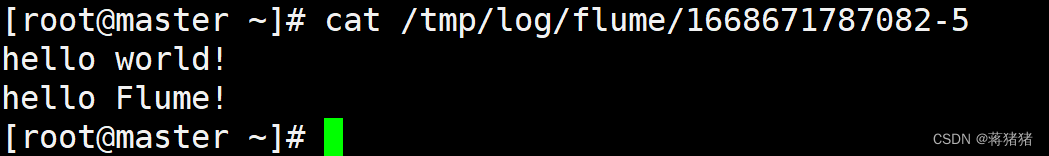
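
Nodes usually take a short while to become Ready after joining, so the check may need repeating. A small sketch that filters the node list for anything not yet Ready; `not_ready_nodes` is a hypothetical helper that reads `kubectl get node --no-headers` output on stdin.

```shell
# Hypothetical helper: print the name of every node whose STATUS column
# is not exactly "Ready". Combined states such as
# "Ready,SchedulingDisabled" are reported as well.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}
```

Usage would be `kubectl get node --no-headers | not_ready_nodes`; empty output means every node is Ready. Alternatively, `kubectl wait --for=condition=Ready node --all --timeout=120s` blocks until the nodes are Ready or the timeout expires.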

Use the cluster to create a pod running an nginx container, then test it

[root@master ~]# kubectl create deployment nginx --image nginx

deployment.apps/nginx created

[root@master ~]# kubectl expose deployment nginx --port 80 --type NodePort

[root@master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-76d6c9b8c-45wpt 1/1 Running 0 37s 10.244.1.2 node1.example.com <none> <none>

[root@master ~]# kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 6m38s

nginx NodePort 10.105.34.189 <none> 80:32516/TCP 51s

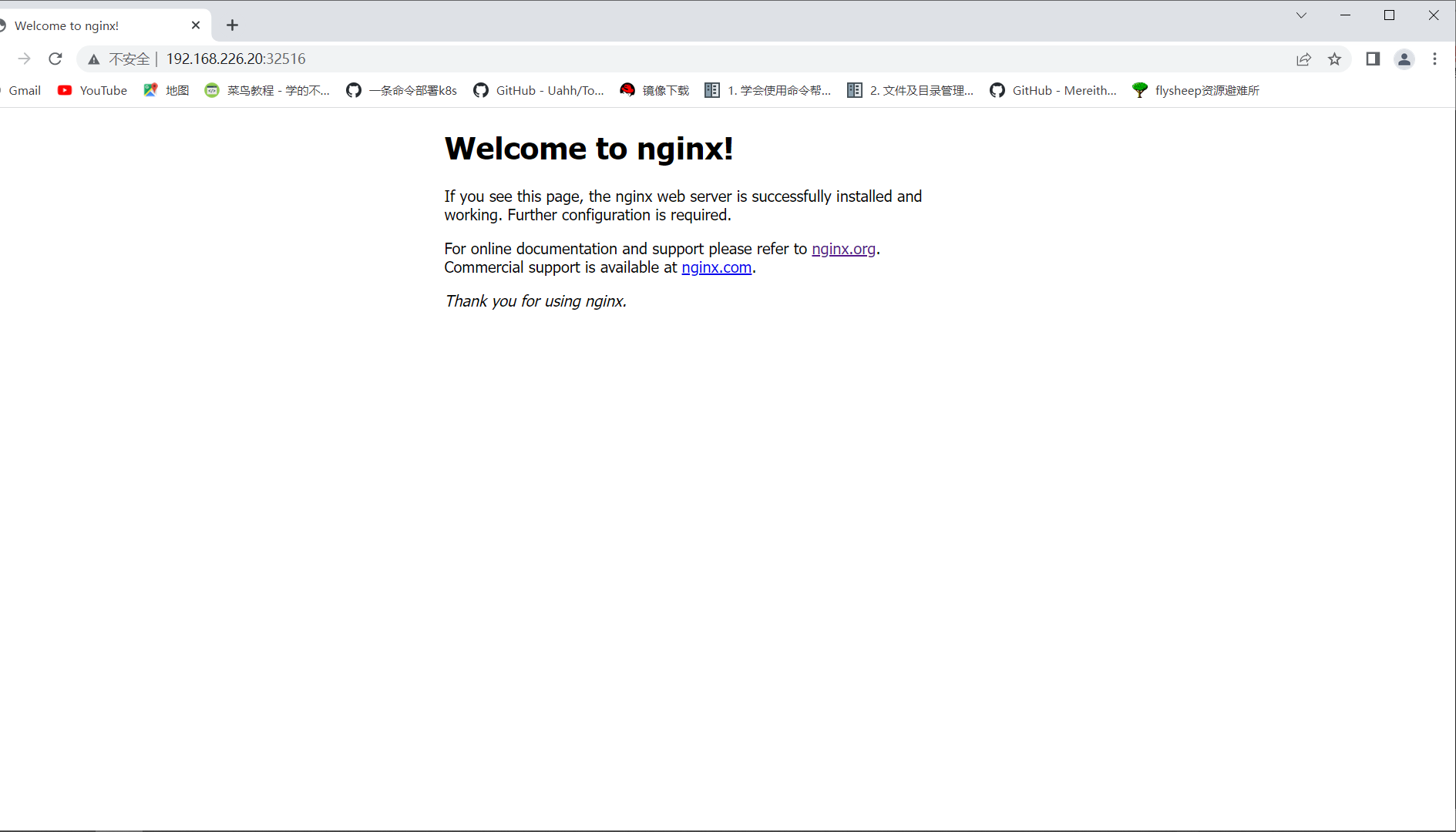

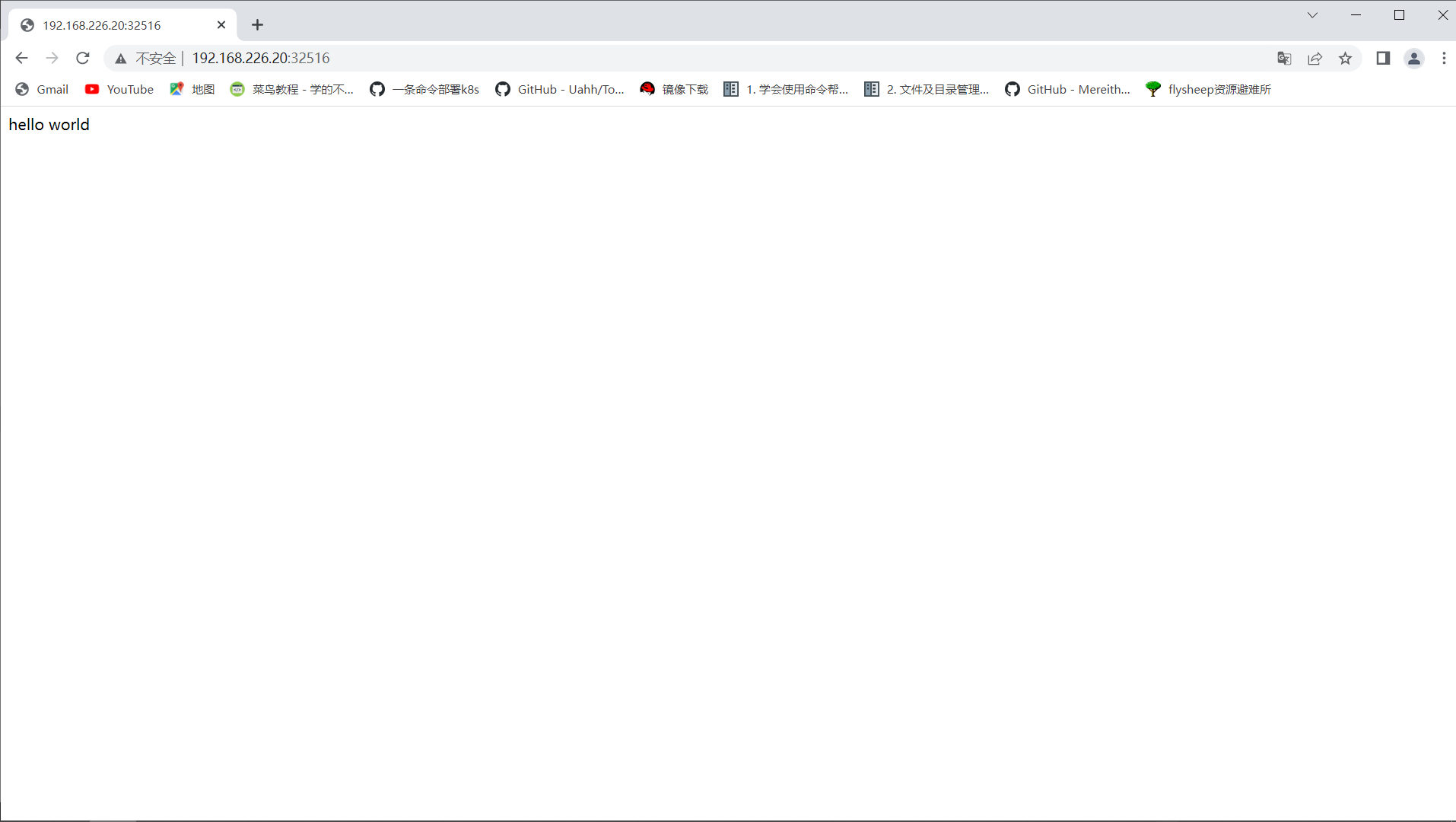
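
In the service listing, the PORT(S) value `80:32516/TCP` means that service port 80 is exposed on port 32516 of every node. The sketch below pulls the node port out of such a value using shell parameter expansion; `node_port_from_ports` is a hypothetical helper.

```shell
# Hypothetical helper: extract the node port from a PORT(S) value.
node_port_from_ports() {
  local ports="$1"        # e.g. "80:32516/TCP"
  ports="${ports#*:}"     # drop the service port and colon -> "32516/TCP"
  echo "${ports%%/*}"     # drop the protocol suffix         -> "32516"
}
```

The service can then be reached from outside the cluster with, for example, `curl "http://192.168.226.20:$(node_port_from_ports 80:32516/TCP)"`. The port can also be read directly with `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'`.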

Test access

Modify the default web page

[root@master ~]# kubectl exec -it pod/nginx-76d6c9b8c-45wpt -- /bin/bash

root@nginx-76d6c9b8c-45wpt:/# cd /usr/share/nginx/html/

root@nginx-76d6c9b8c-45wpt:/usr/share/nginx/html# ls

50x.html index.html

root@nginx-76d6c9b8c-45wpt:/usr/share/nginx/html# echo "hello world" > index.html

Access the page
