文章目录

- 一、导入相关包

- 二、加载数据集

- 三、划分数据集

- 四、数据集预处理

- 五、创建模型(区别一)

- 六、创建评估函数

- 七、创建 TrainingArguments(区别二)

- 八、创建 Trainer(区别三)

- 九、模型训练

- 十、模型训练(自动搜索)(区别四)

- 启动 tensorboard

- 以文本分类为例

六、Trainer和文本分类

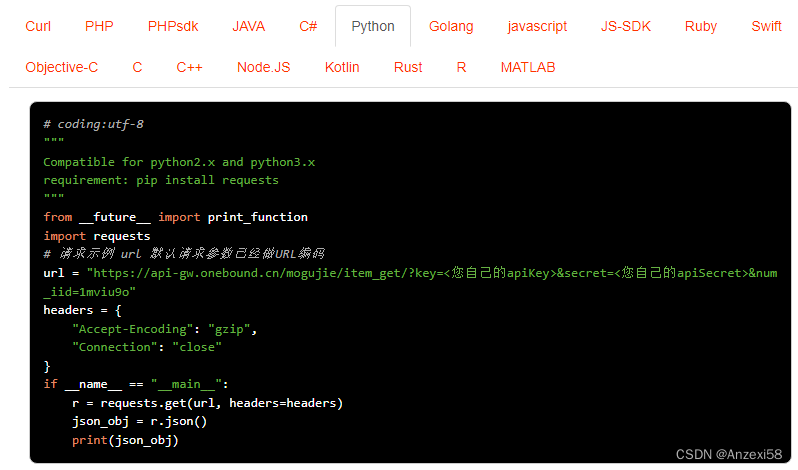

一、导入相关包

!pip install transformers datasets evaluate accelerate

from transformers import AutoTokenizer, AutoModelForSequenceClassification, Trainer, TrainingArguments

from datasets import load_dataset

二、加载数据集

dataset = load_dataset("csv", data_files="./ChnSentiCorp_htl_all.csv", split="train")

dataset = dataset.filter(lambda x: x["review"] is not None)

dataset

'''

Dataset({

features: ['label', 'review'],

num_rows: 7765

})

'''

三、划分数据集

datasets = dataset.train_test_split(test_size=0.1)

datasets

'''

DatasetDict({

train: Dataset({

features: ['label', 'review'],

num_rows: 6988

})

test: Dataset({

features: ['label', 'review'],

num_rows: 777

})

})

'''

四、数据集预处理

import torch

tokenizer = AutoTokenizer.from_pretrained("hfl/rbt3")

def process_function(examples):

tokenized_examples = tokenizer(examples["review"], max_length=128, truncation=True)

tokenized_examples["labels"] = examples["label"]

return tokenized_examples

tokenized_datasets = datasets.map(process_function, batched=True,

remove_columns=datasets["train"].column_names)

tokenized_datasets

'''

DatasetDict({

train: Dataset({

features: ['input_ids', 'token_type_ids', 'attention_mask', 'labels'],

num_rows: 6988

})

test: Dataset({

features: ['input_ids', 'token_type_ids', 'attention_mask', 'labels'],

num_rows: 777

})

})

'''

五、创建模型(区别一)

def model_init():

model = AutoModelForSequenceClassification.from_pretrained("hfl/rbt3")

return model

六、创建评估函数

import evaluate

acc_metric = evaluate.load("accuracy")

f1_metirc = evaluate.load("f1")

def eval_metric(eval_predict):

predictions, labels = eval_predict

predictions = predictions.argmax(axis=-1)

acc = acc_metric.compute(predictions=predictions, references=labels)

f1 = f1_metirc.compute(predictions=predictions, references=labels)

acc.update(f1)

return acc

七、创建 TrainingArguments(区别二)

logging_steps=500为了防止多次训练 log 太多可以增大logging_steps

train_args = TrainingArguments(output_dir="./checkpoints", # 输出文件夹

per_device_train_batch_size=64, # 训练时的batch_size

per_device_eval_batch_size=128, # 验证时的batch_size

logging_steps=500, # log 打印的频率

evaluation_strategy="epoch", # 评估策略

save_strategy="epoch", # 保存策略

save_total_limit=3, # 最大保存数

learning_rate=2e-5, # 学习率

weight_decay=0.01, # weight_decay

metric_for_best_model="f1", # 设定评估指标

load_best_model_at_end=True) # 训练完成后加载最优模型

八、创建 Trainer(区别三)

- 没有指定

model而是指定model_init

from transformers import DataCollatorWithPadding

trainer = Trainer(model_init=model_init,

args=train_args,

train_dataset=tokenized_datasets["train"],

eval_dataset=tokenized_datasets["test"],

data_collator=DataCollatorWithPadding(tokenizer=tokenizer),

compute_metrics=eval_metric)

# 之前

from transformers import DataCollatorWithPadding

trainer = Trainer(model=model,

args=train_args,

train_dataset=tokenized_datasets["train"],

eval_dataset=tokenized_datasets["test"],

data_collator=DataCollatorWithPadding(tokenizer=tokenizer),

compute_metrics=eval_metric)

九、模型训练

trainer.train()

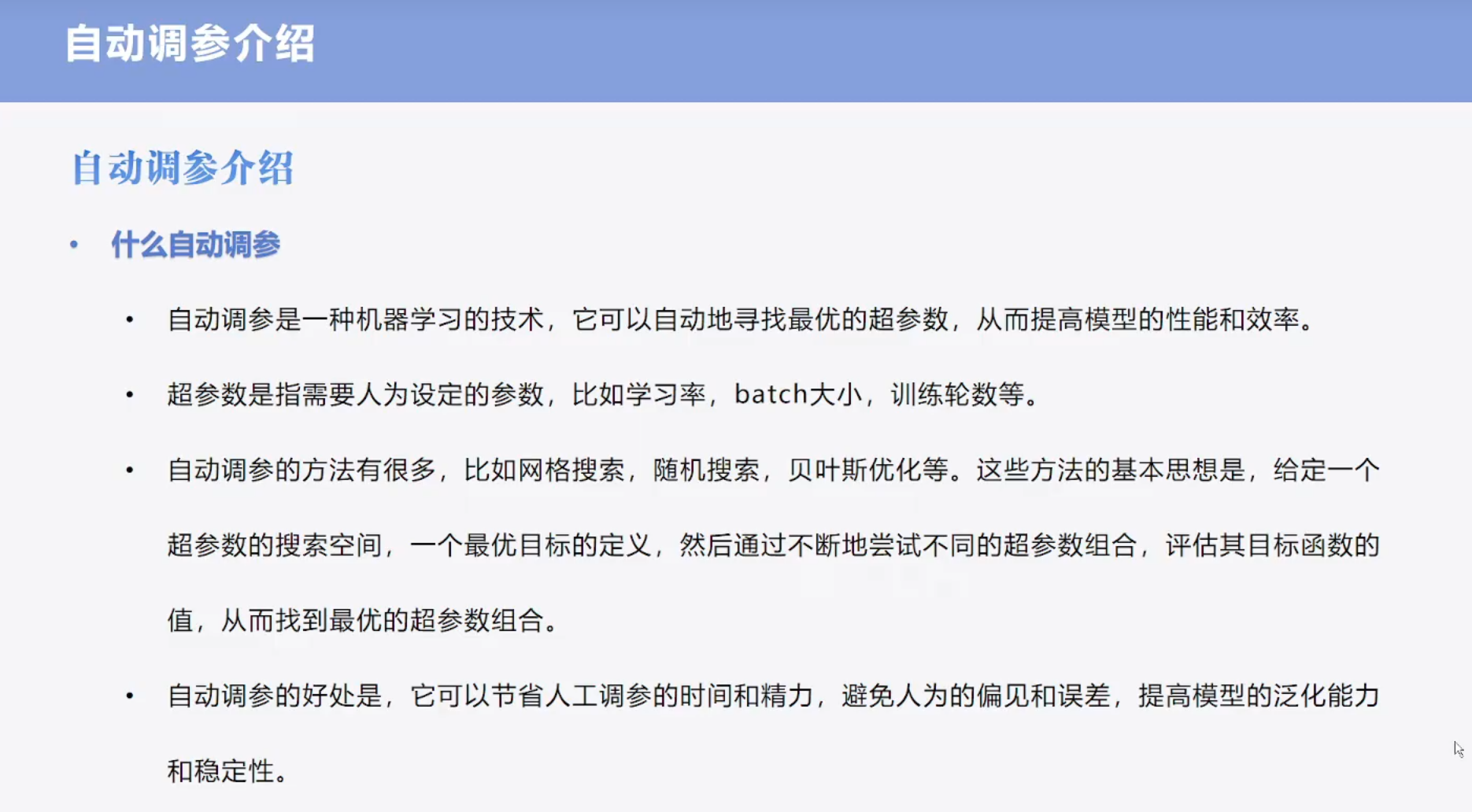

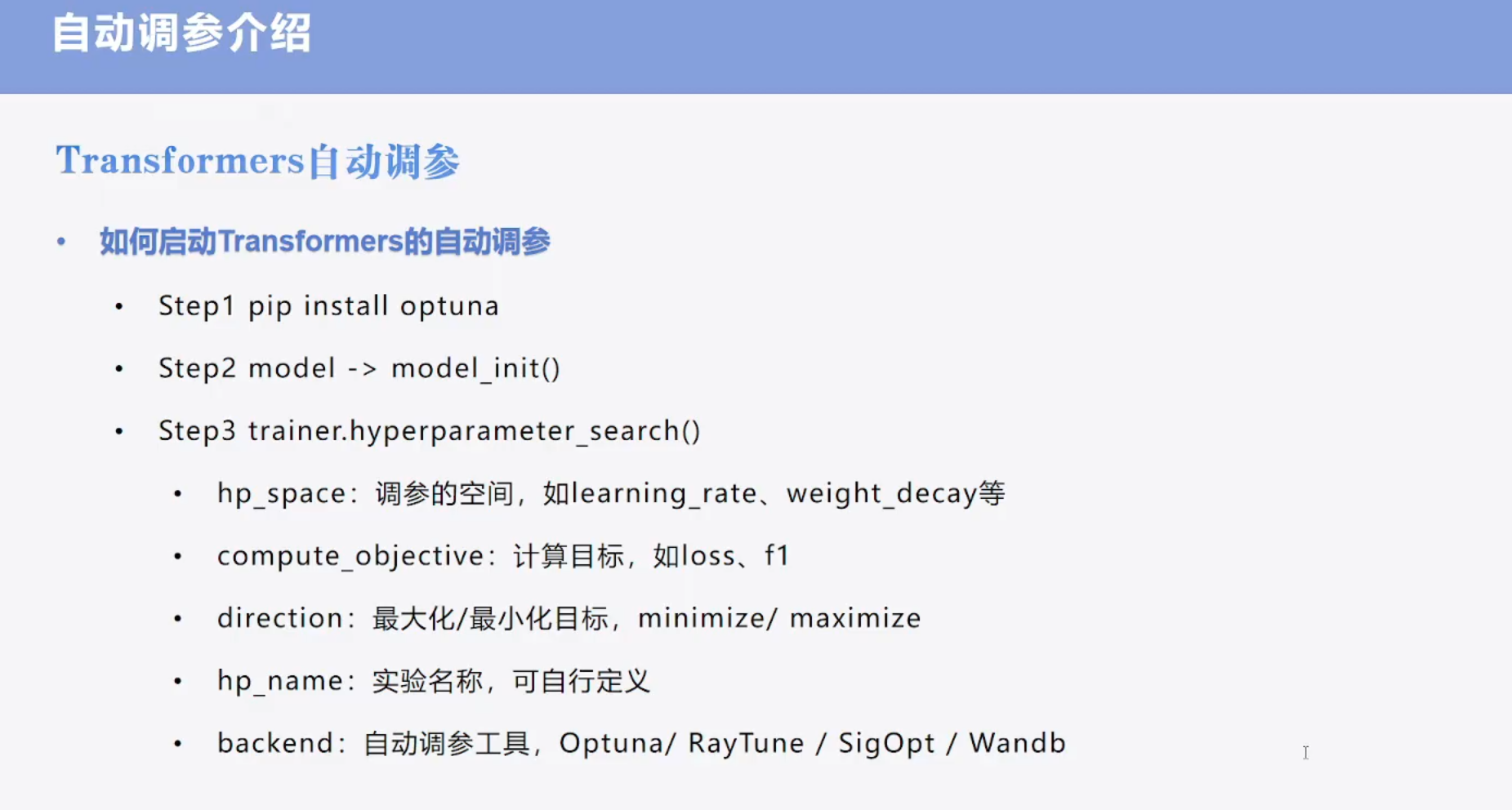

十、模型训练(自动搜索)(区别四)

!pip install optuna

- 使用默认的超参数空间

compute_objective=lambda x: x["eval_f1"]中的x是指的评价函数的返回值,在这里因为没有显示的指定评价函数返回值的key,所以f1的key采用默认值eval_f1

trainer.hyperparameter_search(compute_objective=lambda x: x["eval_f1"], direction="maximize", n_trials=10)

- 自定义超参数空间

- 可以在default_hp_space_optuna 函数中增加 trainer 的选项

def default_hp_space_optuna(trial):

return {

"learning_rate": trial.suggest_float("learning_rate", 1e-6, 1e-4, log=True),

"num_train_epochs": trial.suggest_int("num_train_epochs", 1, 5),

"seed": trial.suggest_int("seed", 1, 40),

"per_device_train_batch_size": trial.suggest_categorical("per_device_train_batch_size", [4, 8, 16, 32, 64]),

"optim": trial.suggest_categorical("optim", ["sgd", "adamw_hf"]),

}

trainer.hyperparameter_search(hp_space=default_hp_space_optuna, compute_objective=lambda x: x["eval_f1"], direction="maximize", n_trials=10)

启动 tensorboard

- 进入运行日志文件夹

- 终端启动

!tensorboard --logdir runs

- jupyter 启动

# 运行这行代码将加载 TensorBoard并允许我们将其用于可视化

%reload_ext tensorboard

%tensorboard --logdir=./runs/