These are my notes on Lab 1, the first lab of MIT 6.5940 (Fall 2023). Course link: Han Lab

Setup

First, install the required packages and download the dataset and the pretrained model. We use the CIFAR10 dataset and the VGG network, the same ones used in the Lab 0 tutorial.

print('Installing torchprofile...')

!pip install torchprofile 1>/dev/null

print('All required packages have been successfully installed!')

# Imports

import copy

import math

import random

import time

from collections import OrderedDict, defaultdict

from typing import Union, List

import numpy as np

import torch

from matplotlib import pyplot as plt

from torch import nn

from torch.optim import *

from torch.optim.lr_scheduler import *

from torch.utils.data import DataLoader

from torchprofile import profile_macs

from torchvision.datasets import *

from torchvision.transforms import *

from tqdm.auto import tqdm

import os

os.environ['CUDA_VISIBLE_DEVICES'] = '1' # select which GPU to use (index 1 here; use '0' on a single-GPU machine)

assert torch.cuda.is_available(), \
    "The current runtime does not have CUDA support. " \
    "Please go to menu bar (Runtime - Change runtime type) and select GPU"

"""

Seed the random number generators so that runs are reproducible:
random.seed(0) seeds Python's built-in random module.
np.random.seed(0) seeds NumPy.
torch.manual_seed(0) seeds PyTorch.
"""
random.seed(0)
np.random.seed(0)
torch.manual_seed(0)

"""

Download a file from the given URL into a directory:
url: URL of the file to download.
model_dir: directory in which the file is saved; defaults to the current directory '.'.
overwrite: if True, download again even if the file already exists; defaults to False.
"""
def download_url(url, model_dir='.', overwrite=False):
    import os, sys, ssl
    from urllib.request import urlretrieve
    # Note: this disables HTTPS certificate verification for the whole process.
    ssl._create_default_https_context = ssl._create_unverified_context
    target_dir = url.split('/')[-1]  # file name taken from the URL
    model_dir = os.path.expanduser(model_dir)
    try:
        if not os.path.exists(model_dir):  # create the directory if it does not exist
            os.makedirs(model_dir)
        # Full path of the cached file. model_dir itself is left unchanged so that
        # the lock-file path built in the except branch stays valid.
        cached_file = os.path.join(model_dir, target_dir)
        # Download only if the file is missing or overwriting was requested.
        if not os.path.exists(cached_file) or overwrite:
            sys.stderr.write('Downloading: "{}" to {}\n'.format(url, cached_file))
            urlretrieve(url, cached_file)
        return cached_file  # path of the downloaded (or already cached) file
    except Exception as e:
        # remove lock file (if there is one) so download can be executed next time.
        lock_file = os.path.join(model_dir, 'download.lock')
        if os.path.exists(lock_file):
            os.remove(lock_file)
        sys.stderr.write('Failed to download from url %s' % url + '\n' + str(e) + '\n')
        return None  # signal that the download failed

"""

This VGG implementation builds its backbone from convolution, batch normalization, ReLU, and max-pooling layers, and classifies with a final linear layer.
"""
class VGG(nn.Module):
    # Network architecture: a number is the output channel count of a conv layer, 'M' is a max-pooling layer.
    ARCH = [64, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M']

    def __init__(self) -> None:
        super().__init__()
        layers = []                # (name, layer) pairs, in order
        counts = defaultdict(int)  # number of layers of each type added so far

        def add(name: str, layer: nn.Module) -> None:
            # Name each layer by its type plus a running index: 'conv0', 'bn0', 'relu0', 'pool0', ...
            layers.append((f"{name}{counts[name]}", layer))
            counts[name] += 1

        in_channels = 3  # RGB input
        for x in self.ARCH:
            if x != 'M':
                # conv-bn-relu
                add("conv", nn.Conv2d(in_channels, x, 3, padding=1, bias=False))
                add("bn", nn.BatchNorm2d(x))
                add("relu", nn.ReLU(True))
                in_channels = x
            else:
                # maxpool
                add("pool", nn.MaxPool2d(2))

        # Build the backbone as a Sequential from the ordered (name, layer) pairs.
        self.backbone = nn.Sequential(OrderedDict(layers))
        # Linear classifier: 512 input features, 10 output classes.
        self.classifier = nn.Linear(512, 10)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # backbone: [N, 3, 32, 32] => [N, 512, 2, 2]
        x = self.backbone(x)
        # avgpool: [N, 512, 2, 2] => [N, 512]
        x = x.mean([2, 3])
        # classifier: [N, 512] => [N, 10]
        x = self.classifier(x)
        return x
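The shape comments in `forward` can be checked without PyTorch. The sketch below is an illustration only (the helper name `trace_shapes` is made up for this note): it walks the same `ARCH` list, assuming 3x3 convolutions with `padding=1` keep the spatial size and each `MaxPool2d(2)` halves it.

```python
# Trace the feature-map shape through ARCH (pure Python, no PyTorch needed).
ARCH = [64, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M']

def trace_shapes(arch, in_channels=3, size=32):
    """Return the (channels, height, width) shape after each entry of arch."""
    shapes = []
    channels = in_channels
    for x in arch:
        if x == 'M':
            size //= 2    # MaxPool2d(2) halves height and width
        else:
            channels = x  # 3x3 conv with padding=1 keeps the spatial size
        shapes.append((channels, size, size))
    return shapes

shapes = trace_shapes(ARCH)
for entry, shape in zip(ARCH, shapes):
    print(f"{str(entry):>4} -> {shape}")
```

The four pooling layers take the 32x32 input down to 2x2, which is why the backbone output is `[N, 512, 2, 2]` and the classifier expects 512 features after averaging.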

"""

The train function wraps the usual steps of one training epoch: loading data, the forward pass, computing the loss, backpropagation, the weight update, and optional callbacks.
"""
def train(
    model: nn.Module,        # the network to train
    dataloader: DataLoader,  # loader for the training data
    criterion: nn.Module,    # loss function comparing outputs with targets
    optimizer: Optimizer,    # optimizer that updates the weights
    scheduler: LambdaLR,     # learning-rate scheduler
    callbacks = None         # optional list of functions called after every iteration
) -> None:
    # Training mode matters for layers such as Dropout and BatchNorm, which behave differently in train and eval mode.
    model.train()

    for inputs, targets in tqdm(dataloader, desc='train', leave=False):
        # Move the data from CPU to GPU
        inputs = inputs.cuda()
        targets = targets.cuda()

        # Reset the gradients (from the last iteration)
        optimizer.zero_grad()

        # Forward inference
        outputs = model(inputs)
        loss = criterion(outputs, targets)

        # Backward propagation
        loss.backward()

        # Update optimizer and LR scheduler (the scheduler is stepped once per batch)
        optimizer.step()
        scheduler.step()

        if callbacks is not None:
            for callback in callbacks:
                callback()

"""

The evaluate function iterates over the evaluation set and computes the model's accuracy. The @torch.inference_mode() decorator disables gradient tracking, which makes evaluation cheaper.
"""
@torch.inference_mode()
def evaluate(
    model: nn.Module,        # the network to evaluate
    dataloader: DataLoader,  # loader for the evaluation data
    verbose=True,            # whether to show a progress bar
) -> float:
    # Evaluation mode matters for layers such as Dropout and BatchNorm, which behave differently in train and eval mode.
    model.eval()

    num_samples = 0  # number of samples seen
    num_correct = 0  # number of correct predictions

    for inputs, targets in tqdm(dataloader, desc="eval", leave=False,
                                disable=not verbose):
        # Move the data from CPU to GPU
        inputs = inputs.cuda()
        targets = targets.cuda()

        # Inference
        outputs = model(inputs)

        # Convert logits to class indices: argmax(dim=1) picks the highest-scoring class per sample.
        outputs = outputs.argmax(dim=1)

        # Update metrics
        num_samples += targets.size(0)
        num_correct += (outputs == targets).sum()

    return (num_correct / num_samples * 100).item()  # accuracy in percent

Helper Functions (Flops, Model Size calculation, etc.)

# Count the multiply-accumulate operations (MACs) of the model
def get_model_macs(model, inputs) -> int:
    return profile_macs(model, inputs)

# Sparsity of a single tensor
def get_sparsity(tensor: torch.Tensor) -> float:
    """
    calculate the sparsity of the given tensor
        sparsity = #zeros / #elements = 1 - #nonzeros / #elements
    """
    return 1 - float(tensor.count_nonzero()) / tensor.numel()

# Sparsity of the whole model
def get_model_sparsity(model: nn.Module) -> float:
    """
    calculate the sparsity of the given model
        sparsity = #zeros / #elements = 1 - #nonzeros / #elements
    """
    num_nonzeros, num_elements = 0, 0
    for param in model.parameters():
        num_nonzeros += param.count_nonzero()
        num_elements += param.numel()
    return 1 - float(num_nonzeros) / num_elements

# Number of parameters in the model
def get_num_parameters(model: nn.Module, count_nonzero_only=False) -> int:
    """
    calculate the total number of parameters of model
    :param count_nonzero_only: only count nonzero weights
    """
    num_counted_elements = 0
    for param in model.parameters():
        if count_nonzero_only:
            num_counted_elements += param.count_nonzero()
        else:
            num_counted_elements += param.numel()
    return num_counted_elements

# Size of the model
def get_model_size(model: nn.Module, data_width=32, count_nonzero_only=False) -> int:
    """
    calculate the model size in bits
    :param data_width: #bits per element
    :param count_nonzero_only: only count nonzero weights
    """
    return get_num_parameters(model, count_nonzero_only) * data_width

# Unit sizes in bits
Byte = 8
KiB = 1024 * Byte
MiB = 1024 * KiB
GiB = 1024 * MiB

Define misc functions for verification.

"""

Test and visualize fine-grained pruning on a single tensor.
test_tensor: the tensor to prune.
test_mask: a boolean tensor marking the elements expected to survive pruning.
target_sparsity: the target sparsity, i.e. the fraction of zeros in the tensor after pruning.
target_nonzeros: the expected number of nonzero elements (optional).
"""
def test_fine_grained_prune(
    test_tensor=torch.tensor([[-0.46, -0.40, 0.39, 0.19, 0.37],
                              [0.00, 0.40, 0.17, -0.15, 0.16],
                              [-0.20, -0.23, 0.36, 0.25, 0.03],
                              [0.24, 0.41, 0.07, 0.13, -0.15],
                              [0.48, -0.09, -0.36, 0.12, 0.45]]),
    test_mask=torch.tensor([[True, True, False, False, False],
                            [False, True, False, False, False],
                            [False, False, False, False, False],
                            [False, True, False, False, False],
                            [True, False, False, False, True]]),
    target_sparsity=0.75, target_nonzeros=None):
    # Draw a tensor with zeros and nonzeros in different colors and the value printed in each cell.
    def plot_matrix(tensor, ax, title):
        # vmin/vmax fix the color range; cmap selects the color table.
        ax.imshow(tensor.cpu().numpy() == 0, vmin=0, vmax=1, cmap='tab20c')
        ax.set_title(title)
        # Hide the tick labels on both axes.
        ax.set_yticklabels([])
        ax.set_xticklabels([])
        for i in range(tensor.shape[0]):      # rows
            for j in range(tensor.shape[1]):  # columns
                text = ax.text(j, i, f'{tensor[i, j].item():.2f}',
                               ha="center", va="center", color="k")

    test_tensor = test_tensor.clone()  # work on a copy; pruning is in place
    # One row, two columns of subplots; the figure is 6 inches wide and 10 inches tall.
    fig, axes = plt.subplots(1,2, figsize=(6, 10))
    # axes.ravel() flattens the axes array; ax_left is the first subplot, ax_right the second.
    ax_left, ax_right = axes.ravel()
    # Plot the dense tensor on the left.
    plot_matrix(test_tensor, ax_left, 'dense tensor')

    sparsity_before_pruning = get_sparsity(test_tensor)
    mask = fine_grained_prune(test_tensor, target_sparsity)
    sparsity_after_pruning = get_sparsity(test_tensor)
    sparsity_of_mask = get_sparsity(mask)

    # Plot the pruned tensor on the right.
    plot_matrix(test_tensor, ax_right, 'sparse tensor')
    fig.tight_layout()
    plt.show()

    print('* Test fine_grained_prune()')
    print(f' target sparsity: {target_sparsity:.2f}')
    print(f' sparsity before pruning: {sparsity_before_pruning:.2f}')
    print(f' sparsity after pruning: {sparsity_after_pruning:.2f}')
    print(f' sparsity of pruning mask: {sparsity_of_mask:.2f}')

    if target_nonzeros is None:
        if test_mask.equal(mask):
            print('* Test passed.')
        else:
            print('* Test failed.')
    else:
        if mask.count_nonzero() == target_nonzeros:
            print('* Test passed.')
        else:
            print('* Test failed.')

Load the pretrained model and the CIFAR-10 dataset.

# URL of the pretrained VGG weights (a .pth file, the usual container for PyTorch weights).
checkpoint_url = "https://hanlab18.mit.edu/files/course/labs/vgg.cifar.pretrained.pth"

# Download the file, then load it with torch.load onto the CPU.
# checkpoint['state_dict'] holds the model's state dict, i.e. all of its weights.
checkpoint = torch.load(download_url(checkpoint_url), map_location="cpu")

# Create a fresh VGG instance and move it to the GPU.
model = VGG().cuda()

print(f"=> loading checkpoint '{checkpoint_url}'")

# Load the pretrained weights into the model.
model.load_state_dict(checkpoint['state_dict'])

# recover_model() reloads the pretrained weights, restoring the dense model whenever needed later.
recover_model = lambda: model.load_state_dict(checkpoint['state_dict'])

"""

Set up the preprocessing for CIFAR10, load the dataset, and create the train and test data loaders used for training and evaluation below.
"""
# CIFAR10 images are 32x32 pixels.
image_size = 32
transforms = {
    "train": Compose([
        RandomCrop(image_size, padding=4),  # pad 4 pixels on every side, then take a random 32x32 crop
        RandomHorizontalFlip(),             # random horizontal flip
        ToTensor(),                         # to a PyTorch tensor, scaling pixels from [0, 255] to [0.0, 1.0]
    ]),
    "test": ToTensor(),
}

dataset = {}  # split name -> dataset
for split in ["train", "test"]:
    dataset[split] = CIFAR10(
        root="data/cifar10",
        train=(split == "train"),
        download=True,
        transform=transforms[split],
    )

dataloader = {}  # split name -> data loader
for split in ['train', 'test']:
    dataloader[split] = DataLoader(
        dataset[split],
        batch_size=512,
        shuffle=(split == 'train'),
        num_workers=0,
        pin_memory=True,
    )

Let’s First Evaluate the Accuracy and Model Size of Dense Model

Neural networks have become ubiquitous in many applications. Here we have loaded a pretrained VGG model for classifying images in CIFAR10 dataset.

Let’s first evaluate the accuracy and model size of this model.

dense_model_accuracy = evaluate(model, dataloader['test'])

dense_model_size = get_model_size(model)

print(f"dense model has accuracy={dense_model_accuracy:.2f}%")

print(f"dense model has size={dense_model_size/MiB:.2f} MiB")

"""

Output

dense model has accuracy=92.95%

dense model has size=35.20 MiB

"""

While large neural networks are very powerful, their size consumes considerable storage, memory bandwidth, and computational resources. As we can see from the results above, a model for a task as simple as classifying $32\times32$ images into 10 classes can be as large as 35 MiB. For embedded mobile applications, these resource demands become prohibitive.
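The 35.20 MiB figure can be reproduced by counting parameters by hand. The sketch below is an illustration (the helper `count_vgg_parameters` is not part of the lab); it assumes the layer layout of the `VGG` class defined earlier: 3x3 convolutions without bias, a BatchNorm weight and bias per channel, and a 512-to-10 linear classifier with bias.

```python
ARCH = [64, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M']

def count_vgg_parameters(arch, in_channels=3, num_classes=10):
    """Count learnable parameters for the conv-bn-relu VGG described by arch."""
    total = 0
    channels = in_channels
    for x in arch:
        if x == 'M':
            continue                   # pooling layers have no parameters
        total += channels * x * 3 * 3  # conv weight (bias=False)
        total += 2 * x                 # BatchNorm weight and bias
        channels = x
    total += channels * num_classes + num_classes  # linear weight and bias
    return total

num_params = count_vgg_parameters(ARCH)
size_bits = num_params * 32                 # data_width=32 bits per element
size_mib = size_bits / (8 * 1024 * 1024)    # MiB expressed in bits, as in the helpers
print(num_params, f"{size_mib:.2f} MiB")
```

This gives 9,228,362 parameters, i.e. 35.20 MiB at 32 bits each, matching the output of `get_model_size`.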

Therefore, neural network pruning is used to facilitate the storage and transmission of mobile applications that incorporate DNNs.

The goal of pruning is to reduce the model size while maintaining the accuracy.

Let’s see the distribution of weight values

Before we jump into pruning, let’s see the distribution of weight values in the dense model.

"""

This function plots a histogram of the weights in each layer, which helps to analyze the weight distribution, for example whether many weights are close to zero or whether the weights follow a particular distribution (such as a normal distribution).
model: the network to analyze.
bins: number of histogram bins; defaults to 256.
count_nonzero_only: whether to plot nonzero weights only; defaults to False.
"""
def plot_weight_distribution(model, bins=256, count_nonzero_only=False):
    # 3x3 grid of subplots, 10x6 inches in total.
    fig, axes = plt.subplots(3,3, figsize=(10, 6))
    axes = axes.ravel()  # flatten the 2-D axes array for easy iteration
    plot_index = 0       # index of the subplot currently in use
    for name, param in model.named_parameters():
        # Only weight tensors (dim > 1) are plotted; 1-D parameters (biases, BatchNorm scale and shift) are skipped.
        if param.dim() > 1:
            ax = axes[plot_index]
            if count_nonzero_only:
                param_cpu = param.detach().view(-1).cpu()       # flatten and move to the CPU
                param_cpu = param_cpu[param_cpu != 0].view(-1)  # drop the zeros
                ax.hist(param_cpu, bins=bins, density=True,
                        color = 'blue', alpha = 0.5)
            else:
                ax.hist(param.detach().view(-1).cpu(), bins=bins, density=True,
                        color = 'blue', alpha = 0.5)
            ax.set_xlabel(name)
            ax.set_ylabel('density')
            plot_index += 1
    fig.suptitle('Histogram of Weights')
    fig.tight_layout()
    fig.subplots_adjust(top=0.925)  # leave room for the title
    plt.show()

plot_weight_distribution(model)

[Figure: histograms of the weight values in each layer of the dense model]

Fine-grained Pruning

In this section, we will implement and perform fine-grained pruning.

Fine-grained pruning removes the synapses with the lowest importance. The weight tensor $W$ will become sparse after fine-grained pruning, which can be described with sparsity:

> $\mathrm{sparsity} := \#\mathrm{Zeros} / \#W = 1 - \#\mathrm{Nonzeros} / \#W$

where $\#W$ is the number of elements in $W$.

In practice, given the target sparsity $s$, the weight tensor $W$ is multiplied with a binary mask $M$ to disregard removed weights:

> $v_{\mathrm{thr}} = \texttt{kthvalue}(Importance, \#W \cdot s)$

> $M = Importance > v_{\mathrm{thr}}$

> $W = W \cdot M$

where $Importance$ is the importance tensor with the same shape as $W$, $\texttt{kthvalue}(X, k)$ finds the $k$-th smallest value of tensor $X$, and $v_{\mathrm{thr}}$ is the threshold value.

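How is fine-grained pruning carried out? Choose an importance score for every weight, then zero out the weights whose importance falls at or below the threshold.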

Magnitude-based Pruning

For fine-grained pruning, a widely-used importance is the magnitude of weight value, i.e.,

> $Importance = |W|$

This is known as Magnitude-based Pruning.
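As a worked instance of the threshold, mask, and multiply formulas with $Importance = |W|$, here is a minimal pure-Python sketch on the 5x5 tensor used by `test_fine_grained_prune`. It stands in `sorted()` for `kthvalue`, so it illustrates the arithmetic rather than the lab's PyTorch solution.

```python
# The 5x5 test tensor from test_fine_grained_prune, target sparsity s = 0.75.
W = [[-0.46, -0.40,  0.39,  0.19,  0.37],
     [ 0.00,  0.40,  0.17, -0.15,  0.16],
     [-0.20, -0.23,  0.36,  0.25,  0.03],
     [ 0.24,  0.41,  0.07,  0.13, -0.15],
     [ 0.48, -0.09, -0.36,  0.12,  0.45]]
s = 0.75

importance = [[abs(w) for w in row] for row in W]        # Importance = |W|
flat = sorted(v for row in importance for v in row)
k = round(len(flat) * s)                                 # #W * s weights to remove
v_thr = flat[k - 1]                                      # k-th smallest importance
M = [[v > v_thr for v in row] for row in importance]     # M = Importance > v_thr
W_pruned = [[w if keep else 0.0 for w, keep in zip(w_row, m_row)]
            for w_row, m_row in zip(W, M)]               # W = W * M
num_kept = sum(sum(row) for row in M)
print(k, v_thr, num_kept)
```

With 25 elements and $s = 0.75$, $k$ rounds to 19, the threshold is 0.39, and 6 weights survive, in the same positions as the `test_mask` default.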

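How is magnitude-based pruning carried out? Use the absolute value of each weight as its importance and remove the weights with the smallest absolute values.

How do fine-grained pruning and magnitude-based pruning differ? Fine-grained refers to the granularity (individual weights are removed), while magnitude-based refers to the importance criterion ($|W|$); the function below combines the two.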

Please complete the following magnitude-based fine-grained pruning function.

Hint:

- In step 1, we calculate the number of zeros (num_zeros) after pruning. Note that num_zeros should be an integer. You could use either round() or int() to convert a floating-point number into an integer. Here we use round().
- In step 2, we calculate the importance of the weight tensor. PyTorch provides the torch.abs(), torch.Tensor.abs(), and torch.Tensor.abs_() APIs.
- In step 3, we calculate the pruning threshold so that all synapses with importance smaller than threshold will be removed. PyTorch provides the torch.kthvalue(), torch.Tensor.kthvalue(), and torch.topk() APIs.
- In step 4, we calculate the pruning mask based on the threshold. 1 in the mask indicates the synapse will be kept, and 0 in the mask indicates the synapse will be removed. mask = importance > threshold. PyTorch provides the torch.gt() API.

"""

This implements fine-grained pruning, which reduces the number of nonzero weights in a tensor. Weights are ranked by importance, measured here by their absolute value.
"""
def fine_grained_prune(tensor: torch.Tensor, sparsity : float) -> torch.Tensor:
    """
    magnitude-based pruning for single tensor
    :param tensor: torch.(cuda.)Tensor, weight of conv/fc layer
    :param sparsity: float, pruning sparsity
        sparsity = #zeros / #elements = 1 - #nonzeros / #elements
    :return:
        torch.(cuda.)Tensor, mask for zeros
    """
    # Clamp the sparsity to [0, 1].
    sparsity = min(max(0.0, sparsity), 1.0)
    # Sparsity 1: zero the whole tensor and return an all-zero mask.
    if sparsity == 1.0:
        tensor.zero_()
        return torch.zeros_like(tensor)
    # Sparsity 0: nothing is pruned, return an all-one mask.
    elif sparsity == 0.0:
        return torch.ones_like(tensor)

    num_elements = tensor.numel()  # total number of elements in the tensor

    ##################### YOUR CODE STARTS HERE #####################
    # Step 1: calculate the #zeros (please use round())
    # Number of weights to set to zero: total element count times sparsity, rounded.
    num_zeros = round(sparsity * num_elements)
    # Step 2: calculate the importance of weight
    # The absolute value is the importance; smaller magnitudes are pruned first.
    importance = torch.abs(tensor)
    # Step 3: calculate the pruning threshold
    # The threshold is the num_zeros-th smallest importance, matching
    # v_thr = kthvalue(Importance, #W * s), so exactly the num_zeros least
    # important weights fall at or below it (barring ties).
    threshold = torch.kthvalue(importance.view(-1), k=num_zeros).values.item()
    # Step 4: get binary mask (1 for nonzeros, 0 for zeros)
    # Boolean mask: True (keep) above the threshold, False (prune) at or below it.
    mask = torch.gt(importance, threshold)
    ##################### YOUR CODE ENDS HERE #######################

    # Step 5: apply mask to prune the tensor
    # In-place multiply: the original tensor itself is modified, no copy is made.
    tensor.mul_(mask)

    return mask

Let’s verify the functionality of the fine-grained pruning defined above by applying it to a dummy tensor.

test_fine_grained_prune()

[Figure: the dense tensor (left) and the sparse tensor after pruning (right)]

The last cell plots the tensor before and after pruning. Nonzeros are rendered in blue while zeros are rendered in gray. Please modify the value of target_sparsity in the following code cell so that there are only 10 nonzeros in the sparse tensor after pruning.

##################### YOUR CODE STARTS HERE #####################

# 25 elements with 10 nonzeros means 15 zeros: 25 * (1 - target_sparsity) = 10 -> target_sparsity = 0.6

target_sparsity = 0.6 # please modify the value of target_sparsity

##################### YOUR CODE ENDS HERE #####################

test_fine_grained_prune(target_sparsity=target_sparsity, target_nonzeros=10)

We now wrap the fine-grained pruning function into a class for pruning the whole model. In class FineGrainedPruner, we have to keep a record of the pruning masks so that we can reapply them whenever the model weights change, making sure the model stays sparse all the time.

"""

This class wraps fine-grained pruning for a whole model.
model: the network to prune.
sparsity_dict: a dict mapping parameter names to pruning sparsities, i.e. the fraction of each weight tensor to set to zero.
self.masks: created at construction time by the static method prune, which prunes the model in place and returns one mask per pruned tensor. The masks are reused by apply.
"""
class FineGrainedPruner:
    def __init__(self, model, sparsity_dict):
        self.masks = FineGrainedPruner.prune(model, sparsity_dict)

    # @torch.no_grad(): the masks are applied by modifying parameters in place,
    # which must not be recorded by autograd.
    @torch.no_grad()
    def apply(self, model):
        # Multiply every parameter that has a mask by that mask, re-zeroing the pruned weights.
        for name, param in model.named_parameters():
            if name in self.masks:
                param *= self.masks[name]

    @staticmethod
    @torch.no_grad()
    def prune(model, sparsity_dict):
        masks = dict()  # parameter name -> mask
        # Prune every parameter with more than one dimension (conv and fully connected weights)
        # with fine_grained_prune, using the sparsity given in sparsity_dict.
        for name, param in model.named_parameters():
            if param.dim() > 1: # we only prune conv and fc weights
                masks[name] = fine_grained_prune(param, sparsity_dict[name])
        return masks
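To see why the masks have to be reapplied after every weight update, consider the toy sketch below. It is pure Python with made-up numbers; `sgd_step` and `apply_mask` are hypothetical helpers for illustration and not part of the lab code. A pruned weight still receives a gradient, so one plain SGD step makes it nonzero again.

```python
def sgd_step(weights, grads, lr=0.1):
    """One plain SGD update: w <- w - lr * g."""
    return [w - lr * g for w, g in zip(weights, grads)]

def apply_mask(weights, mask):
    """Zero the pruned positions again (what FineGrainedPruner.apply does per tensor)."""
    return [w * m for w, m in zip(weights, mask)]

weights = [0.5, 0.0, -0.3, 0.0]    # positions 1 and 3 have been pruned
mask    = [1,   0,    1,   0]
grads   = [0.2, -0.4, 0.1, 0.3]    # hypothetical gradients; pruned positions get one too

updated = sgd_step(weights, grads)
masked = apply_mask(updated, mask)
print(updated)
print(masked)
```

After the update, positions 1 and 3 are no longer zero; multiplying by the mask restores the sparsity pattern while leaving the surviving weights untouched. This is the job the `callbacks` hook in `train` does during finetuning.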

Sensitivity Scan

Different layers contribute differently to the model performance. It is challenging to decide the proper sparsity for each layer. A widely used approach is sensitivity scan.

During the sensitivity scan, at each time, we will only prune one layer to see the accuracy degradation. By scanning different sparsities, we could draw the sensitivity curve (i.e., accuracy vs. sparsity) of the corresponding layer.

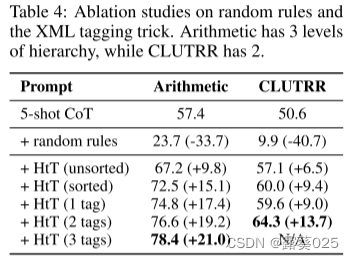

Here is an example figure for sensitivity curves. The x-axis is the sparsity, i.e. the percentage of #parameters dropped. The y-axis is the validation accuracy. (This is Figure 6 in Learning both Weights and Connections for Efficient Neural Networks)

The following code cell defines the sensitivity scan function that returns the sparsities scanned, and a list of accuracies for each weight to be pruned.

"""

This function measures how the model's accuracy changes as one layer at a time is pruned to different sparsities. It is a common technique in model compression for finding out how sensitive each layer is to pruning.
model: the network to evaluate.
dataloader: the data loader used to measure accuracy.
scan_step, scan_start, scan_end: the range and step of the scanned sparsities, from scan_start up to (but not including) scan_end in steps of scan_step.
verbose: whether to print details.
"""
@torch.no_grad()
def sensitivity_scan(model, dataloader, scan_step=0.1, scan_start=0.4, scan_end=1.0, verbose=True):
    # The sparsities to scan
    sparsities = np.arange(start=scan_start, stop=scan_end, step=scan_step)
    # One list of accuracies per scanned weight tensor
    accuracies = []
    # All prunable weights: the conv weights and the classifier weight (dim > 1).
    named_conv_weights = [(name, param) for (name, param) in model.named_parameters() if param.dim() > 1]
    for i_layer, (name, param) in enumerate(named_conv_weights):
        param_clone = param.detach().clone()  # keep a copy so the weights can be restored
        accuracy = []
        # For each sparsity: prune this tensor only, evaluate, then restore it.
        for sparsity in tqdm(sparsities, desc=f'scanning {i_layer}/{len(named_conv_weights)} weight - {name}'):
            fine_grained_prune(param.detach(), sparsity=sparsity)
            acc = evaluate(model, dataloader, verbose=False)
            if verbose:
                print(f'\r sparsity={sparsity:.2f}: accuracy={acc:.2f}%', end='')
            # restore
            param.copy_(param_clone)
            accuracy.append(acc)
        if verbose:
            print(f'\r sparsity=[{",".join(["{:.2f}".format(x) for x in sparsities])}]: accuracy=[{", ".join(["{:.2f}%".format(x) for x in accuracy])}]', end='')
        accuracies.append(accuracy)
    return sparsities, accuracies

Please run the following cells to plot the sensitivity curves. It should take around 2 minutes to finish.

sparsities, accuracies = sensitivity_scan(

model, dataloader['test'], scan_step=0.1, scan_start=0.4, scan_end=1.0)

"""

Plot how the model's accuracy changes with the pruning sparsity of each layer.
sparsities: array of scanned sparsities.
accuracies: for each layer, the list of accuracies at those sparsities.
dense_model_accuracy: accuracy of the unpruned (dense) model.
"""
def plot_sensitivity_scan(sparsities, accuracies, dense_model_accuracy):
    # Reference line: the accuracy at which the error rate is 1.5x that of the dense model.
    lower_bound_accuracy = 100 - (100 - dense_model_accuracy) * 1.5
    # One subplot per scanned weight tensor, arranged in 3 rows; figsize sets the overall size.
    fig, axes = plt.subplots(3, int(math.ceil(len(accuracies) / 3)),figsize=(15,8))
    axes = axes.ravel()  # flatten so subplots can be indexed with one index
    plot_index = 0
    # For every parameter with dim > 1 (conv and classifier weights), draw accuracy vs. sparsity.
    for name, param in model.named_parameters():
        if param.dim() > 1:
            ax = axes[plot_index]
            curve = ax.plot(sparsities, accuracies[plot_index])
            line = ax.plot(sparsities, [lower_bound_accuracy] * len(sparsities))
            ax.set_xticks(np.arange(start=0.4, stop=1.0, step=0.1))
            ax.set_ylim(80, 95)
            ax.set_title(name)
            ax.set_xlabel('sparsity')
            ax.set_ylabel('top-1 accuracy')
            ax.legend([
                'accuracy after pruning',
                f'{lower_bound_accuracy / dense_model_accuracy * 100:.0f}% of dense model accuracy'
            ])
            ax.grid(axis='x')
            plot_index += 1
    fig.suptitle('Sensitivity Curves: Validation Accuracy vs. Pruning Sparsity')
    fig.tight_layout()              # keep labels and titles from overlapping
    fig.subplots_adjust(top=0.925)  # leave room for the title
    plt.show()

plot_sensitivity_scan(sparsities, accuracies, dense_model_accuracy)
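The reference line drawn by `plot_sensitivity_scan` is easy to work out by hand. A small sketch of the arithmetic, taking the 92.95% dense accuracy from the evaluation output earlier in these notes:

```python
dense_model_accuracy = 92.95  # dense accuracy reported earlier in these notes

# Allow the error rate to grow to 1.5x the dense model's error rate.
lower_bound_accuracy = 100 - (100 - dense_model_accuracy) * 1.5
legend = f'{lower_bound_accuracy / dense_model_accuracy * 100:.0f}% of dense model accuracy'
print(f'{lower_bound_accuracy:.3f}', '|', legend)
```

The dense error rate is 7.05%, so the line sits at 100 - 10.575 = 89.425% accuracy, which the legend reports as 96% of the dense model accuracy.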

[Figure: sensitivity curves, accuracy vs. pruning sparsity, one panel per layer]

#Parameters of each layer

In addition to accuracy, the number of each layer’s parameters also affects the decision on sparsity selection. Layers with more #parameters require larger sparsities.

Please run the following code cell to plot the distribution of #parameters in the whole model.

"""

Plot the number of parameters in each layer. This shows how the model's parameters are distributed across layers, and therefore which layers dominate the model size.
"""
def plot_num_parameters_distribution(model):
    num_parameters = dict()  # parameter name -> number of elements
    for name, param in model.named_parameters():
        # Keep only multi-dimensional parameters (weight matrices and conv kernels), skipping 1-D ones such as biases.
        if param.dim() > 1:
            num_parameters[name] = param.numel()
    fig = plt.figure(figsize=(8, 6))
    plt.grid(axis='y')  # horizontal grid lines make the bars easier to read
    # Bar chart: layer names on the x-axis, parameter counts on the y-axis.
    plt.bar(list(num_parameters.keys()), list(num_parameters.values()))
    plt.title('#Parameter Distribution')
    plt.ylabel('Number of Parameters')
    plt.xticks(rotation=60)  # rotate the labels so the layer names do not overlap
    plt.tight_layout()
    plt.show()

# Plot the distribution for our model.
plot_num_parameters_distribution(model)

[Figure: bar chart of the number of parameters in each layer]

Select Sparsity Based on Sensitivity Curves and #Parameters Distribution

Based on the sensitivity curves and the distribution of #parameters in the model, please select the sparsity for each layer.

Note that the overall compression ratio of pruned model mostly depends on the layers with larger #parameters, and different layers have different sensitivity to pruning (see Question 4).

Please make sure that after pruning, the sparse model is 25% of the size of the dense model, and validation accuracy is higher than 92.5 after finetuning.

Hint:

- The layer with more #parameters should have larger sparsity. (see Figure #Parameter Distribution)
- The layer that is sensitive to the pruning sparsity (i.e., the accuracy will drop quickly as sparsity becomes higher) should have smaller sparsity. (see Figure Sensitivity Curves)
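One way to turn the second hint into a first guess is to pick, for each layer, the largest scanned sparsity whose accuracy stays above a chosen floor. The sketch below is one possible heuristic, not the lab's prescribed method; `pick_sparsity` is a hypothetical helper and the curves are made-up numbers for illustration, not measurements from this model.

```python
def pick_sparsity(sparsities, accuracies, floor):
    """Largest scanned sparsity whose accuracy is still >= floor, or None if none qualifies."""
    best = None
    for s, acc in zip(sparsities, accuracies):
        if acc >= floor and (best is None or s > best):
            best = s
    return best

sparsities = [0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
# Hypothetical sensitivity curves, NOT measured values from this lab.
curves = {
    'sensitive_layer': [92.9, 92.8, 92.5, 91.0, 86.0, 60.0],
    'robust_layer':    [92.9, 92.9, 92.9, 92.8, 92.6, 91.5],
}
floor = 91.0  # hypothetical accuracy floor

chosen = {name: pick_sparsity(sparsities, accs, floor) for name, accs in curves.items()}
print(chosen)
```

With these made-up curves the sensitive layer is capped at 0.7 while the robust layer can go to 0.9. In practice the per-layer picks still have to be checked together, since pruning several layers at once loses more accuracy than any single-layer scan shows.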

recover_model()

sparsity_dict = {

##################### YOUR CODE STARTS HERE #####################

# please modify the sparsity value of each layer

# please DO NOT modify the key of sparsity_dict

'backbone.conv0.weight': 0.6,

'backbone.conv1.weight': 0.7,

'backbone.conv2.weight': 0.7,

'backbone.conv3.weight': 0.7,

'backbone.conv4.weight': 0.8,

'backbone.conv5.weight': 0.9,

'backbone.conv6.weight': 0.9,

'backbone.conv7.weight': 0.9,

'classifier.weight': 0.7

##################### YOUR CODE ENDS HERE #######################

}

Please run the following cell to prune the model according to your defined sparsity_dict, and print the information of sparse model.

"""

This cell applies fine-grained pruning to the model, then evaluates and visualizes the pruned model.
"""
# Creating a FineGrainedPruner prunes model in place according to sparsity_dict, which gives the fraction of each layer to prune.
pruner = FineGrainedPruner(model, sparsity_dict)

# Print the target sparsities
print(f'After pruning with sparsity dictionary')
for name, sparsity in sparsity_dict.items():
    print(f' {name}: {sparsity:.2f}')

# Measure and print the sparsity actually reached
print(f'The sparsity of each layer becomes')
for name, param in model.named_parameters():
    if name in sparsity_dict:
        print(f' {name}: {get_sparsity(param):.2f}')

# Measure and print the size of the pruned model
sparse_model_size = get_model_size(model, count_nonzero_only=True)
print(f"Sparse model has size={sparse_model_size / MiB:.2f} MiB = {sparse_model_size / dense_model_size * 100:.2f}% of dense model size")

# Evaluate the accuracy of the pruned model
sparse_model_accuracy = evaluate(model, dataloader['test'])
print(f"Sparse model has accuracy={sparse_model_accuracy:.2f}% before fintuning")

# Plot the distribution of the remaining nonzero weights to see how pruning changed it.
plot_weight_distribution(model, count_nonzero_only=True)

"""

Output

After pruning with sparsity dictionary

backbone.conv0.weight: 0.60

backbone.conv1.weight: 0.70

backbone.conv2.weight: 0.70

backbone.conv3.weight: 0.70

backbone.conv4.weight: 0.80

backbone.conv5.weight: 0.90

backbone.conv6.weight: 0.90

backbone.conv7.weight: 0.90

classifier.weight: 0.70

The sparsity of each layer becomes

backbone.conv0.weight: 0.40

backbone.conv1.weight: 0.30

backbone.conv2.weight: 0.30

backbone.conv3.weight: 0.30

backbone.conv4.weight: 0.20

backbone.conv5.weight: 0.10

backbone.conv6.weight: 0.10

backbone.conv7.weight: 0.10

classifier.weight: 0.30

Sparse model has size=30.50 MiB = 86.63% of dense model size

Sparse model has accuracy=92.36% before fintuning

"""

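[Figure: histograms of the nonzero weights in each layer after pruning]

Note that the realized sparsities in this output are exactly 1 minus the targets in sparsity_dict, and the model is still 86.63% of the dense size rather than the 25% the lab asks for. This is the signature of taking the threshold at k = num_elements - num_zeros instead of k = num_zeros in fine_grained_prune: the function then keeps the fraction of weights it was asked to remove. With k = num_zeros, as in the formula v_thr = kthvalue(Importance, #W · s), each realized sparsity equals its target.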
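The size ratio can be predicted from sparsity_dict and the tensor shapes alone. The sketch below is a back-of-the-envelope check, not lab code: `nonzeros_left` is a hypothetical helper, and it assumes that all BatchNorm parameters and the classifier bias (which are never pruned) are nonzero and that there are no ties at the threshold.

```python
# Number of elements in each prunable tensor of the VGG defined earlier.
numel = {
    'backbone.conv0.weight': 3 * 64 * 9,
    'backbone.conv1.weight': 64 * 128 * 9,
    'backbone.conv2.weight': 128 * 256 * 9,
    'backbone.conv3.weight': 256 * 256 * 9,
    'backbone.conv4.weight': 256 * 512 * 9,
    'backbone.conv5.weight': 512 * 512 * 9,
    'backbone.conv6.weight': 512 * 512 * 9,
    'backbone.conv7.weight': 512 * 512 * 9,
    'classifier.weight': 512 * 10,
}
sparsity_dict = {
    'backbone.conv0.weight': 0.6, 'backbone.conv1.weight': 0.7,
    'backbone.conv2.weight': 0.7, 'backbone.conv3.weight': 0.7,
    'backbone.conv4.weight': 0.8, 'backbone.conv5.weight': 0.9,
    'backbone.conv6.weight': 0.9, 'backbone.conv7.weight': 0.9,
    'classifier.weight': 0.7,
}
# Unpruned 1-D parameters: BatchNorm weight and bias per channel, plus the classifier bias.
unpruned = 2 * (64 + 128 + 256 + 256 + 512 * 4) + 10
dense_total = sum(numel.values()) + unpruned

def nonzeros_left(inverted=False):
    """Nonzero parameters after pruning; inverted=True keeps the fraction meant to be removed."""
    total = unpruned
    for name, n in numel.items():
        num_zeros = round(sparsity_dict[name] * n)
        total += num_zeros if inverted else n - num_zeros
    return total

as_specified = nonzeros_left() / dense_total * 100
as_inverted = nonzeros_left(inverted=True) / dense_total * 100
print(f"{as_specified:.2f}% vs {as_inverted:.2f}%")
```

Under these assumptions the dictionary corresponds to about 13.43% of the dense size when the sparsities are applied as specified, comfortably under the 25% target, while keeping the complementary fraction reproduces the 86.63% in the recorded output.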

Finetune the fine-grained pruned model

As we can see from the outputs of the previous cell, even though fine-grained pruning removes most of the model weights, the accuracy of the model also drops. Therefore, we have to finetune the sparse model to recover the accuracy.

Please run the following cell to finetune the sparse model. It should take around 3 minutes to finish.

"""

Finetune the sparse model produced by fine-grained pruning. Finetuning is an important step after pruning; it recovers, and sometimes improves, the model's accuracy.
"""
# Finetune for 5 epochs
num_finetune_epochs = 5
# SGD optimizer with learning rate, momentum, and weight decay
optimizer = torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.9, weight_decay=1e-4)
# Cosine annealing learning-rate scheduler. Note that train() calls scheduler.step()
# once per batch, so T_max here is counted in batches, not epochs.
scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, num_finetune_epochs)
# Cross-entropy loss, the usual choice for classification
criterion = nn.CrossEntropyLoss()

best_sparse_model_checkpoint = dict()  # state of the best model seen so far
best_accuracy = 0

print(f'Finetuning Fine-grained Pruned Sparse Model')
for epoch in range(num_finetune_epochs):
    # At the end of each train iteration, we have to apply the pruning mask
    # to keep the model sparse during the training
    train(model, dataloader['train'], criterion, optimizer, scheduler,
          callbacks=[lambda: pruner.apply(model)])
    # Evaluate on the test set
    accuracy = evaluate(model, dataloader['test'])
    is_best = accuracy > best_accuracy
    # Keep a copy of the model state whenever the accuracy improves.
    if is_best:
        best_sparse_model_checkpoint['state_dict'] = copy.deepcopy(model.state_dict())
        best_accuracy = accuracy
    # Print this epoch's accuracy and the best accuracy so far.
    print(f' Epoch {epoch+1} Accuracy {accuracy:.2f}% / Best Accuracy: {best_accuracy:.2f}%')
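One detail of this cell is worth a closer look: `train` calls `scheduler.step()` after every batch, while `CosineAnnealingLR` is given `T_max = num_finetune_epochs = 5`. The sketch below evaluates the closed-form cosine annealing schedule in pure Python (with `eta_min = 0`) to show what `T_max = 5` means when steps are batches. It is an approximation for intuition: `cosine_lr` is a hypothetical helper, and PyTorch's scheduler may differ in detail once the step count passes `T_max`.

```python
import math

def cosine_lr(step, base_lr=0.01, t_max=5, eta_min=0.0):
    """Closed-form cosine annealing: eta_min + (base_lr - eta_min) * (1 + cos(pi * step / t_max)) / 2."""
    return eta_min + (base_lr - eta_min) * (1 + math.cos(math.pi * step / t_max)) / 2

for step in range(11):
    print(step, f"{cosine_lr(step):.5f}")
```

Under this formula the learning rate reaches its minimum after only 5 batches and is back at 0.01 after 10, whereas one epoch over CIFAR10's 50,000 training images at batch size 512 is already 98 batches. If a single decay over the whole finetuning run is what is wanted, one option would be to set `T_max` to the total number of batches, `num_finetune_epochs * len(dataloader['train'])`.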

Run the following cell to see the information of best finetuned sparse model.

# load the best sparse model checkpoint to evaluate the final performance

# (i.e. reload the best finetuned sparse model before measuring its size and accuracy)

model.load_state_dict(best_sparse_model_checkpoint['state_dict'])

sparse_model_size = get_model_size(model, count_nonzero_only=True)

print(f"Sparse model has size={sparse_model_size / MiB:.2f} MiB = {sparse_model_size / dense_model_size * 100:.2f}% of dense model size")

sparse_model_accuracy = evaluate(model, dataloader['test'])

print(f"Sparse model has accuracy={sparse_model_accuracy:.2f}% after fintuning")

"""

Output

Sparse model has size=30.50 MiB = 86.63% of dense model size

Sparse model has accuracy=93.05% after fintuning

"""

Channel Pruning

In this section, we will implement the channel pruning. Channel pruning removes an entire channel, so that it can achieve inference speed up on existing hardware like GPUs. Similarly, we remove the channels whose weights are of smaller magnitudes (measured by Frobenius norm).

# firstly, let's restore the model weights to the original dense version

# and check the validation accuracy

recover_model()

dense_model_accuracy = evaluate(model, dataloader['test'])

print(f"dense model has accuracy={dense_model_accuracy:.2f}%")

"""

Output:

dense model has accuracy=92.95%

"""

Remove Channel Weights

Unlike fine-grained pruning, we can remove the weights entirely from the tensor in channel pruning. That is to say, the number of output channels is reduced:

> $\#\mathrm{out\_channels}_{\mathrm{new}} = \#\mathrm{out\_channels}_{\mathrm{origin}} \cdot (1 - \mathrm{sparsity})$

The weight tensor $W$ is still dense after channel pruning. Thus, we will refer to sparsity as prune ratio.

Like fine-grained pruning, we can use different pruning rates for different layers. However, we use a uniform pruning rate for all the layers for now. We are targeting a 2x reduction in computation, which corresponds to a uniform pruning rate of roughly 30% (think about why).
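One way to see where the 30% comes from: the MACs of a conv layer scale with in_channels × out_channels, so keeping a fraction (1 − r) of the channels on both sides keeps about (1 − r)² of the MACs, and 0.7² = 0.49. The sketch below checks this on a single layer; `conv_macs` and the layer sizes are assumptions for illustration, and the first and last conv layers deviate slightly because only one of their two sides shrinks.

```python
def conv_macs(c_in, c_out, kernel, h_out, w_out):
    """Multiply-accumulate count of one conv layer (bias ignored)."""
    return c_in * c_out * kernel * kernel * h_out * w_out

prune_ratio = 0.3
keep = 1.0 - prune_ratio

# hypothetical middle layer: 128 -> 256 channels, 3x3 kernel, 16x16 output
c_in, c_out = 128, 256
dense = conv_macs(c_in, c_out, 3, 16, 16)
pruned = conv_macs(int(round(c_in * keep)), int(round(c_out * keep)), 3, 16, 16)

macs_fraction = pruned / dense
print(f"remaining MACs fraction: {macs_fraction:.3f} (keep^2 = {keep ** 2:.3f})")
```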

Feel free to try out different pruning ratios per layer at the end of this section. You can pass in a list of ratios to the channel_prune function.

Please complete the following functions for channel pruning.

Here we naively prune all output channels other than the first $\#\mathrm{out\_channels}_{\mathrm{new}}$ channels.

def get_num_channels_to_keep(channels: int, prune_ratio: float) -> int:
    """A function to calculate the number of channels to PRESERVE after pruning
    Note that preserve_rate = 1. - prune_ratio
    """
    ##################### YOUR CODE STARTS HERE #####################
    preserve_ratio = 1. - prune_ratio
    return int(round(channels * preserve_ratio))
    ##################### YOUR CODE ENDS HERE #####################
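As a quick check of the rounding, the sketch below evaluates the same formula for a few channel widths at a 30% prune ratio. The widths are assumptions chosen for illustration. Note that Python's built-in `round` rounds exact halves to the nearest even integer, so 2.5 becomes 2 while 3.5 becomes 4.

```python
def get_num_channels_to_keep(channels: int, prune_ratio: float) -> int:
    """Same formula as above, repeated so this snippet runs on its own."""
    return int(round(channels * (1.0 - prune_ratio)))

# channels kept at a 30% prune ratio for some typical widths (assumed values)
kept = {c: get_num_channels_to_keep(c, 0.3) for c in (64, 128, 256, 512)}
print(kept)

# exact halves follow round-half-to-even
half_cases = (get_num_channels_to_keep(5, 0.5), get_num_channels_to_keep(7, 0.5))
print(half_cases)
```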

@torch.no_grad()
def channel_prune(model: nn.Module,
                  prune_ratio: Union[List, float]) -> nn.Module:
    """Apply channel pruning to each of the conv layer in the backbone
    Note that for prune_ratio, we can either provide a floating-point number,
    indicating that we use a uniform pruning rate for all layers, or a list of
    numbers to indicate per-layer pruning rate.
    """
    # sanity check of provided prune_ratio: it must be a float or a list
    assert isinstance(prune_ratio, (float, list))
    # count the conv layers in the backbone
    n_conv = len([m for m in model.backbone if isinstance(m, nn.Conv2d)])
    # note that for the ratios, it affects the previous conv output and next
    # conv input, i.e., conv0 - ratio0 - conv1 - ratio1-...
    # Each ratio belongs to a pair of adjacent convs (the output channels of
    # conv0 are the input channels of conv1), so a list must hold exactly
    # n_conv - 1 entries. A single float is expanded to a list of that length.
    if isinstance(prune_ratio, list):
        assert len(prune_ratio) == n_conv - 1
    else:  # convert float to list
        prune_ratio = [prune_ratio] * (n_conv - 1)

    # we prune the convs in the backbone with a uniform ratio
    model = copy.deepcopy(model)  # prevent overwrite
    # we only apply pruning to the backbone features
    all_convs = [m for m in model.backbone if isinstance(m, nn.Conv2d)]
    all_bns = [m for m in model.backbone if isinstance(m, nn.BatchNorm2d)]
    # apply pruning. we naively keep the first k channels
    assert len(all_convs) == len(all_bns)
    # walk over every pair of adjacent convs (prev_conv, next_conv) together
    # with the BN layer that follows prev_conv; i_ratio indexes the pair and
    # p_ratio is its prune ratio
    for i_ratio, p_ratio in enumerate(prune_ratio):
        prev_conv = all_convs[i_ratio]
        prev_bn = all_bns[i_ratio]
        next_conv = all_convs[i_ratio + 1]
        original_channels = prev_conv.out_channels  # same as next_conv.in_channels
        # number of output channels of prev_conv to keep
        n_keep = get_num_channels_to_keep(original_channels, p_ratio)

        # prune the output of the previous conv and bn
        prev_conv.weight = nn.Parameter(prev_conv.weight.detach()[:n_keep])
        prev_bn.weight = nn.Parameter(prev_bn.weight.detach()[:n_keep])
        prev_bn.bias = nn.Parameter(prev_bn.bias.detach()[:n_keep])
        prev_bn.running_mean = prev_bn.running_mean.detach()[:n_keep]
        prev_bn.running_var = prev_bn.running_var.detach()[:n_keep]

        # prune the input of the next conv (hint: just one line of code)
        ##################### YOUR CODE STARTS HERE #####################
        # A conv weight has four dimensions: [out_channels (number of filters),
        # in_channels (feature maps from the previous layer), kernel_h, kernel_w].
        # Slice dim 1 so that next_conv's input channels match the pruned
        # output channels of prev_conv.
        next_conv.weight = nn.Parameter(next_conv.weight.detach()[:, :n_keep, :, :])
        ##################### YOUR CODE ENDS HERE #####################

    return model
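To experiment with per-layer ratios, it helps to preview how many channels each conv would keep before pruning the real model. The sketch below mirrors the ratio handling of `channel_prune` on plain integers; `expand_ratios`, `kept_out_channels`, and the channel widths are hypothetical and only assume a VGG-like backbone with eight conv layers.

```python
from typing import List, Union

def expand_ratios(prune_ratio: Union[List[float], float], n_conv: int) -> List[float]:
    """Turn a float into one ratio per adjacent conv pair (n_conv - 1 entries)."""
    if isinstance(prune_ratio, list):
        assert len(prune_ratio) == n_conv - 1
        return prune_ratio
    return [prune_ratio] * (n_conv - 1)

def kept_out_channels(widths: List[int], prune_ratio: Union[List[float], float]) -> List[int]:
    """Output channels kept per conv; the last conv is never pruned."""
    ratios = expand_ratios(prune_ratio, len(widths))
    # zip stops after n_conv - 1 pairs, so the last width is appended unchanged
    kept = [int(round(c * (1.0 - r))) for c, r in zip(widths, ratios)]
    return kept + [widths[-1]]

# assumed output widths of the backbone convs (not taken from the lab code)
widths = [64, 128, 256, 256, 512, 512, 512, 512]

uniform = kept_out_channels(widths, 0.3)
per_layer = kept_out_channels(widths, [0.1, 0.2, 0.3, 0.3, 0.4, 0.4, 0.5])
print(uniform)
print(per_layer)
```

A list such as the second one prunes early layers lightly and later layers more aggressively, which is one common starting point when tuning per-layer ratios.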

Run the following cell to perform a sanity check to make sure the implementation is correct.

dummy_input = torch.randn(1, 3, 32, 32).cuda()

pruned_model = channel_prune(model, prune_ratio=0.3)

pruned_macs = get_model_macs(pruned_model, dummy_input)

assert pruned_macs == 305388064

print('* Check passed. Right MACs for the pruned model.')

Now let’s evaluate the performance of the model after uniform channel pruning with 30% pruning rate.

As you may see, directly removing 30% of the channels leads to low accuracy.

pruned_model_accuracy = evaluate(pruned_model, dataloader['test'])

print(f"pruned model has accuracy={pruned_model_accuracy:.2f}%")

"""

Output:

pruned model has accuracy=28.15%

"""

Ranking Channels by Importance

As you can see, keeping only the first 70% of the channels in every layer (that is, dropping the last 30% regardless of their values) leads to a significant accuracy reduction. One way to remedy this is to remove the less important channels instead. A popular importance criterion is the Frobenius norm of the weights corresponding to each input channel:

> $\mathrm{importance}_{i} = \|W_{i}\|_2, \;\; i = 0, 1, 2,\cdots, \#\mathrm{in\_channels}-1$

We can sort the channel weights from more important to less important, and then keep the first $k$ channels for each layer.

Please complete the following functions for sorting the weight tensor based on the Frobenius norm.

Hint:

- To calculate the Frobenius norm of a tensor, PyTorch provides the torch.norm API.
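To make the importance formula concrete, the sketch below computes it by hand on nested Python lists: for input channel i it takes the square root of the sum of squares over every output filter and every kernel position, which is the quantity the L2 norm of the slice `weight[:, i]` represents. The function name and the tiny weight values are made up for illustration.

```python
import math

def input_channel_importance(weight):
    """weight: nested lists shaped [out_channels][in_channels][kh][kw]."""
    n_in = len(weight[0])
    importances = []
    for i in range(n_in):
        sq_sum = 0.0
        for out_filter in weight:          # every output channel
            for row in out_filter[i]:      # every kernel row of input channel i
                for v in row:
                    sq_sum += v * v
        importances.append(math.sqrt(sq_sum))
    return importances

# 2 output channels, 3 input channels, 1x1 kernels (made-up values)
weight = [
    [[[3.0]], [[0.0]], [[1.0]]],
    [[[4.0]], [[0.5]], [[1.0]]],
]
importance = input_channel_importance(weight)
# channel indices ordered from most to least important
order = sorted(range(len(importance)), key=lambda i: importance[i], reverse=True)
print(importance, order)
```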

"""

Compute the importance of every input channel of a conv layer. Channel pruning uses these scores to decide which channels matter and which can be pruned away; the sorting itself happens in the caller.

weight: the weight tensor of a conv layer.

"""

# function to sort the channels from important to non-important
def get_input_channel_importance(weight):
    # a conv weight is laid out as [out_channels, in_channels, height, width],
    # so weight.shape[1] is the number of input channels
    in_channels = weight.shape[1]
    importances = []  # one importance score per input channel
    # compute the importance for each input channel
    for i_c in range(in_channels):
        channel_weight = weight.detach()[:, i_c]
        ##################### YOUR CODE STARTS HERE #####################
        importance = torch.norm(channel_weight, p=2)  # L2 norm as importance
        ##################### YOUR CODE ENDS HERE #####################
        importances.append(importance.view(1))
    # concatenate the per-channel scores into a single 1-D tensor
    return torch.cat(importances)

"""

Reorder the channels of every conv layer in the backbone by importance. Sorting alone does not change what the network computes; it only moves the most important channels to the front, so that channel_prune, which keeps the first k channels, retains them.

"""

@torch.no_grad()
def apply_channel_sorting(model):
    model = copy.deepcopy(model)  # do not modify the original model
    # fetch all the conv and bn layers from the backbone
    all_convs = [m for m in model.backbone if isinstance(m, nn.Conv2d)]
    all_bns = [m for m in model.backbone if isinstance(m, nn.BatchNorm2d)]
    # iterate through conv layers
    for i_conv in range(len(all_convs) - 1):
        # each channel sorting index, we need to apply it to:
        # - the output dimension of the previous conv
        # - the previous BN layer
        # - the input dimension of the next conv (we compute importance here)
        prev_conv = all_convs[i_conv]
        prev_bn = all_bns[i_conv]
        next_conv = all_convs[i_conv + 1]
        # note that we always compute the importance according to input channels
        importance = get_input_channel_importance(next_conv.weight)
        # sorting from large to small
        sort_idx = torch.argsort(importance, descending=True)

        # apply to previous conv and its following bn
        prev_conv.weight.copy_(torch.index_select(prev_conv.weight.detach(), 0, sort_idx))
        for tensor_name in ['weight', 'bias', 'running_mean', 'running_var']:
            tensor_to_apply = getattr(prev_bn, tensor_name)
            tensor_to_apply.copy_(torch.index_select(tensor_to_apply.detach(), 0, sort_idx))

        # apply to the next conv input (hint: one line of code)
        ##################### YOUR CODE STARTS HERE #####################
        next_conv.weight.copy_(torch.index_select(next_conv.weight.detach(), 1, sort_idx))
        ##################### YOUR CODE ENDS HERE #####################

    return model

Now run the following cell to sanity check if the results are correct.

print('Before sorting...')

dense_model_accuracy = evaluate(model, dataloader['test'])

print(f"dense model has accuracy={dense_model_accuracy:.2f}%")

print('After sorting...')

sorted_model = apply_channel_sorting(model)

sorted_model_accuracy = evaluate(sorted_model, dataloader['test'])

print(f"sorted model has accuracy={sorted_model_accuracy:.2f}%")

# make sure accuracy does not change after sorting, since it is

# equivalent transform

assert abs(sorted_model_accuracy - dense_model_accuracy) < 0.1

print('* Check passed.')

"""

Output:

Before sorting...

dense model has accuracy=92.95%

After sorting...

sorted model has accuracy=92.95%

* Check passed.

"""

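The accuracy is unchanged because sorting is a pure relabeling of hidden channels: if the outputs of one layer and the inputs of the next layer are permuted with the same index, and the per-channel operations in between are permuted with them, every output is the same sum in a different order. The sketch below shows this on a toy two-layer network with plain lists. The matrices stand in for 1x1 convs, ReLU stands in for the per-channel operations, and all names and values are hypothetical.

```python
def matvec(W, x):
    """Apply a weight matrix (a list of rows) to a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def relu(x):
    return [max(0.0, v) for v in x]

def forward(W1, W2, x):
    return matvec(W2, relu(matvec(W1, x)))

W1 = [[1.0, -2.0], [0.5, 1.0], [-1.0, 3.0]]   # 3 hidden channels, 2 inputs
W2 = [[2.0, 0.1, -1.0], [0.0, 4.0, 0.5]]      # 2 outputs, 3 hidden channels
x = [1.0, 2.0]

perm = [2, 0, 1]                                      # any reordering of the hidden channels
W1_sorted = [W1[i] for i in perm]                     # permute the output dim of layer 1
W2_sorted = [[row[i] for i in perm] for row in W2]    # permute the input dim of layer 2

out_original = forward(W1, W2, x)
out_sorted = forward(W1_sorted, W2_sorted, x)
print(out_original, out_sorted)
```

In `apply_channel_sorting` the same index is also applied to the BatchNorm weight, bias, and running statistics, since those are per-channel quantities as well.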
Finally, we compare the pruned models’ accuracy with and without sorting.

channel_pruning_ratio = 0.3 # pruned-out ratio

print(" * Without sorting...")

pruned_model = channel_prune(model, channel_pruning_ratio)

pruned_model_accuracy = evaluate(pruned_model, dataloader['test'])

print(f"pruned model has accuracy={pruned_model_accuracy:.2f}%")

print(" * With sorting...")

sorted_model = apply_channel_sorting(model)

pruned_model = channel_prune(sorted_model, channel_pruning_ratio)

pruned_model_accuracy = evaluate(pruned_model, dataloader['test'])

print(f"pruned model has accuracy={pruned_model_accuracy:.2f}%")

"""

Output:

* Without sorting...

pruned model has accuracy=28.15%

* With sorting...

pruned model has accuracy=36.81%

"""

As you can see, channel sorting improves the pruned model’s accuracy slightly (from 28.15% to 36.81%), but the degradation is still large, which is quite common for channel pruning. Fortunately, we can fine-tune the model to recover the accuracy.

"""

This cell is a standard fine-tuning loop that recovers the accuracy of the pruned model.

"""

num_finetune_epochs = 5
optimizer = torch.optim.SGD(pruned_model.parameters(), lr=0.01, momentum=0.9, weight_decay=1e-4)
scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, num_finetune_epochs)
criterion = nn.CrossEntropyLoss()

best_accuracy = 0
for epoch in range(num_finetune_epochs):
    # no mask callback is needed here: the pruned channels were removed from
    # the tensors, so there is nothing left to re-zero
    train(pruned_model, dataloader['train'], criterion, optimizer, scheduler)
    accuracy = evaluate(pruned_model, dataloader['test'])
    is_best = accuracy > best_accuracy
    if is_best:
        best_accuracy = accuracy
    print(f'Epoch {epoch+1} Accuracy {accuracy:.2f}% / Best Accuracy: {best_accuracy:.2f}%')

"""

Output:

Epoch 1 Accuracy 91.54% / Best Accuracy: 91.54%

Epoch 2 Accuracy 91.67% / Best Accuracy: 91.67%

Epoch 3 Accuracy 92.04% / Best Accuracy: 92.04%

Epoch 4 Accuracy 92.20% / Best Accuracy: 92.20%

Epoch 5 Accuracy 92.30% / Best Accuracy: 92.30%

"""

Measure acceleration from pruning

After fine-tuning, the model recovers almost all of its accuracy (92.30% versus 92.95% for the dense model). You may have already learned that it is usually harder to recover accuracy after channel pruning than after fine-grained pruning. However, channel pruning directly yields a smaller model and less computation without a specialized model format, and it can also run faster on GPUs. Now we compare the model size, computation, and latency of the pruned model.

"""

This cell measures and compares the original and the pruned model on three axes: latency, computation (MACs, multiply-accumulate operations), and model size (number of parameters). These numbers show what the pruning actually gained, which matters most when deploying to edge devices.

"""

# helper function to measure the latency of a regular PyTorch model.
# Unlike fine-grained pruning, channel pruning
# can directly lead to model size reduction and speedup.
@torch.no_grad()
def measure_latency(model, dummy_input, n_warmup=20, n_test=100):
    model.eval()
    # warmup
    for _ in range(n_warmup):
        _ = model(dummy_input)
    # real test
    t1 = time.time()
    for _ in range(n_test):
        _ = model(dummy_input)
    t2 = time.time()
    return (t2 - t1) / n_test  # average latency
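The warmup-then-average pattern of `measure_latency` works for any callable. The sketch below is a generic variant, not the lab's helper: it swaps in `time.perf_counter`, a monotonic clock intended for timing short intervals, and `measure_latency_generic` plus the dummy workload are hypothetical. The lab times the models on CPU; when timing on a GPU instead, kernels run asynchronously, so one would also need to call `torch.cuda.synchronize()` before reading the clock.

```python
import time

def measure_latency_generic(fn, n_warmup=5, n_test=20):
    """Average wall-clock seconds per call of fn, after a few warmup calls."""
    for _ in range(n_warmup):       # warmup: let caches and lazy init settle
        fn()
    start = time.perf_counter()
    for _ in range(n_test):
        fn()
    return (time.perf_counter() - start) / n_test

# hypothetical workload standing in for model(dummy_input)
latency = measure_latency_generic(lambda: sum(i * i for i in range(10_000)))
print(f"{latency * 1000:.3f} ms per call")
```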

table_template = "{:<15} {:<15} {:<15} {:<15}"

print (table_template.format('', 'Original','Pruned','Reduction Ratio'))

# 1. measure the latency of the original model and the pruned model on CPU

# which simulates inference on an edge device

dummy_input = torch.randn(1, 3, 32, 32).to('cpu')

pruned_model = pruned_model.to('cpu')

model = model.to('cpu')

pruned_latency = measure_latency(pruned_model, dummy_input)

original_latency = measure_latency(model, dummy_input)

print(table_template.format('Latency (ms)',

round(original_latency * 1000, 1),

round(pruned_latency * 1000, 1),

round(original_latency / pruned_latency, 1)))

# 2. measure the computation (MACs)

original_macs = get_model_macs(model, dummy_input)

pruned_macs = get_model_macs(pruned_model, dummy_input)

print(table_template.format('MACs (M)',

round(original_macs / 1e6),

round(pruned_macs / 1e6),

round(original_macs / pruned_macs, 1)))

# 3. measure the model size (params)

original_param = get_num_parameters(model)

pruned_param = get_num_parameters(pruned_model)

print(table_template.format('Param (M)',

round(original_param / 1e6, 2),

round(pruned_param / 1e6, 2),

round(original_param / pruned_param, 1)))

# put model back to cuda

pruned_model = pruned_model.to('cuda')

model = model.to('cuda')

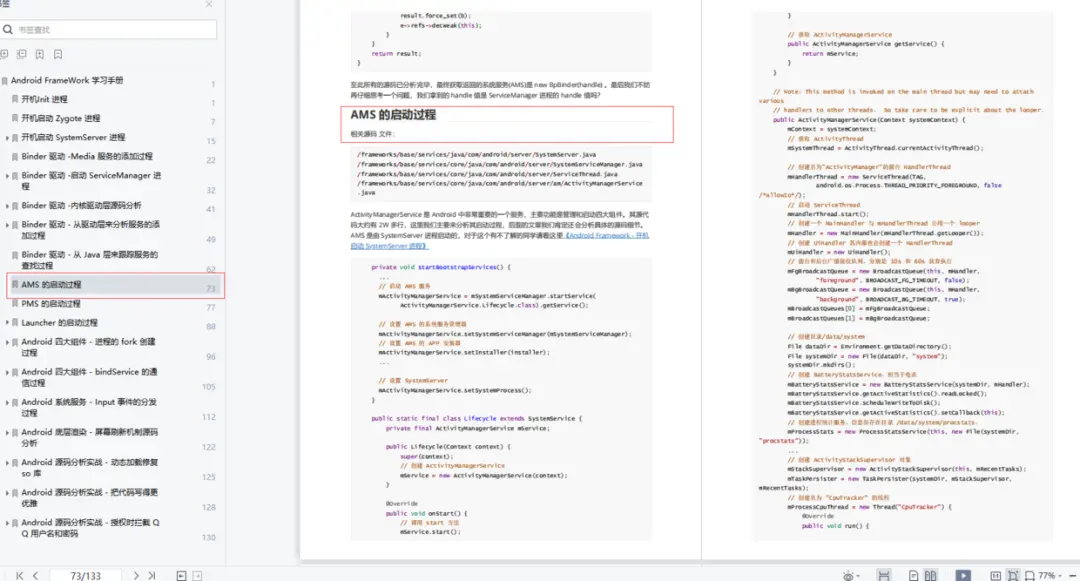

Output:

That is roughly everything in Lab 1.