文章目录

- 一、导入相关包

- 二、加载数据集

- 三、数据预处理

- 四、创建模型

- 五、创建评估函数

- 六、配置训练参数

- 七、创建训练器

- 八、模型训练

- 九、模型预测

一、导入相关包

- DataCollatorForTokenClassification 用于 Token 级别的分类任务

import evaluate

from datasets import load_dataset

from transformers import AutoTokenizer, AutoModelForTokenClassification,

TrainingArguments, Trainer, DataCollatorForTokenClassification

二、加载数据集

# 如果可以联网,直接使用load_dataset进行加载,cache_dir将数据缓冲到该位置

ner_datasets = load_dataset("peoples_daily_ner", cache_dir="./data")

# 如果无法联网,则使用下面的方式加载数据集

# from datasets import DatasetDict

# ner_datasets = DatasetDict.load_from_disk("ner_data")

ner_datasets

'''

DatasetDict({

train: Dataset({

features: ['id', 'tokens', 'ner_tags'],

num_rows: 20865

})

validation: Dataset({

features: ['id', 'tokens', 'ner_tags'],

num_rows: 2319

})

test: Dataset({

features: ['id', 'tokens', 'ner_tags'],

num_rows: 4637

})

})

'''

- 查看一条数据

ner_datasets["train"][0]

'''

{'id': '0',

'tokens': ['海',

'钓',

'比',

'赛',

'地',

'点',

'在',

'厦',

'门',

'与',

'金',

'门',

'之',

'间',

'的',

'海',

'域',

'。'],

'ner_tags': [0, 0, 0, 0, 0, 0, 0, 5, 6, 0, 5, 6, 0, 0, 0, 0, 0, 0]}

'''

- 查看特征信息

ner_datasets["train"].features

'''

{'id': Value(dtype='string', id=None),

'tokens': Sequence(feature=Value(dtype='string', id=None), length=-1, id=None),

'ner_tags': Sequence(feature=ClassLabel(names=['O', 'B-PER', 'I-PER', 'B-ORG', 'I-ORG', 'B-LOC', 'I-LOC'], id=None), length=-1, id=None)}

'''

- 获取标签信息

label_list = ner_datasets["train"].features["ner_tags"].feature.names

label_list

'''

['O', 'B-PER', 'I-PER', 'B-ORG', 'I-ORG', 'B-LOC', 'I-LOC']

'''

三、数据预处理

tokenizer = AutoTokenizer.from_pretrained("hfl/chinese-macbert-base")

tokenizer(ner_datasets["train"][0]["tokens"], is_split_into_words=True)

# 对于已经做好tokenize的数据,要指定is_split_into_words参数为True

'''

{'input_ids': [101, 3862, 7157, 3683, 6612, 1765, 4157, 1762, 1336, 7305, 680, 7032, 7305, 722, 7313, 4638, 3862, 1818, 511, 102], 'token_type_ids': [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0], 'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]}

'''

否则

tokenizer(ner_datasets["train"][0]["tokens"], is_split_into_words=False)

'''

{'input_ids': [[101, 3862, 102], [101, 7157, 102], [101, 3683, 102], [101, 6612, 102], [101, 1765, 102], [101, 4157, 102], [101, 1762, 102], [101, 1336, 102], [101, 7305, 102], [101, 680, 102], [101, 7032, 102], [101, 7305, 102], [101, 722, 102], [101, 7313, 102], [101, 4638, 102], [101, 3862, 102], [101, 1818, 102], [101, 511, 102]], 'token_type_ids': [[0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0]], 'attention_mask': [[1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 1]]}

'''

- attention_mask取值为1指的是不需要做mask

- 字词分词的时候(例如下面的英文数据)会存在一个 token 对应若干 id,所以不能简单的一一对应

res = tokenizer("interesting word")

res

'''

{'input_ids': [101, 10673, 12865, 12921, 8181, 8681, 102], 'token_type_ids': [0, 0, 0, 0, 0, 0, 0], 'attention_mask': [1, 1, 1, 1, 1, 1, 1]}

'''

- 解决方案:word_ids(),实现标签映射

res.word_ids()

'''

[None, 0, 0, 0, 0, 1, None] # None代表的是特殊token

'''

- 实现label和token的一一对应

# 借助word_ids 实现标签映射

def process_function(examples):

tokenized_exmaples = tokenizer(examples["tokens"], max_length=128, truncation=True, is_split_into_words=True)

# 实现label和token的一一对应

labels = []

for i, label in enumerate(examples["ner_tags"]):

word_ids = tokenized_exmaples.word_ids(batch_index=i)

label_ids = []

for word_id in word_ids:

if word_id is None:

label_ids.append(-100) # -100 softmax后会置为0

else:

label_ids.append(label[word_id])

labels.append(label_ids)

tokenized_exmaples["labels"] = labels

return tokenized_exmaples

tokenized_datasets = ner_datasets.map(process_function, batched=True)

tokenized_datasets

'''

DatasetDict({

train: Dataset({

features: ['id', 'tokens', 'ner_tags', 'input_ids', 'token_type_ids', 'attention_mask', 'labels'],

num_rows: 20865

})

validation: Dataset({

features: ['id', 'tokens', 'ner_tags', 'input_ids', 'token_type_ids', 'attention_mask', 'labels'],

num_rows: 2319

})

test: Dataset({

features: ['id', 'tokens', 'ner_tags', 'input_ids', 'token_type_ids', 'attention_mask', 'labels'],

num_rows: 4637

})

})

'''

print(tokenized_datasets["train"][0])

'''

{'id': '0',

'tokens': ['海', '钓', '比', '赛', '地', '点', '在', '厦', '门', '与', '金', '门', '之', '间', '的', '海', '域', '。'],

'ner_tags': [0, 0, 0, 0, 0, 0, 0, 5, 6, 0, 5, 6, 0, 0, 0, 0, 0, 0], 'input_ids': [101, 3862, 7157, 3683, 6612, 1765, 4157, 1762, 1336, 7305, 680, 7032, 7305, 722, 7313, 4638, 3862, 1818, 511, 102],

'token_type_ids': [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1],

'labels': [-100, 0, 0, 0, 0, 0, 0, 0, 5, 6, 0, 5, 6, 0, 0, 0, 0, 0, 0, -100]}

'''

四、创建模型

- 对于所有的非二分类任务,切记要指定num_labels,否则就会device错误

# 对于所有的非二分类任务,切记要指定num_labels,否则就会device错误

model = AutoModelForTokenClassification.from_pretrained("hfl/chinese-macbert-base",

num_labels=len(label_list))

model.config.num_labels

'''

7

'''

五、创建评估函数

# seqeval = evaluate.load("seqeval_metric.py") # 本地的加载方式

!pip install seqeval

seqeval = evaluate.load("seqeval")

seqeval

'''

Args:

predictions: List of List of predicted labels (Estimated targets as returned by a tagger)

references: List of List of reference labels (Ground truth (correct) target values)

suffix: True if the IOB prefix is after type, False otherwise. default: False

scheme: Specify target tagging scheme. Should be one of ["IOB1", "IOB2", "IOE1", "IOE2", "IOBES", "BILOU"].

default: None

mode: Whether to count correct entity labels with incorrect I/B tags as true positives or not.

If you want to only count exact matches, pass mode="strict". default: None.

sample_weight: Array-like of shape (n_samples,), weights for individual samples. default: None

zero_division: Which value to substitute as a metric value when encountering zero division. Should be on of 0, 1,

"warn". "warn" acts as 0, but the warning is raised.

Returns:

'scores': dict. Summary of the scores for overall and per type

Overall:

'accuracy': accuracy,

'precision': precision,

'recall': recall,

'f1': F1 score, also known as balanced F-score or F-measure,

Per type:

'precision': precision,

'recall': recall,

'f1': F1 score, also known as balanced F-score or F-measure

Examples:

>>> predictions = [['O', 'O', 'B-MISC', 'I-MISC', 'I-MISC', 'I-MISC', 'O'], ['B-PER', 'I-PER', 'O']]

>>> references = [['O', 'O', 'O', 'B-MISC', 'I-MISC', 'I-MISC', 'O'], ['B-PER', 'I-PER', 'O']]

>>> seqeval = evaluate.load("seqeval")

>>> results = seqeval.compute(predictions=predictions, references=references)

>>> print(list(results.keys()))

['MISC', 'PER', 'overall_precision', 'overall_recall', 'overall_f1', 'overall_accuracy']

>>> print(results["overall_f1"])

0.5

>>> print(results["PER"]["f1"])

1.0

""", stored examples: 0)

'''

import numpy as np

def eval_metric(pred):

# 返回的predictions只是logits

predictions, labels = pred

predictions = np.argmax(predictions, axis=-1)

# 将id转换为原始的字符串类型的标签

true_predictions = [

[label_list[p] for p, l in zip(prediction, label) if l != -100] # simple

for prediction, label in zip(predictions, labels) # batch

]

true_labels = [

[label_list[l] for p, l in zip(prediction, label) if l != -100] # simple

for prediction, label in zip(predictions, labels) # batch

]

result = seqeval.compute(predictions=true_predictions, references=true_labels, mode="strict", scheme="IOB2")

return {

"f1": result["overall_f1"]

}

六、配置训练参数

huggingface transformers使用指南之二——方便的trainer

详解Hugging Face Transformers的TrainingArguments_若石之上的博客-CSDN博客

LLM大模型之Trainer以及训练参数

!pip install accelerate # Using the `Trainer` with `PyTorch` requires `accelerate>=0.20.1`

args = TrainingArguments(

output_dir="models_for_ner", # 断点和运行日志记录保存文件夹

per_device_train_batch_size=64,

per_device_eval_batch_size=128,

evaluation_strategy="epoch", # 评价策略,需要和保存策略保持一致

save_strategy="epoch",

metric_for_best_model="f1", # 选取最好模型的指标,这里是评价函数返回的字典的key f1

load_best_model_at_end=True, # 训练完后加载最好的模型

logging_steps=50, # 日志打印步数

num_train_epochs=3

)

# 如果使用evaluation_strategy="steps",

# 则需要指定eval_steps参数,否则eval_steps=logging_steps

args = TrainingArguments(

output_dir="models_for_ner", # 断点和运行记录保存文件夹

per_device_train_batch_size=64,

per_device_eval_batch_size=128,

evaluation_strategy="steps", # 评价策略,需要和保存策略保持一致

save_strategy="steps",

metric_for_best_model="f1", # 选取最好模型的指标,这里是评价函数返回的字典的key f1

load_best_model_at_end=True, # 训练完后加载最好的模型

logging_steps=50, # 日志打印步数

num_train_epochs=1

)

- 采用第二种参数:

七、创建训练器

- 如果只有训练集还想实现在训练的时候评估的效果,则只需要将eval_dataset=tokenized_datasets[“train”] 即可

trainer = Trainer(

model=model,

args=args,

train_dataset=tokenized_datasets["train"],

eval_dataset=tokenized_datasets["validation"],

compute_metrics=eval_metric,

data_collator=DataCollatorForTokenClassification(tokenizer=tokenizer)

)

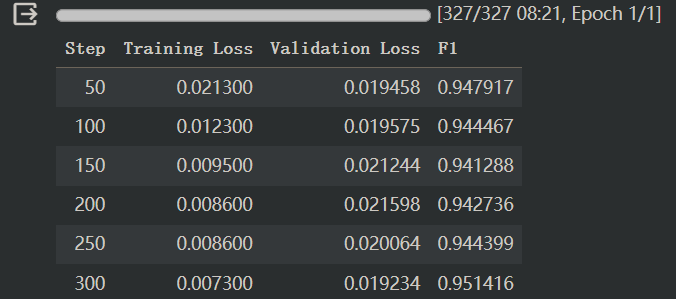

八、模型训练

trainer.train()

- 模型评估

trainer.evaluate() # 默认使用trainer中指定的eval_dataset

# 也可以更换其他数据集

trainer.evaluate(eval_dataset=tokenized_datasets["test"])

九、模型预测

res = trainer.predict(tokenized_datasets["test"])

res.predictions.argmax(axis=-1)

'''

array([[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

...,

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0]])

'''

res.predictions.argmax(axis=-1)[0]

'''

array([0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 5, 5, 0, 5, 5, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0])

'''

from transformers import pipeline

# 使用pipeline进行推理,要指定id2label

model.config.id2label = {idx: label for idx, label in enumerate(label_list)}

model.config

'''

BertConfig {

"_name_or_path": "hfl/chinese-macbert-base",

"architectures": [

"BertForTokenClassification"

],

"attention_probs_dropout_prob": 0.1,

"classifier_dropout": null,

"directionality": "bidi",

"gradient_checkpointing": false,

"hidden_act": "gelu",

"hidden_dropout_prob": 0.1,

"hidden_size": 768,

"id2label": {

"0": "O",

"1": "B-PER",

"2": "I-PER",

"3": "B-ORG",

"4": "I-ORG",

"5": "B-LOC",

"6": "I-LOC"

},

"initializer_range": 0.02,

"intermediate_size": 3072,

"label2id": {

"LABEL_0": 0,

"LABEL_1": 1,

"LABEL_2": 2,

"LABEL_3": 3,

"LABEL_4": 4,

"LABEL_5": 5,

"LABEL_6": 6

},

"layer_norm_eps": 1e-12,

"max_position_embeddings": 512,

"model_type": "bert",

"num_attention_heads": 12,

"num_hidden_layers": 12,

"pad_token_id": 0,

"pooler_fc_size": 768,

"pooler_num_attention_heads": 12,

"pooler_num_fc_layers": 3,

"pooler_size_per_head": 128,

"pooler_type": "first_token_transform",

"position_embedding_type": "absolute",

"torch_dtype": "float32",

"transformers_version": "4.35.0",

"type_vocab_size": 2,

"use_cache": true,

"vocab_size": 21128

}

'''

# 如果模型是基于GPU训练的,那么推理时要指定device

# 对于NER任务,可以指定aggregation_strategy为simple,得到具体的实体的结果,而不是token的结果

ner_pipe = pipeline("token-classification",

model=model,

tokenizer=tokenizer,

device=0,

aggregation_strategy="simple")

res = ner_pipe("小明在北京上班")

res

'''

Asking to truncate to max_length but no maximum length is provided and the model has no predefined maximum length. Default to no truncation.

[{'entity_group': 'PER',

'score': 0.44049227,

'word': '明',

'start': 1,

'end': 2},

{'entity_group': 'LOC',

'score': 0.9994525,

'word': '北 京',

'start': 3,

'end': 5}]

'''

# 可以用model_max_length参数解决Asking to truncate to max_length but no maximum length is provided and the model has no predefined maximum length. Default to no truncation.

tokenizer = AutoTokenizer.from_pretrained('google/bert_uncased_L-4_H-256_A-4',

model_max_length=512)

# 指定aggregation_strategy为simple

ner_pipe = pipeline("token-classification", model=model, tokenizer=tokenizer, device=0)

res = ner_pipe("小明在北京上班")

res

'''

[{'entity': 'I-PER',

'score': 0.44049227,

'index': 2,

'word': '明',

'start': 1,

'end': 2},

{'entity': 'B-LOC',

'score': 0.99940526,

'index': 4,

'word': '北',

'start': 3,

'end': 4},

{'entity': 'I-LOC',

'score': 0.9994997,

'index': 5,

'word': '京',

'start': 4,

'end': 5}]

'''

# 根据start和end取实际的结果

ner_result = {}

x = "小明在北京上班"

for r in res:

if r["entity_group"] not in ner_result:

ner_result[r["entity_group"]] = []

ner_result[r["entity_group"]].append(x[r["start"]: r["end"]])

ner_result

'''

{'PER': ['明'], 'LOC': ['北京']}

'''

![【每日逆向】BUUCTF--[ACTF新生赛2020] easyre](https://img-blog.csdnimg.cn/84ea09655eb3496cb5db400816930735.png)