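Contents

- Book recommendation

- Scraping Tencent Comics data with regular expressions

- Displaying the data with Flask

Book recommendation

If you are interested in Python web scraping, I strongly recommend 《Python网络爬虫入门到实战》. The book covers both the fundamentals and the more advanced techniques of writing crawlers in Python, and it is well worth reading for anyone who builds them. For details, see 👉 the book introduction for 《Python网络爬虫入门到实战》.

Scraping Tencent Comics data with regular expressions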

import csv
import re
import threading
from queue import Queue

import requests


def format_html(html):
    # Raw strings keep regex escapes such as \s from being read as string escapes.
    li_pattern = re.compile(r'<li class="ret-search-item clearfix">[\s\S]+?</li>')
    title_pattern = re.compile(r'title="(.*?)"')
    img_src_pattern = re.compile(r'data-original="(.*?)"')
    update_pattern = re.compile(r'<span class="mod-cover-list-text">(.*?)</span>')
    # Kept as in the original; adjust it if the page marks tags up with a different element.
    tags_pattern = re.compile(r'<span href="/Comic/all/theme/.*?" target="_blank">(.*?)</span>')
    popularity_pattern = re.compile(r'<span>人气:<em>(.*?)</em></span>')

    # Each <li> block holds one comic.
    items = li_pattern.findall(html)
    for item in items:
        title = title_pattern.search(item).group(1)
        img_src = img_src_pattern.search(item).group(1)
        update_info = update_pattern.search(item).group(1)
        tags = tags_pattern.findall(item)
        popularity = popularity_pattern.search(item).group(1)
        # Queue one row per comic; the main thread writes the rows to disk.
        data_queue.put([title, img_src, update_info, "#".join(tags), popularity])


def run(index):
    try:
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36'
        }
        # A timeout keeps a stalled request from holding a semaphore slot forever.
        response = requests.get(f"https://ac.qq.com/Comic/index/page/{index}", headers=headers, timeout=10)
        response.raise_for_status()
        html = response.text
        format_html(html)
    except Exception as e:
        print(f"Error occurred while processing page {index}: {e}")
    finally:
        # Free a slot so the main loop can start the next thread.
        semaphore.release()


if __name__ == "__main__":
    data_queue = Queue()
    # Allow at most 5 page requests in flight at once.
    semaphore = threading.BoundedSemaphore(5)
    lst_record_threads = []

    for index in range(1, 3):  # pages 1 and 2
        print(f"Fetching page {index}")
        semaphore.acquire()
        t = threading.Thread(target=run, args=(index,))
        t.start()
        lst_record_threads.append(t)

    for rt in lst_record_threads:
        rt.join()

    # csv.writer quotes fields that contain commas, so such titles stay in one column.
    with open("./qq_comic_data.csv", "a+", encoding="gbk", newline="") as f:
        writer = csv.writer(f)
        while not data_queue.empty():
            writer.writerow(data_queue.get())

    print("Scraping finished")
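The extraction step in format_html can be tried offline before touching the network. The sketch below runs the same patterns against a made-up HTML fragment; the fragment, the helper name first_group, and the example.com URLs are assumptions for illustration, and the live page's markup may well differ. Unlike format_html, this variant returns an empty string when a field is missing instead of raising.

```python
import re

# Hypothetical fragment shaped to fit the patterns; not copied from the real page.
SAMPLE_HTML = '''
<li class="ret-search-item clearfix">
  <a href="/Comic/comicInfo/id/1" title="Sample Comic A"><img data-original="https://example.com/a.jpg"></a>
  <span class="mod-cover-list-text">Updated to chapter 12</span>
  <span href="/Comic/all/theme/1" target="_blank">Action</span>
  <span href="/Comic/all/theme/2" target="_blank">Fantasy</span>
  <span>人气:<em>1.2亿</em></span>
</li>
<li class="ret-search-item clearfix">
  <a href="/Comic/comicInfo/id/2"><img data-original="https://example.com/b.jpg"></a>
</li>
'''

LI_PATTERN = re.compile(r'<li class="ret-search-item clearfix">[\s\S]+?</li>')
TITLE_PATTERN = re.compile(r'title="(.*?)"')
IMG_SRC_PATTERN = re.compile(r'data-original="(.*?)"')
UPDATE_PATTERN = re.compile(r'<span class="mod-cover-list-text">(.*?)</span>')
TAGS_PATTERN = re.compile(r'<span href="/Comic/all/theme/.*?" target="_blank">(.*?)</span>')
POPULARITY_PATTERN = re.compile(r'<span>人气:<em>(.*?)</em></span>')


def first_group(pattern, text, default=""):
    """Return the first captured group, or `default` when nothing matches."""
    match = pattern.search(text)
    return match.group(1) if match else default


def parse_items(html):
    """Turn every <li> block into a (title, img, update, tags, popularity) tuple."""
    rows = []
    for item in LI_PATTERN.findall(html):
        rows.append((
            first_group(TITLE_PATTERN, item),
            first_group(IMG_SRC_PATTERN, item),
            first_group(UPDATE_PATTERN, item),
            "#".join(TAGS_PATTERN.findall(item)),
            first_group(POPULARITY_PATTERN, item),
        ))
    return rows


rows = parse_items(SAMPLE_HTML)
for row in rows:
    print(row)
```

The second list item deliberately lacks most fields, which shows how a partially filled row comes out rather than aborting the whole page.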

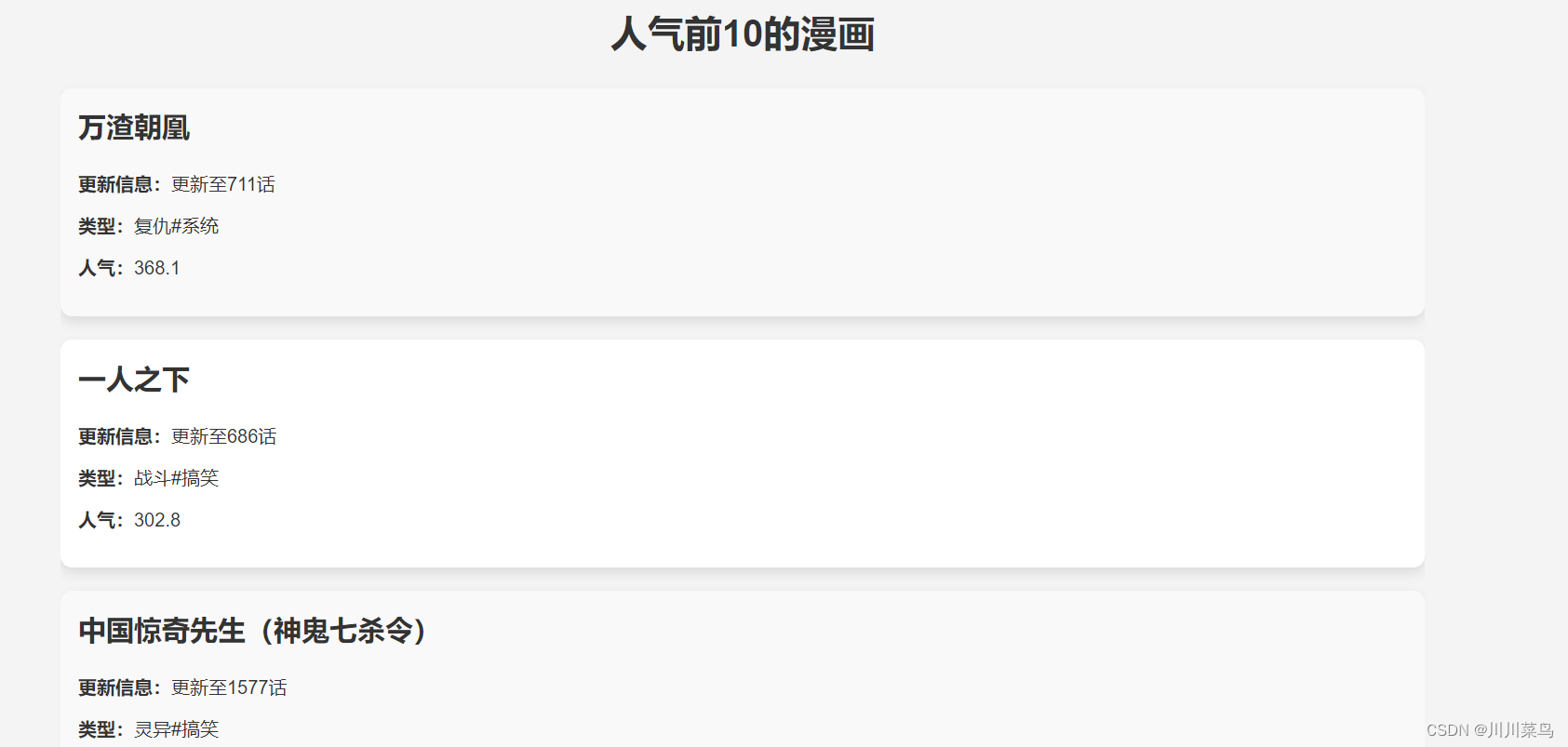
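The scraper caps concurrency with a BoundedSemaphore: the main loop acquires a slot before starting each thread, and the thread releases it when done. A minimal sketch of that pattern in isolation is below. The worker fake_fetch, the stats dictionary, and the sleep that stands in for a network request are all hypothetical, added only to make the limit observable.

```python
import threading
import time
from queue import Queue

MAX_WORKERS = 5  # same limit as the scraper

results = Queue()
semaphore = threading.BoundedSemaphore(MAX_WORKERS)
lock = threading.Lock()
stats = {"active": 0, "peak": 0}  # tracks how many workers run at once


def fake_fetch(index):
    """Hypothetical stand-in for one page request."""
    try:
        with lock:
            stats["active"] += 1
            stats["peak"] = max(stats["peak"], stats["active"])
        time.sleep(0.01)  # pretend to wait on the network
        results.put(index)
    finally:
        with lock:
            stats["active"] -= 1
        # Release only after the counter is updated, so the peak stays within the limit.
        semaphore.release()


threads = []
for index in range(1, 21):
    semaphore.acquire()  # blocks while MAX_WORKERS threads are still running
    t = threading.Thread(target=fake_fetch, args=(index,))
    t.start()
    threads.append(t)

for t in threads:
    t.join()

collected = []
while not results.empty():
    collected.append(results.get())
collected.sort()

print(f"fetched {len(collected)} pages, peak concurrency {stats['peak']}")
```

Draining the queue only after every thread has been joined, as the scraper does, means the file is written from a single thread and needs no extra locking.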

Displaying the data with Flask

The code above scrapes the data, but I also want to display it on a web page.

main.py is as follows:

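# coding= gbk
# The line above is only correct if this file is saved as GBK; remove it if the file is saved as UTF-8.

import csv

from flask import Flask, render_template

app = Flask(__name__)


def read_data_from_csv():
    # The scraper writes qq_comic_data.csv as GBK with no header row,
    # so read it with the same encoding and keep every row.
    with open("qq_comic_data.csv", "r", encoding="gbk", newline="") as f:
        data = [row for row in csv.reader(f) if len(row) >= 5]

    # Normalize popularity to a float in units of 亿 (100 million).
    for row in data:
        popularity = row[4].strip()
        try:
            if '亿' in popularity:
                row[4] = float(popularity.replace('亿', ''))
            elif '万' in popularity:
                row[4] = float(popularity.replace('万', '')) / 10000  # 10,000 万 = 1 亿
            else:
                row[4] = float(popularity) / 1e8  # plain count without a unit
        except ValueError:
            row[4] = 0.0  # unparseable values sort last

    # Sort by popularity and keep the top 10 rows.
    data.sort(key=lambda x: x[4], reverse=True)
    return data[:10]


@app.route('/')
def index():
    comics = read_data_from_csv()
    return render_template('index.html', comics=comics)


if __name__ == '__main__':
    app.run(debug=True)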
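The unit conversion inside read_data_from_csv is easy to check on its own. The sketch below pulls it into a standalone function and ranks a few made-up rows. The function name popularity_in_yi and the sample rows are assumptions for illustration, not part of the original main.py.

```python
def popularity_in_yi(text):
    """Convert strings such as '1.2亿' or '3500万' to a float in units of 亿 (1e8)."""
    text = text.strip()
    try:
        if text.endswith('亿'):
            return float(text[:-1])
        if text.endswith('万'):
            return float(text[:-1]) / 10000  # 10,000 万 = 1 亿
        return float(text) / 1e8  # plain count without a unit
    except ValueError:
        return 0.0  # treat unparseable values as zero so they sort last


# Hypothetical rows in the CSV's column order: title, image, update, tags, popularity.
sample_rows = [
    ["Comic A", "", "", "", "3500万"],
    ["Comic B", "", "", "", "1.2亿"],
    ["Comic C", "", "", "", "N/A"],
    ["Comic D", "", "", "", "50000000"],
]

top = sorted(sample_rows, key=lambda r: popularity_in_yi(r[4]), reverse=True)[:2]
top_titles = [row[0] for row in top]
print(top_titles)
```

Sorting with a key function leaves the original strings in the rows, so the template could still show the popularity exactly as scraped.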

templates/index.html is as follows:

<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <title>Comic information</title>
    <style>
        body {
            font-family: Arial, sans-serif;
            background-color: #f4f4f4;
            color: #333;
            line-height: 1.6;
            padding: 20px;
        }
        .container {
            width: 80%;
            margin: auto;
            overflow: hidden;
        }
        h1 {
            text-align: center;
            color: #333;
        }
        .comic {
            background: #fff;
            margin-bottom: 20px;
            padding: 15px;
            border-radius: 10px;
            box-shadow: 0 5px 10px rgba(0,0,0,0.1);
        }
        .comic h2 {
            margin-top: 0;
        }
        .comic p {
            line-height: 1.25;
        }
        .comic:nth-child(even) {
            background: #f9f9f9;
        }
    </style>
</head>
<body>
    <div class="container">
        <h1>Top 10 comics by popularity</h1>
        {% for comic in comics %}
        <div class="comic">
            <h2>{{ comic[0] }}</h2>
            <p><strong>Update:</strong>{{ comic[2] }}</p>
            <p><strong>Genre:</strong>{{ comic[3] }}</p>
            <p><strong>Popularity (亿):</strong>{{ comic[4] }}</p>
        </div>
        {% endfor %}
    </div>
</body>
</html>

The result looks like this: