Error 1: is group-writable, and the group is not root. Its permissions are 0775

When the DataNode started, the log reported:

1. “xxxx” is group-writable, and the group is not root. Its permissions are 0775, and it is owned by gid 3245. Please fix this or select a different socket path.

The error shows that permissions somewhere in the Hadoop directory tree are wrong, so I reset the directory permissions:

chmod 755 hadoop/, then restarted the DataNode and checked the log. Same error again:

xxx is group-writable, and the group is not root. Its permissions are 0775, and it is owned by gid 3245. Please fix this or select a different socket path

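Out of options, I generated the socket file in a different directory and set its permissions to 755, after which the DataNode started normally. The check appears to cover every directory component of the socket path, not just the last one, so a single group-writable parent directory owned by a non-root group is the likely reason that changing hadoop/ alone was not enough.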
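To find the offending directory before restarting the DataNode, the check can be approximated from the shell. This is a sketch, assuming Linux with GNU coreutils `stat`; `check_socket_dirs` is a hypothetical helper, and its rules (group-writable only if the group is root, world-writable only with the sticky bit, owned by root or the current user) are my reading of the DataNode's socket-path check, not Hadoop's own code.

```sh
#!/bin/sh
# Sketch: walk every directory on the way to a UNIX domain socket path and flag
# the ones the DataNode's socket-path check is likely to reject. Assumes Linux
# with GNU coreutils `stat`. Exits 1 if anything was flagged, 2 on bad input.
check_socket_dirs() (
    case $1 in
        /*) ;;
        *) echo "absolute path required: $1" >&2; exit 2 ;;
    esac
    dir=$(dirname -- "$1")
    me=$(id -u)
    bad=0
    while :; do
        # %A = symbolic mode (e.g. drwxrwxr-x), %u = owner uid, %g = group gid
        info=$(stat -c '%A %u %g' -- "$dir") || exit 2
        set -- $info
        mode=$1 owner=$2 group=$3
        # 6th character of the symbolic mode is the group write bit
        case $mode in
            ?????w*)
                if [ "$group" -ne 0 ]; then
                    echo "$dir: group-writable ($mode) and gid $group is not root"
                    bad=1
                fi ;;
        esac
        # 9th character is the other-write bit; 10th is t/T when sticky is set
        case $mode in
            ????????w[tT]*) ;;
            ????????w*)
                echo "$dir: world-writable ($mode) without the sticky bit"
                bad=1 ;;
        esac
        if [ "$owner" -ne 0 ] && [ "$owner" -ne "$me" ]; then
            echo "$dir: owned by uid $owner, neither root nor the current user"
            bad=1
        fi
        [ "$dir" = / ] && break
        dir=$(dirname -- "$dir")
    done
    exit "$bad"
)

# Usage, with the socket path from this cluster's hdfs-site.xml:
#   check_socket_dirs /app/log4x/apps/hadoop/etc/DN_PORT
```

Any directory it prints (for example one with mode drwxrwxr-x and gid 3245) is a candidate for `chmod g-w`, or for moving the socket elsewhere as done here.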

Error 2: we cannot start a localDataXceiverServer because libhadoop cannot be loaded.

java.lang.RuntimeException: Although a UNIX domain socket path is configured as /app/log4x/apps/hadoop/etc/DN_PORT, we cannot start a localDataXceiverServer because libhadoop cannot be loaded.

1. Check the host

# hadoop checknative -a

23/11/07 21:13:08 DEBUG util.NativeCodeLoader: Trying to load the custom-built native-hadoop library...

23/11/07 21:13:08 DEBUG util.NativeCodeLoader: Failed to load native-hadoop with error: java.lang.UnsatisfiedLinkError: no hadoop in java.library.path

23/11/07 21:13:08 DEBUG util.NativeCodeLoader: java.library.path=:/app/log4x/apps/hadoop/lib/native/Linux-amd64-64/:/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

23/11/07 21:13:08 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

23/11/07 21:13:08 DEBUG util.Shell: setsid exited with exit code 0

Native library checking:

hadoop: false

zlib: false

snappy: false

lz4: false

bzip2: false

openssl: false

23/11/07 21:13:08 INFO util.ExitUtil: Exiting with status 1

2. Add configuration

Add the following to ~/.bash_profile to point to the native library directory:

export JAVA_LIBRARY_PATH=/app/log4x/apps/hadoop/lib/native

source ~/.bash_profile
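In the first checknative run, java.library.path contained /app/log4x/apps/hadoop/lib/native/Linux-amd64-64/ but not /app/log4x/apps/hadoop/lib/native itself, which is where the rerun finds libhadoop.so. That suggests the library was simply not on the search path. A hypothetical helper like the one below can confirm which entry of such a path actually holds libhadoop.so; it is a sketch, not part of Hadoop.

```sh
#!/bin/sh
# Sketch: print the first directory in a colon-separated library path (such as
# the java.library.path value logged by NativeCodeLoader) that contains
# libhadoop.so. Exits 1 if no entry does. Empty entries are skipped.
find_libhadoop() (
    set -f            # no globbing while splitting the path
    IFS=:
    for dir in $1; do
        [ -n "$dir" ] || continue
        dir=${dir%/}  # drop a trailing slash for tidier output
        if [ -f "$dir/libhadoop.so" ]; then
            echo "$dir"
            exit 0
        fi
    done
    exit 1
)

# Usage after sourcing ~/.bash_profile:
#   find_libhadoop "$JAVA_LIBRARY_PATH" || echo "libhadoop.so not found"
```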

# hadoop checknative -a

23/11/08 16:06:00 WARN bzip2.Bzip2Factory: Failed to load/initialize native-bzip2 library system-native, will use pure-Java version

23/11/08 16:06:00 INFO zlib.ZlibFactory: Successfully loaded & initialized native-zlib library

Native library checking:

hadoop: true /app/log4x/apps/hadoop/lib/native/libhadoop.so

zlib: true /lib64/libz.so.1

snappy: true /lib64/libsnappy.so.1

lz4: true revision:99

bzip2: false

openssl: false Cannot load libcrypto.so (libcrypto.so: cannot open shared object file: No such file or directory)!

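23/11/08 16:06:00 INFO util.ExitUtil: Exiting with status 1

libhadoop now loads. The remaining exit status 1 comes from the -a flag, which requires every listed library to load, and bzip2 and openssl still do not.

Error 3: IPC's epoch 1 is not the current writer epoch 0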

org.apache.hadoop.hdfs.qjournal.client.QuorumException: Got too many exceptions to achieve quorum size 3/5. 4 exceptions thrown:

10.255.33.120:43001: IPC's epoch 1 is not the current writer epoch 0

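Strangely, I hit the same problem, so I looked at how someone else had solved it:

1. They first searched Google for the key message "IPC's epoch is less than the last promised epoch"; most of the (English-language) answers attributed it to network problems.

2. Following that lead, the logs showed that every time the other NameNode started, it probed port 8485 on the three JournalNode servers, and the probe failed.

So a network problem was the most likely cause. They checked the following:

ifconfig -a: check whether the network interfaces show dropped packets;

/etc/sysconfig/selinux: confirm that SELINUX=disabled is set;

/etc/init.d/iptables status: check whether the firewall is running. The Hadoop cluster runs on an internal network and the firewall had been turned off at deployment time, so this looked like the culprit: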

/etc/init.d/iptables stop

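They checked the firewalls on all three JournalNode servers in turn; every one of them had somehow been started, so they shut them down immediately.

After restarting both NameNodes, the logs were normal.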
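The network checks above come down to whether each JournalNode port is reachable from the NameNode host. A minimal sketch, assuming bash (for its /dev/tcp redirection) and GNU `timeout` are installed; `probe_port` is a hypothetical helper and the host names in the usage comment are placeholders.

```sh
#!/bin/sh
# Sketch: TCP reachability check. Runs bash's /dev/tcp redirection under GNU
# `timeout`; returns 0 only if a connection to host:port opens within 3 s.
probe_port() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}

# Usage (jn1..jn3 are placeholder host names; 8485 is the default JournalNode
# RPC port, while this cluster's JournalNodes listen on 43001 per the log):
#   for h in jn1 jn2 jn3; do
#       if probe_port "$h" 43001; then echo "$h ok"; else echo "$h FAILED"; fi
#   done
```

A "Connection refused" or timeout here points at a firewall or a JournalNode that is not listening, rather than at HDFS metadata.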

Then I checked my own environment:

1. SELINUX is disabled on every host

# ansible -i hosts tt -m shell -a "sudo cat /etc/sysconfig/selinux | grep SELINUX"

[WARNING]: Consider using 'become', 'become_method', and 'become_user' rather than running sudo

host_121 | CHANGED | rc=0 >>

# SELINUX= can take one of these three values:

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

SELINUXTYPE=targeted

host_118 | CHANGED | rc=0 >>

# SELINUX= can take one of these three values:

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

SELINUXTYPE=targeted

host_119 | CHANGED | rc=0 >>

# SELINUX= can take one of these three values:

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

SELINUXTYPE=targeted

host_122 | CHANGED | rc=0 >>

# SELINUX= can take one of these three values:

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

SELINUXTYPE=targeted

host_120 | CHANGED | rc=0 >>

# SELINUX= can take one of these three values:

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

SELINUXTYPE=targeted

host_126 | CHANGED | rc=0 >>

# SELINUX= can take one of these three values:

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

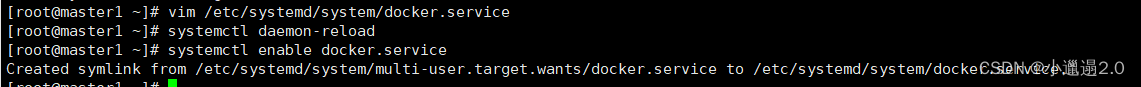

SELINUXTYPE=targeted

2. Check the firewall status

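Some hosts have it stopped and others have it running. I suspected the firewall, but another Hadoop environment runs on the same hosts under a different user name, and a firewall would not block only the current user.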

# ansible -i hosts tt -m shell -a "systemctl status iptables |grep Active:"

host_118 | CHANGED | rc=0 >>

Active: failed (Result: exit-code) since ? 2022-04-26 11:04:51 CST; 1 years 6 months ago

host_121 | CHANGED | rc=0 >>

Active: active (exited) since ? 2022-04-26 11:05:40 CST; 1 years 6 months ago

host_119 | CHANGED | rc=0 >>

Active: active (exited) since ? 2022-04-26 10:59:41 CST; 1 years 6 months ago

host_122 | CHANGED | rc=0 >>

Active: active (exited) since ? 2021-03-18 17:13:00 CST; 2 years 7 months ago

host_120 | CHANGED | rc=0 >>

Active: failed (Result: exit-code) since ? 2022-04-26 11:05:06 CST; 1 years 6 months ago

host_126 | CHANGED | rc=0 >>

Active: active (exited) since ? 2021-03-18 15:58:10 CST; 2 years 7 months ago

3. Check the JournalNode log

2023-11-08 11:46:08,640 INFO org.apache.hadoop.hdfs.server.common.Storage: Formatting journal Storage Directory /app/log4x/apps/hadoop/jn/log4x-hcluster with nsid: 1531841238

2023-11-08 11:46:08,642 INFO org.apache.hadoop.hdfs.server.common.Storage: Lock on /app/log4x/apps/hadoop/jn/log4x-hcluster/in_use.lock acquired by nodename 3995@hkcrmlog04

2023-11-08 11:49:37,343 INFO org.apache.hadoop.hdfs.qjournal.server.Journal: Updating lastPromisedEpoch from 0 to 1 for client /10.255.33.121

2023-11-08 11:49:37,345 INFO org.apache.hadoop.ipc.Server: IPC Server handler 2 on 43001, call org.apache.hadoop.hdfs.qjournal.protocol.QJournalProtocol.journal from 10.255.33.121:51086 Call#65 Retry#0

java.io.IOException: IPC's epoch 1 is not the current writer epoch 0

at org.apache.hadoop.hdfs.qjournal.server.Journal.checkWriteRequest(Journal.java:445)

at org.apache.hadoop.hdfs.qjournal.server.Journal.journal(Journal.java:342)

at org.apache.hadoop.hdfs.qjournal.server.JournalNodeRpcServer.journal(JournalNodeRpcServer.java:148)

at org.apache.hadoop.hdfs.qjournal.protocolPB.QJournalProtocolServerSideTranslatorPB.journal(QJournalProtocolServerSideTranslatorPB.java:158)

at org.apache.hadoop.hdfs.qjournal.protocol.QJournalProtocolProtos$QJournalProtocolService$2.callBlockingMethod(QJournalProtocolProtos.java:25421)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:619)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:975)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2040)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2036)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1692)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2034)

So it really had nothing to do with the firewall. After formatting the NameNode, I created the socket at the socket path configured in hdfs-site.xml:

# ll

total 4

srwxr-xr-x 1 log4x log4x 0 Nov 8 15:05 DN_PORT

drwxr-xr-x 2 log4x log4x 4096 Nov 8 14:47 hadoop

[log4x@hkcrmlog04 etc]$ pwd

/app/log4x/apps/hadoop/etc

[log4x@hkcrmlog04 etc]$ grep -ir 'DN_PORT' hadoop/

hadoop/hdfs-site.xml.bajk: <value>/app/ailog4x/hadoop/etc/DN_PORT</value>

hadoop/hdfs-site.xml.1107bak: <value>/app/log4x/apps/hadoop/etc/DN_PORT</value>

hadoop/hdfs-site.xml: <value>/app/log4x/apps/hadoop/etc/DN_PORT</value>

4. Notes

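4.1 nc -Ul DN_PORT creates DN_PORT as a socket. If nc is not installed, install it with yum install -y nc.

4.2 chmod 666 DN_PORT sets the socket's permissions to 666.

4.3 chmod -R 755 hadoop/ sets the whole hadoop directory to 755.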
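After these steps, a quick check that the configured path really is a socket can save a restart cycle. A sketch assuming GNU `stat`; `check_dn_socket` is a hypothetical helper, while `hdfs getconf -confKey` and the `dfs.domain.socket.path` property (the setting behind the DN_PORT value above) are standard HDFS.

```sh
#!/bin/sh
# Sketch: confirm that a path exists and is a UNIX domain socket, then print
# its mode and owner. Exits 1 (with a message on stderr) otherwise.
check_dn_socket() (
    sock=$1
    if [ ! -e "$sock" ]; then
        echo "$sock: does not exist" >&2
        exit 1
    fi
    if [ ! -S "$sock" ]; then
        echo "$sock: exists but is not a socket" >&2
        exit 1
    fi
    # %n = name, %A = symbolic mode (e.g. srw-rw-rw-), %U:%G = owner:group
    stat -c '%n %A %U:%G' -- "$sock"
)

# Usage on a node, reading the configured path instead of hard-coding it:
#   check_dn_socket "$(hdfs getconf -confKey dfs.domain.socket.path)"
```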

Error 4: Operation category JOURNAL is not supported in state standby

Operation category JOURNAL is not supported in state standby...

Call From hkcrmlog03/10.255.33.120 to hkcrmlog04:40101 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:791)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:731)

at org.apache.hadoop.ipc.Client.call(Client.java:1474)

at org.apache.hadoop.ipc.Client.call(Client.java:1401)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232)

at com.sun.proxy.$Proxy15.rollEditLog(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.NamenodeProtocolTranslatorPB.rollEditLog(NamenodeProtocolTranslatorPB.java:148)

at org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer.triggerActiveLogRoll(EditLogTailer.java:271)

at org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer.access$600(EditLogTailer.java:61)

at org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer$EditLogTailerThread.doWork(EditLogTailer.java:313)

at org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer$EditLogTailerThread.access$200(EditLogTailer.java:282)

at org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer$EditLogTailerThread$1.run(EditLogTailer.java:299)

at org.apache.hadoop.security.SecurityUtil.doAsLoginUserOrFatal(SecurityUtil.java:412)

at org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer$EditLogTailerThread.run(EditLogTailer.java:295)

Caused by: java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:715)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:530)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:494)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:609)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:707)

at org.apache.hadoop.ipc.Client$Connection.access$2800(Client.java:370)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1523)

at org.apache.hadoop.ipc.Client.call(Client.java:1440)

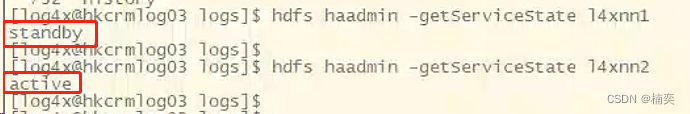

1. Check the HA state

# bin/hdfs haadmin -getServiceState l4xnn2

standby

[log4x@hkcrmlog03 hadoop]$

[log4x@hkcrmlog03 hadoop]$ bin/hdfs haadmin -getServiceState l4xnn1

standby

# bin/hdfs haadmin -transitionToActive --forcemanual l4xnn1

You have specified the forcemanual flag. This flag is dangerous, as it can induce a split-brain scenario that WILL CORRUPT your HDFS namespace, possibly irrecoverably.

It is recommended not to use this flag, but instead to shut down the cluster and disable automatic failover if you prefer to manually manage your HA state.

You may abort safely by answering 'n' or hitting ^C now.

Are you sure you want to continue? (Y or N) y

23/11/08 15:46:00 WARN ha.HAAdmin: Proceeding with manual HA state management even though

automatic failover is enabled for NameNode at /10.255.33.121:40101

23/11/08 15:46:00 WARN ha.HAAdmin: Proceeding with manual HA state management even though

automatic failover is enabled for NameNode at /10.255.33.120:40101

[log4x@hkcrmlog03 hadoop]$

[log4x@hkcrmlog03 hadoop]$ bin/hdfs haadmin -getServiceState l4xnn1

active

[log4x@hkcrmlog03 hadoop]$ bin/hdfs haadmin -getServiceState l4xnn2

standby

The NameNode logs are now normal.
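Since `--forcemanual` warns of split-brain, it is worth scripting the state check so a manual transition is only considered when neither NameNode reports active. A minimal sketch: `active_namenode` and the `HDFS_CMD` override are hypothetical (the override exists so the function can be exercised without a cluster); `hdfs haadmin -getServiceState` is the real command used above.

```sh
#!/bin/sh
# Sketch: query each NameNode ID with `hdfs haadmin -getServiceState`, log the
# states to stderr, and print the first ID that reports "active".
# Exits 1 if none is active (e.g. both standby, as in the session above).
# HDFS_CMD is a hypothetical override (default: hdfs), e.g. HDFS_CMD=bin/hdfs.
active_namenode() (
    for id in "$@"; do
        state=$(${HDFS_CMD:-hdfs} haadmin -getServiceState "$id" 2>/dev/null)
        echo "$id: ${state:-no answer}" >&2
        if [ "$state" = active ]; then
            echo "$id"
            exit 0
        fi
    done
    exit 1
)

# Usage from $HADOOP_HOME, with this cluster's NameNode IDs:
#   HDFS_CMD=bin/hdfs active_namenode l4xnn1 l4xnn2 || echo "no active NameNode"
```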

Error 5: Error replaying edit log at offset 0. Expected transaction ID was 1

org.apache.hadoop.hdfs.server.namenode.EditLogInputException: Error replaying edit log at offset 0. Expected transaction ID was 1

I re-ran the format on that node:

# $HADOOP_PREFIX/bin/hdfs namenode -format

then started the NameNode:

# $HADOOP_PREFIX/sbin/hadoop-daemon.sh --script hdfs start namenode

$ bin/hdfs haadmin -getServiceState l4xnn1

active

[log4x@hkcrmlog03 hadoop]$

[log4x@hkcrmlog03 hadoop]$ bin/hdfs haadmin -getServiceState l4xnn2

standby

The NameNode is now healthy.

Error 6: journal Storage Directory

journal Storage Directory /app/log4x/apps/hadoop/jn/log4x-hcluster: NameNode

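Most likely the metadata stored by the JournalNodes had diverged from the NameNode's; 2 of the 3 machines reported this error.

On nn1, start the JournalNode, then run hdfs namenode -initializeSharedEdits to bring the JournalNodes back in line with the NameNode. After restarting the NameNode, the problem was gone.

Then check the state of both NameNodes again.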

Error 7: Decided to synchronize log to startTxId: 1

Decided to synchronize log to startTxId: 1

The NameNode metadata is corrupted and needs to be repaired.

Fix: run NameNode recovery:

hadoop namenode -recover