Service (microservices)

Create a test application

vim myapp.ymlapiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: myapp

name: myapp

spec:

replicas: 6

selector:

matchLabels:

app: myapp

template:

metadata:

labels:

app: myapp

spec:

containers:

- image: myapp:v1

name: myapp

---

apiVersion: v1

kind: Service

metadata:

labels:

app: myapp

name: myapp

spec:

ports:

- port: 80

protocol: TCP

targetPort: 80

selector:

app: myapp

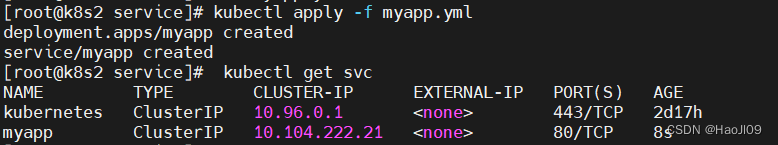

type: ClusterIPkubectl apply -f myapp.yml

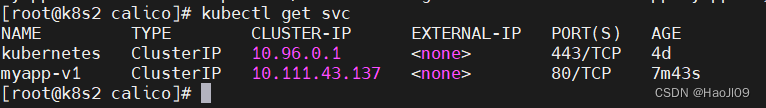

kubectl get svc

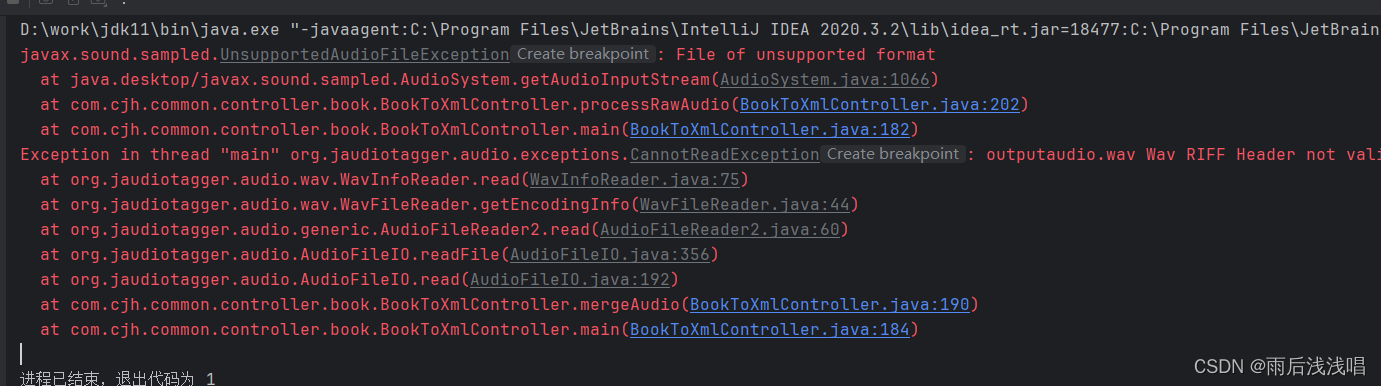

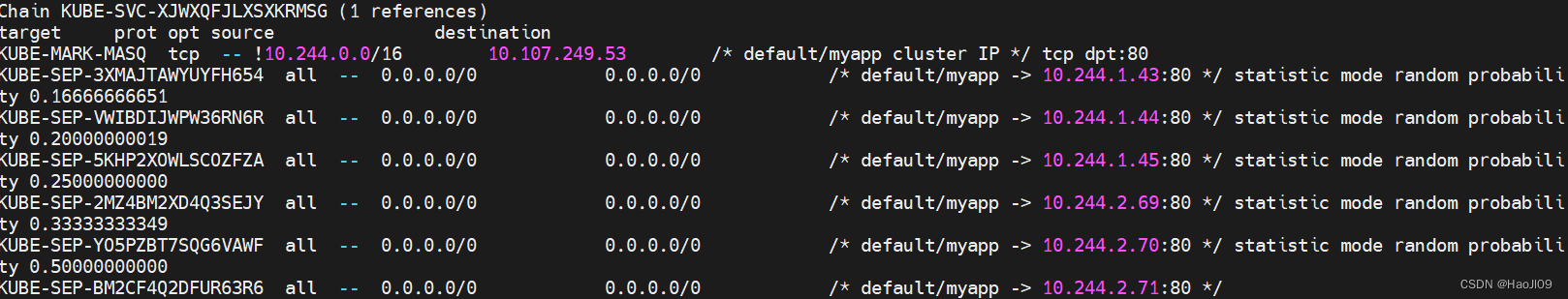

By default, kube-proxy uses iptables mode to load-balance Service traffic.

IPVS mode

Modify the kube-proxy configuration

kubectl -n kube-system edit cm kube-proxy

```yaml
...
mode: "ipvs"
```

Restart the kube-proxy pods:

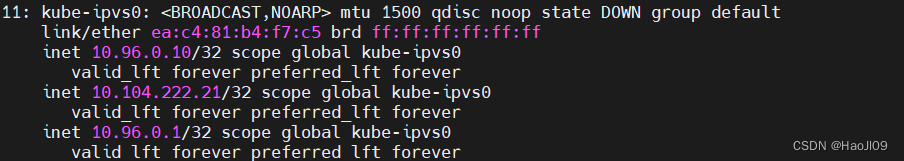

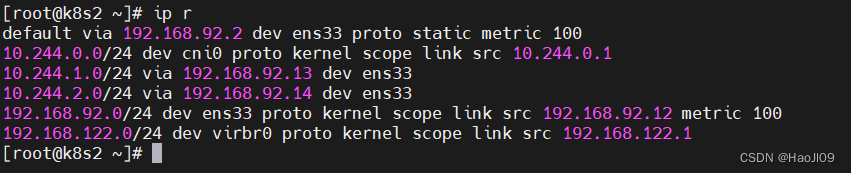

kubectl -n kube-system get pod|grep kube-proxy | awk '{system("kubectl -n kube-system delete pod "$1"")}'

After switching to IPVS mode, kube-proxy adds a virtual network interface, kube-ipvs0, on each host and assigns the Service IPs to it:

ip a

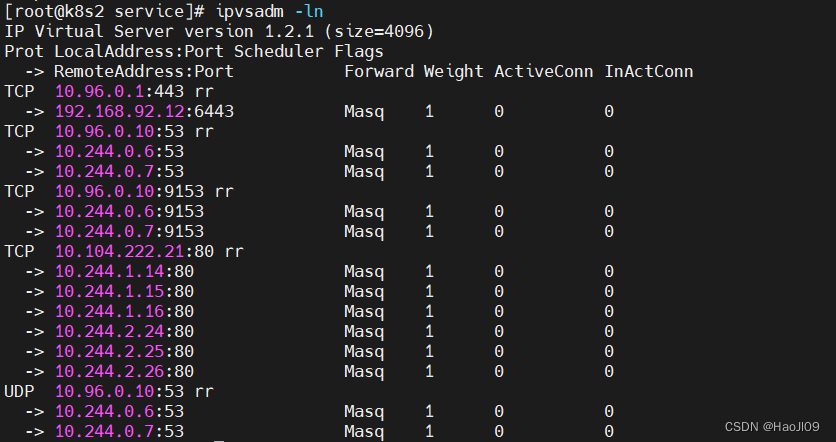

ipvsadm -ln
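To check that IPVS is balancing across all pods of a Service, you can count the real servers listed under the Service's virtual address in the `ipvsadm -ln` output. The helper below is a minimal sketch. It assumes the usual `ipvsadm -ln` layout, where a `TCP  VIP:PORT scheduler` line is followed by one `->` line per real server. The function name is hypothetical.

```bash
# count_ipvs_backends VIP:PORT
# Reads `ipvsadm -ln` output on stdin and prints how many real servers
# ("->" lines) are listed under the given virtual service.
count_ipvs_backends() {
    awk -v vs="$1" '
        # A protocol line starts a new virtual-service block
        $1 == "TCP" || $1 == "UDP" || $1 == "SCTP" { in_vs = ($2 == vs); next }
        # Real-server lines that belong to the matching block
        in_vs && $1 == "->" { n++ }
        END { print n + 0 }
    '
}
```

Usage: `ipvsadm -ln | count_ipvs_backends <CLUSTER-IP>:80`, taking the cluster IP from `kubectl get svc`. With `replicas: 6` and all pods ready, you would expect a count of 6.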

clusterip

A ClusterIP Service is reachable only from inside the cluster.

vim myapp.yml---

apiVersion: v1

kind: Service

metadata:

labels:

app: myapp

name: myapp

spec:

ports:

- port: 80

protocol: TCP

targetPort: 80

selector:

app: myapp

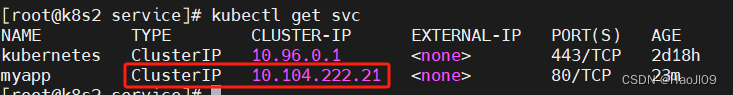

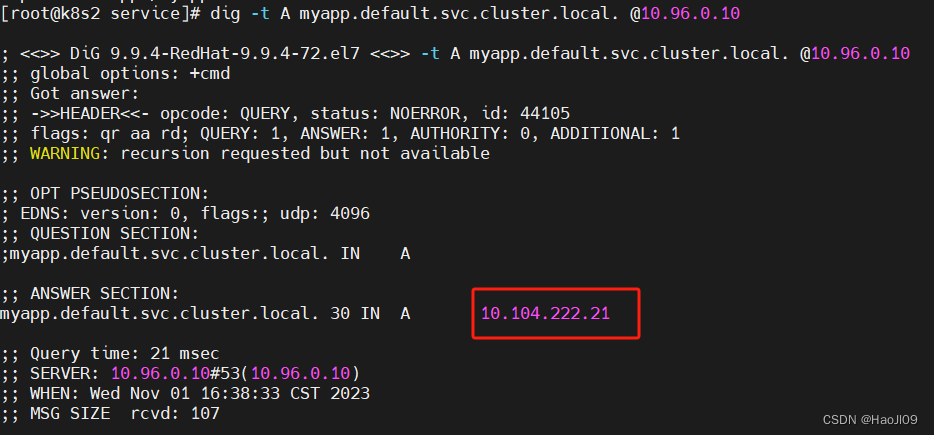

type: ClusterIPservice创建后集群DNS提供解析

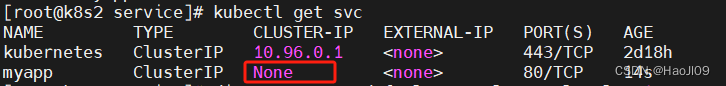

kubectl get svc

dig -t A myapp.default.svc.cluster.local. @10.96.0.10

headless

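vim myapp.yml

```yaml
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: myapp
  name: myapp
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: myapp
  type: ClusterIP
  clusterIP: None
```

The clusterIP of an existing Service cannot be changed in place, so delete the Service and create it again:

kubectl delete svc myapp

kubectl apply -f myapp.yml

A headless Service is not allocated a virtual IP (VIP):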

kubectl get svc

A headless Service is accessed by its name. The cluster DNS resolves that name directly to the IPs of the backing pods:

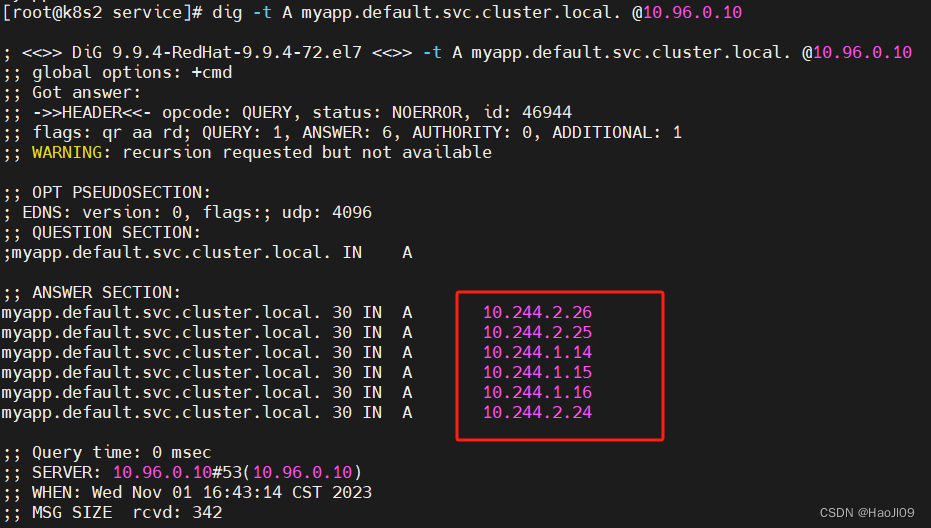

dig -t A myapp.default.svc.cluster.local. @10.96.0.10

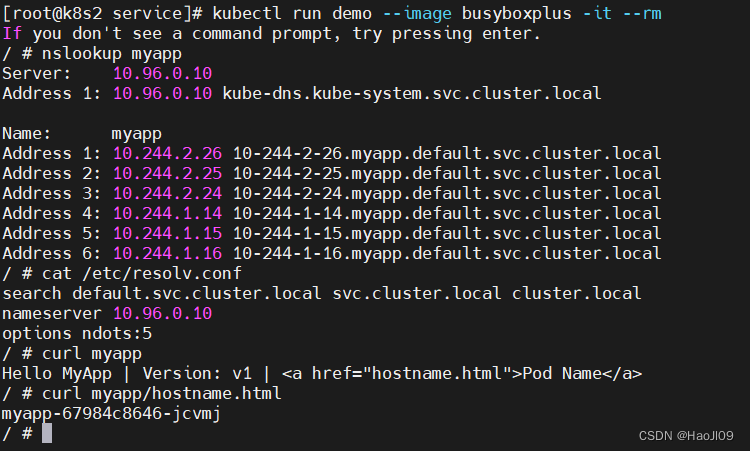

Inside the cluster, a Service can be reached directly by its name:

kubectl run demo --image busyboxplus -it --rm

nslookup myapp

cat /etc/resolv.conf

curl myapp

curl myapp/hostname.html
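`curl myapp` works because of two settings in the pod's /etc/resolv.conf. It contains a search list, typically `default.svc.cluster.local svc.cluster.local cluster.local`, and `options ndots:5`. A name with fewer dots than ndots is tried with each search suffix first, and only then as-is. The function below is a simplified sketch of that resolver rule and ignores timeouts and other options. The function name is hypothetical, and the search list should be taken from the resolv.conf printed above.

```bash
# dns_candidates NAME NDOTS SUFFIX...
# Prints, in order, the names a glibc-style resolver would query.
dns_candidates() {
    local name=$1 ndots=$2 suffix dots
    shift 2
    # A trailing dot marks an absolute name: the search list is not used
    if [[ $name == *. ]]; then
        echo "${name%.}"
        return
    fi
    dots=${name//[!.]/}              # keep only the dots
    # Enough dots: the name is tried as-is first
    if (( ${#dots} >= ndots )); then
        echo "$name"
    fi
    for suffix in "$@"; do
        echo "$name.$suffix"
    done
    # Too few dots: the bare name is tried last
    if (( ${#dots} < ndots )); then
        echo "$name"
    fi
}
```

For example, `dns_candidates myapp 5 default.svc.cluster.local svc.cluster.local cluster.local` lists myapp.default.svc.cluster.local first. That is the same FQDN queried with dig earlier.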

nodeport

vim myapp.yml---

apiVersion: v1

kind: Service

metadata:

labels:

app: myapp

name: myapp

spec:

ports:

- port: 80

protocol: TCP

targetPort: 80

selector:

app: myapp

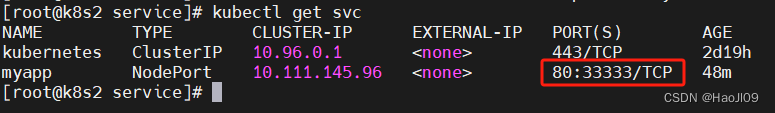

type: NodePortkubectl apply -f myapp.yml

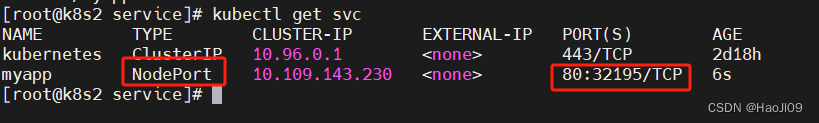

kubectl get svc

NodePort opens the same port on every cluster node. Each node port maps to exactly one Service:

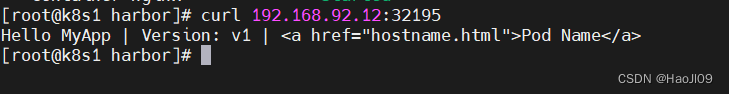

curl 192.168.92.12:32195

loadbalancer

vim myapp.yml---

apiVersion: v1

kind: Service

metadata:

labels:

app: myapp

name: myapp

spec:

ports:

- port: 80

protocol: TCP

targetPort: 80

selector:

app: myapp

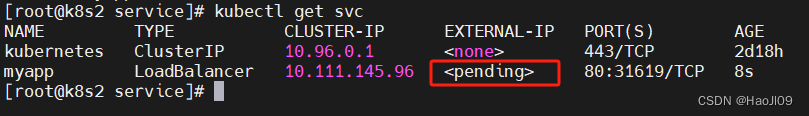

type: LoadBalancerkubectl apply -f myapp.yml默认无法分配外部访问IP

kubectl get svc

The LoadBalancer type is designed for cloud platforms. In a bare-metal environment, MetalLB must be installed to support it.

metallb

Official site: https://metallb.universe.tf/installation/

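When kube-proxy runs in IPVS mode, MetalLB requires strictARP to be enabled:

kubectl edit configmap -n kube-system kube-proxy

```yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
ipvs:
  strictARP: true
```

Restart the kube-proxy pods to apply the change:

kubectl -n kube-system get pod|grep kube-proxy | awk '{system("kubectl -n kube-system delete pod "$1"")}'

Download the manifest:

wget https://raw.githubusercontent.com/metallb/metallb/v0.13.12/config/manifests/metallb-native.yaml

Change the image addresses in the file to match the Harbor repository path: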

vim metallb-native.yaml

```yaml
...
image: metallb/speaker:v0.13.12
...
image: metallb/controller:v0.13.12
```

Push the images to Harbor:

docker pull quay.io/metallb/controller:v0.13.12

docker pull quay.io/metallb/speaker:v0.13.12

docker tag quay.io/metallb/controller:v0.13.12 reg.westos.org/metallb/controller:v0.13.12

docker tag quay.io/metallb/speaker:v0.13.12 reg.westos.org/metallb/speaker:v0.13.12

docker push reg.westos.org/metallb/controller:v0.13.12

docker push reg.westos.org/metallb/speaker:v0.13.12

Deploy MetalLB

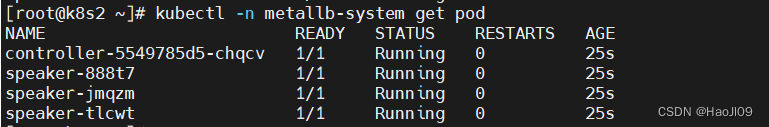

kubectl apply -f metallb-native.yaml

kubectl -n metallb-system get pod

Configure the address range to allocate from

vim config.yaml

```yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: first-pool
  namespace: metallb-system
spec:
  addresses:
  - 192.168.92.100-192.168.92.200
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: example
  namespace: metallb-system
spec:
  ipAddressPools:
  - first-pool
```

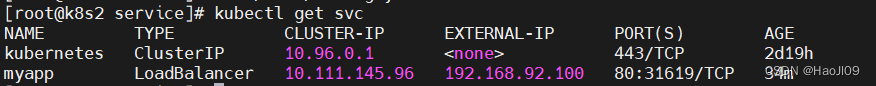

kubectl apply -f config.yaml

kubectl get svc

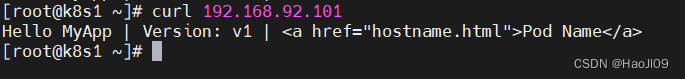

Access the service from outside the cluster through the assigned address:

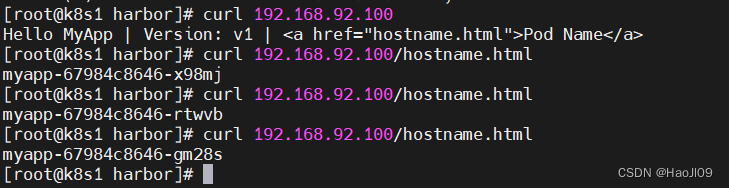

curl 192.168.92.100

curl 192.168.92.100/hostname.html

curl 192.168.92.100/hostname.html

curl 192.168.92.100/hostname.html

NodePort default port range

vim myapp.ymlapiVersion: v1

kind: Service

metadata:

labels:

app: myapp

name: myapp

spec:

ports:

- port: 80

protocol: TCP

targetPort: 80

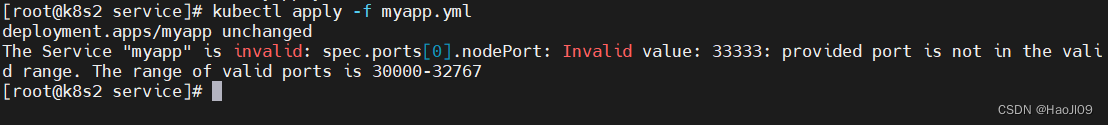

nodePort: 33333

selector:

app: myapp

type: NodePortkubectl apply -f myapp.yml

The default NodePort range is 30000-32767. A port outside that range is rejected with an error:

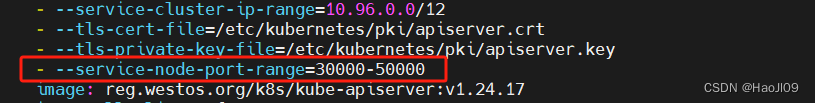

vim /etc/kubernetes/manifests/kube-apiserver.yaml

Add the following flag. The port range can be customized:

- --service-node-port-range=30000-50000

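After the change, kube-apiserver restarts automatically because it runs as a static pod. Wait until it is running normally again before operating the cluster.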
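The API server checks each `nodePort` against `--service-node-port-range`, which is why 33333 fails under the default range. The numeric part of that check can be sketched as a simple range test. The helper name is hypothetical, and it does not model other validation, such as two Services requesting the same port.

```bash
# nodeport_in_range PORT [MIN-MAX]
# Exit status 0 if PORT lies in the range (default 30000-32767).
nodeport_in_range() {
    local port=$1 range=${2:-30000-32767}
    local min=${range%-*} max=${range#*-}
    (( port >= min && port <= max ))
}
```

For example, `nodeport_in_range 33333` fails with the default range. `nodeport_in_range 33333 30000-50000` succeeds with the range configured above.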

externalname

vim externalname.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  type: ExternalName
  externalName: www.westos.org
```

kubectl apply -f externalname.yaml

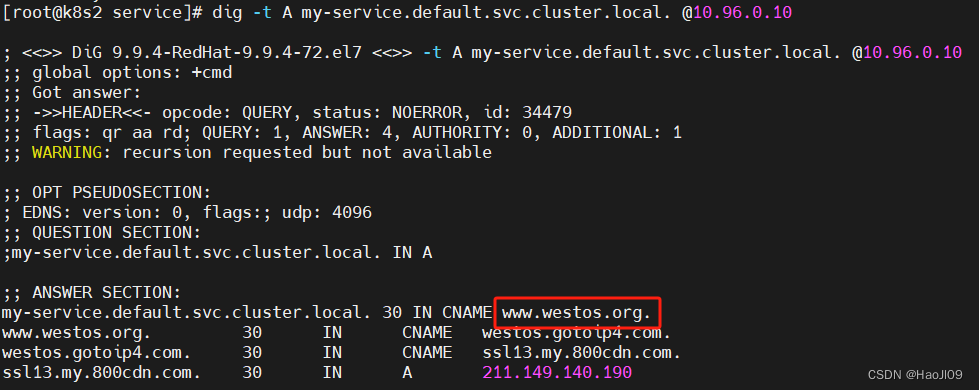

dig -t A my-service.default.svc.cluster.local. @10.96.0.10

ingress-nginx

Deploy

Official site: https://kubernetes.github.io/ingress-nginx/deploy/#bare-metal-clusters

Download the manifest:

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.8.2/deploy/static/provider/baremetal/deploy.yaml

Push the images to Harbor:

docker pull dyrnq/ingress-nginx-controller:v1.8.2

docker pull dyrnq/kube-webhook-certgen:v20230407

docker tag dyrnq/ingress-nginx-controller:v1.8.2 reg.westos.org/ingress-nginx/controller:v1.8.2

docker tag dyrnq/kube-webhook-certgen:v20230407 reg.westos.org/ingress-nginx/kube-webhook-certgen:v20230407

docker push reg.westos.org/ingress-nginx/controller:v1.8.2

docker push reg.westos.org/ingress-nginx/kube-webhook-certgen:v20230407

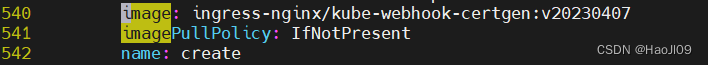

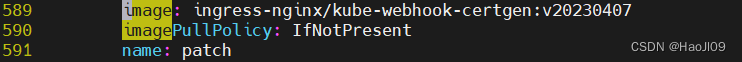

Change the 3 image paths:

vim deploy.yaml

```yaml
...
image: ingress-nginx/controller:v1.8.2
...
image: ingress-nginx/kube-webhook-certgen:v20230407
...
image: ingress-nginx/kube-webhook-certgen:v20230407
```

kubectl apply -f deploy.yaml

kubectl -n ingress-nginx get pod

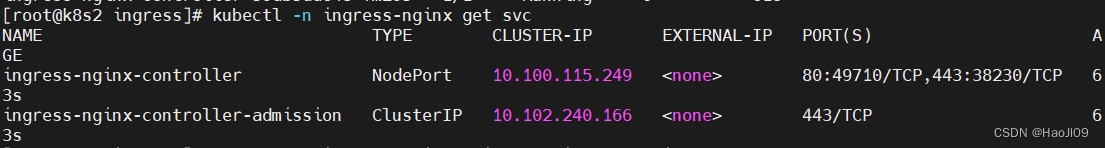

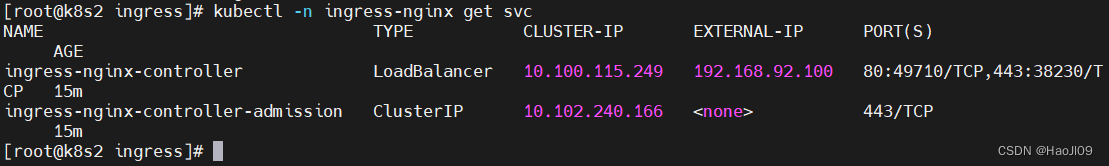

kubectl -n ingress-nginx get svc

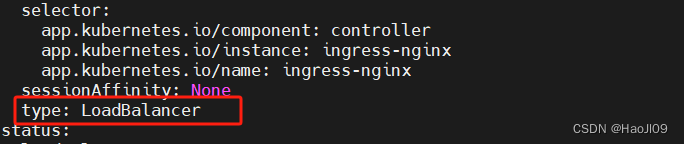

Change the controller Service to the LoadBalancer type so that MetalLB assigns it an external IP:

kubectl -n ingress-nginx edit svc ingress-nginx-controller

```yaml
type: LoadBalancer
```

kubectl -n ingress-nginx get svc

Create an Ingress rule

vim ingress.ymlapiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: minimal-ingress

spec:

ingressClassName: nginx

rules:

- http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: myapp

port:

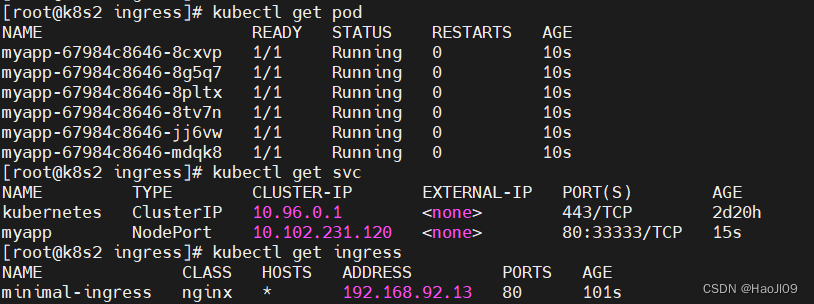

number: 80kubectl apply -f ingress.ymlkubectl get pod

kubectl get svc

kubectl get ingress

The Ingress must be in the same namespace as the Services it routes to.

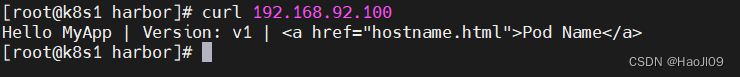

Test

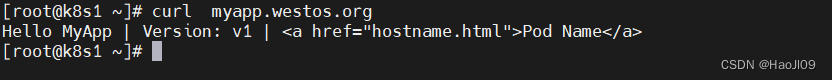

curl 192.168.92.100

Clean up resources

kubectl delete -f myapp.yml

kubectl delete -f ingress.yml

Path-based access

Docs: https://v1-25.docs.kubernetes.io/zh-cn/docs/concepts/services-networking/ingress/

Create the Services

vim myapp-v1.ymlapiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: myapp-v1

name: myapp-v1

spec:

replicas: 3

selector:

matchLabels:

app: myapp-v1

template:

metadata:

labels:

app: myapp-v1

spec:

containers:

- image: myapp:v1

name: myapp-v1

---

apiVersion: v1

kind: Service

metadata:

labels:

app: myapp-v1

name: myapp-v1

spec:

ports:

- port: 80

protocol: TCP

targetPort: 80

selector:

app: myapp-v1

type: ClusterIPkubectl apply -f myapp-v1.ymlvim myapp-v2.ymlapiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: myapp-v2

name: myapp-v2

spec:

replicas: 3

selector:

matchLabels:

app: myapp-v2

template:

metadata:

labels:

app: myapp-v2

spec:

containers:

- image: myapp:v2

name: myapp-v2

---

apiVersion: v1

kind: Service

metadata:

labels:

app: myapp-v2

name: myapp-v2

spec:

ports:

- port: 80

protocol: TCP

targetPort: 80

selector:

app: myapp-v2

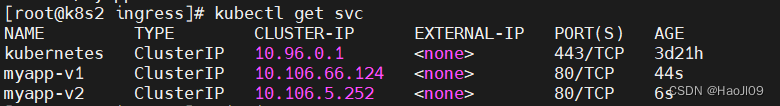

type: ClusterIPkubectl apply -f myapp-v2.ymlkubectl get svc

Create the Ingress

vim ingress1.yml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: minimal-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: nginx
  rules:
  - host: myapp.westos.org
    http:
      paths:
      - path: /v1
        pathType: Prefix
        backend:
          service:
            name: myapp-v1
            port:
              number: 80
      - path: /v2
        pathType: Prefix
        backend:
          service:
            name: myapp-v2
            port:
              number: 80
```

kubectl apply -f ingress1.yml

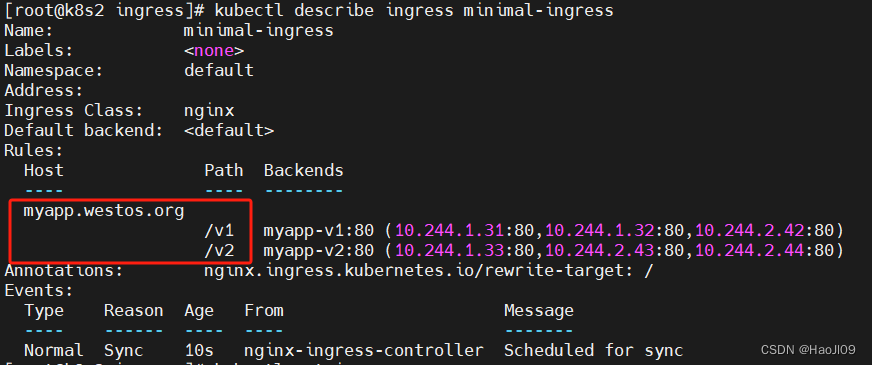

kubectl describe ingress minimal-ingress

Test

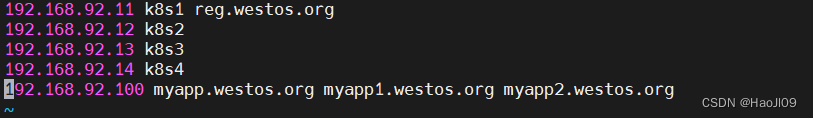

vim /etc/hosts...

192.168.92.100 myapp.westos.org myapp1.westos.org myapp2.westos.org

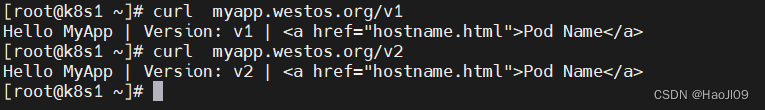

curl myapp.westos.org/v1

curl myapp.westos.org/v2
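The Kubernetes Ingress spec defines `pathType: Prefix` as matching on `/`-separated path elements, with the longest matching path winning. The sketch below models that rule for the /v1 and /v2 rules above. The function names and the `default-backend` fallback label are hypothetical. ingress-nginx switches a host to regex location matching when rewrite annotations are present, so its behavior on edge cases such as /v1abc may differ from this model.

```bash
# prefix_matches RULE_PATH REQUEST_PATH
# True if REQUEST_PATH matches RULE_PATH under pathType: Prefix semantics.
prefix_matches() {
    local rule=${1%/} req=$2
    # "/" (empty after trimming the trailing slash) matches every request
    [[ -z $rule ]] && return 0
    # Match whole path elements only: /v1 matches /v1 and /v1/x, not /v1x
    [[ $req == "$rule" || $req == "$rule"/* ]]
}

# route REQUEST_PATH PATH=SERVICE...
# Prints the service of the longest matching rule.
route() {
    local req=$1 best="default-backend" best_len=-1 rule path svc
    shift
    for rule in "$@"; do
        path=${rule%%=*}
        svc=${rule#*=}
        if prefix_matches "$path" "$req" && (( ${#path} > best_len )); then
            best=$svc
            best_len=${#path}
        fi
    done
    echo "$best"
}
```

For example, `route /v1/hostname.html /v1=myapp-v1 /v2=myapp-v2` prints myapp-v1.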

Clean up

kubectl delete -f ingress1.yml

Host-based (domain-name) access

vim ingress2.yml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: minimal-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: myapp1.westos.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: myapp-v1
            port:
              number: 80
  - host: myapp2.westos.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: myapp-v2
            port:
              number: 80
```

kubectl apply -f ingress2.yml

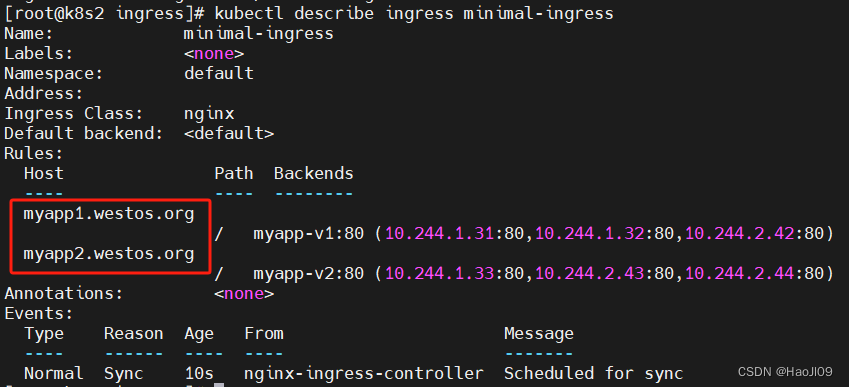

kubectl describe ingress minimal-ingress

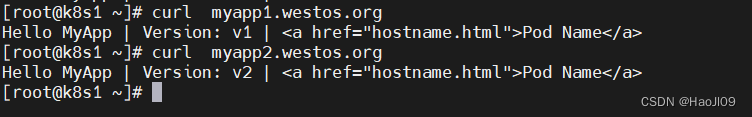

Test

curl myapp1.westos.org

curl myapp2.westos.org

Clean up

kubectl delete -f ingress2.yml

TLS encryption

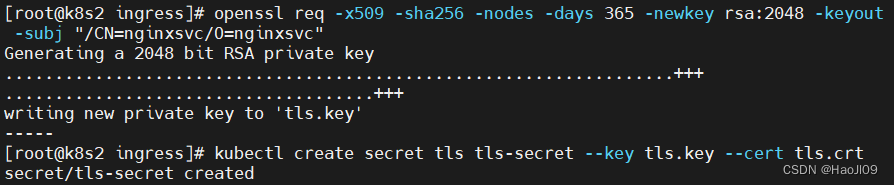

Create a certificate

openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=nginxsvc/O=nginxsvc"

kubectl create secret tls tls-secret --key tls.key --cert tls.crt

vim ingress3.ymlapiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ingress-tls

spec:

tls:

- hosts:

- myapp.westos.org

secretName: tls-secret

ingressClassName: nginx

rules:

- host: myapp.westos.org

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: myapp-v1

port:

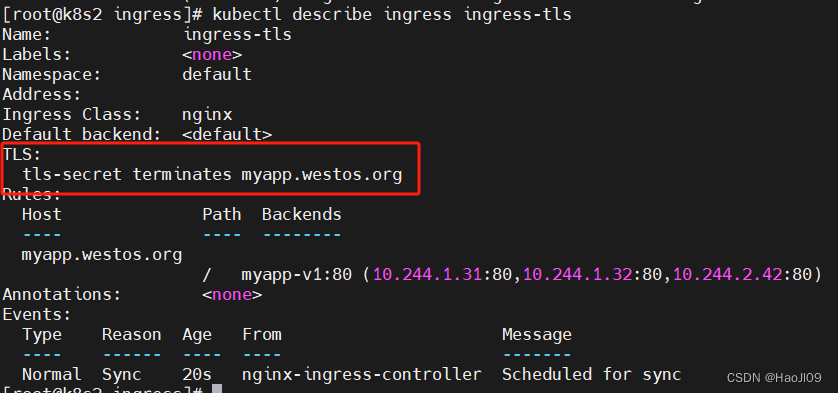

number: 80kubectl apply -f ingress3.yml

kubectl describe ingress ingress-tls

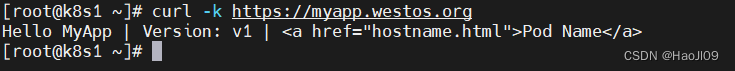

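Test. The certificate is self-signed and its CN (nginxsvc) does not match myapp.westos.org, so curl needs -k to skip certificate verification: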

curl -k https://myapp.westos.org

auth认证

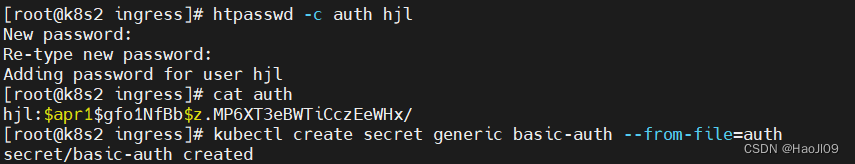

创建认证文件

yum install -y httpd-tools

htpasswd -c auth hjl

cat auth

kubectl create secret generic basic-auth --from-file=auth

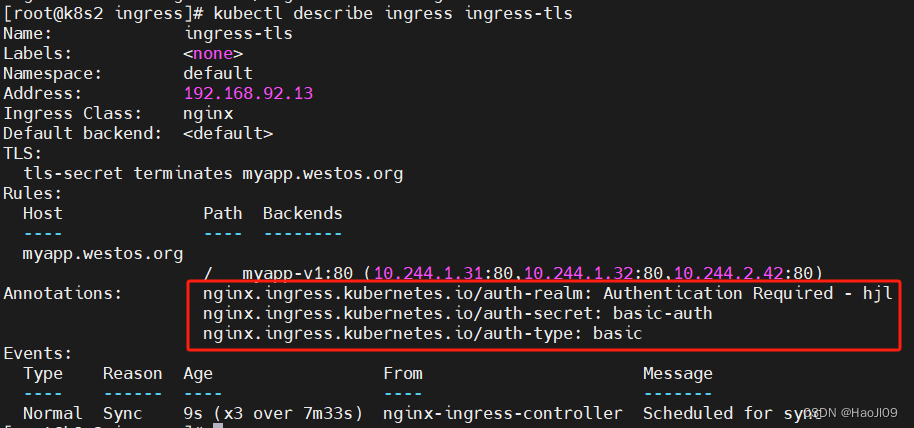

vim ingress3.yml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-tls
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - hjl'
spec:
  tls:
  - hosts:
    - myapp.westos.org
    secretName: tls-secret
  ingressClassName: nginx
  rules:
  - host: myapp.westos.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: myapp-v1
            port:
              number: 80
```

kubectl apply -f ingress3.yml

kubectl describe ingress ingress-tls

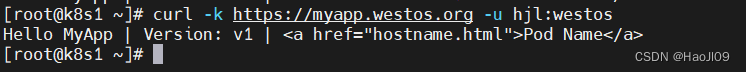

Test

curl -k https://myapp.westos.org -u hjl:westos
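`curl -u` sends an HTTP Basic `Authorization` header containing base64("user:password"). ingress-nginx checks that header against the htpasswd data in the basic-auth secret. The helper below builds the same header by hand, which can be useful with clients that lack a `-u` option. Its name is hypothetical. Base64 is an encoding, not encryption, which is one reason to combine basic auth with the TLS setup above.

```bash
# basic_auth_header USER PASSWORD
# Prints the HTTP header that `curl -u USER:PASSWORD` sends.
basic_auth_header() {
    # Basic auth = base64("user:password"); tr strips base64's line breaks
    printf 'Authorization: Basic %s\n' "$(printf '%s:%s' "$1" "$2" | base64 | tr -d '\n')"
}
```

Usage: `curl -k -H "$(basic_auth_header hjl westos)" https://myapp.westos.org` should behave like the `-u hjl:westos` request.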

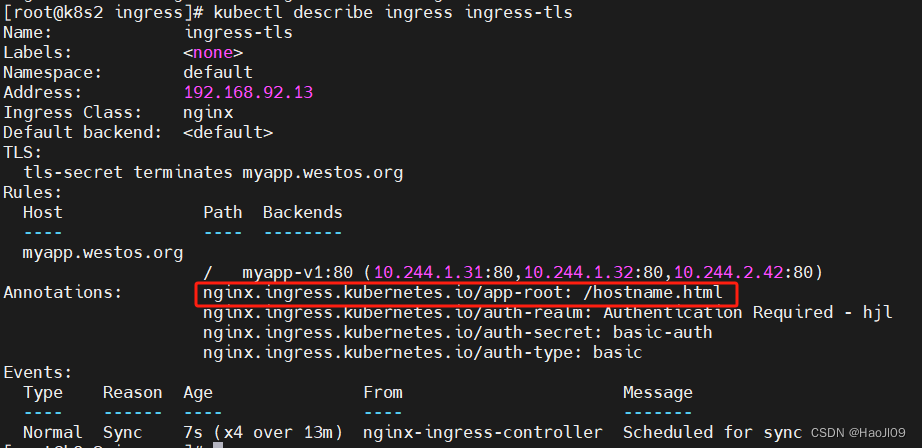

Rewrite and redirect

Example 1:

vim ingress3.yml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-tls
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - hjl'
    nginx.ingress.kubernetes.io/app-root: /hostname.html
spec:
  tls:
  - hosts:
    - myapp.westos.org
    secretName: tls-secret
  ingressClassName: nginx
  rules:
  - host: myapp.westos.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: myapp-v1
            port:
              number: 80
```

kubectl apply -f ingress3.yml

kubectl describe ingress ingress-tls

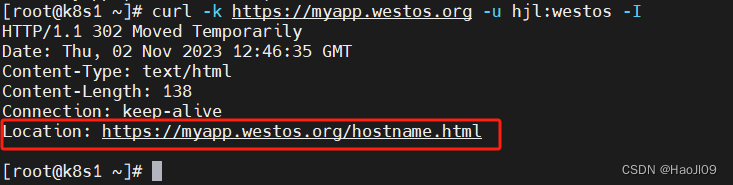

Test

curl -k https://myapp.westos.org -u hjl:westos -I
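With `nginx.ingress.kubernetes.io/app-root`, a request for `/` is answered with a redirect to /hostname.html instead of being proxied. That is why the test uses `curl -I` to look only at the response headers. To extract the redirect target from those headers in a script, a small filter like the hypothetical helper below is enough.

```bash
# redirect_location
# Reads HTTP response headers (e.g. from `curl -I`) on stdin and prints the
# Location header value; carriage returns from the HTTP line endings are removed.
redirect_location() {
    tr -d '\r' | awk 'tolower($1) == "location:" { print $2 }'
}
```

Usage: `curl -k -s -I -u hjl:westos https://myapp.westos.org | redirect_location` should print a URL ending in /hostname.html while app-root is in effect.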

Example 2:

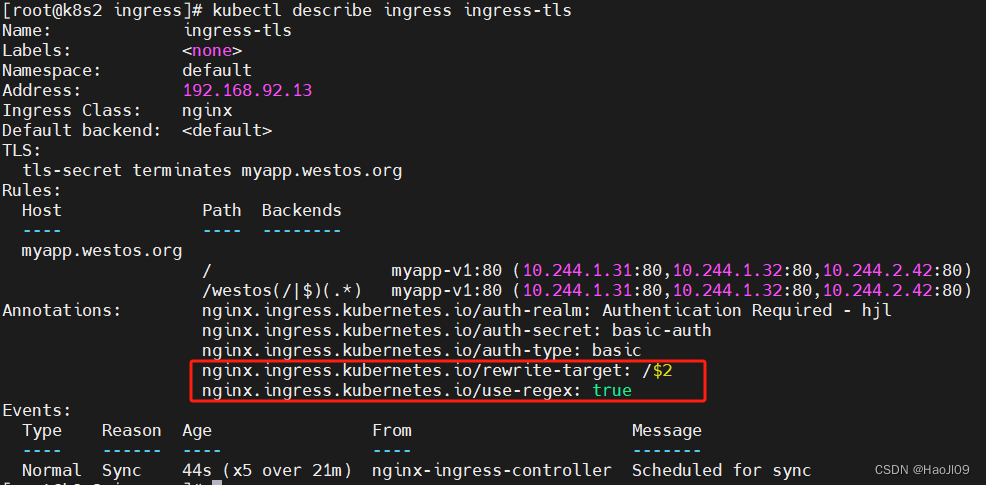

vim ingress3.yml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-tls
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - hjl'
    #nginx.ingress.kubernetes.io/app-root: /hostname.html
    nginx.ingress.kubernetes.io/use-regex: "true"
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  tls:
  - hosts:
    - myapp.westos.org
    secretName: tls-secret
  ingressClassName: nginx
  rules:
  - host: myapp.westos.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: myapp-v1
            port:
              number: 80
      - path: /westos(/|$)(.*)
        pathType: ImplementationSpecific
        backend:
          service:
            name: myapp-v1
            port:
              number: 80
```

kubectl apply -f ingress3.yml

kubectl describe ingress ingress-tls

Test

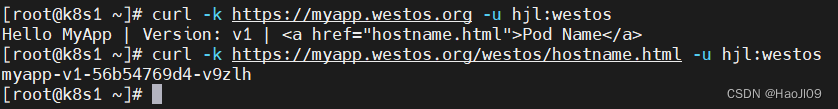

curl -k https://myapp.westos.org -u hjl:westos

curl -k https://myapp.westos.org/westos/hostname.html -u hjl:westos
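With use-regex enabled, the path `/westos(/|$)(.*)` captures everything after /westos in group 2. `rewrite-target: /$2` then replaces the request URI with `/` followed by that capture before the request is sent to myapp-v1. The hypothetical helper below reproduces that substitution with bash's `=~` operator, so you can check a path mapping without a cluster. It anchors the regex at the start of the path and matches case-sensitively, whereas ingress-nginx matches case-insensitively. Treat it as an approximation.

```bash
# apply_rewrite REGEX TARGET PATH
# Prints the rewritten path if PATH matches REGEX (anchored at the start),
# substituting $1..$9 in TARGET with the capture groups; otherwise prints
# PATH unchanged and returns 1.
apply_rewrite() {
    local re="^$1" target=$2 path=$3 i ph out
    if [[ ! $path =~ $re ]]; then
        echo "$path"
        return 1
    fi
    out=$target
    # Replace higher-numbered placeholders first
    for (( i=${#BASH_REMATCH[@]}-1; i>=1; i-- )); do
        ph="\$$i"
        out=${out//"$ph"/"${BASH_REMATCH[i]}"}
    done
    echo "$out"
}
```

For example, `apply_rewrite '/westos(/|$)(.*)' '/$2' /westos/hostname.html` prints /hostname.html, which is the path myapp-v1 actually receives in the second curl above.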

Clean up

kubectl delete -f ingress3.yml

Canary release

Header-based canary (gray) release

vim ingress4.ymlapiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: myapp-v1-ingress

spec:

ingressClassName: nginx

rules:

- host: myapp.westos.org

http:

paths:

- pathType: Prefix

path: /

backend:

service:

name: myapp-v1

port:

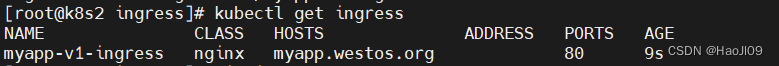

number: 80kubectl apply -f ingress4.yml

kubectl get ingress

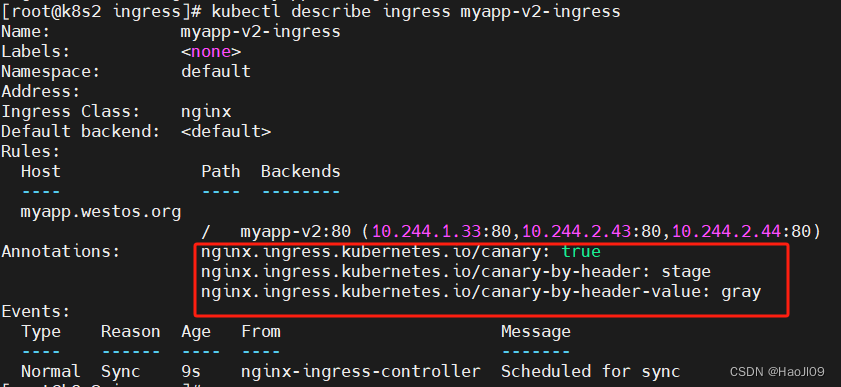

vim ingress5.yml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: stage
    nginx.ingress.kubernetes.io/canary-by-header-value: gray
  name: myapp-v2-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: myapp.westos.org
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: myapp-v2
            port:
              number: 80
```

kubectl apply -f ingress5.yml

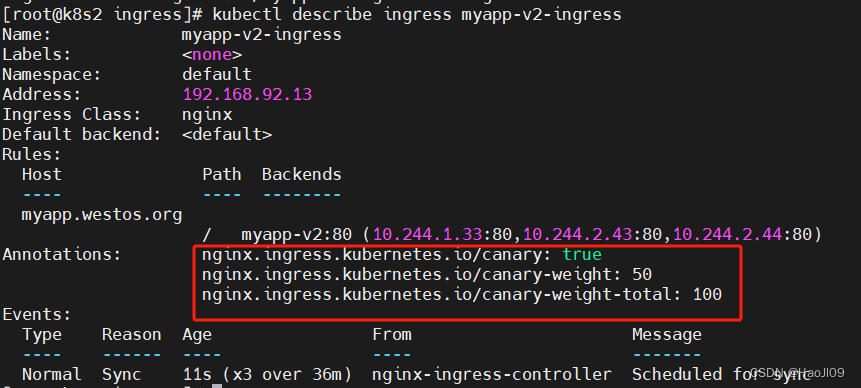

kubectl describe ingress myapp-v2-ingress

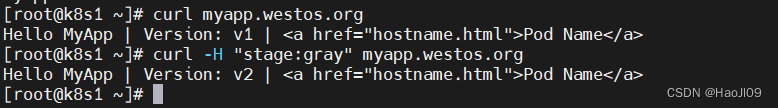

Test

curl myapp.westos.org

curl -H "stage: gray" myapp.westos.org
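This is how the header rule decides, per the ingress-nginx canary annotations. When `canary-by-header-value` is set, only a request whose `stage` header equals that value goes to the canary. Without it, the special values `always` and `never` force or forbid the canary, and any other value is ignored in favor of the remaining canary rules. The hypothetical function below models that decision for the Services in this section. It assumes no weight rule is configured, so an ignored header falls back to myapp-v1.

```bash
# canary_backend_by_header HEADER_VALUE [EXPECTED_VALUE]
# Prints the Service a request would reach under canary-by-header.
canary_backend_by_header() {
    local value=$1 expected=${2:-}
    if [[ -n $expected ]]; then
        # canary-by-header-value set: only an exact match goes to the canary
        if [[ $value == "$expected" ]]; then echo myapp-v2; else echo myapp-v1; fi
        return
    fi
    case $value in
        always) echo myapp-v2 ;;   # forced to the canary
        *)      echo myapp-v1 ;;   # "never" or any other value: main Ingress here
    esac
}
```

For example, `canary_backend_by_header gray gray` corresponds to `curl -H "stage: gray"` and prints myapp-v2.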

Weight-based canary release

vim ingress5.yml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    #nginx.ingress.kubernetes.io/canary-by-header: stage
    #nginx.ingress.kubernetes.io/canary-by-header-value: gray
    nginx.ingress.kubernetes.io/canary-weight: "50"
    nginx.ingress.kubernetes.io/canary-weight-total: "100"
  name: myapp-v2-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: myapp.westos.org
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: myapp-v2
            port:
              number: 80
```

kubectl apply -f ingress5.yml

kubectl describe ingress myapp-v2-ingress

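Test

vim ingress.sh

```bash
#!/bin/bash
# Send 100 requests and count how many were answered by v1 and by v2
v1=0
v2=0
for (( i=0; i<100; i++ ))
do
    # grep -c prints 1 if the response mentions v1, otherwise 0
    response=$(curl -s myapp.westos.org | grep -c v1)
    v1=$(( v1 + response ))
    v2=$(( v2 + 1 - response ))
done
echo "v1:$v1, v2:$v2"
```

The script uses bash-only syntax, so run it with bash:

bash ingress.sh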
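`canary-weight: "50"` with `canary-weight-total: "100"` sends roughly half of the requests to myapp-v2. The script above should therefore print counts near, but rarely exactly, v1:50, v2:50. The sketch below simulates that spread locally with bash's `$RANDOM`. It illustrates weighted random selection and is not the controller's actual algorithm. The function name is hypothetical.

```bash
# simulate_canary WEIGHT [TOTAL] [REQUESTS]
# Sends each simulated request to v2 with probability WEIGHT/TOTAL.
simulate_canary() {
    local weight=$1 total=${2:-100} n=${3:-100} v1=0 v2=0 i
    for (( i=0; i<n; i++ )); do
        # Draw a number in [0, TOTAL) and pick the canary if it is below WEIGHT
        if (( RANDOM % total < weight )); then
            v2=$(( v2 + 1 ))
        else
            v1=$(( v1 + 1 ))
        fi
    done
    echo "v1:$v1, v2:$v2"
}
```

Running `simulate_canary 50` a few times shows how much the split varies between runs. `simulate_canary 0` and `simulate_canary 100` show the two extremes.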

Clean up

kubectl delete -f ingress5.yml

Business-domain split

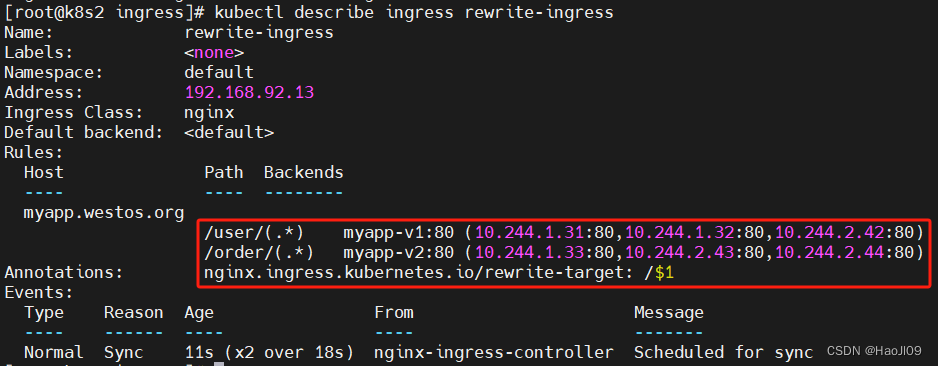

vim ingress6.yml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$1
  name: rewrite-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: myapp.westos.org
    http:
      paths:
      - path: /user/(.*)
        pathType: Prefix
        backend:
          service:
            name: myapp-v1
            port:
              number: 80
      - path: /order/(.*)
        pathType: Prefix
        backend:
          service:
            name: myapp-v2
            port:
              number: 80
```

kubectl apply -f ingress6.yml

kubectl describe ingress rewrite-ingress

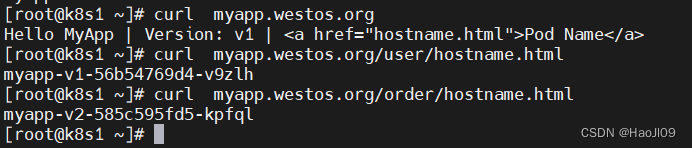

Test

curl myapp.westos.org

curl myapp.westos.org/user/hostname.html

curl myapp.westos.org/order/hostname.html

Clean up

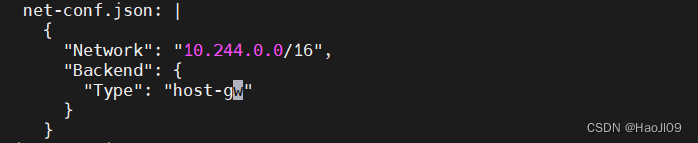

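kubectl delete -f ingress6.yml

flannel network plugin

Use host-gw mode

kubectl -n kube-flannel edit cm kube-flannel-cfg

In the net-conf.json key, change the Backend "Type" (vxlan by default) to "host-gw".

Restart the pods for the change to take effect:

kubectl -n kube-flannel delete pod --all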

Calico network plugin

Deploy

Remove the flannel plugin:

kubectl delete -f kube-flannel.yml

Delete the flannel configuration file on every node to avoid conflicts:

[root@k8s2 ~]# rm -f /etc/cni/net.d/10-flannel.conflist

[root@k8s3 ~]# rm -f /etc/cni/net.d/10-flannel.conflist

[root@k8s4 ~]# rm -f /etc/cni/net.d/10-flannel.conflist

Download the manifest:

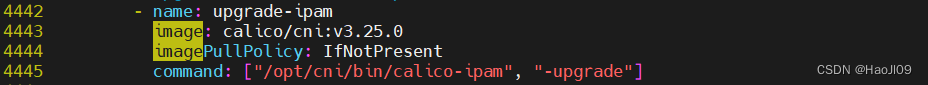

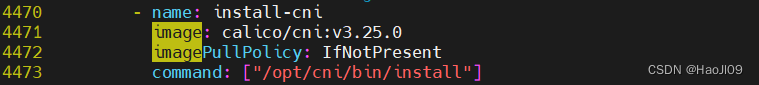

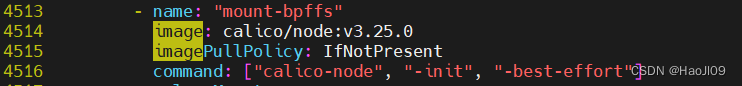

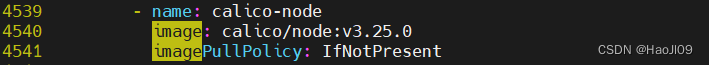

wget https://raw.githubusercontent.com/projectcalico/calico/v3.25.0/manifests/calico.yaml

Change the image paths:

vim calico.yaml

Pull the images and push them to Harbor:

docker push reg.westos.org/calico/kube-controllers:v3.25.0

docker push reg.westos.org/calico/cni:v3.25.0

docker push reg.westos.org/calico/node:v3.25.0

Deploy Calico

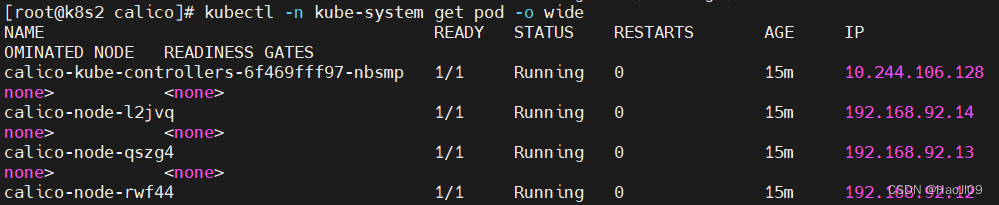

kubectl apply -f calico.yaml

kubectl -n kube-system get pod -o wide

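Reboot all cluster nodes so that the pods are assigned new IP addresses.

Wait until the cluster is back to normal, then test the network:

curl myapp.westos.org

Network policy

Restrict pod traffic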

vim networkpolicy.yamlapiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: test-network-policy

namespace: default

spec:

podSelector:

matchLabels:

app: myapp-v1

policyTypes:

- Ingress

ingress:

- from:

- podSelector:

matchLabels:

role: test

ports:

- protocol: TCP

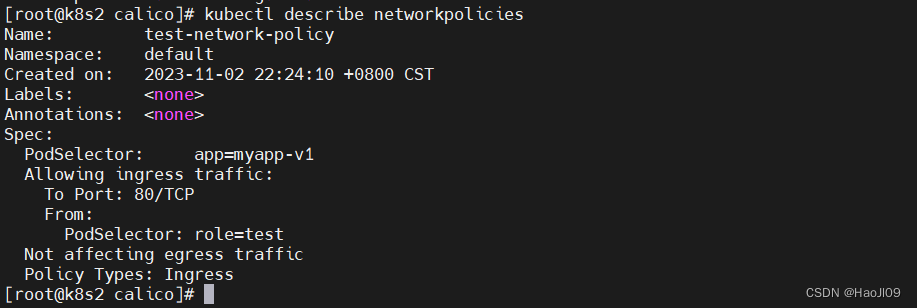

port: 80kubectl apply -f networkpolicy.yaml

kubectl describe networkpolicies

The policy applies to pods labeled app=myapp-v1:

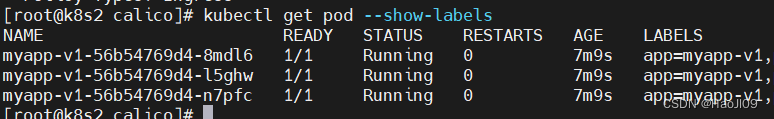

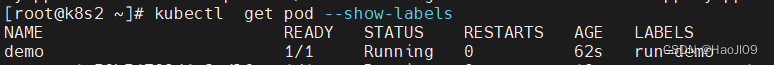

kubectl get pod --show-labels

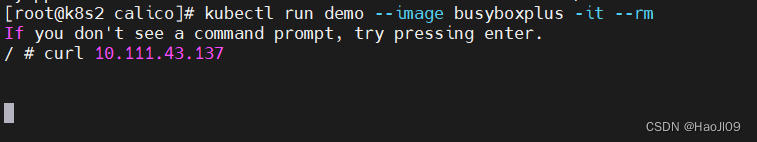
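A `matchLabels` selector selects a pod only if every key=value pair in the selector appears among the pod's labels. Extra pod labels, such as the pod-template-hash a Deployment adds, do not matter, and an empty selector selects every pod. The hypothetical helper below checks a selector against a label string in the comma-separated format printed by `kubectl get pod --show-labels`.

```bash
# labels_match SELECTOR POD_LABELS
# Both arguments are comma-separated key=value lists.
# Exit status 0 if every selector pair is present in POD_LABELS.
labels_match() {
    local selector=$1 labels=",$2," pair
    local IFS=,
    for pair in $selector; do
        # Surrounding commas prevent app=myapp from matching app=myapp-v1
        [[ $labels == *",$pair,"* ]] || return 1
    done
    return 0
}
```

For example, `labels_match app=myapp-v1 "app=myapp-v1,pod-template-hash=..."` succeeds for the myapp-v1 pods listed above, and the policy's `role: test` selector fails for the demo pod until that label is added.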

At this point, the Service cannot be reached from a pod that lacks the role=test label:

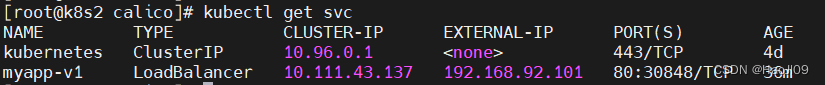

kubectl get svc

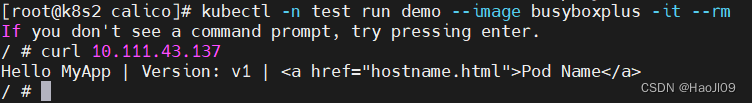

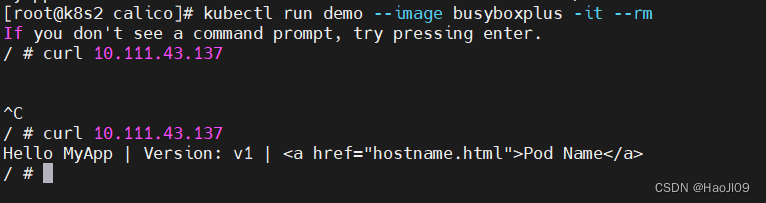

kubectl run demo --image busyboxplus -it --rm

/ # curl 10.111.43.137

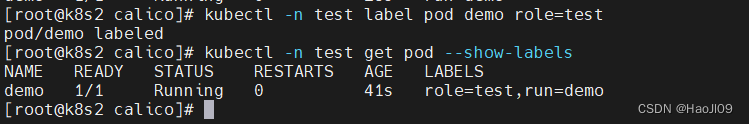

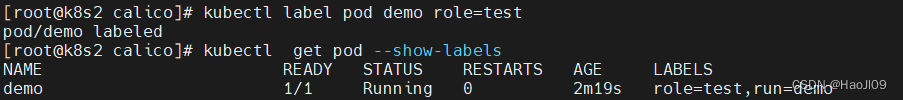

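After the test pod is given the required label, it can access the Service. Run the label commands from another terminal while the demo pod's shell is still open: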

kubectl get pod --show-labels

kubectl label pod demo role=test

kubectl get pod --show-labels

/ # curl 10.111.43.137

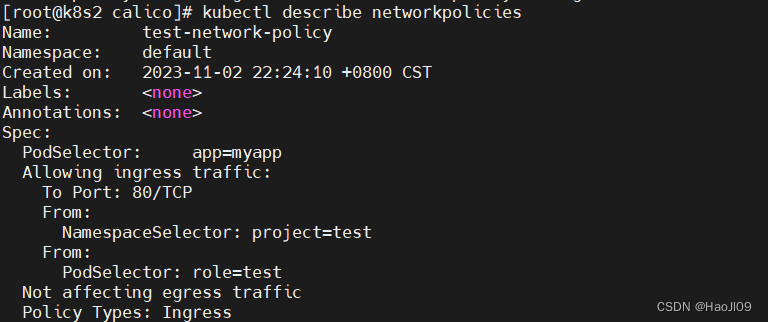

Restrict namespace traffic

vim networkpolicy.yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: myapp
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          project: test
    - podSelector:
        matchLabels:
          role: test
    ports:
    - protocol: TCP
      port: 80
```

kubectl apply -f networkpolicy.yaml

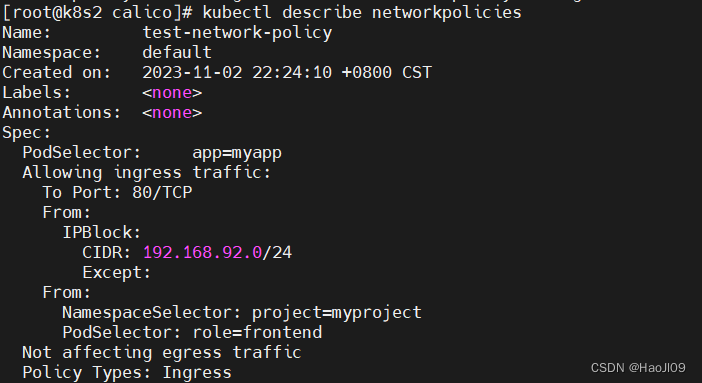

kubectl describe networkpolicies

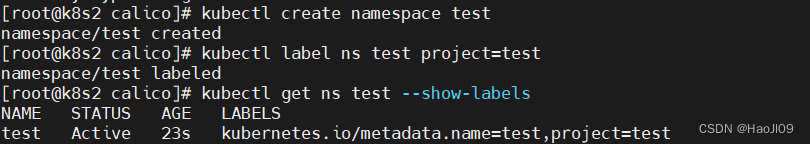

kubectl create namespace test

Add the required label to the namespace:

kubectl label ns test project=test

kubectl get ns test --show-labels

kubectl -n test run demo --image busyboxplus -it --rm

/ # curl 10.111.43.137

Restrict by both namespace and pod

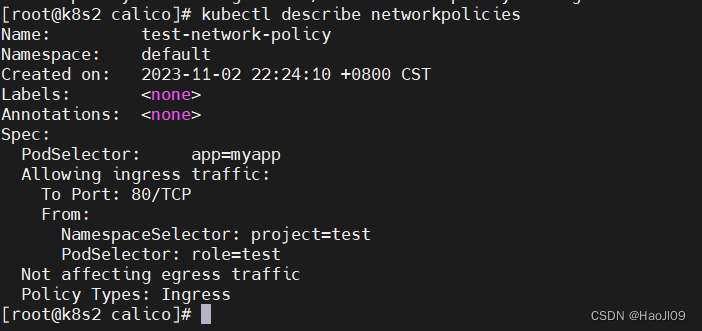

vim networkpolicy.yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: myapp
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          project: test
      podSelector:
        matchLabels:
          role: test
    ports:
    - protocol: TCP
      port: 80
```

kubectl apply -f networkpolicy.yaml
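The only difference between this policy and the previous one is indentation. Here `podSelector` belongs to the same `from` entry as `namespaceSelector` (no leading `-`), so both must match (AND). Previously they were two separate entries, so either one was enough (OR), and a bare `podSelector` only matched pods in the policy's own namespace (default). The two hypothetical helpers below model that rule for the labels used in this section. Each takes the source namespace's label, the source pod's label and the source namespace name.

```bash
# Previous policy: two "from" entries, allowed if EITHER one matches.
allowed_or() {
    local ns_label=$1 pod_label=$2 src_ns=$3
    # namespaceSelector entry: any pod in a namespace labeled project=test
    [[ $ns_label == "project=test" ]] && return 0
    # podSelector-only entry: pods labeled role=test in the policy's namespace
    [[ $pod_label == "role=test" && $src_ns == "default" ]]
}

# This policy: one "from" entry with both selectors, allowed only if BOTH match.
allowed_and() {
    local ns_label=$1 pod_label=$2
    [[ $ns_label == "project=test" && $pod_label == "role=test" ]]
}
```

For example, an unlabeled demo pod in the test namespace passes `allowed_or project=test "" test` but fails `allowed_and project=test "" test`. That matches the need to label the pod in the steps below.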

kubectl describe networkpolicies

Pods in the test namespace can now access the Service only after they are given the required label:

kubectl -n test label pod demo role=test

kubectl -n test get pod --show-labels

Restrict traffic from outside the cluster

vim networkpolicy.yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: myapp
  policyTypes:
  - Ingress
  ingress:
  - from:
    - ipBlock:
        cidr: 192.168.56.0/24
    - namespaceSelector:
        matchLabels:
          project: myproject
      podSelector:
        matchLabels:
          role: frontend
    ports:
    - protocol: TCP
      port: 80
```

kubectl apply -f networkpolicy.yaml

kubectl describe networkpolicies

kubectl get svc

curl 192.168.92.101
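`ipBlock` matches on the packet's source address. The hypothetical helpers below check whether an IPv4 address falls inside a CIDR block such as 192.168.56.0/24, using 32-bit arithmetic. Traffic arriving through a NodePort or LoadBalancer Service may be source-NATed by kube-proxy (with the default `externalTrafficPolicy: Cluster`). In that case the policy sees a node address rather than the real client IP.

```bash
# ip_to_int A.B.C.D -> prints the address as a 32-bit integer
ip_to_int() {
    local IFS=.
    local a b c d
    read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr IP NETWORK/BITS -> exit status 0 if IP lies inside the block
in_cidr() {
    local ip=$1 net=${2%/*} bits=${2#*/}
    # Netmask with the top BITS bits set, e.g. /24 -> 0xFFFFFF00
    local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

For example, `in_cidr <client-ip> 192.168.56.0/24` tells you whether a given client address is covered by the ipBlock rule above.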