Conclusion: hostPath, as a volume type, can be consumed through a PersistentVolume (PV) and a PersistentVolumeClaim (PVC).

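Background: I have recently been looking for an operations management role in cloud computing. On Friday evening I had a phone interview for a Xi'an-based position at a top-tier company. The interviewer seemed reluctant to be there: I waited in the online meeting for about 17 minutes before he finally showed up, and his first question was how each component of vendor H's compute product works internally. (As my résumé already states, I have only deployed and used vendor H's products. I have not seen the internals of those components described in any public technical material, because every vendor claims to have deeply re-engineered the open-source base and declines to disclose details on grounds of trade secrecy.) When we got to how Kubernetes implements cloud-native storage, he cut me off arrogantly: "hostPath cannot be claimed dynamically through a PVC." Yesterday I went through the Kubernetes documentation, books on the subject, and related blog posts. None of them states explicitly that hostPath cannot be claimed through a PVC; the most common advice is simply that hostPath is not recommended as storage in production.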

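Fine. Practice is the sole criterion for testing truth, so let's go to the lab and verify whether hostPath can serve as persistent storage for an application on Kubernetes.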

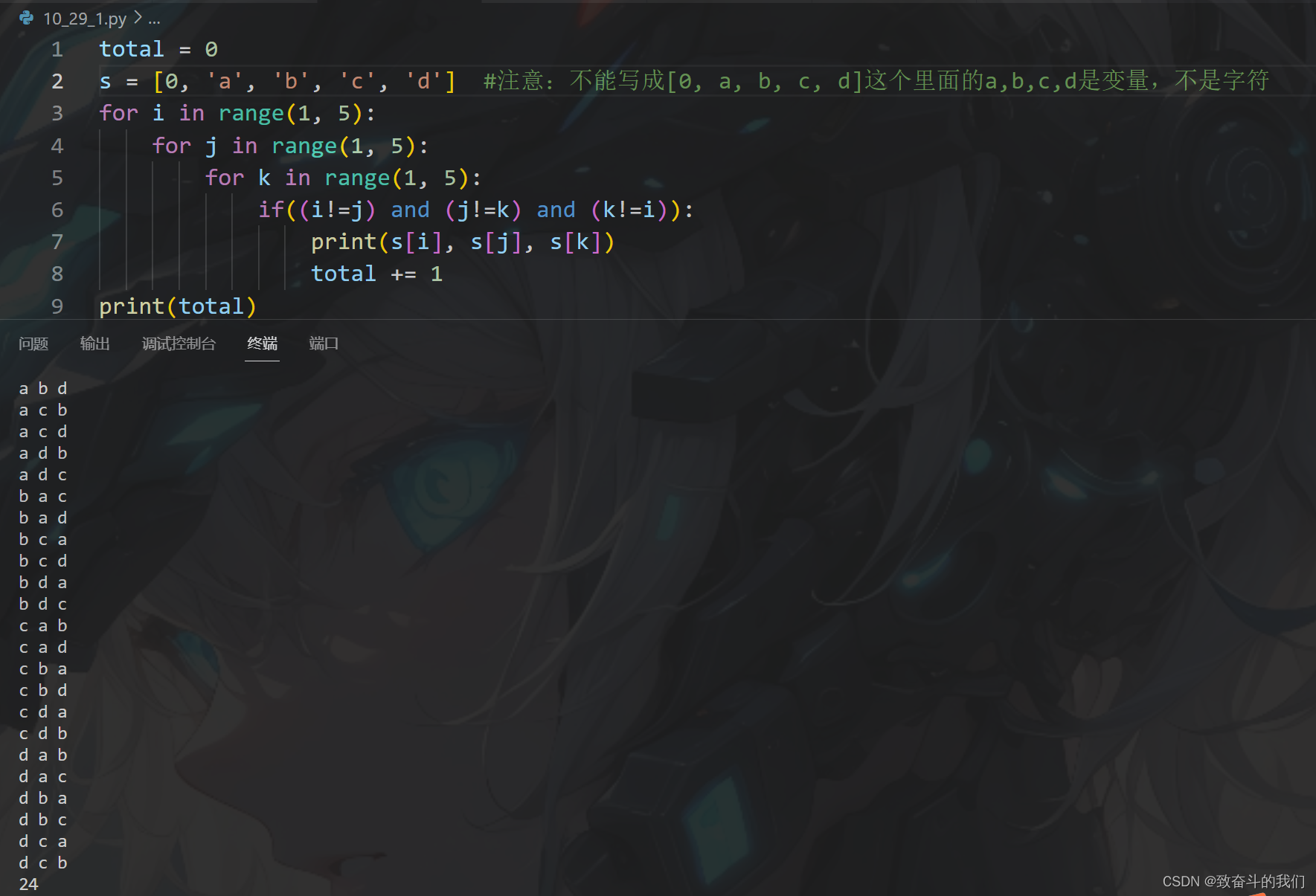

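Kubernetes lab environment:

A minimal cluster of 1 master and 2 slave (worker) nodes. The host OS is CentOS 7 (2207 build), and the cluster runs Kubernetes v1.28.0 on containerd.

Each host has 2 CPU cores, 2 GB of RAM, and a 20 GB disk.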

The procedure is as follows:

1 Pull the halo image into the node's local image store with crictl pull;

2 Write the YAML manifests for the application:

halo-deployment.yaml

halo-deployment.yaml.original

halo-namespace.yaml

halo-network-service.yaml

halo-persistent-volume-claim.yaml

halo-persistent-volume.yaml

halo-deployment.yaml.original deploys halo without a PV; its only purpose is to verify that halo runs on containerd.

The manifests used in the test are shown below.

    slax@slax:~/k8s部署halo博客$ cat halo-namespace.yaml
    apiVersion: v1
    kind: Namespace
    metadata:
      name: halo
      labels:
        name: halo

    slax@slax:~/k8s部署halo博客$ cat halo-persistent-volume.yaml
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: root-halo
      namespace: halo   # PersistentVolumes are cluster-scoped, so this field has no effect
    spec:
      capacity:
        storage: 2Gi
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Recycle   # note: the Recycle policy is deprecated upstream
      hostPath:
        path: /home/centos7-00/k8shostpath/halo

    slax@slax:~/k8s部署halo博客$ cat halo-persistent-volume-claim.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: root-halo
      namespace: halo
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 2Gi

    slax@slax:~/k8s部署halo博客$ cat halo-deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: halo
      name: halo-deployment20231028
      namespace: halo
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: halo
      template:
        metadata:
          labels:
            app: halo
        spec:
          containers:
          - image: docker.io/halohub/halo
            ports:
            - containerPort: 80        # declared port; the Halo log further down shows Jetty listening on 8090
            name: halo
            volumeMounts:
            - name: root-halo
              mountPath: /root/.halo2  # the Halo 1.4.16 log further down reports its data under /root/.halo
          volumes:
          - name: root-halo
            persistentVolumeClaim:
              claimName: root-halo

    slax@slax:~/k8s部署halo博客$ cat halo-network-service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: halo
      name: halo-deployment20231028
      namespace: halo
    spec:
      ports:
      - port: 8090
        name: halo-network-service80
        protocol: TCP
        targetPort: 8090
        nodePort: 30009
      selector:
        app: halo
      type: NodePort

3 Create the namespace

4 Create the PV

5 Create the PVC

6 Create the Deployment

7 Create the Service
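Steps 3 to 7 can be sketched as a single shell function that creates the manifests in dependency order (namespace, then storage, then the workload and its Service). This is only a sketch: the function name `apply_halo_manifests` is hypothetical, and the default directory `Working` is taken from the session in this post.

```shell
# Create the five manifests in dependency order.
# $1: directory holding the YAML files (defaults to Working, as in this post).
apply_halo_manifests() {
    local dir="${1:-Working}" f
    for f in halo-namespace.yaml \
             halo-persistent-volume.yaml \
             halo-persistent-volume-claim.yaml \
             halo-deployment.yaml \
             halo-network-service.yaml; do
        # Stop at the first failure so later objects are not created
        # against a missing namespace or claim.
        kubectl create -f "$dir/$f" || return 1
    done
}
```

Calling `apply_halo_manifests Working` on the master node should reproduce the `kubectl create -f` calls from the session, one per file.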

The full session is shown below:

    [root@master0 centos7-00]# ls -F Working/
    halo-deployment.yaml  halo-deployment.yaml.original
    halo-namespace.yaml  halo-network-service.yaml
    halo-persistent-volume-claim.yaml  halo-persistent-volume.yaml
    [root@master0 centos7-00]# kubectl create -f Working/halo-namespace.yaml
    namespace/halo created
    [root@master0 centos7-00]# kubectl get namespaces
    NAME              STATUS   AGE
    default           Active   44d
    halo              Active   14s
    kube-flannel      Active   44d
    kube-node-lease   Active   44d
    kube-public       Active   44d
    kube-system       Active   44d
    [root@master0 centos7-00]# kubectl describe namespace halo
    Name:         halo
    Labels:       kubernetes.io/metadata.name=halo
                  name=halo
    Annotations:  <none>
    Status:       Active

    No resource quota.

    No LimitRange resource.

    [root@master0 centos7-00]# kubectl create -f Working/halo-persistent-volume.yaml
    persistentvolume/root-halo created
    [root@master0 centos7-00]# kubectl get pv -n halo
    NAME        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM            STORAGECLASS   REASON   AGE
    root-halo   2Gi        RWO            Recycle          Bound    halo/root-halo                           58s
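Since the dispute was about "dynamic" claiming, it may help to check how a given PV came into existence. The sketch below assumes that dynamically provisioned volumes carry the `pv.kubernetes.io/provisioned-by` annotation (to my knowledge provisioners normally set it), while a PV created by hand from a manifest, like the one in this post, does not. The function name `pv_provisioning` is hypothetical.

```shell
# Report whether a PV looks statically or dynamically provisioned.
# Assumption: dynamic provisioners annotate the PV with
# pv.kubernetes.io/provisioned-by; a hand-written PV has no such annotation.
pv_provisioning() {
    local pv="$1" provisioner
    provisioner="$(kubectl get pv "$pv" \
        -o jsonpath='{.metadata.annotations.pv\.kubernetes\.io/provisioned-by}')" || return 1
    if [ -z "$provisioner" ]; then
        echo "static"
    else
        echo "dynamic: $provisioner"
    fi
}
```

For the PV in this post one would expect `pv_provisioning root-halo` to report `static`, because the volume was created manually before the claim bound to it.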

    [root@master0 centos7-00]# kubectl create -f Working/halo-persistent-volume-claim.yaml
    persistentvolumeclaim/root-halo created
    [root@master0 centos7-00]# kubectl get pvc -n halo
    NAME        STATUS   VOLUME      CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    root-halo   Bound    root-halo   2Gi        RWO                           36s
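The `Bound` status above is the key observation of the experiment. A script can make the same check without reading the table by eye; the following is a minimal sketch in which the function name `pvc_bound_volume` is hypothetical.

```shell
# Print the name of the PV a claim is bound to, or fail if it is not Bound.
# $1: namespace, $2: PVC name.
pvc_bound_volume() {
    local ns="$1" pvc="$2" phase volume
    phase="$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.status.phase}')" || return 1
    [ "$phase" = "Bound" ] || return 1
    volume="$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.spec.volumeName}')" || return 1
    echo "$volume"
}
```

With the objects from this post, `pvc_bound_volume halo root-halo` should print `root-halo`, matching the VOLUME column above.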

    [root@master0 centos7-00]# kubectl create -f Working/halo-deployment.yaml
    deployment.apps/halo-deployment20231028 created
    [root@master0 centos7-00]# kubectl get pods -n halo
    NAME                                       READY   STATUS    RESTARTS   AGE
    halo-deployment20231028-7c46d785c7-72hxf   1/1     Running   0          19s

    [root@master0 centos7-00]# kubectl describe deployment halo-deployment20231028 -n halo
    Name:                   halo-deployment20231028
    Namespace:              halo
    CreationTimestamp:      Sun, 29 Oct 2023 12:24:53 +0800
    Labels:                 app=halo
    Annotations:            deployment.kubernetes.io/revision: 1
    Selector:               app=halo
    Replicas:               1 desired | 1 updated | 1 total | 1 available | 0 unavailable
    StrategyType:           RollingUpdate
    MinReadySeconds:        0
    RollingUpdateStrategy:  25% max unavailable, 25% max surge
    Pod Template:
      Labels:  app=halo
      Containers:
       halo:
        Image:        docker.io/halohub/halo
        Port:         80/TCP
        Host Port:    0/TCP
        Environment:  <none>
        Mounts:
          /root/.halo2 from root-halo (rw)
      Volumes:
       root-halo:
        Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
        ClaimName:  root-halo
        ReadOnly:   false
    Conditions:
      Type           Status  Reason
      Available      True    MinimumReplicasAvailable
      Progressing    True    NewReplicaSetAvailable
    OldReplicaSets:  <none>
    NewReplicaSet:   halo-deployment20231028-7c46d785c7 (1/1 replicas created)
    Events:
      Type    Reason             Age    From                   Message
      ----    ------             ----   ----                   -------
      Normal  ScalingReplicaSet  5m40s  deployment-controller  Scaled up replica set halo-deployment20231028-7c46d785c7 to 1

    [root@master0 centos7-00]# kubectl describe pod halo-deployment20231028-7c46d785c7-72hxf -n halo
    Name:             halo-deployment20231028-7c46d785c7-72hxf
    Namespace:        halo
    Priority:         0
    Service Account:  default
    Node:             slave1/192.168.136.152
    Start Time:       Sun, 29 Oct 2023 12:24:54 +0800
    Labels:           app=halo
                      pod-template-hash=7c46d785c7
    Annotations:      <none>
    Status:           Running
    IP:               10.244.2.3
    IPs:
      IP:           10.244.2.3
    Controlled By:  ReplicaSet/halo-deployment20231028-7c46d785c7
    Containers:
      halo:
        Container ID:   containerd://5b908410f29ec3aa2ba55613842a69c105320d8aa633cd7acc12e3e8afcb5a78
        Image:          docker.io/halohub/halo
        Image ID:       docker.io/halohub/halo@sha256:994537a47aff491b29251665dc86ef2a87bd7000516c330b5e636ca4b029d35c
        Port:           80/TCP
        Host Port:      0/TCP
        State:          Running
          Started:      Sun, 29 Oct 2023 12:25:10 +0800
        Ready:          True
        Restart Count:  0
        Environment:    <none>
        Mounts:
          /root/.halo2 from root-halo (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-m7lqg (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             True
      ContainersReady   True
      PodScheduled      True
    Volumes:
      root-halo:
        Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
        ClaimName:  root-halo
        ReadOnly:   false
      kube-api-access-m7lqg:
        Type:                    Projected (a volume that contains injected data from multiple sources)
        TokenExpirationSeconds:  3607
        ConfigMapName:           kube-root-ca.crt
        ConfigMapOptional:       <nil>
        DownwardAPI:             true
    QoS Class:                   BestEffort
    Node-Selectors:              <none>
    Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type    Reason     Age    From               Message
      Normal  Scheduled  7m23s  default-scheduler  Successfully assigned halo/halo-deployment20231028-7c46d785c7-72hxf to slave1
      Normal  Pulling    7m23s  kubelet            Pulling image "docker.io/halohub/halo"
      Normal  Pulled     7m7s   kubelet            Successfully pulled image "docker.io/halohub/halo" in 15.665s (15.665s including waiting)
      Normal  Created    7m7s   kubelet            Created container halo
      Normal  Started    7m7s   kubelet            Started container halo
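A running pod with the claim mounted shows that the PVC is usable, but not yet that data survives a pod restart. One way to test that is to write a marker file through the mount, delete the pod, and read the marker back from the replacement pod. The sketch below assumes the object names and the `/root/.halo2` mount path from this post; the function name `check_persistence` and the marker file name are hypothetical. Note that hostPath data lives on one node only (slave1 in the output above), so the check is only meaningful if the new pod is scheduled onto the same node.

```shell
# Write a marker via the mounted volume, recreate the pod, read the marker back.
check_persistence() {
    local ns="halo" name="halo-deployment20231028"
    local marker="/root/.halo2/persistence-marker"   # hypothetical file name
    # 1. Write the marker inside the running container.
    kubectl exec -n "$ns" "deploy/$name" -- sh -c "echo ok > $marker" || return 1
    # 2. Delete the pod; the Deployment controller creates a replacement.
    kubectl delete pod -n "$ns" -l app=halo --wait=true || return 1
    kubectl rollout status -n "$ns" "deployment/$name" --timeout=120s || return 1
    # 3. Read the marker from the new pod; prints "ok" if the data survived.
    kubectl exec -n "$ns" "deploy/$name" -- cat "$marker"
}
```

If the last command fails with "No such file or directory", either the replacement pod landed on a different node or the path is not backed by the volume.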

    [root@master0 centos7-00]# kubectl create -f Working/halo-network-service.yaml
    service/halo-deployment20231028 created
    [root@master0 centos7-00]# kubectl get services -n halo
    NAME                      TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
    halo-deployment20231028   NodePort   10.108.173.68   <none>        8090:30009/TCP   27s

    [root@master0 centos7-00]# kubectl describe service halo-deployment20231028 -n halo
    Name:                     halo-deployment20231028
    Namespace:                halo
    Labels:                   app=halo
    Annotations:              <none>
    Selector:                 app=halo
    Type:                     NodePort
    IP Family Policy:         SingleStack
    IP Families:              IPv4
    IP:                       10.108.173.68
    IPs:                      10.108.173.68
    Port:                     halo-network-service80  8090/TCP
    TargetPort:               8090/TCP
    NodePort:                 halo-network-service80  30009/TCP
    Endpoints:                10.244.2.3:8090
    Session Affinity:         None
    External Traffic Policy:  Cluster
    Events:                   <none>
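The Service exposes the application on NodePort 30009, so it should be reachable on any node IP at that port. A quick probe with curl could look like the sketch below; the function name `probe_nodeport` is hypothetical, and the node IP in the usage note is the slave1 address shown in the pod description earlier.

```shell
# Print the HTTP status code returned by a NodePort service.
# $1: node IP, $2: node port (defaults to 30009, as in this post).
probe_nodeport() {
    local node_ip="$1" port="${2:-30009}"
    # -s: silent, -o /dev/null: discard the body, -w: print only the status code.
    curl -s -o /dev/null -w '%{http_code}' --max-time 5 "http://$node_ip:$port/"
}
```

For example, `probe_nodeport 192.168.136.152` prints the status code Halo returns for its front page once the application has finished starting.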

    [root@master0 centos7-00]# kubectl get pod -n halo
    NAME                                       READY   STATUS    RESTARTS   AGE
    halo-deployment20231028-7c46d785c7-72hxf   1/1     Running   0          12m

    [root@master0 centos7-00]# kubectl logs -f -n halo halo-deployment20231028-7c46d785c7-72hxf
    [Halo ASCII-art startup banner]
    Version: 1.4.16

    2023-10-29 12:25:13.363 INFO 7 --- [ main] run.halo.app.Application : Starting Application v1.4.16 using Java 11.0.11 on halo-deployment20231028-7c46d785c7-72hxf with PID 7 (/application/BOOT-INF/classes started by root in /application)
    2023-10-29 12:25:13.365 INFO 7 --- [ main] run.halo.app.Application : No active profile set, falling back to default profiles: default
    2023-10-29 12:25:15.203 INFO 7 --- [ main] .s.d.r.c.RepositoryConfigurationDelegate : Bootstrapping Spring Data JPA repositories in DEFAULT mode.
    2023-10-29 12:25:15.441 INFO 7 --- [ main] .s.d.r.c.RepositoryConfigurationDelegate : Finished Spring Data repository scanning in 225 ms. Found 22 JPA repository interfaces.
    2023-10-29 12:25:17.525 INFO 7 --- [ main] org.eclipse.jetty.util.log : Logging initialized @6453ms to org.eclipse.jetty.util.log.Slf4jLog
    2023-10-29 12:25:17.898 INFO 7 --- [ main] o.s.b.w.e.j.JettyServletWebServerFactory : Server initialized with port: 8090
    2023-10-29 12:25:17.921 INFO 7 --- [ main] org.eclipse.jetty.server.Server : jetty-9.4.42.v20210604; built: 2021-06-04T17:33:38.939Z; git: 5cd5e6d2375eeab146813b0de9f19eda6ab6e6cb; jvm 11.0.11+9
    2023-10-29 12:25:18.045 INFO 7 --- [ main] o.e.j.s.h.ContextHandler.application : Initializing Spring embedded WebApplicationContext
    2023-10-29 12:25:18.046 INFO 7 --- [ main] w.s.c.ServletWebServerApplicationContext : Root WebApplicationContext: initialization completed in 4598 ms
    2023-10-29 12:25:18.524 INFO 7 --- [ main] run.halo.app.config.HaloConfiguration : Halo cache store load impl : [class run.halo.app.cache.InMemoryCacheStore]
    2023-10-29 12:25:18.778 INFO 7 --- [ main] com.zaxxer.hikari.HikariDataSource : HikariPool-1 - Starting...
    2023-10-29 12:25:19.209 INFO 7 --- [ main] com.zaxxer.hikari.HikariDataSource : HikariPool-1 - Start completed.
    2023-10-29 12:25:20.136 INFO 7 --- [ main] o.hibernate.jpa.internal.util.LogHelper : HHH000204: Processing PersistenceUnitInfo [name: default]
    2023-10-29 12:25:20.216 INFO 7 --- [ main] org.hibernate.Version : HHH000412: Hibernate ORM core version 5.4.32.Final
    2023-10-29 12:25:20.299 INFO 7 --- [ main] o.hibernate.annotations.common.Version : HCANN000001: Hibernate Commons Annotations {5.1.2.Final}
    2023-10-29 12:25:20.609 INFO 7 --- [ main] org.hibernate.dialect.Dialect : HHH000400: Using dialect: org.hibernate.dialect.H2Dialect
    2023-10-29 12:25:22.775 INFO 7 --- [ main] o.h.e.t.j.p.i.JtaPlatformInitiator : HHH000490: Using JtaPlatform implementation: [org.hibernate.engine.transaction.jta.platform.internal.NoJtaPlatform]

    2023-10-29 12:25:22.789 INFO 7 --- [ main] j.LocalContainerEntityManagerFactoryBean : Initialized JPA EntityManagerFactory for persistence unit 'default'
    2023-10-29 12:25:24.479 INFO 7 --- [ main] org.eclipse.jetty.server.session : DefaultSessionIdManager workerName=node0
    2023-10-29 12:25:24.480 INFO 7 --- [ main] org.eclipse.jetty.server.session : No SessionScavenger set, using defaults
    2023-10-29 12:25:24.480 INFO 7 --- [ main] org.eclipse.jetty.server.session : node0 Scavenging every 660000ms
    2023-10-29 12:25:24.494 INFO 7 --- [ main] o.e.jetty.server.handler.ContextHandler : Started o.s.b.w.e.j.JettyEmbeddedWebAppContext@2cc3df02{application,/,[file:///tmp/jetty-docbase.8090.3227147541353187501/, jar:file:/application/BOOT-INF/lib/springfox-swagger-ui-3.0.0.jar!/META-INF/resources],AVAILABLE}
    2023-10-29 12:25:24.494 INFO 7 --- [ main] org.eclipse.jetty.server.Server : Started @13424ms
    2023-10-29 12:25:25.565 INFO 7 --- [ main] run.halo.app.handler.file.FileHandlers : Registered 9 file handler(s)
    2023-10-29 12:25:29.824 INFO 7 --- [ main] o.s.b.a.e.web.EndpointLinksResolver : Exposing 4 endpoint(s) beneath base path '/api/admin/actuator'
    2023-10-29 12:25:29.887 INFO 7 --- [ main] o.e.j.s.h.ContextHandler.application : Initializing Spring DispatcherServlet 'dispatcherServlet'
    2023-10-29 12:25:29.887 INFO 7 --- [ main] o.s.web.servlet.DispatcherServlet : Initializing Servlet 'dispatcherServlet'
    2023-10-29 12:25:29.889 INFO 7 --- [ main] o.s.web.servlet.DispatcherServlet : Completed initialization in 1 ms
    2023-10-29 12:25:29.898 INFO 7 --- [ main] o.e.jetty.server.AbstractConnector : Started ServerConnector@2f8c4fae{HTTP/1.1, (http/1.1)}{0.0.0.0:8090}
    2023-10-29 12:25:29.899 INFO 7 --- [ main] o.s.b.web.embedded.jetty.JettyWebServer : Jetty started on port(s) 8090 (http/1.1) with context path '/'
    2023-10-29 12:25:29.948 INFO 7 --- [ main] run.halo.app.Application : Started Application in 18.115 seconds (JVM running for 18.879)

    2023-10-29 12:25:29.953 INFO 7 --- [ main] run.halo.app.listener.StartedListener : Starting migrate database...
    2023-10-29 12:25:30.080 INFO 7 --- [ main] o.f.c.internal.license.VersionPrinter : Flyway Community Edition 7.5.1 by Redgate
    2023-10-29 12:25:30.100 INFO 7 --- [ main] o.f.c.i.database.base.DatabaseType : Database: jdbc:h2:file:/root/.halo//db/halo (H2 1.4)
    2023-10-29 12:25:30.120 INFO 7 --- [ main] o.f.c.i.s.JdbcTableSchemaHistory : Repair of failed migration in Schema History table "PUBLIC"."flyway_schema_history" not necessary as table doesn't exist.
    2023-10-29 12:25:30.154 INFO 7 --- [ main] o.f.core.internal.command.DbRepair : Successfully repaired schema history table "PUBLIC"."flyway_schema_history" (execution time 00:00.036s).
    2023-10-29 12:25:30.169 INFO 7 --- [ main] o.f.c.internal.license.VersionPrinter : Flyway Community Edition 7.5.1 by Redgate
    2023-10-29 12:25:30.196 INFO 7 --- [ main] o.f.core.internal.command.DbValidate : Successfully validated 4 migrations (execution time 00:00.016s)
    2023-10-29 12:25:30.245 INFO 7 --- [ main] o.f.c.i.s.JdbcTableSchemaHistory : Creating Schema History table "PUBLIC"."flyway_schema_history" with baseline ...
    2023-10-29 12:25:30.321 INFO 7 --- [ main] o.f.core.internal.command.DbBaseline : Successfully baselined schema with version: 1
    2023-10-29 12:25:30.359 INFO 7 --- [ main] o.f.core.internal.command.DbMigrate : Current version of schema "PUBLIC": 1
    2023-10-29 12:25:30.363 INFO 7 --- [ main] o.f.core.internal.command.DbMigrate : Migrating schema "PUBLIC" to version "2 - migrate 1.2.0-beta.1 to 1.2.0-beta.2"
    2023-10-29 12:25:30.421 INFO 7 --- [ main] o.f.core.internal.command.DbMigrate : Migrating schema "PUBLIC" to version "3 - migrate 1.3.0-beta.1 to 1.3.0-beta.2"
    2023-10-29 12:25:30.562 INFO 7 --- [ main] o.f.core.internal.command.DbMigrate : Migrating schema "PUBLIC" to version "4 - migrate 1.3.0-beta.2 to 1.3.0-beta.3"
    2023-10-29 12:25:30.609 INFO 7 --- [ main] o.f.core.internal.command.DbMigrate : Successfully applied 3 migrations to schema "PUBLIC" (execution time 00:00.269s)
    2023-10-29 12:25:30.611 INFO 7 --- [ main] run.halo.app.listener.StartedListener : Migrate database succeed.
    2023-10-29 12:25:30.612 INFO 7 --- [ main] run.halo.app.listener.StartedListener : Created backup directory: [/tmp/halo-backup]
    2023-10-29 12:25:30.612 INFO 7 --- [ main] run.halo.app.listener.StartedListener : Created data export directory: [/tmp/halo-data-export]
    2023-10-29 12:25:30.740 INFO 7 --- [ main] run.halo.app.listener.StartedListener : Copied theme folder from [/application/BOOT-INF/classes/templates/themes] to [/root/.halo/templates/themes/caicai_anatole]
    2023-10-29 12:25:30.792 INFO 7 --- [ main] run.halo.app.listener.StartedListener : Halo started at http://127.0.0.1:8090
    2023-10-29 12:25:30.792 INFO 7 --- [ main] run.halo.app.listener.StartedListener : Halo admin started at http://127.0.0.1:8090/admin
    2023-10-29 12:25:30.792 INFO 7 --- [ main] run.halo.app.listener.StartedListener : Halo has started successfully!
    ^C
    [root@master0 centos7-00]#
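The log also reveals where Halo keeps its data: the H2 JDBC URL and the theme path both point at `/root/.halo`, whereas the Deployment mounts the claim at `/root/.halo2`. Whether the application data actually lands on the hostPath volume is therefore worth checking. The sketch below extracts the data directory from the log and tests whether it lies under a given mount path. Both function names (`halo_data_dir`, `path_is_under`) are hypothetical, and the parsing assumes the `jdbc:h2:file:<dir>/db/halo` form seen in this log.

```shell
# Read Halo log lines on stdin and print the data directory taken from the
# first "jdbc:h2:file:<dir>/db/halo" URL, with trailing slashes removed.
halo_data_dir() {
    sed -n 's|.*jdbc:h2:file:\([^ ]*\)/db/halo.*|\1|p' | sed 's|/*$||' | head -n 1
}

# Succeed if path $2 is the directory $1 itself or lies below it.
path_is_under() {
    case "$2/" in
        "$1"/*) return 0 ;;
        *) return 1 ;;
    esac
}
```

A possible use is `dir="$(kubectl logs -n halo deploy/halo-deployment20231028 | halo_data_dir)"` followed by `path_is_under /root/.halo2 "$dir" || echo "data directory $dir is outside the mounted volume"`. With the log shown here the warning would fire, which suggests aligning `mountPath` with the directory the image really uses before relying on the volume for persistence.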