1. Basic environment setup

hostnamectl set-hostname master1 && bash # run on the master (192.168.0.34)

hostnamectl set-hostname node1 && bash # run on node1 (192.168.0.45)

hostnamectl set-hostname node2 && bash # run on node2 (192.168.0.209)

cat >> /etc/hosts << EOF

192.168.0.34 master1

192.168.0.45 node1

192.168.0.209 node2

EOF
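
Running the heredoc above a second time appends the same three entries again. A minimal sketch of a re-runnable variant follows; `add_host_entry` and the `HOSTS_FILE` variable are hypothetical names introduced here, not part of the original steps.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not mapped yet.
# HOSTS_FILE defaults to /etc/hosts; point it at a scratch file to try the function safely.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

add_host_entry() {
  local ip="$1" name="$2"
  # Look for NAME as a whole whitespace-delimited field on a non-comment line.
  if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$HOSTS_FILE"; then
    printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
  fi
}
```

For example, `add_host_entry 192.168.0.34 master1`, followed by the same call for node1 and node2, run as root on each machine.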

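# Run on all machines

ssh-keygen -t rsa # press Enter at every prompt; do not set a passphrase

# Install the local SSH public key into the matching account on each remote host

for i in master1 node1 node2; do ssh-copy-id -i ~/.ssh/id_rsa.pub $i; done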
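
Before moving on, it can help to confirm that key-based login really works for every host. The sketch below is one way to do that; `unreachable_hosts` is a hypothetical helper name, and it relies only on the standard OpenSSH options `BatchMode` and `ConnectTimeout`.

```shell
# Hypothetical helper: print every host that does NOT accept key-based login.
unreachable_hosts() {
  local host
  for host in "$@"; do
    # BatchMode=yes makes ssh fail instead of prompting for a password.
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null >/dev/null 2>&1 \
      || echo "$host"
  done
}
```

Calling `unreachable_hosts master1 node1 node2` should print nothing once the keys have been copied everywhere.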

# Disable the firewall:

systemctl stop firewalld

systemctl disable firewalld

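# Disable SELinux:

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config # permanent (takes effect after reboot)

setenforce 0 # temporary (until reboot)

# Disable swap:

swapoff -a # temporary (until reboot)

sed -i 's/.*swap.*/#&/' /etc/fstab # permanent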
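
The `sed` command above prefixes every line containing "swap" with `#`, so running it twice produces `##` lines. A hedged alternative that skips lines already commented out and keeps a backup is sketched below; `disable_swap_entries` is a hypothetical name, and the pattern assumes the usual fstab layout where `swap` appears as its own whitespace-delimited field.

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Lines that are already comments are left alone, so the function can be re-run safely.
disable_swap_entries() {
  local fstab="${1:-/etc/fstab}"
  # -i.bak edits in place and keeps a backup copy (<file>.bak) next to the file.
  sed -i.bak -E '/^[[:space:]]*#/! s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}
```

Try it on a copy first, for example `cp /etc/fstab /tmp/fstab.test && disable_swap_entries /tmp/fstab.test`.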

# Time synchronization

yum install ntpdate -y

ntpdate time.windows.com

# Configure the yum repositories

yum -y install wget

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

rpm -ivh http://mirrors.aliyun.com/epel/epel-release-latest-7.noarch.rpm

# Install the dependency packages, i.e. everything listed under the Kubernetes dependencies above

yum install -y ebtables socat ipset conntrack wget curl
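
KubeKey checks for these tools again during its pre-check, but a quick local test can catch a missing one earlier. A minimal sketch, with `missing_commands` as a hypothetical helper name:

```shell
# Hypothetical helper: print each command name that cannot be found on PATH.
missing_commands() {
  local cmd
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
  done
}
```

Running `missing_commands ebtables socat ipset conntrack wget curl` on each node should print nothing if the installation above succeeded.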

2. Install KubeSphere (run only on the master)

export KKZONE=cn

curl -sfL https://get-kk.kubesphere.io | VERSION=v2.2.2 sh -

chmod +x kk

# Cluster configuration: create the configuration file config-sample.yaml

./kk create config --with-kubernetes v1.22.10 --with-kubesphere v3.3.0 # create the configuration file

[root@k8s-master ~]# ./kk create config --with-kubernetes v1.22.10 --with-kubesphere v3.3.0

Generate KubeKey config file successfully

[root@k8s-master ~]# ll

total 70340

-rw-r--r-- 1 root root 4773 Oct 22 18:29 config-sample.yaml

-rwxr-xr-x 1 1001 121 54910976 Jul 26 2022 kk

drwxr-xr-x 4 root root 4096 Oct 22 18:26 kubekey

-rw-r--r-- 1 root root 17102249 Oct 22 18:25 kubekey-v2.2.2-linux-amd64.tar.gz

[root@k8s-master ~]#

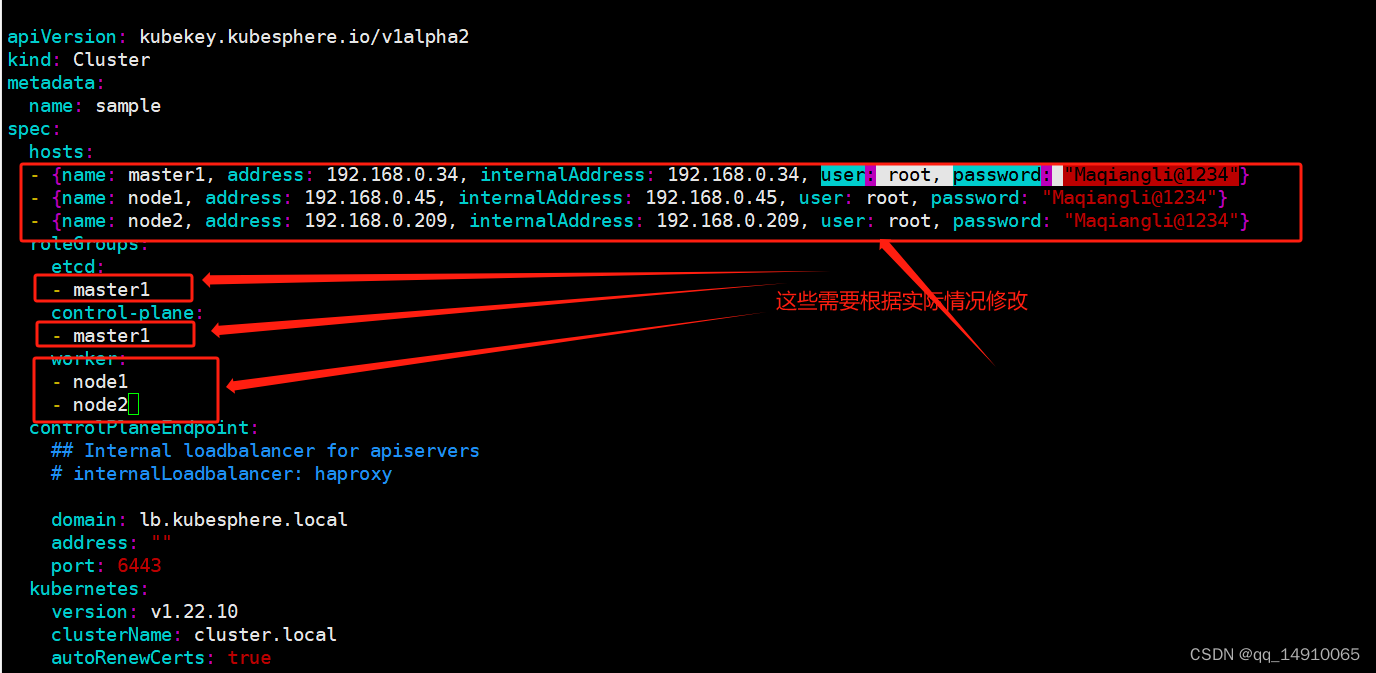

Edit config-sample.yaml
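
The edited file itself is not reproduced in these notes. Judging from the host names and addresses used in step 1, the `hosts` list presumably needs one entry per machine (master1, node1, node2). The sketch below checks for such entries after editing. It assumes the one-line `{name: ..., address: ..., ...}` layout that `kk create config` is expected to generate, and `config_has_host` is a hypothetical helper name; adjust the pattern if your file is laid out differently.

```shell
# Hypothetical helper: succeed only if the config has a hosts entry pairing NAME with IP.
# Assumes entries of the form "- {name: NAME, address: IP, ...}" on a single line.
config_has_host() {
  local config="$1" name="$2" ip="$3" ip_re
  # Escape the dots so they match literally in the regular expression.
  ip_re="$(printf '%s' "$ip" | sed 's/\./\\./g')"
  grep -Eq "name:[[:space:]]*${name},[[:space:]]*address:[[:space:]]*${ip_re}," "$config"
}
```

For example, `config_has_host config-sample.yaml node2 192.168.0.209 || echo "node2 entry missing"`.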

3. Install KubeSphere

# Run kk with the configuration file

./kk create cluster -f config-sample.yaml

This is the complete installation log:

[root@master1 ~]# ./kk create cluster -f config-sample.yaml

 _   __      _          _   __
| | / /     | |        | | / /
| |/ / _   _| |__   ___| |/ /  ___ _   _
|    \| | | | '_ \ / _ \    \ / _ \ | | |
| |\  \ |_| | |_) |  __/ |\  \  __/ |_| |
\_| \_/\__,_|_.__/ \___\_| \_/\___|\__, |
                                    __/ |
                                   |___/

19:05:34 CST [GreetingsModule] Greetings

19:05:34 CST message: [master1]

Greetings, KubeKey!

19:05:34 CST message: [node2]

Greetings, KubeKey!

19:05:34 CST message: [node1]

Greetings, KubeKey!

19:05:34 CST success: [master1]

19:05:34 CST success: [node2]

19:05:34 CST success: [node1]

19:05:34 CST [NodePreCheckModule] A pre-check on nodes

19:05:35 CST success: [node2]

19:05:35 CST success: [master1]

19:05:35 CST success: [node1]

19:05:35 CST [ConfirmModule] Display confirmation form

+---------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

| name | sudo | curl | openssl | ebtables | socat | ipset | ipvsadm | conntrack | chrony | docker | containerd | nfs client | ceph client | glusterfs client | time |

+---------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

| master1 | y | y | y | y | y | y | | y | y | | | | | | CST 19:05:35 |

| node1 | y | y | y | y | y | y | | y | y | | | | | | CST 19:05:35 |

| node2 | y | y | y | y | y | y | | y | y | | | | | | CST 19:05:35 |

+---------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

This is a simple check of your environment.

Before installation, ensure that your machines meet all requirements specified at

https://github.com/kubesphere/kubekey#requirements-and-recommendations

Continue this installation? [yes/no]: yes

19:05:37 CST success: [LocalHost]

19:05:37 CST [NodeBinariesModule] Download installation binaries

19:05:37 CST message: [localhost]

downloading amd64 kubeadm v1.22.10 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 43.7M 100 43.7M 0 0 1026k 0 0:00:43 0:00:43 --:--:-- 1194k

19:06:21 CST message: [localhost]

downloading amd64 kubelet v1.22.10 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 115M 100 115M 0 0 1024k 0 0:01:55 0:01:55 --:--:-- 1162k

19:08:18 CST message: [localhost]

downloading amd64 kubectl v1.22.10 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 44.7M 100 44.7M 0 0 1031k 0 0:00:44 0:00:44 --:--:-- 1197k

19:09:02 CST message: [localhost]

downloading amd64 helm v3.6.3 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 43.0M 100 43.0M 0 0 996k 0 0:00:44 0:00:44 --:--:-- 1024k

19:09:47 CST message: [localhost]

downloading amd64 kubecni v0.9.1 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 37.9M 100 37.9M 0 0 1022k 0 0:00:37 0:00:37 --:--:-- 1173k

19:10:25 CST message: [localhost]

downloading amd64 crictl v1.24.0 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 13.8M 100 13.8M 0 0 1049k 0 0:00:13 0:00:13 --:--:-- 1174k

19:10:38 CST message: [localhost]

downloading amd64 etcd v3.4.13 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 16.5M 100 16.5M 0 0 1040k 0 0:00:16 0:00:16 --:--:-- 1142k

19:10:55 CST message: [localhost]

downloading amd64 docker 20.10.8 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 58.1M 100 58.1M 0 0 24.8M 0 0:00:02 0:00:02 --:--:-- 24.8M

19:10:58 CST success: [LocalHost]

19:10:58 CST [ConfigureOSModule] Prepare to init OS

19:10:58 CST success: [node1]

19:10:58 CST success: [node2]

19:10:58 CST success: [master1]

19:10:58 CST [ConfigureOSModule] Generate init os script

19:10:58 CST success: [node1]

19:10:58 CST success: [node2]

19:10:58 CST success: [master1]

19:10:58 CST [ConfigureOSModule] Exec init os script

19:10:59 CST stdout: [node1]

setenforce: SELinux is disabled

Disabled

vm.swappiness = 1

net.core.somaxconn = 1024

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

fs.inotify.max_user_instances = 524288

kernel.pid_max = 65535

19:10:59 CST stdout: [node2]

setenforce: SELinux is disabled

Disabled

vm.swappiness = 1

net.core.somaxconn = 1024

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

fs.inotify.max_user_instances = 524288

kernel.pid_max = 65535

19:10:59 CST stdout: [master1]

setenforce: SELinux is disabled

Disabled

vm.swappiness = 1

net.core.somaxconn = 1024

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

fs.inotify.max_user_instances = 524288

kernel.pid_max = 65535

19:10:59 CST success: [node1]

19:10:59 CST success: [node2]

19:10:59 CST success: [master1]

19:10:59 CST [ConfigureOSModule] configure the ntp server for each node

19:10:59 CST skipped: [node2]

19:10:59 CST skipped: [master1]

19:10:59 CST skipped: [node1]

19:10:59 CST [KubernetesStatusModule] Get kubernetes cluster status

19:10:59 CST success: [master1]

19:10:59 CST [InstallContainerModule] Sync docker binaries

19:11:03 CST success: [master1]

19:11:03 CST success: [node2]

19:11:03 CST success: [node1]

19:11:03 CST [InstallContainerModule] Generate docker service

19:11:04 CST success: [node1]

19:11:04 CST success: [node2]

19:11:04 CST success: [master1]

19:11:04 CST [InstallContainerModule] Generate docker config

19:11:04 CST success: [node1]

19:11:04 CST success: [master1]

19:11:04 CST success: [node2]

19:11:04 CST [InstallContainerModule] Enable docker

19:11:05 CST success: [node1]

19:11:05 CST success: [master1]

19:11:05 CST success: [node2]

19:11:05 CST [InstallContainerModule] Add auths to container runtime

19:11:05 CST skipped: [node2]

19:11:05 CST skipped: [node1]

19:11:05 CST skipped: [master1]

19:11:05 CST [PullModule] Start to pull images on all nodes

19:11:05 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.5

19:11:05 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.5

19:11:05 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.5

19:11:07 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.22.10

19:11:07 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.22.10

19:11:07 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.22.10

19:11:21 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.0

19:11:21 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.0

19:11:24 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.22.10

19:11:29 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12

19:11:29 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12

19:11:41 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.22.10

19:11:42 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.23.2

19:11:49 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.22.10

19:11:58 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.23.2

19:12:04 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.0

19:12:12 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12

19:12:26 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.23.2

19:12:42 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.23.2

19:12:42 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.23.2

19:13:12 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.23.2

19:13:24 CST message: [node1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.23.2

19:13:25 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.23.2

19:13:28 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.23.2

19:14:07 CST message: [master1]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.23.2

19:14:13 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.23.2

19:14:55 CST message: [node2]

downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.23.2

19:14:59 CST success: [node1]

19:14:59 CST success: [master1]

19:14:59 CST success: [node2]

19:14:59 CST [ETCDPreCheckModule] Get etcd status

19:14:59 CST success: [master1]

19:14:59 CST [CertsModule] Fetch etcd certs

19:14:59 CST success: [master1]

19:14:59 CST [CertsModule] Generate etcd Certs

[certs] Generating "ca" certificate and key

[certs] admin-master1 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost master1 node1 node2] and IPs [127.0.0.1 ::1 192.168.0.34 192.168.0.45 192.168.0.209]

[certs] member-master1 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost master1 node1 node2] and IPs [127.0.0.1 ::1 192.168.0.34 192.168.0.45 192.168.0.209]

[certs] node-master1 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost master1 node1 node2] and IPs [127.0.0.1 ::1 192.168.0.34 192.168.0.45 192.168.0.209]

19:15:00 CST success: [LocalHost]

19:15:00 CST [CertsModule] Synchronize certs file

19:15:01 CST success: [master1]

19:15:01 CST [CertsModule] Synchronize certs file to master

19:15:01 CST skipped: [master1]

19:15:01 CST [InstallETCDBinaryModule] Install etcd using binary

19:15:02 CST success: [master1]

19:15:02 CST [InstallETCDBinaryModule] Generate etcd service

19:15:02 CST success: [master1]

19:15:02 CST [InstallETCDBinaryModule] Generate access address

19:15:02 CST success: [master1]

19:15:02 CST [ETCDConfigureModule] Health check on exist etcd

19:15:02 CST skipped: [master1]

19:15:02 CST [ETCDConfigureModule] Generate etcd.env config on new etcd

19:15:02 CST success: [master1]

19:15:02 CST [ETCDConfigureModule] Refresh etcd.env config on all etcd

19:15:02 CST success: [master1]

19:15:02 CST [ETCDConfigureModule] Restart etcd

19:15:06 CST stdout: [master1]

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /etc/systemd/system/etcd.service.

19:15:06 CST success: [master1]

19:15:06 CST [ETCDConfigureModule] Health check on all etcd

19:15:06 CST success: [master1]

19:15:06 CST [ETCDConfigureModule] Refresh etcd.env config to exist mode on all etcd

19:15:06 CST success: [master1]

19:15:06 CST [ETCDConfigureModule] Health check on all etcd

19:15:06 CST success: [master1]

19:15:06 CST [ETCDBackupModule] Backup etcd data regularly

19:15:06 CST success: [master1]

19:15:06 CST [ETCDBackupModule] Generate backup ETCD service

19:15:06 CST success: [master1]

19:15:06 CST [ETCDBackupModule] Generate backup ETCD timer

19:15:07 CST success: [master1]

19:15:07 CST [ETCDBackupModule] Enable backup etcd service

19:15:07 CST success: [master1]

19:15:07 CST [InstallKubeBinariesModule] Synchronize kubernetes binaries

19:15:15 CST success: [master1]

19:15:15 CST success: [node2]

19:15:15 CST success: [node1]

19:15:15 CST [InstallKubeBinariesModule] Synchronize kubelet

19:15:15 CST success: [node2]

19:15:15 CST success: [master1]

19:15:15 CST success: [node1]

19:15:15 CST [InstallKubeBinariesModule] Generate kubelet service

19:15:15 CST success: [node2]

19:15:15 CST success: [node1]

19:15:15 CST success: [master1]

19:15:15 CST [InstallKubeBinariesModule] Enable kubelet service

19:15:15 CST success: [node1]

19:15:15 CST success: [node2]

19:15:15 CST success: [master1]

19:15:15 CST [InstallKubeBinariesModule] Generate kubelet env

19:15:16 CST success: [master1]

19:15:16 CST success: [node2]

19:15:16 CST success: [node1]

19:15:16 CST [InitKubernetesModule] Generate kubeadm config

19:15:16 CST success: [master1]

19:15:16 CST [InitKubernetesModule] Init cluster using kubeadm

19:15:27 CST stdout: [master1]

W1022 19:15:16.479674 23830 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

[init] Using Kubernetes version: v1.22.10

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local lb.kubesphere.local localhost master1 master1.cluster.local node1 node1.cluster.local node2 node2.cluster.local] and IPs [10.233.0.1 192.168.0.34 127.0.0.1 192.168.0.45 192.168.0.209]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] External etcd mode: Skipping etcd/ca certificate authority generation

[certs] External etcd mode: Skipping etcd/server certificate generation

[certs] External etcd mode: Skipping etcd/peer certificate generation

[certs] External etcd mode: Skipping etcd/healthcheck-client certificate generation

[certs] External etcd mode: Skipping apiserver-etcd-client certificate generation

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 7.002336 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.22" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master1 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 1zr4e1.dj4l7h0k6rblrzb7

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join lb.kubesphere.local:6443 --token 1zr4e1.dj4l7h0k6rblrzb7 \

--discovery-token-ca-cert-hash sha256:34a0b4f8551f17b5d032bd9d9d3dd1d553fbabc7f0ee09734085e5d531bd6418 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join lb.kubesphere.local:6443 --token 1zr4e1.dj4l7h0k6rblrzb7 \

--discovery-token-ca-cert-hash sha256:34a0b4f8551f17b5d032bd9d9d3dd1d553fbabc7f0ee09734085e5d531bd6418

19:15:27 CST success: [master1]

19:15:27 CST [InitKubernetesModule] Copy admin.conf to ~/.kube/config

19:15:27 CST success: [master1]

19:15:27 CST [InitKubernetesModule] Remove master taint

19:15:27 CST skipped: [master1]

19:15:27 CST [InitKubernetesModule] Add worker label

19:15:27 CST skipped: [master1]

19:15:27 CST [ClusterDNSModule] Generate coredns service

19:15:28 CST success: [master1]

19:15:28 CST [ClusterDNSModule] Override coredns service

19:15:28 CST stdout: [master1]

service "kube-dns" deleted

19:15:29 CST stdout: [master1]

service/coredns created

Warning: resource clusterroles/system:coredns is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

clusterrole.rbac.authorization.k8s.io/system:coredns configured

19:15:29 CST success: [master1]

19:15:29 CST [ClusterDNSModule] Generate nodelocaldns

19:15:29 CST success: [master1]

19:15:29 CST [ClusterDNSModule] Deploy nodelocaldns

19:15:29 CST stdout: [master1]

serviceaccount/nodelocaldns created

daemonset.apps/nodelocaldns created

19:15:29 CST success: [master1]

19:15:29 CST [ClusterDNSModule] Generate nodelocaldns configmap

19:15:29 CST success: [master1]

19:15:29 CST [ClusterDNSModule] Apply nodelocaldns configmap

19:15:30 CST stdout: [master1]

configmap/nodelocaldns created

19:15:30 CST success: [master1]

19:15:30 CST [KubernetesStatusModule] Get kubernetes cluster status

19:15:30 CST stdout: [master1]

v1.22.10

19:15:30 CST stdout: [master1]

master1 v1.22.10 [map[address:192.168.0.34 type:InternalIP] map[address:master1 type:Hostname]]

19:15:30 CST stdout: [master1]

I1022 19:15:30.575041 25671 version.go:255] remote version is much newer: v1.28.2; falling back to: stable-1.22

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

63ee559515c0d77547d1fcd24a58242a3199e88ed8a320321b05d81eb250274a

19:15:30 CST stdout: [master1]

secret/kubeadm-certs patched

19:15:31 CST stdout: [master1]

secret/kubeadm-certs patched

19:15:31 CST stdout: [master1]

secret/kubeadm-certs patched

19:15:31 CST stdout: [master1]

9h3nip.2552zirb9kdsggxw

19:15:31 CST success: [master1]

19:15:31 CST [JoinNodesModule] Generate kubeadm config

19:15:31 CST skipped: [master1]

19:15:31 CST success: [node1]

19:15:31 CST success: [node2]

19:15:31 CST [JoinNodesModule] Join control-plane node

19:15:31 CST skipped: [master1]

19:15:31 CST [JoinNodesModule] Join worker node

19:15:49 CST stdout: [node2]

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

W1022 19:15:44.065251 11754 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

19:15:49 CST stdout: [node1]

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

W1022 19:15:44.065086 11775 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

19:15:49 CST success: [node2]

19:15:49 CST success: [node1]

19:15:49 CST [JoinNodesModule] Copy admin.conf to ~/.kube/config

19:15:49 CST skipped: [master1]

19:15:49 CST [JoinNodesModule] Remove master taint

19:15:49 CST skipped: [master1]

19:15:49 CST [JoinNodesModule] Add worker label to master

19:15:49 CST skipped: [master1]

19:15:49 CST [JoinNodesModule] Synchronize kube config to worker

19:15:49 CST success: [node1]

19:15:49 CST success: [node2]

19:15:49 CST [JoinNodesModule] Add worker label to worker

19:15:50 CST stdout: [node1]

node/node1 labeled

19:15:50 CST stdout: [node2]

node/node2 labeled

19:15:50 CST success: [node1]

19:15:50 CST success: [node2]

19:15:50 CST [DeployNetworkPluginModule] Generate calico

19:15:50 CST success: [master1]

19:15:50 CST [DeployNetworkPluginModule] Deploy calico

19:15:51 CST stdout: [master1]

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

poddisruptionbudget.policy/calico-kube-controllers created

19:15:51 CST success: [master1]

19:15:51 CST [ConfigureKubernetesModule] Configure kubernetes

19:15:51 CST success: [node2]

19:15:51 CST success: [master1]

19:15:51 CST success: [node1]

19:15:51 CST [ChownModule] Chown user $HOME/.kube dir

19:15:51 CST success: [node2]

19:15:51 CST success: [node1]

19:15:51 CST success: [master1]

19:15:51 CST [AutoRenewCertsModule] Generate k8s certs renew script

19:15:51 CST success: [master1]

19:15:51 CST [AutoRenewCertsModule] Generate k8s certs renew service

19:15:51 CST success: [master1]

19:15:51 CST [AutoRenewCertsModule] Generate k8s certs renew timer

19:15:52 CST success: [master1]

19:15:52 CST [AutoRenewCertsModule] Enable k8s certs renew service

19:15:52 CST success: [master1]

19:15:52 CST [SaveKubeConfigModule] Save kube config as a configmap

19:15:52 CST success: [LocalHost]

19:15:52 CST [AddonsModule] Install addons

19:15:52 CST success: [LocalHost]

19:15:52 CST [DeployStorageClassModule] Generate OpenEBS manifest

19:15:52 CST success: [master1]

19:15:52 CST [DeployStorageClassModule] Deploy OpenEBS as cluster default StorageClass

19:15:54 CST success: [master1]

19:15:54 CST [DeployKubeSphereModule] Generate KubeSphere ks-installer crd manifests

19:15:54 CST success: [master1]

19:15:54 CST [DeployKubeSphereModule] Apply ks-installer

19:15:56 CST stdout: [master1]

namespace/kubesphere-system created

serviceaccount/ks-installer created

customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io created

clusterrole.rbac.authorization.k8s.io/ks-installer created

clusterrolebinding.rbac.authorization.k8s.io/ks-installer created

deployment.apps/ks-installer created

19:15:56 CST success: [master1]

19:15:56 CST [DeployKubeSphereModule] Add config to ks-installer manifests

19:15:56 CST success: [master1]

19:15:56 CST [DeployKubeSphereModule] Create the kubesphere namespace

19:15:57 CST success: [master1]

19:15:57 CST [DeployKubeSphereModule] Setup ks-installer config

19:15:58 CST stdout: [master1]

secret/kube-etcd-client-certs created

19:15:58 CST success: [master1]

19:15:58 CST [DeployKubeSphereModule] Apply ks-installer

19:15:58 CST stdout: [master1]

namespace/kubesphere-system unchanged

serviceaccount/ks-installer unchanged

customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io unchanged

clusterrole.rbac.authorization.k8s.io/ks-installer unchanged

clusterrolebinding.rbac.authorization.k8s.io/ks-installer unchanged

deployment.apps/ks-installer unchanged

clusterconfiguration.installer.kubesphere.io/ks-installer created

19:15:58 CST success: [master1]

#####################################################

### Welcome to KubeSphere! ###

#####################################################

Console: http://192.168.0.34:30880

Account: admin

Password: P@88w0rd

NOTES:

1. After you log into the console, please check the

monitoring status of service components in

"Cluster Management". If any service is not

ready, please wait patiently until all components

are up and running.

2. Please change the default password after login.

#####################################################

https://kubesphere.io 2023-10-22 19:23:58

#####################################################

19:24:01 CST success: [master1]

19:24:01 CST Pipeline[CreateClusterPipeline] execute successfully

Installation is complete.

Please check the result using the command:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

[root@master1 ~]#

[root@master1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1 Ready control-plane,master 12m v1.22.10

node1 Ready worker 12m v1.22.10

node2 Ready worker 12m v1.22.10

[root@master1 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-69cfcfdf6c-98sh6 1/1 Running 1 (12m ago) 12m

calico-node-tk86b 1/1 Running 0 12m

calico-node-tsdll 1/1 Running 0 12m

calico-node-xj82j 1/1 Running 0 12m

coredns-5495dd7c88-j7tj2 1/1 Running 0 12m

coredns-5495dd7c88-l4lt9 1/1 Running 0 12m

kube-apiserver-master1 1/1 Running 0 13m

kube-controller-manager-master1 1/1 Running 0 13m

kube-proxy-n6sxv 1/1 Running 0 12m

kube-proxy-nzhx8 1/1 Running 0 12m

kube-proxy-wlb9d 1/1 Running 0 12m

kube-scheduler-master1 1/1 Running 0 13m

nodelocaldns-5jxcv 1/1 Running 0 12m

nodelocaldns-lrhg2 1/1 Running 0 12m

nodelocaldns-mcsdt 1/1 Running 0 12m

openebs-localpv-provisioner-6f8b56f75-q27h4 1/1 Running 0 12m

snapshot-controller-0 1/1 Running 0 11m

[root@master1 ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-69cfcfdf6c-98sh6 1/1 Running 1 (12m ago) 12m

kube-system calico-node-tk86b 1/1 Running 0 12m

kube-system calico-node-tsdll 1/1 Running 0 12m

kube-system calico-node-xj82j 1/1 Running 0 12m

kube-system coredns-5495dd7c88-j7tj2 1/1 Running 0 13m

kube-system coredns-5495dd7c88-l4lt9 1/1 Running 0 13m

kube-system kube-apiserver-master1 1/1 Running 0 13m

kube-system kube-controller-manager-master1 1/1 Running 0 13m

kube-system kube-proxy-n6sxv 1/1 Running 0 13m

kube-system kube-proxy-nzhx8 1/1 Running 0 13m

kube-system kube-proxy-wlb9d 1/1 Running 0 13m

kube-system kube-scheduler-master1 1/1 Running 0 13m

kube-system nodelocaldns-5jxcv 1/1 Running 0 13m

kube-system nodelocaldns-lrhg2 1/1 Running 0 13m

kube-system nodelocaldns-mcsdt 1/1 Running 0 13m

kube-system openebs-localpv-provisioner-6f8b56f75-q27h4 1/1 Running 0 12m

kube-system snapshot-controller-0 1/1 Running 0 11m

kubesphere-controls-system default-http-backend-56d9d4fdf7-g4942 1/1 Running 0 10m

kubesphere-controls-system kubectl-admin-7685cdd85b-w9hzh 1/1 Running 0 5m30s

kubesphere-monitoring-system alertmanager-main-0 2/2 Running 0 8m2s

kubesphere-monitoring-system alertmanager-main-1 2/2 Running 0 8m1s

kubesphere-monitoring-system alertmanager-main-2 2/2 Running 0 8m

kubesphere-monitoring-system kube-state-metrics-89f49579b-xpwj7 3/3 Running 0 8m13s

kubesphere-monitoring-system node-exporter-45rb9 2/2 Running 0 8m16s

kubesphere-monitoring-system node-exporter-qkdxg 2/2 Running 0 8m15s

kubesphere-monitoring-system node-exporter-xq8zl 2/2 Running 0 8m15s

kubesphere-monitoring-system notification-manager-deployment-6ff7974fbd-5zmdh 2/2 Running 0 6m53s

kubesphere-monitoring-system notification-manager-deployment-6ff7974fbd-wq6bz 2/2 Running 0 6m52s

kubesphere-monitoring-system notification-manager-operator-58bc989b46-29jzz 2/2 Running 0 7m50s

kubesphere-monitoring-system prometheus-k8s-0 2/2 Running 0 8m1s

kubesphere-monitoring-system prometheus-k8s-1 2/2 Running 0 7m59s

kubesphere-monitoring-system prometheus-operator-fc9b55959-lzfph 2/2 Running 0 8m29s

kubesphere-system ks-apiserver-5db774f4f-v5bwh 1/1 Running 0 10m

kubesphere-system ks-console-64b56f967-bdlrx 1/1 Running 0 10m

kubesphere-system ks-controller-manager-7b5f77b47f-zgbgw 1/1 Running 0 10m

kubesphere-system ks-installer-9b4c69688-jl7xf 1/1 Running 0 12m

[root@master1 ~]#
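
The pod listing above can also be checked by script instead of by eye. This is a minimal sketch and not part of the original installation steps: the helper name `count_not_ready` is hypothetical, and it assumes the default column layout of `kubectl get pods -A --no-headers` (NAMESPACE, NAME, READY, STATUS, ...).

```shell
# count_not_ready (hypothetical helper): reads `kubectl get pods -A --no-headers`
# output on stdin and prints how many pods are not fully ready.
# Assumed columns: NAMESPACE NAME READY STATUS RESTARTS AGE.
# Note: pods of finished Jobs (STATUS "Completed") are counted as not ready too.
count_not_ready() {
  awk '
    {
      split($3, r, "/")                       # READY column, e.g. "2/2"
      if ($4 != "Running" || r[1] != r[2]) {  # wrong phase, or some containers not ready
        bad++
      }
    }
    END { print bad + 0 }                     # prints 0 when nothing matched
  '
}

# Usage on master1 (needs a working kubectl):
#   kubectl get pods -A --no-headers | count_not_ready
```

A result of 0 corresponds to the state shown in the listing, where every pod is Running with all containers ready.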

4. View the KubeSphere login account and password

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f # on success, the output shows the console IP, login account, and password

**************************************************

Collecting installation results ...

#####################################################

### Welcome to KubeSphere! ###

#####################################################

Console: http://192.168.0.34:30880

Account: admin

Password: P@88w0rd

For details on logging in and using the console, see the blog post (starting from step 4).
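
The console address printed in the installer log can also be extracted and probed from the command line. This is only a sketch under stated assumptions: the helper name `console_url` is hypothetical, and it assumes the log contains a line of the form `Console: <url>` as in the output above.

```shell
# console_url (hypothetical helper): reads ks-installer log text on stdin and
# prints the URL from the last "Console:" line, or nothing if there is none.
console_url() {
  awk '$1 == "Console:" { url = $2 } END { if (url != "") print url }'
}

# Usage on master1 (needs a working kubectl and curl):
#   url=$(kubectl logs -n kubesphere-system deployment/ks-installer | console_url)
#   curl -s -o /dev/null -w '%{http_code}\n' "$url"   # prints the HTTP status code
```

The usage lines call `kubectl logs` without `-f` so that the command returns instead of following the log.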

# Create an Nginx deployment in the Kubernetes cluster:

kubectl create deployment nginx --image=nginx

kubectl expose deployment nginx --port=80 --type=NodePort

kubectl scale deployment nginx --replicas=2 # scale the deployment to 2 pod replicas

kubectl get pod,svc -o wide

# Verified successfully in a browser
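
The browser check can be reproduced from the shell as well. The following is a hedged sketch rather than part of the original procedure: the helper name `nginx_url` is hypothetical, and it assumes the service is named `nginx` in the current namespace, as created by the `kubectl expose` command above.

```shell
# nginx_url (hypothetical helper): prints the URL at which the nginx NodePort
# service should answer on the given node IP.
nginx_url() {
  local node_ip=$1 port
  # Ask the API server which NodePort was allocated to the service.
  port=$(kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  [ -n "$port" ] || return 1
  printf 'http://%s:%s\n' "$node_ip" "$port"
}

# Usage on master1, with any node IP from /etc/hosts:
#   curl -sI "$(nginx_url 192.168.0.34)" | head -n 1
```

Because a NodePort is opened on every node, the same port should work with the IP of master1, node1, or node2.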

5. Delete the cluster and reinstall

./kk delete cluster

./kk delete cluster -f config-sample.yaml # advanced mode: delete the cluster defined in the configuration file