Contents

💥1 Overview

📚2 Results

2.1 Python results

2.2 MATLAB implementation

🎉3 References

🌈4 MATLAB and Python code

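💥1 Overview

This article presents a new optimization algorithm called Hybrid Leader-Based Optimization (HLBO), intended for solving optimization problems. The main idea of HLBO is to steer the algorithm's population under the guidance of a hybrid leader. HLBO's update process is modeled mathematically in two phases, exploration and exploitation. Its effectiveness is tested on 23 standard benchmark functions covering both unimodal and multimodal types. The results on the unimodal functions show that HLBO has strong exploitation ability in local search and converges well toward the global optimum, while the results on the multimodal functions show that it has strong exploration ability in global search.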
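The two-phase, leader-guided update described above can be illustrated with a minimal Python sketch. It assumes details this post does not state: the hybrid leader of member i is taken as a fitness-weighted average of member i, the current best member and a randomly chosen member; the exploration step moves toward the leader when the leader is better and away from it otherwise (with a random intensity of 1 or 2); and the exploitation step applies a perturbation that shrinks linearly over the iterations, controlled by an assumed `radius=0.2`. The function name `hlbo_sketch` and its parameters are hypothetical, and this is not the authors' reference implementation.

```python
import random


def hlbo_sketch(fitness, lb, ub, dim, n_agents=10, max_iter=100,
                radius=0.2, seed=0):
    """Minimal HLBO-style optimizer (assumed details, not the reference code).

    Returns (best_score, best_position, curve), where curve[t] is the best
    objective value found after iteration t + 1.
    """
    rng = random.Random(seed)

    def clip(v):
        # Keep every coordinate inside the box [lb, ub].
        return [min(max(c, lb), ub) for c in v]

    # Random initial population and its objective values.
    X = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_agents)]
    F = [fitness(x) for x in X]
    curve = []

    for t in range(1, max_iter + 1):
        best = min(range(n_agents), key=F.__getitem__)
        f_min, f_max = min(F), max(F)
        span = f_max - f_min
        # Assumed quality score in [0, 1]: 1 for the best member, 0 for the worst.
        quality = [(f_max - f) / span if span > 0 else 1.0 for f in F]

        for i in range(n_agents):
            # Hypothetical hybrid leader: quality-weighted mix of member i,
            # the best member and a random member k.
            k = rng.randrange(n_agents)
            w = [quality[i] + 1e-12, quality[best] + 1e-12, quality[k] + 1e-12]
            s = sum(w)
            leader = [(w[0] * a + w[1] * b + w[2] * c) / s
                      for a, b, c in zip(X[i], X[best], X[k])]

            # Phase 1 (exploration): approach a better leader, move away from a worse one.
            if fitness(leader) < F[i]:
                intensity = rng.choice((1, 2))
                cand = [x + rng.random() * (h - intensity * x)
                        for x, h in zip(X[i], leader)]
            else:
                cand = [x + rng.random() * (x - h) for x, h in zip(X[i], leader)]
            cand = clip(cand)
            f_cand = fitness(cand)
            if f_cand < F[i]:  # greedy acceptance
                X[i], F[i] = cand, f_cand

            # Phase 2 (exploitation): local perturbation that shrinks as t grows.
            step = radius * (1 - t / max_iter)
            cand = clip([x + (1 - 2 * rng.random()) * step * x for x in X[i]])
            f_cand = fitness(cand)
            if f_cand < F[i]:  # greedy acceptance
                X[i], F[i] = cand, f_cand

        curve.append(min(F))  # best-so-far value, never increases

    best = min(range(n_agents), key=F.__getitem__)
    return F[best], X[best], curve


if __name__ == "__main__":
    def sphere(x):
        return sum(c * c for c in x)

    score, pos, curve = hlbo_sketch(sphere, -100.0, 100.0, dim=5,
                                    n_agents=10, max_iter=200)
    print(f"best score after {len(curve)} iterations: {score:.3e}")
```

Because both phases only accept a candidate that improves the member's objective value, the returned curve (the best-so-far value per iteration, analogous to `HLBO_curve` in the MATLAB script) never increases.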

📚2 Results

2.1 Python results

2.2 MATLAB implementation

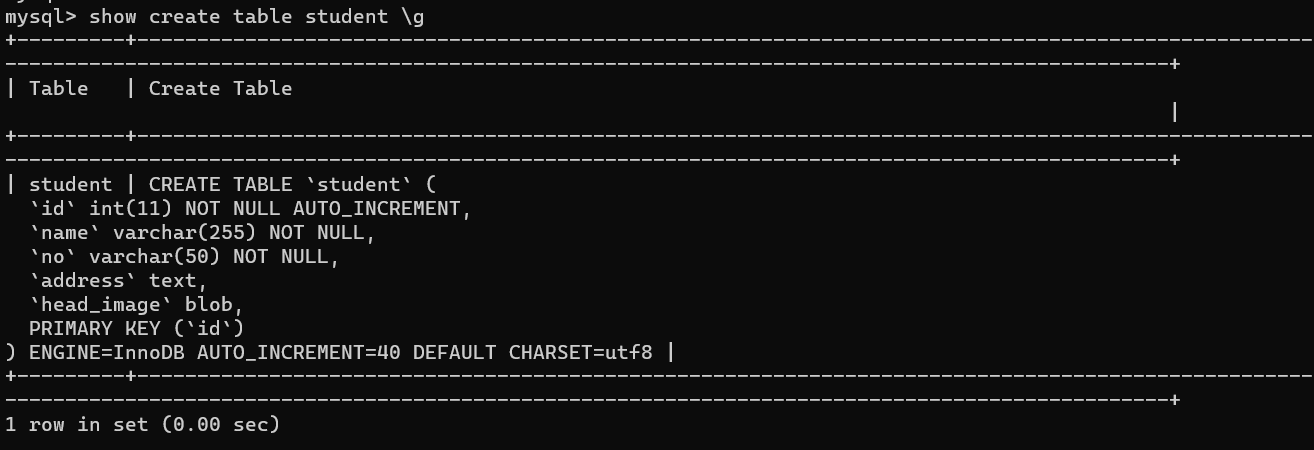

Code excerpt (main script):

Fun_name='F2'; % Name of the test function, from F1 to F23

SearchAgents=10; % Number of search agents (population size)

Max_iterations=1000; % Maximum number of iterations

% Load details of the selected benchmark function

[lowerbound,upperbound,dimension,fitness]=fun_info(Fun_name);

% Run HLBO and record the best-so-far value at each iteration

[Best_score,Best_pos,HLBO_curve]=HLBO(SearchAgents,Max_iterations,lowerbound,upperbound,dimension,fitness);

disp(['The best solution obtained by HLBO is: ', num2str(Best_pos)]);

disp(['The best optimal value of the objective function found by HLBO is: ', num2str(Best_score)]);

%% Draw objective space (convergence curve, log-scaled iteration axis)

plots=semilogx(HLBO_curve,'Color','g');

set(plots,'LineWidth',2)

hold on

title('Objective space')

xlabel('Iterations');

ylabel('Best score');

axis tight

grid on

box on

legend('HLBO')
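The script picks a benchmark by name and loads its bounds, dimension and objective through `fun_info`, whose body is not shown here. In the classic 23-function suite widely used in this literature, F1 is the sphere function and F2 is Schwefel's problem 2.22, f(x) = sum(|x_i|) + prod(|x_i|), usually searched on [-10, 10] in 30 dimensions. The Python sketch below assumes that convention; `f1_sphere`, `f2_schwefel_2_22`, `BENCHMARKS` and `fun_info_sketch` are hypothetical names, only F1 and F2 are filled in, and the actual `fun_info` shipped with HLBO may use different settings.

```python
import math


def f1_sphere(x):
    """F1 in the classic 23-function suite: sum of x_i^2."""
    return sum(v * v for v in x)


def f2_schwefel_2_22(x):
    """F2 in the classic 23-function suite: sum(|x_i|) + prod(|x_i|)."""
    abs_x = [abs(v) for v in x]
    return sum(abs_x) + math.prod(abs_x)


# Hypothetical Python counterpart of fun_info: name -> (lower, upper, dim, fitness).
# Bounds and dimension follow the common convention for this suite (assumed).
BENCHMARKS = {
    "F1": (-100.0, 100.0, 30, f1_sphere),
    "F2": (-10.0, 10.0, 30, f2_schwefel_2_22),
}


def fun_info_sketch(name):
    """Return (lowerbound, upperbound, dimension, fitness) for a benchmark name."""
    return BENCHMARKS[name]


if __name__ == "__main__":
    lb, ub, dim, fitness = fun_info_sketch("F2")
    # |1| + |-2| = 3.0 plus |1| * |-2| = 2.0
    print(lb, ub, dim, fitness([1.0, -2.0]))  # prints: -10.0 10.0 30 5.0
```

The returned objective can be handed to an optimizer as its `fitness` argument, in the same way the MATLAB script passes the handle from `fun_info` to `HLBO`.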

🎉3 References
