1. Introduction

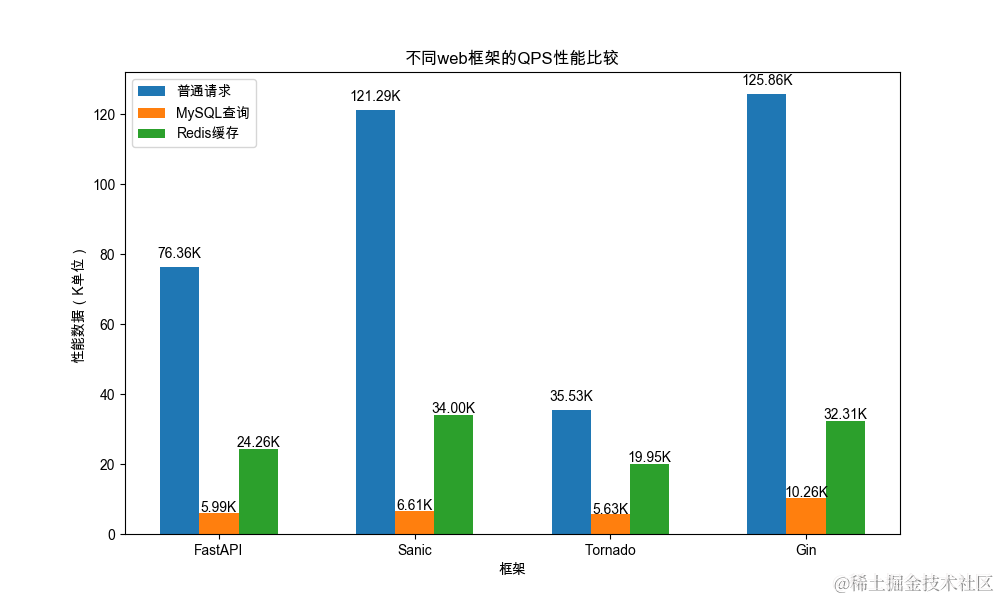

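Asynchronous programming plays a key role in building high-performance web applications, and FastAPI, Sanic, and Tornado all claim excellent performance. This article load-tests these frameworks alongside Go's Gin framework to show how they differ.

Original article: Python Async Framework Showdown: FastAPI, Sanic, Tornado vs. Go's Gin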

2. Environment Setup

System configuration

Programming languages

| Language | Version | Website/GitHub |
|---|---|---|
| Python | 3.10.12 | https://www.python.org/ |
| Go | 1.20.5 | https://go.dev/ |

Load-testing tools

| Tool | Description | Website/GitHub |
|---|---|---|
| ab | Apache's load-testing tool; simple to use | https://httpd.apache.org/docs/2.4/programs/ab.html |
| wrk | High-performance multithreaded load-testing tool | https://github.com/wg/wrk |
| JMeter | Full-featured stress/load-testing tool | https://github.com/apache/jmeter |

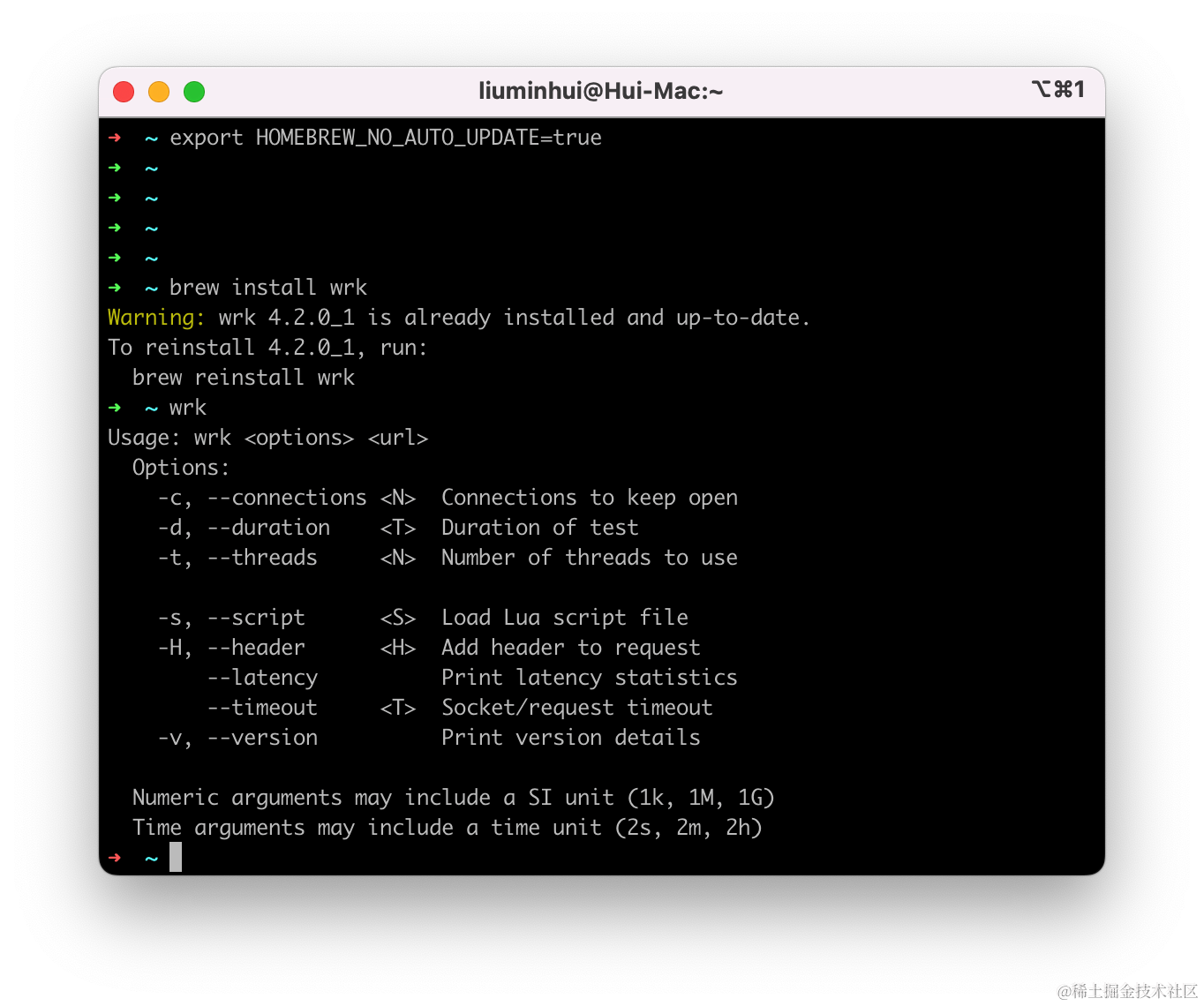

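wrk is used for the tests here. On macOS it can be installed directly with Homebrew:

```bash
brew install wrk
```

On Windows, installing wrk most likely requires the Linux subsystem (WSL); alternatively, use another load-testing tool such as JMeter.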

Web frameworks

| Framework | Description | Tested version | Website/GitHub |
|---|---|---|---|
| FastAPI | High-performance Python web framework | 0.103.1 | https://fastapi.tiangolo.com/ |
| Sanic | Asynchronous Python web server and framework | 23.6.0 | https://sanic.dev/zh/ |
| Tornado | Non-blocking Python web framework | 6.3.3 | https://www.tornadoweb.org/en/stable/ |
| Gin | Web framework for Go | 1.9.1 | https://gin-gonic.com/ |
| Fiber | TODO | TODO | https://gofiber.io/ |
| Flask | TODO | TODO | https://github.com/pallets/flask |
| Django | TODO | TODO | https://www.djangoproject.com/ |

Databases

| Database | Description | Tested version | Client libraries |
|---|---|---|---|
| MySQL | Relational database | 8.0 | sqlalchemy+aiomysql |
| Redis | NoSQL database | 7.2 | aioredis |

3. HTTP Load Testing with wrk

FastAPI

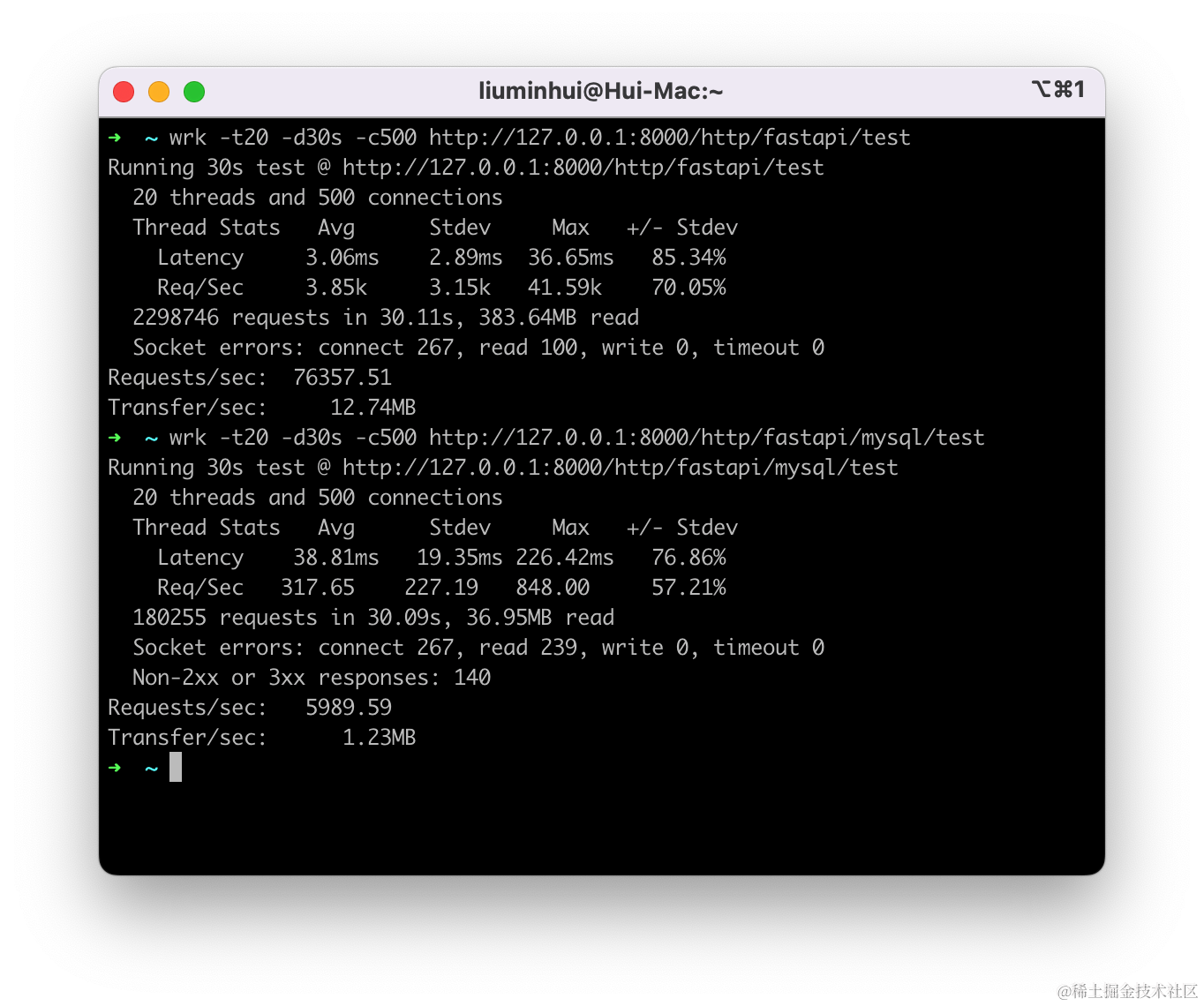

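Plain HTTP request test

Install dependencies

```bash
pip install fastapi==0.103.1
pip install uvicorn==0.23.2
```

Write the test route

```python
from fastapi import FastAPI

app = FastAPI(summary="FastAPI performance test")


@app.get(path="/http/fastapi/test")
async def fastapi_test():
    # Return a fixed JSON body so only framework overhead is measured.
    return {"code": 0, "message": "fastapi_http_test", "data": {}}
```

Run it with Uvicorn, here with four worker processes:

```bash
uvicorn fastapi_test:app --log-level critical --port 8000 --workers 4
```

Load test with wrk

Use 20 threads and 500 connections, and keep sending requests for 30s:

```bash
wrk -t20 -d30s -c500 http://127.0.0.1:8000/http/fastapi/test
```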

Result

```text
➜ ~ wrk -t20 -d30s -c500 http://127.0.0.1:8000/http/fastapi/test
Running 30s test @ http://127.0.0.1:8000/http/fastapi/test
  20 threads and 500 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency     3.06ms    2.89ms   36.65ms   85.34%
    Req/Sec     3.85k     3.15k    41.59k    70.05%
  2298746 requests in 30.11s, 383.64MB read
  Socket errors: connect 267, read 100, write 0, timeout 0
Requests/sec:  76357.51
Transfer/sec:     12.74MB
```

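Thread Stats summarizes the measurements collected by the 20 load-generating threads:

- Avg (Latency): mean response latency
- Stdev (Latency): standard deviation of the latency
- Max (Latency): highest latency observed
- +/- Stdev (Latency): percentage of latency samples that fall within one standard deviation of the mean
- Req/Sec: requests completed per second by each thread
- +/- Stdev (Req/Sec): percentage of per-thread Req/Sec samples that fall within one standard deviation of the mean

`Socket errors: connect 267, read 100, write 0, timeout 0` counts the socket errors seen during the run:

- connect: connection errors; 267 connection attempts failed
- read: read errors; 100 reads failed
- write: write errors; 0 writes failed
- timeout: timeout errors; 0 requests timed out

The same 267 connect errors show up in every run below, which suggests a client-side limit (for example the open-file limit on the test machine) rather than a problem in any one framework.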
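One way to sanity-check these numbers is Little's law: average in-flight requests ≈ throughput × average latency. The sketch below applies it to the FastAPI plain-request run. The helper name `in_flight` is made up for illustration, and reading 500 − 267 = 233 as the number of connections that stayed usable is an assumption based on the connect-error count, not something wrk reports directly.

```python
def in_flight(requests_per_sec: float, avg_latency_ms: float) -> float:
    """Little's law: average concurrent requests = throughput * latency."""
    return requests_per_sec * (avg_latency_ms / 1000.0)


# Figures taken from the FastAPI plain-request run.
estimated = in_flight(76357.51, 3.06)

# Assumption: connections that failed to connect never carried traffic.
usable_connections = 500 - 267

print(f"estimated in-flight requests: {estimated:.1f}")
print(f"connections left after connect errors: {usable_connections}")
```

The two figures land close together (about 233.7 versus 233), which is consistent with wrk effectively driving around 233 connections rather than the requested 500.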

MySQL query test

Next, a quick look at what happens once a database query is involved.

First, add the extra project dependency:

```bash
pip install 'hui-tools[db-orm,db-redis]==0.2.0'
```

hui-tools is a utility library I develop myself; contributions are welcome. https://github.com/HuiDBK/py-tools

```python
#!/usr/bin/python3
# -*- coding: utf-8 -*-
# @Author: Hui
# @Desc: { FastAPI performance test }
# @Date: 2023/09/10 12:24
import uvicorn
from fastapi import FastAPI
from py_tools.connections.db.mysql import SQLAlchemyManager, DBManager

app = FastAPI(summary="FastAPI performance test")


async def init_orm():
    # Create the MySQL engine and register it with the shared DB manager.
    db_client = SQLAlchemyManager(
        host="127.0.0.1",
        port=3306,
        user="root",
        password="123456",
        db_name="house_rental"
    )
    db_client.init_mysql_engine()
    DBManager.init_db_client(db_client)


@app.on_event("startup")
async def startup_event():
    """Prepare the environment when the app starts."""
    await init_orm()


@app.get(path="/http/fastapi/mysql/test")
async def fastapi_mysql_query_test():
    sql = "select id, username, role from user_basic where username='hui'"
    ret = await DBManager().run_sql(sql)

    # Pair the column names with the single row to build a dict.
    column_names = [desc[0] for desc in ret.cursor.description]
    result_tuple = ret.fetchone()
    user_info = dict(zip(column_names, result_tuple))

    return {"code": 0, "message": "fastapi_http_test", "data": {**user_info}}
```

Load test with wrk

```bash
wrk -t20 -d30s -c500 http://127.0.0.1:8000/http/fastapi/mysql/test
```

```text
➜ ~ wrk -t20 -d30s -c500 http://127.0.0.1:8000/http/fastapi/mysql/test
Running 30s test @ http://127.0.0.1:8000/http/fastapi/mysql/test
  20 threads and 500 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    38.81ms   19.35ms  226.42ms   76.86%
    Req/Sec   317.65    227.19    848.00     57.21%
  180255 requests in 30.09s, 36.95MB read
  Socket errors: connect 267, read 239, write 0, timeout 0
  Non-2xx or 3xx responses: 140
Requests/sec:   5989.59
Transfer/sec:      1.23MB
```

Adding just one simple database query drops QPS from 76357.51 to 5989.59, a more than tenfold decrease (about 12.7x). The single-node database cannot keep up with that many requests, so the database is the concurrency bottleneck. Putting a Redis cache in front of MySQL shows how much of that throughput can be recovered.

Redis cache query test

```python
#!/usr/bin/python3
# -*- coding: utf-8 -*-
# @Author: Hui
# @Desc: { FastAPI performance test }
# @Date: 2023/09/10 12:24
import json
from datetime import timedelta

import uvicorn
from fastapi import FastAPI
from py_tools.connections.db.mysql import SQLAlchemyManager, DBManager
from py_tools.connections.db.redis_client import RedisManager

app = FastAPI(summary="FastAPI performance test")


async def init_orm():
    # Create the MySQL engine and register it with the shared DB manager.
    db_client = SQLAlchemyManager(
        host="127.0.0.1",
        port=3306,
        user="root",
        password="123456",
        db_name="house_rental"
    )
    db_client.init_mysql_engine()
    DBManager.init_db_client(db_client)


async def init_redis():
    RedisManager.init_redis_client(
        async_client=True,
        host="127.0.0.1",
        port=6379,
        db=0,
    )


@app.on_event("startup")
async def startup_event():
    """Prepare the environment when the app starts."""
    await init_orm()
    await init_redis()


@app.get(path="/http/fastapi/redis/{username}")
async def fastapi_redis_query_test(username: str):
    # Check the cache first.
    user_info = await RedisManager.client.get(name=username)
    if user_info:
        user_info = json.loads(user_info)
        return {"code": 0, "message": "fastapi_redis_test", "data": {**user_info}}

    # Cache miss: query MySQL.
    # NOTE: interpolating user input into SQL is tolerable only in a local
    # benchmark; real code should use bound parameters.
    sql = f"select id, username, role from user_basic where username='{username}'"
    ret = await DBManager().run_sql(sql)

    column_names = [desc[0] for desc in ret.cursor.description]
    result_tuple = ret.fetchone()
    user_info = dict(zip(column_names, result_tuple))

    # Store the result in Redis for 3 minutes.
    await RedisManager.client.set(
        name=user_info.get("username"),
        value=json.dumps(user_info),
        ex=timedelta(minutes=3)
    )

    return {"code": 0, "message": "fastapi_redis_test", "data": {**user_info}}


if __name__ == '__main__':
    uvicorn.run(app)
```
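The Redis route builds its SQL with an f-string from a URL path segment, which is fine for a local benchmark but open to SQL injection anywhere else. As a hedged illustration of the alternative, the sketch below uses bound parameters with the standard-library `sqlite3` module. This is a stand-in chosen so the example runs without MySQL; it is not the `hui-tools` API, and the `find_user` helper and the sample row are made up.

```python
import sqlite3

# In-memory stand-in for the user_basic table (the sample row is hypothetical).
conn = sqlite3.connect(":memory:")
conn.execute("create table user_basic (id integer, username text, role text)")
conn.execute("insert into user_basic values (1, 'hui', 'admin')")


def find_user(username: str):
    """Look up a user with a bound parameter instead of string formatting."""
    cur = conn.execute(
        "select id, username, role from user_basic where username = ?",
        (username,),
    )
    row = cur.fetchone()
    if row is None:
        return None
    column_names = [desc[0] for desc in cur.description]
    return dict(zip(column_names, row))


print(find_user("hui"))
print(find_user("hui' or '1'='1"))
```

With a bound parameter the injection attempt is treated as a literal username, so the second lookup finds nothing instead of matching every row.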

Run

```bash
wrk -t20 -d30s -c500 http://127.0.0.1:8000/http/fastapi/redis/hui
```

Result

```text
➜ ~ wrk -t20 -d30s -c500 http://127.0.0.1:8000/http/fastapi/redis/hui
Running 30s test @ http://127.0.0.1:8000/http/fastapi/redis/hui
  20 threads and 500 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency     9.60ms    5.59ms  126.63ms   88.41%
    Req/Sec     1.22k     0.91k     3.45k    57.54%
  730083 requests in 30.10s, 149.70MB read
  Socket errors: connect 267, read 101, write 0, timeout 0
Requests/sec:  24257.09
Transfer/sec:      4.97MB
```

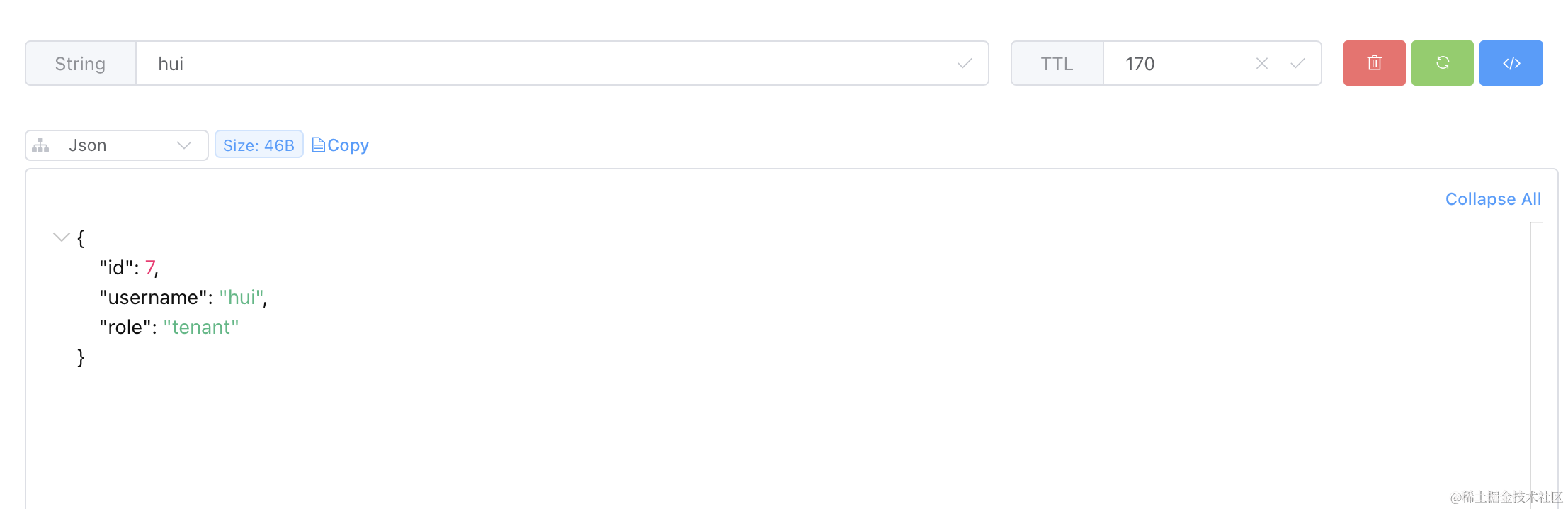

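Cached data

With the Redis cache in place, throughput improves considerably, so in day-to-day development, data that is read often and rarely modified is a good candidate for caching.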
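The pattern used in the Redis route is often called cache-aside: read the cache first, fall back to the database on a miss, then store the result with an expiry. A minimal in-process sketch of that flow follows. `TTLCache`, `load_user`, `query_db`, and the fake database dict are hypothetical names for illustration only; a real deployment would keep using Redis as in the route above.

```python
import time
from typing import Callable, Optional


class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._store: dict = {}

    def get(self, key: str) -> Optional[dict]:
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: drop it and report a miss
            return None
        return value

    def set(self, key: str, value: dict) -> None:
        self._store[key] = (value, self.clock() + self.ttl_seconds)


# Stand-in for the MySQL table; the row is made up.
FAKE_DB = {"hui": {"id": 1, "username": "hui", "role": "admin"}}
db_hits = 0


def query_db(username: str) -> Optional[dict]:
    """Pretend database lookup that counts how often it is called."""
    global db_hits
    db_hits += 1
    return FAKE_DB.get(username)


cache = TTLCache(ttl_seconds=180)  # 3 minutes, matching the route


def load_user(username: str) -> Optional[dict]:
    cached = cache.get(username)
    if cached is not None:
        return cached  # cache hit: the database is not touched
    user = query_db(username)
    if user is not None:
        cache.set(username, user)
    return user


load_user("hui")
load_user("hui")
print(f"database lookups after two requests: {db_hits}")
```

Only the first request reaches the fake database; the second is served from the cache until the entry expires.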

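Test summary

| Test type | Duration | Threads | Connections | Total requests | QPS | Avg latency | Max latency | Total data read | Transfer/s |
|---|---|---|---|---|---|---|---|---|---|
| Plain request | 30s | 20 | 500 | 2298746 | 76357.51 | 3.06ms | 36.65ms | 383.64MB | 12.74MB |
| MySQL query | 30s | 20 | 500 | 180255 | 5989.59 | 38.81ms | 226.42ms | 36.95MB | 1.23MB |
| Redis cache | 30s | 20 | 500 | 730083 | 24257.09 | 9.60ms | 126.63ms | 149.70MB | 4.97MB |

Putting a Redis cache in front of the MySQL query raised QPS about fourfold (from 5989.59 to 24257.09). For data that is read often and rarely modified, a cache sized to the business need can greatly increase system throughput. For the other frameworks I will go straight to the code and the result figures without repeating every step.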
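Since the remaining sections mostly present raw wrk output, a small helper for pulling the headline numbers out of that text can make it easier to fill in summary tables like the one above. This is only a sketch: `parse_wrk` is a hypothetical helper, the regular expressions assume the output layout shown in this article, and the duration pattern handles only runs reported in seconds.

```python
import re

# Sample copied from the FastAPI plain-request run.
SAMPLE = """\
Running 30s test @ http://127.0.0.1:8000/http/fastapi/test
  20 threads and 500 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency     3.06ms    2.89ms   36.65ms   85.34%
    Req/Sec     3.85k     3.15k    41.59k    70.05%
  2298746 requests in 30.11s, 383.64MB read
  Socket errors: connect 267, read 100, write 0, timeout 0
Requests/sec:  76357.51
Transfer/sec:     12.74MB
"""


def parse_wrk(output: str) -> dict:
    """Extract the headline numbers from wrk's text summary."""
    result = {}

    # "<count> requests in <seconds>s, <size> read"
    m = re.search(r"(\d+) requests in ([\d.]+)s, ([\d.]+\w+) read", output)
    if m:
        result["requests"] = int(m.group(1))
        result["duration_s"] = float(m.group(2))
        result["total_read"] = m.group(3)

    m = re.search(r"Requests/sec:\s+([\d.]+)", output)
    if m:
        result["qps"] = float(m.group(1))

    # Latency row: Avg, Stdev, Max, +/- Stdev; keep Avg and Max as strings.
    m = re.search(r"Latency\s+(\S+)\s+\S+\s+(\S+)", output)
    if m:
        result["avg_latency"] = m.group(1)
        result["max_latency"] = m.group(2)

    m = re.search(r"Transfer/sec:\s+(\S+)", output)
    if m:
        result["transfer_per_s"] = m.group(1)

    return result


print(parse_wrk(SAMPLE))
```

Latency and size values are kept as strings with their units so that mixed units such as `ms` and `s` are not silently compared.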

Sanic

The test procedure is identical, so instead of walking through each test one by one as with FastAPI, the full code comes first and the results follow.

Install dependencies

```bash
pip install sanic==23.6.0
pip install 'hui-tools[db-orm,db-redis]==0.2.0'
```

Write the test routes

```python
#!/usr/bin/python3
# -*- coding: utf-8 -*-
# @Author: Hui
# @Desc: { Sanic performance test }
# @Date: 2023/09/10 12:24
import json
from datetime import timedelta

from py_tools.connections.db.mysql import SQLAlchemyManager, DBManager
from py_tools.connections.db.redis_client import RedisManager
from sanic import Sanic
from sanic.response import json as sanic_json

app = Sanic("sanic_test")


async def init_orm():
    # Create the MySQL engine and register it with the shared DB manager.
    db_client = SQLAlchemyManager(
        host="127.0.0.1",
        port=3306,
        user="root",
        password="123456",
        db_name="house_rental"
    )
    db_client.init_mysql_engine()
    DBManager.init_db_client(db_client)


async def init_redis():
    RedisManager.init_redis_client(
        async_client=True,
        host="127.0.0.1",
        port=6379,
        db=0,
    )


@app.listener('before_server_start')
async def server_start_event(app, loop):
    await init_orm()
    await init_redis()


@app.get(uri="/http/sanic/test")
async def sanic_test(req):
    return sanic_json({"code": 0, "message": "sanic_http_test", "data": {}})


@app.get(uri="/http/sanic/mysql/test")
async def sanic_mysql_query_test(req):
    sql = "select id, username, role from user_basic where username='hui'"
    ret = await DBManager().run_sql(sql)

    column_names = [desc[0] for desc in ret.cursor.description]
    result_tuple = ret.fetchone()
    user_info = dict(zip(column_names, result_tuple))

    return sanic_json({"code": 0, "message": "sanic_mysql_test", "data": {**user_info}})


@app.get(uri="/http/sanic/redis/<username>")
async def sanic_redis_query_test(req, username: str):
    # Check the cache first.
    user_info = await RedisManager.client.get(name=username)
    if user_info:
        user_info = json.loads(user_info)
        return sanic_json({"code": 0, "message": "sanic_redis_test", "data": {**user_info}})

    # Cache miss: query MySQL (string interpolation is for the benchmark only).
    sql = f"select id, username, role from user_basic where username='{username}'"
    ret = await DBManager().run_sql(sql)

    column_names = [desc[0] for desc in ret.cursor.description]
    result_tuple = ret.fetchone()
    user_info = dict(zip(column_names, result_tuple))

    # Store the result in Redis for 3 minutes.
    await RedisManager.client.set(
        name=user_info.get("username"),
        value=json.dumps(user_info),
        ex=timedelta(minutes=3)
    )

    return sanic_json({"code": 0, "message": "sanic_redis_test", "data": {**user_info}})


def main():
    app.run()


if __name__ == '__main__':
    # sanic sanic_test.app -p 8001 -w 4 --access-log=False
    main()
```

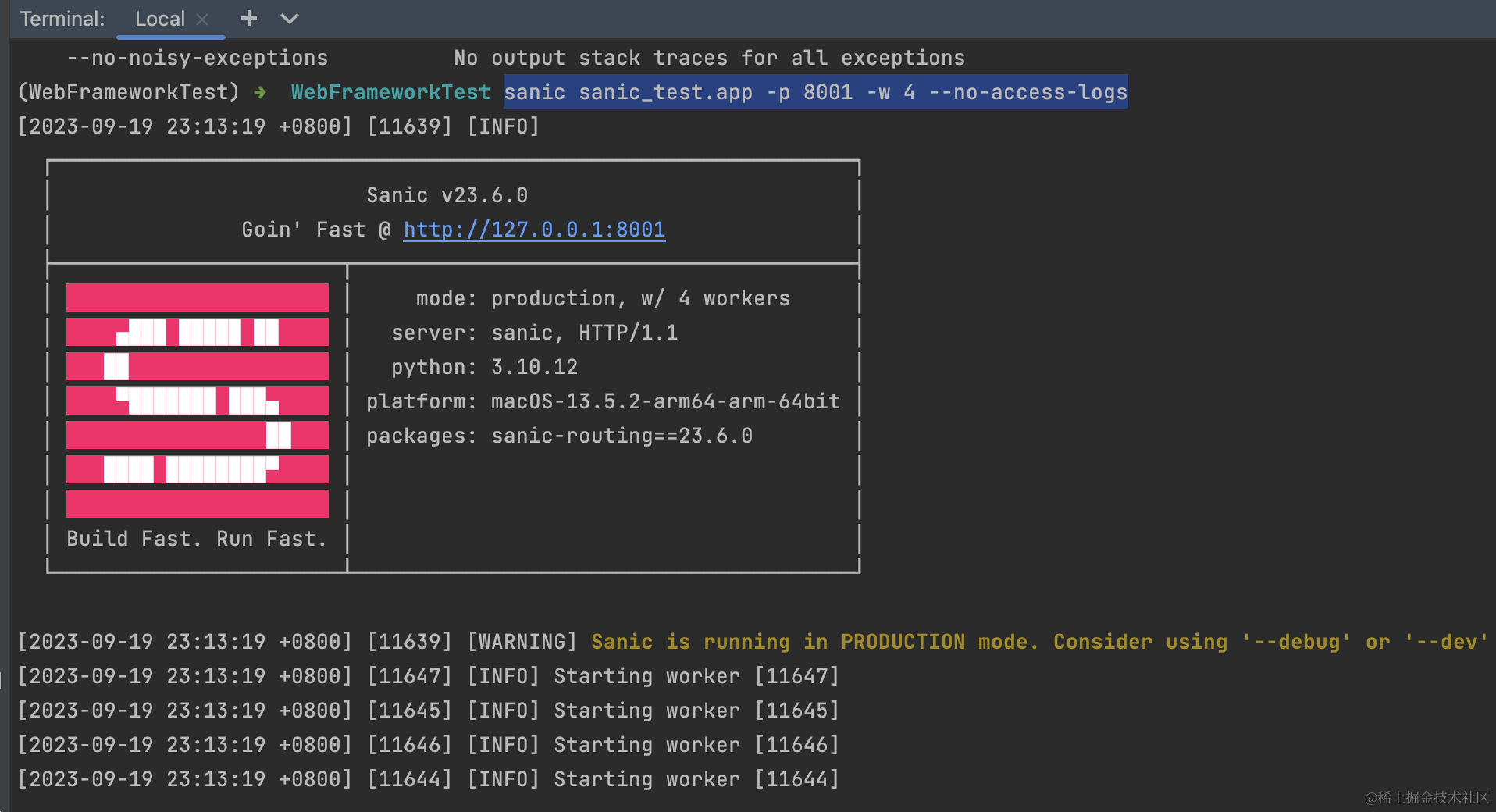

Run

Sanic ships with a built-in production-ready web server that can be used directly:

```bash
sanic python.sanic_test.app -p 8001 -w 4 --access-log=False
```

Plain HTTP request test

Again four worker processes are started to see how it performs:

```bash
wrk -t20 -d30s -c500 http://127.0.0.1:8001/http/sanic/test
```

Result

```text
➜ ~ wrk -t20 -d30s -c500 http://127.0.0.1:8001/http/sanic/test
Running 30s test @ http://127.0.0.1:8001/http/sanic/test
  20 threads and 500 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency     1.93ms    2.20ms   61.89ms   91.96%
    Req/Sec     6.10k     3.80k    27.08k    69.37%
  3651099 requests in 30.10s, 497.92MB read
  Socket errors: connect 267, read 163, write 0, timeout 0
Requests/sec: 121286.47
Transfer/sec:     16.54MB
```

Sanic really is fast; it is probably among the very fastest Python frameworks.

MySQL query test

Run

```bash
wrk -t20 -d30s -c500 http://127.0.0.1:8001/http/sanic/mysql/test
```

Result

```text
➜ ~ wrk -t20 -d30s -c500 http://127.0.0.1:8001/http/sanic/mysql/test
Running 30s test @ http://127.0.0.1:8001/http/sanic/mysql/test
  20 threads and 500 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    35.22ms   21.75ms  264.37ms   78.52%
    Req/Sec   333.14    230.95      1.05k    68.99%
  198925 requests in 30.10s, 34.72MB read
  Socket errors: connect 267, read 146, write 0, timeout 0
Requests/sec:   6609.65
Transfer/sec:      1.15MB
```

Redis cache query test

Run

```bash
wrk -t20 -d30s -c500 http://127.0.0.1:8001/http/sanic/redis/hui
```

Result

```text
➜ ~ wrk -t20 -d30s -c500 http://127.0.0.1:8001/http/sanic/redis/hui
Running 30s test @ http://127.0.0.1:8001/http/sanic/redis/hui
  20 threads and 500 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency     6.91ms    4.13ms  217.47ms   95.62%
    Req/Sec     1.71k     0.88k     4.28k    68.05%
  1022884 requests in 30.09s, 178.52MB read
  Socket errors: connect 267, read 163, write 0, timeout 0
Requests/sec:  33997.96
Transfer/sec:      5.93MB
```

Test summary

| Test type | Duration | Threads | Connections | Total requests | QPS | Avg latency | Max latency | Total data read | Transfer/s |
|---|---|---|---|---|---|---|---|---|---|
| Plain request | 30s | 20 | 500 | 3651099 | 121286.47 | 1.93ms | 61.89ms | 497.92MB | 16.54MB |
| MySQL query | 30s | 20 | 500 | 198925 | 6609.65 | 35.22ms | 264.37ms | 34.72MB | 1.15MB |
| Redis cache | 30s | 20 | 500 | 1022884 | 33997.96 | 6.91ms | 217.47ms | 178.52MB | 5.93MB |

Tornado

Install dependencies

```bash
pip install tornado==6.3.3
pip install gunicorn==21.2.0
pip install 'hui-tools[db-orm,db-redis]==0.2.0'
```

Write the test routes

```python
#!/usr/bin/python3
# -*- coding: utf-8 -*-
# @Author: Hui
# @Desc: { Tornado performance test }
# @Date: 2023/09/20 22:42
import asyncio
from datetime import timedelta
import json

import tornado.web
import tornado.ioloop
from tornado.httpserver import HTTPServer

from py_tools.connections.db.mysql import SQLAlchemyManager, DBManager
from py_tools.connections.db.redis_client import RedisManager


class TornadoBaseHandler(tornado.web.RequestHandler):
    pass


class TornadoTestHandler(TornadoBaseHandler):
    async def get(self):
        self.write({"code": 0, "message": "tornado_http_test", "data": {}})


class TornadoMySQLTestHandler(TornadoBaseHandler):
    async def get(self):
        sql = "select id, username, role from user_basic where username='hui'"
        ret = await DBManager().run_sql(sql)

        column_names = [desc[0] for desc in ret.cursor.description]
        result_tuple = ret.fetchone()
        user_info = dict(zip(column_names, result_tuple))

        self.write({"code": 0, "message": "tornado_mysql_test", "data": {**user_info}})


class TornadoRedisTestHandler(TornadoBaseHandler):
    async def get(self, username):
        # Check the cache first.
        user_info = await RedisManager.client.get(name=username)
        if user_info:
            user_info = json.loads(user_info)
            self.write(
                {"code": 0, "message": "tornado_redis_test", "data": {**user_info}}
            )
            return

        # Cache miss: query MySQL (string interpolation is for the benchmark only).
        sql = f"select id, username, role from user_basic where username='{username}'"
        ret = await DBManager().run_sql(sql)

        column_names = [desc[0] for desc in ret.cursor.description]
        result_tuple = ret.fetchone()
        user_info = dict(zip(column_names, result_tuple))

        # Store the result in Redis for 3 minutes.
        await RedisManager.client.set(
            name=user_info.get("username"),
            value=json.dumps(user_info),
            ex=timedelta(minutes=3),
        )
        self.write({"code": 0, "message": "tornado_redis_test", "data": {**user_info}})


def init_orm():
    db_client = SQLAlchemyManager(
        host="127.0.0.1",
        port=3306,
        user="root",
        password="123456",
        db_name="house_rental",
    )
    db_client.init_mysql_engine()
    DBManager.init_db_client(db_client)


def init_redis():
    RedisManager.init_redis_client(
        async_client=True,
        host="127.0.0.1",
        port=6379,
        db=0,
    )


def init_setup():
    init_orm()
    init_redis()


def make_app():
    init_setup()
    return tornado.web.Application(
        [
            (r"/http/tornado/test", TornadoTestHandler),
            (r"/http/tornado/mysql/test", TornadoMySQLTestHandler),
            (r"/http/tornado/redis/(.*)", TornadoRedisTestHandler),
        ]
    )


# Module-level app so gunicorn can import it.
app = make_app()


async def main():
    # Single-process run for local debugging; the benchmark itself uses the
    # gunicorn command in the __main__ block with 4 workers.
    server = HTTPServer(app)
    server.listen(8002)  # bind() alone never starts accepting connections
    await asyncio.Event().wait()


if __name__ == "__main__":
    # gunicorn -k tornado -w=4 -b=127.0.0.1:8002 python.tornado_test:app
    asyncio.run(main())
```

Run the Tornado service

```bash
gunicorn -k tornado -w=4 -b=127.0.0.1:8002 python.tornado_test:app
```

Load test with wrk

```bash
wrk -t20 -d30s -c500 http://127.0.0.1:8002/http/tornado/test
wrk -t20 -d30s -c500 http://127.0.0.1:8002/http/tornado/mysql/test
wrk -t20 -d30s -c500 http://127.0.0.1:8002/http/tornado/redis/hui
```

Result

```text
➜ ~ wrk -t20 -d30s -c500 http://127.0.0.1:8002/http/tornado/test
Running 30s test @ http://127.0.0.1:8002/http/tornado/test
  20 threads and 500 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency     6.54ms    1.92ms   34.75ms   63.85%
    Req/Sec     1.79k     1.07k     3.83k    56.23%
  1068205 requests in 30.07s, 280.15MB read
  Socket errors: connect 267, read 98, write 0, timeout 0
Requests/sec:  35525.38
Transfer/sec:      9.32MB

➜ ~ wrk -t20 -d30s -c500 http://127.0.0.1:8002/http/tornado/mysql/test
Running 30s test @ http://127.0.0.1:8002/http/tornado/mysql/test
  20 threads and 500 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    41.29ms   16.51ms  250.81ms   71.45%
    Req/Sec   283.47    188.81      0.95k    65.31%
  169471 requests in 30.09s, 51.88MB read
  Socket errors: connect 267, read 105, write 0, timeout 0
Requests/sec:   5631.76
Transfer/sec:      1.72MB

➜ ~ wrk -t20 -d30s -c500 http://127.0.0.1:8002/http/tornado/redis/hui
Running 30s test @ http://127.0.0.1:8002/http/tornado/redis/hui
  20 threads and 500 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    11.69ms    3.83ms  125.75ms   78.27%
    Req/Sec     1.00k   537.85      2.20k    64.34%
  599840 requests in 30.07s, 183.63MB read
  Socket errors: connect 267, read 97, write 0, timeout 0
  Non-2xx or 3xx responses: 2
Requests/sec:  19947.28
Transfer/sec:      6.11MB
```

Gin

Install dependencies

```bash
go get "github.com/gin-gonic/gin"
go get "github.com/go-redis/redis"
go get "gorm.io/driver/mysql"
go get "gorm.io/gorm"
```

Write the code

```go
package main

import (
	"encoding/json"
	"time"

	"github.com/gin-gonic/gin"
	"github.com/go-redis/redis"
	"gorm.io/driver/mysql"
	"gorm.io/gorm"
	"gorm.io/gorm/logger"
)

var (
	db          *gorm.DB
	redisClient *redis.Client
)

type UserBasic struct {
	Id       int    `json:"id"`
	Username string `json:"username"`
	Role     string `json:"role"`
}

func (UserBasic) TableName() string {
	return "user_basic"
}

func initDB() *gorm.DB {
	var err error
	db, err = gorm.Open(mysql.Open("root:123456@/house_rental"), &gorm.Config{
		// Set LogMode to logger.Silent to disable log output.
		Logger: logger.Default.LogMode(logger.Silent),
	})
	if err != nil {
		panic("failed to connect database")
	}

	sqlDB, err := db.DB()
	if err != nil {
		panic("failed to get underlying sql.DB")
	}

	// SetMaxIdleConns sets the maximum number of connections in the idle connection pool.
	sqlDB.SetMaxIdleConns(10)

	// SetMaxOpenConns sets the maximum number of open connections to the database.
	sqlDB.SetMaxOpenConns(30)

	// SetConnMaxLifetime sets the maximum amount of time a connection may be reused.
	sqlDB.SetConnMaxLifetime(time.Hour)

	return db
}

func initRedis() *redis.Client {
	redisClient = redis.NewClient(&redis.Options{
		Addr: "localhost:6379",
	})
	return redisClient
}

func jsonTestHandler(c *gin.Context) {
	c.JSON(200, gin.H{
		"code": 0, "message": "gin json", "data": make(map[string]any),
	})
}

func mysqlQueryHandler(c *gin.Context) {
	// Query the user.
	var user UserBasic
	db.First(&user, "username = ?", "hui")

	// Send the response.
	c.JSON(200, gin.H{
		"code":    0,
		"message": "go mysql test",
		"data":    user,
	})
}

func cacheQueryHandler(c *gin.Context) {
	// Try the Redis cache first.
	username := "hui" // username to look up
	cachedUser, err := redisClient.Get(username).Result()
	if err == nil {
		// Cache hit: return the cached result to the client.
		var user UserBasic
		_ = json.Unmarshal([]byte(cachedUser), &user)
		c.JSON(200, gin.H{
			"code":    0,
			"message": "gin redis test",
			"data":    user,
		})
		return
	}

	// Cache miss: run the database query.
	var user UserBasic
	db.First(&user, "username = ?", username)

	// Save the query result to the Redis cache.
	userJSON, _ := json.Marshal(user)
	redisClient.Set(username, userJSON, time.Minute*2)

	// Send the response.
	c.JSON(200, gin.H{
		"code":    0,
		"message": "gin redis test",
		"data":    user,
	})
}

func initDao() {
	initDB()
	initRedis()
}

func main() {
	gin.SetMode(gin.ReleaseMode) // release mode; set before creating the engine
	r := gin.New()               // gin.Default() would also add Logger and Recovery middleware

	initDao()

	r.GET("/http/gin/test", jsonTestHandler)
	r.GET("/http/gin/mysql/test", mysqlQueryHandler)
	r.GET("/http/gin/redis/test", cacheQueryHandler)

	if err := r.Run("127.0.0.1:8003"); err != nil {
		panic(err)
	}
}
```

Load test with wrk

```bash
wrk -t20 -d30s -c500 http://127.0.0.1:8003/http/gin/test
wrk -t20 -d30s -c500 http://127.0.0.1:8003/http/gin/mysql/test
wrk -t20 -d30s -c500 http://127.0.0.1:8003/http/gin/redis/test
```

Result

```text
➜ ~ wrk -t20 -d30s -c500 http://127.0.0.1:8003/http/gin/test
Running 30s test @ http://127.0.0.1:8003/http/gin/test
  20 threads and 500 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency     2.45ms    5.68ms  186.48ms   91.70%
    Req/Sec     6.36k     5.62k    53.15k    83.99%
  3787808 requests in 30.10s, 592.42MB read
  Socket errors: connect 267, read 95, write 0, timeout 0
Requests/sec: 125855.41
Transfer/sec:     19.68MB

➜ ~ wrk -t20 -d30s -c500 http://127.0.0.1:8003/http/gin/mysql/test
Running 30s test @ http://127.0.0.1:8003/http/gin/mysql/test
  20 threads and 500 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    40.89ms   83.70ms    1.12s    90.99%
    Req/Sec   522.33    322.88      1.72k    64.84%
  308836 requests in 30.10s, 61.26MB read
  Socket errors: connect 267, read 100, write 0, timeout 0
Requests/sec:  10260.63
Transfer/sec:      2.04MB

➜ ~ wrk -t20 -d30s -c500 http://127.0.0.1:8003/http/gin/redis/test
Running 30s test @ http://127.0.0.1:8003/http/gin/redis/test
  20 threads and 500 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency     7.18ms    1.76ms   79.40ms   81.93%
    Req/Sec     1.63k     1.09k     4.34k    62.59%
  972272 requests in 30.10s, 193.79MB read
  Socket errors: connect 267, read 104, write 0, timeout 0
Requests/sec:  32305.30
Transfer/sec:      6.44MB
```

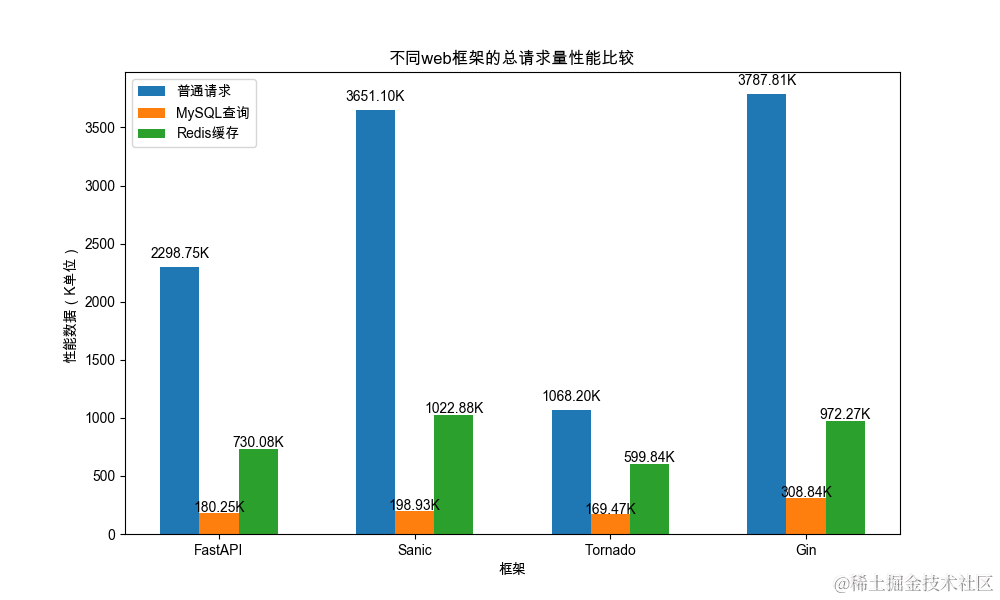

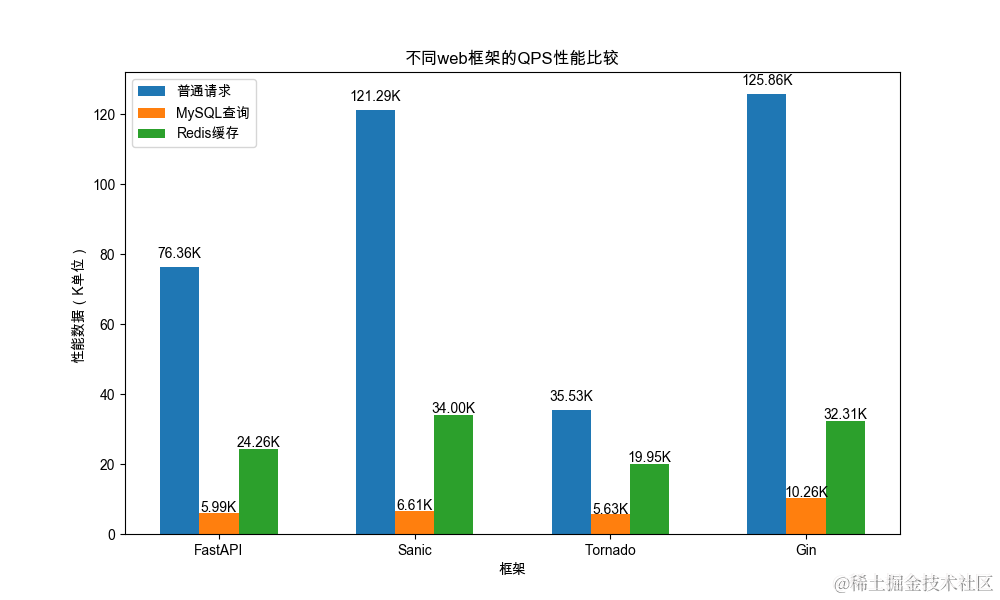

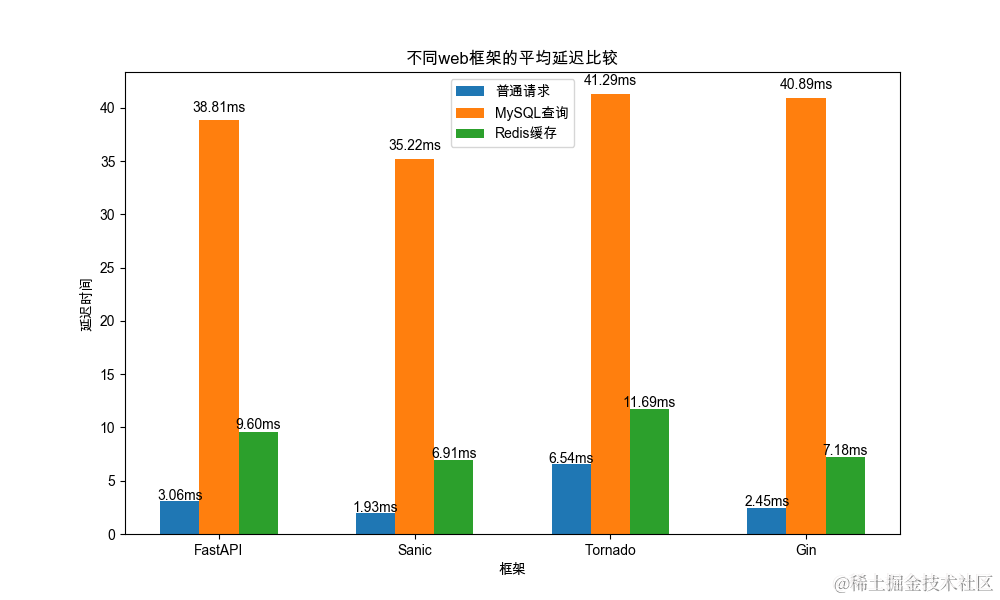

4. Summary

| Framework | Test type | Duration | Threads | Connections | Total requests | QPS | Avg latency | Max latency | Total data read | Transfer/s |
|---|---|---|---|---|---|---|---|---|---|---|
| FastAPI | Plain request | 30s | 20 | 500 | 2298746 (2.30M) | 76357.51 (76.4k) | 3.06ms | 36.65ms | 383.64MB | 12.74MB |
| FastAPI | MySQL query | 30s | 20 | 500 | 180255 (180k) | 5989.59 (6.0k) | 38.81ms | 226.42ms | 36.95MB | 1.23MB |
| FastAPI | Redis cache | 30s | 20 | 500 | 730083 (730k) | 24257.09 (24.3k) | 9.60ms | 126.63ms | 149.70MB | 4.97MB |
| Sanic | Plain request | 30s | 20 | 500 | 3651099 (3.65M) | 121286.47 (121.3k) | 1.93ms | 61.89ms | 497.92MB | 16.54MB |
| Sanic | MySQL query | 30s | 20 | 500 | 198925 (199k) | 6609.65 (6.6k) | 35.22ms | 264.37ms | 34.72MB | 1.15MB |
| Sanic | Redis cache | 30s | 20 | 500 | 1022884 (1.02M) | 33997.96 (34.0k) | 6.91ms | 217.47ms | 178.52MB | 5.93MB |
| Tornado | Plain request | 30s | 20 | 500 | 1068205 (1.07M) | 35525.38 (35.5k) | 6.54ms | 34.75ms | 280.15MB | 9.32MB |
| Tornado | MySQL query | 30s | 20 | 500 | 169471 (169k) | 5631.76 (5.6k) | 41.29ms | 250.81ms | 51.88MB | 1.72MB |
| Tornado | Redis cache | 30s | 20 | 500 | 599840 (600k) | 19947.28 (19.9k) | 11.69ms | 125.75ms | 183.63MB | 6.11MB |
| Gin | Plain request | 30s | 20 | 500 | 3787808 (3.79M) | 125855.41 (125.9k) | 2.45ms | 186.48ms | 592.42MB | 19.68MB |
| Gin | MySQL query | 30s | 20 | 500 | 308836 (309k) | 10260.63 (10.3k) | 40.89ms | 1.12s | 61.26MB | 2.04MB |
| Gin | Redis cache | 30s | 20 | 500 | 972272 (972k) | 32305.30 (32.3k) | 7.18ms | 79.40ms | 193.79MB | 6.44MB |

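Performance

By throughput on plain and MySQL requests, the frameworks rank as follows (in the Redis test Sanic edged ahead of Gin, 33997.96 vs. 32305.30 QPS):

Gin > Sanic > FastAPI > Tornado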
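The ordering can be reproduced from the reported Requests/sec figures. The sketch below simply sorts the measured numbers for each scenario; the `QPS` dictionary and the `rank` helper are names chosen for illustration, and the values are copied from the wrk runs in this article.

```python
# Requests/sec reported by wrk for each framework and scenario.
QPS = {
    "FastAPI": {"plain": 76357.51, "mysql": 5989.59, "redis": 24257.09},
    "Sanic": {"plain": 121286.47, "mysql": 6609.65, "redis": 33997.96},
    "Tornado": {"plain": 35525.38, "mysql": 5631.76, "redis": 19947.28},
    "Gin": {"plain": 125855.41, "mysql": 10260.63, "redis": 32305.30},
}


def rank(scenario: str) -> list:
    """Framework names sorted from highest to lowest QPS for one scenario."""
    return sorted(QPS, key=lambda name: QPS[name][scenario], reverse=True)


for scenario in ("plain", "mysql", "redis"):
    print(f"{scenario}: " + " > ".join(rank(scenario)))
```

Only the Redis scenario deviates from the overall order, with Sanic slightly ahead of Gin.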

Gin:在普通请求方面表现最佳,具有最高的QPS和吞吐量。在MySQL查询中,性能很高,但最大延迟也相对较高。gin承受的并发请求最高有 1w qps,其他python框架都在5-6k qps,但gin的mysql查询请求最大延迟达到了1.12s, 虽然可以接受这么多并发请求,但单机mysql还是处理不过来。

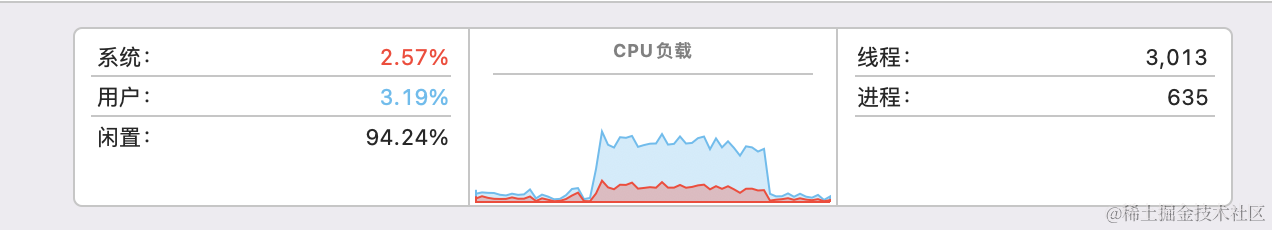

还有非常重要的一点,cpython的多线程由于GIL原因不能充分利用多核CPU,故而都是通过开了四个进程来处理请求,资源开销远远大于go的gin,go底层的GMP的调度策略很强,天然支持并发。

注意:Python使用asyncio语法时切记不要使用同步IO操作不然会堵塞住主线程的事件loop,从而大大降低性能,如果没有异步库支持可以采用线程来处理同步IO。
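As a hedged illustration of that last point, the sketch below offloads a blocking call to a worker thread with `asyncio.to_thread` (available since Python 3.9). `blocking_io` stands in for a synchronous driver call, and the function names and delays are made up for the example.

```python
import asyncio
import time


def blocking_io(delay: float) -> float:
    """Stand-in for a synchronous call such as a blocking DB driver."""
    time.sleep(delay)
    return delay


async def handler_offloaded(delay: float) -> float:
    # Run the blocking call in a worker thread so the event loop stays free.
    return await asyncio.to_thread(blocking_io, delay)


async def run_concurrently(n: int, delay: float) -> float:
    """Start n offloaded calls at once and return the elapsed wall time."""
    start = time.perf_counter()
    await asyncio.gather(*(handler_offloaded(delay) for _ in range(n)))
    return time.perf_counter() - start


elapsed = asyncio.run(run_concurrently(4, 0.2))
print(f"4 offloaded calls of 0.2s each took {elapsed:.2f}s")
```

Calling `time.sleep` directly inside the coroutine would instead run the four calls back to back (about 0.8s in total) and stall every other request on the same event loop in the meantime.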

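Overall assessment

Beyond performance, other factors matter, such as community activity, ecosystem, documentation quality, and the team's familiarity with the framework. These should also be weighed when choosing a web framework.

The final choice should depend on the specific needs of the project. If performance is among the most important factors, Sanic and some of the Go frameworks are good candidates. If other aspects matter more, look at each framework's community support and fit. Personally, I still quite like FastAPI.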

5. Test Source Code

https://github.com/HuiDBK/WebFrameworkPressureTest

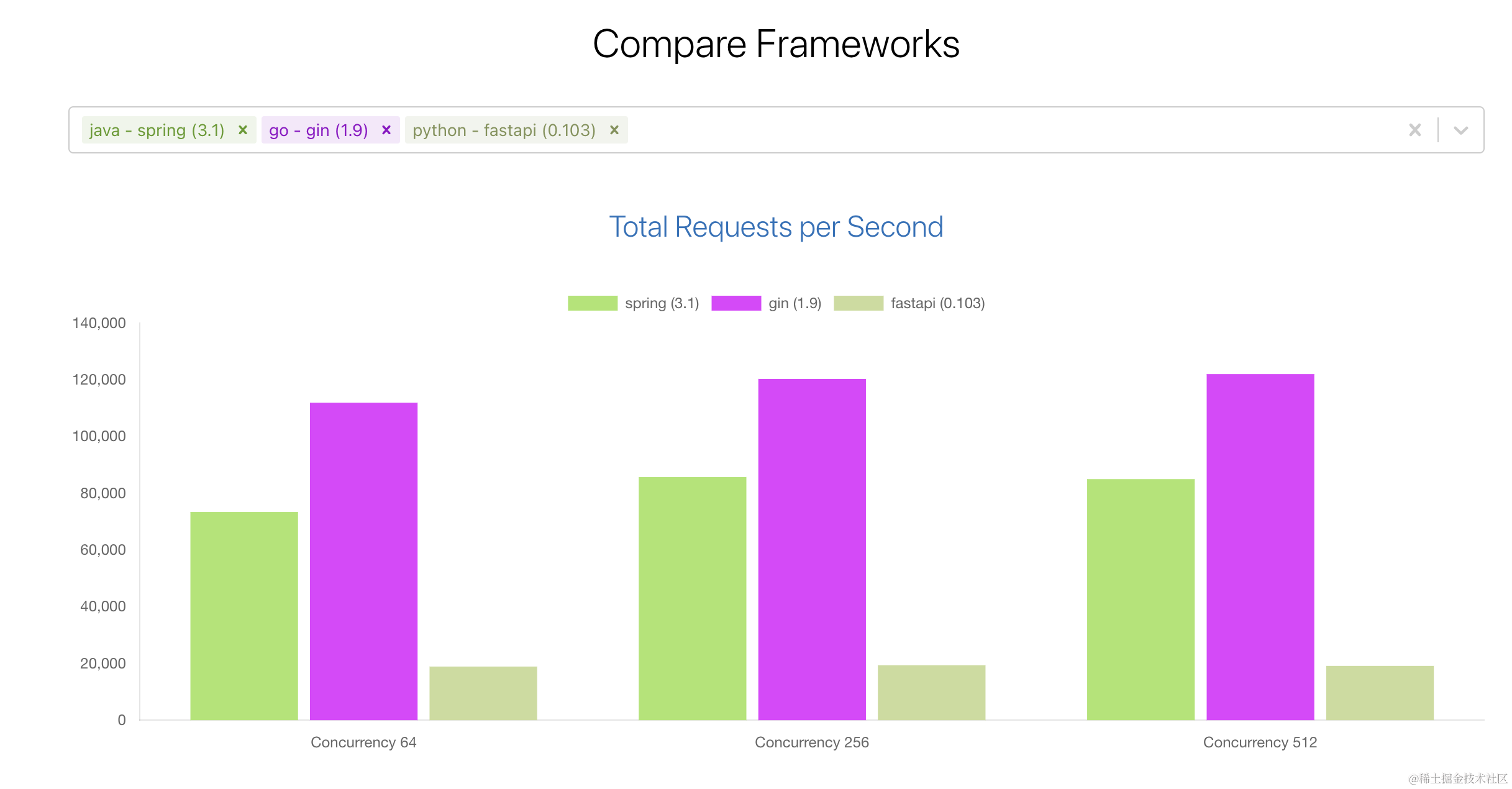

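GitHub already hosts load tests of web frameworks in other languages; if interested, see: https://web-frameworks-benchmark.netlify.app/result

I am not sure why Python performs so poorly in their tests; perhaps the async code was not used correctly 😄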