Contents

- YOLOv8 Model Deployment with TensorRT

- 1. Exporting the model to ONNX format

- 2. Converting the ONNX model to an engine and deploying it

YOLOv8 Model Deployment with TensorRT

1. Exporting the model to ONNX format


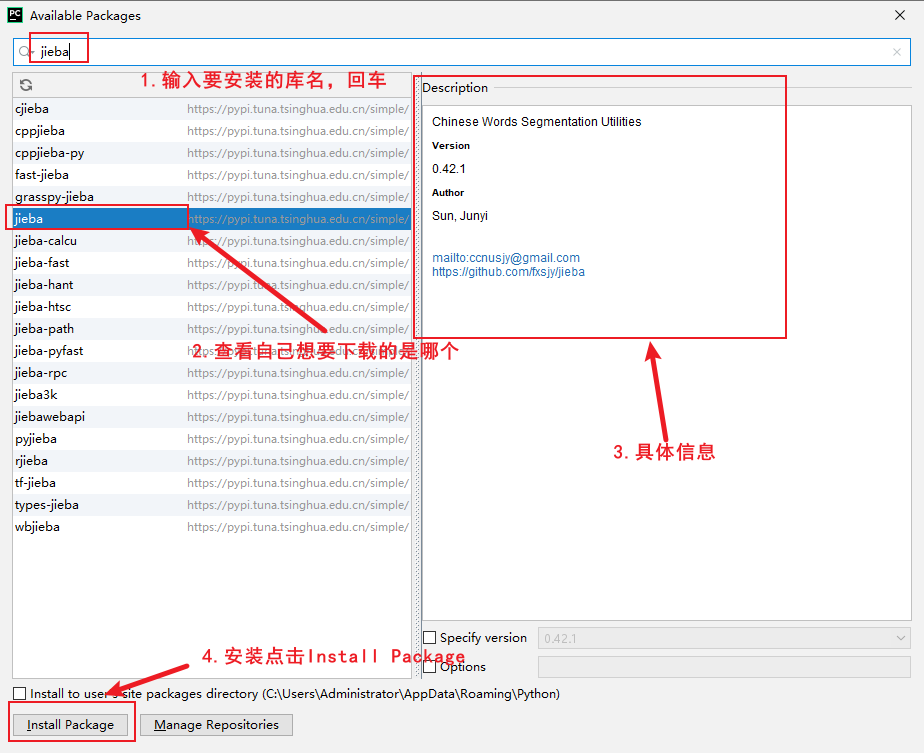

To deploy YOLOv8 with TensorRT, first export the model to ONNX format with the following command:

```shell
yolo export model=yolov8n.pt format=onnx
```

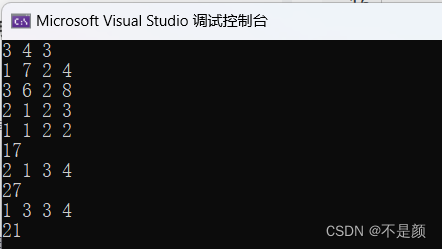

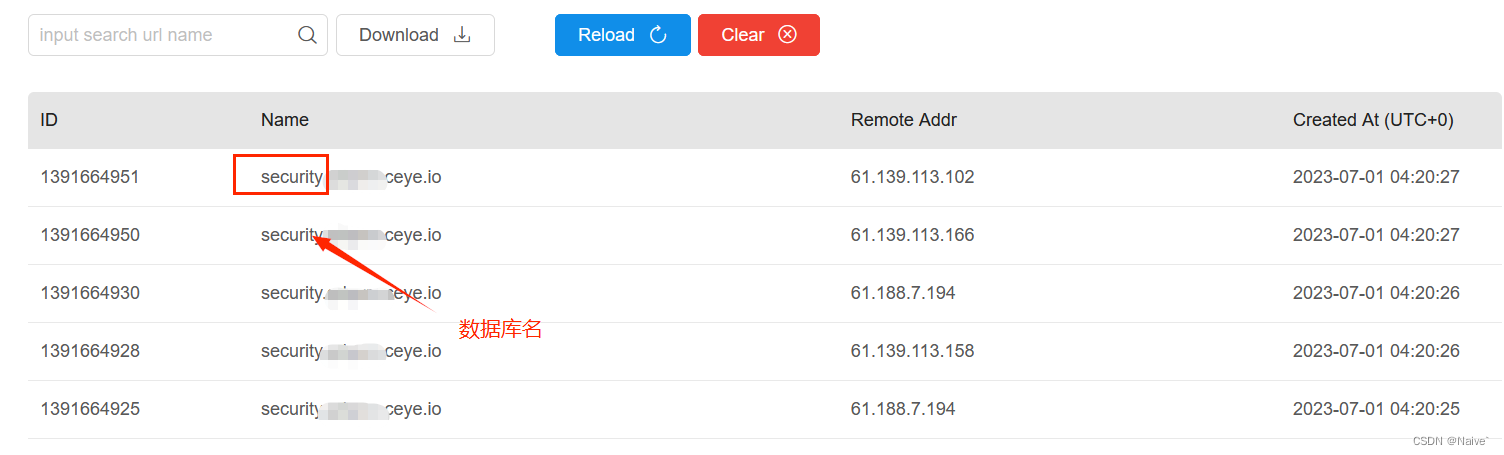

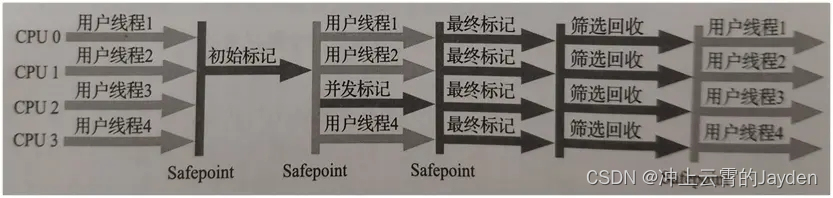

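The three YOLOv8 detection heads (strides 8, 16 and 32 on a 640x640 input) produce 80x80 + 40x40 + 20x20 = 8400 output cells in total. Each cell holds the 4 box values x, y, w, h plus 80 class confidences, 84 values in all, so the ONNX model exported with the command above has an output shape of 1x84x8400.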
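The arithmetic can be checked with a few lines of Python. This sketch assumes a square input whose side is divisible by 32 and the three strides 8, 16 and 32 of the standard detection heads; the helper name `yolov8_output_shape` is hypothetical. The second call uses 5 classes, matching the five labels in the deployment code later in this post.

```python
def yolov8_output_shape(imgsz=640, strides=(8, 16, 32), num_classes=80, batch=1):
    """Output shape (batch, 4 + num_classes, num_cells) of the default YOLOv8 ONNX export."""
    # One cell per grid position on each feature map: 640/8=80, 640/16=40, 640/32=20
    num_cells = sum((imgsz // s) ** 2 for s in strides)
    # 4 box values (x, y, w, h) followed by one confidence per class
    num_channels = 4 + num_classes
    return (batch, num_channels, num_cells)

print(yolov8_output_shape())               # (1, 84, 8400)
print(yolov8_output_shape(num_classes=5))  # (1, 9, 8400)
```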

Model output shape


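This channel order has a drawback: post-processing reads the 84 values of one cell at a time, but in a 1x84x8400 tensor consecutive values of the same cell are 8400 elements apart, so memory access is not contiguous.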
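To make the contiguity problem concrete, the sketch below computes where the box values of one cell live in a flat buffer under each layout. It assumes batch size 1 and row-major storage (last dimension varies fastest), which is how a dense output buffer is normally read back on the host; the function names are hypothetical.

```python
# Flat-offset arithmetic for the two output layouts, assuming a batch of 1 and
# row-major (C-contiguous) storage.
NUM_CELLS = 8400     # 80*80 + 40*40 + 20*20
NUM_CHANNELS = 84    # 4 box values + 80 class scores

def offset_1x84x8400(cell, channel):
    """Offset of (channel, cell) in the original 1 x 84 x 8400 output."""
    return channel * NUM_CELLS + cell

def offset_1x8400x84(cell, channel):
    """Offset of (cell, channel) in the permuted 1 x 8400 x 84 output."""
    return cell * NUM_CHANNELS + channel

# Offsets touched when reading the 4 box values of cell 100:
print([offset_1x84x8400(100, c) for c in range(4)])  # [100, 8500, 16900, 25300]
print([offset_1x8400x84(100, c) for c in range(4)])  # [8400, 8401, 8402, 8403]
```

In the 1x8400x84 layout all values of one cell are adjacent, so a post-processing loop can walk the buffer one row at a time.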


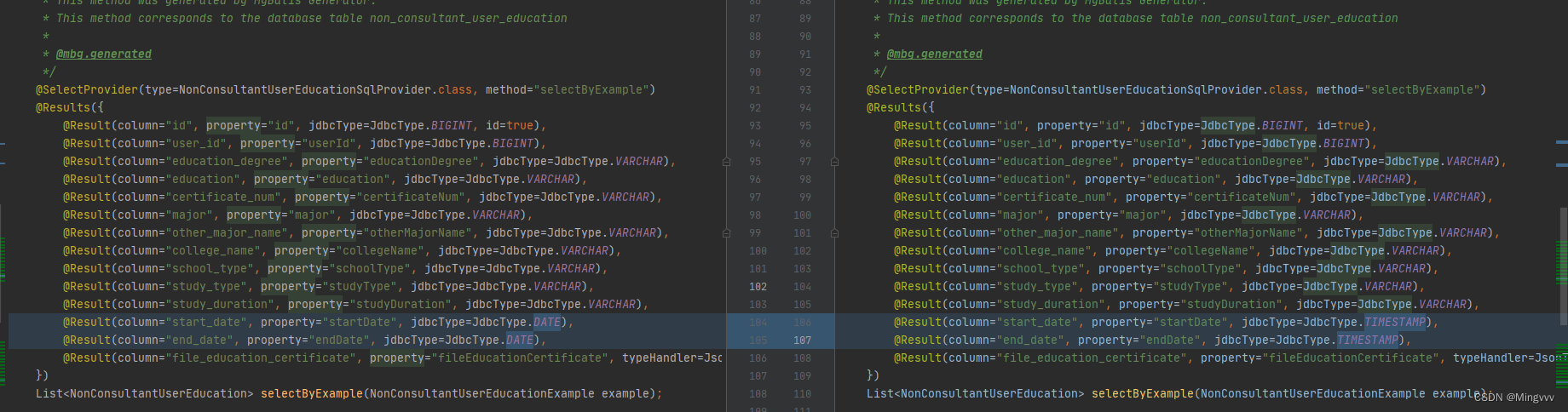

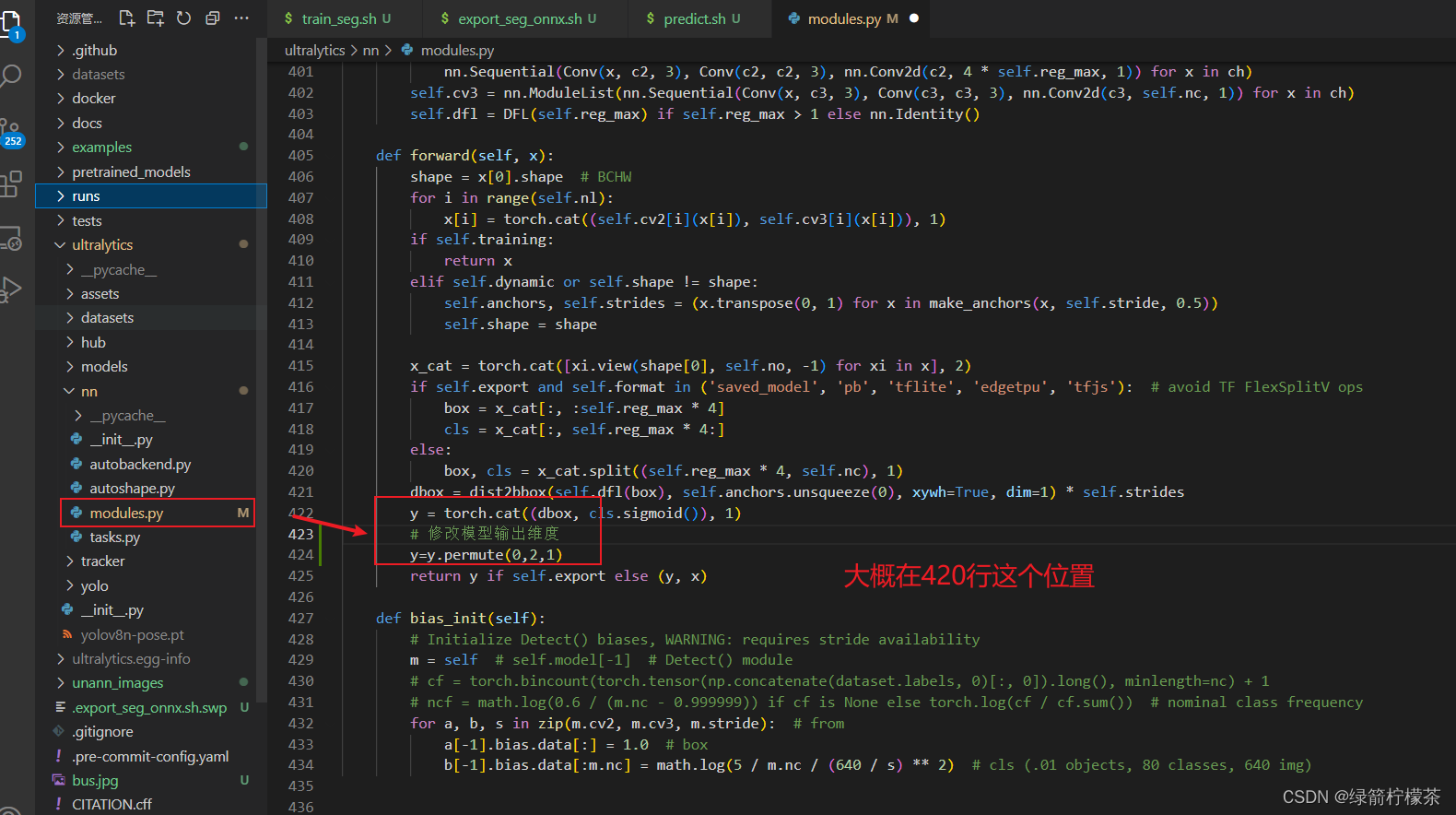

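To fix this, modify the `Detect` head in ultralytics/nn/modules.py as shown below, swapping the last two dimensions of the output tensor y. (In newer ultralytics releases the `Detect` class lives in ultralytics/nn/modules/head.py instead.)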

```python
# Detect.forward in ultralytics/nn/modules.py
def forward(self, x):
    """Concatenate and return predicted bounding boxes and class probabilities."""
    shape = x[0].shape  # BCHW
    for i in range(self.nl):
        x[i] = torch.cat((self.cv2[i](x[i]), self.cv3[i](x[i])), 1)
    if self.training:
        return x
    elif self.dynamic or self.shape != shape:
        self.anchors, self.strides = (x.transpose(0, 1) for x in make_anchors(x, self.stride, 0.5))
        self.shape = shape

    x_cat = torch.cat([xi.view(shape[0], self.no, -1) for xi in x], 2)
    if self.export and self.format in ('saved_model', 'pb', 'tflite', 'edgetpu', 'tfjs'):  # avoid TF FlexSplitV ops
        box = x_cat[:, :self.reg_max * 4]
        cls = x_cat[:, self.reg_max * 4:]
    else:
        box, cls = x_cat.split((self.reg_max * 4, self.nc), 1)
    dbox = dist2bbox(self.dfl(box), self.anchors.unsqueeze(0), xywh=True, dim=1) * self.strides
    y = torch.cat((dbox, cls.sigmoid()), 1)

    # Change the output layout from (batch, 4 + nc, anchors) to (batch, anchors, 4 + nc).
    # Only do this when exporting: ultralytics' own predict/val post-processing
    # still expects the original (batch, 4 + nc, anchors) layout.
    if self.export:
        y = y.permute(0, 2, 1)
    return y if self.export else (y, x)
```
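For reference, here is a pure-Python sketch of what `self.dfl(box)` followed by `dist2bbox(..., xywh=True)` computes for a single anchor in the code above. Each of the four box sides (left, top, right, bottom) is predicted as a distribution over `reg_max` bins; DFL takes the expected bin index, and dist2bbox turns the four distances from the anchor point into a center/width/height box, which the head then scales by the stride. It assumes `reg_max = 16` (the ultralytics default) and anchor points at cell centers (the 0.5 offset passed to `make_anchors`); the function names are hypothetical.

```python
import math

def dfl_expectation(logits):
    """Softmax over the bins, then the expected bin index (what the DFL 1x1 conv computes)."""
    m = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return sum(i * e for i, e in enumerate(exps)) / total

def decode_anchor(box_logits, anchor_xy, stride, reg_max=16):
    """Decode 4*reg_max logits (left, top, right, bottom bins) into (cx, cy, w, h) in pixels."""
    left, top, right, bottom = (
        dfl_expectation(box_logits[k * reg_max:(k + 1) * reg_max]) for k in range(4)
    )
    ax, ay = anchor_xy                    # anchor point in grid units, e.g. (j + 0.5, i + 0.5)
    x1, y1 = ax - left, ay - top
    x2, y2 = ax + right, ay + bottom
    cx, cy, w, h = (x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1
    return cx * stride, cy * stride, w * stride, h * stride

# Example: every side peaks sharply at bin 2 for the anchor of cell (0, 0) on the stride-8 map.
peaked = ([0.0] * 2 + [100.0] + [0.0] * 13) * 4
print(decode_anchor(peaked, (0.5, 0.5), 8))  # approximately (4.0, 4.0, 32.0, 32.0)
```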

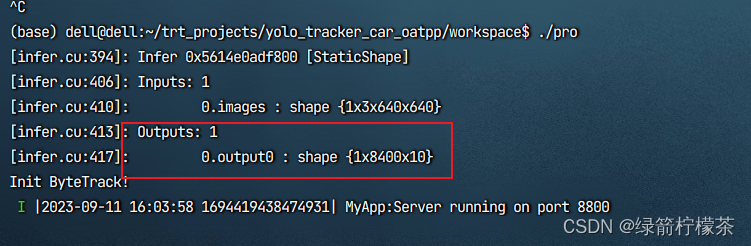

- After this change, rerun the export command above; the model output shape becomes 1x8400x84.
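With the 1x8400x84 layout, post-processing can read each candidate as one contiguous row. The following is a minimal pure-Python sketch of that step; it is not taken from the C++ library used below, which does its own decoding and NMS. It keeps the best class per row, drops rows under a confidence threshold, converts (cx, cy, w, h) to corner coordinates and runs greedy per-class NMS. The thresholds and function names are illustrative assumptions, and the boxes are still in network-input coordinates (e.g. 640x640), so any letterbox resize would still need to be undone.

```python
def iou(a, b):
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def postprocess(output, num_cells=8400, num_channels=84, conf_thres=0.25, iou_thres=0.45):
    """Decode a flat 1 x num_cells x num_channels output into (score, class_id, box) tuples."""
    candidates = []
    for i in range(num_cells):
        row = output[i * num_channels:(i + 1) * num_channels]  # one contiguous row per cell
        scores = row[4:]                                         # class confidences (already sigmoid-ed)
        cls = max(range(len(scores)), key=scores.__getitem__)
        score = scores[cls]
        if score < conf_thres:
            continue
        cx, cy, w, h = row[:4]
        candidates.append((score, cls, (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)))

    # Greedy NMS, applied separately per class: keep the highest-scoring box and
    # drop later boxes of the same class that overlap it by more than iou_thres.
    candidates.sort(key=lambda c: c[0], reverse=True)
    kept = []
    for score, cls, box in candidates:
        if all(k_cls != cls or iou(k_box, box) <= iou_thres for _, k_cls, k_box in kept):
            kept.append((score, cls, box))
    return kept
```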

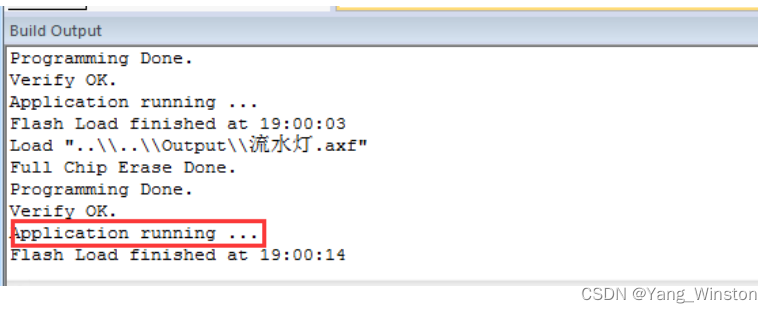

2. Converting the ONNX model to an engine and deploying it

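- Set up the TensorRT and NVIDIA driver/CUDA environment.
- Convert the ONNX model to a TensorRT engine with trtexec:

```shell
trtexec --onnx=coco/best.onnx --saveEngine=coco/best.onnx.engine --workspace=32
```

`--workspace` is given in MiB; on TensorRT 8.4 and later this flag is deprecated in favor of `--memPoolSize=workspace:32`.

- Part of the model deployment code (C++):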

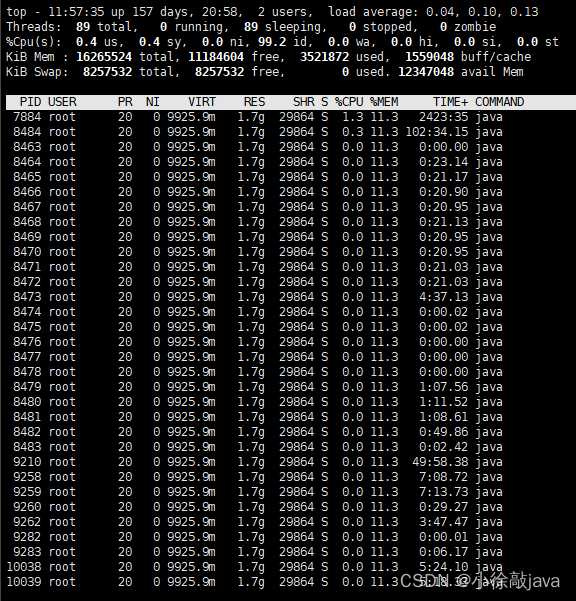

```cpp
#ifndef MyController_hpp
#define MyController_hpp

#include <ctime>
#include <chrono>
#include <sstream>
#include <iomanip>
#include <iostream>
#include <numeric>
#include <vector>

#include "oatpp/web/server/api/ApiController.hpp"
#include "oatpp/core/macro/codegen.hpp"
#include "oatpp/core/macro/component.hpp"
#include "opencv2/opencv.hpp"

#include "../dto/DTOs.hpp"  // DTO definitions used to pass data between components
#include "../yoloApp/simple_yolo.hpp"
#include "../byteTrackApp/logging.h"
#include "../byteTrackApp/BYTETracker.h"
// high performance
#include "../yoloHighPer/cpm.hpp"
#include "../yoloHighPer/infer.hpp"
#include "../yoloHighPer/yolo.hpp"

#include <dirent.h>
#include <sys/types.h>
#include <sys/stat.h>
#include <unistd.h>
#include <stdarg.h>

using namespace std;
using namespace cv;

#include OATPP_CODEGEN_BEGIN(ApiController) //<-- Begin Codegen

static bool exists(const string& path){
#ifdef _WIN32
    return ::PathFileExistsA(path.c_str());
#else
    return access(path.c_str(), R_OK) == 0;
#endif
}

// Class names of the custom model, indexed by class id
static std::vector<std::string> cocolabels = {
    "car", "excavator", "loader", "dumpTruck", "person"
};

class InferInstance{
public:
    InferInstance(std::string onnx_model_path, std::string trt_model_path){
        onnx_model = onnx_model_path;
        trt_model = trt_model_path;
        startup();
    }

    bool startup(){
        // Optionally build the engine from the ONNX model if it does not exist yet:
        // if(!exists(trt_model)){
        //     SimpleYolo::compile(
        //         SimpleYolo::Mode::FP32,   // FP32, FP16, INT8
        //         SimpleYolo::Type::V8,
        //         1,                        // max batch size
        //         onnx_model,               // source
        //         trt_model,                // save to
        //         1 << 30,
        //         "inference"
        //     );
        // }
        infer_ = yolo::load(trt_model, yolo::Type::V8);
        return infer_ != nullptr;
    }

    int inference(const Mat& image_input, yolo::BoxArray& boxarray){
        if(infer_ == nullptr){
            // INFOE("Not Initialize.");
            return 1;
        }
        if(image_input.empty()){
            // INFOE("Image is empty.");
            return 1;
        }
        boxarray = infer_->forward(cvimg(image_input));
        return 0;
    }

private:
    yolo::Image cvimg(const cv::Mat &image) { return yolo::Image(image.data, image.cols, image.rows); }

private:
    std::string onnx_model = "best.onnx";
    std::string trt_model = "best.onnx.engine";
    shared_ptr<yolo::Infer> infer_;
};

///
std::string onnx_model = "coco/best.onnx";
std::string engine_label = "coco/best.onnx.engine";
std::unique_ptr<InferInstance> infer_instance1(new InferInstance(onnx_model, engine_label));

int frame_rate = 10;
int track_buffer = 30;
std::unique_ptr<BYTETracker> tracker_instance1(new BYTETracker(frame_rate, track_buffer));
///

/**
 * Using an API controller is recommended over creating a new request handler for every
 * new endpoint with a bare HttpRequestHandler. An API controller makes adding endpoints
 * easier by generating the boilerplate code for you, and it helps organize endpoints by
 * grouping them into different API controllers.
 */

/**
 * Sample Api Controller.
 */
class MyController : public oatpp::web::server::api::ApiController {
protected:
    /**
     * Constructor with object mapper.
     * @param objectMapper - default object mapper used to serialize/deserialize DTOs.
     */
    MyController(const std::shared_ptr<ObjectMapper>& objectMapper)
        : oatpp::web::server::api::ApiController(objectMapper)
    {}
public:
    static std::shared_ptr<MyController> createShared(OATPP_COMPONENT(std::shared_ptr<ObjectMapper>, objectMapper)){
        return std::shared_ptr<MyController>(new MyController(objectMapper));
    }

    // TODO Insert Your endpoints here !!!

    // --data--
    // Multi-object tracking: the request body is the base64-encoded image
    ENDPOINT_ASYNC("POST", "/car1", tracker1){
        ENDPOINT_ASYNC_INIT(tracker1)

        Action act() override {
            return request->readBodyToStringAsync().callbackTo(&tracker1::returnResponse);
        }

        Action returnResponse(const oatpp::String& body_){
            auto response = tracker_inference(*infer_instance1, *tracker_instance1, body_, controller);
            return _return(response);
        }
    };

// public:
    // Multi-object tracking
    static std::shared_ptr<OutgoingResponse> tracker_inference(InferInstance& infer_, BYTETracker& track_infer, std::string body_, auto* controller){
        auto base64Image = base64_decode(body_);
        if(base64Image.empty()){
            return controller->createResponse(Status::CODE_400, "The image is empty!");
        }
        std::vector<char> base64_img(base64Image.begin(), base64Image.end());
        cv::Mat image = cv::imdecode(base64_img, cv::IMREAD_COLOR);
        if(image.empty()){
            // imdecode returns an empty Mat when the bytes are not a valid image
            return controller->createResponse(Status::CODE_400, "The image could not be decoded!");
        }

        // Record the start time
        auto start_time = std::chrono::high_resolution_clock::now();
        // Inference
        yolo::BoxArray boxarray;
        if(infer_.inference(image, boxarray) != 0){
            return controller->createResponse(Status::CODE_500, "Inference failed!");
        }
        // Record the end time
        auto end_time = std::chrono::high_resolution_clock::now();
        // Compute the elapsed time
        auto duration = std::chrono::duration_cast<std::chrono::milliseconds>(end_time - start_time);
        // Print the elapsed time (in milliseconds)
        // std::cout << "Inference time: " << duration.count() << " ms" << std::endl;

        // Convert the detections into ByteTrack input objects
        vector<Objects> objects;
        objects.resize(boxarray.size());
        int index = 0;
        for(auto& box : boxarray) {
            objects[index].rect.x = box.left;
            objects[index].rect.y = box.top;
            objects[index].rect.width = box.right - box.left;
            objects[index].rect.height = box.bottom - box.top;
            objects[index].prob = box.confidence;
            objects[index].label = box.class_label;
            index++;
            std::cout << "left: " << box.left << ", top: " << box.top
                      << ", right: " << box.right << ", bottom: " << box.bottom
                      << ", confidence: " << box.confidence
                      << ", class_label: " << box.class_label << std::endl;
        }

        auto yoloDto = TrackYoloDto::createShared();
        auto boxList = TrackBoxList::createShared();
        std::vector<STrack> output_stracks = track_infer.update(objects);
        for (size_t i = 0; i < output_stracks.size(); i++) {
            auto trackBoxDto = TrackerBboxes::createShared();
            vector<float> tlwh = output_stracks[i].tlwh;  // box position: top-left x, top-left y, width, height
            trackBoxDto->class_id = cocolabels[output_stracks[i].class_id];
            trackBoxDto->track_id = output_stracks[i].track_id;
            trackBoxDto->x = tlwh[0];
            trackBoxDto->y = tlwh[1];
            trackBoxDto->width = tlwh[2];
            trackBoxDto->height = tlwh[3];
            boxList->push_back(trackBoxDto);
        }
        output_stracks.clear();
        yoloDto->data = boxList;
        yoloDto->status = "successful";
        yoloDto->time = currentDateTime();
        return controller->createDtoResponse(Status::CODE_200, yoloDto);
    }

    static std::string currentDateTime(){
        auto now = std::chrono::system_clock::now();
        auto now_c = std::chrono::system_clock::to_time_t(now);
        auto now_ms = std::chrono::duration_cast<std::chrono::milliseconds>(now.time_since_epoch()) % 1000;
        std::stringstream ss;
        ss << std::put_time(std::localtime(&now_c), "%Y-%m-%d %H:%M:%S")
           << '.' << std::setfill('0') << std::setw(3) << now_ms.count();
        return ss.str();
    }

    static unsigned char from_b64(unsigned char ch){
        /* Inverse lookup map */
        static const unsigned char tab[128] = {
            255, 255, 255, 255, 255, 255, 255, 255, /*  0 */
            255, 255, 255, 255, 255, 255, 255, 255, /*  8 */
            255, 255, 255, 255, 255, 255, 255, 255, /* 16 */
            255, 255, 255, 255, 255, 255, 255, 255, /* 24 */
            255, 255, 255, 255, 255, 255, 255, 255, /* 32 */
            255, 255, 255,  62, 255, 255, 255,  63, /* 40 */
             52,  53,  54,  55,  56,  57,  58,  59, /* 48 */
             60,  61, 255, 255, 255, 200, 255, 255, /* 56   '=' is 200, on index 61 */
            255,   0,   1,   2,   3,   4,   5,   6, /* 64 */
              7,   8,   9,  10,  11,  12,  13,  14, /* 72 */
             15,  16,  17,  18,  19,  20,  21,  22, /* 80 */
             23,  24,  25, 255, 255, 255, 255, 255, /* 88 */
            255,  26,  27,  28,  29,  30,  31,  32, /* 96 */
             33,  34,  35,  36,  37,  38,  39,  40, /* 104 */
             41,  42,  43,  44,  45,  46,  47,  48, /* 112 */
             49,  50,  51, 255, 255, 255, 255, 255, /* 120 */
        };
        return tab[ch & 127];
    }

    static std::string base64_decode(const std::string& base64){
        if(base64.empty()) return "";

        size_t len = base64.size();
        auto s = (const unsigned char*)base64.data();
        unsigned char a, b, c, d;

        // Reserve the maximum possible output size; it is shrunk to the real size below
        string out_data;
        out_data.resize(len / 4 * 3);
        char *dst = &out_data[0];
        char *orig_dst = dst;
        while (len >= 4 &&
               (a = from_b64(s[0])) != 255 &&
               (b = from_b64(s[1])) != 255 &&
               (c = from_b64(s[2])) != 255 &&
               (d = from_b64(s[3])) != 255) {
            s += 4;
            len -= 4;
            if (a == 200 || b == 200) break; /* '=' can't be there */
            *dst++ = a << 2 | b >> 4;
            if (c == 200) break;
            *dst++ = b << 4 | c >> 2;
            if (d == 200) break;
            *dst++ = c << 6 | d;
        }
        // Keep only the bytes actually decoded (padding and invalid characters stop the loop)
        out_data.resize(dst - orig_dst);
        return out_data;
    }
};

#include OATPP_CODEGEN_END(ApiController) //<-- End Codegen

#endif /* MyController_hpp */
```

- Start the model service

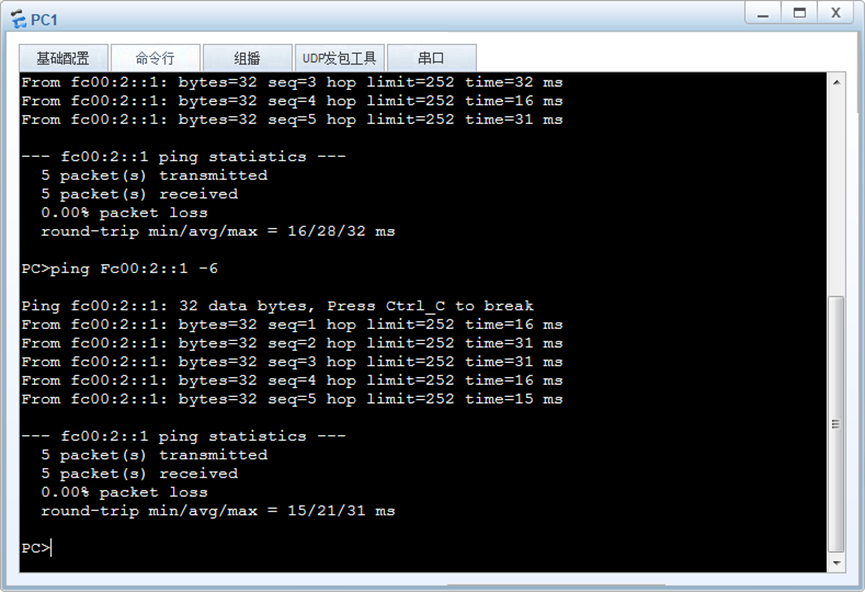

- Call the API endpoint to run inference
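The `/car1` endpoint reads the whole request body as a string, base64-decodes it and passes the bytes to `cv::imdecode`, so a client only needs to POST the base64-encoded image file. Below is a minimal client sketch using only the Python standard library. The host and port (`localhost:8000`) and the helper names are assumptions, since the server setup is not shown, and the JSON field names of the reply depend on the DTOs in DTOs.hpp.

```python
import base64
import json
import urllib.request

def build_track_request(image_bytes, url="http://localhost:8000/car1"):
    """Build a POST request whose body is the base64-encoded image (URL is an assumption)."""
    body = base64.b64encode(image_bytes)  # standard alphabet with '=' padding, no line breaks
    return urllib.request.Request(url, data=body, method="POST",
                                  headers={"Content-Type": "text/plain"})

def send_image(path, url="http://localhost:8000/car1", timeout=10):
    """Read an image file, send it to the tracking endpoint and parse the JSON reply."""
    with open(path, "rb") as f:
        req = build_track_request(f.read(), url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))

# Usage (requires the service to be running; field names assumed from the DTO members):
# result = send_image("test.jpg")
# print(result["status"], result["data"])
```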

YOLOv8 model deployment test