代码地址:GitHub - apple/ml-mobileone: This repository contains the official implementation of the research paper, "An Improved One millisecond Mobile Backbone".

论文地址:https://arxiv.org/abs/2206.04040

MobileOne出自Apple,它的作者声称在iPhone 12上MobileOne的推理时间只有1毫秒,这也是MobileOne这个名字中One的含义。从MobileOne的快速落地可以看到重参数化在移动端的潜力:简单、高效、即插即用。

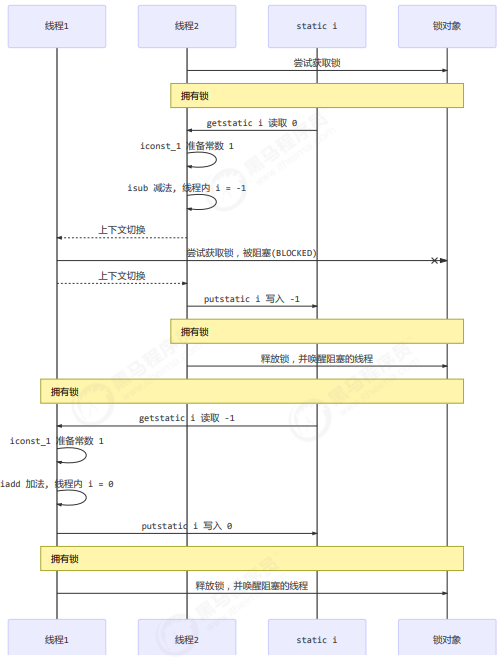

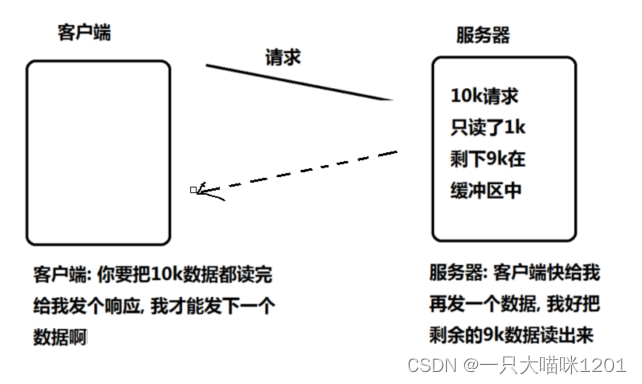

图3中的左侧部分构成了MobileOne的一个完整building block。它由上下两部分构成,其中上面部分基于深度卷积(Depthwise Convolution),下面部分基于点卷积(Pointwise Convolution)。深度卷积与点卷积的术语来自于MobileNet。深度卷积本质上是一个分组卷积,它的分组数g与输入通道相同。而点卷积是一个1×1卷积。

图3中的深度卷积模块由三条分支构成。最左侧分支是1×1卷积;中间分支是过参数化的3×3卷积,即k个3×3卷积;右侧部分是一个包含BN层的shortcut连接。这里的1×1卷积和3×3卷积都是深度卷积(也即分组卷积,分组数g等于输入通道数)。

图3中的点卷积模块由两条分支构成。左侧分支是过参数化的1×1卷积,由k个1×1卷积构成。右侧分支是一个包含BN层的跳跃连接。在训练阶段,MobileOne就是由这样的building block堆叠而成。当训练完成后,可以使用重参数化方法将图3中左侧所示的building block重参数化图3中右侧的结构。

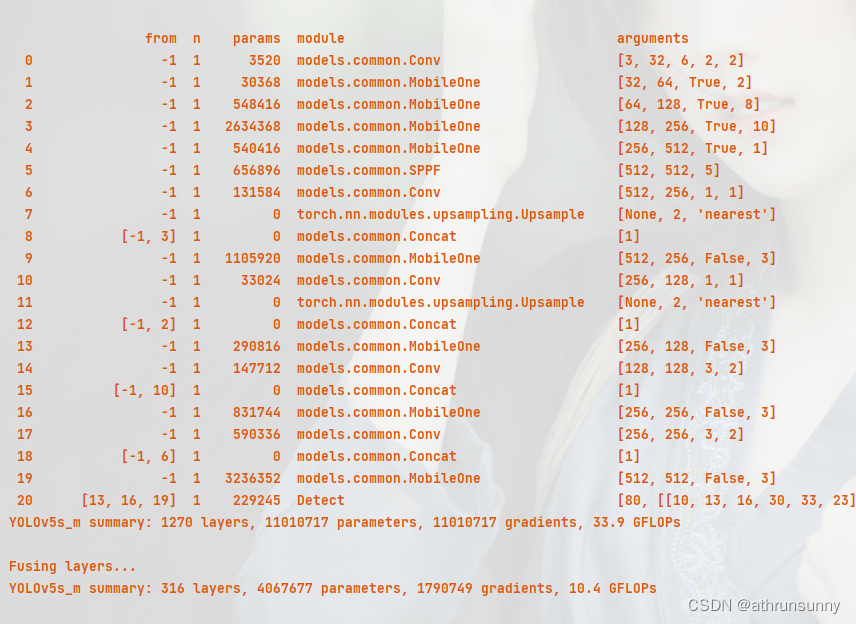

1、yolov5

创建yolov5s-mobileone.yaml

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

# Parameters

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 v6.0 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

[ -1, 1, MobileOne, [ 128, True, 2] ], # 1-P2/4

[ -1, 1, MobileOne, [ 256, True, 8] ], # 2-P3/8

[ -1, 1, MobileOne, [ 512, True, 10] ], # 3-P4/16

[ -1, 1, MobileOne, [ 1024, True, 1] ], # 4-P5/32

[-1, 1, SPPF, [1024, 5]], # 5

]

# YOLOv5 v6.0 head

head:

[[-1, 1, Conv, [512, 1, 1]], # 6

[-1, 1, nn.Upsample, [None, 2, 'nearest']], # 7

[[-1, 3], 1, Concat, [1]], # cat backbone P4

[ -1, 1, MobileOne, [ 512, False, 3] ], # 9

[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 2], 1, Concat, [1]], # cat backbone P3

[ -1, 1, MobileOne, [ 256, False, 3] ], # 13 (P3/8-small)

[-1, 1, Conv, [256, 3, 2]],

[[-1, 10], 1, Concat, [1]], # cat head P4

[ -1, 1, MobileOne, [ 512, False, 3] ], # 16 (P4/16-medium)

[-1, 1, Conv, [512, 3, 2]],

[[-1, 6], 1, Concat, [1]], # cat head P5

[ -1, 1, MobileOne, [ 1024, False, 3] ], # 19 (P5/32-large)

[[13, 16, 19], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]

common.py中增加

from typing import Optional, List, Tuple

import torch.nn.functional as F

class SEBlock(nn.Module):

""" Squeeze and Excite module.

Pytorch implementation of `Squeeze-and-Excitation Networks` -

https://arxiv.org/pdf/1709.01507.pdf

"""

def __init__(self,

in_channels: int,

rd_ratio: float = 0.0625) -> None:

""" Construct a Squeeze and Excite Module.

:param in_channels: Number of input channels.

:param rd_ratio: Input channel reduction ratio.

"""

super(SEBlock, self).__init__()

self.reduce = nn.Conv2d(in_channels=in_channels,

out_channels=int(in_channels * rd_ratio),

kernel_size=1,

stride=1,

bias=True)

self.expand = nn.Conv2d(in_channels=int(in_channels * rd_ratio),

out_channels=in_channels,

kernel_size=1,

stride=1,

bias=True)

def forward(self, inputs: torch.Tensor) -> torch.Tensor:

""" Apply forward pass. """

b, c, h, w = inputs.size()

x = F.avg_pool2d(inputs, kernel_size=[h, w])

x = self.reduce(x)

x = F.relu(x)

x = self.expand(x)

x = torch.sigmoid(x)

x = x.view(-1, c, 1, 1)

return inputs * x

class MobileOneBlock(nn.Module):

""" MobileOne building block.

This block has a multi-branched architecture at train-time

and plain-CNN style architecture at inference time

For more details, please refer to our paper:

`An Improved One millisecond Mobile Backbone` -

https://arxiv.org/pdf/2206.04040.pdf

"""

def __init__(self,

in_channels: int,

out_channels: int,

kernel_size: int,

stride: int = 1,

padding: int = 0,

dilation: int = 1,

groups: int = 1,

inference_mode: bool = False,

use_se: bool = False,

num_conv_branches: int = 1) -> None:

""" Construct a MobileOneBlock module.

:param in_channels: Number of channels in the input.

:param out_channels: Number of channels produced by the block.

:param kernel_size: Size of the convolution kernel.

:param stride: Stride size.

:param padding: Zero-padding size.

:param dilation: Kernel dilation factor.

:param groups: Group number.

:param inference_mode: If True, instantiates model in inference mode.

:param use_se: Whether to use SE-ReLU activations.

:param num_conv_branches: Number of linear conv branches.

"""

super(MobileOneBlock, self).__init__()

self.inference_mode = inference_mode

self.groups = groups

self.stride = stride

self.kernel_size = kernel_size

self.in_channels = in_channels

self.out_channels = out_channels

self.num_conv_branches = num_conv_branches

# Check if SE-ReLU is requested

if use_se:

self.se = SEBlock(out_channels)

else:

self.se = nn.Identity()

self.activation = nn.ReLU()

if inference_mode:

self.reparam_conv = nn.Conv2d(in_channels=in_channels,

out_channels=out_channels,

kernel_size=kernel_size,

stride=stride,

padding=padding,

dilation=dilation,

groups=groups,

bias=True)

else:

# Re-parameterizable skip connection

self.rbr_skip = nn.BatchNorm2d(num_features=in_channels) \

if out_channels == in_channels and stride == 1 else None

# Re-parameterizable conv branches

rbr_conv = list()

for _ in range(self.num_conv_branches):

rbr_conv.append(self._conv_bn(kernel_size=kernel_size,

padding=padding))

self.rbr_conv = nn.ModuleList(rbr_conv)

# Re-parameterizable scale branch

self.rbr_scale = None

if kernel_size > 1:

self.rbr_scale = self._conv_bn(kernel_size=1,

padding=0)

def forward(self, x: torch.Tensor) -> torch.Tensor:

""" Apply forward pass. """

# Inference mode forward pass.

if self.inference_mode:

return self.activation(self.se(self.reparam_conv(x)))

# Multi-branched train-time forward pass.

# Skip branch output

identity_out = 0

if self.rbr_skip is not None:

identity_out = self.rbr_skip(x)

# Scale branch output

scale_out = 0

if self.rbr_scale is not None:

scale_out = self.rbr_scale(x)

# Other branches

out = scale_out + identity_out

for ix in range(self.num_conv_branches):

out += self.rbr_conv[ix](x)

return self.activation(self.se(out))

def reparameterize(self):

""" Following works like `RepVGG: Making VGG-style ConvNets Great Again` -

https://arxiv.org/pdf/2101.03697.pdf. We re-parameterize multi-branched

architecture used at training time to obtain a plain CNN-like structure

for inference.

"""

if self.inference_mode:

return

kernel, bias = self._get_kernel_bias()

self.reparam_conv = nn.Conv2d(in_channels=self.rbr_conv[0].conv.in_channels,

out_channels=self.rbr_conv[0].conv.out_channels,

kernel_size=self.rbr_conv[0].conv.kernel_size,

stride=self.rbr_conv[0].conv.stride,

padding=self.rbr_conv[0].conv.padding,

dilation=self.rbr_conv[0].conv.dilation,

groups=self.rbr_conv[0].conv.groups,

bias=True)

self.reparam_conv.weight.data = kernel

self.reparam_conv.bias.data = bias

# Delete un-used branches

for para in self.parameters():

para.detach_()

self.__delattr__('rbr_conv')

self.__delattr__('rbr_scale')

if hasattr(self, 'rbr_skip'):

self.__delattr__('rbr_skip')

self.inference_mode = True

def _get_kernel_bias(self) -> Tuple[torch.Tensor, torch.Tensor]:

""" Method to obtain re-parameterized kernel and bias.

Reference: https://github.com/DingXiaoH/RepVGG/blob/main/repvgg.py#L83

:return: Tuple of (kernel, bias) after fusing branches.

"""

# get weights and bias of scale branch

kernel_scale = 0

bias_scale = 0

if self.rbr_scale is not None:

kernel_scale, bias_scale = self._fuse_bn_tensor(self.rbr_scale)

# Pad scale branch kernel to match conv branch kernel size.

pad = self.kernel_size // 2

kernel_scale = torch.nn.functional.pad(kernel_scale,

[pad, pad, pad, pad])

# get weights and bias of skip branch

kernel_identity = 0

bias_identity = 0

if self.rbr_skip is not None:

kernel_identity, bias_identity = self._fuse_bn_tensor(self.rbr_skip)

# get weights and bias of conv branches

kernel_conv = 0

bias_conv = 0

for ix in range(self.num_conv_branches):

_kernel, _bias = self._fuse_bn_tensor(self.rbr_conv[ix])

kernel_conv += _kernel

bias_conv += _bias

kernel_final = kernel_conv + kernel_scale + kernel_identity

bias_final = bias_conv + bias_scale + bias_identity

return kernel_final, bias_final

def _fuse_bn_tensor(self, branch) -> Tuple[torch.Tensor, torch.Tensor]:

""" Method to fuse batchnorm layer with preceeding conv layer.

Reference: https://github.com/DingXiaoH/RepVGG/blob/main/repvgg.py#L95

:param branch:

:return: Tuple of (kernel, bias) after fusing batchnorm.

"""

if isinstance(branch, nn.Sequential):

kernel = branch.conv.weight

running_mean = branch.bn.running_mean

running_var = branch.bn.running_var

gamma = branch.bn.weight

beta = branch.bn.bias

eps = branch.bn.eps

else:

assert isinstance(branch, nn.BatchNorm2d)

if not hasattr(self, 'id_tensor'):

input_dim = self.in_channels // self.groups

kernel_value = torch.zeros((self.in_channels,

input_dim,

self.kernel_size,

self.kernel_size),

dtype=branch.weight.dtype,

device=branch.weight.device)

for i in range(self.in_channels):

kernel_value[i, i % input_dim,

self.kernel_size // 2,

self.kernel_size // 2] = 1

self.id_tensor = kernel_value

kernel = self.id_tensor

running_mean = branch.running_mean

running_var = branch.running_var

gamma = branch.weight

beta = branch.bias

eps = branch.eps

std = (running_var + eps).sqrt()

t = (gamma / std).reshape(-1, 1, 1, 1)

return kernel * t, beta - running_mean * gamma / std

def _conv_bn(self,

kernel_size: int,

padding: int) -> nn.Sequential:

""" Helper method to construct conv-batchnorm layers.

:param kernel_size: Size of the convolution kernel.

:param padding: Zero-padding size.

:return: Conv-BN module.

"""

mod_list = nn.Sequential()

mod_list.add_module('conv', nn.Conv2d(in_channels=self.in_channels,

out_channels=self.out_channels,

kernel_size=kernel_size,

stride=self.stride,

padding=padding,

groups=self.groups,

bias=False))

mod_list.add_module('bn', nn.BatchNorm2d(num_features=self.out_channels))

return mod_list在yolo.py中增加

if m in (Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,

BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, nn.ConvTranspose2d, DWConvTranspose2d, C3x, C2f_Add,

MobileOne):

c1, c2 = ch[f], args[0]

if c2 != no: # if not output

c2 = make_divisible(c2 * gw, 8)

args = [c1, c2, *args[1:]]

if m in [BottleneckCSP, C3, C3TR, C3Ghost, C3x, C2f_Add]:

args.insert(2, n) # number of repeats

n = 1同时在yolo.py的basemodel中添加

def fuse(self): # fuse model Conv2d() + BatchNorm2d() layers

LOGGER.info('Fusing layers... ')

for m in self.model.modules():

if isinstance(m, (Conv, DWConv)) and hasattr(m, 'bn'):

m.conv = fuse_conv_and_bn(m.conv, m.bn) # update conv

delattr(m, 'bn') # remove batchnorm

m.forward = m.forward_fuse # update forward

if hasattr(m, 'reparameterize'):

m.reparameterize()

self.info()

return self运行yolo.py

![java八股文面试[数据库]——B树和B+树的区别](https://img-blog.csdnimg.cn/e3340931f2e1432eacc7536f91775af0.png)