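References:

This project: https://github.com/PromtEngineer/localGPT

Model: https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML

Cloud knowledge-base project: Building a cloud-hosted, customized PDF knowledge-base AI chatbot with GPT-4 and LangChain (Entropy-Go's blog, CSDN)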

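1. Summary

OpenAI's ChatGPT needs a network connection and an API key, because the model is called in the cloud. That raises data-privacy concerns. This article instead builds a local, customized knowledge-base AI chatbot on Llama 2 and LangChain. A pretrained LLM is deployed locally and answers questions about your own files without a network connection. The deployment is 100% private and local, and no data leaves your environment at any point. Once the models have been downloaded, you can ingest files and ask questions entirely offline!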

2. Preparation

2.1 Meta's Llama 2 7b Chat GGML

These files are GGML format model files for Meta's Llama 2 7b Chat.

GGML files are for CPU + GPU inference using llama.cpp and libraries and UIs which support this format
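The dependency log further down pins llama-cpp-python 0.1.66, which reads GGML files. Newer llama.cpp releases moved to the GGUF format, so it can be worth checking which container a downloaded model file actually uses. The following is a minimal sketch that inspects the first four bytes. It assumes the magic numbers below match llama.cpp's loaders (stored as a little-endian uint32); `check_ggml.py` is a hypothetical script name.

```python
import struct
import sys

# Magic numbers as defined in llama.cpp (assumption: values taken from its
# model loaders). They are stored as a little-endian uint32 at offset 0.
MAGICS = {
    0x67676D6C: "ggml (original unversioned GGML)",
    0x67676D66: "ggmf (versioned GGML)",
    0x67676A74: "ggjt (GGML with aligned tensors, later GGML-era files)",
    0x46554747: "gguf (successor format, not GGML)",
}


def format_from_header(head: bytes) -> str:
    """Map the first 4 bytes of a model file to a format name."""
    if len(head) < 4:
        return "unknown (file too short)"
    (magic,) = struct.unpack("<I", head[:4])
    return MAGICS.get(magic, f"unknown (magic 0x{magic:08x})")


def detect_model_format(path: str) -> str:
    """Read only the header of the model file and classify it."""
    with open(path, "rb") as f:
        return format_from_header(f.read(4))


if __name__ == "__main__":
    # Hypothetical usage: python check_ggml.py <model-file>.bin
    for p in sys.argv[1:]:
        try:
            print(p, "->", detect_model_format(p))
        except OSError as e:
            print(p, "-> cannot read:", e)
```

Only the header is read, so the check is instant even for multi-gigabyte model files.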

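2.2 Install Conda

Reference: Quickly installing the package manager Conda on CentOS (Entropy-Go's blog, CSDN)

2.3 Upgrade gcc

Reference: An introduction to gcc on CentOS and how to upgrade it quickly (Entropy-Go's blog, CSDN)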

3. Clone or download the localGPT project

git clone https://github.com/PromtEngineer/localGPT.git

cd localGPT

4. Install dependencies

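4.1 Create and activate a Conda environment

conda create -n localGPT

conda activate localGPT

Note: without a package list, conda create makes an empty environment that has no Python of its own. After activation, python and pip can then still resolve to the system interpreter. Adding a Python version to the create command (for example python=3.10) gives the environment its own python and pip.

4.2 Install dependencies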

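If the Conda environment variables are set correctly, just run pip install:

pip install -r requirements.txt

Otherwise the system's own python is used. In that case, run pip through the absolute path of the Conda installation. All later steps must then also use Conda's python.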
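To confirm which interpreter is actually running, you can use a small check. The following sketch assumes that `conda activate` has set `CONDA_PREFIX` to the active environment's root, which is standard Conda behavior. `is_inside` and `check_interpreter` are hypothetical helper names.

```python
import os
import sys


def is_inside(path: str, prefix: str) -> bool:
    """True if `path` is `prefix` itself or lies below it (pure path check)."""
    p = os.path.abspath(path)
    pre = os.path.abspath(prefix)
    return os.path.commonpath([p, pre]) == pre


def check_interpreter() -> bool:
    # CONDA_PREFIX is set by `conda activate` to the active environment's root.
    prefix = os.environ.get("CONDA_PREFIX")
    print("python:      ", sys.executable)
    print("CONDA_PREFIX:", prefix)
    ok = bool(prefix) and is_inside(sys.executable, prefix)
    if ok:
        print("OK: running the active Conda environment's python")
    else:
        print("WARNING: not the active Conda python; call it by absolute path instead")
    return ok


if __name__ == "__main__":
    check_interpreter()
```

Run it with the same `python` you plan to use for `ingest.py`. If it prints the warning, fall back to the absolute path found with `whereis conda`.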

whereis conda

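conda: /root/miniconda3/bin/conda /root/miniconda3/condabin/conda

/root/miniconda3/bin/pip install -r requirements.txt

Note that /root/miniconda3/bin belongs to the base environment. Accordingly, the install log below shows /root/miniconda3/lib/python3.11/site-packages. The interpreter of the localGPT environment created in 4.1 would live under /root/miniconda3/envs/localGPT/bin/.

If the installation fails with the error below, upgrade gcc as described in 2.3 (gcc 11 is recommended):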

ERROR: Could not build wheels for llama-cpp-python, hnswlib, lxml, which is required to install pyproject.toml-based project
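This article attributes the error above to an outdated gcc. Before retrying the install, you can confirm which gcc version is first on PATH. The following is a minimal sketch. It checks only the `gcc` found on PATH (a build that sets `CC` may use a different compiler), and `MIN_GCC_MAJOR` simply encodes the version recommended here.

```python
import shutil
import subprocess
from typing import Optional

MIN_GCC_MAJOR = 11  # version recommended in this article


def parse_major(version: str) -> int:
    """Extract the major version from `gcc -dumpversion` output."""
    # Older gcc (e.g. CentOS 7's 4.8.5) prints "4.8.5"; newer builds may print just "11".
    return int(version.strip().split(".")[0])


def gcc_major() -> Optional[int]:
    """Major version of the first gcc on PATH, or None if gcc is missing."""
    gcc = shutil.which("gcc")
    if gcc is None:
        return None
    out = subprocess.run([gcc, "-dumpversion"], capture_output=True, text=True, check=True)
    return parse_major(out.stdout)


if __name__ == "__main__":
    major = gcc_major()
    if major is None:
        print("gcc not found on PATH")
    elif major < MIN_GCC_MAJOR:
        print(f"gcc {major} found; upgrade to {MIN_GCC_MAJOR}+ before rebuilding the wheels")
    else:
        print(f"gcc {major} found; OK")
```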

Output of the Python dependency installation:

/root/miniconda3/bin/pip install -r requirements.txt

Ignoring protobuf: markers 'sys_platform == "darwin" and platform_machine != "arm64"' don't match your environment

Ignoring protobuf: markers 'sys_platform == "darwin" and platform_machine == "arm64"' don't match your environment

Ignoring bitsandbytes-windows: markers 'sys_platform == "win32"' don't match your environment

Requirement already satisfied: langchain==0.0.191 in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 2)) (0.0.191)

Requirement already satisfied: chromadb==0.3.22 in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 3)) (0.3.22)

Requirement already satisfied: llama-cpp-python==0.1.66 in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 4)) (0.1.66)

Requirement already satisfied: pdfminer.six==20221105 in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 5)) (20221105)

Requirement already satisfied: InstructorEmbedding in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 6)) (1.0.1)

Requirement already satisfied: sentence-transformers in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 7)) (2.2.2)

Requirement already satisfied: faiss-cpu in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 8)) (1.7.4)

Requirement already satisfied: huggingface_hub in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 9)) (0.16.4)

Requirement already satisfied: transformers in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 10)) (4.31.0)

Requirement already satisfied: protobuf==3.20.0 in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 11)) (3.20.0)

Requirement already satisfied: auto-gptq==0.2.2 in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 14)) (0.2.2)

Requirement already satisfied: docx2txt in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 15)) (0.8)

Requirement already satisfied: unstructured in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 16)) (0.10.2)

Requirement already satisfied: urllib3==1.26.6 in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 19)) (1.26.6)

Requirement already satisfied: accelerate in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 20)) (0.21.0)

Requirement already satisfied: bitsandbytes in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 21)) (0.41.1)

Requirement already satisfied: click in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 23)) (8.1.7)

Requirement already satisfied: flask in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 24)) (2.3.2)

Requirement already satisfied: requests in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 25)) (2.31.0)

Requirement already satisfied: streamlit in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 28)) (1.25.0)

Requirement already satisfied: Streamlit-extras in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 29)) (0.3.0)

Requirement already satisfied: openpyxl in /root/miniconda3/lib/python3.11/site-packages (from -r requirements.txt (line 32)) (3.1.2)

Requirement already satisfied: PyYAML>=5.4.1 in /root/miniconda3/lib/python3.11/site-packages (from langchain==0.0.191->-r requirements.txt (line 2)) (6.0.1)

Requirement already satisfied: SQLAlchemy<3,>=1.4 in /root/miniconda3/lib/python3.11/site-packages (from langchain==0.0.191->-r requirements.txt (line 2)) (2.0.20)

Requirement already satisfied: aiohttp<4.0.0,>=3.8.3 in /root/miniconda3/lib/python3.11/site-packages (from langchain==0.0.191->-r requirements.txt (line 2)) (3.8.5)

Requirement already satisfied: dataclasses-json<0.6.0,>=0.5.7 in /root/miniconda3/lib/python3.11/site-packages (from langchain==0.0.191->-r requirements.txt (line 2)) (0.5.14)

Requirement already satisfied: numexpr<3.0.0,>=2.8.4 in /root/miniconda3/lib/python3.11/site-packages (from langchain==0.0.191->-r requirements.txt (line 2)) (2.8.5)

Requirement already satisfied: numpy<2,>=1 in /root/miniconda3/lib/python3.11/site-packages (from langchain==0.0.191->-r requirements.txt (line 2)) (1.25.2)

Requirement already satisfied: openapi-schema-pydantic<2.0,>=1.2 in /root/miniconda3/lib/python3.11/site-packages (from langchain==0.0.191->-r requirements.txt (line 2)) (1.2.4)

Requirement already satisfied: pydantic<2,>=1 in /root/miniconda3/lib/python3.11/site-packages (from langchain==0.0.191->-r requirements.txt (line 2)) (1.10.12)

Requirement already satisfied: tenacity<9.0.0,>=8.1.0 in /root/miniconda3/lib/python3.11/site-packages (from langchain==0.0.191->-r requirements.txt (line 2)) (8.2.3)

Requirement already satisfied: pandas>=1.3 in /root/miniconda3/lib/python3.11/site-packages (from chromadb==0.3.22->-r requirements.txt (line 3)) (2.0.3)

Requirement already satisfied: hnswlib>=0.7 in /root/miniconda3/lib/python3.11/site-packages (from chromadb==0.3.22->-r requirements.txt (line 3)) (0.7.0)

Requirement already satisfied: clickhouse-connect>=0.5.7 in /root/miniconda3/lib/python3.11/site-packages (from chromadb==0.3.22->-r requirements.txt (line 3)) (0.6.8)

Requirement already satisfied: duckdb>=0.7.1 in /root/miniconda3/lib/python3.11/site-packages (from chromadb==0.3.22->-r requirements.txt (line 3)) (0.8.1)

Requirement already satisfied: fastapi>=0.85.1 in /root/miniconda3/lib/python3.11/site-packages (from chromadb==0.3.22->-r requirements.txt (line 3)) (0.101.1)

Requirement already satisfied: uvicorn[standard]>=0.18.3 in /root/miniconda3/lib/python3.11/site-packages (from chromadb==0.3.22->-r requirements.txt (line 3)) (0.23.2)

Requirement already satisfied: posthog>=2.4.0 in /root/miniconda3/lib/python3.11/site-packages (from chromadb==0.3.22->-r requirements.txt (line 3)) (3.0.2)

Requirement already satisfied: typing-extensions>=4.5.0 in /root/miniconda3/lib/python3.11/site-packages (from chromadb==0.3.22->-r requirements.txt (line 3)) (4.7.1)

Requirement already satisfied: diskcache>=5.6.1 in /root/miniconda3/lib/python3.11/site-packages (from llama-cpp-python==0.1.66->-r requirements.txt (line 4)) (5.6.1)

Requirement already satisfied: charset-normalizer>=2.0.0 in /root/miniconda3/lib/python3.11/site-packages (from pdfminer.six==20221105->-r requirements.txt (line 5)) (2.0.4)

Requirement already satisfied: cryptography>=36.0.0 in /root/miniconda3/lib/python3.11/site-packages (from pdfminer.six==20221105->-r requirements.txt (line 5)) (39.0.1)

Requirement already satisfied: datasets in /root/miniconda3/lib/python3.11/site-packages (from auto-gptq==0.2.2->-r requirements.txt (line 14)) (2.14.4)

Requirement already satisfied: rouge in /root/miniconda3/lib/python3.11/site-packages (from auto-gptq==0.2.2->-r requirements.txt (line 14)) (1.0.1)

Requirement already satisfied: torch>=1.13.0 in /root/miniconda3/lib/python3.11/site-packages (from auto-gptq==0.2.2->-r requirements.txt (line 14)) (2.0.1)

Requirement already satisfied: safetensors in /root/miniconda3/lib/python3.11/site-packages (from auto-gptq==0.2.2->-r requirements.txt (line 14)) (0.3.2)

Requirement already satisfied: tqdm in /root/miniconda3/lib/python3.11/site-packages (from sentence-transformers->-r requirements.txt (line 7)) (4.65.0)

Requirement already satisfied: torchvision in /root/miniconda3/lib/python3.11/site-packages (from sentence-transformers->-r requirements.txt (line 7)) (0.15.2)

Requirement already satisfied: scikit-learn in /root/miniconda3/lib/python3.11/site-packages (from sentence-transformers->-r requirements.txt (line 7)) (1.3.0)

Requirement already satisfied: scipy in /root/miniconda3/lib/python3.11/site-packages (from sentence-transformers->-r requirements.txt (line 7)) (1.11.2)

Requirement already satisfied: nltk in /root/miniconda3/lib/python3.11/site-packages (from sentence-transformers->-r requirements.txt (line 7)) (3.8.1)

Requirement already satisfied: sentencepiece in /root/miniconda3/lib/python3.11/site-packages (from sentence-transformers->-r requirements.txt (line 7)) (0.1.99)

Requirement already satisfied: filelock in /root/miniconda3/lib/python3.11/site-packages (from huggingface_hub->-r requirements.txt (line 9)) (3.12.2)

Requirement already satisfied: fsspec in /root/miniconda3/lib/python3.11/site-packages (from huggingface_hub->-r requirements.txt (line 9)) (2023.6.0)

Requirement already satisfied: packaging>=20.9 in /root/miniconda3/lib/python3.11/site-packages (from huggingface_hub->-r requirements.txt (line 9)) (23.0)

Requirement already satisfied: regex!=2019.12.17 in /root/miniconda3/lib/python3.11/site-packages (from transformers->-r requirements.txt (line 10)) (2023.8.8)

Requirement already satisfied: tokenizers!=0.11.3,<0.14,>=0.11.1 in /root/miniconda3/lib/python3.11/site-packages (from transformers->-r requirements.txt (line 10)) (0.13.3)

Requirement already satisfied: chardet in /root/miniconda3/lib/python3.11/site-packages (from unstructured->-r requirements.txt (line 16)) (5.2.0)

Requirement already satisfied: filetype in /root/miniconda3/lib/python3.11/site-packages (from unstructured->-r requirements.txt (line 16)) (1.2.0)

Requirement already satisfied: python-magic in /root/miniconda3/lib/python3.11/site-packages (from unstructured->-r requirements.txt (line 16)) (0.4.27)

Requirement already satisfied: lxml in /root/miniconda3/lib/python3.11/site-packages (from unstructured->-r requirements.txt (line 16)) (4.9.3)

Requirement already satisfied: tabulate in /root/miniconda3/lib/python3.11/site-packages (from unstructured->-r requirements.txt (line 16)) (0.9.0)

Requirement already satisfied: beautifulsoup4 in /root/miniconda3/lib/python3.11/site-packages (from unstructured->-r requirements.txt (line 16)) (4.12.2)

Requirement already satisfied: emoji in /root/miniconda3/lib/python3.11/site-packages (from unstructured->-r requirements.txt (line 16)) (2.8.0)

Requirement already satisfied: psutil in /root/miniconda3/lib/python3.11/site-packages (from accelerate->-r requirements.txt (line 20)) (5.9.5)

Requirement already satisfied: Werkzeug>=2.3.3 in /root/miniconda3/lib/python3.11/site-packages (from flask->-r requirements.txt (line 24)) (2.3.7)

Requirement already satisfied: Jinja2>=3.1.2 in /root/miniconda3/lib/python3.11/site-packages (from flask->-r requirements.txt (line 24)) (3.1.2)

Requirement already satisfied: itsdangerous>=2.1.2 in /root/miniconda3/lib/python3.11/site-packages (from flask->-r requirements.txt (line 24)) (2.1.2)

Requirement already satisfied: blinker>=1.6.2 in /root/miniconda3/lib/python3.11/site-packages (from flask->-r requirements.txt (line 24)) (1.6.2)

Requirement already satisfied: idna<4,>=2.5 in /root/miniconda3/lib/python3.11/site-packages (from requests->-r requirements.txt (line 25)) (3.4)

Requirement already satisfied: certifi>=2017.4.17 in /root/miniconda3/lib/python3.11/site-packages (from requests->-r requirements.txt (line 25)) (2023.7.22)

Requirement already satisfied: altair<6,>=4.0 in /root/miniconda3/lib/python3.11/site-packages (from streamlit->-r requirements.txt (line 28)) (5.0.1)

Requirement already satisfied: cachetools<6,>=4.0 in /root/miniconda3/lib/python3.11/site-packages (from streamlit->-r requirements.txt (line 28)) (5.3.1)

Requirement already satisfied: importlib-metadata<7,>=1.4 in /root/miniconda3/lib/python3.11/site-packages (from streamlit->-r requirements.txt (line 28)) (6.8.0)

Requirement already satisfied: pillow<10,>=7.1.0 in /root/miniconda3/lib/python3.11/site-packages (from streamlit->-r requirements.txt (line 28)) (9.5.0)

Requirement already satisfied: pyarrow>=6.0 in /root/miniconda3/lib/python3.11/site-packages (from streamlit->-r requirements.txt (line 28)) (12.0.1)

Requirement already satisfied: pympler<2,>=0.9 in /root/miniconda3/lib/python3.11/site-packages (from streamlit->-r requirements.txt (line 28)) (1.0.1)

Requirement already satisfied: python-dateutil<3,>=2.7.3 in /root/miniconda3/lib/python3.11/site-packages (from streamlit->-r requirements.txt (line 28)) (2.8.2)

Requirement already satisfied: rich<14,>=10.14.0 in /root/miniconda3/lib/python3.11/site-packages (from streamlit->-r requirements.txt (line 28)) (13.5.2)

Requirement already satisfied: toml<2,>=0.10.1 in /root/miniconda3/lib/python3.11/site-packages (from streamlit->-r requirements.txt (line 28)) (0.10.2)

Requirement already satisfied: tzlocal<5,>=1.1 in /root/miniconda3/lib/python3.11/site-packages (from streamlit->-r requirements.txt (line 28)) (4.3.1)

Requirement already satisfied: validators<1,>=0.2 in /root/miniconda3/lib/python3.11/site-packages (from streamlit->-r requirements.txt (line 28)) (0.21.2)

Requirement already satisfied: gitpython!=3.1.19,<4,>=3.0.7 in /root/miniconda3/lib/python3.11/site-packages (from streamlit->-r requirements.txt (line 28)) (3.1.32)

Requirement already satisfied: pydeck<1,>=0.8 in /root/miniconda3/lib/python3.11/site-packages (from streamlit->-r requirements.txt (line 28)) (0.8.0)

Requirement already satisfied: tornado<7,>=6.0.3 in /root/miniconda3/lib/python3.11/site-packages (from streamlit->-r requirements.txt (line 28)) (6.3.3)

Requirement already satisfied: watchdog>=2.1.5 in /root/miniconda3/lib/python3.11/site-packages (from streamlit->-r requirements.txt (line 28)) (3.0.0)

Requirement already satisfied: htbuilder==0.6.1 in /root/miniconda3/lib/python3.11/site-packages (from Streamlit-extras->-r requirements.txt (line 29)) (0.6.1)

Requirement already satisfied: markdownlit>=0.0.5 in /root/miniconda3/lib/python3.11/site-packages (from Streamlit-extras->-r requirements.txt (line 29)) (0.0.7)

Requirement already satisfied: st-annotated-text>=3.0.0 in /root/miniconda3/lib/python3.11/site-packages (from Streamlit-extras->-r requirements.txt (line 29)) (4.0.0)

Requirement already satisfied: streamlit-camera-input-live>=0.2.0 in /root/miniconda3/lib/python3.11/site-packages (from Streamlit-extras->-r requirements.txt (line 29)) (0.2.0)

Requirement already satisfied: streamlit-card>=0.0.4 in /root/miniconda3/lib/python3.11/site-packages (from Streamlit-extras->-r requirements.txt (line 29)) (0.0.61)

Requirement already satisfied: streamlit-embedcode>=0.1.2 in /root/miniconda3/lib/python3.11/site-packages (from Streamlit-extras->-r requirements.txt (line 29)) (0.1.2)

Requirement already satisfied: streamlit-faker>=0.0.2 in /root/miniconda3/lib/python3.11/site-packages (from Streamlit-extras->-r requirements.txt (line 29)) (0.0.2)

Requirement already satisfied: streamlit-image-coordinates<0.2.0,>=0.1.1 in /root/miniconda3/lib/python3.11/site-packages (from Streamlit-extras->-r requirements.txt (line 29)) (0.1.6)

Requirement already satisfied: streamlit-keyup>=0.1.9 in /root/miniconda3/lib/python3.11/site-packages (from Streamlit-extras->-r requirements.txt (line 29)) (0.2.0)

Requirement already satisfied: streamlit-toggle-switch>=1.0.2 in /root/miniconda3/lib/python3.11/site-packages (from Streamlit-extras->-r requirements.txt (line 29)) (1.0.2)

Requirement already satisfied: streamlit-vertical-slider>=1.0.2 in /root/miniconda3/lib/python3.11/site-packages (from Streamlit-extras->-r requirements.txt (line 29)) (1.0.2)

Requirement already satisfied: more-itertools in /root/miniconda3/lib/python3.11/site-packages (from htbuilder==0.6.1->Streamlit-extras->-r requirements.txt (line 29)) (10.1.0)

Requirement already satisfied: et-xmlfile in /root/miniconda3/lib/python3.11/site-packages (from openpyxl->-r requirements.txt (line 32)) (1.1.0)

Requirement already satisfied: attrs>=17.3.0 in /root/miniconda3/lib/python3.11/site-packages (from aiohttp<4.0.0,>=3.8.3->langchain==0.0.191->-r requirements.txt (line 2)) (23.1.0)

Requirement already satisfied: multidict<7.0,>=4.5 in /root/miniconda3/lib/python3.11/site-packages (from aiohttp<4.0.0,>=3.8.3->langchain==0.0.191->-r requirements.txt (line 2)) (6.0.4)

Requirement already satisfied: async-timeout<5.0,>=4.0.0a3 in /root/miniconda3/lib/python3.11/site-packages (from aiohttp<4.0.0,>=3.8.3->langchain==0.0.191->-r requirements.txt (line 2)) (4.0.3)

Requirement already satisfied: yarl<2.0,>=1.0 in /root/miniconda3/lib/python3.11/site-packages (from aiohttp<4.0.0,>=3.8.3->langchain==0.0.191->-r requirements.txt (line 2)) (1.9.2)

Requirement already satisfied: frozenlist>=1.1.1 in /root/miniconda3/lib/python3.11/site-packages (from aiohttp<4.0.0,>=3.8.3->langchain==0.0.191->-r requirements.txt (line 2)) (1.4.0)

Requirement already satisfied: aiosignal>=1.1.2 in /root/miniconda3/lib/python3.11/site-packages (from aiohttp<4.0.0,>=3.8.3->langchain==0.0.191->-r requirements.txt (line 2)) (1.3.1)

Requirement already satisfied: jsonschema>=3.0 in /root/miniconda3/lib/python3.11/site-packages (from altair<6,>=4.0->streamlit->-r requirements.txt (line 28)) (4.19.0)

Requirement already satisfied: toolz in /root/miniconda3/lib/python3.11/site-packages (from altair<6,>=4.0->streamlit->-r requirements.txt (line 28)) (0.12.0)

Requirement already satisfied: pytz in /root/miniconda3/lib/python3.11/site-packages (from clickhouse-connect>=0.5.7->chromadb==0.3.22->-r requirements.txt (line 3)) (2023.3)

Requirement already satisfied: zstandard in /root/miniconda3/lib/python3.11/site-packages (from clickhouse-connect>=0.5.7->chromadb==0.3.22->-r requirements.txt (line 3)) (0.19.0)

Requirement already satisfied: lz4 in /root/miniconda3/lib/python3.11/site-packages (from clickhouse-connect>=0.5.7->chromadb==0.3.22->-r requirements.txt (line 3)) (4.3.2)

Requirement already satisfied: cffi>=1.12 in /root/miniconda3/lib/python3.11/site-packages (from cryptography>=36.0.0->pdfminer.six==20221105->-r requirements.txt (line 5)) (1.15.1)

Requirement already satisfied: marshmallow<4.0.0,>=3.18.0 in /root/miniconda3/lib/python3.11/site-packages (from dataclasses-json<0.6.0,>=0.5.7->langchain==0.0.191->-r requirements.txt (line 2)) (3.20.1)

Requirement already satisfied: typing-inspect<1,>=0.4.0 in /root/miniconda3/lib/python3.11/site-packages (from dataclasses-json<0.6.0,>=0.5.7->langchain==0.0.191->-r requirements.txt (line 2)) (0.9.0)

Requirement already satisfied: starlette<0.28.0,>=0.27.0 in /root/miniconda3/lib/python3.11/site-packages (from fastapi>=0.85.1->chromadb==0.3.22->-r requirements.txt (line 3)) (0.27.0)

Requirement already satisfied: gitdb<5,>=4.0.1 in /root/miniconda3/lib/python3.11/site-packages (from gitpython!=3.1.19,<4,>=3.0.7->streamlit->-r requirements.txt (line 28)) (4.0.10)

Requirement already satisfied: zipp>=0.5 in /root/miniconda3/lib/python3.11/site-packages (from importlib-metadata<7,>=1.4->streamlit->-r requirements.txt (line 28)) (3.16.2)

Requirement already satisfied: MarkupSafe>=2.0 in /root/miniconda3/lib/python3.11/site-packages (from Jinja2>=3.1.2->flask->-r requirements.txt (line 24)) (2.1.3)

Requirement already satisfied: markdown in /root/miniconda3/lib/python3.11/site-packages (from markdownlit>=0.0.5->Streamlit-extras->-r requirements.txt (line 29)) (3.4.4)

Requirement already satisfied: favicon in /root/miniconda3/lib/python3.11/site-packages (from markdownlit>=0.0.5->Streamlit-extras->-r requirements.txt (line 29)) (0.7.0)

Requirement already satisfied: pymdown-extensions in /root/miniconda3/lib/python3.11/site-packages (from markdownlit>=0.0.5->Streamlit-extras->-r requirements.txt (line 29)) (10.1)

Requirement already satisfied: tzdata>=2022.1 in /root/miniconda3/lib/python3.11/site-packages (from pandas>=1.3->chromadb==0.3.22->-r requirements.txt (line 3)) (2023.3)

Requirement already satisfied: six>=1.5 in /root/miniconda3/lib/python3.11/site-packages (from posthog>=2.4.0->chromadb==0.3.22->-r requirements.txt (line 3)) (1.16.0)

Requirement already satisfied: monotonic>=1.5 in /root/miniconda3/lib/python3.11/site-packages (from posthog>=2.4.0->chromadb==0.3.22->-r requirements.txt (line 3)) (1.6)

Requirement already satisfied: backoff>=1.10.0 in /root/miniconda3/lib/python3.11/site-packages (from posthog>=2.4.0->chromadb==0.3.22->-r requirements.txt (line 3)) (2.2.1)

Requirement already satisfied: markdown-it-py>=2.2.0 in /root/miniconda3/lib/python3.11/site-packages (from rich<14,>=10.14.0->streamlit->-r requirements.txt (line 28)) (3.0.0)

Requirement already satisfied: pygments<3.0.0,>=2.13.0 in /root/miniconda3/lib/python3.11/site-packages (from rich<14,>=10.14.0->streamlit->-r requirements.txt (line 28)) (2.16.1)

Requirement already satisfied: greenlet!=0.4.17 in /root/miniconda3/lib/python3.11/site-packages (from SQLAlchemy<3,>=1.4->langchain==0.0.191->-r requirements.txt (line 2)) (2.0.2)

Requirement already satisfied: faker in /root/miniconda3/lib/python3.11/site-packages (from streamlit-faker>=0.0.2->Streamlit-extras->-r requirements.txt (line 29)) (19.3.0)

Requirement already satisfied: matplotlib in /root/miniconda3/lib/python3.11/site-packages (from streamlit-faker>=0.0.2->Streamlit-extras->-r requirements.txt (line 29)) (3.7.2)

Requirement already satisfied: sympy in /root/miniconda3/lib/python3.11/site-packages (from torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (1.12)

Requirement already satisfied: networkx in /root/miniconda3/lib/python3.11/site-packages (from torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (3.1)

Requirement already satisfied: nvidia-cuda-nvrtc-cu11==11.7.99 in /root/miniconda3/lib/python3.11/site-packages (from torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (11.7.99)

Requirement already satisfied: nvidia-cuda-runtime-cu11==11.7.99 in /root/miniconda3/lib/python3.11/site-packages (from torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (11.7.99)

Requirement already satisfied: nvidia-cuda-cupti-cu11==11.7.101 in /root/miniconda3/lib/python3.11/site-packages (from torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (11.7.101)

Requirement already satisfied: nvidia-cudnn-cu11==8.5.0.96 in /root/miniconda3/lib/python3.11/site-packages (from torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (8.5.0.96)

Requirement already satisfied: nvidia-cublas-cu11==11.10.3.66 in /root/miniconda3/lib/python3.11/site-packages (from torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (11.10.3.66)

Requirement already satisfied: nvidia-cufft-cu11==10.9.0.58 in /root/miniconda3/lib/python3.11/site-packages (from torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (10.9.0.58)

Requirement already satisfied: nvidia-curand-cu11==10.2.10.91 in /root/miniconda3/lib/python3.11/site-packages (from torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (10.2.10.91)

Requirement already satisfied: nvidia-cusolver-cu11==11.4.0.1 in /root/miniconda3/lib/python3.11/site-packages (from torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (11.4.0.1)

Requirement already satisfied: nvidia-cusparse-cu11==11.7.4.91 in /root/miniconda3/lib/python3.11/site-packages (from torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (11.7.4.91)

Requirement already satisfied: nvidia-nccl-cu11==2.14.3 in /root/miniconda3/lib/python3.11/site-packages (from torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (2.14.3)

Requirement already satisfied: nvidia-nvtx-cu11==11.7.91 in /root/miniconda3/lib/python3.11/site-packages (from torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (11.7.91)

Requirement already satisfied: triton==2.0.0 in /root/miniconda3/lib/python3.11/site-packages (from torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (2.0.0)

Requirement already satisfied: setuptools in /root/miniconda3/lib/python3.11/site-packages (from nvidia-cublas-cu11==11.10.3.66->torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (67.8.0)

Requirement already satisfied: wheel in /root/miniconda3/lib/python3.11/site-packages (from nvidia-cublas-cu11==11.10.3.66->torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (0.38.4)

Requirement already satisfied: cmake in /root/miniconda3/lib/python3.11/site-packages (from triton==2.0.0->torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (3.27.2)

Requirement already satisfied: lit in /root/miniconda3/lib/python3.11/site-packages (from triton==2.0.0->torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (16.0.6)

Requirement already satisfied: pytz-deprecation-shim in /root/miniconda3/lib/python3.11/site-packages (from tzlocal<5,>=1.1->streamlit->-r requirements.txt (line 28)) (0.1.0.post0)

Requirement already satisfied: h11>=0.8 in /root/miniconda3/lib/python3.11/site-packages (from uvicorn[standard]>=0.18.3->chromadb==0.3.22->-r requirements.txt (line 3)) (0.14.0)

Requirement already satisfied: httptools>=0.5.0 in /root/miniconda3/lib/python3.11/site-packages (from uvicorn[standard]>=0.18.3->chromadb==0.3.22->-r requirements.txt (line 3)) (0.6.0)

Requirement already satisfied: python-dotenv>=0.13 in /root/miniconda3/lib/python3.11/site-packages (from uvicorn[standard]>=0.18.3->chromadb==0.3.22->-r requirements.txt (line 3)) (1.0.0)

Requirement already satisfied: uvloop!=0.15.0,!=0.15.1,>=0.14.0 in /root/miniconda3/lib/python3.11/site-packages (from uvicorn[standard]>=0.18.3->chromadb==0.3.22->-r requirements.txt (line 3)) (0.17.0)

Requirement already satisfied: watchfiles>=0.13 in /root/miniconda3/lib/python3.11/site-packages (from uvicorn[standard]>=0.18.3->chromadb==0.3.22->-r requirements.txt (line 3)) (0.19.0)

Requirement already satisfied: websockets>=10.4 in /root/miniconda3/lib/python3.11/site-packages (from uvicorn[standard]>=0.18.3->chromadb==0.3.22->-r requirements.txt (line 3)) (11.0.3)

Requirement already satisfied: soupsieve>1.2 in /root/miniconda3/lib/python3.11/site-packages (from beautifulsoup4->unstructured->-r requirements.txt (line 16)) (2.4.1)

Requirement already satisfied: dill<0.3.8,>=0.3.0 in /root/miniconda3/lib/python3.11/site-packages (from datasets->auto-gptq==0.2.2->-r requirements.txt (line 14)) (0.3.7)

Requirement already satisfied: xxhash in /root/miniconda3/lib/python3.11/site-packages (from datasets->auto-gptq==0.2.2->-r requirements.txt (line 14)) (3.3.0)

Requirement already satisfied: multiprocess in /root/miniconda3/lib/python3.11/site-packages (from datasets->auto-gptq==0.2.2->-r requirements.txt (line 14)) (0.70.15)

Requirement already satisfied: joblib in /root/miniconda3/lib/python3.11/site-packages (from nltk->sentence-transformers->-r requirements.txt (line 7)) (1.3.2)

Requirement already satisfied: threadpoolctl>=2.0.0 in /root/miniconda3/lib/python3.11/site-packages (from scikit-learn->sentence-transformers->-r requirements.txt (line 7)) (3.2.0)

Requirement already satisfied: pycparser in /root/miniconda3/lib/python3.11/site-packages (from cffi>=1.12->cryptography>=36.0.0->pdfminer.six==20221105->-r requirements.txt (line 5)) (2.21)

Requirement already satisfied: smmap<6,>=3.0.1 in /root/miniconda3/lib/python3.11/site-packages (from gitdb<5,>=4.0.1->gitpython!=3.1.19,<4,>=3.0.7->streamlit->-r requirements.txt (line 28)) (5.0.0)

Requirement already satisfied: jsonschema-specifications>=2023.03.6 in /root/miniconda3/lib/python3.11/site-packages (from jsonschema>=3.0->altair<6,>=4.0->streamlit->-r requirements.txt (line 28)) (2023.7.1)

Requirement already satisfied: referencing>=0.28.4 in /root/miniconda3/lib/python3.11/site-packages (from jsonschema>=3.0->altair<6,>=4.0->streamlit->-r requirements.txt (line 28)) (0.30.2)

Requirement already satisfied: rpds-py>=0.7.1 in /root/miniconda3/lib/python3.11/site-packages (from jsonschema>=3.0->altair<6,>=4.0->streamlit->-r requirements.txt (line 28)) (0.9.2)

Requirement already satisfied: mdurl~=0.1 in /root/miniconda3/lib/python3.11/site-packages (from markdown-it-py>=2.2.0->rich<14,>=10.14.0->streamlit->-r requirements.txt (line 28)) (0.1.2)

Requirement already satisfied: anyio<5,>=3.4.0 in /root/miniconda3/lib/python3.11/site-packages (from starlette<0.28.0,>=0.27.0->fastapi>=0.85.1->chromadb==0.3.22->-r requirements.txt (line 3)) (3.7.1)

Requirement already satisfied: mypy-extensions>=0.3.0 in /root/miniconda3/lib/python3.11/site-packages (from typing-inspect<1,>=0.4.0->dataclasses-json<0.6.0,>=0.5.7->langchain==0.0.191->-r requirements.txt (line 2)) (1.0.0)

Requirement already satisfied: contourpy>=1.0.1 in /root/miniconda3/lib/python3.11/site-packages (from matplotlib->streamlit-faker>=0.0.2->Streamlit-extras->-r requirements.txt (line 29)) (1.1.0)

Requirement already satisfied: cycler>=0.10 in /root/miniconda3/lib/python3.11/site-packages (from matplotlib->streamlit-faker>=0.0.2->Streamlit-extras->-r requirements.txt (line 29)) (0.11.0)

Requirement already satisfied: fonttools>=4.22.0 in /root/miniconda3/lib/python3.11/site-packages (from matplotlib->streamlit-faker>=0.0.2->Streamlit-extras->-r requirements.txt (line 29)) (4.42.0)

Requirement already satisfied: kiwisolver>=1.0.1 in /root/miniconda3/lib/python3.11/site-packages (from matplotlib->streamlit-faker>=0.0.2->Streamlit-extras->-r requirements.txt (line 29)) (1.4.4)

Requirement already satisfied: pyparsing<3.1,>=2.3.1 in /root/miniconda3/lib/python3.11/site-packages (from matplotlib->streamlit-faker>=0.0.2->Streamlit-extras->-r requirements.txt (line 29)) (3.0.9)

Requirement already satisfied: mpmath>=0.19 in /root/miniconda3/lib/python3.11/site-packages (from sympy->torch>=1.13.0->auto-gptq==0.2.2->-r requirements.txt (line 14)) (1.3.0)

Requirement already satisfied: sniffio>=1.1 in /root/miniconda3/lib/python3.11/site-packages (from anyio<5,>=3.4.0->starlette<0.28.0,>=0.27.0->fastapi>=0.85.1->chromadb==0.3.22->-r requirements.txt (line 3)) (1.3.0)

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv
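A log full of "Requirement already satisfied" lines shows what pip found in one particular interpreter. The following sketch re-checks a few of the pins from requirements.txt (copied from the log above) from inside Python. Run it with the same interpreter that will run `ingest.py`. `check_pins` is a hypothetical helper built on the standard `importlib.metadata` module.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Callable, Dict, Optional

# Pins copied from the install log above (requirements.txt lines 2-5).
PINS: Dict[str, str] = {
    "langchain": "0.0.191",
    "chromadb": "0.3.22",
    "llama-cpp-python": "0.1.66",
    "pdfminer.six": "20221105",
}


def installed_version(dist: str) -> Optional[str]:
    """Version of a distribution installed in *this* interpreter, or None."""
    try:
        return version(dist)
    except PackageNotFoundError:
        return None


def check_pins(
    pins: Dict[str, str],
    get_version: Callable[[str], Optional[str]] = installed_version,
) -> Dict[str, str]:
    """Return {distribution: problem} for each pin that is missing or differs."""
    problems: Dict[str, str] = {}
    for dist, wanted in pins.items():
        got = get_version(dist)
        if got is None:
            problems[dist] = "not installed"
        elif got != wanted:
            problems[dist] = f"installed {got}, expected {wanted}"
    return problems


if __name__ == "__main__":
    issues = check_pins(PINS)
    for dist, msg in issues.items():
        print(f"{dist}: {msg}")
    if not issues:
        print("All pinned packages match.")
```

The version lookup is injectable as `get_version`, so the comparison logic can be exercised without touching the real environment.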

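5. Add documents to the knowledge base

5.1 Document directory and sample document

You can replace the sample with the documents you need:

~/localGPT/SOURCE_DOCUMENTS/constitution.pdf

Before ingesting, check the options with --help. As mentioned earlier, it is best to call Conda's python by its absolute path: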

/root/miniconda3/bin/python ingest.py --help

Usage: ingest.py [OPTIONS]

Options:

--device_type [cpu|cuda|ipu|xpu|mkldnn|opengl|opencl|ideep|hip|ve|fpga|ort|xla|lazy|vulkan|mps|meta|hpu|mtia]

Device to run on. (Default is cuda)

--help Show this message and exit.

5.2 Start ingesting documents

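CUDA/GPU is used by default:

/root/miniconda3/bin/python ingest.py

To run on the CPU instead:

/root/miniconda3/bin/python ingest.py --device_type cpu

On the first ingest, the embedding model (hkunlp/instructor-large, as the log below shows) is downloaded from Hugging Face. The vector database built from your documents is written to /root/localGPT/DB.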
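Before embedding, ingest.py splits each document into chunks (the log below reports "Split into 72 chunks of text"). localGPT uses LangChain's text splitter for this step. The following sketch is a simplified stand-in that uses plain fixed-size character windows with overlap, not LangChain's recursive separator logic. The 1000/200 defaults are assumptions for illustration only.

```python
from typing import List


def split_text(text: str, chunk_size: int = 1000, overlap: int = 200) -> List[str]:
    """Cut `text` into windows of at most `chunk_size` characters.

    The last `overlap` characters of each full window are repeated at the start
    of the next one, so text near a boundary appears in both neighbouring chunks.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


if __name__ == "__main__":
    print(len(split_text("x" * 2500)))  # → 3
```

Each chunk is embedded and stored separately. The overlap trades some duplicated storage for a lower chance that an answer is cut in half at a chunk boundary.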

Output of the first ingest:

/root/miniconda3/bin/python ingest.py

2023-08-18 09:36:55,389 - INFO - ingest.py:122 - Loading documents from /root/localGPT/SOURCE_DOCUMENTS

all files: ['constitution.pdf']

2023-08-18 09:36:55,398 - INFO - ingest.py:34 - Loading document batch

2023-08-18 09:36:56,818 - INFO - ingest.py:131 - Loaded 1 documents from /root/localGPT/SOURCE_DOCUMENTS

2023-08-18 09:36:56,818 - INFO - ingest.py:132 - Split into 72 chunks of text

2023-08-18 09:36:57,994 - INFO - SentenceTransformer.py:66 - Load pretrained SentenceTransformer: hkunlp/instructor-large

Downloading (…)c7233/.gitattributes: 100%|███████████████████████████████████████████████████████████████████████████| 1.48k/1.48k [00:00<00:00, 4.13MB/s]

Downloading (…)_Pooling/config.json: 100%|████████████████████████████████████████████████████████████████████████████████| 270/270 [00:00<00:00, 915kB/s]

Downloading (…)/2_Dense/config.json: 100%|████████████████████████████████████████████████████████████████████████████████| 116/116 [00:00<00:00, 380kB/s]

Downloading pytorch_model.bin: 100%|█████████████████████████████████████████████████████████████████████████████████| 3.15M/3.15M [00:01<00:00, 2.99MB/s]

Downloading (…)9fb15c7233/README.md: 100%|████████████████████████████████████████████████████████████████████████████| 66.3k/66.3k [00:00<00:00, 359kB/s]

Downloading (…)b15c7233/config.json: 100%|███████████████████████████████████████████████████████████████████████████| 1.53k/1.53k [00:00<00:00, 5.70MB/s]

Downloading (…)ce_transformers.json: 100%|████████████████████████████████████████████████████████████████████████████████| 122/122 [00:00<00:00, 485kB/s]

Downloading pytorch_model.bin: 100%|█████████████████████████████████████████████████████████████████████████████████| 1.34G/1.34G [03:15<00:00, 6.86MB/s]

Downloading (…)nce_bert_config.json: 100%|██████████████████████████████████████████████████████████████████████████████| 53.0/53.0 [00:00<00:00, 109kB/s]

Downloading (…)cial_tokens_map.json: 100%|███████████████████████████████████████████████████████████████████████████| 2.20k/2.20k [00:00<00:00, 8.96MB/s]

Downloading spiece.model: 100%|████████████████████████████████████████████████████████████████████████████████████████| 792k/792k [00:00<00:00, 3.46MB/s]

Downloading (…)c7233/tokenizer.json: 100%|███████████████████████████████████████████████████████████████████████████| 2.42M/2.42M [00:00<00:00, 3.01MB/s]

Downloading (…)okenizer_config.json: 100%|███████████████████████████████████████████████████████████████████████████| 2.41k/2.41k [00:00<00:00, 9.75MB/s]

Downloading (…)15c7233/modules.json: 100%|███████████████████████████████████████████████████████████████████████████████| 461/461 [00:00<00:00, 1.92MB/s]

load INSTRUCTOR_Transformer

2023-08-18 09:40:26,658 - INFO - instantiator.py:21 - Created a temporary directory at /tmp/tmp47gnnhwi

2023-08-18 09:40:26,658 - INFO - instantiator.py:76 - Writing /tmp/tmp47gnnhwi/_remote_module_non_scriptable.py

max_seq_length 512

2023-08-18 09:40:30,076 - INFO - __init__.py:88 - Running Chroma using direct local API.

2023-08-18 09:40:30,248 - WARNING - __init__.py:43 - Using embedded DuckDB with persistence: data will be stored in: /root/localGPT/DB

2023-08-18 09:40:30,252 - INFO - ctypes.py:22 - Successfully imported ClickHouse Connect C data optimizations

2023-08-18 09:40:30,257 - INFO - json_impl.py:45 - Using python library for writing JSON byte strings

2023-08-18 09:40:30,295 - INFO - duckdb.py:454 - No existing DB found in /root/localGPT/DB, skipping load

2023-08-18 09:40:30,295 - INFO - duckdb.py:466 - No existing DB found in /root/localGPT/DB, skipping load

2023-08-18 09:40:32,800 - INFO - duckdb.py:414 - Persisting DB to disk, putting it in the save folder: /root/localGPT/DB

2023-08-18 09:40:32,813 - INFO - duckdb.py:414 - Persisting DB to disk, putting it in the save folder: /root/localGPT/DB
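The log line "Split into 72 chunks of text" comes from splitting the loaded PDF into overlapping text chunks before embedding. Section 7.1 tunes this step via RecursiveCharacterTextSplitter(chunk_size=800, chunk_overlap=200). The sketch below is a simplified illustration: a plain fixed-width character splitter, not LangChain's separator-aware recursive algorithm. It shows what the overlap means, namely that each chunk repeats the tail of the previous one.

```python
def split_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into fixed-size character windows overlapping by chunk_overlap.

    Simplified illustration only: LangChain's RecursiveCharacterTextSplitter
    also tries to break on paragraph, sentence and word boundaries.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


if __name__ == "__main__":
    print(split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=2))
    # ['abcd', 'cdef', 'efgh', 'ghij']
```

With chunk_size=800 and chunk_overlap=200, each new window advances 600 characters. Text near a chunk boundary therefore appears in two chunks, which helps retrieval when an answer straddles a boundary.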

Project file listing:

ls

ACKNOWLEDGEMENT.md CONTRIBUTING.md ingest.py localGPT_UI.py README.md run_localGPT.py

constants.py DB LICENSE __pycache__ requirements.txt SOURCE_DOCUMENTS

constitution.pdf Dockerfile localGPTUI pyproject.toml run_localGPT_API.py

6. Run the knowledge-base AI chatbot

You can now start chatting with your local knowledge base!

6.1 Ask questions from the command line

The first run downloads the default model configured in ~/localGPT/constants.py:

# model link: https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML

MODEL_ID = "TheBloke/Llama-2-7B-Chat-GGML"

MODEL_BASENAME = "llama-2-7b-chat.ggmlv3.q4_0.bin"

The model is downloaded to /root/.cache/huggingface/hub/models--TheBloke--Llama-2-7B-Chat-GGML
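The cache folder name is derived from MODEL_ID: the / becomes --, and the name gets a models-- prefix. Each downloaded revision sits under snapshots/<commit hash>/, as the "llama.cpp: loading model from ..." path in the log below shows. The sketch below locates the cached file. It assumes the default cache root ~/.cache/huggingface/hub, which moves if HF_HOME or HUGGINGFACE_HUB_CACHE is set. The helper names are hypothetical.

```python
from pathlib import Path

# Values from ~/localGPT/constants.py as shown above.
MODEL_ID = "TheBloke/Llama-2-7B-Chat-GGML"
MODEL_BASENAME = "llama-2-7b-chat.ggmlv3.q4_0.bin"


def hf_cache_repo_dir(model_id: str, cache_root: Path) -> Path:
    """Map 'owner/name' to the hub cache folder 'models--owner--name'."""
    return cache_root / ("models--" + model_id.replace("/", "--"))


def find_cached_files(model_id: str, basename: str, cache_root: Path) -> list[Path]:
    """Return every cached copy of basename under snapshots/<revision>/."""
    snapshots = hf_cache_repo_dir(model_id, cache_root) / "snapshots"
    if not snapshots.is_dir():
        return []  # model not downloaded yet (or a different cache root)
    return sorted(snapshots.glob(f"*/{basename}"))


if __name__ == "__main__":
    root = Path.home() / ".cache" / "huggingface" / "hub"
    print(hf_cache_repo_dir(MODEL_ID, root))
    for path in find_cached_files(MODEL_ID, MODEL_BASENAME, root):
        print(path)
```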

Run it directly:

/root/miniconda3/bin/python run_localGPT.py

Query prompt:

English queries work as-is; Chinese input needs UTF-8 handling to be processed correctly.

Enter a query:

Session log:

/root/miniconda3/bin/python run_localGPT.py

2023-08-18 09:43:02,433 - INFO - run_localGPT.py:180 - Running on: cuda

2023-08-18 09:43:02,433 - INFO - run_localGPT.py:181 - Display Source Documents set to: False

2023-08-18 09:43:02,676 - INFO - SentenceTransformer.py:66 - Load pretrained SentenceTransformer: hkunlp/instructor-large

load INSTRUCTOR_Transformer

max_seq_length 512

2023-08-18 09:43:05,301 - INFO - __init__.py:88 - Running Chroma using direct local API.

2023-08-18 09:43:05,317 - WARNING - __init__.py:43 - Using embedded DuckDB with persistence: data will be stored in: /root/localGPT/DB

2023-08-18 09:43:05,328 - INFO - ctypes.py:22 - Successfully imported ClickHouse Connect C data optimizations

2023-08-18 09:43:05,336 - INFO - json_impl.py:45 - Using python library for writing JSON byte strings

2023-08-18 09:43:05,402 - INFO - duckdb.py:460 - loaded in 72 embeddings

2023-08-18 09:43:05,405 - INFO - duckdb.py:472 - loaded in 1 collections

2023-08-18 09:43:05,406 - INFO - duckdb.py:89 - collection with name langchain already exists, returning existing collection

2023-08-18 09:43:05,406 - INFO - run_localGPT.py:45 - Loading Model: TheBloke/Llama-2-7B-Chat-GGML, on: cuda

2023-08-18 09:43:05,406 - INFO - run_localGPT.py:46 - This action can take a few minutes!

2023-08-18 09:43:05,406 - INFO - run_localGPT.py:50 - Using Llamacpp for GGML quantized models

Downloading (…)chat.ggmlv3.q4_0.bin: 100%|███████████████████████████████████████████████████████████████████████████| 3.79G/3.79G [09:53<00:00, 6.39MB/s]

llama.cpp: loading model from /root/.cache/huggingface/hub/models--TheBloke--Llama-2-7B-Chat-GGML/snapshots/b616819cd4777514e3a2d9b8be69824aca8f5daf/llama-2-7b-chat.ggmlv3.q4_0.bin

llama_model_load_internal: format = ggjt v3 (latest)

llama_model_load_internal: n_vocab = 32000

llama_model_load_internal: n_ctx = 2048

llama_model_load_internal: n_embd = 4096

llama_model_load_internal: n_mult = 256

llama_model_load_internal: n_head = 32

llama_model_load_internal: n_layer = 32

llama_model_load_internal: n_rot = 128

llama_model_load_internal: ftype = 2 (mostly Q4_0)

llama_model_load_internal: n_ff = 11008

llama_model_load_internal: n_parts = 1

llama_model_load_internal: model size = 7B

llama_model_load_internal: ggml ctx size = 0.07 MB

llama_model_load_internal: mem required = 5407.71 MB (+ 1026.00 MB per state)

llama_new_context_with_model: kv self size = 1024.00 MB

AVX = 1 | AVX2 = 1 | AVX512 = 1 | AVX512_VBMI = 1 | AVX512_VNNI = 1 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 1 | VSX = 0 |

Enter a query:
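You can check the "kv self size = 1024.00 MB" line above by hand. Assume llama.cpp stores the KV cache in f16, i.e. 2 bytes per value, which was its default at the time. The cache then holds one key vector and one value vector of n_embd entries per layer, for every context position. The helper below is only a sketch of that arithmetic, using the hyperparameters printed in the log.

```python
def kv_cache_bytes(n_layer: int, n_ctx: int, n_embd: int, bytes_per_value: int = 2) -> int:
    """K and V caches: 2 tensors x n_layer x n_ctx x n_embd values (f16 = 2 bytes)."""
    return 2 * n_layer * n_ctx * n_embd * bytes_per_value


if __name__ == "__main__":
    # n_layer, n_ctx, n_embd from the llama_model_load_internal lines above.
    size = kv_cache_bytes(n_layer=32, n_ctx=2048, n_embd=4096)
    print(f"{size} bytes = {size / 2**20:.2f} MB")  # 1073741824 bytes = 1024.00 MB
```

By the same formula, a 4096-token context needs twice as much, about 2048 MB. Keep this in mind if you raise max_ctx_size as in section 7.1, assuming that setting is passed through to llama.cpp as n_ctx.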

Alternatively, add the --show_sources flag to show the source passages each answer draws on:

/root/miniconda3/bin/python run_localGPT.py --show_sources

Session log:

/root/miniconda3/bin/python run_localGPT.py --show_sources

2023-08-18 10:03:55,466 - INFO - run_localGPT.py:180 - Running on: cuda

2023-08-18 10:03:55,466 - INFO - run_localGPT.py:181 - Display Source Documents set to: True

2023-08-18 10:03:55,708 - INFO - SentenceTransformer.py:66 - Load pretrained SentenceTransformer: hkunlp/instructor-large

load INSTRUCTOR_Transformer

max_seq_length 512

2023-08-18 10:03:58,302 - INFO - __init__.py:88 - Running Chroma using direct local API.

2023-08-18 10:03:58,307 - WARNING - __init__.py:43 - Using embedded DuckDB with persistence: data will be stored in: /root/localGPT/DB

2023-08-18 10:03:58,312 - INFO - ctypes.py:22 - Successfully imported ClickHouse Connect C data optimizations

2023-08-18 10:03:58,318 - INFO - json_impl.py:45 - Using python library for writing JSON byte strings

2023-08-18 10:03:58,372 - INFO - duckdb.py:460 - loaded in 72 embeddings

2023-08-18 10:03:58,373 - INFO - duckdb.py:472 - loaded in 1 collections

2023-08-18 10:03:58,373 - INFO - duckdb.py:89 - collection with name langchain already exists, returning existing collection

2023-08-18 10:03:58,374 - INFO - run_localGPT.py:45 - Loading Model: TheBloke/Llama-2-7B-Chat-GGML, on: cuda

2023-08-18 10:03:58,374 - INFO - run_localGPT.py:46 - This action can take a few minutes!

2023-08-18 10:03:58,374 - INFO - run_localGPT.py:50 - Using Llamacpp for GGML quantized models

llama.cpp: loading model from /root/.cache/huggingface/hub/models--TheBloke--Llama-2-7B-Chat-GGML/snapshots/b616819cd4777514e3a2d9b8be69824aca8f5daf/llama-2-7b-chat.ggmlv3.q4_0.bin

llama_model_load_internal: format = ggjt v3 (latest)

llama_model_load_internal: n_vocab = 32000

llama_model_load_internal: n_ctx = 2048

llama_model_load_internal: n_embd = 4096

llama_model_load_internal: n_mult = 256

llama_model_load_internal: n_head = 32

llama_model_load_internal: n_layer = 32

llama_model_load_internal: n_rot = 128

llama_model_load_internal: ftype = 2 (mostly Q4_0)

llama_model_load_internal: n_ff = 11008

llama_model_load_internal: n_parts = 1

llama_model_load_internal: model size = 7B

llama_model_load_internal: ggml ctx size = 0.07 MB

llama_model_load_internal: mem required = 5407.71 MB (+ 1026.00 MB per state)

llama_new_context_with_model: kv self size = 1024.00 MB

AVX = 1 | AVX2 = 1 | AVX512 = 1 | AVX512_VBMI = 1 | AVX512_VNNI = 1 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 1 | VSX = 0 |

Enter a query: how many times could president act, and how many years as max?

llama_print_timings: load time = 19737.32 ms

llama_print_timings: sample time = 101.14 ms / 169 runs ( 0.60 ms per token, 1671.02 tokens per second)

llama_print_timings: prompt eval time = 19736.91 ms / 925 tokens ( 21.34 ms per token, 46.87 tokens per second)

llama_print_timings: eval time = 36669.35 ms / 168 runs ( 218.27 ms per token, 4.58 tokens per second)

llama_print_timings: total time = 56849.80 ms
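The llama_print_timings lines show where the roughly 57 s total went. About 19.7 s were spent evaluating the 925-token prompt, which is the question plus the retrieved context. About 36.7 s were spent generating 168 tokens. Each rate is simply count divided by elapsed time. The sketch below recomputes it from a timings line; throughput is a hypothetical helper.

```python
import re

# Matches e.g. "= 36669.35 ms / 168 runs" or "= 19736.91 ms / 925 tokens".
TIMING_RE = re.compile(r"=\s*([\d.]+) ms /\s*(\d+) (?:runs|tokens)")


def throughput(line: str) -> tuple[float, float]:
    """Return (ms per token, tokens per second) for one llama_print_timings line."""
    match = TIMING_RE.search(line)
    if match is None:
        raise ValueError(f"no timing found in: {line!r}")
    total_ms = float(match.group(1))
    count = int(match.group(2))
    return total_ms / count, count / (total_ms / 1000.0)


if __name__ == "__main__":
    line = ("llama_print_timings: eval time = 36669.35 ms / 168 runs "
            "( 218.27 ms per token, 4.58 tokens per second)")
    ms_per_token, tokens_per_s = throughput(line)
    print(f"{ms_per_token:.2f} ms/token, {tokens_per_s:.2f} tokens/s")  # 218.27 ms/token, 4.58 tokens/s
```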

> Question:

how many times could president act, and how many years as max?

> Answer:

The answer to this question can be found in Amendment XXII and Amendment XXIII of the US Constitution. According to these amendments, a person cannot be elected President more than twice, and no person can hold the office of President for more than two years of a term to which someone else was elected President. However, if the President is unable to discharge their powers and duties due to incapacity, the Vice President will continue to act as President until Congress determines the issue.

In summary, a person can be elected President at most twice, and they cannot hold the office for more than two years of a term to which someone else was elected President. If the President becomes unable to discharge their powers and duties, the Vice President will continue to act as President until Congress makes a determination.

----------------------------------SOURCE DOCUMENTS---------------------------

> /root/localGPT/SOURCE_DOCUMENTS/constitution.pdf:

Amendment XXII.Amendment XXIII.

Passed by Congress March 21, 1947. Ratified February 27,

Passed by Congress June 16, 1960. Ratified March 29, 1961.

951.

SECTION 1

...

SECTION 2

....

----------------------------------SOURCE DOCUMENTS---------------------------

Enter a query: exit

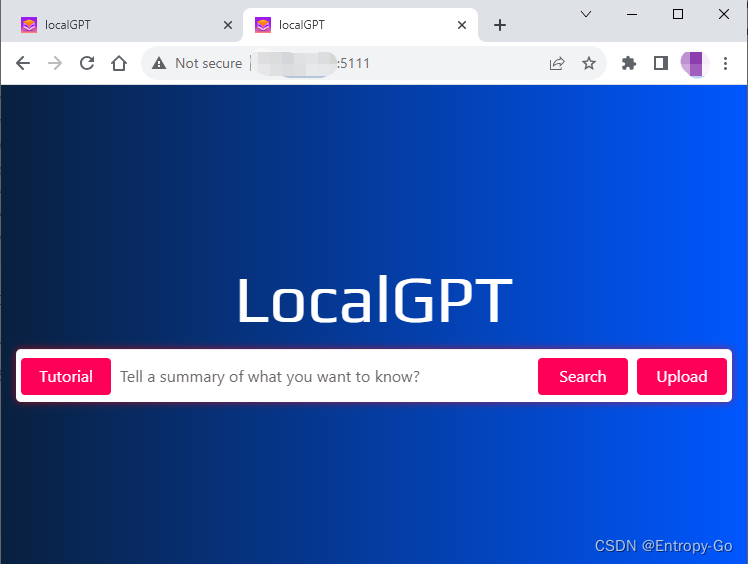

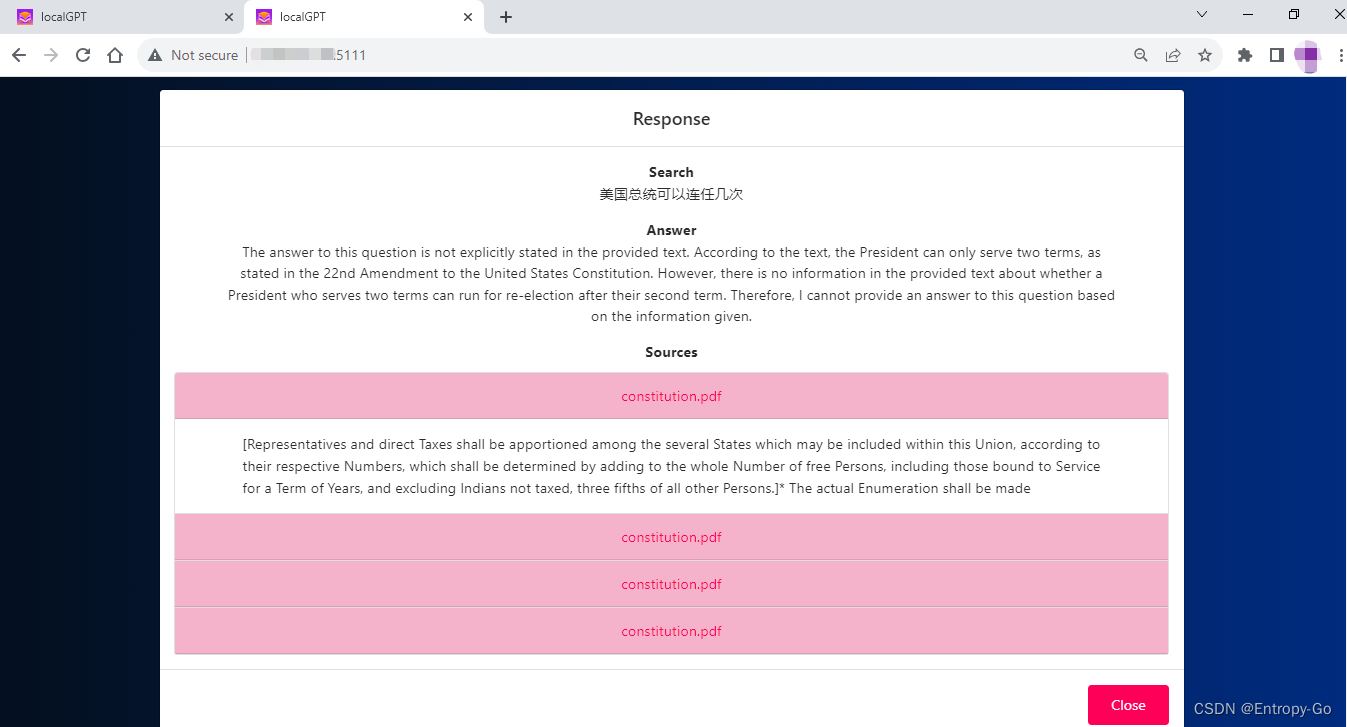

6.2 Ask questions through the Web UI

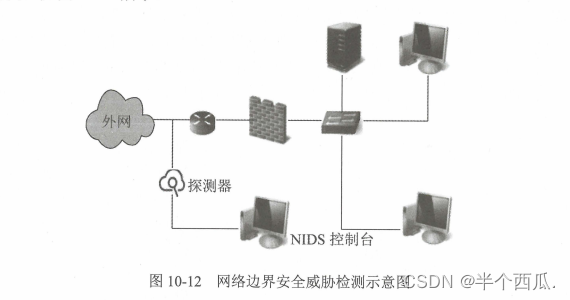

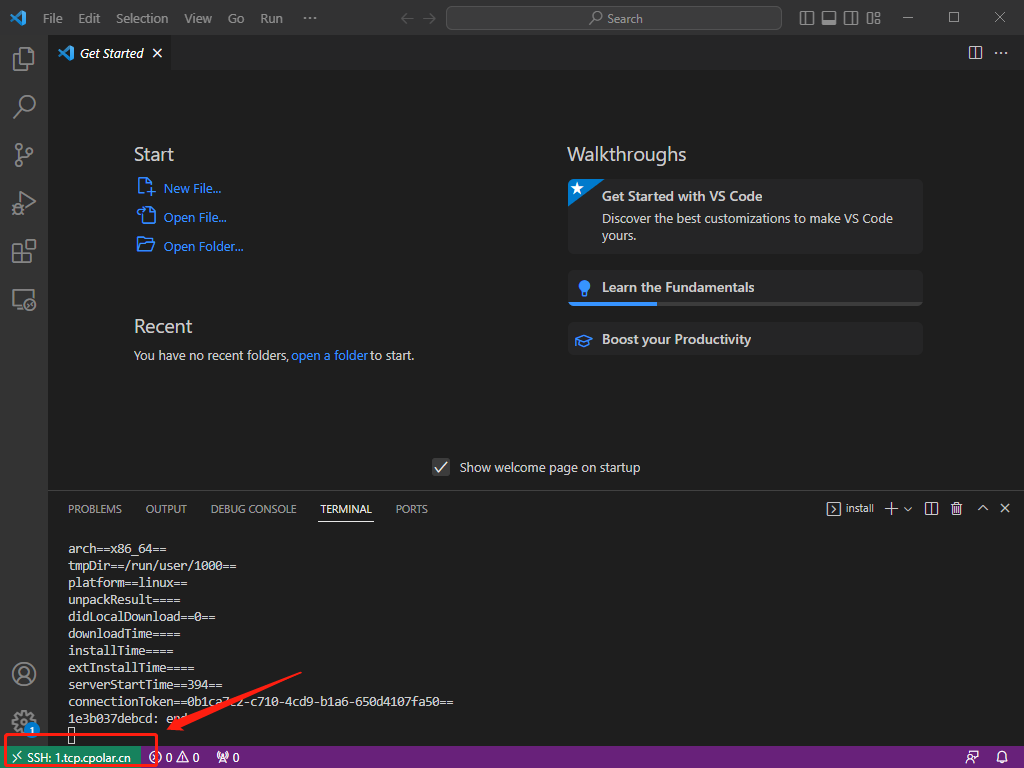

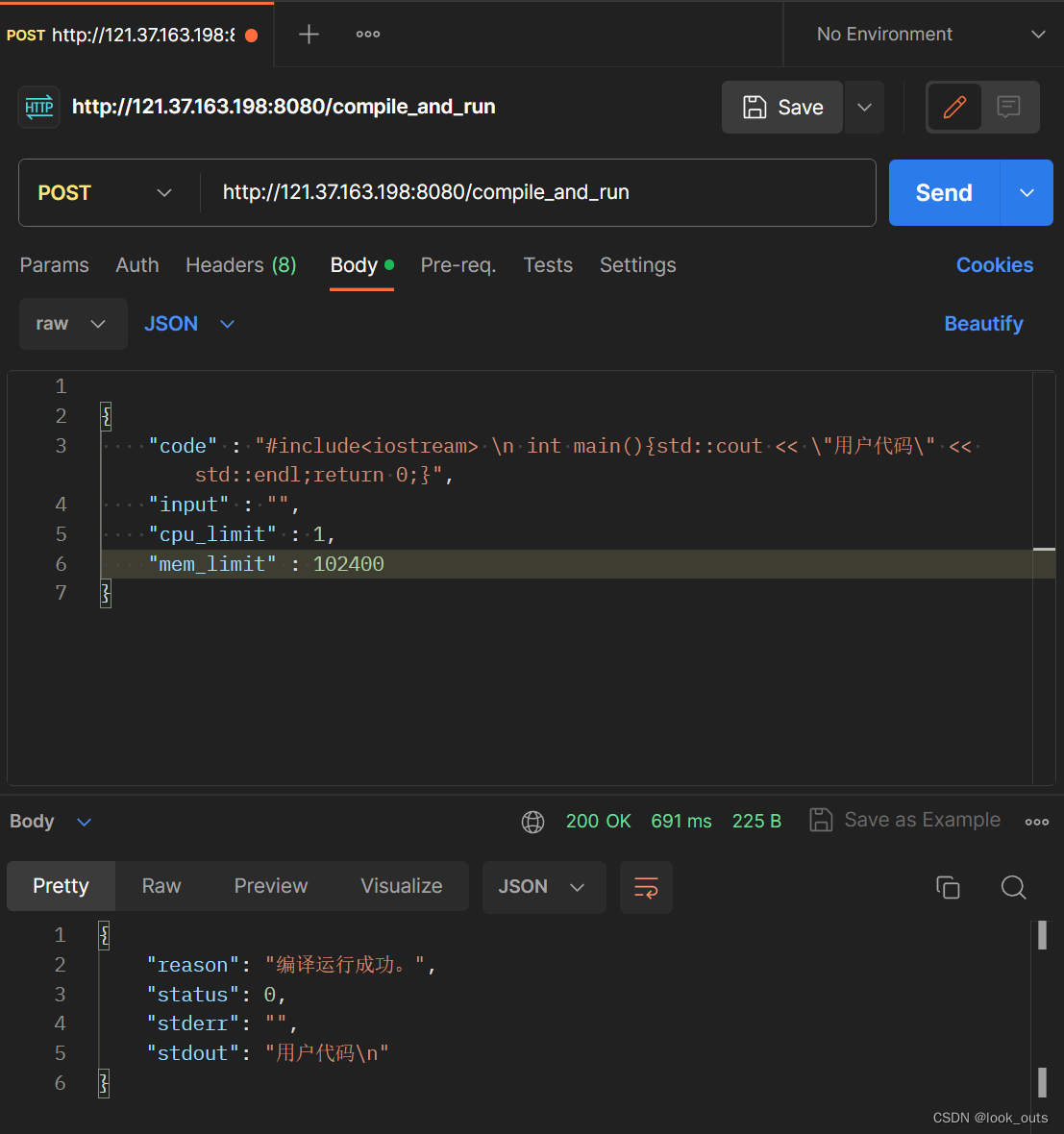

6.2.1 Start the server-side API

To use the Web UI, first start the server-side API, which listens on port 5110:

http://127.0.0.1:5110

/root/miniconda3/bin/python run_localGPT_API.py

If you hit the error below, the cause is again that the python invoked inside the code is not the one on Conda's PATH.

/root/miniconda3/bin/python run_localGPT_API.py

load INSTRUCTOR_Transformer

max_seq_length 512

The directory does not exist

run_langest_commands ['python', 'ingest.py']

Traceback (most recent call last):

File "/root/localGPT/run_localGPT_API.py", line 56, in <module>

raise FileNotFoundError(

FileNotFoundError: No files were found inside SOURCE_DOCUMENTS, please put a starter file inside before starting the API!

To fix it, change python in ~/localGPT/run_localGPT_API.py to Conda's interpreter path. Find this line:

run_langest_commands = ["python", "ingest.py"]

Change it to:

run_langest_commands = ["/root/miniconda3/bin/python", "ingest.py"]

Startup log:

Lines starting with INFO:werkzeug: mean the server started successfully. Keep this terminal open for debugging.

/root/miniconda3/bin/python run_localGPT_API.py

load INSTRUCTOR_Transformer

max_seq_length 512

WARNING:chromadb:Using embedded DuckDB with persistence: data will be stored in: /root/localGPT/DB

llama.cpp: loading model from /root/.cache/huggingface/hub/models--TheBloke--Llama-2-7B-Chat-GGML/snapshots/b616819cd4777514e3a2d9b8be69824aca8f5daf/llama-2-7b-chat.ggmlv3.q4_0.bin

llama_model_load_internal: format = ggjt v3 (latest)

llama_model_load_internal: n_vocab = 32000

llama_model_load_internal: n_ctx = 2048

llama_model_load_internal: n_embd = 4096

llama_model_load_internal: n_mult = 256

llama_model_load_internal: n_head = 32

llama_model_load_internal: n_layer = 32

llama_model_load_internal: n_rot = 128

llama_model_load_internal: ftype = 2 (mostly Q4_0)

llama_model_load_internal: n_ff = 11008

llama_model_load_internal: n_parts = 1

llama_model_load_internal: model size = 7B

llama_model_load_internal: ggml ctx size = 0.07 MB

llama_model_load_internal: mem required = 5407.71 MB (+ 1026.00 MB per state)

llama_new_context_with_model: kv self size = 1024.00 MB

AVX = 1 | AVX2 = 1 | AVX512 = 1 | AVX512_VBMI = 1 | AVX512_VNNI = 1 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 1 | VSX = 0 |

* Serving Flask app 'run_localGPT_API'

* Debug mode: on

INFO:werkzeug:WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

* Running on http://127.0.0.1:5110

INFO:werkzeug:Press CTRL+C to quit

INFO:werkzeug: * Restarting with watchdog (inotify)
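Above, the fix hardcodes /root/miniconda3/bin/python in run_langest_commands. One alternative is to reuse whichever interpreter is already running the API, via sys.executable. This sketch assumes you always start run_localGPT_API.py with Conda's python, as in this post. It then stays correct even if Conda is installed somewhere other than /root/miniconda3.

```python
import sys

# In run_localGPT_API.py: launch ingest.py with the same interpreter that is
# running the API, instead of whatever "python" is first on PATH.
run_langest_commands = [sys.executable, "ingest.py"]

if __name__ == "__main__":
    print(run_langest_commands)
```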

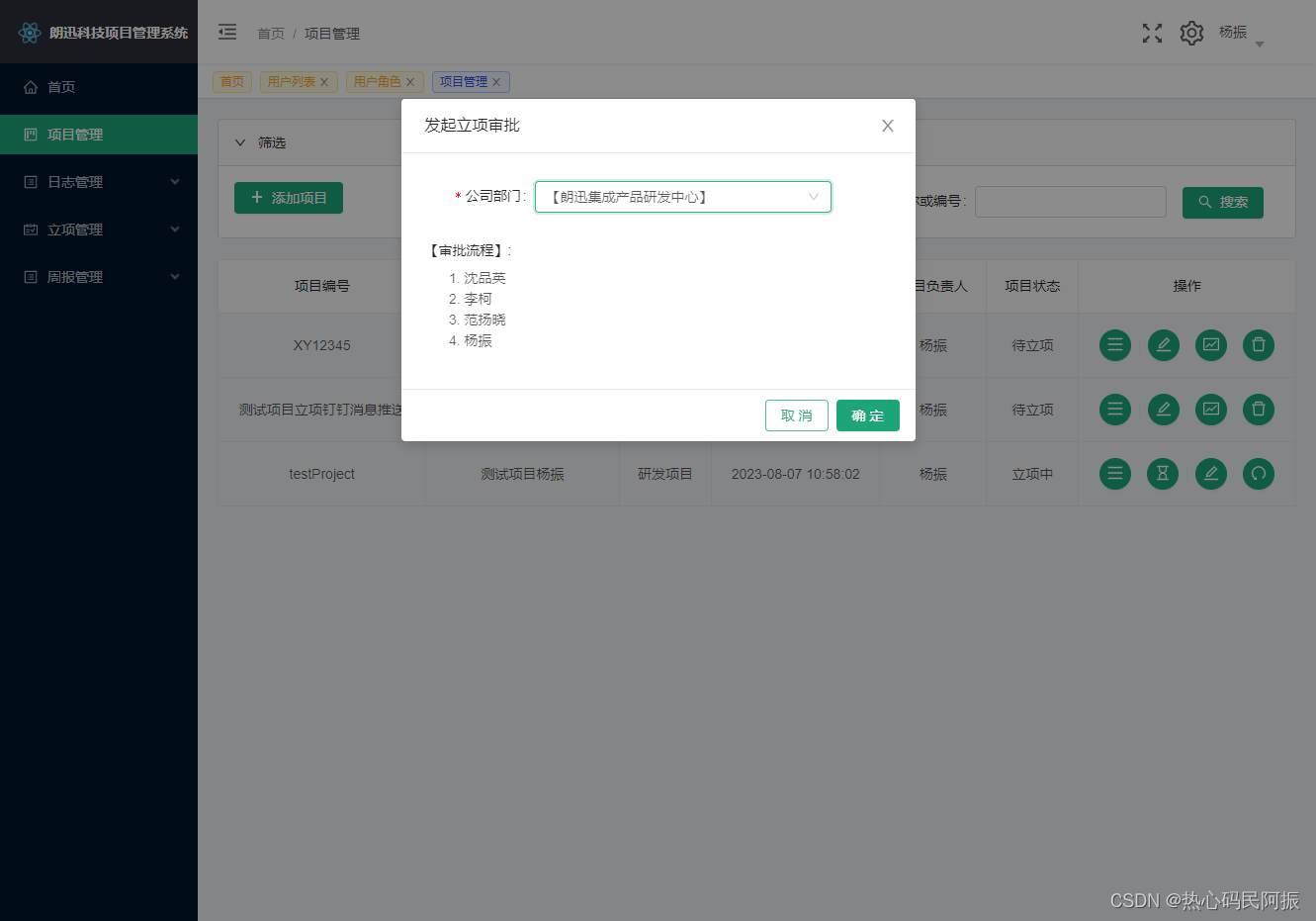

6.2.2 Start the server-side UI

Open a new terminal and run ~/localGPT/localGPTUI/localGPTUI.py to start the UI server, which listens on port 5111:

http://127.0.0.1:5111

/root/miniconda3/bin/python localGPTUI.py

For LAN access, change the default host in localGPTUI.py from 127.0.0.1 to 0.0.0.0:

parser.add_argument("--host", type=str, default="0.0.0.0",

help="Host to run the UI on. Defaults to 127.0.0.1. "

"Set to 0.0.0.0 to make the UI externally "

"accessible from other devices.")

Run log:

/root/miniconda3/bin/python localGPTUI.py

* Serving Flask app 'localGPTUI'

* Debug mode: on

WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

* Running on all addresses (0.0.0.0)

* Running on http://127.0.0.1:5111

* Running on http://IP:5111
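--host is an argparse option, so editing its default may not be necessary. This is an assumption not confirmed here: if localGPTUI.py passes args.host on to the Flask app, then /root/miniconda3/bin/python localGPTUI.py --host 0.0.0.0 should have the same effect. The sketch below mirrors only the --host option from the snippet above and shows how argparse resolves the default versus a command-line override.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Mirror of the --host option shown above (other options omitted)."""
    parser = argparse.ArgumentParser()
    parser.add_argument(
        "--host",
        type=str,
        default="127.0.0.1",
        help="Host to run the UI on. Defaults to 127.0.0.1. "
        "Set to 0.0.0.0 to make the UI externally accessible from other devices.",
    )
    return parser


if __name__ == "__main__":
    parser = build_parser()
    print(parser.parse_args([]).host)                     # 127.0.0.1
    print(parser.parse_args(["--host", "0.0.0.0"]).host)  # 0.0.0.0
```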

Port usage:

netstat -nltp | grep 511

tcp 0 0 127.0.0.1:5110 0.0.0.0:* LISTEN 57479/python

tcp 0 0 0.0.0.0:5111 0.0.0.0:* LISTEN 21718/python
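You can run the same check from Python, for example in a startup script, by attempting a TCP connection to each port. This is a minimal standard-library sketch; is_listening is a hypothetical helper. Per the netstat output above, port 5110 is run_localGPT_API.py and port 5111 is localGPTUI.py.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, ...
        return False


if __name__ == "__main__":
    for port in (5110, 5111):
        state = "listening" if is_listening("127.0.0.1", port) else "not reachable"
        print(f"127.0.0.1:{port} {state}")
```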

6.2.3 Open the Web UI in a browser

Local machine: http://127.0.0.1:5111

LAN: http://IP:5111

The web page supports free-form conversation, including Chinese input.

Screenshot:

7. Troubleshooting

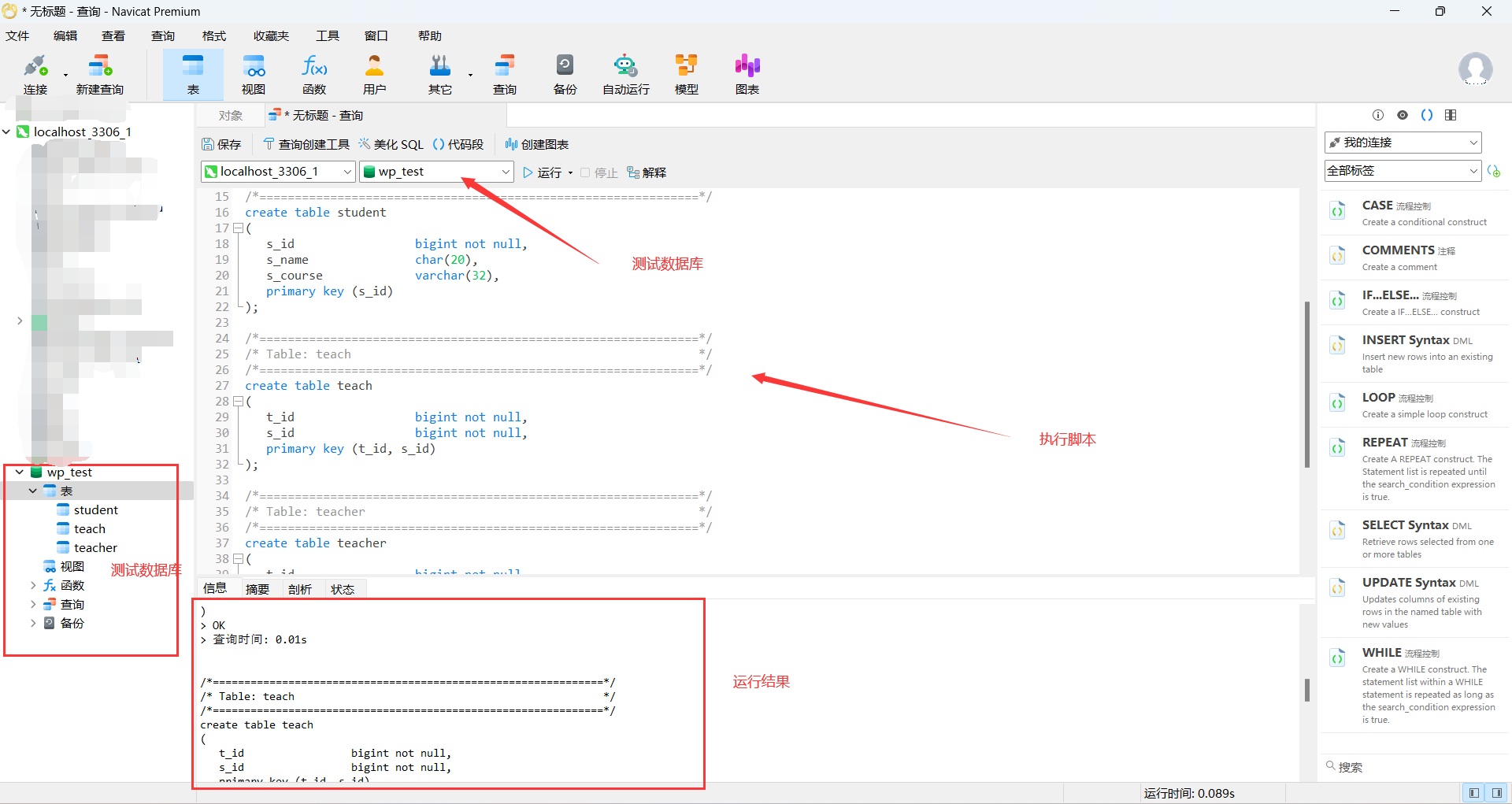

7.1 Ingesting Chinese documents

In run_localGPT_API.py, set:

max_ctx_size = 4096

In ingest.py, set:

text_splitter = RecursiveCharacterTextSplitter(chunk_size=800, chunk_overlap=200)

7.2 The web page opens but questions get no reply (response.status_code = 504, 304)

If your environment uses a proxy, unset the proxy variables before starting the UI server:

unset http_proxy

unset https_proxy

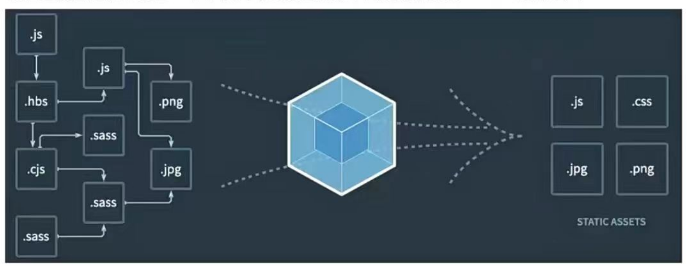

unset ftp_proxy

/root/miniconda3/bin/python localGPTUI.py

7.3 How localGPT works

By selecting the right local models and using LangChain, you can run the entire pipeline locally, with no data leaving your environment and with reasonable performance.

- ingest.py uses LangChain tools to parse the document and create embeddings locally using InstructorEmbeddings. It then stores the result in a local vector database using the Chroma vector store.
- run_localGPT.py uses a local LLM to understand questions and create answers. The context for the answers is extracted from the local vector store, using a similarity search to locate the right piece of context in the docs.
- You can replace this local LLM with any other LLM from HuggingFace. Make sure the LLM you select is in HF format.
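The retrieval step described above has three parts: embed the chunks, store the vectors, and find the chunks closest to the question. The toy sketch below illustrates it. It assumes a bag-of-words count vector as a stand-in for InstructorEmbeddings and a plain Python list as a stand-in for the Chroma store. The real pipeline uses learned dense embeddings, so its rankings will differ. The example chunks are short paraphrases of constitutional text, chosen only for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for InstructorEmbeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question (the LLM's context)."""
    store = [(chunk, embed(chunk)) for chunk in chunks]  # stand-in for the vector DB
    q = embed(question)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


CHUNKS = [
    "No person shall be elected to the office of the President more than twice.",
    "The Senate shall be composed of two Senators from each State.",
    "The District constituting the seat of Government shall appoint electors.",
]

if __name__ == "__main__":
    print(top_k("How many times can a person be elected President?", CHUNKS, k=1))
```

The top-ranked chunks are then placed into the prompt together with the question. That is why the prompt evaluated in section 6.1 was 925 tokens long even though the question itself was short.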

7.4 How to choose a different LLM

The following will provide instructions on how you can select a different LLM model to create your response:

- Open constants.py in the editor of your choice.
- Change MODEL_ID and MODEL_BASENAME. If you are using a quantized model (GGML, GPTQ), you need to provide MODEL_BASENAME. For unquantized models, set MODEL_BASENAME to None.
- A number of example models from HuggingFace have already been tested. They include original trained models (names ending with HF, or with a .bin file in "Files and versions") and quantized models (names ending with GPTQ, or with a .no-act-order or .safetensors file in "Files and versions").
- For models whose name ends with HF, or that have a .bin file under "Files and versions" on their HuggingFace page:
  - Make sure you have a model ID selected, for example: MODEL_ID = "TheBloke/guanaco-7B-HF"
  - In its HuggingFace repo, under "Files and versions", you will see model files ending with .bin.
  - Models with .bin files are run by the code under the comment # load the LLM for generating Natural Language responses, for example with MODEL_ID = "TheBloke/guanaco-7B-HF".
- For models whose name contains GPTQ and/or that have a .no-act-order or .safetensors file under "Files and versions" on their HuggingFace page:
  - Make sure you have a model ID selected, for example: model_id = "TheBloke/wizardLM-7B-GPTQ"
  - You also need to select the model basename file, for example: model_basename = "wizardLM-7B-GPTQ-4bit.compat.no-act-order.safetensors"
  - In its HuggingFace repo, under "Files and versions", you will see a model file ending with .safetensors.
  - Models with no-act-order or .safetensors files are run by the code under the comment # load the LLM for generating Natural Language responses, for example with:
    MODEL_ID = "TheBloke/WizardLM-7B-uncensored-GPTQ"
    MODEL_BASENAME = "WizardLM-7B-uncensored-GPTQ-4bit-128g.compat.no-act-order.safetensors"
- Comment out all other instances of MODEL_ID="other model names", MODEL_BASENAME=other base model names, and llm = load_model(args*)
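The rules above can be condensed into a small lookup that says which kind of loader a MODEL_ID/MODEL_BASENAME pair implies. model_kind is a hypothetical helper written only to illustrate those rules and the "Using Llamacpp for GGML quantized models" log line; localGPT's own load_model() logic may differ in detail. The llama-cpp-python and auto-gptq packages it mentions both appear in requirements.txt.

```python
from typing import Optional

# (MODEL_ID, MODEL_BASENAME) pairs from this post; keep only ONE active in constants.py.
EXAMPLES = [
    ("TheBloke/Llama-2-7B-Chat-GGML", "llama-2-7b-chat.ggmlv3.q4_0.bin"),
    ("TheBloke/guanaco-7B-HF", None),
    ("TheBloke/WizardLM-7B-uncensored-GPTQ",
     "WizardLM-7B-uncensored-GPTQ-4bit-128g.compat.no-act-order.safetensors"),
]


def model_kind(model_id: str, model_basename: Optional[str]) -> str:
    """Hypothetical helper: classify a model pair by the rules in this section."""
    if model_basename is None:
        return "hf"  # unquantized HF model: MODEL_BASENAME = None
    name = model_basename.lower()
    if "ggml" in name and name.endswith(".bin"):
        return "ggml"  # quantized GGML file, loaded through llama-cpp-python
    if name.endswith(".safetensors") or "gptq" in model_id.lower():
        return "gptq"  # quantized GPTQ file, loaded through auto-gptq
    raise ValueError(f"cannot classify {model_id!r} / {model_basename!r}")


if __name__ == "__main__":
    for model_id, basename in EXAMPLES:
        print(f"{model_id:40} -> {model_kind(model_id, basename)}")
```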

7.5 More issues

Issues · PromtEngineer/localGPT · GitHub
