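```shell
# The wheel index URL must match the installed PyTorch and CUDA versions (here torch 1.10.2 with CUDA 10.2)
pip install torch-scatter -f https://pytorch-geometric.com/whl/torch-1.10.2+cu102.html
pip install torch-sparse -f https://pytorch-geometric.com/whl/torch-1.10.2+cu102.html
pip install torch-geometric
pip install ogb
```

1. PyG Datasets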

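PyG provides two modules for storing graphs and converting them into Tensor format:

`torch_geometric.datasets` contains a variety of common graph datasets.

`torch_geometric.data` provides the `Data` class and related utilities for handling graph data as Tensors.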
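A `Data` object stores node features in `x` (shape `[num_nodes, num_node_features]`), labels in `y`, and connectivity in `edge_index`, a `[2, num_edges]` array in COO format where each column is one (source, target) pair. For an undirected graph every edge is stored in both directions. The pure-Python sketch below builds such an index from a plain edge list; the helper `to_edge_index` is hypothetical, not a PyG function, and it assumes the edge list contains no self-loops.

```python
def to_edge_index(edges):
    """Build a COO-style edge_index (two parallel lists: sources, targets)
    from an undirected edge list, storing each edge in both directions.
    Assumes no self-loops (a self-loop would be stored twice)."""
    sources, targets = [], []
    for u, v in edges:
        sources += [u, v]
        targets += [v, u]
    return [sources, targets]


# A triangle graph with nodes 0, 1, 2
edge_index = to_edge_index([(0, 1), (1, 2), (2, 0)])
print(edge_index)
```

Three undirected edges become six columns, which is why edge counts on undirected PyG graphs are often halved.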

1.1 Loading a dataset from torch_geometric.datasets

```python
# Each dataset is a list of graphs; every graph is a torch_geometric.data.Data object
import torch
from torch_geometric.datasets import TUDataset

root = './enzymes'
name = 'ENZYMES'

# The ENZYMES dataset
pyg_dataset = TUDataset(root, name)

# There are 600 graphs in this dataset
print(pyg_dataset)
print(type(pyg_dataset))

# Inspect the first graph
# print(pyg_dataset[0])
# print(pyg_dataset[0].num_nodes)
# print(pyg_dataset[0].edge_index)
# print(pyg_dataset[0].x)
# print(pyg_dataset[0].y)
```

1.2 Number of classes and feature dimension of the ENZYMES dataset
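What these two properties measure can be sketched in plain Python. For 1-D integer graph labels, PyG infers `num_classes` roughly as the largest label plus one, and `num_node_features` is the second dimension of `x`. The helper names below are hypothetical, and the toy labels and features are made up for illustration:

```python
def infer_num_classes(labels):
    """Integer class labels 0..C-1 -> C (largest label + 1)."""
    return max(labels) + 1


def infer_num_node_features(x):
    """x is a num_nodes x num_features matrix given as a list of rows."""
    return len(x[0]) if x else 0


# Hypothetical toy data: labels of six graphs and one graph's node feature matrix
graph_labels = [0, 2, 1, 5, 3, 4]
x = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0]]
print(infer_num_classes(graph_labels), infer_num_node_features(x))
```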

```python
# num_classes
def get_num_classes(pyg_dataset):
    num_classes = pyg_dataset.num_classes
    return num_classes

# num_features
def get_num_features(pyg_dataset):
    num_features = pyg_dataset.num_node_features
    return num_features

num_classes = get_num_classes(pyg_dataset)
num_features = get_num_features(pyg_dataset)
print("{} dataset has {} classes".format(name, num_classes))
print("{} dataset has {} features".format(name, num_features))
```

1.3 Label and number of edges of the idx-th graph in ENZYMES
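ENZYMES graphs are undirected and PyG stores each undirected edge twice in `edge_index`, which is why the edge count below is halved. As a sanity check, the hypothetical helper `count_undirected_edges` counts distinct unordered node pairs; it agrees with `num_edges // 2` whenever every edge is stored in both directions and there are no self-loops.

```python
def count_undirected_edges(edge_index):
    """Count distinct undirected edges in a COO edge_index [sources, targets]."""
    seen = set()
    for u, v in zip(edge_index[0], edge_index[1]):
        seen.add(frozenset((u, v)))  # (u, v) and (v, u) map to the same key
    return len(seen)


# Triangle stored in both directions: 6 directed entries, 3 undirected edges
edge_index = [[0, 1, 1, 2, 2, 0],
              [1, 0, 2, 1, 0, 2]]
num_directed = len(edge_index[0])
print(num_directed, num_directed // 2, count_undirected_edges(edge_index))
```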

```python
def get_graph_class(pyg_dataset, idx):
    # y is the class label of this graph
    label = pyg_dataset[idx].y.item()
    return label

graph_0 = pyg_dataset[0]
print(graph_0)

idx = 100
label = get_graph_class(pyg_dataset, idx)
print('Graph with index {} has label {}'.format(idx, label))

def get_graph_num_edges(pyg_dataset, idx):
    # The graph is undirected: edge_index stores every edge in both directions,
    # so integer-divide by 2 to count each undirected edge once
    num_edges = pyg_dataset[idx].num_edges // 2
    return num_edges

idx = 200
num_edges = get_graph_num_edges(pyg_dataset, idx)
print('Graph with index {} has {} edges'.format(idx, num_edges))
```

2. Open Graph Benchmark (OGB)

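OGB is a collection of benchmark datasets. Its data loaders automatically download, process, and split each dataset, and the OGB Evaluator scores model performance in a uniform way.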
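For ogbn-arxiv the Evaluator reports accuracy, taking `y_true` and `y_pred` arrays of shape `[num_nodes, 1]`. The pure-Python sketch below illustrates that metric on made-up labels; `node_accuracy` is a hypothetical helper, not OGB's implementation.

```python
def node_accuracy(y_true, y_pred):
    """Fraction of rows where the predicted class equals the true class.
    Both inputs are lists of 1-element rows, mirroring shape [N, 1]."""
    assert len(y_true) == len(y_pred) and y_true
    correct = sum(t[0] == p[0] for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


y_true = [[3], [1], [0], [2]]
y_pred = [[3], [0], [0], [2]]
print({'acc': node_accuracy(y_true, y_pred)})
```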

2.1 Loading an OGB dataset (ogbn-arxiv as an example)
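`T.ToSparseTensor()` replaces `edge_index` with `adj_t`, the transposed sparse adjacency matrix: row `i` holds the source nodes `j` of every edge `j -> i`, which are exactly the neighbors node `i` aggregates messages from. A pure-Python sketch of that layout using sorted neighbor lists (the helper `to_adj_t` is hypothetical and stands in for the sparse tensor):

```python
def to_adj_t(edge_index, num_nodes):
    """Return neighbor lists of the transposed adjacency: row i holds the
    source nodes j of every edge j -> i (sorted, like a CSR row)."""
    rows = [[] for _ in range(num_nodes)]
    for src, dst in zip(edge_index[0], edge_index[1]):
        rows[dst].append(src)
    return [sorted(r) for r in rows]


# Directed edges 0->1, 0->2, 1->2
edge_index = [[0, 0, 1], [1, 2, 2]]
print(to_adj_t(edge_index, num_nodes=3))
```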

```python
import ogb
import torch_geometric.transforms as T
from ogb.nodeproppred import PygNodePropPredDataset

dataset_name = 'ogbn-arxiv'
# Load ogbn-arxiv and use ToSparseTensor to turn edge_index into a sparse adjacency matrix (adj_t)
dataset = PygNodePropPredDataset(name=dataset_name,
                                 transform=T.ToSparseTensor())
print('The {} dataset has {} graph'.format(dataset_name, len(dataset)))

# The first (and only) graph
data = dataset[0]
print(data)

def graph_num_features(data):
    num_features = data.num_features
    return num_features

num_features = graph_num_features(data)
print('The graph has {} features'.format(num_features))
```

3. GNN node property prediction (node classification) on ogbn-arxiv

3.1 Loading and preprocessing the dataset
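ogbn-arxiv is a directed citation graph, and the code below calls `adj_t.to_symmetric()` so that messages flow along citations in both directions. Structurally, this adds the reverse of every edge and merges duplicates. A pure-Python sketch on an edge list (hypothetical helper `symmetrize`, ignoring edge values):

```python
def symmetrize(edges):
    """Return the sorted union of the edges and their reverses (duplicates merged)."""
    sym = set()
    for u, v in edges:
        sym.add((u, v))
        sym.add((v, u))
    return sorted(sym)


# Paper 0 cites 1, paper 1 cites 2, and paper 2 also cites 1
print(symmetrize([(0, 1), (1, 2), (2, 1)]))
```

The reciprocal pair (1, 2) and (2, 1) is kept once, so four directed entries remain.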

```python
import torch
import pandas as pd
import torch.nn.functional as F
from torch_geometric.nn import GCNConv
import torch_geometric.transforms as T
from ogb.nodeproppred import PygNodePropPredDataset, Evaluator

device = 'cuda' if torch.cuda.is_available() else 'cpu'

dataset_name = 'ogbn-arxiv'
# PygNodePropPredDataset loads node property prediction (node classification) datasets
dataset = PygNodePropPredDataset(name=dataset_name,
                                 transform=T.ToSparseTensor())
data = dataset[0]

# ogbn-arxiv is a directed citation graph; make the sparse adjacency matrix symmetric
data.adj_t = data.adj_t.to_symmetric()
print(type(data.adj_t))
data = data.to(device)

# get_idx_split splits the nodes into train, valid, and test sets
split_idx = dataset.get_idx_split()
print(split_idx)
train_idx = split_idx['train'].to(device)
```

3.2 GCN Model

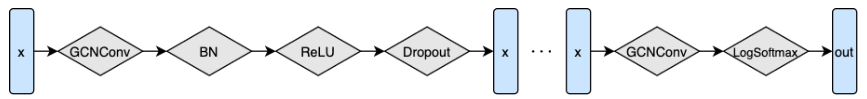
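By default, `GCNConv` adds self-loops and applies the symmetric normalization `D^(-1/2) (A + I) D^(-1/2)`, where `D` is the degree matrix of `A + I`; one layer then multiplies this normalized matrix by the node features and a learned weight matrix (plus a bias). The pure-Python sketch below computes only the normalization on a small dense adjacency matrix; `gcn_norm` is a hypothetical name and it assumes the input has no self-loops yet.

```python
import math


def gcn_norm(adj):
    """Symmetric GCN normalization D^-1/2 (A + I) D^-1/2 for a dense
    adjacency matrix given as a list of lists (assumes no existing self-loops)."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]  # degrees including the added self-loop
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]


# Path graph 0 - 1 - 2
adj = [[0, 1, 0],
       [1, 0, 1],
       [0, 1, 0]]
norm = gcn_norm(adj)
print([[round(v, 3) for v in row] for row in norm])
```

Dividing by the square root of both endpoint degrees keeps high-degree nodes from dominating the aggregated features.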

```python
class GCN(torch.nn.Module):
    ## Note:
    ## 1. You should use torch.nn.ModuleList for self.convs and self.bns
    ## 2. self.convs has num_layers GCNConv layers
    ## 3. self.bns has num_layers - 1 BatchNorm1d layers
    ## 4. You should use torch.nn.LogSoftmax for self.softmax
    ## 5. The parameters you can set for GCNConv include 'in_channels' and
    ##    'out_channels'. For more information please refer to the documentation:
    ##    https://pytorch-geometric.readthedocs.io/en/latest/modules/nn.html#torch_geometric.nn.conv.GCNConv
    ## 6. The only parameter you need to set for BatchNorm1d is 'num_features'
    def __init__(self, input_dim, hidden_dim, output_dim, num_layers,
                 dropout, return_embeds=False):
        super(GCN, self).__init__()

        # A list of GCNConv layers: num_layers - 1 hidden layers plus one output layer
        self.convs = torch.nn.ModuleList()
        for i in range(num_layers - 1):
            self.convs.append(GCNConv(input_dim, hidden_dim))
            input_dim = hidden_dim
        # input_dim equals hidden_dim here unless num_layers == 1
        self.convs.append(GCNConv(input_dim, output_dim))

        # A list of 1D batch normalization layers, one after each hidden GCNConv
        self.bns = torch.nn.ModuleList()
        for i in range(num_layers - 1):
            self.bns.append(torch.nn.BatchNorm1d(hidden_dim))

        # The log softmax layer, applied over the class dimension
        self.softmax = torch.nn.LogSoftmax(dim=1)

        # Probability of an element getting zeroed
        self.dropout = dropout

        # Skip classification layer and return node embeddings
        self.return_embeds = return_embeds

    def reset_parameters(self):
        for conv in self.convs:
            conv.reset_parameters()
        for bn in self.bns:
            bn.reset_parameters()

    ## Note:
    ## 1. Construct the network as shown in the figure
    ## 2. torch.nn.functional.relu and torch.nn.functional.dropout are useful
    ##    For more information please refer to the documentation:
    ##    https://pytorch.org/docs/stable/nn.functional.html
    ## 3. Don't forget to set F.dropout training to self.training
    ## 4. If return_embeds is True, then skip the last softmax layer
    def forward(self, x, adj_t):
        # Hidden layers: GCNConv -> BatchNorm -> ReLU -> Dropout
        for layer in range(len(self.convs) - 1):
            x = self.convs[layer](x, adj_t)
            x = self.bns[layer](x)
            x = F.relu(x)
            x = F.dropout(x, p=self.dropout, training=self.training)
        # Output layer
        out = self.convs[-1](x, adj_t)
        if not self.return_embeds:
            out = self.softmax(out)
        return out
```

3.3 Training and evaluation
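The node classifier ends with `LogSoftmax` and is trained with `F.nll_loss`; together they compute the usual cross-entropy loss, the negative log-probability of each node's true class averaged over nodes. A pure-Python sketch of both steps, using the log-sum-exp trick for numerical stability (function names mirror the PyTorch ones but are standalone illustrations):

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax of one row of logits."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]


def nll_loss(log_probs, targets):
    """Mean negative log-likelihood of the target class (like F.nll_loss with reduction='mean')."""
    return -sum(row[t] for row, t in zip(log_probs, targets)) / len(targets)


logits = [[2.0, 0.5, -1.0],
          [0.1, 0.2, 3.0]]
targets = [0, 2]
loss = nll_loss([log_softmax(row) for row in logits], targets)
print(round(loss, 4))
```

This is also why the training targets must be a 1-D vector of class indices, one per node.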

```python
def train(model, data, train_idx, optimizer, loss_fn):
    model.train()
    optimizer.zero_grad()
    out = model(data.x, data.adj_t)

    # Compute the loss on the training nodes only
    train_output = out[train_idx]
    train_label = data.y[train_idx, 0]
    loss = loss_fn(train_output, train_label)

    loss.backward()
    optimizer.step()
    return loss.item()

# Note the difference between data.y[train_idx] and data.y[train_idx, 0]:
# the former has shape [num_train, 1], the latter is the 1-D label vector
# [num_train] that F.nll_loss expects as its target
print(data.y[train_idx])
print(data.y[train_idx, 0])
```

```python
@torch.no_grad()
def test(model, data, split_idx, evaluator, save_model_results=False):
    model.eval()
    out = model(data.x, data.adj_t)
    # Predicted class per node, kept as shape [num_nodes, 1] to match data.y
    y_pred = out.argmax(dim=-1, keepdim=True)

    # Evaluate each split with the OGB Evaluator
    train_acc = evaluator.eval({
        'y_true': data.y[split_idx['train']],
        'y_pred': y_pred[split_idx['train']],
    })['acc']
    valid_acc = evaluator.eval({
        'y_true': data.y[split_idx['valid']],
        'y_pred': y_pred[split_idx['valid']],
    })['acc']
    test_acc = evaluator.eval({
        'y_true': data.y[split_idx['test']],
        'y_pred': y_pred[split_idx['test']],
    })['acc']

    return train_acc, valid_acc, test_acc
```

```python
args = {
    'device': device,
    'num_layers': 3,
    'hidden_dim': 256,
    'dropout': 0.5,
    'lr': 0.01,
    'epochs': 100,
}

model = GCN(data.num_features, args['hidden_dim'],
            dataset.num_classes, args['num_layers'],
            args['dropout']).to(device)
evaluator = Evaluator(name='ogbn-arxiv')

# Initialize the model parameters
model.reset_parameters()
optimizer = torch.optim.Adam(model.parameters(), lr=args['lr'])
# The model outputs log-probabilities, so the negative log-likelihood loss applies
loss_fn = F.nll_loss

for epoch in range(1, args["epochs"] + 1):
    loss = train(model, data, train_idx, optimizer, loss_fn)
    train_acc, valid_acc, test_acc = test(model, data, split_idx, evaluator)
    print(f'Epoch: {epoch:02d}, '
          f'Loss: {loss:.4f}, '
          f'Train: {100 * train_acc:.2f}%, '
          f'Valid: {100 * valid_acc:.2f}%, '
          f'Test: {100 * test_acc:.2f}%')
```

4. GNN graph property prediction (graph classification) on ogbg-molhiv

4.1 Loading and preprocessing the dataset
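For graph classification, PyG's `DataLoader` merges several small graphs into one large disconnected graph: node features are concatenated, each graph's `edge_index` is shifted by the number of nodes that precede it, and a `batch` vector records which graph each node belongs to. The pure-Python sketch below covers the edge offset and the `batch` vector only; `collate` is a hypothetical helper, not PyG's implementation.

```python
def collate(graphs):
    """Merge graphs given as (num_nodes, edge_index) pairs into one
    disconnected graph. Returns (edge_index, batch)."""
    sources, targets, batch = [], [], []
    offset = 0
    for graph_id, (num_nodes, (src, dst)) in enumerate(graphs):
        sources += [s + offset for s in src]  # shift node ids past earlier graphs
        targets += [d + offset for d in dst]
        batch += [graph_id] * num_nodes       # node -> graph assignment
        offset += num_nodes
    return [sources, targets], batch


g0 = (2, ([0, 1], [1, 0]))              # 2 nodes, one undirected edge
g1 = (3, ([0, 1, 1, 2], [1, 0, 2, 1]))  # 3-node path
edge_index, batch = collate([g0, g1])
print(edge_index)
print(batch)
```

The `batch` vector is what the pooling step later uses to regroup node embeddings per graph.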

```python
from ogb.graphproppred import PygGraphPropPredDataset, Evaluator
# PyG >= 2.0 provides DataLoader in torch_geometric.loader
# (older releases used torch_geometric.data.DataLoader)
from torch_geometric.loader import DataLoader
from tqdm.notebook import tqdm

# PygGraphPropPredDataset loads graph property prediction (graph classification) datasets
dataset = PygGraphPropPredDataset(name='ogbg-molhiv')
split_idx = dataset.get_idx_split()

# DataLoaders for the three splits; only the training loader shuffles
train_loader = DataLoader(dataset[split_idx["train"]], batch_size=32, shuffle=True, num_workers=0)
valid_loader = DataLoader(dataset[split_idx["valid"]], batch_size=32, shuffle=False, num_workers=0)
test_loader = DataLoader(dataset[split_idx["test"]], batch_size=32, shuffle=False, num_workers=0)
```

4.2 GCN Model
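`global_mean_pool` turns node embeddings into one embedding per graph by averaging the rows that share the same `batch` id. A pure-Python sketch of that reduction (`global_mean_pool_sketch` is a hypothetical name; graphs with no nodes stay all-zero):

```python
def global_mean_pool_sketch(x, batch, num_graphs):
    """Average node embeddings per graph. x: list of feature rows,
    batch[i]: graph id of node i."""
    dim = len(x[0])
    sums = [[0.0] * dim for _ in range(num_graphs)]
    counts = [0] * num_graphs
    for row, g in zip(x, batch):
        counts[g] += 1
        for k in range(dim):
            sums[g][k] += row[k]
    return [[s / counts[g] for s in sums[g]] if counts[g] else sums[g]
            for g in range(num_graphs)]


x = [[1.0, 2.0], [3.0, 4.0], [10.0, 0.0], [20.0, 0.0], [30.0, 6.0]]
batch = [0, 0, 1, 1, 1]
pooled = global_mean_pool_sketch(x, batch, num_graphs=2)
print(pooled)
```

Mean pooling makes the graph embedding independent of graph size; `global_add_pool` (also imported in the model code) would sum instead.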

```python
from ogb.graphproppred.mol_encoder import AtomEncoder
from torch_geometric.nn import global_add_pool, global_mean_pool

class GCN_Graph(torch.nn.Module):
    def __init__(self, hidden_dim, output_dim, num_layers, dropout):
        super(GCN_Graph, self).__init__()

        # Encode the categorical atom features into hidden_dim-dimensional embeddings
        self.node_encoder = AtomEncoder(hidden_dim)

        # Node-level GCN that returns node embeddings (no log softmax)
        self.gnn_node = GCN(hidden_dim, hidden_dim,
                            hidden_dim, num_layers, dropout, return_embeds=True)

        # Global pooling: average the node embeddings of each graph
        self.pool = global_mean_pool

        # Graph-level prediction head
        self.linear = torch.nn.Linear(hidden_dim, output_dim)

    def reset_parameters(self):
        self.gnn_node.reset_parameters()
        self.linear.reset_parameters()

    def forward(self, batched_data):
        x, edge_index, batch = batched_data.x, batched_data.edge_index, batched_data.batch
        embed = self.node_encoder(x)
        out = self.gnn_node(embed, edge_index)
        out = self.pool(out, batch)
        out = self.linear(out)
        return out
```

4.3 Training and evaluation
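The training loop masks labels with `batch.y == batch.y`, which is false only for NaN (some OGB molecular datasets mark missing labels that way; the mask is a no-op when every label is present), and then applies `BCEWithLogitsLoss`, which fuses the sigmoid with binary cross-entropy. A pure-Python sketch of the masked loss using the standard stable form; `masked_bce_with_logits` is a hypothetical helper and the numbers are made up:

```python
import math


def masked_bce_with_logits(logits, labels):
    """Mean binary cross-entropy over entries whose label is not NaN.
    Uses the stable form max(z, 0) - z*y + log(1 + exp(-|z|))."""
    total, count = 0.0, 0
    for z, y in zip(logits, labels):
        if y != y:  # NaN check: NaN is the only value not equal to itself
            continue
        total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
        count += 1
    if count == 0:
        raise ValueError("no labeled entries")
    return total / count


logits = [2.0, -1.0, 0.5, 3.0]
labels = [1.0, 0.0, float('nan'), 1.0]
print(round(masked_bce_with_logits(logits, labels), 4))
```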

```python
def train(model, device, data_loader, optimizer, loss_fn):
    model.train()
    for step, batch in enumerate(tqdm(data_loader, desc="Iteration")):
        batch = batch.to(device)
        # Skip degenerate batches: a single node, or nodes from only one graph
        if batch.x.shape[0] == 1 or batch.batch[-1] == 0:
            continue

        # Mask out unlabeled entries: NaN labels fail the y == y test
        is_labeled = batch.y == batch.y

        optimizer.zero_grad()
        op = model(batch)
        train_op = op[is_labeled]
        train_labels = batch.y[is_labeled].view(-1)
        loss = loss_fn(train_op.float(), train_labels.float())
        loss.backward()
        optimizer.step()

    return loss.item()
```

```python
def eval(model, device, loader, evaluator):
    model.eval()
    y_true = []
    y_pred = []

    for step, batch in enumerate(tqdm(loader, desc="Iteration")):
        batch = batch.to(device)
        if batch.x.shape[0] == 1:
            continue
        with torch.no_grad():
            pred = model(batch)
        y_true.append(batch.y.view(pred.shape).detach().cpu())
        y_pred.append(pred.detach().cpu())

    y_true = torch.cat(y_true, dim=0).numpy()
    y_pred = torch.cat(y_pred, dim=0).numpy()

    input_dict = {"y_true": y_true, "y_pred": y_pred}
    result = evaluator.eval(input_dict)
    return result
```

```python
args = {
    'device': device,
    'num_layers': 5,
    'hidden_dim': 256,
    'dropout': 0.5,
    'lr': 0.001,
    'epochs': 30,
}

model = GCN_Graph(args['hidden_dim'],
                  dataset.num_tasks, args['num_layers'],
                  args['dropout']).to(device)
evaluator = Evaluator(name='ogbg-molhiv')

model.reset_parameters()
optimizer = torch.optim.Adam(model.parameters(), lr=args['lr'])
# The model outputs raw logits; BCEWithLogitsLoss applies the sigmoid internally
loss_fn = torch.nn.BCEWithLogitsLoss()

for epoch in range(1, 1 + args["epochs"]):
    print('Training...')
    loss = train(model, device, train_loader, optimizer, loss_fn)

    print('Evaluating...')
    train_result = eval(model, device, train_loader, evaluator)
    val_result = eval(model, device, valid_loader, evaluator)
    test_result = eval(model, device, test_loader, evaluator)

    # For ogbg-molhiv, dataset.eval_metric is 'rocauc', so these are ROC-AUC scores, not accuracies
    train_acc, valid_acc, test_acc = (train_result[dataset.eval_metric],
                                      val_result[dataset.eval_metric],
                                      test_result[dataset.eval_metric])
    print(f'Epoch: {epoch:02d}, '
          f'Loss: {loss:.4f}, '
          f'Train: {100 * train_acc:.2f}%, '
          f'Valid: {100 * valid_acc:.2f}%, '
          f'Test: {100 * test_acc:.2f}%')
```
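Because the ogbg-molhiv metric is ROC-AUC, the "Train/Valid/Test" percentages above measure ranking quality rather than accuracy. ROC-AUC equals the probability that a randomly chosen positive example is scored above a randomly chosen negative one, with ties counting one half. Since only the ordering of scores matters, raw logits work as scores. A pure-Python O(P·N) sketch of that definition (a hypothetical `roc_auc` helper, not OGB's implementation):

```python
def roc_auc(y_true, scores):
    """Pairwise ROC-AUC: P(score of a positive > score of a negative),
    counting ties as 1/2. Requires at least one positive and one negative."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("ROC-AUC needs both positive and negative labels")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


y_true = [1, 0, 1, 0, 0]
scores = [0.9, 0.3, 0.4, 0.4, 0.1]
print(roc_auc(y_true, scores))
```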