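Contents

1. Create the PeerConnectionFactory

Initialization

Building the object

2. Create the AudioDeviceModule

AudioDeviceModule

JavaAudioDeviceModule

Building the object

setAudioAttributes

setAudioFormat

setAudioSource

Create the video capture objects

Create the VideoSource

Create the VideoCapturer

Create the VideoTrack

Play the video

Switch between front and back cameras

Don't forget to request permissions

Complete code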

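This series of articles introduces WebRTC and ends with a peer-to-peer audio/video calling app built on it. This first article covers the basics and uses WebRTC to display video captured locally from the camera. The complete code is provided at the end. If you do not have the Android WebRTC source yet, follow me and send me a private message saying "Android WebRTC source" and I will share it; once you have it, import it into your project as a module.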

1. Create the PeerConnectionFactory

PeerConnectionFactory is the class that creates, configures and manages everything else; it is the starting point for using WebRTC.

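Initialization

PeerConnectionFactory.initialize(InitializationOptions.builder(context)
    // .setFieldTrials("xiongFieldTrials") // enable experimental features (field trials)
    // .setNativeLibraryName("jingle_peerconnection_so") // name of the native library; optional, but if set it must match the actual library name
    .setEnableInternalTracer(true) // enable the internal tracer, which records diagnostic data
    .createInitializationOptions())

This method must be called at least once before WebRTC is used. Its main purpose is to load and initialize the native WebRTC library.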
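
Because initialization only needs to happen once, it can help to put it behind a small guard. The following is a minimal sketch in plain Kotlin, not part of the WebRTC API: `InitOnce` and its `action` parameter are hypothetical names, and `action` stands in for the real `PeerConnectionFactory.initialize(...)` call.

```kotlin
import java.util.concurrent.atomic.AtomicBoolean

/**
 * Runs an initialization action at most once, even if ensure() is called
 * from several threads. `action` is a placeholder for the real
 * PeerConnectionFactory.initialize(...) call.
 */
class InitOnce(private val action: () -> Unit) {
    private val started = AtomicBoolean(false)

    /** Returns true if this call ran the action, false if it had already been started. */
    fun ensure(): Boolean {
        // compareAndSet succeeds for exactly one caller.
        if (!started.compareAndSet(false, true)) return false
        action()
        return true
    }
}
```

With a guard like this, every entry point can call `ensure()` without tracking a separate `isInitialized` flag by hand.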

Building the object

peerConnectionFactory = PeerConnectionFactory.builder()
    .setVideoDecoderFactory(DefaultVideoDecoderFactory(eglContext)) // video decoder factory
    .setVideoEncoderFactory(DefaultVideoEncoderFactory(eglContext, false, true)) // video encoder factory
    .setAudioDeviceModule(adm)
    .setOptions(options)
    .createPeerConnectionFactory()

Building the object mainly sets the encoder and decoder factories, the audio device module, and some extra options. Codecs are outside the scope of this article, and the audio device module is covered later. So what does setOptions do? It takes an Options object, which has three public fields:

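public int networkIgnoreMask;
public boolean disableEncryption;
public boolean disableNetworkMonitor;

networkIgnoreMask: a bitmask of network adapter types to ignore. WebRTC will not use an ignored network type for communication. The mask is built from the following values:

- ADAPTER_TYPE_UNKNOWN = 0: unknown adapter type.
- ADAPTER_TYPE_ETHERNET = 1 << 0: Ethernet adapter.
- ADAPTER_TYPE_WIFI = 1 << 1: Wi-Fi adapter.
- ADAPTER_TYPE_CELLULAR = 1 << 2: cellular (mobile data) adapter.
- ADAPTER_TYPE_VPN = 1 << 3: VPN adapter.
- ADAPTER_TYPE_LOOPBACK = 1 << 4: loopback adapter.
- ADAPTER_TYPE_ANY = 1 << 5: the wildcard "any" adapter type.

Since the values are bit flags, several of them can be combined with a bitwise OR, and a mask of 0 ignores no adapter type.

disableEncryption: true disables data encryption.

disableNetworkMonitor: true disables the network monitor.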
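
To make the bitmask concrete, here is a small sketch in plain Kotlin. The constants simply mirror the values listed above (in real code you would use the constants on the Options class), and `ignoreMaskOf` and `isIgnored` are hypothetical helper names introduced only for illustration.

```kotlin
// Values copied from the list above, declared locally so the sketch is self-contained.
val ADAPTER_TYPE_ETHERNET = 1 shl 0
val ADAPTER_TYPE_WIFI = 1 shl 1
val ADAPTER_TYPE_CELLULAR = 1 shl 2
val ADAPTER_TYPE_VPN = 1 shl 3
val ADAPTER_TYPE_LOOPBACK = 1 shl 4

/** Combines adapter types into one ignore mask with bitwise OR. */
fun ignoreMaskOf(vararg types: Int): Int = types.fold(0) { mask, type -> mask or type }

/** True if the given adapter type has its bit set in the mask. */
fun isIgnored(mask: Int, type: Int): Boolean = type != 0 && (mask and type) != 0
```

For example, `ignoreMaskOf(ADAPTER_TYPE_VPN, ADAPTER_TYPE_LOOPBACK)` evaluates to 24 (8 + 16), and the result could then be assigned to `options.networkIgnoreMask`.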

2. Create the AudioDeviceModule

AudioDeviceModule

AudioDeviceModule is what WebRTC uses to manage and configure audio. When the PeerConnectionFactory was created above, this object was passed to setAudioDeviceModule. AudioDeviceModule is an interface with four methods:

/**
 * Returns a C++ pointer to a webrtc::AudioDeviceModule. Caller does _not_ take ownership and
 * lifetime is handled through the release() call.
 */
long getNativeAudioDeviceModulePointer();

/**
 * Release resources for this AudioDeviceModule, including native resources. The object should not
 * be used after this call.
 */
void release();

/** Control muting/unmuting the speaker. */
void setSpeakerMute(boolean mute);

/** Control muting/unmuting the microphone. */
void setMicrophoneMute(boolean mute);

- getNativeAudioDeviceModulePointer: returns a pointer to the underlying C++ webrtc::AudioDeviceModule.
- release: releases resources; the object must not be used afterwards.
- setSpeakerMute: mutes or unmutes the speaker.
- setMicrophoneMute: mutes or unmutes the microphone.

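JavaAudioDeviceModule

JavaAudioDeviceModule is the implementation of AudioDeviceModule. It wraps a WebRtcAudioRecord object for audio input and a WebRtcAudioTrack object for audio output, along with settings such as sample rate, audio format and channel count. The relationships between the audio classes, and WebRtcAudioRecord in particular, are described in more detail in another article of mine: "How to play a local audio file with WebRTC".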

Building the object

adm = JavaAudioDeviceModule.builder(context)
    .setAudioAttributes(audioAttributes) // audio attributes
    .setAudioFormat(AudioFormat.ENCODING_PCM_16BIT) // audio sample format
    .setAudioSource(MediaRecorder.AudioSource.VOICE_COMMUNICATION) // audio recording source
    .setSampleRate(44100) // audio sample rate in Hz
    .setAudioRecordErrorCallback(audioRecordErrorCallback) // audio record error callback
    .setAudioRecordStateCallback(audioRecordStateCallback) // audio record state callback
    .setAudioTrackErrorCallback(audioTrackErrorCallback) // audio track error callback
    .setAudioTrackStateCallback(audioTrackStateCallback) // audio track state callback
    .setSamplesReadyCallback(samplesReadyCallback) // onWebRtcAudioRecordSamplesReady is called each time a buffer of recorded samples is ready
    .setUseHardwareAcousticEchoCanceler(true) // use the hardware acoustic echo canceler
    .setUseHardwareNoiseSuppressor(true) // use the hardware noise suppressor
    .setUseStereoInput(false) // whether to record in stereo
    .setUseStereoOutput(false) // whether to play back in stereo
    .createAudioDeviceModule()

adm is the AudioDeviceModule object. Let's look at the builder methods in turn:

setAudioAttributes

This method takes an AudioAttributes object, which eventually reaches the WebRtcAudioTrack constructor. AudioAttributes describes an audio stream. It can be created as follows:

val audioAttributes = AudioAttributes.Builder()
    .setFlags(AudioAttributes.FLAG_AUDIBILITY_ENFORCED) // flag that enforces audibility
    .setContentType(AudioAttributes.CONTENT_TYPE_SPEECH) // content type, which affects output
    .setUsage(AudioAttributes.USAGE_VOICE_COMMUNICATION) // usage scenario
    .build()

setUsage sets the usage scenario. Common values include:

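- USAGE_MEDIA: media playback.
- USAGE_VOICE_COMMUNICATION: voice communication.
- USAGE_ALARM: alarms.
- USAGE_NOTIFICATION: notifications.
- USAGE_NOTIFICATION_RINGTONE: telephony ringtones.
- USAGE_NOTIFICATION_COMMUNICATION_REQUEST: a request to start a call or another form of communication.
- USAGE_NOTIFICATION_COMMUNICATION_INSTANT: notifications for instant communication.
- USAGE_NOTIFICATION_COMMUNICATION_DELAYED: notifications for non-immediate communication.
- USAGE_ASSISTANCE_ACCESSIBILITY: accessibility features such as screen readers.

This is not the focus of the article, so the remaining options are not covered here. Interested readers can explore them on their own.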

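setAudioFormat

Sets the audio sample format. ENCODING_PCM_16BIT means each sample has a bit depth of 16 bits. The larger the bit depth of a sample, the finer its amplitude resolution and the better the audio quality.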
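
Bit depth also determines how much data the recorder produces. The sketch below is plain arithmetic, not WebRTC code: the function names are hypothetical, and the 10 ms buffer length used in the example is an assumption chosen for illustration.

```kotlin
/** Bytes of uncompressed PCM audio produced per second. */
fun pcmBytesPerSecond(sampleRateHz: Int, channels: Int, bitsPerSample: Int): Int =
    sampleRateHz * channels * (bitsPerSample / 8)

/** Bytes in one buffer that holds `bufferMs` milliseconds of PCM audio. */
fun pcmBytesPerBuffer(sampleRateHz: Int, channels: Int, bitsPerSample: Int, bufferMs: Int): Int =
    pcmBytesPerSecond(sampleRateHz, channels, bitsPerSample) * bufferMs / 1000
```

With the settings used in this article (44100 Hz, mono, 16 bits), `pcmBytesPerSecond(44100, 1, 16)` is 88200 bytes, and a 10 ms buffer holds 882 bytes.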

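setAudioSource

Sets the audio source. The value must be one of those defined in android.media.MediaRecorder.AudioSource, for example:

- MediaRecorder.AudioSource.MIC: the microphone.
- MediaRecorder.AudioSource.CAMCORDER: microphone audio tuned for video recording.
- MediaRecorder.AudioSource.DEFAULT: the default audio source.
- MediaRecorder.AudioSource.VOICE_RECOGNITION: microphone audio tuned for speech recognition.
- MediaRecorder.AudioSource.VOICE_COMMUNICATION: real-time communication such as VoIP calls.
- MediaRecorder.AudioSource.REMOTE_SUBMIX: captures the output stream of the remote submix (requires API level 19 or above).
- MediaRecorder.AudioSource.VOICE_DOWNLINK: the received (downlink) audio of a phone call.
- MediaRecorder.AudioSource.VOICE_UPLINK: the transmitted (uplink) audio of a phone call.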

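Create the video capture objects

Create the VideoSource

videoSource = peerConnectionFactory.createVideoSource(false) // the isScreencast parameter indicates whether this is a screen capture

The VideoSource object is the bridge between the VideoCapturer and the VideoTrack.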

Create the VideoCapturer

VideoCapturer is the interface that every video capturing class must implement. It has six methods:

/**
 * This function is used to initialize the camera thread, the android application context, and the
 * capture observer. It will be called only once and before any startCapture() request. The
 * camera thread is guaranteed to be valid until dispose() is called. If the VideoCapturer wants
 * to deliver texture frames, it should do this by rendering on the SurfaceTexture in
 * {@code surfaceTextureHelper}, register itself as a listener, and forward the frames to
 * CapturerObserver.onFrameCaptured(). The caller still has ownership of {@code
 * surfaceTextureHelper} and is responsible for making sure surfaceTextureHelper.dispose() is
 * called. This also means that the caller can reuse the SurfaceTextureHelper to initialize a new
 * VideoCapturer once the previous VideoCapturer has been disposed.
 */
void initialize(SurfaceTextureHelper surfaceTextureHelper, Context applicationContext,
    CapturerObserver capturerObserver);

/**
 * Start capturing frames in a format that is as close as possible to {@code width x height} and
 * {@code framerate}.
 */
void startCapture(int width, int height, int framerate);

/**
 * Stop capturing. This function should block until capture is actually stopped.
 */
void stopCapture() throws InterruptedException;

void changeCaptureFormat(int width, int height, int framerate);

/**
 * Perform any final cleanup here. No more capturing will be done after this call.
 */
void dispose();

/**
 * @return true if-and-only-if this is a screen capturer.
 */
boolean isScreencast();

- initialize: initializes the capturer; it must be called before capturing starts.
- startCapture: starts capturing.
- stopCapture: stops capturing.
- changeCaptureFormat: changes the capture parameters (video width, height and frame rate).
- dispose: releases resources.
- isScreencast: whether this is a screen capturer.

ScreenCapturerAndroid, FileVideoCapturer and CameraCapturer are implementations of VideoCapturer. Here we use CameraCapturer, which captures video from the camera. To work with CameraCapturer we also need CameraEnumerator, which lists the cameras on the device and creates the CameraCapturer.

// CameraEnumerator lists the cameras on the device and creates the camera capturer
val cameraEnumerator: CameraEnumerator = if (Camera2Enumerator.isSupported(context)) {
    Camera2Enumerator(context)
} else {
    Camera1Enumerator()
}

for (name in cameraEnumerator.deviceNames) {
    if (cameraEnumerator.isFrontFacing(name)) frontDeviceName = name // front camera
    if (cameraEnumerator.isBackFacing(name)) backDeviceName = name // back camera
}

// Note: !! throws if the device has no front camera
videoCapturer = cameraEnumerator.createCapturer(frontDeviceName!!, cameraEventsHandler)
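
The selection logic above can be written without `!!` so that a device lacking a front camera falls back to the back camera. The sketch below is plain Kotlin under stated assumptions: `CameraLister`, `CameraNames` and `findCameras` are hypothetical names, and `CameraLister` only imitates the three CameraEnumerator members used in this article so the code compiles on its own. Unlike the loop above, it keeps the first matching camera rather than the last.

```kotlin
/** Local stand-in for the parts of CameraEnumerator used here. */
interface CameraLister {
    val deviceNames: List<String>
    fun isFrontFacing(name: String): Boolean
    fun isBackFacing(name: String): Boolean
}

/** Names of the front and back cameras, either of which may be missing. */
data class CameraNames(val front: String?, val back: String?) {
    /** Camera to open first: front if present, otherwise back, otherwise null. */
    val preferred: String? get() = front ?: back
}

/** Picks the first front-facing and the first back-facing camera, if any. */
fun findCameras(lister: CameraLister): CameraNames {
    val names = lister.deviceNames
    return CameraNames(
        front = names.firstOrNull { lister.isFrontFacing(it) },
        back = names.firstOrNull { lister.isBackFacing(it) }
    )
}
```

The caller can then check `preferred` for null and report a clear error instead of crashing when no camera is available.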

Create the VideoTrack

Initialize the capturer and start capturing, then create the VideoTrack from the VideoSource.

videoCapturer.initialize(surfaceTextureHelper, context, videoSource.capturerObserver)
videoCapturer.startCapture(HD_VIDEO_WIDTH, HD_VIDEO_HEIGHT, FRAME_RATE)
videoTrack = peerConnectionFactory.createVideoTrack("xiong video track", videoSource)

Play the video

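The WebRTC view for displaying video is SurfaceViewRenderer, which extends SurfaceView. The actual rendering is done by the EglRenderer class. SurfaceViewRenderer must be initialized with init before it is used.

surfaceViewRenderer.init(eglContext, null)
surfaceViewRenderer.setScalingType(RendererCommon.ScalingType.SCALE_ASPECT_FIT) // video scaling type
surfaceViewRenderer.setMirror(isLocal) // whether to mirror the image

Then call addSink on the videoTrack to start displaying the video.

videoTrack.addSink(localRenderer)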
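
To illustrate what an "aspect fit" scaling type means, the sketch below computes the largest size that preserves the video's aspect ratio while fitting entirely inside the view. It is plain Kotlin written for illustration, not WebRTC's own implementation, and `Size` and `aspectFit` are hypothetical names.

```kotlin
data class Size(val width: Int, val height: Int)

/** Largest size with the video's aspect ratio that fits entirely inside the view. */
fun aspectFit(video: Size, view: Size): Size =
    if (video.width.toLong() * view.height > view.width.toLong() * video.height) {
        // The video is relatively wider than the view, so width is the limiting dimension.
        Size(view.width, (view.width.toLong() * video.height / video.width).toInt())
    } else {
        // The video is relatively taller (or the same shape), so height is the limiting dimension.
        Size((view.height.toLong() * video.width / video.height).toInt(), view.height)
    }
```

For the 320x240 capture size used in this article and a 1080x1920 portrait view, `aspectFit` returns 1080x810, leaving empty bands above and below the picture.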

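Switch between front and back cameras

cameraVideoCapturer.switchCamera(cameraSwitchHandler, deviceName)

deviceName is the name of the camera to switch to. We obtained the names of the front and back cameras when creating the VideoCapturer above, so pass one of them here.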
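
Since the switch is reported through a callback, it is safer to update the "which camera is active" state only after the switch succeeds. The sketch below models that idea in plain Kotlin. `SwitchCallback` and `CameraToggle` are hypothetical names: `SwitchCallback` imitates the switch handler so the code is self-contained, and `requestSwitch` stands in for the `switchCamera(handler, deviceName)` call.

```kotlin
/** Local stand-in for the camera switch handler. */
interface SwitchCallback {
    fun onDone(isFrontCamera: Boolean)
    fun onError(message: String?)
}

/**
 * Tracks which camera is active and changes that state only after a switch succeeds.
 * `requestSwitch` is a placeholder for cameraVideoCapturer.switchCamera(handler, deviceName).
 */
class CameraToggle(
    private val frontName: String,
    private val backName: String,
    private val requestSwitch: (SwitchCallback, String) -> Unit
) {
    var isFront = true
        private set

    fun toggle() {
        val target = if (isFront) backName else frontName
        requestSwitch(object : SwitchCallback {
            override fun onDone(isFrontCamera: Boolean) {
                isFront = isFrontCamera
            }

            override fun onError(message: String?) {
                // Keep the previous state: the camera did not change.
            }
        }, target)
    }
}
```

If the switch fails, `isFront` still matches the camera that is actually in use, so the next `toggle()` call requests the correct target.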

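Don't forget to request permissions

Using WebRTC requires the relevant permissions. For this article we only need two: CAMERA and RECORD_AUDIO.

First add the following to AndroidManifest.xml:

<uses-permission android:name="android.permission.CAMERA" />
<uses-permission android:name="android.permission.RECORD_AUDIO" />

Then request them at runtime: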

if (ContextCompat.checkSelfPermission(this, Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED ||
        ContextCompat.checkSelfPermission(this, Manifest.permission.RECORD_AUDIO) != PackageManager.PERMISSION_GRANTED) {
    ActivityCompat.requestPermissions(this, new String[] {Manifest.permission.CAMERA, Manifest.permission.RECORD_AUDIO}, 0x01);
}

Complete code

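import android.content.Context
import android.media.AudioAttributes
import android.media.AudioFormat
import android.media.MediaRecorder
import android.util.Log
import org.webrtc.*
import org.webrtc.CameraVideoCapturer.CameraEventsHandler
import org.webrtc.CameraVideoCapturer.CameraSwitchHandler
import org.webrtc.PeerConnectionFactory.InitializationOptions
import org.webrtc.audio.AudioDeviceModule
import org.webrtc.audio.JavaAudioDeviceModule
import org.webrtc.audio.JavaAudioDeviceModule.*

class MediaClient(context: Context) {
    private lateinit var peerConnectionFactory: PeerConnectionFactory
    private lateinit var videoSource: VideoSource
    private lateinit var videoTrack: VideoTrack
    private lateinit var videoCapturer: VideoCapturer
    private lateinit var audioSource: AudioSource
    private lateinit var audioTrack: AudioTrack
    private lateinit var adm: AudioDeviceModule
    private var eglContext: EglBase.Context = EglBase.create().eglBaseContext
    private var context = context.applicationContext // use the application context to avoid leaking an Activity
    private var frontDeviceName: String? = null // name of the front camera
    private var backDeviceName: String? = null // name of the back camera
    private val HD_VIDEO_WIDTH = 320 // video width
    private val HD_VIDEO_HEIGHT = 240 // video height
    private val FRAME_RATE = 30 // video frame rate
    private var isInitialized = false // whether initMediaClient has run; it must run before anything else is used
    private var isFront = true // whether the front camera is active, used when switching cameras

    companion object {
        private const val TAG = "MediaClientLog"
    }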

    fun initMediaClient() {
        isInitialized = true
        PeerConnectionFactory.initialize(InitializationOptions.builder(context)
            // .setFieldTrials("xiongFieldTrials") // enable experimental features (field trials)
            // .setNativeLibraryName("jingle_peerconnection_so") // name of the native library; optional, but if set it must match the actual library name
            .setEnableInternalTracer(true) // enable the internal tracer, which records diagnostic data
            .createInitializationOptions())

        createAdm() // create the audio device module

        val options = PeerConnectionFactory.Options()
        options.networkIgnoreMask = 0x8 // network types to ignore; ignored types are never used. 0x8 is ADAPTER_TYPE_VPN
        options.disableEncryption = true // no data encryption
        options.disableNetworkMonitor = false // keep the network monitor enabled

        peerConnectionFactory = PeerConnectionFactory.builder()
            .setVideoDecoderFactory(DefaultVideoDecoderFactory(eglContext)) // video decoder factory
            .setVideoEncoderFactory(DefaultVideoEncoderFactory(eglContext, false, true)) // video encoder factory
            .setAudioDeviceModule(adm)
            .setOptions(options)
            .createPeerConnectionFactory()

        initAVResource() // create the audio and video objects
    }

    private fun createAdm() {
        val audioAttributes = AudioAttributes.Builder()
            .setFlags(AudioAttributes.FLAG_AUDIBILITY_ENFORCED) // flag that enforces audibility
            .setContentType(AudioAttributes.CONTENT_TYPE_SPEECH) // content type, which affects output
            .setUsage(AudioAttributes.USAGE_VOICE_COMMUNICATION) // usage scenario
            .build()

        val audioRecordErrorCallback = object : AudioRecordErrorCallback {
            override fun onWebRtcAudioRecordInitError(errorMessage: String?) {
                Log.e(TAG, "onWebRtcAudioRecordInitError: $errorMessage")
            }

            override fun onWebRtcAudioRecordStartError(
                errorCode: JavaAudioDeviceModule.AudioRecordStartErrorCode?,
                errorMessage: String?
            ) {
                Log.e(TAG, "onWebRtcAudioRecordStartError: $errorCode, $errorMessage")
            }

            override fun onWebRtcAudioRecordError(errorMessage: String?) {
                Log.e(TAG, "onWebRtcAudioRecordError: $errorMessage")
            }
        }

        val audioRecordStateCallback = object : AudioRecordStateCallback {
            override fun onWebRtcAudioRecordStart() {
                Log.d(TAG, "onWebRtcAudioRecordStart: ")
            }

            override fun onWebRtcAudioRecordStop() {
                Log.d(TAG, "onWebRtcAudioRecordStop: ")
            }
        }

        val audioTrackErrorCallback = object : AudioTrackErrorCallback {
            override fun onWebRtcAudioTrackInitError(errorMessage: String?) {
                Log.e(TAG, "onWebRtcAudioTrackInitError: $errorMessage")
            }

            override fun onWebRtcAudioTrackStartError(
                errorCode: JavaAudioDeviceModule.AudioTrackStartErrorCode?,
                errorMessage: String?
            ) {
                Log.e(TAG, "onWebRtcAudioTrackStartError: $errorCode, $errorMessage")
            }

            override fun onWebRtcAudioTrackError(errorMessage: String?) {
                Log.e(TAG, "onWebRtcAudioTrackError: $errorMessage")
            }
        }

        val audioTrackStateCallback = object : AudioTrackStateCallback {
            override fun onWebRtcAudioTrackStart() {
                Log.d(TAG, "onWebRtcAudioTrackStart: ")
            }

            override fun onWebRtcAudioTrackStop() {
                Log.d(TAG, "onWebRtcAudioTrackStop: ")
            }
        }

        val samplesReadyCallback = object : SamplesReadyCallback {
            override fun onWebRtcAudioRecordSamplesReady(samples: JavaAudioDeviceModule.AudioSamples?) {
                Log.d(TAG, "onWebRtcAudioRecordSamplesReady: ")
            }
        }

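        adm = JavaAudioDeviceModule.builder(context)
            .setAudioAttributes(audioAttributes) // audio attributes
            .setAudioFormat(AudioFormat.ENCODING_PCM_16BIT) // audio sample format
            .setAudioSource(MediaRecorder.AudioSource.VOICE_COMMUNICATION) // audio recording source
            .setSampleRate(44100) // audio sample rate in Hz
            .setAudioRecordErrorCallback(audioRecordErrorCallback) // audio record error callback
            .setAudioRecordStateCallback(audioRecordStateCallback) // audio record state callback
            .setAudioTrackErrorCallback(audioTrackErrorCallback) // audio track error callback
            .setAudioTrackStateCallback(audioTrackStateCallback) // audio track state callback
            .setSamplesReadyCallback(samplesReadyCallback) // onWebRtcAudioRecordSamplesReady is called each time a buffer of recorded samples is ready
            .setUseHardwareAcousticEchoCanceler(true) // use the hardware acoustic echo canceler
            .setUseHardwareNoiseSuppressor(true) // use the hardware noise suppressor
            .setUseStereoInput(false) // whether to record in stereo
            .setUseStereoOutput(false) // whether to play back in stereo
            .createAudioDeviceModule()
        // Do not call adm.release() here: the module is still passed to the
        // PeerConnectionFactory builder afterwards, and an AudioDeviceModule should
        // not be used after release(). Release it once it is no longer needed.
    }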

    private fun initAVResource() {
        val audioConstraints = MediaConstraints()
        audioConstraints.mandatory.add(MediaConstraints.KeyValuePair("googEchoCancellation", "true")) // echo cancellation
        audioConstraints.mandatory.add(MediaConstraints.KeyValuePair("googAutoGainControl", "true")) // automatic gain control
        audioConstraints.mandatory.add(MediaConstraints.KeyValuePair("googNoiseSuppression", "true")) // noise suppression
        audioConstraints.mandatory.add(MediaConstraints.KeyValuePair("googHighpassFilter", "true")) // high-pass filter
        audioSource = peerConnectionFactory.createAudioSource(audioConstraints)
        audioTrack = peerConnectionFactory.createAudioTrack("xiong audio track", audioSource)

        // CameraEnumerator lists the cameras on the device and creates the camera capturer
        val cameraEnumerator: CameraEnumerator = if (Camera2Enumerator.isSupported(context)) {
            Camera2Enumerator(context)
        } else {
            Camera1Enumerator()
        }

        for (name in cameraEnumerator.deviceNames) {
            if (cameraEnumerator.isFrontFacing(name)) frontDeviceName = name // front camera
            if (cameraEnumerator.isBackFacing(name)) backDeviceName = name // back camera
        }

        // Receives camera events
        val cameraEventsHandler = object : CameraEventsHandler {
            override fun onCameraError(errorDescription: String?) {
                Log.e(TAG, "onCameraError: $errorDescription")
            }

            override fun onCameraDisconnected() {
                Log.e(TAG, "onCameraDisconnected: ")
            }

            override fun onCameraFreezed(errorDescription: String?) {
                Log.e(TAG, "onCameraFreezed: $errorDescription")
            }

            override fun onCameraOpening(cameraName: String?) {
                Log.d(TAG, "onCameraOpening: $cameraName")
            }

            override fun onFirstFrameAvailable() {
                Log.d(TAG, "onFirstFrameAvailable: ")
            }

            override fun onCameraClosed() {
                Log.d(TAG, "onCameraClosed: ")
            }
        }

        // Note: !! throws if the device has no front camera
        videoCapturer = cameraEnumerator.createCapturer(frontDeviceName!!, cameraEventsHandler)
        videoSource = peerConnectionFactory.createVideoSource(false) // the isScreencast parameter indicates whether this is a screen capture
        val surfaceTextureHelper = SurfaceTextureHelper.create("surface thread", eglContext) // used to create video frames
        videoCapturer.initialize(surfaceTextureHelper, context, videoSource.capturerObserver)
        videoCapturer.startCapture(HD_VIDEO_WIDTH, HD_VIDEO_HEIGHT, FRAME_RATE)
        videoTrack = peerConnectionFactory.createVideoTrack("xiong video track", videoSource)
    }

    fun loadVideo(localRenderer: SurfaceViewRenderer) {
        if (!isInitialized) initMediaClient()
        videoTrack.addSink(localRenderer)
    }

    fun initRenderer(surfaceViewRenderer: SurfaceViewRenderer, isLocal: Boolean) {
        surfaceViewRenderer.init(eglContext, null)
        surfaceViewRenderer.setScalingType(RendererCommon.ScalingType.SCALE_ASPECT_FIT) // video scaling type
        surfaceViewRenderer.setMirror(isLocal) // whether to mirror the image
    }

    fun switchCamera() {
        if (!isInitialized) initMediaClient()
        val cameraVideoCapturer = videoCapturer as CameraVideoCapturer
        val cameraSwitchHandler = object : CameraSwitchHandler {
            override fun onCameraSwitchDone(isFrontCamera: Boolean) {
                Log.d(TAG, "onCameraSwitchDone: $isFrontCamera")
            }

            override fun onCameraSwitchError(errorDescription: String?) {
                Log.e(TAG, "onCameraSwitchError: $errorDescription")
            }
        }

        // Note: !! throws if the requested camera does not exist on this device
        if (isFront) {
            cameraVideoCapturer.switchCamera(cameraSwitchHandler, backDeviceName!!)
            isFront = false
        } else {
            cameraVideoCapturer.switchCamera(cameraSwitchHandler, frontDeviceName!!)
            isFront = true
        }
    }
}

In your own Activity, call initMediaClient first, then initRenderer, and finally loadVideo.

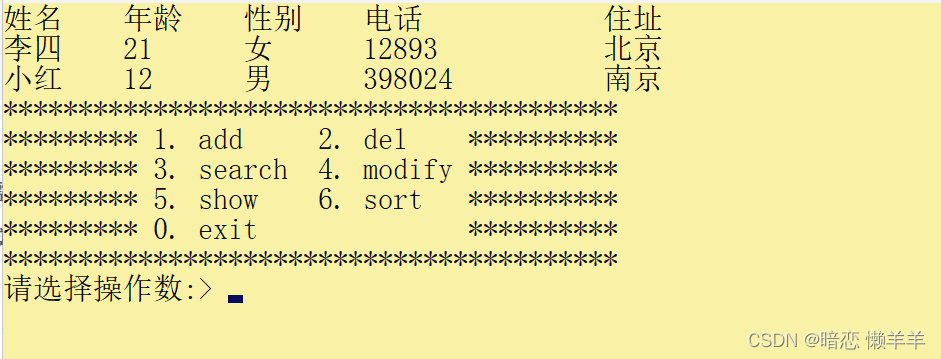

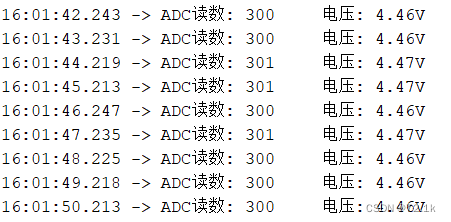

最终实现效果如下: