Compatibility here means compatibility across different CUDA versions and across device compute capabilities.
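Both values can be read at run time before choosing a code path. The following is a minimal sketch using the CUDA runtime API; the helper names `compute_capability` and `report_devices` are made up for this example and are not part of CUDA.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical helper: returns major * 10 + minor (e.g. 75 for 7.5),
// or -1 if the device cannot be queried (no GPU, bad index, ...).
int compute_capability(int device) {
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, device) != cudaSuccess) return -1;
    return prop.major * 10 + prop.minor;
}

// Hypothetical helper: prints the toolkit and runtime versions plus the
// compute capability of every visible device. Returns the device count.
int report_devices() {
    int runtime = 0, count = 0;
    cudaRuntimeGetVersion(&runtime);  // encoded as 1000 * major + 10 * minor
    std::printf("compiled against CUDART_VERSION %d, runtime reports %d\n",
                CUDART_VERSION, runtime);
    if (cudaGetDeviceCount(&count) != cudaSuccess) return 0;
    for (int d = 0; d < count; ++d) {
        std::printf("device %d: compute capability code %d\n",
                    d, compute_capability(d));
    }
    return count;
}
```

Calling `report_devices()` once at startup is usually enough to decide which of the features discussed in this post are available.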

shuffle

Documented in the C/C++ Language Extensions section.

For the new functions, see the CUDA 12.0 documentation

__shfl_sync, __shfl_up_sync, __shfl_down_sync, and __shfl_xor_sync exchange a variable between threads within a warp.

Supported by devices of compute capability 5.0 or higher.

Devices of compute capability 5.0 or higher support the functions with the _sync suffix.

Deprecation Notice: __shfl, __shfl_up, __shfl_down, and __shfl_xor have been deprecated in CUDA 9.0 for all devices.

Starting with CUDA 9.0, the old functions without the _sync suffix are deprecated (deprecated, not yet removed).

Removal Notice: When targeting devices with compute capability 7.x or higher, __shfl, __shfl_up, __shfl_down, and __shfl_xor are no longer available and their sync variants should be used instead.

Devices of compute capability 7.x or higher do not support the old functions; switch to the versions with the _sync suffix.

For the old functions, see the CUDA 8.0 documentation

__shfl, __shfl_up, __shfl_down, __shfl_xor exchange a variable between threads within a warp.

Supported by devices of compute capability 3.x or higher.

Supported on devices of compute capability 3.x or higher.
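One way to keep a single code base building against both old and new toolkits is to hide the choice behind a small wrapper. The sketch below is only an illustration: `shfl_down_compat`, `warp_sum_kernel`, and `run_warp_sum` are names invented here, and the version test via `CUDART_VERSION >= 9000` is an assumption about how one might select the variant. It sums the values 0..31 inside one full warp.

```cuda
#include <cuda_runtime.h>

// Hypothetical wrapper: use the _sync variant on CUDA 9.0+ and fall back
// to the legacy function only on older toolkits.
__device__ __forceinline__ int shfl_down_compat(int v, unsigned int delta) {
#if defined(CUDART_VERSION) && CUDART_VERSION >= 9000
    // Full mask: all 32 lanes of the warp take part in the exchange.
    return __shfl_down_sync(0xffffffffu, v, delta);
#else
    return __shfl_down(v, delta);
#endif
}

// Tree reduction inside one warp; lane 0 ends up with the total.
__global__ void warp_sum_kernel(const int* in, int* out) {
    int v = in[threadIdx.x];
    for (int offset = 16; offset > 0; offset >>= 1) {
        v += shfl_down_compat(v, offset);
    }
    if (threadIdx.x == 0) *out = v;
}

// Host driver: sums 0..31 with one warp. Returns -1 on any CUDA error.
int run_warp_sum() {
    int h_in[32];
    for (int i = 0; i < 32; ++i) h_in[i] = i;
    int *d_in = nullptr, *d_out = nullptr;
    int h_out = -1;
    if (cudaMalloc(&d_in, sizeof(h_in)) != cudaSuccess) return -1;
    if (cudaMalloc(&d_out, sizeof(int)) != cudaSuccess) {
        cudaFree(d_in);
        return -1;
    }
    cudaMemcpy(d_in, h_in, sizeof(h_in), cudaMemcpyHostToDevice);
    warp_sum_kernel<<<1, 32>>>(d_in, d_out);
    cudaError_t err = cudaGetLastError();  // catches launch failures
    if (err == cudaSuccess) {
        err = cudaMemcpy(&h_out, d_out, sizeof(int), cudaMemcpyDeviceToHost);
    }
    cudaFree(d_in);
    cudaFree(d_out);
    return err == cudaSuccess ? h_out : -1;
}
```

The kernel is launched with exactly 32 threads so that the full mask `0xffffffff` matches the lanes that actually execute the shuffle.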

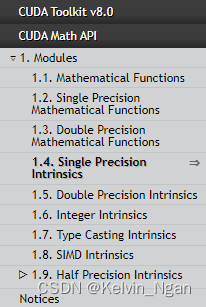

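Math library functions

Just look these up in the documentation when you need them, in the Mathematical Functions reference section. If a function is not listed there, it is not supported.

CUDA 12.0 list of math functions/intrinsics

CUDA 8.0 list of math functions/intrinsics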

Atomics

Documented in the C/C++ Language Extensions section.

CUDA 12.0 documentation

CUDA 8.0 documentation

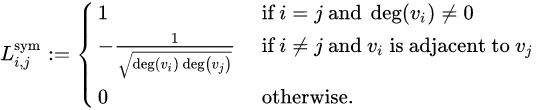

Reading the latest version of the documentation is enough. BTW, one small gripe: the new layout is harder to read than the old one.

atomicAdd()

The 32-bit floating-point version of atomicAdd() is only supported by devices of compute capability 2.x and higher.

atomicAdd() on 32-bit floats requires compute capability 2.x or higher.

The 64-bit floating-point version of atomicAdd() is only supported by devices of compute capability 6.x and higher.

atomicAdd() on 64-bit floats (double) requires compute capability 6.x or higher.

The 32-bit __half2 floating-point version of atomicAdd() is only supported by devices of compute capability 6.x and higher.

atomicAdd() on a 32-bit __half2 (two half-precision values packed together) requires compute capability 6.x or higher.

The 16-bit __half floating-point version of atomicAdd() is only supported by devices of compute capability 7.x and higher.

atomicAdd() on a 16-bit half-precision float (__half) requires compute capability 7.x or higher.

The 16-bit __nv_bfloat16 floating-point version of atomicAdd() is only supported by devices of compute capability 8.x and higher.

atomicAdd() on a 16-bit bfloat16 (__nv_bfloat16) requires compute capability 8.x or higher.
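When a build has to target devices below compute capability 6.x, a double-precision atomic add can be emulated with a compare-and-swap loop on the 64-bit bit pattern, a pattern the CUDA programming guide also describes. The sketch below is one possible way to wrap it; `atomic_add_double`, `add_half_kernel`, and `run_double_atomic_sum` are names invented for this example.

```cuda
#include <cuda_runtime.h>

// Hypothetical wrapper: native atomicAdd(double) where available,
// atomicCAS-based emulation when compiling for compute capability < 6.0.
__device__ double atomic_add_double(double* address, double val) {
#if defined(__CUDA_ARCH__) && __CUDA_ARCH__ < 600
    unsigned long long int* p = (unsigned long long int*)address;
    unsigned long long int old = *p, assumed;
    do {
        assumed = old;
        // Try to publish (assumed + val); retry if another thread won.
        old = atomicCAS(p, assumed,
                        __double_as_longlong(val + __longlong_as_double(assumed)));
    } while (assumed != old);
    return __longlong_as_double(old);
#else
    return atomicAdd(address, val);
#endif
}

// Every thread adds 0.5 to the same accumulator.
__global__ void add_half_kernel(double* acc) {
    atomic_add_double(acc, 0.5);
}

// Host driver: 4 blocks x 64 threads, each adding 0.5.
// Returns -1.0 on any CUDA error.
double run_double_atomic_sum() {
    double* d_acc = nullptr;
    double h_acc = -1.0;
    if (cudaMalloc(&d_acc, sizeof(double)) != cudaSuccess) return -1.0;
    cudaMemset(d_acc, 0, sizeof(double));  // all-zero bits are +0.0
    add_half_kernel<<<4, 64>>>(d_acc);
    cudaError_t err = cudaGetLastError();  // catches launch failures
    if (err == cudaSuccess) {
        err = cudaMemcpy(&h_acc, d_acc, sizeof(double), cudaMemcpyDeviceToHost);
    }
    cudaFree(d_acc);
    return err == cudaSuccess ? h_acc : -1.0;
}
```

The branch is selected at compile time through `__CUDA_ARCH__`, so which path is used depends on the architectures passed to nvcc, not on the device found at run time.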

atomicMin(), atomicMax(), atomicAnd(), atomicOr(), atomicXor()

The 64-bit version of atomicMin() / atomicMax() / atomicAnd() / atomicOr() / atomicXor() is only supported by devices of compute capability 5.0 and higher.

The 64-bit versions require compute capability 5.0 or higher.
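As an illustration of the 64-bit variant, the sketch below takes the maximum over values that do not fit in 32 bits. The names `max64_kernel` and `run_max64` are invented for this example, and the values are synthetic.

```cuda
#include <cuda_runtime.h>

// Each thread proposes (2^40 + its global index); atomicMax keeps the largest.
__global__ void max64_kernel(unsigned long long int* result) {
    unsigned long long int idx = blockIdx.x * blockDim.x + threadIdx.x;
    unsigned long long int v = (1ULL << 40) + idx;
    atomicMax(result, v);  // 64-bit unsigned overload
}

// Host driver: 4 blocks x 64 threads. Returns 0 on any CUDA error.
unsigned long long int run_max64() {
    unsigned long long int* d_res = nullptr;
    unsigned long long int h_res = 0;
    if (cudaMalloc(&d_res, sizeof(h_res)) != cudaSuccess) return 0;
    cudaMemset(d_res, 0, sizeof(h_res));
    max64_kernel<<<4, 64>>>(d_res);
    cudaError_t err = cudaGetLastError();  // catches launch failures
    if (err == cudaSuccess) {
        err = cudaMemcpy(&h_res, d_res, sizeof(h_res), cudaMemcpyDeviceToHost);
    }
    cudaFree(d_res);
    return err == cudaSuccess ? h_res : 0;
}
```

With 256 threads in total, the largest proposed value is 2^40 + 255.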

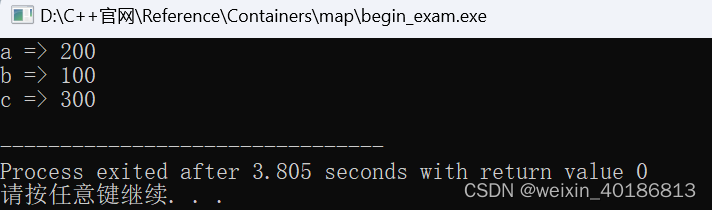

atomicExch(), atomicInc(), atomicDec(), and atomicCAS() have no special requirements and are compatible everywhere.
