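Basic idea: adapt an mmpose model to the Ascend board and keep a running log of the steps. For the environment setup and the source of the model, see the reference links at the end.

Link: https://pan.baidu.com/s/1IkiwuZf1anyKX1sZkYmD1g?pwd=i51s Extraction code: i51s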

1. Model conversion

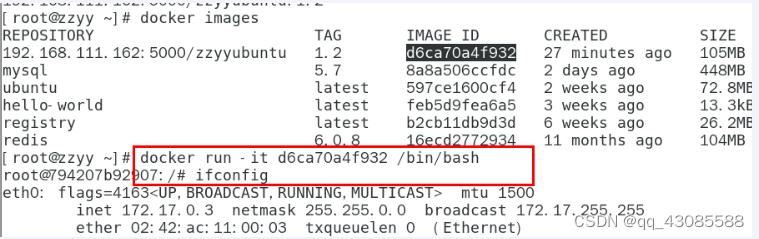

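The mmpose model in ONNX form (end2end.onnx) is converted to an offline .om model with ATC. `--framework=5` selects ONNX as the source format, and `--soc_version=Ascend310B1` targets the Ascend 310B1 chip on the board.

```bash
(base) root@davinci-mini:~/sxj731533730# atc --model=end2end.onnx --framework=5 --output=end2end --input_format=NCHW --input_shape="input:1,3,256,256" --log=error --soc_version=Ascend310B1
ATC start working now, please wait for a moment.
...
ATC run success, welcome to the next use.
```

Python code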

```python
import cv2
import numpy as np
from ais_bench.infer.interface import InferSession

model_path = "end2end.om"
IMG_PATH = "ca110.jpeg"


def bbox_xywh2cs(bbox, aspect_ratio, padding=1., pixel_std=200.):
    """Transform the bbox format from (x,y,w,h) into (center, scale)

    Args:
        bbox (ndarray): Single bbox in (x, y, w, h)
        aspect_ratio (float): The expected bbox aspect ratio (w over h)
        padding (float): Bbox padding factor that will be multiplied to scale.
            Default: 1.0
        pixel_std (float): The scale normalization factor. Default: 200.0

    Returns:
        tuple: A tuple containing center and scale.

        - np.ndarray[float32](2,): Center of the bbox (x, y).
        - np.ndarray[float32](2,): Scale of the bbox w & h.
    """
    x, y, w, h = bbox[:4]
    center = np.array([x + w * 0.5, y + h * 0.5], dtype=np.float32)

    # Grow the shorter side so the box matches the model input aspect ratio
    if w > aspect_ratio * h:
        h = w * 1.0 / aspect_ratio
    elif w < aspect_ratio * h:
        w = h * aspect_ratio
    scale = np.array([w, h], dtype=np.float32) / pixel_std
    scale = scale * padding

    return center, scale
```
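As a sanity check, here is a stdlib-only sketch (no NumPy, so it runs anywhere) of the same center/scale computation. It is applied to the detector box used later in this post, `[13.711652, 26.188112, 293.61298, 227.78246]` in x1y1x2y2 form. The helper name `xyxy_to_center_scale` is hypothetical. The result should match the center and scale printed by the C++ program in the test results (`153.662 126.985 1.74938 1.74938`).

```python
def xyxy_to_center_scale(x1, y1, x2, y2, aspect_ratio=1.0, padding=1.25, pixel_std=200.0):
    """Hypothetical stdlib mirror of bbox_xywh2cs that takes x1y1x2y2 input."""
    w, h = x2 - x1, y2 - y1
    center = (x1 + w * 0.5, y1 + h * 0.5)
    # Grow the shorter side until w / h equals the model input aspect ratio
    if w > aspect_ratio * h:
        h = w / aspect_ratio
    elif w < aspect_ratio * h:
        w = h * aspect_ratio
    scale = (w / pixel_std * padding, h / pixel_std * padding)
    return center, scale


center, scale = xyxy_to_center_scale(13.711652, 26.188112, 293.61298, 227.78246)
print([round(v, 3) for v in center], [round(v, 5) for v in scale])
# prints [153.662, 126.985] [1.74938, 1.74938]
```

The box is wider than it is tall, so its height is raised to its width (aspect ratio 1 for a 256×256 input) and then padded by 1.25. That is why both scale components are equal.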

```python
def rotate_point(pt, angle_rad):
    """Rotate a point by an angle.

    Args:
        pt (list[float]): 2 dimensional point to be rotated
        angle_rad (float): rotation angle by radian

    Returns:
        list[float]: Rotated point.
    """
    assert len(pt) == 2
    sn, cs = np.sin(angle_rad), np.cos(angle_rad)
    new_x = pt[0] * cs - pt[1] * sn
    new_y = pt[0] * sn + pt[1] * cs
    rotated_pt = [new_x, new_y]

    return rotated_pt


def _get_3rd_point(a, b):
    """To calculate the affine matrix, three pairs of points are required. This
    function is used to get the 3rd point, given 2D points a & b.

    The 3rd point is defined by rotating vector `a - b` by 90 degrees
    anticlockwise, using b as the rotation center.

    Args:
        a (np.ndarray): point(x,y)
        b (np.ndarray): point(x,y)

    Returns:
        np.ndarray: The 3rd point.
    """
    assert len(a) == 2
    assert len(b) == 2
    direction = a - b
    third_pt = b + np.array([-direction[1], direction[0]], dtype=np.float32)

    return third_pt
```
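`_get_3rd_point` only needs two points because the third one is derived: it is `b` plus the vector `a - b` rotated by 90 degrees. The three points therefore always form a right angle at `b` with two equal legs, which is enough to pin down a non-degenerate affine map. The stdlib sketch below checks that property. `third_point` and `rotate` are hypothetical re-implementations for illustration, not the mmpose functions themselves.

```python
import math


def third_point(a, b):
    """Hypothetical stdlib mirror of _get_3rd_point: rotate (a - b) by 90 degrees around b."""
    dx, dy = a[0] - b[0], a[1] - b[1]
    return (b[0] - dy, b[1] + dx)


def rotate(pt, angle_rad):
    """Same formula as rotate_point."""
    sn, cs = math.sin(angle_rad), math.cos(angle_rad)
    return (pt[0] * cs - pt[1] * sn, pt[0] * sn + pt[1] * cs)


a, b = (128.0, 128.0), (128.0, 0.0)
c = third_point(a, b)
leg1 = (a[0] - b[0], a[1] - b[1])
leg2 = (c[0] - b[0], c[1] - b[1])
print(c)                                              # (0.0, 0.0)
print(leg1[0] * leg2[0] + leg1[1] * leg2[1])          # 0.0: the legs are perpendicular
print(tuple(round(v, 6) for v in rotate(leg1, math.pi / 2)))  # (-128.0, 0.0), equal to leg2
```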

```python
def get_affine_transform(center,
                         scale,
                         rot,
                         output_size,
                         shift=(0., 0.),
                         inv=False):
    """Get the affine transform matrix, given the center/scale/rot/output_size.

    Args:
        center (np.ndarray[2, ]): Center of the bounding box (x, y).
        scale (np.ndarray[2, ]): Scale of the bounding box
            wrt [width, height].
        rot (float): Rotation angle (degree).
        output_size (np.ndarray[2, ] | list(2,)): Size of the
            destination heatmaps.
        shift (0-100%): Shift translation ratio wrt the width/height.
            Default (0., 0.).
        inv (bool): Option to inverse the affine transform direction.
            (inv=False: src->dst or inv=True: dst->src)

    Returns:
        np.ndarray: The transform matrix.
    """
    assert len(center) == 2
    assert len(scale) == 2
    assert len(output_size) == 2
    assert len(shift) == 2

    # pixel_std is 200.
    scale_tmp = scale * 200.0

    shift = np.array(shift)
    src_w = scale_tmp[0]
    dst_w = output_size[0]
    dst_h = output_size[1]

    rot_rad = np.pi * rot / 180
    src_dir = rotate_point([0., src_w * -0.5], rot_rad)
    dst_dir = np.array([0., dst_w * -0.5])

    # Three source points: box center, a point half a box-width above it, and a third
    # point at a right angle to those two
    src = np.zeros((3, 2), dtype=np.float32)
    src[0, :] = center + scale_tmp * shift
    src[1, :] = center + src_dir + scale_tmp * shift
    src[2, :] = _get_3rd_point(src[0, :], src[1, :])

    # The matching points in the output (model input) image
    dst = np.zeros((3, 2), dtype=np.float32)
    dst[0, :] = [dst_w * 0.5, dst_h * 0.5]
    dst[1, :] = np.array([dst_w * 0.5, dst_h * 0.5]) + dst_dir
    dst[2, :] = _get_3rd_point(dst[0, :], dst[1, :])

    if inv:
        trans = cv2.getAffineTransform(np.float32(dst), np.float32(src))
    else:
        trans = cv2.getAffineTransform(np.float32(src), np.float32(dst))

    return trans
```
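In this post `rot=0` and `shift=(0, 0)`. With those values the matrix reduces to a uniform scale k = dst_w / (scale_x · 200) plus a translation that moves the box center to the middle of the 256×256 input. Only the box width enters, exactly as in `get_affine_transform`.

The stdlib sketch below (hypothetical helper `affine_no_rotation`) reproduces the matrix printed by the C++ program in the test results, `[0.73169, -0, 15.567; -4.6e-17, 0.73169, 35.087]`. The `-4.6e-17` entry there is just floating-point noise from the general three-point solve.

```python
def affine_no_rotation(center, scale, output_size, pixel_std=200.0):
    """Hypothetical closed form of get_affine_transform for rot=0, shift=(0, 0).

    Returns the 2x3 matrix [[k, 0, tx], [0, k, ty]] as nested lists, so that
    x' = k * (x - cx) + W / 2 and y' = k * (y - cy) + H / 2.
    """
    k = output_size[0] / (scale[0] * pixel_std)
    tx = output_size[0] * 0.5 - k * center[0]
    ty = output_size[1] * 0.5 - k * center[1]
    return [[k, 0.0, tx], [0.0, k, ty]]


m = affine_no_rotation((153.662316, 126.985286), (1.7493833, 1.7493833), (256, 256))
for row in m:
    print([round(v, 4) for v in row])
# [0.7317, 0.0, 15.5674]
# [0.0, 0.7317, 35.0866]
```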

```python
def bbox_xyxy2xywh(bbox_xyxy):
    """Transform the bbox format from x1y1x2y2 to xywh.

    Args:
        bbox_xyxy (np.ndarray): Bounding boxes (with scores), shaped (n, 4) or
            (n, 5). (left, top, right, bottom, [score])

    Returns:
        np.ndarray: Bounding boxes (with scores),
            shaped (n, 4) or (n, 5). (left, top, width, height, [score])
    """
    bbox_xywh = bbox_xyxy.copy()
    bbox_xywh[:, 2] = bbox_xywh[:, 2] - bbox_xywh[:, 0]
    bbox_xywh[:, 3] = bbox_xywh[:, 3] - bbox_xywh[:, 1]

    return bbox_xywh
```

```python
def _get_max_preds(heatmaps):
    """Get keypoint predictions from score maps.

    Note:
        batch_size: N
        num_keypoints: K
        heatmap height: H
        heatmap width: W

    Args:
        heatmaps (np.ndarray[N, K, H, W]): model predicted heatmaps.

    Returns:
        tuple: A tuple containing aggregated results.

        - preds (np.ndarray[N, K, 2]): Predicted keypoint location.
        - maxvals (np.ndarray[N, K, 1]): Scores (confidence) of the keypoints.
    """
    assert isinstance(heatmaps,
                      np.ndarray), ('heatmaps should be numpy.ndarray')
    assert heatmaps.ndim == 4, 'batch_images should be 4-ndim'

    N, K, _, W = heatmaps.shape
    heatmaps_reshaped = heatmaps.reshape((N, K, -1))
    idx = np.argmax(heatmaps_reshaped, 2).reshape((N, K, 1))
    maxvals = np.amax(heatmaps_reshaped, 2).reshape((N, K, 1))

    # Split the flat argmax index into column (x) and row (y)
    preds = np.tile(idx, (1, 1, 2)).astype(np.float32)
    preds[:, :, 0] = preds[:, :, 0] % W
    preds[:, :, 1] = preds[:, :, 1] // W

    preds = np.where(np.tile(maxvals, (1, 1, 2)) > 0.0, preds, -1)
    return preds, maxvals
```
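`_get_max_preds` flattens each H×W heatmap, takes the argmax, and splits the flat index back into column `idx % W` and row `idx // W`. The C++ version below does the same with `std::max_element`. Here is a stdlib sketch on a toy 3×4 heatmap. The data is made up for illustration, and `argmax_xy` is a hypothetical helper name.

```python
def argmax_xy(heatmap):
    """Return ((x, y), max_value) for a heatmap given as a list of rows."""
    width = len(heatmap[0])
    flat = [v for row in heatmap for v in row]
    idx = max(range(len(flat)), key=flat.__getitem__)  # first index of the maximum
    return (idx % width, idx // width), flat[idx]


toy = [
    [0.0, 0.1, 0.0, 0.0],
    [0.1, 0.3, 0.9, 0.2],
    [0.0, 0.1, 0.4, 0.0],
]
print(argmax_xy(toy))  # ((2, 1), 0.9)
```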

```python
def transform_preds(coords, center, scale, output_size, use_udp=False):
    """Get final keypoint predictions from heatmaps and apply scaling and
    translation to map them back to the image.

    Note:
        num_keypoints: K

    Args:
        coords (np.ndarray[K, ndims]):

            * If ndims=2, coords are predicted keypoint location.
            * If ndims=4, coords are composed of (x, y, scores, tags)
            * If ndims=5, coords are composed of (x, y, scores, tags,
              flipped_tags)

        center (np.ndarray[2, ]): Center of the bounding box (x, y).
        scale (np.ndarray[2, ]): Scale of the bounding box
            wrt [width, height].
        output_size (np.ndarray[2, ] | list(2,)): Size of the
            destination heatmaps.
        use_udp (bool): Use unbiased data processing

    Returns:
        np.ndarray: Predicted coordinates in the images.
    """
    assert coords.shape[1] in (2, 4, 5)
    assert len(center) == 2
    assert len(scale) == 2
    assert len(output_size) == 2

    # Recover the scale which is normalized by a factor of 200.
    scale = scale * 200.0

    # Image pixels per heatmap pixel
    if use_udp:
        scale_x = scale[0] / (output_size[0] - 1.0)
        scale_y = scale[1] / (output_size[1] - 1.0)
    else:
        scale_x = scale[0] / output_size[0]
        scale_y = scale[1] / output_size[1]

    target_coords = np.ones_like(coords)
    target_coords[:, 0] = coords[:, 0] * scale_x + center[0] - scale[0] * 0.5
    target_coords[:, 1] = coords[:, 1] * scale_y + center[1] - scale[1] * 0.5

    return target_coords
```
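`transform_preds` undoes the crop. Each heatmap pixel covers `scale * 200 / W` image pixels (here about 349.88 / 64 ≈ 5.47), and the heatmap origin sits at the top-left corner of the padded box. Below is a stdlib sketch of the non-UDP branch for a single point, using the center and scale of this post's box. `heatmap_to_image` is a hypothetical helper name.

```python
def heatmap_to_image(x, y, center, scale, heatmap_size=(64, 64), pixel_std=200.0):
    """Map one heatmap coordinate back to the original image (use_udp=False)."""
    box_w, box_h = scale[0] * pixel_std, scale[1] * pixel_std
    img_x = x * box_w / heatmap_size[0] + center[0] - box_w * 0.5
    img_y = y * box_h / heatmap_size[1] + center[1] - box_h * 0.5
    return img_x, img_y


center, scale = (153.662316, 126.985286), (1.7493833, 1.7493833)
# The heatmap centre maps back onto the box centre ...
print([round(v, 3) for v in heatmap_to_image(32, 32, center, scale)])  # [153.662, 126.985]
# ... and the heatmap origin onto the top-left corner of the padded box,
# which lies outside the image because of the 1.25 padding
print([round(v, 3) for v in heatmap_to_image(0, 0, center, scale)])    # [-21.276, -47.953]
```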

```python
def keypoints_from_heatmaps(heatmaps,
                            center,
                            scale,
                            unbiased=False,
                            post_process='default',
                            kernel=11,
                            valid_radius_factor=0.0546875,
                            use_udp=False,
                            target_type='GaussianHeatmap'):
    # Trimmed version of the mmpose function: only the 'default' (and 'megvii')
    # post-processing is implemented. unbiased, kernel, valid_radius_factor and
    # target_type are accepted to keep the mmpose signature but are unused here.

    # Avoid being affected
    heatmaps = heatmaps.copy()

    N, K, H, W = heatmaps.shape
    preds, maxvals = _get_max_preds(heatmaps)

    # add +/-0.25 shift to the predicted locations for higher acc.
    for n in range(N):
        for k in range(K):
            heatmap = heatmaps[n][k]
            px = int(preds[n][k][0])
            py = int(preds[n][k][1])
            if 1 < px < W - 1 and 1 < py < H - 1:
                diff = np.array([
                    heatmap[py][px + 1] - heatmap[py][px - 1],
                    heatmap[py + 1][px] - heatmap[py - 1][px]
                ])
                preds[n][k] += np.sign(diff) * .25
                if post_process == 'megvii':
                    preds[n][k] += 0.5

    # Transform back to the image
    for i in range(N):
        preds[i] = transform_preds(
            preds[i], center[i], scale[i], [W, H], use_udp=use_udp)

    if post_process == 'megvii':
        maxvals = maxvals / 255.0 + 0.5

    return preds, maxvals
```
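The `+/-0.25` step in `keypoints_from_heatmaps` is a cheap sub-pixel refinement. The integer argmax is nudged a quarter pixel toward whichever neighbour (left/right, up/down) has the higher response, and only when the peak is away from the border.

Here is a stdlib sketch for one heatmap, with made-up toy values. `refine_quarter` is a hypothetical helper name.

```python
def refine_quarter(heatmap, px, py):
    """Shift an integer peak (px, py) by +/-0.25 toward the larger neighbour.

    Mirrors the default post-processing in keypoints_from_heatmaps; heatmap is
    a list of rows (H x W). As in the original condition, peaks with px <= 1,
    px >= W - 1, py <= 1 or py >= H - 1 are left unchanged.
    """
    h, w = len(heatmap), len(heatmap[0])
    x, y = float(px), float(py)
    if 1 < px < w - 1 and 1 < py < h - 1:
        dx = heatmap[py][px + 1] - heatmap[py][px - 1]
        dy = heatmap[py + 1][px] - heatmap[py - 1][px]
        x += 0.25 if dx > 0 else (-0.25 if dx < 0 else 0.0)
        y += 0.25 if dy > 0 else (-0.25 if dy < 0 else 0.0)
    return x, y


hm = [[0.0] * 5 for _ in range(5)]
hm[2][2] = 1.0   # peak
hm[2][3] = 0.6   # right neighbour is stronger than the left one
hm[1][2] = 0.5   # upper neighbour is stronger than the lower one
print(refine_quarter(hm, 2, 2))  # (2.25, 1.75)
```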

```python
def decode(output, center, scale, score_, batch_size=1):
    # Repeat the single box's center/scale/score for every item in the batch
    c = np.zeros((batch_size, 2), dtype=np.float32)
    s = np.zeros((batch_size, 2), dtype=np.float32)
    score = np.ones(batch_size)
    for i in range(batch_size):
        c[i, :] = center
        s[i, :] = scale
        score[i] = np.asarray(score_).reshape(-1)[0]

    preds, maxvals = keypoints_from_heatmaps(
        output,
        c,
        s,
        unbiased=False,
        post_process='default',
        kernel=11,
        valid_radius_factor=0.0546875,
        use_udp=False,
        target_type='GaussianHeatmap'
    )

    # preds: [x, y, score] per keypoint
    all_preds = np.zeros((batch_size, preds.shape[1], 3), dtype=np.float32)
    # boxes: [center_x, center_y, scale_x, scale_y, area, score]
    all_boxes = np.zeros((batch_size, 6), dtype=np.float32)
    all_preds[:, :, 0:2] = preds[:, :, 0:2]
    all_preds[:, :, 2:3] = maxvals
    all_boxes[:, 0:2] = c[:, 0:2]
    all_boxes[:, 2:4] = s[:, 0:2]
    all_boxes[:, 4] = np.prod(s * 200.0, axis=1)
    all_boxes[:, 5] = score

    result = {}
    result['preds'] = all_preds
    result['boxes'] = all_boxes
    print(result)
    return result
```
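For each instance, `decode` returns two arrays:

- `preds`, of shape (N, K, 3), holding `[x, y, score]` per keypoint.
- `boxes`, of shape (N, 6), holding `[center_x, center_y, scale_x, scale_y, area, score]`.

The area is `prod(scale * 200)`, i.e. the padded box size in pixels. For the box in this post that is 349.88² ≈ 122414 pixels. Here is a stdlib sketch of one `boxes` row. `box_row` is a hypothetical helper name.

```python
def box_row(center, scale, score, pixel_std=200.0):
    """Build one row of decode()'s 'boxes' array as a plain list."""
    area = (scale[0] * pixel_std) * (scale[1] * pixel_std)
    return [center[0], center[1], scale[0], scale[1], area, score]


row = box_row((153.662316, 126.985286), (1.7493833, 1.7493833), 0.9995332)
print(round(row[4]))  # 122414
```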

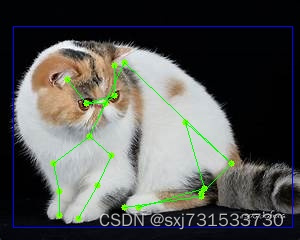

```python
def draw(bgr, predict_dict, skeleton, box):
    # Detection box (x, y, w, h) in blue
    cv2.rectangle(bgr, (int(box[0]), int(box[1])), (int(box[0]) + int(box[2]), int(box[1]) + int(box[3])),
                  (255, 0, 0))
    all_preds = predict_dict["preds"]
    for all_pred in all_preds:
        # Keypoints as filled circles
        for x, y, s in all_pred:
            cv2.circle(bgr, (int(x), int(y)), 3, (0, 255, 120), -1)
        # Limbs as lines between keypoint pairs
        for sk in skeleton:
            x0 = int(all_pred[sk[0]][0])
            y0 = int(all_pred[sk[0]][1])
            x1 = int(all_pred[sk[1]][0])
            y1 = int(all_pred[sk[1]][1])
            cv2.line(bgr, (x0, y0), (x1, y1), (0, 255, 0), 1)
    cv2.imwrite("sxj731533730_sxj.jpg", bgr)
```
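`draw` connects every skeleton edge regardless of confidence. The C++ version below at least skips keypoints whose score is not above `keypoint_score = 0.3`.

If you also want to hide limbs whose endpoints are unreliable, one option is to filter the edges before drawing. This is a minimal stdlib sketch. The helper `confident_edges` and the 0.3 threshold (borrowed from the C++ code) are assumptions, not part of the original script.

```python
def confident_edges(keypoints, skeleton, threshold=0.3):
    """Keep only edges whose two endpoints both score above threshold.

    keypoints: list of (x, y, score) tuples, one per keypoint index.
    skeleton:  list of [i, j] index pairs.
    """
    return [(i, j) for i, j in skeleton
            if keypoints[i][2] > threshold and keypoints[j][2] > threshold]


kps = [(10, 10, 0.9), (20, 10, 0.8), (15, 30, 0.1)]
print(confident_edges(kps, [[0, 1], [0, 2], [1, 2]]))  # [(0, 1)]
```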

```python
if __name__ == "__main__":
    # Create the ais_bench inference session on device 0
    model = InferSession(0, model_path)
    print("done")

    # Detection box from an upstream detector: x, y, w, h, score
    bbox = [13.711652, 26.188112, 293.61298 - 13.711652, 227.78246 - 26.188112, 9.995332e-01]
    image_size = [256, 256]  # model input (width, height)

    src_img = cv2.imread(IMG_PATH)
    if src_img is None:
        raise FileNotFoundError(IMG_PATH)
    img = cv2.cvtColor(src_img, cv2.COLOR_BGR2RGB)  # HWC, RGB
    aspect_ratio = image_size[0] / image_size[1]
    img_height = img.shape[0]
    img_width = img.shape[1]
    padding = 1.25
    pixel_std = 200

    center, scale = bbox_xywh2cs(
        bbox,
        aspect_ratio,
        padding,
        pixel_std)

    # Crop and resize the box region to the model input size
    trans = get_affine_transform(center, scale, 0, image_size)
    img = cv2.warpAffine(
        img,
        trans, (int(image_size[0]), int(image_size[1])),
        flags=cv2.INTER_LINEAR)
    print(trans)

    img = img / 255.0  # normalize to [0, 1]
    img = img.transpose(2, 0, 1)  # HWC -> CHW
    img = np.ascontiguousarray(img[np.newaxis], dtype=np.float32)  # NCHW: 1x3x256x256

    # Inference
    print("--> Running model")
    outputs = model.infer([img])[0]  # heatmaps, 1x20x64x64
    print(outputs)

    predict_dict = decode(outputs, center, scale, bbox[-1])
    skeleton = [[0, 1], [0, 2], [1, 3], [0, 4],
                [1, 4], [4, 5], [5, 7], [5, 8], [5, 9],
                [6, 7], [6, 10], [6, 11], [8, 12],
                [9, 13], [10, 14], [11, 15], [12, 16],
                [13, 17], [14, 18], [15, 19]]
    draw(src_img, predict_dict, skeleton, bbox)
```
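The 20 keypoint indices follow the animal keypoint order listed as a comment in the C++ code below (0 = L_Eye through 19 = R_B_Paw). Pairing the `skeleton` list with those names makes it easier to check that every limb is wired as intended. The stdlib sketch below does that; the constant names `KEYPOINT_NAMES` and `SKELETON` are illustrative.

```python
KEYPOINT_NAMES = [
    "L_Eye", "R_Eye", "L_EarBase", "R_EarBase", "Nose", "Throat", "TailBase",
    "Withers", "L_F_Elbow", "R_F_Elbow", "L_B_Elbow", "R_B_Elbow",
    "L_F_Knee", "R_F_Knee", "L_B_Knee", "R_B_Knee",
    "L_F_Paw", "R_F_Paw", "L_B_Paw", "R_B_Paw",
]

SKELETON = [[0, 1], [0, 2], [1, 3], [0, 4], [1, 4], [4, 5], [5, 7], [5, 8], [5, 9],
            [6, 7], [6, 10], [6, 11], [8, 12], [9, 13], [10, 14], [11, 15], [12, 16],
            [13, 17], [14, 18], [15, 19]]

# Print each limb as "start -> end" using the keypoint names
for i, j in SKELETON:
    print(f"{KEYPOINT_NAMES[i]:>10} -> {KEYPOINT_NAMES[j]}")
```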

CMakeLists.txt

```cmake
cmake_minimum_required(VERSION 3.16)
project(untitled10)

set(CMAKE_CXX_FLAGS "-std=c++11")
set(CMAKE_CXX_STANDARD 11)
add_definitions(-DENABLE_DVPP_INTERFACE)

# AclLite and CANN (ascend-toolkit) headers
include_directories(/usr/local/samples/cplusplus/common/acllite/include)
include_directories(/usr/local/Ascend/ascend-toolkit/latest/aarch64-linux/include)

find_package(OpenCV REQUIRED)
#message(STATUS ${OpenCV_INCLUDE_DIRS})
# Add the OpenCV header directories
include_directories(${OpenCV_INCLUDE_DIRS})

# Import the prebuilt AscendCL and AclLite shared libraries
add_library(libascendcl SHARED IMPORTED)
set_target_properties(libascendcl PROPERTIES IMPORTED_LOCATION /usr/local/Ascend/ascend-toolkit/latest/aarch64-linux/lib64/libascendcl.so)
add_library(libacllite SHARED IMPORTED)
set_target_properties(libacllite PROPERTIES IMPORTED_LOCATION /usr/local/samples/cplusplus/common/acllite/out/aarch64/libacllite.so)

add_executable(untitled10 main.cpp)
# Link OpenCV, AscendCL and AclLite
target_link_libraries(untitled10 ${OpenCV_LIBS} libascendcl libacllite)
```

C++ code

```cpp
#include <algorithm>
#include <cmath>
#include <cstdlib>
#include <cstring>
#include <iostream>
#include <vector>

#include <opencv2/opencv.hpp>

#include "AclLiteUtils.h"
#include "AclLiteImageProc.h"
#include "AclLiteResource.h"
#include "AclLiteError.h"
#include "AclLiteModel.h"

using namespace std;
using namespace cv;

typedef enum Result {
    SUCCESS = 0,
    FAILED = 1
} Result;

struct Keypoints {
    float x;
    float y;
    float score;

    Keypoints() : x(0), y(0), score(0) {}
    Keypoints(float x, float y, float score) : x(x), y(y), score(score) {}
};

struct Box {
    float center_x;
    float center_y;
    float scale_x;
    float scale_y;
    float scale_prob;  // padded box area in pixels: (scale_x * 200) * (scale_y * 200)
    float score;

    Box() : center_x(0), center_y(0), scale_x(0), scale_y(0), scale_prob(0), score(0) {}
    Box(float center_x, float center_y, float scale_x, float scale_y, float scale_prob, float score) :
            center_x(center_x), center_y(center_y), scale_x(scale_x), scale_y(scale_y), scale_prob(scale_prob),
            score(score) {}
};

// (x, y, w, h) -> (center, scale); same logic as the Python bbox_xywh2cs
void bbox_xywh2cs(const float bbox[], float aspect_ratio, float padding, float pixel_std,
                  float *center, float *scale) {
    float x = bbox[0];
    float y = bbox[1];
    float w = bbox[2];
    float h = bbox[3];
    center[0] = x + w * 0.5f;
    center[1] = y + h * 0.5f;
    // Grow the shorter side so the box matches the model input aspect ratio
    if (w > aspect_ratio * h)
        h = w / aspect_ratio;
    else if (w < aspect_ratio * h)
        w = h * aspect_ratio;
    scale[0] = (w / pixel_std) * padding;
    scale[1] = (h / pixel_std) * padding;
}

void rotate_point(const float *pt, float angle_rad, float *rotated_pt) {
    float sn = std::sin(angle_rad);
    float cs = std::cos(angle_rad);
    rotated_pt[0] = pt[0] * cs - pt[1] * sn;
    rotated_pt[1] = pt[0] * sn + pt[1] * cs;
}

// Third point: vector (a - b) rotated by 90 degrees, anchored at b
void _get_3rd_point(cv::Point2f a, cv::Point2f b, float *third_pt) {
    float direction_0 = a.x - b.x;
    float direction_1 = a.y - b.y;
    third_pt[0] = b.x - direction_1;
    third_pt[1] = b.y + direction_0;
}

void get_affine_transform(const float *center, const float *scale, float rot, const float *output_size,
                          const float *shift, bool inv, cv::Mat &trans) {
    // pixel_std is 200
    float scale_tmp[] = {scale[0] * 200.0f, scale[1] * 200.0f};
    float src_w = scale_tmp[0];
    float dst_w = output_size[0];
    float dst_h = output_size[1];
    float rot_rad = M_PI * rot / 180;

    float pt[] = {0, src_w * (-0.5f)};
    float src_dir[] = {0, 0};
    rotate_point(pt, rot_rad, src_dir);
    float dst_dir[] = {0, dst_w * (-0.5f)};

    // Three source points: box center, a point half a box-width above it, and a right-angle third point
    cv::Point2f src[3];
    src[0] = cv::Point2f(center[0] + scale_tmp[0] * shift[0], center[1] + scale_tmp[1] * shift[1]);
    src[1] = cv::Point2f(center[0] + src_dir[0] + scale_tmp[0] * shift[0],
                         center[1] + src_dir[1] + scale_tmp[1] * shift[1]);
    float third_src[] = {0, 0};
    _get_3rd_point(src[0], src[1], third_src);
    src[2] = cv::Point2f(third_src[0], third_src[1]);

    // The matching points in the output (model input) image
    cv::Point2f dst[3];
    dst[0] = cv::Point2f(dst_w * 0.5f, dst_h * 0.5f);
    dst[1] = cv::Point2f(dst_w * 0.5f + dst_dir[0], dst_h * 0.5f + dst_dir[1]);
    float third_dst[] = {0, 0};
    _get_3rd_point(dst[0], dst[1], third_dst);
    dst[2] = cv::Point2f(third_dst[0], third_dst[1]);

    if (inv) {
        trans = cv::getAffineTransform(dst, src);
    } else {
        trans = cv::getAffineTransform(src, dst);
    }
}

// Map heatmap coordinates back to the original image (same as the Python transform_preds);
// target_coords must already hold one entry per point in coords
void transform_preds(const std::vector<cv::Point2f> &coords, std::vector<Keypoints> &target_coords,
                     const float *center, const float *scale, int w, int h, bool use_udp = false) {
    float scale_x[] = {0, 0};
    float temp_scale[] = {scale[0] * 200, scale[1] * 200};
    if (use_udp) {
        scale_x[0] = temp_scale[0] / (w - 1);
        scale_x[1] = temp_scale[1] / (h - 1);
    } else {
        scale_x[0] = temp_scale[0] / w;
        scale_x[1] = temp_scale[1] / h;
    }
    for (size_t i = 0; i < coords.size(); i++) {
        target_coords[i].x = coords[i].x * scale_x[0] + center[0] - temp_scale[0] * 0.5f;
        target_coords[i].y = coords[i].y * scale_x[1] + center[1] - temp_scale[1] * 0.5f;
    }
}
```

int main() {

const char *modelPath = "../end2end.om";

bool flip_test = true;

bool heap_map = false;

float keypoint_score = 0.3f;

cv::Mat bgr = cv::imread("../ca110.jpeg");

cv::Mat rgb;

cv::cvtColor(bgr, rgb, cv::COLOR_BGR2RGB);

float image_target_w = 256;

float image_target_h = 256;

float padding = 1.25;

float pixel_std = 200;

float aspect_ratio = image_target_h / image_target_w;

float bbox[] = {13.711652, 26.188112, 293.61298, 227.78246, 9.995332e-01};// 需要检测框架 这个矩形框来自检测框架的坐标 x y w h score

bbox[2] = bbox[2] - bbox[0];

bbox[3] = bbox[3] - bbox[1];

float center[2] = {0, 0};

float scale[2] = {0, 0};

bbox_xywh2cs(bbox, aspect_ratio, padding, pixel_std, center, scale);

float rot = 0;

float shift[] = {0, 0};

bool inv = false;

float output_size[] = {image_target_h, image_target_w};

cv::Mat trans;

get_affine_transform(center, scale, rot, output_size, shift, inv, trans);

std::cout << trans << std::endl;

std::cout << center[0] << " " << center[1] << " " << scale[0] << " " << scale[1] << std::endl;

cv::Mat detect_image;//= cv::Mat::zeros(image_target_w ,image_target_h, CV_8UC3);

cv::warpAffine(rgb, detect_image, trans, cv::Size(image_target_h, image_target_w), cv::INTER_LINEAR);

//cv::imwrite("te.jpg",detect_image);

std::cout << detect_image.cols << " " << detect_image.rows << std::endl;

// inference

bool release = false;

//SampleYOLOV7 sampleYOLO(modelPath, target_width, target_height);

float *imageBytes;

AclLiteResource aclResource_;

AclLiteImageProc imageProcess_;

AclLiteModel model_;

aclrtRunMode runMode_;

ImageData resizedImage_;

const char *modelPath_;

int32_t modelWidth_;

int32_t modelHeight_;

AclLiteError ret = aclResource_.Init();

if (ret == FAILED) {

ACLLITE_LOG_ERROR("resource init failed, errorCode is %d", ret);

return FAILED;

}

ret = aclrtGetRunMode(&runMode_);

if (ret == FAILED) {

ACLLITE_LOG_ERROR("get runMode failed, errorCode is %d", ret);

return FAILED;

}

// init dvpp resource

ret = imageProcess_.Init();

if (ret == FAILED) {

ACLLITE_LOG_ERROR("imageProcess init failed, errorCode is %d", ret);

return FAILED;

}

// load model from file

ret = model_.Init(modelPath);

if (ret == FAILED) {

ACLLITE_LOG_ERROR("model init failed, errorCode is %d", ret);

return FAILED;

}

// data standardization

float meanRgb[3] = {0, 0, 0};

float stdRgb[3] = {1 / 255.0f, 1 / 255.0f, 1 / 255.0f};

// create malloc of image, which is shape with NCHW

//const float meanRgb[3] = {0.485f * 255.f, 0.456f * 255.f, 0.406f * 255.f};

//const float stdRgb[3] = {(1 / 0.229f / 255.f), (1 / 0.224f / 255.f), (1 / 0.225f / 255.f)};

int32_t channel = detect_image.channels();

int32_t resizeHeight = detect_image.rows;

int32_t resizeWeight = detect_image.cols;

imageBytes = (float *) malloc(channel * image_target_w * image_target_h * sizeof(float));

memset(imageBytes, 0, channel * image_target_h * image_target_w * sizeof(float));

// image to bytes with shape HWC to CHW, and switch channel BGR to RGB

for (int c = 0; c < channel; ++c) {

for (int h = 0; h < resizeHeight; ++h) {

for (int w = 0; w < resizeWeight; ++w) {

int dstIdx = c * resizeHeight * resizeWeight + h * resizeWeight + w;

imageBytes[dstIdx] = static_cast<float>(

(detect_image.at<cv::Vec3b>(h, w)[c] -

1.0f * meanRgb[c]) * 1.0f * stdRgb[c] );

}

}

}

std::vector <InferenceOutput> inferOutputs;

ret = model_.CreateInput(static_cast<void *>(imageBytes),

channel * image_target_w * image_target_h * sizeof(float));

if (ret == FAILED) {

ACLLITE_LOG_ERROR("CreateInput failed, errorCode is %d", ret);

return FAILED;

}

// inference

ret = model_.Execute(inferOutputs);

if (ret != ACL_SUCCESS) {

ACLLITE_LOG_ERROR("execute model failed, errorCode is %d", ret);

return FAILED;

}

// for()

float *data = static_cast<float *>(inferOutputs[0].data.get());

//输出维度

int shape_d =1;

int shape_c = 20;

int shape_w = 64;

int shape_h = 64;

std::vector<float> vec_heap;

for (int i = 0; i < shape_c * shape_h * shape_w; i++) {

vec_heap.push_back(data[i]);

}

std::vector <Keypoints> all_preds;

std::vector<int> idx;

for (int i = 0; i < shape_c; i++) {

auto begin = vec_heap.begin() + i * shape_w * shape_h;

auto end = vec_heap.begin() + (i + 1) * shape_w * shape_h;

float maxValue = *max_element(begin, end);

int maxPosition = max_element(begin, end) - begin;

all_preds.emplace_back(Keypoints(0, 0, maxValue));

idx.emplace_back(maxPosition);

}

std::vector <cv::Point2f> vec_point;

for (int i = 0; i < idx.size(); i++) {

int x = idx[i] % shape_w;

int y = idx[i] / shape_w;

vec_point.emplace_back(cv::Point2f(x, y));

}

for (int i = 0; i < shape_c; i++) {

int px = vec_point[i].x;

int py = vec_point[i].y;

if (px > 1 && px < shape_w - 1 && py > 1 && py < shape_h - 1) {

float diff_0 = vec_heap[py * shape_w + px + 1] - vec_heap[py * shape_w + px - 1];

float diff_1 = vec_heap[(py + 1) * shape_w + px] - vec_heap[(py - 1) * shape_w + px];

vec_point[i].x += diff_0 == 0 ? 0 : (diff_0 > 0) ? 0.25 : -0.25;

vec_point[i].y += diff_1 == 0 ? 0 : (diff_1 > 0) ? 0.25 : -0.25;

}

}

std::vector <Box> all_boxes;

if (heap_map) {

all_boxes.emplace_back(Box(center[0], center[1], scale[0], scale[1], scale[0] * scale[1] * 400, bbox[4]));

}

transform_preds(vec_point, all_preds, center, scale, shape_w, shape_h);

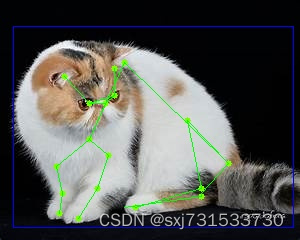

//0 L_Eye 1 R_Eye 2 L_EarBase 3 R_EarBase 4 Nose 5 Throat 6 TailBase 7 Withers 8 L_F_Elbow 9 R_F_Elbow 10 L_B_Elbow 11 R_B_Elbow

// 12 L_F_Knee 13 R_F_Knee 14 L_B_Knee 15 R_B_Knee 16 L_F_Paw 17 R_F_Paw 18 L_B_Paw 19 R_B_Paw

int skeleton[][2] = {{0, 1},

{0, 2},

{1, 3},

{0, 4},

{1, 4},

{4, 5},

{5, 7},

{5, 8},

{5, 9},

{6, 7},

{6, 10},

{6, 11},

{8, 12},

{9, 13},

{10, 14},

{11, 15},

{12, 16},

{13, 17},

{14, 18},

{15, 19}};

cv::rectangle(bgr, cv::Point(bbox[0], bbox[1]), cv::Point(bbox[0] + bbox[2], bbox[1] + bbox[3]),

cv::Scalar(255, 0, 0));

for (int i = 0; i < all_preds.size(); i++) {

if (all_preds[i].score > keypoint_score) {

cv::circle(bgr, cv::Point(all_preds[i].x, all_preds[i].y), 3, cv::Scalar(0, 255, 120), -1);//画点,其实就是实心圆

}

}

for (int i = 0; i < sizeof(skeleton) / sizeof(sizeof(skeleton[1])); i++) {

int x0 = all_preds[skeleton[i][0]].x;

int y0 = all_preds[skeleton[i][0]].y;

int x1 = all_preds[skeleton[i][1]].x;

int y1 = all_preds[skeleton[i][1]].y;

cv::line(bgr, cv::Point(x0, y0), cv::Point(x1, y1),

cv::Scalar(0, 255, 0), 1);

}

cv::imwrite("../image.jpg", bgr);

model_.DestroyResource();

imageProcess_.DestroyResource();

aclResource_.Release();

return SUCCESS;

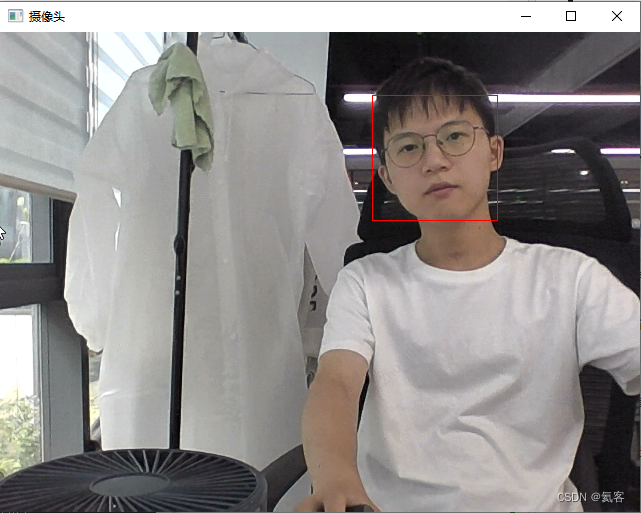

}测试结果

```text
/root/sxj731533730/cmake-build-debug/untitled10
[0.7316863791031282, -0, 15.56737128098375;
 -4.62405306581973e-17, 0.7316863791031282, 35.08659815701316]
153.662 126.985 1.74938 1.74938
256 256
[INFO] Acl init ok
[INFO] Open device 0 ok
[INFO] Use default context currently
[INFO] dvpp init resource ok
[INFO] Load model ../end2end.om success
[INFO] Create model description success
[INFO] Create model(../end2end.om) output success
[INFO] Init model ../end2end.om success
[INFO] Unload model ../end2end.om success
[INFO] destroy context ok
[INFO] Reset device 0 ok
[INFO] Finalize acl ok

Process finished with exit code 0
```
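Both programs hand the model a planar CHW float buffer scaled to [0, 1]:

- Python uses `img.transpose(2, 0, 1)`.
- C++ writes each value to `dstIdx = c * H * W + h * W + w`.

The stdlib sketch below checks that index formula on a toy 2×3 RGB image. The pixel values and the helper name `hwc_to_chw_flat` are made up for illustration.

```python
def hwc_to_chw_flat(img, scale=1 / 255.0):
    """Flatten an H x W x C nested list into CHW order, as the C++ loop does."""
    height, width, channels = len(img), len(img[0]), len(img[0][0])
    out = [0.0] * (channels * height * width)
    for c in range(channels):
        for h in range(height):
            for w in range(width):
                dst_idx = c * height * width + h * width + w
                out[dst_idx] = img[h][w][c] * scale
    return out


toy = [[[255, 0, 0], [0, 255, 0], [0, 0, 255]],
       [[255, 255, 255], [0, 0, 0], [51, 102, 153]]]
flat = hwc_to_chw_flat(toy)
# The first H*W values are the R plane, read row by row
print([round(v, 2) for v in flat[:6]])  # [1.0, 0.0, 0.0, 1.0, 0.0, 0.2]
```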

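References (my own earlier posts)

48. Converting the mmpose HRNet keypoint model to ncnn and MNN, with training and deployment (sxj731533730, CSDN blog)

61. First tests on the Huawei Ascend Atlas 200I DK A2 board: Python/C++ inference with yolov7_batchsize_1 & yolov7_batchsize_3 (sxj731533730, CSDN blog)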