提示:GPU-manager安装为主部分内容做了升级开箱即用,有用请点收藏❤抱拳

文章目录

- 前言

- 一、约束条件

- 二、使用步骤

- 1.下载镜像

- 1.1 查看当前虚拟机的驱动类型:

- 2.部署gpu-manager

- 3.部署gpu-admission

- 4.修改kube-scheduler.yaml

- 4.1 新建/etc/kubernetes/scheduler-policy-config.json

- 4.2 新建/etc/kubernetes/scheduler-extender.yaml

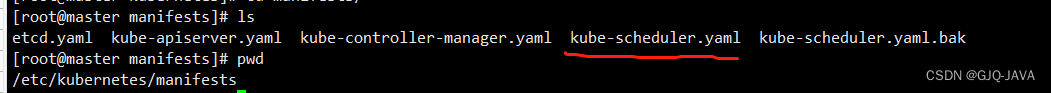

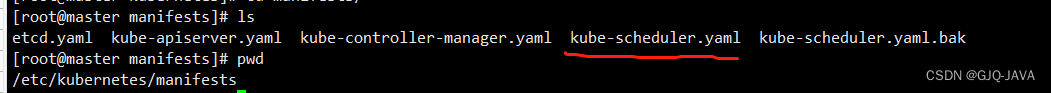

- 4.3 修改/etc/kubernetes/manifests/kube-scheduler.yaml

- 4.1 结果查看

- 测试

- 总结

前言

本文只做开箱即用部分,想了解GPUManager虚拟化方案技术层面请直接点击:GPUmanager虚拟化方案

一、约束条件

1、虚拟机需要完成直通式绑定,也就是物理GPU与虚拟机绑定,我做的是hyper-v的虚拟机绑定参照上一篇文章

2、对于k8s要求1.10版本以上

3、GPU-Manager 要求集群内包含 GPU 机型节点

4、每张 GPU 卡一共有100个单位的资源,仅支持0 - 1的小数卡,以及1的倍数的整数卡设置。显存资源是以256MiB为最小的一个单位的分配显存

我的版本:k8s-1.20

二、使用步骤

1.下载镜像

镜像地址:https://hub.docker.com/r/tkestack/gpu-manager/tags

manager:docker pull tkestack/gpu-manager:v1.1.5

https://hub.docker.com/r/tkestack/gpu-quota-admission/tags

admission:docker pull tkestack/gpu-quota-admission:v1.0.0

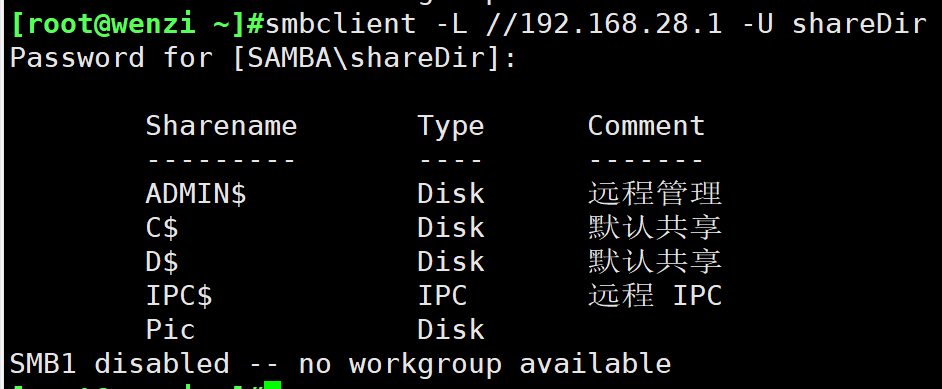

1.1 查看当前虚拟机的驱动类型:

docker info

2.部署gpu-manager

拥有GPU节点打标签:

kubectl label node XX nvidia-device-enable=enable

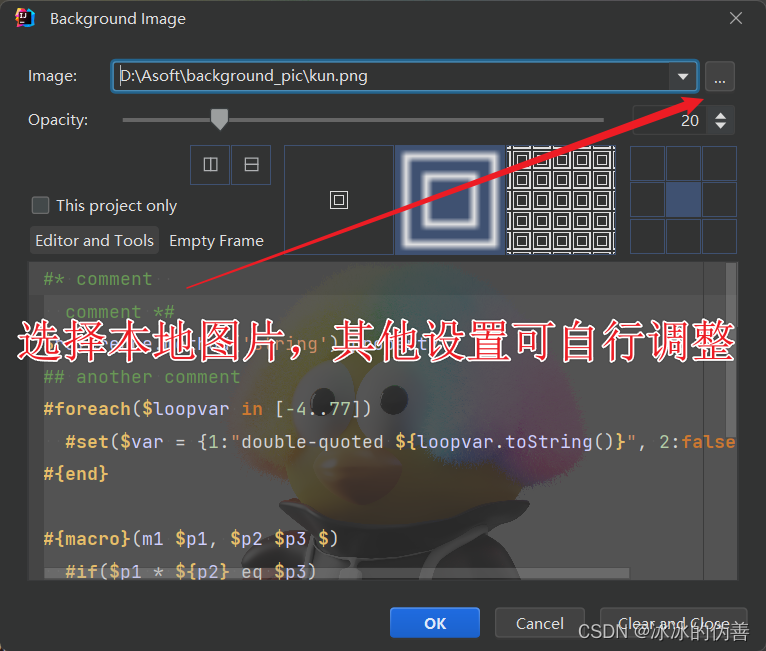

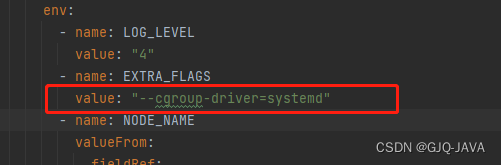

如果docker驱动是systemd 需要在yaml指定,因为GPUmanager默认cgroupfs

创建yaml内容如下:

apiVersion: v1

kind: ServiceAccount

metadata:

name: gpu-manager

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: gpu-manager-role

subjects:

- kind: ServiceAccount

name: gpu-manager

namespace: kube-system

roleRef:

kind: ClusterRole

name: cluster-admin

apiGroup: rbac.authorization.k8s.io

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: gpu-manager-daemonset

namespace: kube-system

spec:

updateStrategy:

type: RollingUpdate

selector:

matchLabels:

name: gpu-manager-ds

template:

metadata:

# This annotation is deprecated. Kept here for backward compatibility

# See https://kubernetes.io/docs/tasks/administer-cluster/guaranteed-scheduling-critical-addon-pods/

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ""

labels:

name: gpu-manager-ds

spec:

serviceAccount: gpu-manager

tolerations:

# This toleration is deprecated. Kept here for backward compatibility

# See https://kubernetes.io/docs/tasks/administer-cluster/guaranteed-scheduling-critical-addon-pods/

- key: CriticalAddonsOnly

operator: Exists

- key: tencent.com/vcuda-core

operator: Exists

effect: NoSchedule

# Mark this pod as a critical add-on; when enabled, the critical add-on

# scheduler reserves resources for critical add-on pods so that they can

# be rescheduled after a failure.

# See https://kubernetes.io/docs/tasks/administer-cluster/guaranteed-scheduling-critical-addon-pods/

priorityClassName: "system-node-critical"

# only run node has gpu device

nodeSelector:

nvidia-device-enable: enable

hostPID: true

containers:

- image: tkestack/gpu-manager:v1.1.5

imagePullPolicy: IfNotPresent

name: gpu-manager

securityContext:

privileged: true

ports:

- containerPort: 5678

volumeMounts:

- name: device-plugin

mountPath: /var/lib/kubelet/device-plugins

- name: vdriver

mountPath: /etc/gpu-manager/vdriver

- name: vmdata

mountPath: /etc/gpu-manager/vm

- name: log

mountPath: /var/log/gpu-manager

- name: checkpoint

mountPath: /etc/gpu-manager/checkpoint

- name: run-dir

mountPath: /var/run

- name: cgroup

mountPath: /sys/fs/cgroup

readOnly: true

- name: usr-directory

mountPath: /usr/local/host

readOnly: true

- name: kube-root

mountPath: /root/.kube

readOnly: true

env:

- name: LOG_LEVEL

value: "4"

- name: EXTRA_FLAGS

value: "--cgroup-driver=systemd"

- name: NODE_NAME

valueFrom:

fieldRef:

fieldPath: spec.nodeName

volumes:

- name: device-plugin

hostPath:

type: Directory

path: /var/lib/kubelet/device-plugins

- name: vmdata

hostPath:

type: DirectoryOrCreate

path: /etc/gpu-manager/vm

- name: vdriver

hostPath:

type: DirectoryOrCreate

path: /etc/gpu-manager/vdriver

- name: log

hostPath:

type: DirectoryOrCreate

path: /etc/gpu-manager/log

- name: checkpoint

hostPath:

type: DirectoryOrCreate

path: /etc/gpu-manager/checkpoint

# We have to mount the whole /var/run directory into container, because of bind mount docker.sock

# inode change after host docker is restarted

- name: run-dir

hostPath:

type: Directory

path: /var/run

- name: cgroup

hostPath:

type: Directory

path: /sys/fs/cgroup

# We have to mount /usr directory instead of specified library path, because of non-existing

# problem for different distro

- name: usr-directory

hostPath:

type: Directory

path: /usr

- name: kube-root

hostPath:

type: Directory

path: /root/.kube

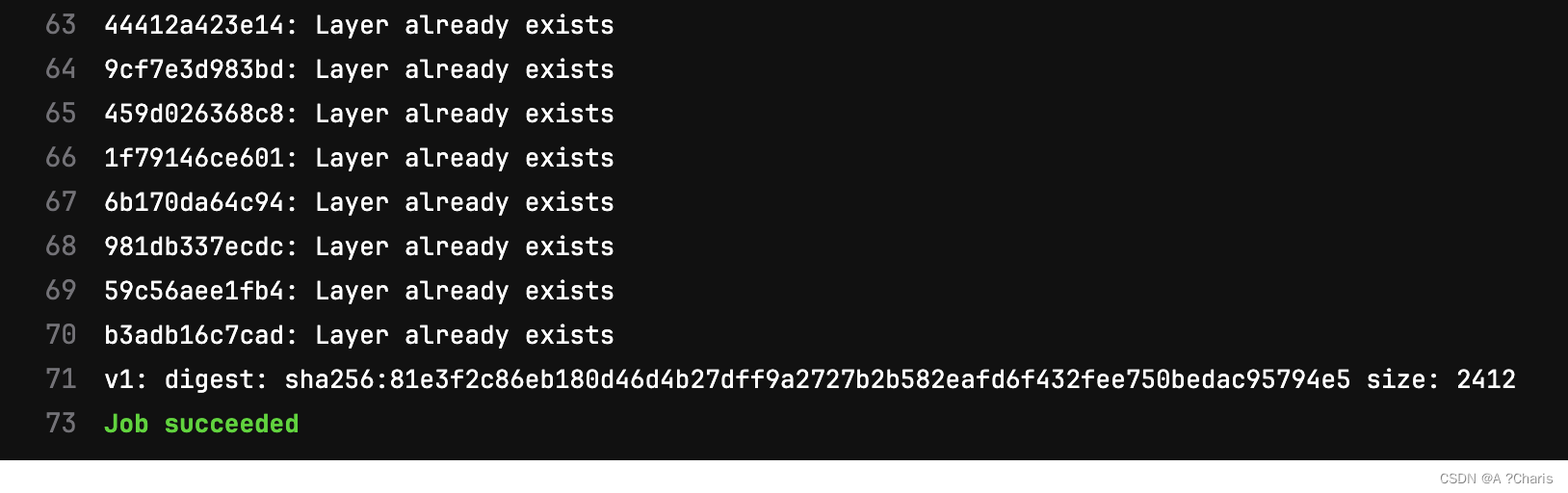

执行yaml文件:

kubectl apply -f gpu-manager.yaml

kubectl get pod -A|grep gpu 查询结果

3.部署gpu-admission

创建yaml内容如下:

apiVersion: v1

kind: ServiceAccount

metadata:

name: gpu-admission

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: gpu-admission-as-kube-scheduler

subjects:

- kind: ServiceAccount

name: gpu-admission

namespace: kube-system

roleRef:

kind: ClusterRole

name: system:kube-scheduler

apiGroup: rbac.authorization.k8s.io

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: gpu-admission-as-volume-scheduler

subjects:

- kind: ServiceAccount

name: gpu-admission

namespace: kube-system

roleRef:

kind: ClusterRole

name: system:volume-scheduler

apiGroup: rbac.authorization.k8s.io

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: gpu-admission-as-daemon-set-controller

subjects:

- kind: ServiceAccount

name: gpu-admission

namespace: kube-system

roleRef:

kind: ClusterRole

name: system:controller:daemon-set-controller

apiGroup: rbac.authorization.k8s.io

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

component: scheduler

tier: control-plane

app: gpu-admission

name: gpu-admission

namespace: kube-system

spec:

selector:

matchLabels:

component: scheduler

tier: control-plane

replicas: 1

template:

metadata:

labels:

component: scheduler

tier: control-plane

version: second

spec:

serviceAccountName: gpu-admission

containers:

- image: thomassong/gpu-admission:47d56ae9

name: gpu-admission

env:

- name: LOG_LEVEL

value: "4"

ports:

- containerPort: 3456

dnsPolicy: ClusterFirstWithHostNet

hostNetwork: true

priority: 2000000000

priorityClassName: system-cluster-critical

---

apiVersion: v1

kind: Service

metadata:

name: gpu-admission

namespace: kube-system

spec:

ports:

- port: 3456

protocol: TCP

targetPort: 3456

selector:

app: gpu-admission

type: ClusterIP

执行yaml文件:

kubectl create -f gpu-admission.yaml

kubectl get pod -A|grep gpu 查询结果

4.修改kube-scheduler.yaml

4.1 新建/etc/kubernetes/scheduler-policy-config.json

创建内容:

vim /etc/kubernetes/scheduler-policy-config.json

复制如下内容:

{

"kind": "Policy",

"apiVersion": "v1",

"predicates": [

{

"name": "PodFitsHostPorts"

},

{

"name": "PodFitsResources"

},

{

"name": "NoDiskConflict"

},

{

"name": "MatchNodeSelector"

},

{

"name": "HostName"

}

],

"priorities": [

{

"name": "BalancedResourceAllocation",

"weight": 1

},

{

"name": "ServiceSpreadingPriority",

"weight": 1

}

],

"extenders": [

{

"urlPrefix": "http://gpu-admission.kube-system:3456/scheduler",

"apiVersion": "v1beta1",

"filterVerb": "predicates",

"enableHttps": false,

"nodeCacheCapable": false

}

],

"hardPodAffinitySymmetricWeight": 10,

"alwaysCheckAllPredicates": false

}

4.2 新建/etc/kubernetes/scheduler-extender.yaml

创建内容:

vim /etc/kubernetes/scheduler-extender.yaml

复制如下内容:

apiVersion: kubescheduler.config.k8s.io/v1alpha1

kind: KubeSchedulerConfiguration

clientConnection:

kubeconfig: "/etc/kubernetes/scheduler.conf"

algorithmSource:

policy:

file:

path: "/etc/kubernetes/scheduler-policy-config.json"

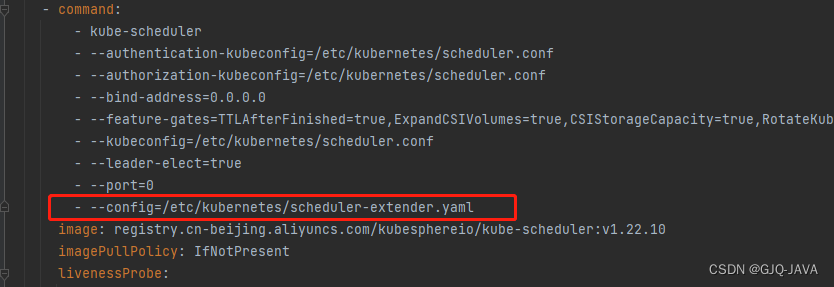

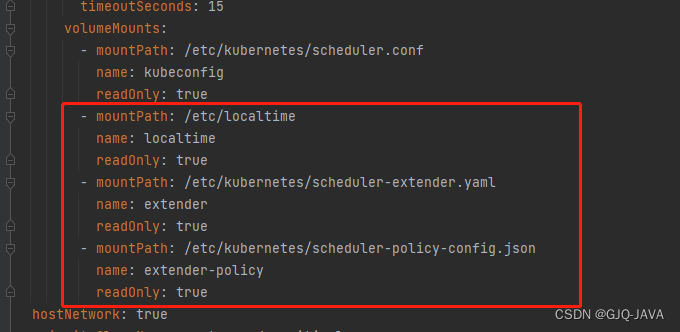

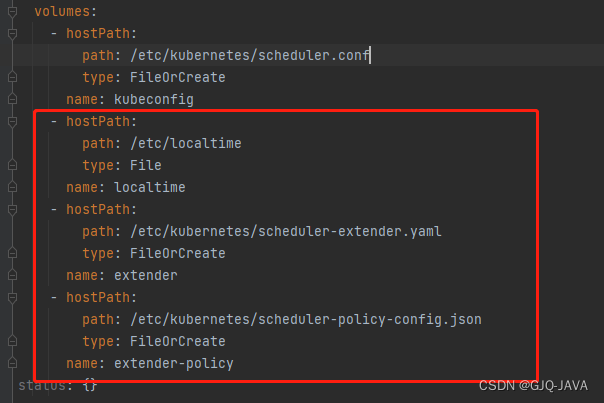

4.3 修改/etc/kubernetes/manifests/kube-scheduler.yaml

修改内容:

vim /etc/kubernetes/manifests/kube-scheduler.yaml

复制如下内容:

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

component: kube-scheduler

tier: control-plane

name: kube-scheduler

namespace: kube-system

spec:

containers:

- command:

- kube-scheduler

- --authentication-kubeconfig=/etc/kubernetes/scheduler.conf

- --authorization-kubeconfig=/etc/kubernetes/scheduler.conf

- --bind-address=0.0.0.0

- --feature-gates=TTLAfterFinished=true,ExpandCSIVolumes=true,CSIStorageCapacity=true,RotateKubeletServerCertificate=true

- --kubeconfig=/etc/kubernetes/scheduler.conf

- --leader-elect=true

- --port=0

- --config=/etc/kubernetes/scheduler-extender.yaml

image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.22.10

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 8

httpGet:

path: /healthz

port: 10259

scheme: HTTPS

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 15

name: kube-scheduler

resources:

requests:

cpu: 100m

startupProbe:

failureThreshold: 24

httpGet:

path: /healthz

port: 10259

scheme: HTTPS

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 15

volumeMounts:

- mountPath: /etc/kubernetes/scheduler.conf

name: kubeconfig

readOnly: true

- mountPath: /etc/localtime

name: localtime

readOnly: true

- mountPath: /etc/kubernetes/scheduler-extender.yaml

name: extender

readOnly: true

- mountPath: /etc/kubernetes/scheduler-policy-config.json

name: extender-policy

readOnly: true

hostNetwork: true

priorityClassName: system-node-critical

securityContext:

seccompProfile:

type: RuntimeDefault

volumes:

- hostPath:

path: /etc/kubernetes/scheduler.conf

type: FileOrCreate

name: kubeconfig

- hostPath:

path: /etc/localtime

type: File

name: localtime

- hostPath:

path: /etc/kubernetes/scheduler-extender.yaml

type: FileOrCreate

name: extender

- hostPath:

path: /etc/kubernetes/scheduler-policy-config.json

type: FileOrCreate

name: extender-policy

status: {}

修改内容入下:

修改完成k8s自动重启,如果没有重启执行 kubectl delete pod -n [podname]

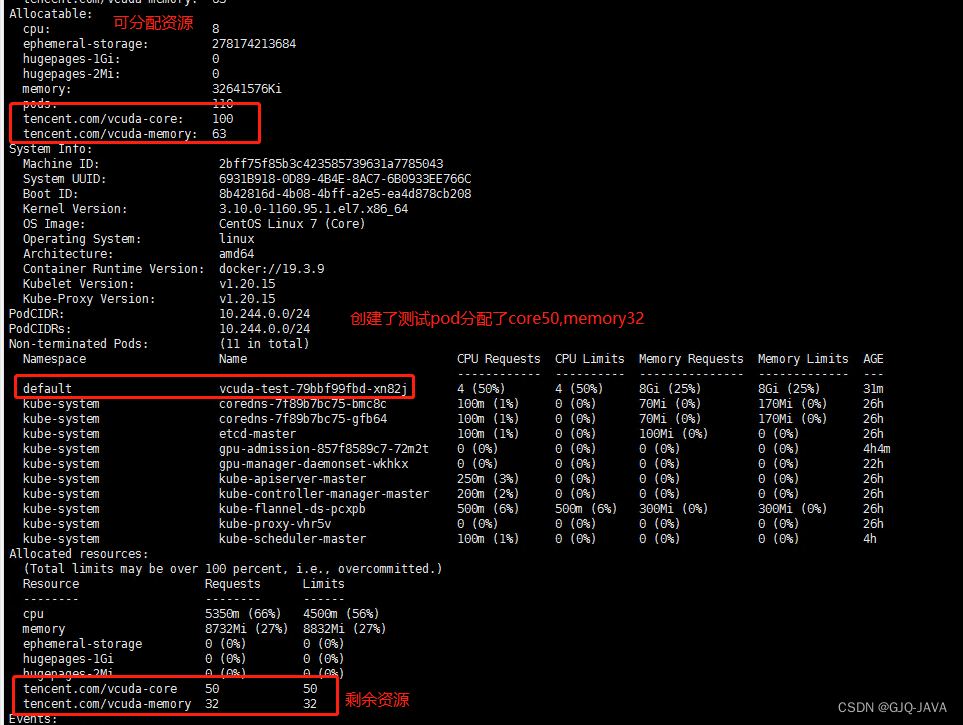

4.1 结果查看

执行命令:

kubectl describe node master[节点名称]

测试

镜像下载:docker pull gaozhenhai/tensorflow-gputest:0.2

创建yaml内容: vim vcuda-test.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

k8s-app: vcuda-test

qcloud-app: vcuda-test

name: vcuda-test

namespace: default

spec:

replicas: 1

selector:

matchLabels:

k8s-app: vcuda-test

template:

metadata:

labels:

k8s-app: vcuda-test

qcloud-app: vcuda-test

spec:

containers:

- command:

- sleep

- 360000s

env:

- name: PATH

value: /usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

image: gaozhenhai/tensorflow-gputest:0.2

imagePullPolicy: IfNotPresent

name: tensorflow-test

resources:

limits:

cpu: "4"

memory: 8Gi

tencent.com/vcuda-core: "50"

tencent.com/vcuda-memory: "32"

requests:

cpu: "4"

memory: 8Gi

tencent.com/vcuda-core: "50"

tencent.com/vcuda-memory: "32"

启动yaml:kubectl apply -f vcuda-test.yaml

进入容器:

kubectl exec -it `kubectl get pods -o name | cut -d '/' -f2` -- bash

执行测试命令:

cd /data/tensorflow/cifar10 && time python cifar10_train.py

查看结果:

执行命令:nvidia-smi pmon -s u -d 1、命令查看GPU资源使用情况

总结

到此vgpu容器层虚拟化全部完成