1. LeNet weight initialization

- I am using torch 1.10.0, where Conv2d's default init is `kaiming_uniform_` with a=sqrt(5).

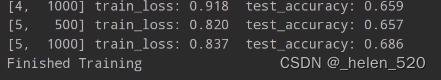

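- I changed torch's default initialization here to a=1; the accuracy comparison is in the logs below.

- The result: after changing the initialization, test accuracy after 5 epochs rose by about 3 points (0.655 to 0.684).

- With a=0 it improved again: the final train loss dropped (0.797 to 0.771) and accuracy rose (0.684 to 0.690). So initialization matters, and this is after only 5 epochs.

- More importantly, the initialization affects the rest of the training process.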
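To make the role of `a` concrete, here is a minimal sketch of how it scales the initial weights. It assumes the leaky-ReLU gain formula that Kaiming initialization uses, gain = sqrt(2 / (1 + a^2)), and that the resulting weight standard deviation is gain / sqrt(fan) for both the normal and the uniform variant. The helper names `leaky_relu_gain` and `kaiming_std` are made up for this illustration and are not part of torch.

```python
import math

def leaky_relu_gain(a):
    """Gain assumed by Kaiming init for a leaky ReLU with negative slope a."""
    return math.sqrt(2.0 / (1.0 + a * a))

def kaiming_std(fan, a):
    """Weight standard deviation: gain / sqrt(fan).

    The uniform variant samples from [-bound, bound] with
    bound = gain * sqrt(3 / fan), which has the same standard deviation.
    """
    return leaky_relu_gain(a) / math.sqrt(fan)

# First conv layer of the LeNet in this post: Conv2d(3, 16, 5)
fan_in = 3 * 5 * 5     # in_channels * kernel_h * kernel_w
fan_out = 16 * 5 * 5   # out_channels * kernel_h * kernel_w

for a in (math.sqrt(5), 1.0, 0.0):
    print(f"a={a:.3f}  gain={leaky_relu_gain(a):.3f}  "
          f"std(fan_in)={kaiming_std(fan_in, a):.4f}  "
          f"std(fan_out)={kaiming_std(fan_out, a):.4f}")
```

The gain grows from about 0.577 (a=sqrt(5)) to 1 (a=1) to about 1.414 (a=0), so each change in these experiments starts the layers with larger weights. a=0 is the value that matches a plain ReLU.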

# Results with the default init, a=sqrt(5)

[1, 500] train_loss: 1.756 test_accuracy: 0.458

[1, 1000] train_loss: 1.434 test_accuracy: 0.515

[2, 500] train_loss: 1.191 test_accuracy: 0.573

[2, 1000] train_loss: 1.173 test_accuracy: 0.600

[3, 500] train_loss: 1.037 test_accuracy: 0.624

[3, 1000] train_loss: 1.017 test_accuracy: 0.626

[4, 500] train_loss: 0.917 test_accuracy: 0.638

[4, 1000] train_loss: 0.916 test_accuracy: 0.645

[5, 500] train_loss: 0.851 test_accuracy: 0.666

[5, 1000] train_loss: 0.839 test_accuracy: 0.655

Finished Training

# After re-initializing the conv2d weights with a=1 (I assumed there was no ReLU)

[1, 500] train_loss: 1.693 test_accuracy: 0.479

[1, 1000] train_loss: 1.397 test_accuracy: 0.538

[2, 500] train_loss: 1.171 test_accuracy: 0.583

[2, 1000] train_loss: 1.110 test_accuracy: 0.612

[3, 500] train_loss: 0.988 test_accuracy: 0.649

[3, 1000] train_loss: 0.966 test_accuracy: 0.658

[4, 500] train_loss: 0.862 test_accuracy: 0.657

[4, 1000] train_loss: 0.872 test_accuracy: 0.684

[5, 500] train_loss: 0.769 test_accuracy: 0.680

[5, 1000] train_loss: 0.797 test_accuracy: 0.684

Finished Training

# My mistake: forward does apply ReLU, so a should be 0. Results:

[1, 500] train_loss: 1.640 test_accuracy: 0.522

[1, 1000] train_loss: 1.338 test_accuracy: 0.554

[2, 500] train_loss: 1.126 test_accuracy: 0.595

[2, 1000] train_loss: 1.074 test_accuracy: 0.638

[3, 500] train_loss: 0.951 test_accuracy: 0.646

[3, 1000] train_loss: 0.935 test_accuracy: 0.652

[4, 500] train_loss: 0.832 test_accuracy: 0.675

[4, 1000] train_loss: 0.844 test_accuracy: 0.679

[5, 500] train_loss: 0.746 test_accuracy: 0.691

[5, 1000] train_loss: 0.771 test_accuracy: 0.690

Finished Training

# Switched to BCELoss, i.e. a multi-label formulation (each sample still has only one label), to remove softmax's dominant role. Results:

[1, 500] train_loss: 0.267 test_accuracy: 0.454

[1, 1000] train_loss: 0.221 test_accuracy: 0.524

[2, 500] train_loss: 0.190 test_accuracy: 0.575

[2, 1000] train_loss: 0.183 test_accuracy: 0.609

[3, 500] train_loss: 0.164 test_accuracy: 0.640

[3, 1000] train_loss: 0.163 test_accuracy: 0.650

[4, 500] train_loss: 0.150 test_accuracy: 0.662

[4, 1000] train_loss: 0.149 test_accuracy: 0.666

[5, 500] train_loss: 0.139 test_accuracy: 0.681

[5, 1000] train_loss: 0.140 test_accuracy: 0.676

Finished Training
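The loss values in the BCE run are on a different scale from the cross-entropy runs, so only the accuracies are comparable. The sketch below compares the two losses on the same logits. It rests on assumptions the notes do not state: a sigmoid is applied to each logit, the target is one-hot, and the loss is averaged over the 10 classes. The function names are illustrative only.

```python
import math

def softmax_cross_entropy(logits, target):
    """Cross-entropy of softmax(logits) against a single class index."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def sigmoid_bce(logits, target):
    """Mean binary cross-entropy over classes with a one-hot target."""
    total = 0.0
    for k, z in enumerate(logits):
        y = 1.0 if k == target else 0.0
        # Numerically stable form of -[y*log(s) + (1-y)*log(1-s)], s = sigmoid(z)
        total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
    return total / len(logits)

# An uninformative prediction over 10 classes (all logits zero)
logits = [0.0] * 10
print(softmax_cross_entropy(logits, 3))  # ln(10), about 2.303
print(sigmoid_bce(logits, 3))            # ln(2), about 0.693
```

Under these assumptions each class is scored independently: nine of the ten per-class terms are easy negatives and the mean is taken over all ten, which is consistent with the much smaller loss numbers logged above.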

- I had not noticed that forward (below) applies ReLU, so a still needs to be changed to 0 and re-tested.

import torch.nn as nn
import torch.nn.functional as F

class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        self.conv1 = nn.Conv2d(3, 16, 5)
        self.pool1 = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(16, 32, 5)
        self.pool2 = nn.MaxPool2d(2, 2)
        self.fc1 = nn.Linear(32*5*5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

        # Re-initialize the weights. Use a=1 when no ReLU follows the layer
        # and a=0 when ReLU follows. This is the a=1 version; forward below
        # does use ReLU, so a=0 is the matching setting.
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, a=1, mode='fan_out')
            elif isinstance(m, nn.Linear):
                nn.init.kaiming_normal_(m.weight, a=1, mode='fan_in')

    def forward(self, x):
        x = F.relu(self.conv1(x))    # input(3, 32, 32) output(16, 28, 28)
        x = self.pool1(x)            # output(16, 14, 14)
        x = F.relu(self.conv2(x))    # output(32, 10, 10)
        x = self.pool2(x)            # output(32, 5, 5)
        x = x.view(-1, 32*5*5)       # output(32*5*5)
        x = F.relu(self.fc1(x))      # output(120)
        x = F.relu(self.fc2(x))      # output(84)
        x = self.fc3(x)              # output(10)
        return x
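The shape comments in forward can be checked with the standard output-size formula for convolution and pooling, floor((size + 2*padding - kernel) / stride) + 1. A small sketch follows; `conv_out` and `pool_out` are helper names invented for this check.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, kernel, stride):
    """Spatial output size of a max-pool along one dimension."""
    return (size - kernel) // stride + 1

size = 32                      # CIFAR-sized input: (3, 32, 32)
size = conv_out(size, 5)       # conv1, 5x5 kernel   -> 28
size = pool_out(size, 2, 2)    # pool1, 2x2 stride 2 -> 14
size = conv_out(size, 5)       # conv2, 5x5 kernel   -> 10
size = pool_out(size, 2, 2)    # pool2, 2x2 stride 2 -> 5

flat = 32 * size * size        # channels * height * width fed to fc1
print(size, flat)              # 5 800
```

This is where the 32*5*5 input size of fc1 comes from.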

The uploader's result: 68.6%

2. AlexNet weight initialization

- After changing the conv2d and linear init to a=0 (these layers are all followed by ReLU), accuracy went up here as well.

[epoch 1] train_loss: 1.356 val_accuracy: 0.429

[epoch 2] train_loss: 1.187 val_accuracy: 0.500

[epoch 3] train_loss: 1.095 val_accuracy: 0.544

[epoch 4] train_loss: 1.037 val_accuracy: 0.593

[epoch 5] train_loss: 0.993 val_accuracy: 0.577

[epoch 6] train_loss: 0.923 val_accuracy: 0.618

[epoch 7] train_loss: 0.908 val_accuracy: 0.640

[epoch 8] train_loss: 0.878 val_accuracy: 0.676

[epoch 9] train_loss: 0.847 val_accuracy: 0.646

[epoch 10] train_loss: 0.831 val_accuracy: 0.670

Finished Training

# After changing the initialization to a=0

[epoch 1] train_loss: 1.350 val_accuracy: 0.486

[epoch 2] train_loss: 1.163 val_accuracy: 0.508

[epoch 3] train_loss: 1.086 val_accuracy: 0.571

[epoch 4] train_loss: 1.012 val_accuracy: 0.640

[epoch 5] train_loss: 0.955 val_accuracy: 0.651

[epoch 6] train_loss: 0.920 val_accuracy: 0.657

[epoch 7] train_loss: 0.907 val_accuracy: 0.684

[epoch 8] train_loss: 0.847 val_accuracy: 0.690

[epoch 9] train_loss: 0.831 val_accuracy: 0.670

[epoch 10] train_loss: 0.805 val_accuracy: 0.695

Finished Training

# After switching to BCELoss, acc improved a little more

[epoch 1] train_loss: 0.449 val_accuracy: 0.522

[epoch 2] train_loss: 0.400 val_accuracy: 0.538

[epoch 3] train_loss: 0.357 val_accuracy: 0.629

[epoch 4] train_loss: 0.341 val_accuracy: 0.618

[epoch 5] train_loss: 0.319 val_accuracy: 0.613

[epoch 6] train_loss: 0.307 val_accuracy: 0.670

[epoch 7] train_loss: 0.321 val_accuracy: 0.648

[epoch 8] train_loss: 0.285 val_accuracy: 0.681

[epoch 9] train_loss: 0.286 val_accuracy: 0.692

[epoch 10] train_loss: 0.266 val_accuracy: 0.703

Finished Training
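To compare runs without reading the logs by eye, the log lines can be parsed into numbers. This is only a sketch: the regular expression assumes the exact `[epoch N] train_loss: x val_accuracy: y` format shown above, only the last two lines of each AlexNet run are copied in, and the label "first run" is my own name for the first, unlabeled log.

```python
import re

LINE = re.compile(r"\[epoch (\d+)\] train_loss: ([\d.]+) val_accuracy: ([\d.]+)")

def parse_log(text):
    """Return a list of (epoch, train_loss, val_accuracy) tuples."""
    return [(int(e), float(loss), float(acc)) for e, loss, acc in LINE.findall(text)]

# Last two lines of each AlexNet run, copied from the logs in this post
runs = {
    "first run": "[epoch 9] train_loss: 0.847 val_accuracy: 0.646\n"
                 "[epoch 10] train_loss: 0.831 val_accuracy: 0.670",
    "a=0": "[epoch 9] train_loss: 0.831 val_accuracy: 0.670\n"
           "[epoch 10] train_loss: 0.805 val_accuracy: 0.695",
    "a=0 + BCE": "[epoch 9] train_loss: 0.286 val_accuracy: 0.692\n"
                 "[epoch 10] train_loss: 0.266 val_accuracy: 0.703",
}

final_acc = {name: parse_log(text)[-1][2] for name, text in runs.items()}
baseline = final_acc["first run"]
for name, acc in final_acc.items():
    delta = round((acc - baseline) * 100, 1)   # difference in accuracy points
    print(f"{name}: final val_accuracy={acc:.3f} ({delta:+.1f} points vs first run)")
```

These are single runs, so differences of a point or so may be within run-to-run noise.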