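Contents

- Overview

- Logistic regression theory

- Mathematical derivation

- Binary classification

- Multi-class classification

- Code implementation

- Remarks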

概述

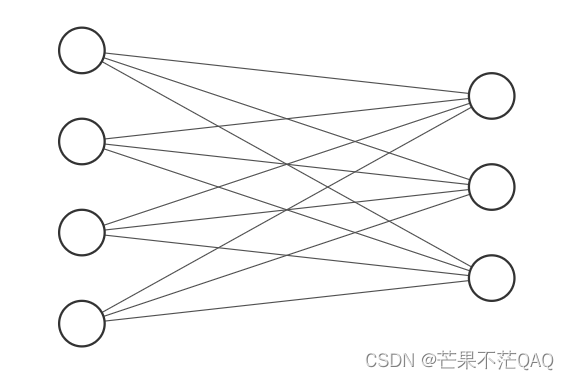

本文使用梯度下降法对逻辑回归进行训练,使用类似于神经网络的方法进行前向传播与反向更新,使用数学公式详细推导前向传播与反向求导过程,包括二分类和多分类问题,最后用python代码实现鸢尾花分类(不使用算法库)

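Logistic regression theory

Logistic regression performs classification with an approach similar to linear regression and is most often used for binary classification. It is a log-linear model, given by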

$$P(Y=1\mid x)=\frac{\exp(w\cdot x+b)}{1+\exp(w\cdot x+b)}$$

$$P(Y=0\mid x)=\frac{1}{1+\exp(w\cdot x+b)}$$

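$Y=1$ denotes belonging to the class and $Y=0$ denotes not belonging to it, which is equivalent to belonging to the other class. The two probabilities sum to 1, so they form a valid probability distribution.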
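As a quick sanity check of the two formulas above, the following sketch evaluates both probabilities for one sample and confirms that they sum to 1. The weights, bias, and feature values are made-up numbers chosen only for illustration, and the helper names are hypothetical.

```python
import math

def prob_positive(w, x, b):
    """P(Y=1|x) = exp(w·x + b) / (1 + exp(w·x + b))."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return math.exp(z) / (1.0 + math.exp(z))

def prob_negative(w, x, b):
    """P(Y=0|x) = 1 / (1 + exp(w·x + b))."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(z))

# Hypothetical parameters and sample (four features), for illustration only.
w = [0.5, -0.25, 0.1, 0.3]
x = [5.1, 3.5, 1.4, 0.2]
b = -1.0

p1 = prob_positive(w, x, b)
p0 = prob_negative(w, x, b)
print(p1, p0, p1 + p0)
```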

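Because $w\cdot x+b$ takes values in $(-\infty, +\infty)$, it cannot be read directly as a probability, so it has to be mapped into the interval $[0,1]$. The formulas above are the form given in the book; in practice the sigmoid function is usually used for this mapping. The two are equivalent, as dividing the numerator and denominator of $P(Y=1\mid x)$ by $\exp(w\cdot x+b)$ shows. The sigmoid function is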

$$y=\frac{1}{1+\exp(-x)}$$

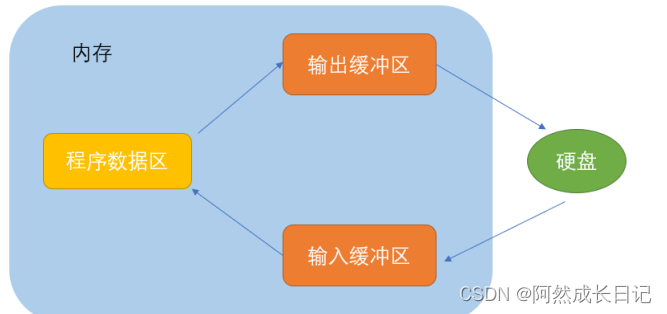

函数图像为:
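The equivalence between the sigmoid form and the formula for $P(Y=1\mid x)$ can be checked numerically. The sketch below is only an illustration: `book_form` is a hypothetical helper name, and the branch on the sign of `z` is a common way to avoid overflow in `exp`, not something the article itself prescribes.

```python
import math

def sigmoid(z):
    """1 / (1 + exp(-z)), evaluated in a way that avoids overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # z < 0, so exp(z) is at most 1
    return ez / (1.0 + ez)

def book_form(z):
    """exp(z) / (1 + exp(z)), the form of P(Y=1|x) with z = w·x + b."""
    return math.exp(z) / (1.0 + math.exp(z))

# The two forms agree up to floating-point rounding.
max_gap = max(abs(sigmoid(z) - book_form(z)) for z in (-5.0, -1.0, 0.0, 1.0, 5.0))
print(max_gap)
print(sigmoid(0.0))  # 0.5
```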

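Mathematical derivation

Binary classification

Gradient descent is used here to update the parameters w and b, so the derivatives of the loss with respect to w and b are needed.

- Forward pass first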

Assume the input sample has four features: $x=[x_1,x_2,x_3,x_4]$;

Applying the parameters $w$ and $b$ gives: $z=w\cdot x+b=w_1x_1+w_2x_2+w_3x_3+w_4x_4+b$;

Passing $z$ through the sigmoid function then gives the predicted probability:

$$\hat y=\mathrm{sigmoid}(z)=\frac{1}{1+\exp(-z)}$$

The loss is computed with the binary cross-entropy function:

$$L(\hat y, y)=-\left[y\cdot\log\hat y+(1-y)\cdot\log(1-\hat y)\right]$$

Note: for multiple samples, simply take the mean of the per-sample losses;
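The forward pass and the loss can be traced with a few lines of plain Python. This is a minimal sketch under assumed values: the parameters and the two-sample batch are invented for illustration, and the function names are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(w, b, x):
    """z = w·x + b, then y_hat = sigmoid(z)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)

def bce_loss(y_hat, y):
    """L = -[y log(y_hat) + (1 - y) log(1 - y_hat)] for one sample."""
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))

# Hypothetical parameters and a two-sample batch, for illustration only.
w = [0.1, -0.2, 0.3, 0.4]
b = 0.0
batch_x = [[5.1, 3.5, 1.4, 0.2], [6.4, 3.2, 4.5, 1.5]]
batch_y = [0, 1]

losses = [bce_loss(forward(w, b, x), y) for x, y in zip(batch_x, batch_y)]
mean_loss = sum(losses) / len(losses)  # average over the batch
print(losses, mean_loss)
```

A prediction of exactly 0.5 for a positive sample costs $\log 2$, which is a handy reference value when reading training logs.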

- Backward pass

In the backward pass, the chain rule gives the gradients of the parameters w and b, which are then updated step by step.

$$\begin{aligned} \frac{\partial L}{\partial \hat y}&=-\left(\frac{y}{\hat y}-\frac{1-y}{1-\hat y}\right)\\ &=-\frac{y(1-\hat y)-\hat y(1-y)}{\hat y(1-\hat y)}\\ &=-\frac{y-y\hat y-\hat y+y\hat y}{\hat y(1-\hat y)}\\ &=\frac{\hat y-y}{\hat y(1-\hat y)} \end{aligned}$$

$$\begin{aligned} \frac{\partial \hat y}{\partial z}&=\frac{\partial (1+e^{-z})^{-1}}{\partial z}\\ &=\frac{e^{-z}}{(1+e^{-z})^2}\\ &=\frac{1}{1+e^{-z}}\cdot \frac{e^{-z}}{1+e^{-z}}\\ &=\mathrm{sigmoid}(z)\cdot (1-\mathrm{sigmoid}(z))\\ &=\hat y\cdot (1-\hat y) \end{aligned}$$

$$\frac{\partial z}{\partial w}=x,\quad \frac{\partial z}{\partial b}=1$$

So the parameter gradients are

$$\frac{\partial L}{\partial w}=\frac{\partial L}{\partial \hat y}\frac{\partial \hat y}{\partial z}\frac{\partial z}{\partial w}=\frac{\hat y-y}{\hat y(1-\hat y)}\cdot \hat y(1-\hat y)\cdot x=x\cdot(\hat y-y)$$

$$\frac{\partial L}{\partial b}=\frac{\partial L}{\partial \hat y}\frac{\partial \hat y}{\partial z}\frac{\partial z}{\partial b}=\frac{\hat y-y}{\hat y(1-\hat y)}\cdot \hat y(1-\hat y)\cdot 1=\hat y-y$$
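One way to gain confidence in the two gradient formulas is to compare them with central finite differences of the loss. The sketch below does this for a single sample; the parameters, the sample, and the step size `eps` are assumptions picked for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    y_hat = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))

def analytic_grads(w, b, x, y):
    """dL/dw = x * (y_hat - y), dL/db = y_hat - y."""
    y_hat = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [xi * (y_hat - y) for xi in x], y_hat - y

def numeric_grads(w, b, x, y, eps=1e-6):
    """Central finite differences, used only to verify the formulas."""
    grad_w = []
    for k in range(len(w)):
        w_plus = w[:k] + [w[k] + eps] + w[k + 1:]
        w_minus = w[:k] + [w[k] - eps] + w[k + 1:]
        grad_w.append((loss(w_plus, b, x, y) - loss(w_minus, b, x, y)) / (2 * eps))
    grad_b = (loss(w, b + eps, x, y) - loss(w, b - eps, x, y)) / (2 * eps)
    return grad_w, grad_b

# Hypothetical parameters and sample.
w, b = [0.1, -0.2, 0.3, 0.4], 0.05
x, y = [5.1, 3.5, 1.4, 0.2], 1

gw_a, gb_a = analytic_grads(w, b, x, y)
gw_n, gb_n = numeric_grads(w, b, x, y)
print(gw_a, gb_a)
print(gw_n, gb_n)
```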

Finally, apply the gradient update, where $lr$ is the learning rate

$$w_t = w_{t-1}-lr\cdot \frac{\partial L}{\partial w}$$

$$b_t = b_{t-1}-lr\cdot \frac{\partial L}{\partial b}$$
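Putting the forward pass, the gradients, and the update rule together gives a complete training loop. The following is a small sketch on a made-up, linearly separable dataset with two features; the data, the learning rate, and the number of steps are assumptions, and gradients are averaged over the batch as described in the note on multiple samples.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def mean_loss(w, b, xs, ys):
    total = 0.0
    for x, y in zip(xs, ys):
        p = predict(w, b, x)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(xs)

def gd_step(w, b, xs, ys, lr):
    """One update: w <- w - lr * mean(x * (y_hat - y)), b <- b - lr * mean(y_hat - y)."""
    n = len(xs)
    grad_w = [0.0] * len(w)
    grad_b = 0.0
    for x, y in zip(xs, ys):
        err = predict(w, b, x) - y
        grad_w = [g + xi * err / n for g, xi in zip(grad_w, x)]
        grad_b += err / n
    new_w = [wi - lr * g for wi, g in zip(w, grad_w)]
    return new_w, b - lr * grad_b

# Toy data, made up for this sketch.
xs = [[1.0, 2.0], [1.5, 1.8], [5.0, 8.0], [6.0, 9.0]]
ys = [0, 0, 1, 1]
w, b, lr = [0.0, 0.0], 0.0, 0.1

loss_before = mean_loss(w, b, xs, ys)
for _ in range(200):
    w, b = gd_step(w, b, xs, ys, lr)
loss_after = mean_loss(w, b, xs, ys)
print(loss_before, loss_after)
```

With all parameters at zero every prediction is 0.5, so the starting loss is $\log 2$; the loop should bring it below that value.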

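Multi-class classification

There are two common approaches to multi-class problems: some methods combine several binary logistic regression models, while others apply a softmax function at the end to obtain the probabilities of all classes at once and pick the class with the highest probability as the prediction. This article uses the latter and assumes three output classes.

The only differences from the binary case are the final probability-normalization layer and the loss function.

- Forward pass first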

Assume the input sample has four features: $x=[x_1,x_2,x_3,x_4]$;

Here the parameter $w$ has shape (4, 3) and produces three output values. Applying $w$ gives:

$$w=\begin{bmatrix} w_{11} & w_{21} & w_{31} \\ w_{12} & w_{22} & w_{32} \\ w_{13} & w_{23} & w_{33} \\ w_{14} & w_{24} & w_{34} \end{bmatrix}$$

$$z=xw+b=[x_1,x_2,x_3,x_4] \begin{bmatrix} w_{11} & w_{21} & w_{31} \\ w_{12} & w_{22} & w_{32} \\ w_{13} & w_{23} & w_{33} \\ w_{14} & w_{24} & w_{34} \end{bmatrix}+[b_1,b_2,b_3]=[z_1,z_2,z_3]$$

where

$z_1=w_1\cdot x+b_1=w_{11}x_1+w_{12}x_2+w_{13}x_3+w_{14}x_4+b_1$;

$z_2=w_2\cdot x+b_2=w_{21}x_1+w_{22}x_2+w_{23}x_3+w_{24}x_4+b_2$;

$z_3=w_3\cdot x+b_3=w_{31}x_1+w_{32}x_2+w_{33}x_3+w_{34}x_4+b_3$;

Passing these through the softmax function then gives the predicted probabilities:

$$\hat y_1=\mathrm{softmax}(z_1)=\frac{\exp(z_1)}{\sum_k \exp(z_k)}$$

$$\hat y_2=\mathrm{softmax}(z_2)=\frac{\exp(z_2)}{\sum_k \exp(z_k)}$$

$$\hat y_3=\mathrm{softmax}(z_3)=\frac{\exp(z_3)}{\sum_k \exp(z_k)}$$
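The multi-class forward pass can be traced for one sample as follows. This is a sketch under assumed values: the (4, 3) weight matrix, bias, and sample are invented, and subtracting `max(z)` inside `softmax` is a common stabilisation trick that leaves the result unchanged rather than something the derivation requires.

```python
import math

def linear(x, w, b):
    """z_j = sum_k x_k * w[k][j] + b_j, with w stored as a (4, 3) nested list."""
    return [sum(x[k] * w[k][j] for k in range(len(x))) + b[j] for j in range(len(b))]

def softmax(z):
    """exp(z_j) / sum_k exp(z_k); shifting by max(z) avoids overflow."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical weights (4 features x 3 classes), bias, and sample.
w = [[0.1, 0.2, -0.1],
     [0.0, -0.3, 0.2],
     [0.5, 0.1, -0.4],
     [-0.2, 0.3, 0.1]]
b = [0.0, 0.1, -0.1]
x = [5.1, 3.5, 1.4, 0.2]

z = linear(x, w, b)
y_hat = softmax(z)
# The predicted class is the index with the largest probability.
predicted_class = max(range(len(y_hat)), key=lambda j: y_hat[j])
print(z, y_hat, predicted_class)
```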

The loss is computed with the multi-class cross-entropy function:

$$L(\hat y, y)=-\sum_i y_i\cdot \log \hat y_i$$

Note 1: here $y_i$ indicates whether the sample belongs to class $i$. For example, if a sample belongs to the third class, then $y=[y_1,y_2,y_3]=[0,0,1]$ and

$$L(\hat y, y)=-\sum_i y_i\cdot \log \hat y_i=-(0\cdot \log\hat y_1+0\cdot \log\hat y_2+1\cdot \log\hat y_3)$$

Note 2: for multiple samples, simply take the mean of the per-sample losses;
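The one-hot encoding and the loss from the notes above can be written out directly. In this sketch the predicted probabilities and labels are made up, class indices are 0-based (so the third class is index 2), and the helper names are hypothetical.

```python
import math

def one_hot(label, n_classes):
    """Class index (0-based) -> one-hot list, e.g. 2 -> [0, 0, 1]."""
    return [1 if j == label else 0 for j in range(n_classes)]

def cross_entropy(y_hat, y):
    """L = -sum_i y_i * log(y_hat_i); terms with y_i == 0 contribute nothing."""
    return -sum(yi * math.log(pi) for yi, pi in zip(y, y_hat) if yi != 0)

# Hypothetical predicted probabilities for two samples and their labels.
preds = [[0.1, 0.2, 0.7], [0.6, 0.3, 0.1]]
labels = [2, 0]

losses = [cross_entropy(p, one_hot(l, 3)) for p, l in zip(preds, labels)]
mean_loss = sum(losses) / len(losses)  # average over the batch
print(losses, mean_loss)
```

Because only the true class has $y_i=1$, each per-sample loss reduces to the negative log of the probability assigned to that class.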

- Backward pass

In the backward pass, the chain rule gives the gradients of the parameters w and b, which are then updated step by step.

$$\begin{aligned} \frac{\partial L}{\partial \hat y}&=-\frac{y}{\hat y}\\ &=\left[-\frac{y_1}{\hat y_1},-\frac{y_2}{\hat y_2},-\frac{y_3}{\hat y_3}\right] \end{aligned}$$

The backward pass through softmax is a special case: its input has several components $(z_1,z_2,z_3)$, and the derivative differs depending on which $z$ it is taken with respect to. For $\hat y_i$ and $z_j$, the cases $i=j$ and $i\ne j$ have to be handled separately.

When $i=j$:

$$\begin{aligned} \frac{\partial \hat y_i}{\partial z_j}&=\frac{\partial \hat y_i}{\partial z_i}\\ &=\frac{e^{z_i}\sum_k e^{z_k}-e^{z_i}e^{z_i}}{(\sum_k e^{z_k})^2}\\ &=\frac{e^{z_i}(\sum_k e^{z_k}-e^{z_i})}{(\sum_k e^{z_k})^2}\\ &=\frac{e^{z_i}}{\sum_k e^{z_k}}\cdot\frac{\sum_k e^{z_k}-e^{z_i}}{\sum_k e^{z_k}}\\ &=\mathrm{softmax}(z_i)\cdot (1-\mathrm{softmax}(z_i))\\ &=\hat y_i\cdot (1-\hat y_i) \end{aligned}$$

When $i\ne j$:

$$\begin{aligned} \frac{\partial \hat y_i}{\partial z_j}&=\frac{-e^{z_i}e^{z_j}}{(\sum_k e^{z_k})^2}\\ &=-\mathrm{softmax}(z_i)\cdot \mathrm{softmax}(z_j)\\ &=-\hat y_i\cdot\hat y_j \end{aligned}$$

Combining the two cases gives:

$$\frac{\partial \hat y}{\partial z}= \begin{bmatrix} \frac{\partial \hat y_1}{\partial z_1} & \frac{\partial \hat y_1}{\partial z_2} & \frac{\partial \hat y_1}{\partial z_3} \\ \frac{\partial \hat y_2}{\partial z_1} & \frac{\partial \hat y_2}{\partial z_2} & \frac{\partial \hat y_2}{\partial z_3} \\ \frac{\partial \hat y_3}{\partial z_1} & \frac{\partial \hat y_3}{\partial z_2} & \frac{\partial \hat y_3}{\partial z_3} \end{bmatrix}= \begin{bmatrix} \hat y_1\cdot (1-\hat y_1) & -\hat y_1\cdot \hat y_2 & -\hat y_1\cdot \hat y_3 \\ -\hat y_2\cdot \hat y_1 & \hat y_2\cdot (1-\hat y_2) & -\hat y_2\cdot \hat y_3 \\ -\hat y_3\cdot \hat y_1 & -\hat y_3\cdot \hat y_2 & \hat y_3\cdot (1-\hat y_3) \end{bmatrix}$$
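The closed-form softmax Jacobian can be compared against finite differences as a check on the two cases. The logits below are arbitrary example values, and the finite-difference helper is only a verification aid, not part of the training procedure.

```python
import math

def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_jacobian(y_hat):
    """J[i][j] = d y_hat_i / d z_j: y_hat_i (1 - y_hat_i) if i == j, else -y_hat_i y_hat_j."""
    n = len(y_hat)
    return [[y_hat[i] * (1 - y_hat[i]) if i == j else -y_hat[i] * y_hat[j]
             for j in range(n)] for i in range(n)]

def numeric_jacobian(z, eps=1e-6):
    """Central finite differences, used only to verify the closed form."""
    n = len(z)
    jac = [[0.0] * n for _ in range(n)]
    for j in range(n):
        z_plus = z[:j] + [z[j] + eps] + z[j + 1:]
        z_minus = z[:j] + [z[j] - eps] + z[j + 1:]
        up, down = softmax(z_plus), softmax(z_minus)
        for i in range(n):
            jac[i][j] = (up[i] - down[i]) / (2 * eps)
    return jac

z = [1.0, 0.5, -0.5]  # hypothetical logits
J = softmax_jacobian(softmax(z))
J_num = numeric_jacobian(z)
max_diff = max(abs(J[i][j] - J_num[i][j]) for i in range(3) for j in range(3))
print(max_diff)
```

Each column of the Jacobian sums to zero, which reflects the fact that the softmax outputs always sum to 1.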

Multiplying row $i$ of this matrix by $\frac{\partial L}{\partial \hat y_i}=-\frac{y_i}{\hat y_i}$ gives the chain-rule terms, where entry $(i,j)$ is $\frac{\partial L}{\partial \hat y_i}\frac{\partial \hat y_i}{\partial z_j}$:

$$\frac{\partial L}{\partial z}= \begin{bmatrix} -y_1\cdot (1-\hat y_1) & y_1\cdot \hat y_2 & y_1\cdot \hat y_3 \\ y_2\cdot \hat y_1 & -y_2\cdot (1-\hat y_2) & y_2\cdot \hat y_3 \\ y_3\cdot \hat y_1 & y_3\cdot \hat y_2 & -y_3\cdot (1-\hat y_3) \end{bmatrix}$$

Next,

$$\frac{\partial z}{\partial w}= \begin{bmatrix} x_1 & x_1 & x_1 \\ x_2 & x_2 & x_2 \\ x_3 & x_3 & x_3 \\ x_4 & x_4 & x_4 \end{bmatrix}$$

$$\frac{\partial z}{\partial b}=[1, 1, 1]$$

So the final gradients are

$$\begin{aligned}\frac{\partial L}{\partial w}&=\frac{\partial L}{\partial z}\frac{\partial z}{\partial w}\\ &=\begin{bmatrix} x_1 & x_1 & x_1 \\ x_2 & x_2 & x_2 \\ x_3 & x_3 & x_3 \\ x_4 & x_4 & x_4 \end{bmatrix} \begin{bmatrix} -y_1\cdot (1-\hat y_1) & y_1\cdot \hat y_2 & y_1\cdot \hat y_3 \\ y_2\cdot \hat y_1 & -y_2\cdot (1-\hat y_2) & y_2\cdot \hat y_3 \\ y_3\cdot \hat y_1 & y_3\cdot \hat y_2 & -y_3\cdot (1-\hat y_3) \end{bmatrix} \end{aligned}$$

$$\begin{aligned}\frac{\partial L}{\partial b}&=\frac{\partial L}{\partial z}\frac{\partial z}{\partial b}\\ &=[1,1,1] \begin{bmatrix} -y_1\cdot (1-\hat y_1) & y_1\cdot \hat y_2 & y_1\cdot \hat y_3 \\ y_2\cdot \hat y_1 & -y_2\cdot (1-\hat y_2) & y_2\cdot \hat y_3 \\ y_3\cdot \hat y_1 & y_3\cdot \hat y_2 & -y_3\cdot (1-\hat y_3) \end{bmatrix} \end{aligned}$$

Because $y_1+y_2+y_3=1$, column $j$ of the second matrix sums to $\hat y_j-y_j$, so these products reduce to $\frac{\partial L}{\partial w}=x^{T}(\hat y-y)$ and $\frac{\partial L}{\partial b}=\hat y-y$, the same form as in the binary case.
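The matrix products above can be evaluated by hand for one sample to see what they produce. The sketch below builds the 3x3 matrix of chain-rule terms and multiplies it by the repeated-feature matrix and by $[1,1,1]$; the sample, label, and predicted probabilities are invented for illustration, and the function names are hypothetical.

```python
def term_matrix(y, y_hat):
    """G[i][j]: -y_i * (1 - y_hat_i) on the diagonal, y_i * y_hat_j elsewhere."""
    n = len(y)
    return [[-y[i] * (1 - y_hat[i]) if i == j else y[i] * y_hat[j]
             for j in range(n)] for i in range(n)]

def gradients(x, y, y_hat):
    G = term_matrix(y, y_hat)
    n = len(y)
    # [1, 1, 1] times G: sum each column over the row index i.
    grad_b = [sum(G[i][j] for i in range(n)) for j in range(n)]
    # Row k of the repeated-feature matrix is [x_k, x_k, x_k],
    # so entry (k, j) of the product is x_k times the j-th column sum.
    grad_w = [[sum(xk * G[i][j] for i in range(n)) for j in range(n)] for xk in x]
    return grad_w, grad_b

# Hypothetical sample, one-hot label (third class), and predicted probabilities.
x = [5.1, 3.5, 1.4, 0.2]
y = [0, 0, 1]
y_hat = [0.2, 0.3, 0.5]

grad_w, grad_b = gradients(x, y, y_hat)
print(grad_b)
print(grad_w)
```

For this sample the bias gradient comes out as the difference between the predicted probabilities and the one-hot label, and each row of the weight gradient is that difference scaled by one feature.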

Finally, apply the gradient update

$$w_t = w_{t-1}-lr\cdot \frac{\partial L}{\partial w}$$

$$b_t = b_{t-1}-lr\cdot \frac{\partial L}{\partial b}$$

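Code implementation

A custom logistic regression class is defined here. The parameters w and b are stored as NumPy arrays, softmax and sigmoid are implemented by hand, and the backward-pass gradients are computed and applied following the formulas above.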

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
import numpy as np


class Logistic_Regression:
    def __init__(self, optimizer='GD', lr=0.001, max_iterations=1000):
        self.optimizer = optimizer
        self.lr = lr
        self.max_iterations = max_iterations

    def fit(self, input, label, input_test, label_test, n_target=2):
        self.n_target = n_target
        # Multi-class: use softmax
        if self.n_target > 2:
            self.weights = np.random.normal(0, 0.1, (input.shape[1], self.n_target))
            self.bias = np.zeros(self.n_target)
            # One-hot encode the integer labels, shape (n_samples, n_target)
            label_expand = np.eye(self.n_target)[label]
            # Solve with gradient descent
            if self.optimizer == 'GD':
                for iteration in range(self.max_iterations):
                    pred = np.dot(input, self.weights) + self.bias
                    pred = self.softmax(pred)
                    accuracy = self.accuracy(pred, label)
                    loss = self.cross_entropy_multi(pred, label)
                    print(f'{iteration}, accuracy: {accuracy}, loss:{loss}')
                    # Per-sample matrix of chain-rule terms: entry (i, j) is
                    # -y_i * (1 - y_hat_j) if i == j, else y_i * y_hat_j
                    softmax_grad = []
                    for sample in range(label_expand.shape[0]):
                        softmax_grad.append([
                            [-label_expand[sample][i] * (1 - pred[sample][j]) if i == j
                             else label_expand[sample][i] * pred[sample][j]
                             for j in range(self.n_target)]
                            for i in range(self.n_target)])
                    softmax_grad = np.array(softmax_grad)
                    # Repeat each feature n_target times: (n_samples, n_features, n_target)
                    input_repeat = np.expand_dims(input, axis=-1).repeat(self.n_target, axis=-1)
                    w_grad = np.matmul(input_repeat, softmax_grad).mean(axis=0)
                    # Sum over i (axis=1) within each sample, then average over samples
                    bias_grad = softmax_grad.sum(axis=1).mean(axis=0)
                    self.weights -= self.lr * w_grad
                    self.bias -= self.lr * bias_grad
                    if (iteration + 1) % 500 == 0:
                        test_accuracy = self.test(input_test, label_test)
                        print(f'{iteration + 1}, test accuracy: {test_accuracy}')
        # Binary: use sigmoid
        else:
            self.weights = np.random.normal(0, 0.1, (input.shape[1]))
            self.bias = 0
            # Solve with gradient descent
            if self.optimizer == 'GD':
                for iteration in range(self.max_iterations):
                    pred = np.dot(input, self.weights) + self.bias
                    pred = self.sigmoid(pred)  # pred is the probability that the label is 1
                    pred_class = (pred > 0.5) + 0
                    accuracy = self.accuracy(pred_class, label)
                    loss = self.cross_entropy_binary(pred, label)
                    print(f'{iteration}, accuracy: {accuracy}, loss:{loss}')
                    w_grad = (1 / input.shape[0]) * np.matmul(input.T, pred - label)
                    bias_grad = (pred - label).mean()
                    self.weights -= self.lr * w_grad
                    self.bias -= self.lr * bias_grad
                    if (iteration + 1) % 10 == 0:
                        test_accuracy = self.test(input_test, label_test)
                        print(f'{iteration + 1}, test accuracy: {test_accuracy}')
        return

    def test(self, input_test, label_test):
        pred = np.dot(input_test, self.weights) + self.bias
        if self.n_target > 2:
            pred = self.softmax(pred)
        else:
            pred = self.sigmoid(pred)  # pred is the probability that the label is 1
            pred = (pred > 0.5) + 0
        accuracy = self.accuracy(pred, label_test)
        return accuracy

    def softmax(self, x):
        # Subtract the row-wise max for numerical stability; the result is unchanged
        exp_x = np.exp(x - x.max(axis=1, keepdims=True))
        return exp_x / exp_x.sum(axis=1, keepdims=True)

    def sigmoid(self, x):
        return 1 / (1 + np.exp(-x))

    def cross_entropy_multi(self, pred, label):
        # -log of the probability assigned to the true class of each sample
        loss = np.log(pred[range(pred.shape[0]), label])
        return -loss.mean()

    def cross_entropy_binary(self, pred, label):
        loss = label * np.log(pred) + (1 - label) * np.log(1 - pred)
        return -loss.mean()

    def accuracy(self, pred, label):
        # For 2-D input, take the most probable class of each row first
        if len(pred.shape) != 1:
            pred = np.argmax(pred, axis=-1)
        return sum(pred == label) / pred.shape[0]


if __name__ == '__main__':
    iris = load_iris()
    X = iris.data
    y = iris.target
    print(X.shape)
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.15, random_state=420)
    # 150 samples in total: 50 each of class 1, class 2 and class 3.
    # To test binary classification, use only the first 100 samples:
    # X_train, X_test, y_train, y_test = train_test_split(X[:100], y[:100], test_size=0.15, random_state=420)
    LR = Logistic_Regression(optimizer='GD', lr=0.5, max_iterations=5000)
    LR.fit(X_train, y_train, X_test, y_test, n_target=3)
```
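The per-sample loop in the multi-class branch follows the derivation step by step, but the same gradients can also be obtained in vectorized form from $\hat y-y$. The sketch below compares the two on random data; it is an illustration rather than a replacement for the code above, and the function names, array shapes, and random inputs are assumptions.

```python
import numpy as np

def grads_loop(inputs, labels, pred):
    """Per-sample matrices of chain-rule terms, following the derivation."""
    n, c = pred.shape
    onehot = np.eye(c)[labels]
    G = np.empty((n, c, c))
    for s in range(n):
        for i in range(c):
            for j in range(c):
                if i == j:
                    G[s, i, j] = -onehot[s, i] * (1 - pred[s, j])
                else:
                    G[s, i, j] = onehot[s, i] * pred[s, j]
    grad_z = G.sum(axis=1)  # (n, c): column sums within each sample
    w_grad = (inputs[:, :, None] * grad_z[:, None, :]).mean(axis=0)
    return w_grad, grad_z.mean(axis=0)

def grads_vectorized(inputs, labels, pred):
    """Same gradients computed from y_hat - y directly."""
    n, c = pred.shape
    grad_z = pred - np.eye(c)[labels]
    return inputs.T @ grad_z / n, grad_z.mean(axis=0)

# Random stand-in data: 6 samples, 4 features, 3 classes.
rng = np.random.default_rng(0)
inputs = rng.normal(size=(6, 4))
labels = rng.integers(0, 3, size=6)
logits = rng.normal(size=(6, 3))
pred = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)

w_loop, b_loop = grads_loop(inputs, labels, pred)
w_vec, b_vec = grads_vectorized(inputs, labels, pred)
print(np.allclose(w_loop, w_vec), np.allclose(b_loop, b_vec))
```

The vectorized form avoids building an (n_samples, 3, 3) array on every iteration, which matters mainly for larger datasets than iris.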

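Remarks

I derived the formulas in this article myself and typed them in one by one. If you spot an error, please point it out and I will fix it as soon as possible. The complete file is available on GitHub.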