I. Environment

- Python 3.9.6

- MySQL 5.7

The base environment must be installed separately; this tutorial does not cover it.

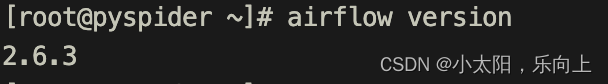

II. Installing Airflow 2.6.3

1. Install the Linux system dependencies

yum -y install zlib-devel bzip2-devel openssl-devel ncurses-devel sqlite-devel readline-devel tk-devel gdbm-devel db4-devel libpcap-devel xz-devel gcc gcc-devel make mysql-devel python-devel libffi-devel unzip

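2. Install Airflow 2.6.3 with pip3. The constraints file must match the Python minor version, which is 3.9 here.

pip3 install 'apache-airflow==2.6.3' --constraint "https://raw.githubusercontent.com/apache/airflow/constraints-2.6.3/constraints-3.9.txt"

The installation succeeded.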
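
The constraints file name carries the Python minor version, so it should match the interpreter that pip installs into. Here is a minimal sketch that builds the URL from the running interpreter. The helper name `constraint_url` is made up for illustration, and the URL pattern is the one used in the install command.

```python
import sys

AIRFLOW_VERSION = "2.6.3"


def constraint_url(airflow_version, python_version=None):
    """Build the constraints URL for an Airflow release and a Python (major, minor) pair."""
    if python_version is None:
        # Default to the interpreter that is running this script.
        python_version = sys.version_info[:2]
    major, minor = python_version
    return (
        "https://raw.githubusercontent.com/apache/airflow/"
        f"constraints-{airflow_version}/constraints-{major}.{minor}.txt"
    )


# Print the URL that matches the current interpreter.
print(constraint_url(AIRFLOW_VERSION))
```

Running this with the same python3 that pip3 belongs to gives the value to pass to `--constraint`.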

III. Editing the configuration file

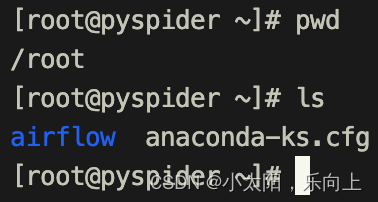

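I installed directly as the root user, so an airflow folder is generated under root's home directory (/root/airflow, the default AIRFLOW_HOME of ~/airflow).

Change the following settings, from top to bottom in the file (I won't go into what each one does):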

vim airflow/airflow.cfg

default_timezone = Asia/Shanghai

executor = LocalExecutor

sql_alchemy_conn = mysql://airflow:airflow@localhost:3306/airflow?charset=utf8

default_ui_timezone = Asia/Shanghai

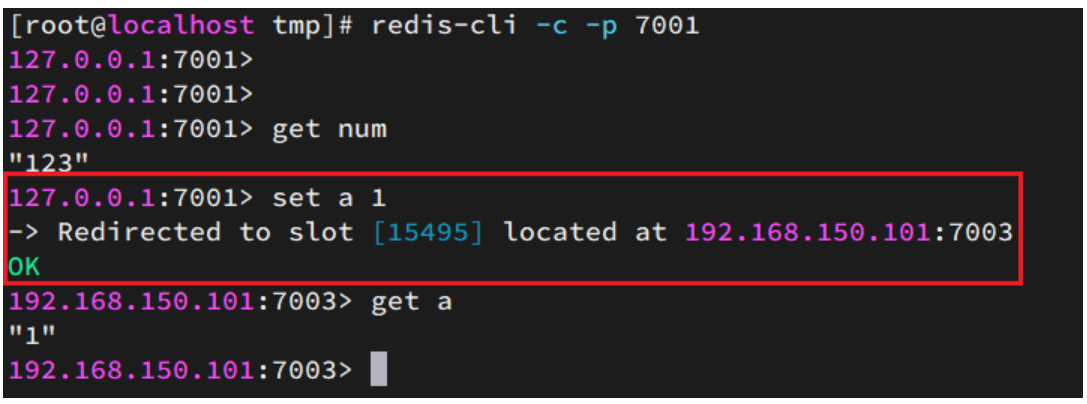
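
After editing, it can help to confirm that the four values were actually saved. The sketch below uses the standard-library `configparser` to find them in whichever section they sit in. The function name `find_settings` and the sample text are illustrative assumptions; the section names in the sample reflect where a 2.6.x `airflow.cfg` is expected to keep these keys, so check them against your own file.

```python
import configparser

# The four keys changed in this tutorial.
WANTED = ("default_timezone", "executor", "sql_alchemy_conn", "default_ui_timezone")


def find_settings(cfg_text):
    """Return {key: (section, value)} for each wanted key found in the config text."""
    # interpolation=None keeps '%' characters in values from being expanded.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    found = {}
    for section in parser.sections():
        for key in WANTED:
            if parser.has_option(section, key):
                found[key] = (section, parser.get(section, key))
    return found


# Illustrative excerpt; a real airflow.cfg contains many more keys.
SAMPLE = """
[core]
default_timezone = Asia/Shanghai
executor = LocalExecutor

[database]
sql_alchemy_conn = mysql://airflow:airflow@localhost:3306/airflow?charset=utf8

[webserver]
default_ui_timezone = Asia/Shanghai
"""

for key, (section, value) in find_settings(SAMPLE).items():
    print(f"[{section}] {key} = {value}")
```

To check the real file, read it with `open("/root/airflow/airflow.cfg").read()` and pass the text to `find_settings`.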

IV. Initialization

1. Initialize the database

airflow db init

The metadata tables have now been created in the airflow database.
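
`airflow db init` connects with the `sql_alchemy_conn` value, so the MySQL account and database named in that URL have to exist beforehand. As a quick sanity check, the URL can be split into its parts with the standard library. This is only a sketch, and `describe_conn` is a hypothetical helper name.

```python
from urllib.parse import parse_qs, urlsplit


def describe_conn(url):
    """Split a SQLAlchemy-style connection URL into its components."""
    parts = urlsplit(url)
    return {
        "driver": parts.scheme,
        "user": parts.username,
        "password": parts.password,
        "host": parts.hostname,
        "port": parts.port,
        "database": parts.path.lstrip("/"),
        # Keep the first value of each query parameter, e.g. charset=utf8.
        "options": {k: v[0] for k, v in parse_qs(parts.query).items()},
    }


info = describe_conn("mysql://airflow:airflow@localhost:3306/airflow?charset=utf8")
for name, value in info.items():
    print(f"{name}: {value}")
```

For the URL used in this tutorial, the database is `airflow` and the account is `airflow` on `localhost:3306`.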

2. Create the admin user and assign its role

airflow users create --username admin --firstname admin --lastname admin --role Admin --email xxx@xxx.com --password admin
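
The password `admin` is fine for a test box but weak for anything else. The sketch below assembles the same command with a randomly generated password. The helper `build_create_user_argv` is hypothetical; the flags are the ones shown in the command itself.

```python
import secrets
import shlex


def build_create_user_argv(username, email, password=None):
    """Return the argv for `airflow users create`, generating a password if none is given."""
    if password is None:
        # 16 random bytes, URL-safe encoded (about 22 characters).
        password = secrets.token_urlsafe(16)
    return [
        "airflow", "users", "create",
        "--username", username,
        "--firstname", username,
        "--lastname", username,
        "--role", "Admin",
        "--email", email,
        "--password", password,
    ]


argv = build_create_user_argv("admin", "xxx@xxx.com")
# shlex.join quotes the arguments so the line can be pasted into a shell.
print(shlex.join(argv))
```

Note the printed password before running the command, since it is generated fresh on every run.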

V. Startup

1. Start the Airflow webserver; -D runs it in the background as a daemon

airflow webserver --port 8080 -D

2. Start the scheduler

airflow scheduler -D
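
Because both processes run in the background, it is worth checking that they actually came up. The webserver exposes a `/health` endpoint that returns JSON. Here is a minimal sketch for reading it, assuming the webserver listens on http://localhost:8080 as started in step 1. The function names and the sample payload are illustrative.

```python
import json
from urllib.request import urlopen


def fetch_health(base_url="http://localhost:8080", timeout=5):
    """Fetch the webserver's /health JSON (requires a running webserver)."""
    with urlopen(f"{base_url}/health", timeout=timeout) as resp:
        return json.load(resp)


def unhealthy(payload, components=("metadatabase", "scheduler")):
    """Return the components whose reported status is not "healthy"."""
    return [c for c in components if payload.get(c, {}).get("status") != "healthy"]


# Illustrative payload in the shape the endpoint reports; the values are made up.
sample = {
    "metadatabase": {"status": "healthy"},
    "scheduler": {"status": "unhealthy", "latest_scheduler_heartbeat": None},
}
print(unhealthy(sample))  # ['scheduler']
```

On the server itself, `unhealthy(fetch_health())` should return an empty list once the webserver and the scheduler are both running.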

VI. Special notes

If you upgraded from an older version, the time zone problem will still be there. To fix it, pass a time zone to start_date in the DAG:

# coding:utf-8
import datetime

import pendulum
from airflow.models import DAG
from airflow.operators.bash import BashOperator
from airflow.operators.empty import EmptyOperator
from airflow.sensors.time_sensor import TimeSensor

default_args = {
    'owner': 'root',
    'depends_on_past': False,  # whether a task depends on the state of its own previous run
    'start_date': pendulum.datetime(2023, 7, 21, tz="Asia/Shanghai"),  # changed: time-zone-aware start date
    'email': ['xxx@qq.com'],
    'email_on_failure': True,
    'email_on_retry': False,
    'retries': 3,
    'retry_delay': datetime.timedelta(minutes=1),
}

dag = DAG(
    dag_id='schedule_task_test',
    default_args=default_args,
    description='Hourly scheduled task',
    schedule="1 * * * *",  # changed: use schedule instead of schedule_interval
    concurrency=10,  # deprecated in Airflow 2.x in favor of max_active_tasks
)

start = EmptyOperator(
    task_id='start',
    dag=dag,
)

# Note: by default BashOperator treats exit code 99 as "skip", not as a failure.
task_01 = BashOperator(
    task_id='task_01',
    bash_command='echo "hello task01"; exit 99;',
    dag=dag,
)

task_02 = BashOperator(
    task_id='task_02',
    bash_command='echo "hello task02"; exit 99;',
    dag=dag,
)

start >> task_01 >> task_02
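
To see why the `tz` argument matters, compare the same wall-clock date read as Shanghai time and as UTC. The sketch below uses only the standard library, with a fixed UTC+8 offset as a stand-in for the `Asia/Shanghai` zone that pendulum resolves. The variable names are illustrative.

```python
from datetime import datetime, timedelta, timezone

# Fixed-offset stand-in for Asia/Shanghai (UTC+8, no daylight saving time).
SHANGHAI = timezone(timedelta(hours=8), name="Asia/Shanghai")

# Midnight on 2023-07-21 in Shanghai, converted to UTC.
start_local = datetime(2023, 7, 21, tzinfo=SHANGHAI)
start_utc = start_local.astimezone(timezone.utc)

# The same wall-clock value read as UTC instead.
same_clock_utc = datetime(2023, 7, 21, tzinfo=timezone.utc)

print(start_utc.isoformat())       # 2023-07-20T16:00:00+00:00
print(same_clock_utc - start_utc)  # 8:00:00
```

The two readings are eight hours apart, which is the offset you see on runs when the time zone is missing or wrong.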
