- First, install transformers

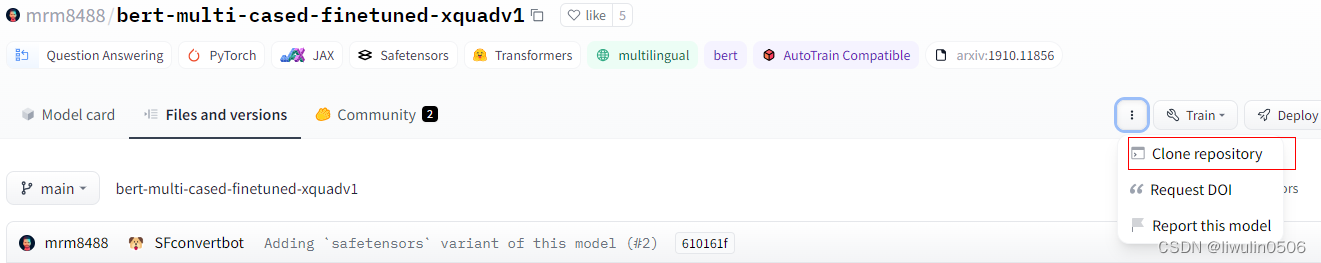
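The usual way to install the library is `pip install transformers`. The pipeline also needs a deep learning backend such as PyTorch (`pip install torch`). As a minimal sketch, the check below reports whether a package can be imported before any model is loaded. The helper name `is_installed` is made up for illustration and is not part of transformers.

```python
import importlib.util


def is_installed(package: str) -> bool:
    """Return True if a top-level package can be found on the import path."""
    return importlib.util.find_spec(package) is not None


# Report missing packages together with the pip command that installs them.
for name in ("transformers", "torch"):
    if not is_installed(name):
        print(f"{name} is missing; install it with: pip install {name}")
```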

- Download the model from Hugging Face

The model bert-multi-cased-finetuned-xquadv1 can be downloaded from Hugging Face. For the detailed steps, see the article https://blog.csdn.net/zhaomengsen/article/details/130616837.

- A plain git clone is enough
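A rough sketch of the clone step, assuming the model lives under the `mrm8488` account on the Hub (the same ID used when loading the pipeline) and that `git` and `git-lfs` are installed. Model weights on the Hub are stored with Git LFS, so a clone without it usually fetches only small pointer files. The helper names `clone_url` and `clone_model` and the target directory are illustrative.

```python
import subprocess

HF_BASE = "https://huggingface.co"
MODEL_ID = "mrm8488/bert-multi-cased-finetuned-xquadv1"


def clone_url(model_id: str) -> str:
    """Build the git URL of a model repository on the Hugging Face Hub."""
    return f"{HF_BASE}/{model_id}"


def clone_model(model_id: str, target_dir: str) -> None:
    """Clone a model repository; needs git and git-lfs on the PATH."""
    subprocess.run(["git", "lfs", "install"], check=True)
    subprocess.run(["git", "clone", clone_url(model_id), target_dir], check=True)


# Example call (downloads the full weights, so it is left commented out):
# clone_model(MODEL_ID, "./bert-multi-cased-finetuned-xquadv1")
```

This is equivalent to running `git lfs install` followed by `git clone https://huggingface.co/mrm8488/bert-multi-cased-finetuned-xquadv1` in a shell.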

- Then load the model with pipeline

from transformers import pipeline

nlp = pipeline("question-answering",model="mrm8488/bert-multi-cased-finetuned-xquadv1",tokenizer="mrm8488/bert-multi-cased-finetuned-xquadv1")
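If the repository was cloned to disk, the `model` and `tokenizer` arguments can also point at that directory instead of the Hub ID. The sketch below prefers a local copy when one appears to exist. Checking for `config.json` is a simplifying assumption, and `resolve_model_source` and `load_qa_pipeline` are hypothetical helper names.

```python
from pathlib import Path


def resolve_model_source(local_dir: str, hub_id: str) -> str:
    """Return the local directory if it holds a config.json, else the Hub ID."""
    path = Path(local_dir)
    if (path / "config.json").is_file():
        return str(path)
    return hub_id


def load_qa_pipeline(source: str):
    """Create a question-answering pipeline from a local path or a Hub ID."""
    # Imported lazily so the path logic above works without transformers.
    from transformers import pipeline

    return pipeline("question-answering", model=source, tokenizer=source)


# Example (loads the model, so it is left commented out):
# source = resolve_model_source(
#     "./bert-multi-cased-finetuned-xquadv1",
#     "mrm8488/bert-multi-cased-finetuned-xquadv1",
# )
# nlp = load_qa_pipeline(source)
```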

- Test

context = """非root用户使用HBase客户端,请确保该HBase客户端目录的属主为该用户,否则请参考如下命令修改属主"""

question = "命令是什么?"

result = nlp(question=question, context=context)

print("Answer:", result['answer'])

print("Score:", result['score'])

Output:

Answer: 修改属主

Score: 0.9437811374664307
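The question-answering pipeline is extractive: the answer is always a span copied from the context, returned in a dict with `answer`, `score`, `start`, and `end` keys. Passing `top_k` greater than 1 in the call returns a list of such dicts. As a hedged sketch, the helper below keeps the best candidate only if its score clears a threshold. The name `pick_answer` and the 0.5 cutoff are arbitrary choices for illustration.

```python
def pick_answer(result, min_score: float = 0.5):
    """Return the highest-scoring answer text, or None if it scores too low.

    Accepts either a single result dict or a list of result dicts.
    """
    candidates = [result] if isinstance(result, dict) else list(result)
    if not candidates:
        return None
    best = max(candidates, key=lambda r: r["score"])
    return best["answer"] if best["score"] >= min_score else None


# Example with the pipeline from the steps above (left commented out):
# results = nlp(question=question, context=context, top_k=3)
# print(pick_answer(results))
```

A threshold like this can help flag cases where the context does not really contain an answer, although a high score alone does not guarantee that the extracted span is correct.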
