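Microservices Framework

[SpringCloud + RabbitMQ + Docker + Redis + Search + Distributed Systems: a detailed walkthrough of the SpringCloud microservice technology stack | Itheima (黑马程序员) Java Microservices course]

SpringCloud Microservice Architecture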

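27 Autocomplete

27.4 Modifying the hotel index data structure

27.4.1 Case study

Case: implement autocomplete and pinyin search for the hotel index

The implementation plan is as follows:

- Modify the structure of the hotel index and configure a custom pinyin analyzer

The current mapping of the hotel index: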

    {
      "hotel" : {
        "mappings" : {
          "properties" : {
            "address" : {
              "type" : "keyword",
              "index" : false
            },
            "all" : {
              "type" : "text",
              "analyzer" : "ik_max_word"
            },
            "brand" : {
              "type" : "keyword",
              "copy_to" : [
                "all"
              ]
            },
            "business" : {
              "type" : "keyword",
              "copy_to" : [
                "all"
              ]
            },
            "city" : {
              "type" : "keyword"
            },
            "id" : {
              "type" : "keyword"
            },
            "isAD" : {
              "type" : "boolean"
            },
            "location" : {
              "type" : "geo_point"
            },
            "name" : {
              "type" : "text",
              "copy_to" : [
                "all"
              ],
              "analyzer" : "ik_max_word"
            },
            "pic" : {
              "type" : "keyword",
              "index" : false
            },
            "price" : {
              "type" : "integer"
            },
            "score" : {
              "type" : "integer"
            },
            "starName" : {
              "type" : "keyword"
            }
          }
        }
      }
    }

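As you can see, all and name are the two fields of type text.

Both still use the plain ik_max_word analyzer, with no pinyin support.

Change the index definition to the following: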

    // Hotel index
    PUT /hotel
    {
      "settings": {
        "analysis": {
          "analyzer": {
            "text_analyzer": {
              "tokenizer": "ik_max_word",
              "filter": "py"
            },
            "completion_analyzer": {
              "tokenizer": "keyword",
              "filter": "py"
            }
          },
          "filter": {
            "py": {
              "type": "pinyin",
              "keep_full_pinyin": false,
              "keep_joined_full_pinyin": true,
              "keep_original": true,
              "limit_first_letter_length": 16,
              "remove_duplicated_term": true,
              "none_chinese_pinyin_tokenize": false
            }
          }
        }
      },
      "mappings": {
        "properties": {
          "id": {
            "type": "keyword"
          },
          "name": {
            "type": "text",
            "analyzer": "text_analyzer",
            "search_analyzer": "ik_smart",
            "copy_to": "all"
          },
          "address": {
            "type": "keyword",
            "index": false
          },
          "price": {
            "type": "integer"
          },
          "score": {
            "type": "integer"
          },
          "brand": {
            "type": "keyword",
            "copy_to": "all"
          },
          "city": {
            "type": "keyword"
          },
          "starName": {
            "type": "keyword"
          },
          "business": {
            "type": "keyword",
            "copy_to": "all"
          },
          "location": {
            "type": "geo_point"
          },
          "pic": {
            "type": "keyword",
            "index": false
          },
          "all": {
            "type": "text",
            "analyzer": "text_analyzer",
            "search_analyzer": "ik_smart"
          },
          "suggestion": {
            "type": "completion",
            "analyzer": "completion_analyzer"
          }
        }
      }
    }

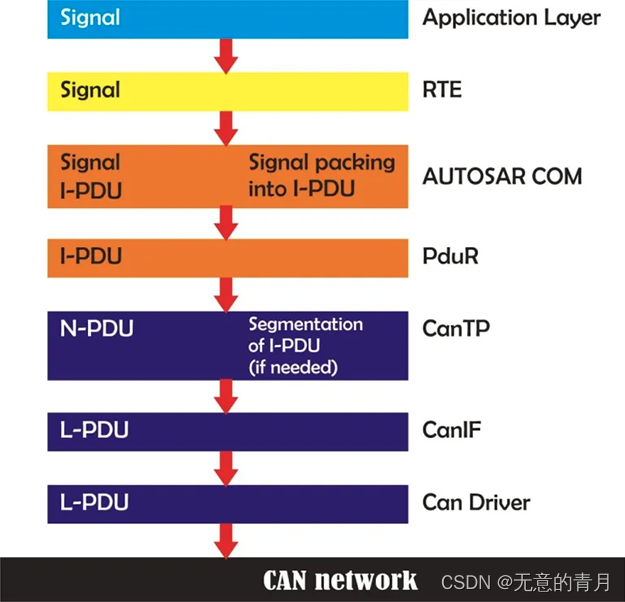

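- settings: defines the analyzers of the index

- mappings: defines the fields

Paste the request into Kibana.

First delete the existing hotel index,

then run the request to create the new one.

Done.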

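- Modify the name and all fields of the index to use the custom analyzer

- Add a new field, suggestion, to the index; its type is completion and it uses the custom analyzer

- Add a suggestion field to the HotelDoc class, containing brand and business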

    package cn.itcast.hotel.pojo;

    import lombok.Data;
    import lombok.NoArgsConstructor;

    import java.util.Arrays;
    import java.util.List;

    /**
     * ClassName: HotelDoc
     * date: 2022/11/1 10:51
     *
     * @author DingJiaxiong
     */
    @Data
    @NoArgsConstructor
    public class HotelDoc {
        private Long id;
        private String name;
        private String address;
        private Integer price;
        private Integer score;
        private String brand;
        private String city;
        private String starName;
        private String business;
        private String location;
        private String pic;
        private Object distance;
        private Boolean isAD;
        private List<String> suggestion;

        public HotelDoc(Hotel hotel) {
            this.id = hotel.getId();
            this.name = hotel.getName();
            this.address = hotel.getAddress();
            this.price = hotel.getPrice();
            this.score = hotel.getScore();
            this.brand = hotel.getBrand();
            this.city = hotel.getCity();
            this.starName = hotel.getStarName();
            this.business = hotel.getBusiness();
            this.location = hotel.getLatitude() + ", " + hotel.getLongitude();
            this.pic = hotel.getPic();
            this.suggestion = Arrays.asList(this.brand, this.business);
        }
    }

- Re-import the data into the hotel index

    // Bulk-insert documents
    @Test
    void testBulkRequest() throws IOException {
        // Load all hotel records from the database
        List<Hotel> hotels = hotelService.list();

        // 1. Create the request
        BulkRequest request = new BulkRequest();

        // 2. Prepare the parameters: add one index request per hotel
        for (Hotel hotel : hotels) {
            // Convert to the document type HotelDoc
            HotelDoc hotelDoc = new HotelDoc(hotel);
            // Create the request that indexes this document
            request.add(new IndexRequest("hotel")
                    .id(hotelDoc.getId().toString())
                    .source(JSON.toJSONString(hotelDoc), XContentType.JSON));
        }

        // 3. Send the request
        client.bulk(request, RequestOptions.DEFAULT);
    }

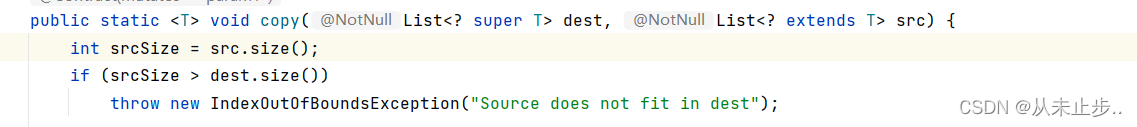

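Result:

All 201 records from the database were inserted successfully.

Note: name and all are tokenized, whereas brand and business, which feed autocomplete, are not tokenized, so the two groups need different analyzer combinations.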

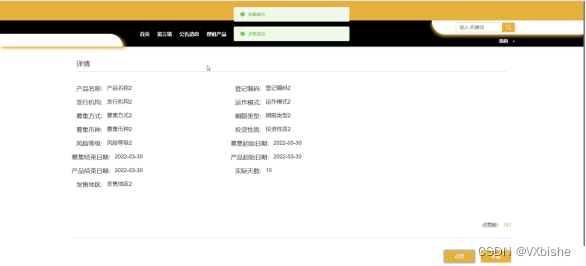

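Open Kibana and take a look.

Everything is fine.

The suggestion field of each hit contains both the brand and the business district.

Some records are problematic, though.

For example, this one:

It actually has two business districts, 江湾 (Jiangwan) and 五角场 (Wujiaochang), stored as a single value.

When suggestions are generated later, such a record can only be found through 江, the start of the whole value; typing 五 will never reach it.

So this needs fixing: the value should be split into two separate entries.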
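To make the problem concrete, here is a toy model of prefix suggestion in plain Java. It is only a sketch: the class PrefixDemo and its suggest method are hypothetical, and a simple startsWith check on the raw string stands in for the real completion suggester, which also indexes the pinyin forms produced by the custom analyzer.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Toy stand-in for prefix-based suggestion (hypothetical, not Elasticsearch code).
class PrefixDemo {

    /** Returns the stored entries that start with the typed prefix. */
    static List<String> suggest(List<String> entries, String prefix) {
        List<String> hits = new ArrayList<>();
        for (String entry : entries) {
            if (entry.startsWith(prefix)) {
                hits.add(entry);
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        // One combined entry versus two separate entries
        List<String> unsplit = Arrays.asList("江湾/五角场");
        List<String> split = Arrays.asList("江湾", "五角场");

        System.out.println(suggest(unsplit, "五")); // prints []
        System.out.println(suggest(split, "五"));   // prints [五角场]
    }
}
```

The combined entry is reachable only from its first character, while the split entries can each be reached from their own first character.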

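In the instructor's data the districts are separated by /, but the data I received is different.

Some records use / and others use 、. To stay consistent with the instructor, I manually changed every 、 to /.

Now update the Java code: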

    package cn.itcast.hotel.pojo;

    import lombok.Data;
    import lombok.NoArgsConstructor;

    import java.util.ArrayList;
    import java.util.Arrays;
    import java.util.Collections;
    import java.util.List;

    /**
     * ClassName: HotelDoc
     * date: 2022/11/1 10:51
     *
     * @author DingJiaxiong
     */
    @Data
    @NoArgsConstructor
    public class HotelDoc {
        private Long id;
        private String name;
        private String address;
        private Integer price;
        private Integer score;
        private String brand;
        private String city;
        private String starName;
        private String business;
        private String location;
        private String pic;
        private Object distance;
        private Boolean isAD;
        private List<String> suggestion;

        public HotelDoc(Hotel hotel) {
            this.id = hotel.getId();
            this.name = hotel.getName();
            this.address = hotel.getAddress();
            this.price = hotel.getPrice();
            this.score = hotel.getScore();
            this.brand = hotel.getBrand();
            this.city = hotel.getCity();
            this.starName = hotel.getStarName();
            this.business = hotel.getBusiness();
            this.location = hotel.getLatitude() + ", " + hotel.getLongitude();
            this.pic = hotel.getPic();

            if (this.business.contains("/")) {
                // business holds several values, so split it
                String[] arr = this.business.split("/");
                // Add the brand first, then every business district
                this.suggestion = new ArrayList<>();
                this.suggestion.add(this.brand);
                Collections.addAll(this.suggestion, arr);
            } else {
                this.suggestion = Arrays.asList(this.brand, this.business);
            }
        }
    }
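As an alternative to changing 、 to / by hand in the data, the split itself could accept both separators. The following is a minimal sketch under that assumption: SuggestionBuilder is a hypothetical helper, not part of the course code, and the regular expression [/、] is my own choice for this data set.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: builds the suggestion list from brand and business.
class SuggestionBuilder {

    /**
     * Returns the brand followed by every business district.
     * Districts may be separated by "/" or by the Chinese enumeration comma "、".
     * Null or blank parts are skipped.
     */
    static List<String> build(String brand, String business) {
        List<String> suggestion = new ArrayList<>();
        if (brand != null && !brand.trim().isEmpty()) {
            suggestion.add(brand.trim());
        }
        if (business != null) {
            // The character class matches either separator
            for (String part : business.split("[/、]")) {
                String trimmed = part.trim();
                if (!trimmed.isEmpty()) {
                    suggestion.add(trimmed);
                }
            }
        }
        return suggestion;
    }

    public static void main(String[] args) {
        System.out.println(build("如家", "江湾/五角场")); // prints [如家, 江湾, 五角场]
        System.out.println(build("如家", "江湾、五角场")); // prints [如家, 江湾, 五角场]
    }
}
```

With a helper like this, the if/else in the HotelDoc constructor could shrink to a single assignment such as this.suggestion = SuggestionBuilder.build(this.brand, this.business), and a missing business value would no longer cause a NullPointerException.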

Run the bulk import again.

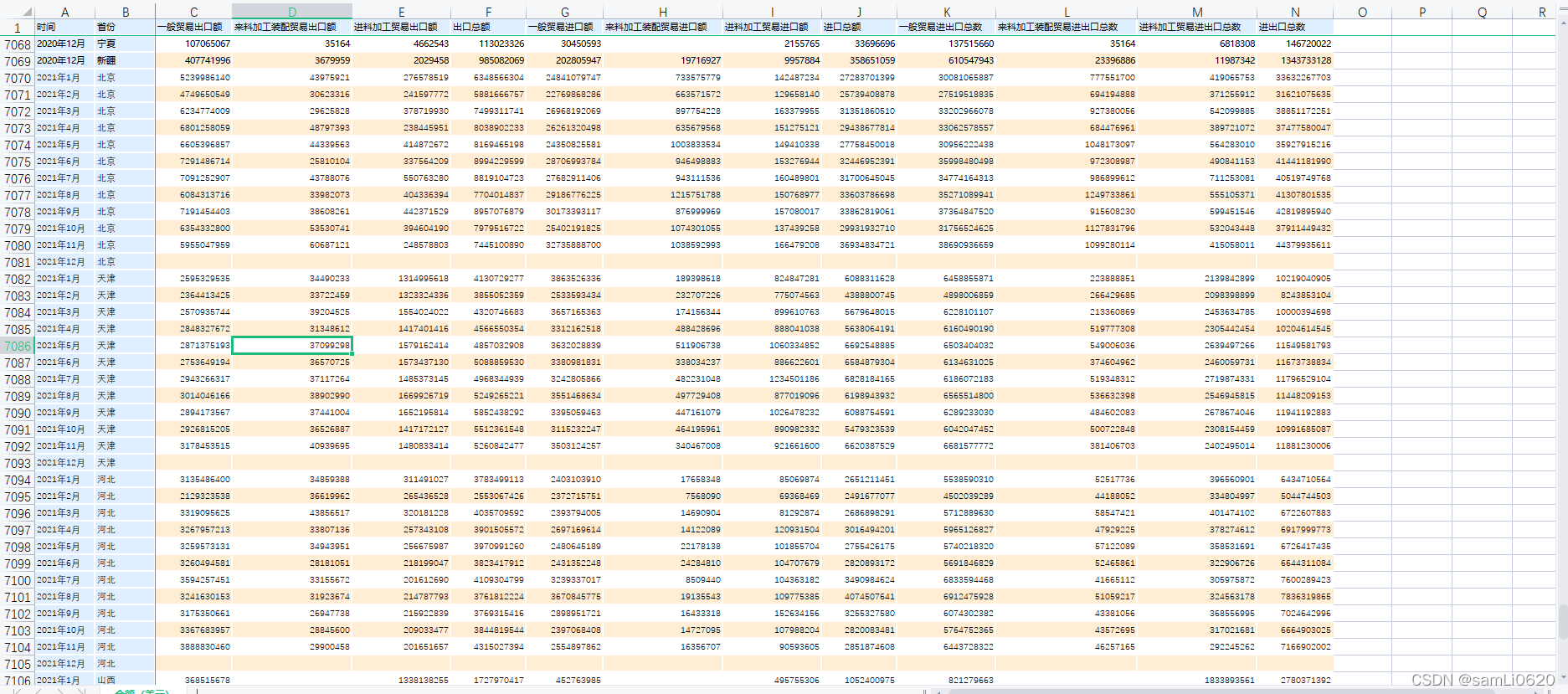

Check Kibana again.

The business districts are now split into separate entries, so test the autocomplete query directly:

    GET /hotel/_search
    {
      "suggest": {
        "suggestions": {
          "text": "h",
          "completion": {
            "field": "suggestion",
            "skip_duplicates": true,
            "size": 10
          }
        }
      }
    }

Result:

It works as expected.

Change the text to s:

Change it to sd:

No problems. The changes to the hotel data are now complete.
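Since the three test queries differ only in the prefix text, the request body can be generated rather than edited by hand. Below is a minimal sketch that uses only the JDK (Java 11 or later for java.net.http); it is not the RestHighLevelClient approach used in the test above. SuggestQuery, escape, buildBody and send are hypothetical names, and the base URL passed to send is assumed to point at an unsecured Elasticsearch node.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical helper that rebuilds the completion-suggest query for any prefix.
class SuggestQuery {

    /** Escapes backslashes and double quotes only; control characters are not handled. */
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    /** Builds the same body as the Kibana request, with a variable prefix and size. */
    static String buildBody(String prefix, int size) {
        return "{\"suggest\":{\"suggestions\":{"
                + "\"text\":\"" + escape(prefix) + "\","
                + "\"completion\":{\"field\":\"suggestion\","
                + "\"skip_duplicates\":true,\"size\":" + size + "}}}}";
    }

    /** Posts the query to {baseUrl}/hotel/_search and returns the raw JSON response. */
    static String send(String baseUrl, String prefix) throws Exception {
        HttpRequest request = HttpRequest.newBuilder(URI.create(baseUrl + "/hotel/_search"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(buildBody(prefix, 10)))
                .build();
        return HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString())
                .body();
    }

    public static void main(String[] args) {
        // Print the bodies for the three prefixes tried above; no network access needed.
        for (String prefix : new String[] {"h", "s", "sd"}) {
            System.out.println(buildBody(prefix, 10));
        }
    }
}
```

To run the queries for real, call send with the address of your own node, for example send("http://localhost:9200", "sd") if Elasticsearch listens on the default local port.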