Reference

"Installing and Using Tsinghua's Open-Source ChatGLM2-6B: A Hands-On Step-by-Step Tutorial"

Related links

Code: https://github.com/THUDM/ChatGLM2-6B

Model: https://huggingface.co/THUDM/chatglm2-6b, or the Tsinghua Cloud mirror https://cloud.tsinghua.edu.cn/d/674208019e314311ab5c/?p=%2Fchatglm2-6b&mode=list

Hands-on

Environment: AutoDL; see "[LLM] Quick Start with AutoDL"

Preparation

- Install dependencies

$ cd autodl-tmp

$ git clone https://github.com/THUDM/ChatGLM2-6B.git

$ cd ChatGLM2-6B/

$ pip install -r requirements.txt

... ...
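Before moving on, you may want to confirm that `pip install -r requirements.txt` actually installed everything. The following is a minimal sketch that uses only the Python standard library. The helper names `requirement_names` and `missing_packages` are hypothetical. The script compares package names only, not version pins. It also assumes the repository was cloned to ~/autodl-tmp/ChatGLM2-6B as shown above. On older Python versions, name lookups may be sensitive to `-` vs `_`.

```python
"""Check that the packages listed in requirements.txt are installed.

A minimal sketch using only the standard library; it compares package
names only and does not check version pins.
"""
import re
from importlib import metadata
from pathlib import Path


def requirement_names(lines):
    """Extract bare distribution names from requirements.txt lines."""
    names = []
    for line in lines:
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        if not line or line.startswith("-"):  # skip blanks and pip options such as -r / -e
            continue
        # The name ends at the first version specifier, extra, marker or space.
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match:
            names.append(match.group(0))
    return names


def missing_packages(names):
    """Return the names that importlib.metadata cannot find."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Assumption: the repo was cloned into ~/autodl-tmp as in the commands above.
    req = Path.home() / "autodl-tmp" / "ChatGLM2-6B" / "requirements.txt"
    names = requirement_names(req.read_text().splitlines())
    print("missing:", missing_packages(names) or "none")
```

An empty result does not prove that the pinned versions are correct. It only shows that a distribution with each name is present.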

- Download the model

$ cd ~

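# Without Git LFS (or with GIT_LFS_SKIP_SMUDGE=1), this clone contains only small pointer files for tokenizer.model and the *.bin shards; they are replaced with real downloads below.

$ git clone https://huggingface.co/THUDM/chatglm2-6b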

$ cd chatglm2-6b

$ rm tokenizer.model

$ wget https://cloud.tsinghua.edu.cn/seafhttp/files/e09f0aa2-6a1f-44ca-8bbf-ad54431549f8/tokenizer.model

$ rm *.bin

$ wget https://huggingface.co/THUDM/chatglm2-6b/resolve/main/pytorch_model-00001-of-00007.bin

$ wget https://huggingface.co/THUDM/chatglm2-6b/resolve/main/pytorch_model-00002-of-00007.bin

$ wget https://huggingface.co/THUDM/chatglm2-6b/resolve/main/pytorch_model-00003-of-00007.bin

$ wget https://huggingface.co/THUDM/chatglm2-6b/resolve/main/pytorch_model-00004-of-00007.bin

$ wget https://huggingface.co/THUDM/chatglm2-6b/resolve/main/pytorch_model-00005-of-00007.bin

$ wget https://huggingface.co/THUDM/chatglm2-6b/resolve/main/pytorch_model-00006-of-00007.bin

$ wget https://huggingface.co/THUDM/chatglm2-6b/resolve/main/pytorch_model-00007-of-00007.bin

# Alternative: Tsinghua Cloud mirror (links for shards 1 to 6)

# wget https://cloud.tsinghua.edu.cn/seafhttp/files/2009b5fb-6aef-4203-a8ba-8be8d0888a9d/pytorch_model-00001-of-00007.bin

# wget https://cloud.tsinghua.edu.cn/seafhttp/files/3d1d5889-0f0d-4790-a60f-66af41efad01/pytorch_model-00002-of-00007.bin

# wget https://cloud.tsinghua.edu.cn/seafhttp/files/ce812a31-376b-4cc9-b450-b09423468e37/pytorch_model-00003-of-00007.bin

# wget https://cloud.tsinghua.edu.cn/seafhttp/files/19f2fb29-f4f1-4ee8-ab56-b49e1b24c87d/pytorch_model-00004-of-00007.bin

# wget https://cloud.tsinghua.edu.cn/seafhttp/files/302f2ecf-e17a-41b2-be96-d6eef6f4471a/pytorch_model-00005-of-00007.bin

# wget https://cloud.tsinghua.edu.cn/seafhttp/files/1897c0ab-4ee4-4395-bae6-0abe00720eea/pytorch_model-00006-of-00007.bin
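The seven wget lines follow a fixed naming pattern. A plain `git clone` without Git LFS leaves small text pointer files in place of tokenizer.model and the *.bin shards. The sketch below uses hypothetical helpers and only the standard library. It rebuilds the shard URLs from the pattern above and flags files that are missing or are still LFS pointers. The shard count of 7 and the /root/chatglm2-6b location are taken from this post.

```python
"""Build the shard download URLs and spot Git LFS pointer stubs.

A minimal sketch: the URL pattern and shard count come from the wget
commands above; MODEL_DIR assumes `git clone` was run from ~ (/root).
"""
from pathlib import Path

REPO = "THUDM/chatglm2-6b"
NUM_SHARDS = 7
MODEL_DIR = Path("/root/chatglm2-6b")  # assumption: clone location used in this post
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def shard_names(num_shards=NUM_SHARDS):
    """File names such as pytorch_model-00001-of-00007.bin."""
    return [
        f"pytorch_model-{i:05d}-of-{num_shards:05d}.bin"
        for i in range(1, num_shards + 1)
    ]


def shard_urls(repo=REPO, num_shards=NUM_SHARDS):
    """Hugging Face resolve/main URLs, same pattern as the wget lines above."""
    return [
        f"https://huggingface.co/{repo}/resolve/main/{name}"
        for name in shard_names(num_shards)
    ]


def is_lfs_pointer(path):
    """True if the file starts like a Git LFS pointer instead of real data."""
    with open(path, "rb") as f:
        return f.read(len(LFS_POINTER_PREFIX)) == LFS_POINTER_PREFIX


def bad_files(model_dir=MODEL_DIR):
    """List weight/tokenizer files that are missing or still LFS pointers."""
    problems = []
    for name in shard_names() + ["tokenizer.model"]:
        path = Path(model_dir) / name
        if not path.exists():
            problems.append(f"{name}: missing")
        elif is_lfs_pointer(path):
            problems.append(f"{name}: LFS pointer, re-download it")
    return problems


if __name__ == "__main__":
    for url in shard_urls():
        print("wget", url)  # equivalent to the wget commands above
    print(bad_files() or "all files look like real downloads")
```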

Modify the configuration

$ vim ~/autodl-tmp/ChatGLM2-6B/web_demo.py

Change the following lines:

... ...

tokenizer = AutoTokenizer.from_pretrained("THUDM/chatglm2-6b", trust_remote_code=True)

model = AutoModel.from_pretrained("THUDM/chatglm2-6b", trust_remote_code=True).cuda()

... ...

demo.queue().launch(share=False, inbrowser=True)

Replace them with:

tokenizer = AutoTokenizer.from_pretrained("/root/chatglm2-6b", trust_remote_code=True)

model = AutoModel.from_pretrained("/root/chatglm2-6b", trust_remote_code=True).cuda()

... ...

demo.queue().launch(server_port=6006, share=False, inbrowser=True)  # AutoDL forwards port 6006 through its custom-service feature

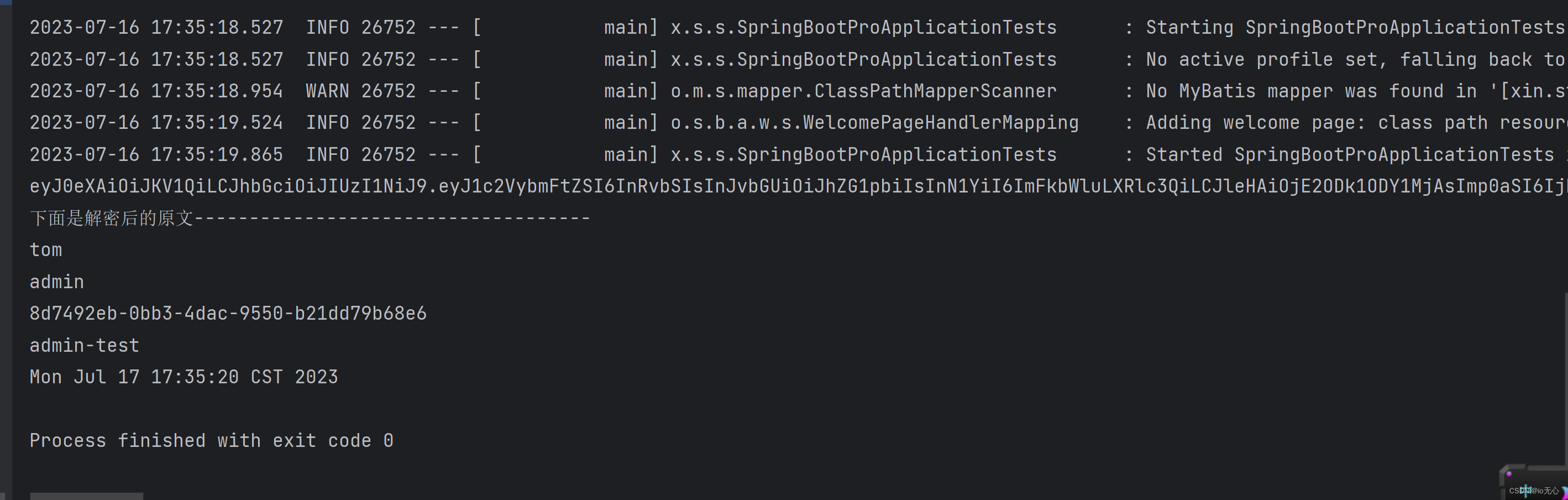
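The edit above works because `from_pretrained` accepts either a Hugging Face Hub id or a local directory. Below is a minimal sketch of a hypothetical helper that prefers the local copy at /root/chatglm2-6b and otherwise falls back to the Hub id. It treats a directory containing config.json as a downloaded model. The environment variable `CHATGLM_MODEL_DIR` is an assumption for illustration; web_demo.py does not read it.

```python
"""Choose between a local model directory and the Hugging Face Hub id.

Hypothetical helper: returns the local path if it looks like a downloaded
model (contains config.json), otherwise the Hub repo id.
"""
import os
from pathlib import Path

HUB_ID = "THUDM/chatglm2-6b"
DEFAULT_LOCAL_DIR = "/root/chatglm2-6b"  # location used in this post


def resolve_model_path(local_dir=None):
    """Return a value suitable for AutoTokenizer/AutoModel.from_pretrained."""
    # CHATGLM_MODEL_DIR is a hypothetical override, not part of web_demo.py.
    local_dir = local_dir or os.environ.get("CHATGLM_MODEL_DIR", DEFAULT_LOCAL_DIR)
    if (Path(local_dir) / "config.json").is_file():
        return str(local_dir)
    return HUB_ID


if __name__ == "__main__":
    model_path = resolve_model_path()
    print("loading from:", model_path)
    # In web_demo.py the two loading lines could then read (not executed here):
    # tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)
    # model = AutoModel.from_pretrained(model_path, trust_remote_code=True).cuda()
```

Falling back to the Hub id means the demo still starts if the local copy is missing, but it will then try to download the weights again.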

Launch

$ cd ~/autodl-tmp/ChatGLM2-6B

$ python web_demo.py

Loading checkpoint shards: 100%|██████████████████████████████████████████████████████████████████████████| 7/7 [00:08<00:00, 1.22s/it]

web_demo.py:89: GradioDeprecationWarning: The `style` method is deprecated. Please set these arguments in the constructor instead.

user_input = gr.Textbox(show_label=False, placeholder="Input...", lines=10).style(

Running on local URL: http://127.0.0.1:6006

To create a public link, set `share=True` in `launch()`.
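Once the log shows "Running on local URL", you can confirm that the demo is listening by making a plain TCP connection from another terminal on the instance. Below is a minimal sketch using Python's `socket` module. The host and port defaults are taken from the log above, and `is_port_open` is a hypothetical helper name.

```python
"""Check that the web demo is listening on the expected port.

A minimal sketch with the standard socket module; defaults match the
log line "Running on local URL: http://127.0.0.1:6006".
"""
import socket


def is_port_open(host="127.0.0.1", port=6006, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timed out, unreachable, ...
        return False


if __name__ == "__main__":
    print("web demo reachable:", is_port_open())
```

This only shows that something accepts connections on the port. It does not check that the page renders or that the model responds.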