Based on MMDetection 3.10

This problem bothered me for most of a day, and I finally solved it.

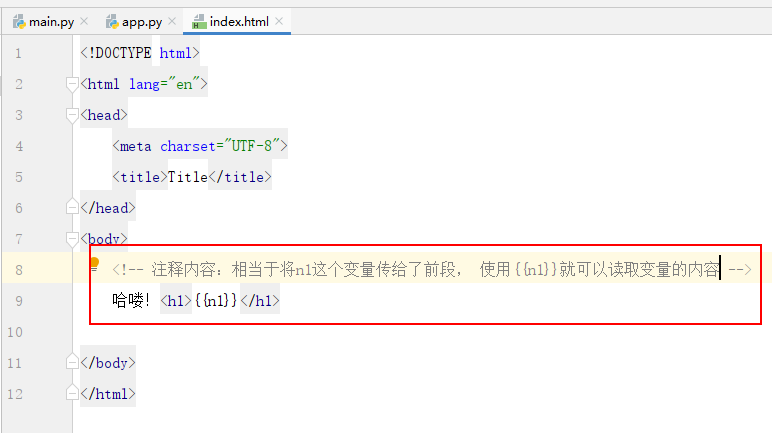

Method 1: open `configs\_base_\datasets\coco_detection.py`.

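Replace every path in it with your own. Most importantly, uncomment the `test_evaluator` block near the end of the file, and pay special attention to its two parameters, `format_only` and `outfile_prefix`.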
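A wrong path is easy to miss until training starts, so a small check like the following may help. It is only a sketch using the standard library: the helper name `check_coco_layout` and the placeholder root `path/to/COCO2017/` are assumptions for illustration, while the relative paths are the ones used in the modified file in this post.

```python
import os


def check_coco_layout(data_root, ann_file, img_dir):
    """Return a list of problems found for one dataset split (empty if none)."""
    problems = []
    ann_path = os.path.join(data_root, ann_file)
    img_path = os.path.join(data_root, img_dir)
    if not os.path.isfile(ann_path):
        problems.append(f"annotation file not found: {ann_path}")
    if not os.path.isdir(img_path):
        problems.append(f"image directory not found: {img_path}")
    return problems


if __name__ == "__main__":
    # Placeholder root (an assumption); substitute your own dataset location.
    data_root = "path/to/COCO2017/"
    splits = [
        ("train/annotations/train.json", "train/images/"),
        ("test/annotations/test.json", "test/images/"),
    ]
    for ann_file, img_dir in splits:
        for problem in check_coco_layout(data_root, ann_file, img_dir):
            print(problem)
```

If the script prints nothing, both the annotation files and the image directories were found under the given root.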

Example of the modified file:

    # dataset settings
    dataset_type = 'CocoDataset'
    # data_root = 'data/coco/'
    data_root = "E:/******************/COCO2017/"  # your own root path

    # Example to use different file client
    # Method 1: simply set the data root and let the file I/O module
    # automatically infer from prefix (not support LMDB and Memcache yet)

    # data_root = 's3://openmmlab/datasets/detection/coco/'

    # Method 2: Use `backend_args`, `file_client_args` in versions before 3.0.0rc6
    # backend_args = dict(
    #     backend='petrel',
    #     path_mapping=dict({
    #         './data/': 's3://openmmlab/datasets/detection/',
    #         'data/': 's3://openmmlab/datasets/detection/'
    #     }))
    backend_args = None

    train_pipeline = [
        dict(type='LoadImageFromFile', backend_args=backend_args),
        dict(type='LoadAnnotations', with_bbox=True),
        dict(type='Resize', scale=(1333, 800), keep_ratio=True),
        dict(type='RandomFlip', prob=0.5),
        dict(type='PackDetInputs')
    ]
    test_pipeline = [
        dict(type='LoadImageFromFile', backend_args=backend_args),
        dict(type='Resize', scale=(1333, 800), keep_ratio=True),
        # If you don't have a gt annotation, delete the pipeline
        dict(type='LoadAnnotations', with_bbox=True),
        dict(
            type='PackDetInputs',
            meta_keys=('img_id', 'img_path', 'ori_shape', 'img_shape',
                       'scale_factor'))
    ]

    train_dataloader = dict(
        batch_size=2,
        num_workers=2,
        persistent_workers=True,
        sampler=dict(type='DefaultSampler', shuffle=True),
        batch_sampler=dict(type='AspectRatioBatchSampler'),
        dataset=dict(
            type=dataset_type,
            data_root=data_root,
            # ann_file='annotations/instances_train2017.json',
            # data_prefix=dict(img='train/'),
            ann_file="train/annotations/train.json",
            data_prefix=dict(img='train/images/'),
            filter_cfg=dict(filter_empty_gt=True, min_size=32),
            pipeline=train_pipeline,
            backend_args=backend_args))

    val_dataloader = dict(
        batch_size=1,
        num_workers=2,
        persistent_workers=True,
        drop_last=False,
        sampler=dict(type='DefaultSampler', shuffle=False),
        dataset=dict(
            type=dataset_type,
            data_root=data_root,
            # ann_file='annotations/instances_val2017.json',
            # data_prefix=dict(img='val2017/'),
            ann_file=data_root + "test/annotations/test.json",
            data_prefix=dict(img='test/images/'),
            test_mode=True,
            pipeline=test_pipeline,
            backend_args=backend_args))
    test_dataloader = val_dataloader

    val_evaluator = dict(
        type='CocoMetric',
        # ann_file=data_root + 'annotations/instances_val2017.json',
        # The evaluator takes the full path, so data_root is prepended here.
        ann_file=data_root + "test/annotations/test.json",
        metric='bbox',
        format_only=False,
        backend_args=backend_args)
    test_evaluator = val_evaluator

    # inference on test dataset and
    # format the output results for submission.
    # test_dataloader = dict(
    #     batch_size=1,
    #     num_workers=2,
    #     persistent_workers=True,
    #     drop_last=False,
    #     sampler=dict(type='DefaultSampler', shuffle=False),
    #     dataset=dict(
    #         type=dataset_type,
    #         data_root=data_root,
    #         ann_file=data_root + 'annotations/image_info_test-dev2017.json',
    #         data_prefix=dict(img='test2017/'),
    #         test_mode=True,
    #         pipeline=test_pipeline))

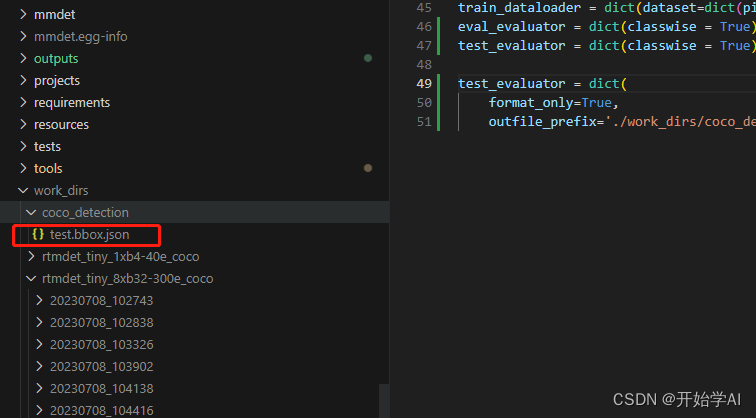

    test_evaluator = dict(
        type='CocoMetric',
        metric='bbox',
        format_only=True,
        ann_file=data_root + "test/annotations/test.json",
        outfile_prefix='./work_dirs/coco_detection/test')
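With `format_only=True` the evaluator writes the predictions to disk instead of computing metrics. The sketch below shows one way to inspect such a file afterwards. It assumes the output is a JSON list in the COCO results format (each entry having `image_id`, `category_id`, `bbox`, and `score`) and that, to my understanding, the bbox file is named `outfile_prefix` plus `.bbox.json`. The helper name `summarize_detections` and the `score_thr` parameter are hypothetical.

```python
import json
from collections import Counter


def summarize_detections(result_file, score_thr=0.3):
    """Count detections per category id whose score is at least score_thr."""
    with open(result_file, "r", encoding="utf-8") as f:
        detections = json.load(f)  # assumed: a list of COCO-style result dicts
    counts = Counter()
    for det in detections:
        if det["score"] >= score_thr:
            counts[det["category_id"]] += 1
    return dict(counts)
```

For the configuration above, a call might look like `summarize_detections('./work_dirs/coco_detection/test.bbox.json')`, provided the file name assumption holds for your version.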

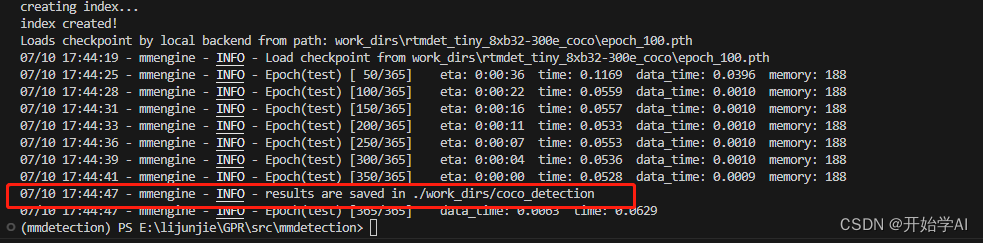

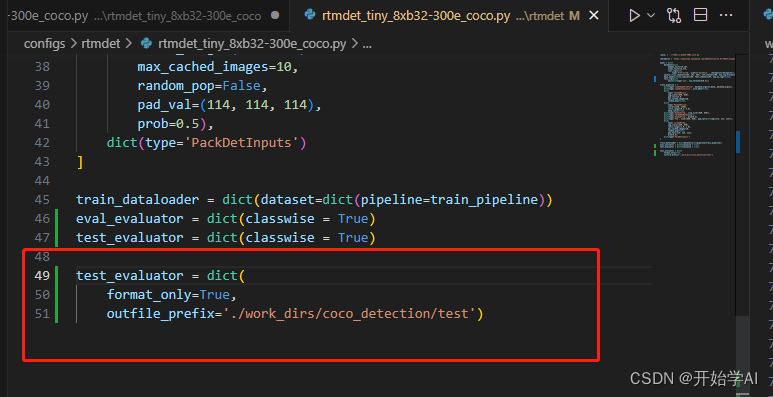

Method 2: override the two `test_evaluator` parameters, `format_only=True` and `outfile_prefix='./work_dirs/coco_detection/test'`, directly in your own config file.

Final result: