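Contents

- Overview

- Collecting metrics in Java with the Prometheus API

- Prometheus

- Visualizing in Grafana

Overview

While reading the InLong source code recently, I noticed that it monitors its metrics through JMX plus Prometheus.

This article extends that idea and shows how to display the metrics with JMX + Prometheus + Grafana. The metric-related code has been pulled out of InLong so that it can be explained on its own.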
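To make the JMX half of that combination concrete, here is a minimal sketch that uses only the JDK. It registers a bean with the platform MBean server and reads one attribute back. The names `JmxCounterSketch`, `DemoMetrics`, and the `demo.metrics` domain are made up for illustration and are not InLong's own classes.

```java
import java.lang.management.ManagementFactory;
import java.util.concurrent.atomic.AtomicLong;
import javax.management.MBeanServer;
import javax.management.ObjectName;

class JmxCounterSketch {

    /** Standard MBean contract: the interface name is the class name plus "MBean". */
    public interface DemoMetricsMBean {
        long getJobRunningCount();
    }

    /** Hypothetical metric holder; each getter becomes a JMX attribute. */
    public static class DemoMetrics implements DemoMetricsMBean {
        final AtomicLong jobRunningCount = new AtomicLong();

        @Override
        public long getJobRunningCount() {
            return jobRunningCount.get();
        }
    }

    /** Registers the bean, increments the counter, and reads it back through JMX. */
    static long registerAndRead() {
        try {
            MBeanServer mbs = ManagementFactory.getPlatformMBeanServer();
            // "demo.metrics" is an assumed domain name, chosen only for this sketch.
            ObjectName name = new ObjectName("demo.metrics:type=DemoMetrics");
            if (mbs.isRegistered(name)) {
                mbs.unregisterMBean(name);
            }
            DemoMetrics metrics = new DemoMetrics();
            mbs.registerMBean(metrics, name);
            metrics.jobRunningCount.addAndGet(3);
            // The attribute name is the getter name without the "get" prefix.
            long value = (Long) mbs.getAttribute(name, "JobRunningCount");
            mbs.unregisterMBean(name);
            return value;
        } catch (Exception e) {
            throw new IllegalStateException("JMX round trip failed", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(registerAndRead());
    }
}
```

While such a bean is registered, a tool like JConsole can show the same attribute, which is the view JMX provides independently of Prometheus.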

Collecting metrics in Java with the Prometheus API

Full code: https://download.csdn.net/download/zhangshenghang/88030454

Main class (uses the Prometheus HTTPServer):

public class AgentPrometheusMetricListener extends Collector implements MetricListener {

public static final String DEFAULT_DIMENSION_LABEL = "dimension";

public static final String HYPHEN_SYMBOL = "-";

private static final Logger LOGGER = LoggerFactory.getLogger(AgentPrometheusMetricListener.class);

protected HTTPServer httpServer;

private AgentMetricItem metricItem;

private Map<String, AtomicLong> metricValueMap = new ConcurrentHashMap<>();

private Map<String, MetricItemValue> dimensionMetricValueMap = new ConcurrentHashMap<>();

private List<String> dimensionKeys = new ArrayList<>(); // list of dimension keys, i.e. every field annotated with @Dimension on the monitored entities

public AgentPrometheusMetricListener() {

this.metricItem = new AgentMetricItem();

final MBeanServer mbs = ManagementFactory.getPlatformMBeanServer();

StringBuilder beanName = new StringBuilder();

beanName.append(JMX_DOMAIN).append(DOMAIN_SEPARATOR).append("type=AgentPrometheus");

String strBeanName = beanName.toString();

try {

ObjectName objName = new ObjectName(strBeanName);

mbs.registerMBean(metricItem, objName);

} catch (Exception ex) {

// pass the exception as the last argument without its own placeholder so SLF4J logs the stack trace
LOGGER.error("exception while register mbean:{}", strBeanName, ex);

}

// prepare metric value map

metricValueMap.put(M_JOB_RUNNING_COUNT, metricItem.jobRunningCount);

metricValueMap.put(M_JOB_FATAL_COUNT, metricItem.jobFatalCount);

metricValueMap.put(M_TASK_RUNNING_COUNT, metricItem.taskRunningCount);

metricValueMap.put(M_TASK_RETRYING_COUNT, metricItem.taskRetryingCount);

metricValueMap.put(M_TASK_FATAL_COUNT, metricItem.taskFatalCount);

metricValueMap.put(M_SINK_SUCCESS_COUNT, metricItem.sinkSuccessCount);

metricValueMap.put(M_SINK_FAIL_COUNT, metricItem.sinkFailCount);

metricValueMap.put(M_SOURCE_SUCCESS_COUNT, metricItem.sourceSuccessCount);

metricValueMap.put(M_SOURCE_FAIL_COUNT, metricItem.sourceFailCount);

metricValueMap.put(M_PLUGIN_READ_COUNT, metricItem.pluginReadCount);

metricValueMap.put(M_PLUGIN_SEND_COUNT, metricItem.pluginSendCount);

metricValueMap.put(M_PLUGIN_READ_FAIL_COUNT, metricItem.pluginReadFailCount);

metricValueMap.put(M_PLUGIN_SEND_FAIL_COUNT, metricItem.pluginSendFailCount);

metricValueMap.put(M_PLUGIN_READ_SUCCESS_COUNT, metricItem.pluginReadSuccessCount);

metricValueMap.put(M_PLUGIN_SEND_SUCCESS_COUNT, metricItem.pluginSendSuccessCount);

int metricsServerPort = 19090;

try {

this.httpServer = new HTTPServer(metricsServerPort);

this.register();

LOGGER.info("Starting prometheus metrics server on port {}", metricsServerPort);

} catch (IOException e) {

LOGGER.error("exception while register agent prometheus http server,error:{}", e.getMessage());

}

}

@Override

public List<MetricFamilySamples> collect() {

DefaultExports.initialize();

// exposed in Prometheus as agent_total (CounterMetricFamily appends the _total suffix automatically)

CounterMetricFamily totalCounter = new CounterMetricFamily("agent", "metrics_of_agent_node_total",

Arrays.asList(DEFAULT_DIMENSION_LABEL));

totalCounter.addMetric(Arrays.asList(M_JOB_RUNNING_COUNT), metricItem.jobRunningCount.get());

totalCounter.addMetric(Arrays.asList(M_JOB_FATAL_COUNT), metricItem.jobFatalCount.get());

totalCounter.addMetric(Arrays.asList(M_TASK_RUNNING_COUNT), metricItem.taskRunningCount.get());

totalCounter.addMetric(Arrays.asList(M_TASK_RETRYING_COUNT), metricItem.taskRetryingCount.get());

totalCounter.addMetric(Arrays.asList(M_TASK_FATAL_COUNT), metricItem.taskFatalCount.get());

totalCounter.addMetric(Arrays.asList(M_SINK_SUCCESS_COUNT), metricItem.sinkSuccessCount.get());

totalCounter.addMetric(Arrays.asList(M_SINK_FAIL_COUNT), metricItem.sinkFailCount.get());

totalCounter.addMetric(Arrays.asList(M_SOURCE_SUCCESS_COUNT), metricItem.sourceSuccessCount.get());

totalCounter.addMetric(Arrays.asList(M_SOURCE_FAIL_COUNT), metricItem.sourceFailCount.get());

totalCounter.addMetric(Arrays.asList(M_PLUGIN_READ_COUNT), metricItem.pluginReadCount.get());

totalCounter.addMetric(Arrays.asList(M_PLUGIN_SEND_COUNT), metricItem.pluginSendCount.get());

totalCounter.addMetric(Arrays.asList(M_PLUGIN_READ_FAIL_COUNT), metricItem.pluginReadFailCount.get());

totalCounter.addMetric(Arrays.asList(M_PLUGIN_SEND_FAIL_COUNT), metricItem.pluginSendFailCount.get());

totalCounter.addMetric(Arrays.asList(M_PLUGIN_READ_SUCCESS_COUNT), metricItem.pluginReadSuccessCount.get());

totalCounter.addMetric(Arrays.asList(M_PLUGIN_SEND_SUCCESS_COUNT), metricItem.pluginSendSuccessCount.get());

List<MetricFamilySamples> mfs = new ArrayList<>();

mfs.add(totalCounter);

// emit the statistics for each dimension

for (Entry<String, MetricItemValue> entry : this.dimensionMetricValueMap.entrySet()) {

MetricItemValue itemValue = entry.getValue();

Map<String, String> dimensionMap = itemValue.getDimensions();

// take the plugin ID from the task configuration

String pluginId = dimensionMap.getOrDefault(KEY_PLUGIN_ID, HYPHEN_SYMBOL);

String componentName = dimensionMap.getOrDefault(KEY_COMPONENT_NAME, HYPHEN_SYMBOL);

// metric name

String counterName = pluginId.equals(HYPHEN_SYMBOL) ? componentName : pluginId;

List<String> dimensionIdKeys = new ArrayList<>();

dimensionIdKeys.add(DEFAULT_DIMENSION_LABEL);

dimensionIdKeys.addAll(dimensionMap.keySet());

// arguments: metric name, help text, label names that identify the dimension

CounterMetricFamily idCounter = new CounterMetricFamily(counterName,

"metrics_of_agent_dimensions_" + counterName, dimensionIdKeys);

addCounterMetricFamily(M_JOB_RUNNING_COUNT, itemValue, idCounter);

addCounterMetricFamily(M_JOB_FATAL_COUNT, itemValue, idCounter);

addCounterMetricFamily(M_TASK_RUNNING_COUNT, itemValue, idCounter);

addCounterMetricFamily(M_TASK_RETRYING_COUNT, itemValue, idCounter);

addCounterMetricFamily(M_TASK_FATAL_COUNT, itemValue, idCounter);

addCounterMetricFamily(M_SINK_SUCCESS_COUNT, itemValue, idCounter);

addCounterMetricFamily(M_SINK_FAIL_COUNT, itemValue, idCounter);

addCounterMetricFamily(M_SOURCE_SUCCESS_COUNT, itemValue, idCounter);

addCounterMetricFamily(M_SOURCE_FAIL_COUNT, itemValue, idCounter);

addCounterMetricFamily(M_PLUGIN_READ_COUNT, itemValue, idCounter);

addCounterMetricFamily(M_PLUGIN_SEND_COUNT, itemValue, idCounter);

addCounterMetricFamily(M_PLUGIN_READ_FAIL_COUNT, itemValue, idCounter);

addCounterMetricFamily(M_PLUGIN_SEND_FAIL_COUNT, itemValue, idCounter);

addCounterMetricFamily(M_PLUGIN_READ_SUCCESS_COUNT, itemValue, idCounter);

addCounterMetricFamily(M_PLUGIN_SEND_SUCCESS_COUNT, itemValue, idCounter);

mfs.add(idCounter);

}

return mfs;

}

@Override

public void snapshot(String domain, List<MetricItemValue> itemValues) {

System.out.println("domain:" + domain + "metricItem1 = " + JSONUtil.toJsonStr(metricItem));

for (MetricItemValue itemValue : itemValues) {

// sum each metric across all dimensions

for (Entry<String, MetricValue> entry : itemValue.getMetrics().entrySet()) {

String fieldName = entry.getValue().name;

AtomicLong metricValue = this.metricValueMap.get(fieldName);

if (metricValue != null) {

long fieldValue = entry.getValue().value;

metricValue.addAndGet(fieldValue);

}

}

// unique key that identifies this dimension combination

String dimensionKey = itemValue.getKey();

// dimensionMetricValue holds the running totals for this dimension

MetricItemValue dimensionMetricValue = this.dimensionMetricValueMap.get(dimensionKey);

if (dimensionMetricValue == null) { // first time this dimension is seen

dimensionMetricValue = new MetricItemValue(dimensionKey, new ConcurrentHashMap<>(),

new ConcurrentHashMap<>());

this.dimensionMetricValueMap.putIfAbsent(dimensionKey, dimensionMetricValue);

dimensionMetricValue = this.dimensionMetricValueMap.get(dimensionKey);

dimensionMetricValue.getDimensions().putAll(itemValue.getDimensions());

// add prometheus label name

for (Entry<String, String> entry : itemValue.getDimensions().entrySet()) {

if (!this.dimensionKeys.contains(entry.getKey())) {

this.dimensionKeys.add(entry.getKey());

}

}

}

// iterate over the individual metrics

for (Entry<String, MetricValue> entry : itemValue.getMetrics().entrySet()) {

String fieldName = entry.getValue().name; // metric name

MetricValue metricValue = dimensionMetricValue.getMetrics().get(fieldName); // previously accumulated value

if (metricValue == null) {

// first observation: store the value as-is

metricValue = MetricValue.of(fieldName, entry.getValue().value);

dimensionMetricValue.getMetrics().put(metricValue.name, metricValue);

continue;

}

// add this snapshot's value to the running total

metricValue.value += entry.getValue().value;

}

}

System.out.println("metricItem2 = " + JSONUtil.toJsonStr(metricItem));

}

private void addCounterMetricFamily(String defaultDimension, MetricItemValue itemValue,

CounterMetricFamily idCounter) {

Map<String, String> dimensionMap = itemValue.getDimensions();

List<String> labelValues = new ArrayList<>(dimensionMap.size() + 1);

labelValues.add(defaultDimension); // the metric name goes first, e.g. jobRunningCount

for (String key : dimensionMap.keySet()) {

String labelValue = dimensionMap.getOrDefault(key, HYPHEN_SYMBOL);

labelValues.add(labelValue);

}

long value = 0L;

Map<String, MetricValue> metricValueMap = itemValue.getMetrics();

MetricValue metricValue = metricValueMap.get(defaultDimension);

if (metricValue != null) {

value = metricValue.value;

}

idCounter.addMetric(labelValues, value);

}

}
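The core of `snapshot` is an accumulation step: each incoming value is added both to a global total and to a total kept per dimension key. The sketch below isolates that idea with plain JDK collections. It is a simplified stand-in and not the listener's actual implementation: the class and method names are hypothetical, it swaps in `computeIfAbsent` with `AtomicLong` for the listener's `value +=` update, and it creates global entries on demand where the listener only tracks its predefined metric names.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

class SnapshotAccumulatorSketch {

    // Running totals across all dimensions, keyed by metric name.
    private final Map<String, AtomicLong> totals = new ConcurrentHashMap<>();

    // Running totals per dimension key, then per metric name.
    private final Map<String, Map<String, AtomicLong>> perDimension = new ConcurrentHashMap<>();

    /** Folds one snapshot value into both the global and the per-dimension totals. */
    void record(String dimensionKey, String metricName, long delta) {
        totals.computeIfAbsent(metricName, k -> new AtomicLong()).addAndGet(delta);
        perDimension.computeIfAbsent(dimensionKey, k -> new ConcurrentHashMap<>())
                .computeIfAbsent(metricName, k -> new AtomicLong())
                .addAndGet(delta);
    }

    /** Global total for a metric, or 0 if it was never recorded. */
    long total(String metricName) {
        AtomicLong v = totals.get(metricName);
        return v == null ? 0L : v.get();
    }

    /** Total for a metric within one dimension, or 0 if it was never recorded. */
    long total(String dimensionKey, String metricName) {
        Map<String, AtomicLong> metrics = perDimension.get(dimensionKey);
        AtomicLong v = metrics == null ? null : metrics.get(metricName);
        return v == null ? 0L : v.get();
    }

    public static void main(String[] args) {
        SnapshotAccumulatorSketch acc = new SnapshotAccumulatorSketch();
        acc.record("groupId1", "pluginSendCount", 10);
        acc.record("groupId2", "pluginSendCount", 5);
        acc.record("groupId1", "pluginSendCount", 2);
        System.out.println(acc.total("pluginSendCount"));             // 17
        System.out.println(acc.total("groupId1", "pluginSendCount")); // 12
    }
}
```

Using an atomic update per entry avoids lost increments if two snapshots arrive concurrently, which a plain `+=` on a shared field does not guarantee.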

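After starting the program, open the bound port at http://ip:19090/ to fetch the metrics. The output looks like the following and includes the metrics reported by our own program: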

# HELP process_cpu_seconds_total Total user and system CPU time spent in seconds.

# TYPE process_cpu_seconds_total counter

process_cpu_seconds_total 12.819136

# HELP process_start_time_seconds Start time of the process since unix epoch in seconds.

# TYPE process_start_time_seconds gauge

process_start_time_seconds 1.688973202094E9

# HELP process_open_fds Number of open file descriptors.

# TYPE process_open_fds gauge

process_open_fds 57.0

# HELP process_max_fds Maximum number of open file descriptors.

# TYPE process_max_fds gauge

process_max_fds 10240.0

# HELP agent_total metrics_of_agent_node_total

# TYPE agent_total counter

agent_total{dimension="jobRunningCount",} 3370.0

agent_total{dimension="jobFatalCount",} 0.0

agent_total{dimension="taskRunningCount",} -90.0

agent_total{dimension="taskRetryingCount",} 0.0

agent_total{dimension="taskFatalCount",} 0.0

agent_total{dimension="sinkSuccessCount",} 0.0

agent_total{dimension="sinkFailCount",} 0.0

agent_total{dimension="sourceSuccessCount",} 0.0

agent_total{dimension="sourceFailCount",} 0.0

agent_total{dimension="pluginReadCount",} 0.0

agent_total{dimension="pluginSendCount",} 6740.0

agent_total{dimension="pluginReadFailCount",} 0.0

agent_total{dimension="pluginSendFailCount",} 0.0

agent_total{dimension="pluginReadSuccessCount",} 0.0

agent_total{dimension="pluginSendSuccessCount",} 0.0

# HELP AServer_total metrics_of_agent_dimensions_AServer

# TYPE AServer_total counter

AServer_total{dimension="jobRunningCount",streamId="streamId",pluginId="AServer",groupId="groupId1",} 1685.0

AServer_total{dimension="jobFatalCount",streamId="streamId",pluginId="AServer",groupId="groupId1",} 0.0

AServer_total{dimension="taskRunningCount",streamId="streamId",pluginId="AServer",groupId="groupId1",} -19.0

AServer_total{dimension="taskRetryingCount",streamId="streamId",pluginId="AServer",groupId="groupId1",} 0.0

AServer_total{dimension="taskFatalCount",streamId="streamId",pluginId="AServer",groupId="groupId1",} 0.0

AServer_total{dimension="sinkSuccessCount",streamId="streamId",pluginId="AServer",groupId="groupId1",} 0.0

AServer_total{dimension="sinkFailCount",streamId="streamId",pluginId="AServer",groupId="groupId1",} 0.0

AServer_total{dimension="sourceSuccessCount",streamId="streamId",pluginId="AServer",groupId="groupId1",} 0.0

AServer_total{dimension="sourceFailCount",streamId="streamId",pluginId="AServer",groupId="groupId1",} 0.0

AServer_total{dimension="pluginReadCount",streamId="streamId",pluginId="AServer",groupId="groupId1",} 0.0

AServer_total{dimension="pluginSendCount",streamId="streamId",pluginId="AServer",groupId="groupId1",} 3370.0

AServer_total{dimension="pluginReadFailCount",streamId="streamId",pluginId="AServer",groupId="groupId1",} 0.0

AServer_total{dimension="pluginSendFailCount",streamId="streamId",pluginId="AServer",groupId="groupId1",} 0.0

AServer_total{dimension="pluginReadSuccessCount",streamId="streamId",pluginId="AServer",groupId="groupId1",} 0.0

AServer_total{dimension="pluginSendSuccessCount",streamId="streamId",pluginId="AServer",groupId="groupId1",} 0.0

# HELP AServer_total metrics_of_agent_dimensions_AServer

# TYPE AServer_total counter

AServer_total{dimension="jobRunningCount",streamId="streamId",pluginId="AServer",groupId="groupId2",} 1685.0

AServer_total{dimension="jobFatalCount",streamId="streamId",pluginId="AServer",groupId="groupId2",} 0.0

AServer_total{dimension="taskRunningCount",streamId="streamId",pluginId="AServer",groupId="groupId2",} -71.0

AServer_total{dimension="taskRetryingCount",streamId="streamId",pluginId="AServer",groupId="groupId2",} 0.0

AServer_total{dimension="taskFatalCount",streamId="streamId",pluginId="AServer",groupId="groupId2",} 0.0

AServer_total{dimension="sinkSuccessCount",streamId="streamId",pluginId="AServer",groupId="groupId2",} 0.0

AServer_total{dimension="sinkFailCount",streamId="streamId",pluginId="AServer",groupId="groupId2",} 0.0

AServer_total{dimension="sourceSuccessCount",streamId="streamId",pluginId="AServer",groupId="groupId2",} 0.0

AServer_total{dimension="sourceFailCount",streamId="streamId",pluginId="AServer",groupId="groupId2",} 0.0

AServer_total{dimension="pluginReadCount",streamId="streamId",pluginId="AServer",groupId="groupId2",} 0.0

AServer_total{dimension="pluginSendCount",streamId="streamId",pluginId="AServer",groupId="groupId2",} 3370.0

AServer_total{dimension="pluginReadFailCount",streamId="streamId",pluginId="AServer",groupId="groupId2",} 0.0

AServer_total{dimension="pluginSendFailCount",streamId="streamId",pluginId="AServer",groupId="groupId2",} 0.0

AServer_total{dimension="pluginReadSuccessCount",streamId="streamId",pluginId="AServer",groupId="groupId2",} 0.0

AServer_total{dimension="pluginSendSuccessCount",streamId="streamId",pluginId="AServer",groupId="groupId2",} 0.0

....
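Each sample line above pairs label names with label values by position. In the listener, the names come from `dimensionMap.keySet()` in `collect()` and the values from the same key set in `addCounterMetricFamily`, so the two lists have to line up. The following sketch renders one such line by hand to show that pairing. It is not the Prometheus client's own writer, and the class and method names are hypothetical.

```java
import java.util.Arrays;
import java.util.List;

class ExpositionLineSketch {

    /** Renders one sample line, matching label names to values by index. */
    static String sampleLine(String metric, List<String> labelNames,
                             List<String> labelValues, double value) {
        if (labelNames.size() != labelValues.size()) {
            throw new IllegalArgumentException("label names and values must have the same length");
        }
        StringBuilder sb = new StringBuilder(metric);
        if (!labelNames.isEmpty()) {
            sb.append('{');
            for (int i = 0; i < labelNames.size(); i++) {
                if (i > 0) {
                    sb.append(',');
                }
                sb.append(labelNames.get(i))
                  .append("=\"")
                  .append(escape(labelValues.get(i)))
                  .append('"');
            }
            sb.append('}');
        }
        return sb.append(' ').append(value).toString();
    }

    /** Escapes backslash, double quote, and newline inside a label value. */
    static String escape(String v) {
        return v.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    public static void main(String[] args) {
        System.out.println(sampleLine(
                "AServer_total",
                Arrays.asList("dimension", "groupId"),
                Arrays.asList("pluginSendCount", "groupId1"),
                3370));
        // AServer_total{dimension="pluginSendCount",groupId="groupId1"} 3370.0
    }
}
```

The real output above carries a trailing comma inside the braces; the sketch leaves it out for readability. One more observation on the sample data: values such as taskRunningCount go negative, while a Prometheus counter is meant to only increase, so a gauge family may be a better fit for "running" style metrics. That is a suggestion, not something the original code does.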

Prometheus

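The code above exposes the metrics in a format Prometheus can read. Next, we configure Prometheus so that it scrapes them.

Edit the Prometheus configuration file /etc/prometheus/prometheus.yml and add the port opened by our program: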

# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"

    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.

    static_configs:
      - targets: ["localhost:9090"]

  # the port opened by our program
  - job_name: "Jast Monitor"
    static_configs:
      - targets: ['192.168.1.41:19090']
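A scrape is essentially an HTTP GET against the target (on `/metrics` by default, as the config comments note), so it can help to confirm from the Prometheus host that the endpoint answers before looking at the Targets page. The sketch below does that with JDK classes only. The class name and the canned local endpoint are made up for the demonstration; pass the real target URL as an argument to check the actual program.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.InetSocketAddress;
import java.net.URL;
import java.nio.charset.StandardCharsets;

class ScrapeCheckSketch {

    /** Performs a plain HTTP GET and returns the response body as text. */
    static String fetch(String url) {
        try {
            HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
            conn.setConnectTimeout(3000);
            conn.setReadTimeout(3000);
            try (InputStream in = conn.getInputStream()) {
                ByteArrayOutputStream out = new ByteArrayOutputStream();
                byte[] buf = new byte[4096];
                int n;
                while ((n = in.read(buf)) != -1) {
                    out.write(buf, 0, n);
                }
                return new String(out.toByteArray(), StandardCharsets.UTF_8);
            } finally {
                conn.disconnect();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Starts a throwaway local endpoint with a canned body, fetches it once, then stops it. */
    static String demo() {
        try {
            // Port 0 lets the OS pick a free port; this server only stands in for the real program.
            HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
            byte[] body = "agent_total{dimension=\"pluginSendCount\"} 6740.0\n"
                    .getBytes(StandardCharsets.UTF_8);
            server.createContext("/metrics", exchange -> {
                exchange.sendResponseHeaders(200, body.length);
                try (OutputStream os = exchange.getResponseBody()) {
                    os.write(body);
                }
            });
            server.start();
            try {
                return fetch("http://127.0.0.1:" + server.getAddress().getPort() + "/metrics");
            } finally {
                server.stop(0);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // With an argument such as http://192.168.1.41:19090/metrics the real target is checked.
        System.out.println(args.length > 0 ? fetch(args[0]) : demo());
    }
}
```

If the fetch against the real address fails or times out, the target will most likely show as down in Prometheus as well, which narrows the problem to networking or the program itself rather than the Prometheus configuration.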

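Restart the Prometheus service.

Open the Prometheus web UI and go to Status -> Targets.

The target we configured is listed there.

At this point Prometheus is scraping the metrics from our program.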

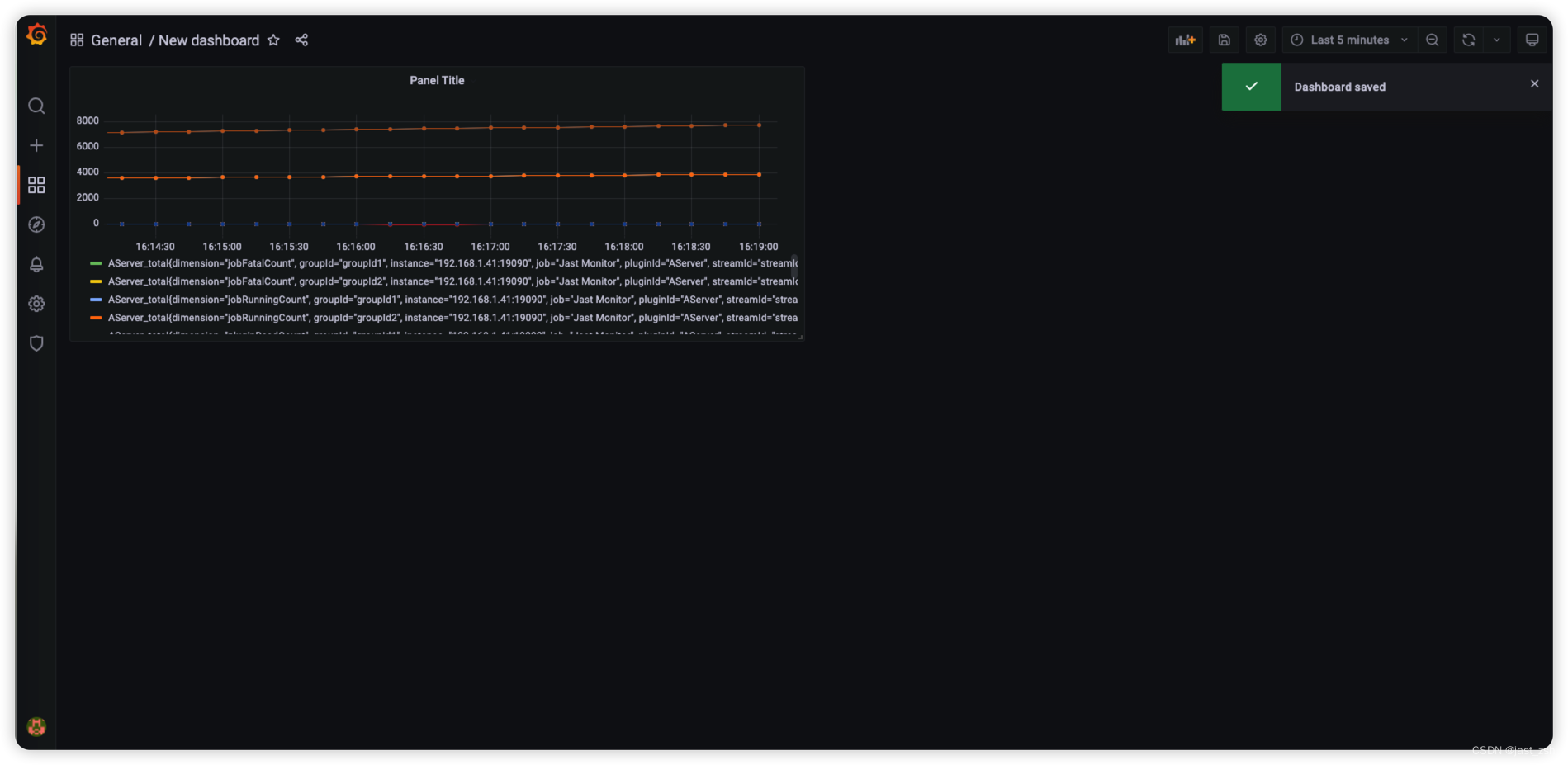

Visualizing in Grafana

Installing Grafana is not covered here; please install it yourself.

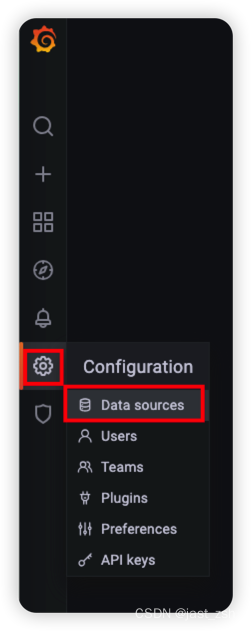

Log in to Grafana and add a data source under Data Sources.

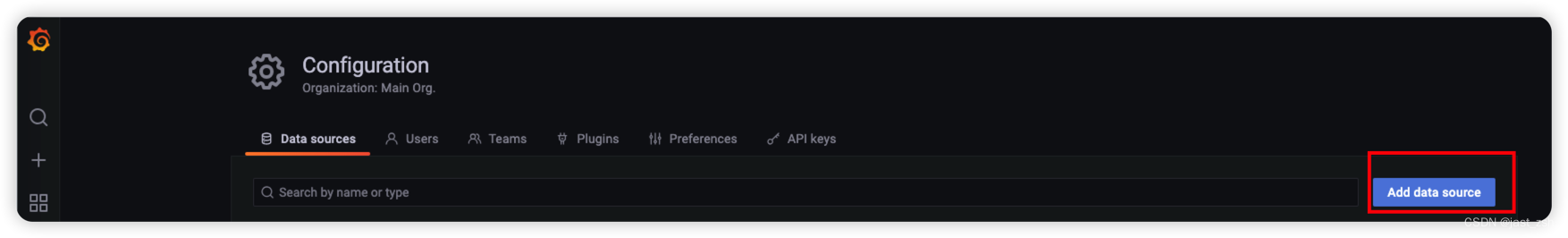

Click Add data source.

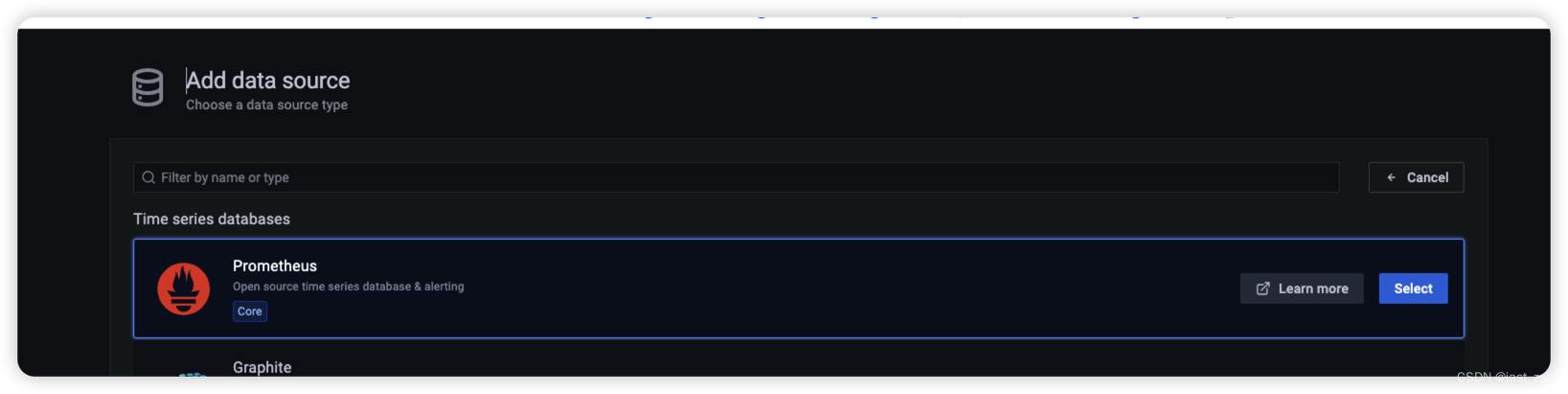

Select Prometheus.

Enter the Prometheus address.

Scroll to the bottom and click Save & test. On success, the message "Data source is working" appears.

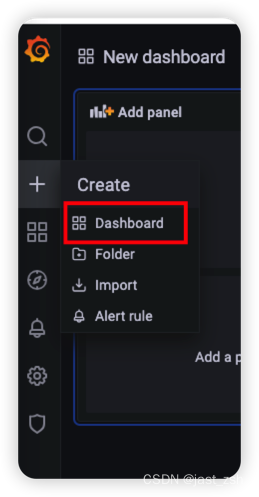

Create a dashboard and configure a panel.

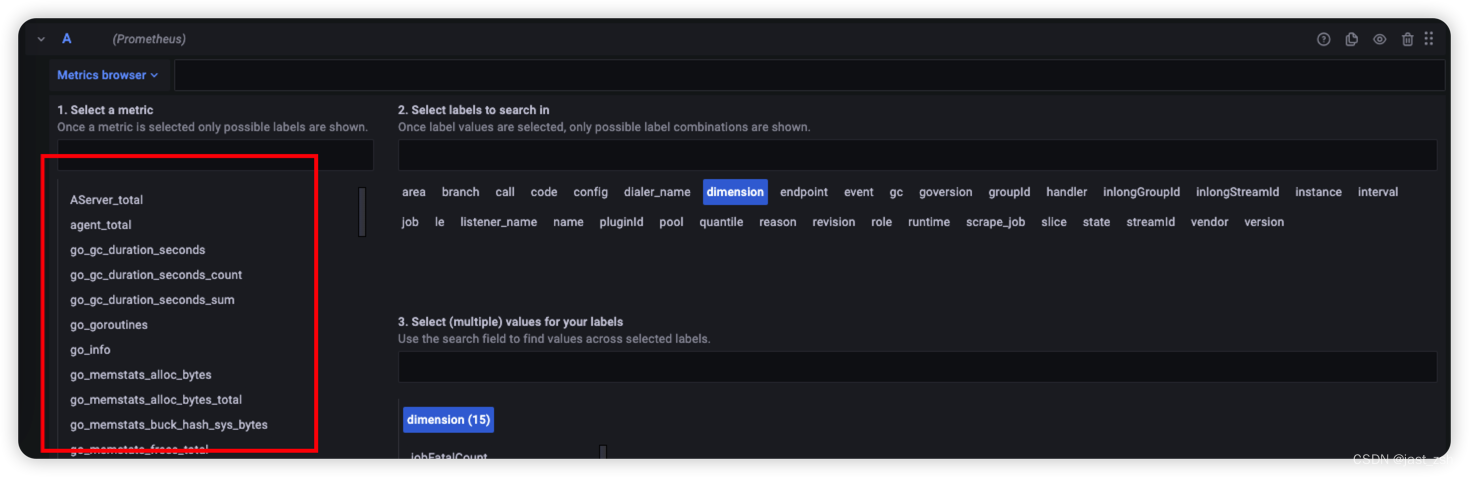

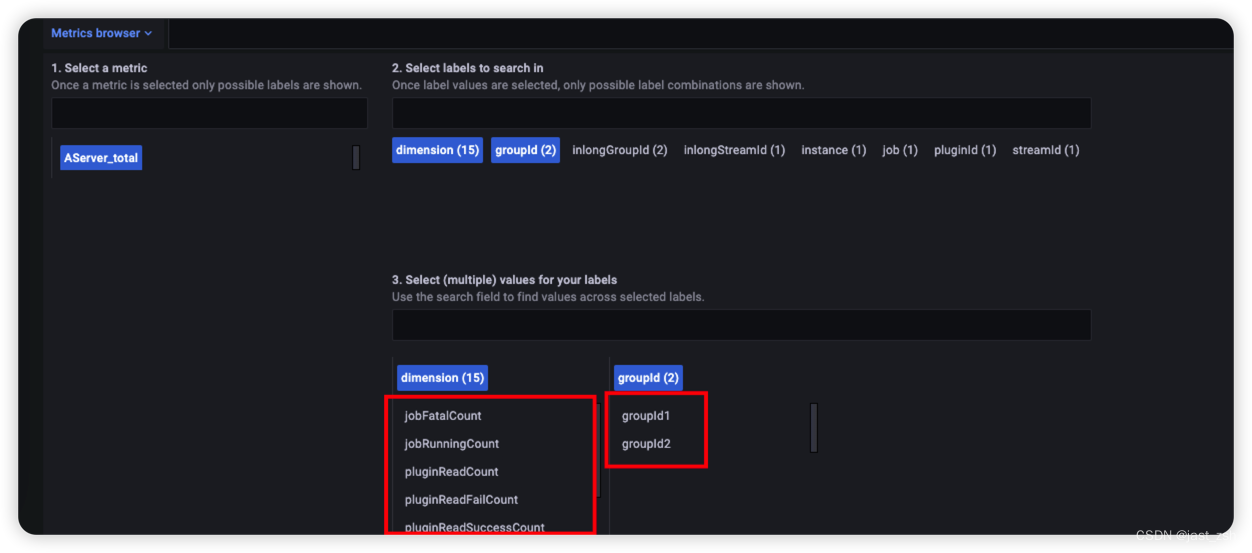

Click Metrics browser to expand it.

Select the metrics to display (AServer_total and agent_total are the metrics defined in our own code).

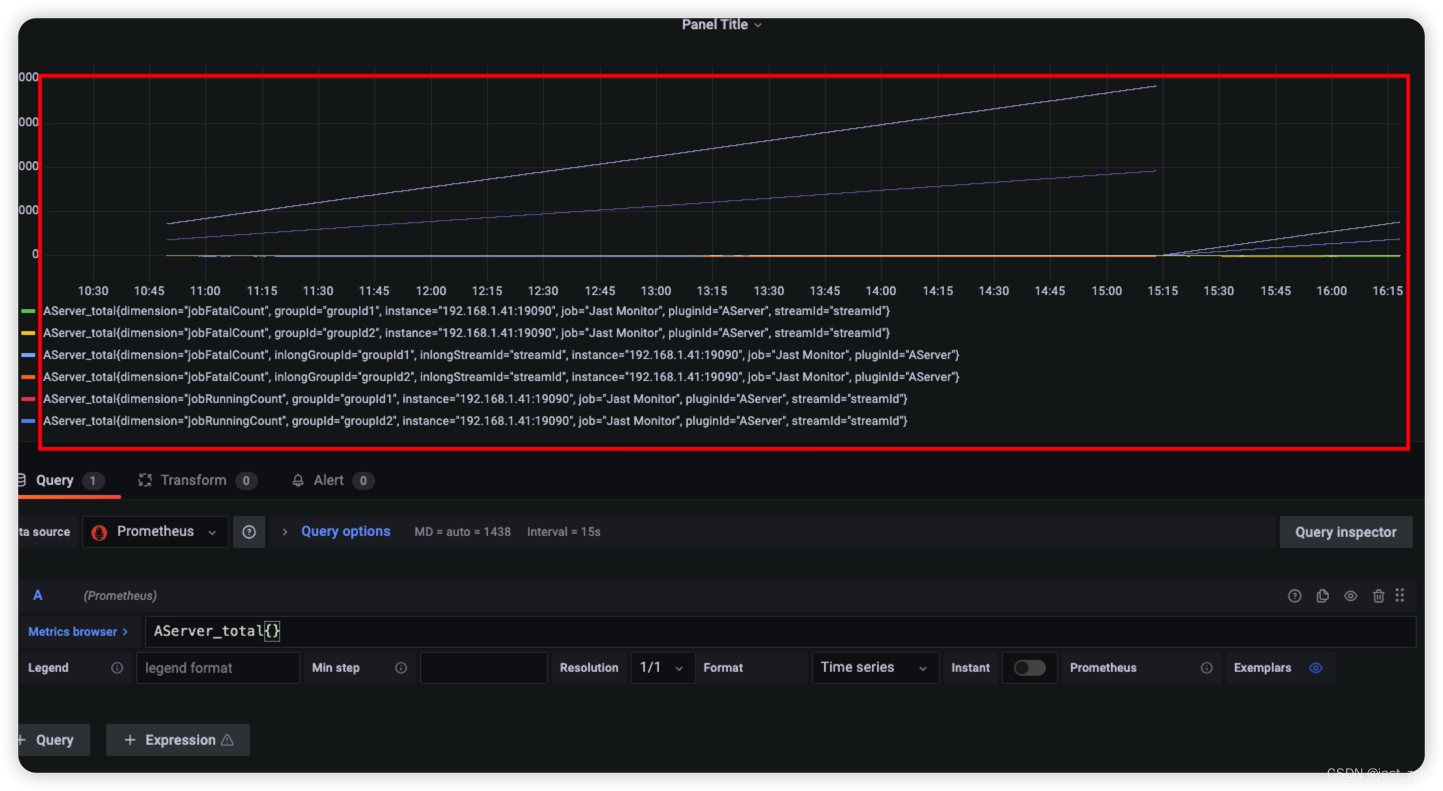

Click Use query.

The queried data is then displayed in the panel.

Click Save, and the metrics can be viewed in the Dashboard.