BERT: Pre-training of Deep Bidirectional Transformers for

Language Understanding

1 论文解读

1.1 模型概览

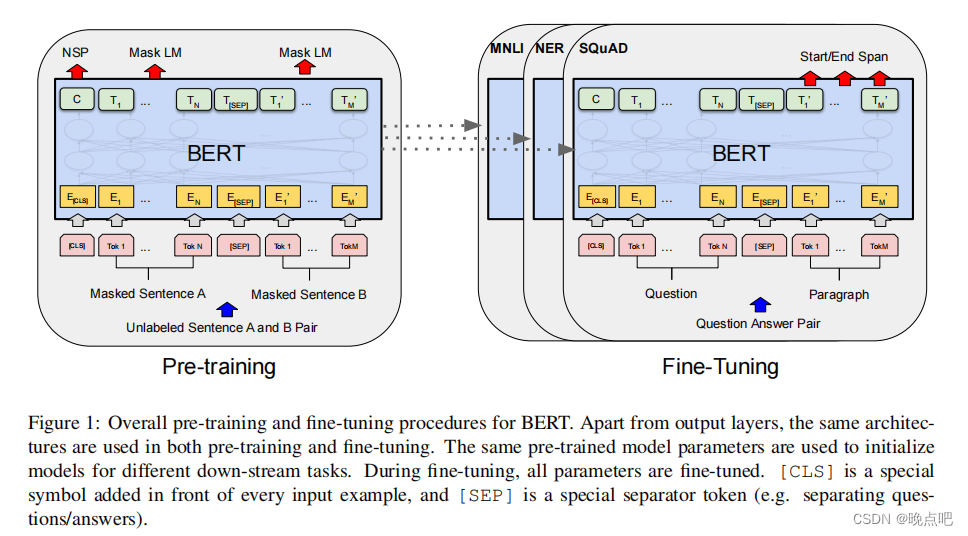

- There are two steps in our framework: pre-training and fine-tuning.

bert由预训练模型+微调模型组成。

① pre-training, the model is trained on unlabeled data over different pre-training tasks.

预训练模型是在无标注数据上训练的

②For fine-tuning, the BERT model is first initialized with

the pre-trained parameters, and all of the parameters are fine-tuned using labeled data from the downstream tasks

微调模型在有监督任务上训练的,不通下游任务训练不同的bert模型,尽管预训练模型一样,不同下游任务都有单独的微调模型

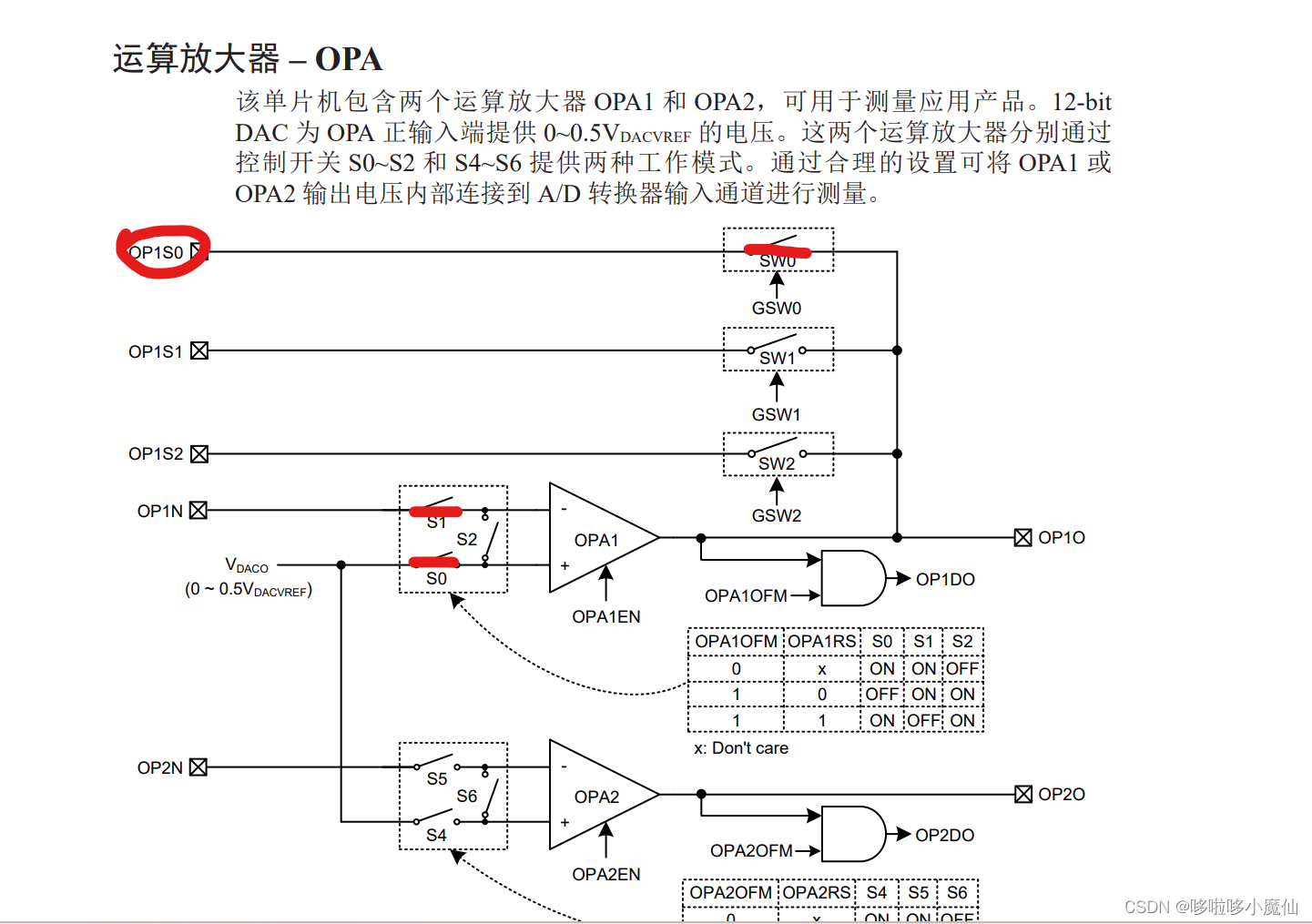

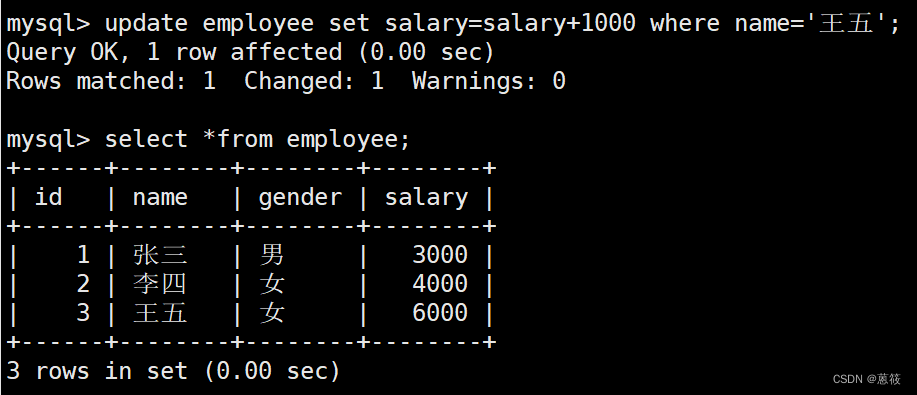

下图中,左边为预训练的bert模型,右边为问题答案匹配微调模型。

1.2 模型架构

- BERT’s model architecture is a multi-layer bidirectional Transformer encoder

bert模型架构是基于原始transorformer的encoder层

常用参数表示:

L : Transformer blocks 的层数

H: embedding的维度大小

A: 多头注意力机制中的头数,代表使用多少个self attention

BERTBASE (L=12, H=768, A=12, Total Parameters=110M) and BERTLARGE (L=24, H=1024,A=16, Total Parameters=340M).

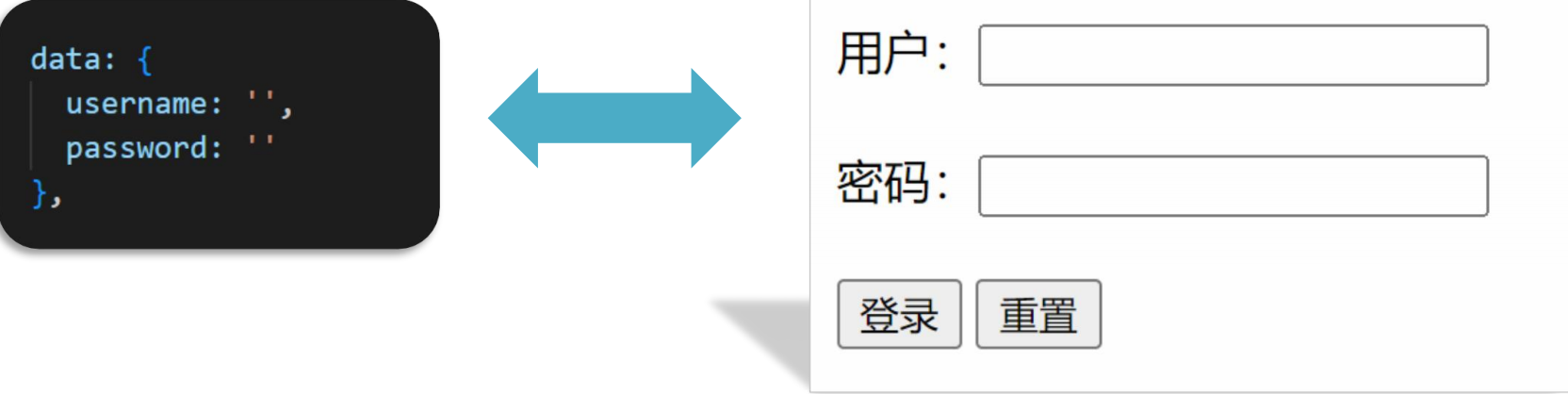

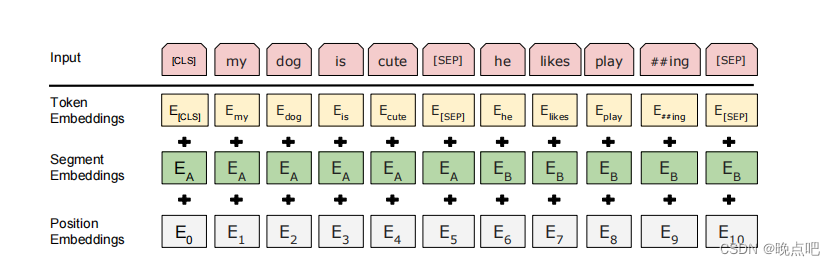

模型输入:

-

将两个句子拼接在一起,组成单个句子,句子之间使用 [SEP] token连接

-

在句子第一个位置加入 [CLS] token ,代表了这个句子的聚合信息。

-

单独构建句子token 拼接句子用0,1和来表示每个字是属于句子1,还是句子0。

For a given token, its input representation is constructed by summing the corresponding token,segment, and position embeddings。

对应给定的一个token,embedding 表征由三部分组成,分别由字embeddign, segment(句子) embedding,和位置embedding相加而成。,如下图所示。

1.3 预训练BERT

模型完成两个任务;

任务①: Task #1: Masked LM

任务②:下一个句子预测

Task #1: Masked LM

-In order to train a deep bidirectional representation, we simply mask some percentage of the input tokens at random, and then predict those masked tokens.

为了学习句子的双向表征,通过一定比例随机mask句子中的一些token,然后去预测这些token。

-

In all of our experiments, we mask 15% of all WordPiece tokens in each sequence at random

我们在每个句子中随机mask 掉15%的tokens -

Although this allows us to obtain a bidirectional pre-trained model, a downside is that we are creating a mismatch between pre-training and fine-tuning, since the [MASK] token does not appear during fine-tuning.

尽管这种mask机制可以解决双向模型训练问题,但是由于在模型fine-tuning时,并没有mask tokens,导致pre-training 和 fine-tuning节点不一致。 -

我们采样如下策略处理上面问题

① 每个句子随机选择15%的token进行mask

② 在选择的mask token中,其中80%替换为[mask],10%被随机替换为其他词,剩下10%保持原token不变

Task #2: Next Sentence Prediction (NSP)

背景:许多下游任务是基于2个句子完成的,如 Question Answering (QA) 、 Natural Language Inference (NLI)。

In order to train a model that understands sentence relationships, we pre-train for a binarized next sentence prediction task

任务设计:

- 对每一个训练,样本选择句子A和句子B

- 50%的句子B,真的是句子A的下一句,label=IsNext

- 50%的句子B随机从预料库中选择的,label=NotNext

1.4 Fine-tuning BERT

For applications involving text pairs, a common pattern is to independently encode text pairs before applying bidirectional cross attention BERT instead uses the self-attention mechanism to unify these two stages, as encoding a concatenated text pair with self-attention effectively includes bidirectional cross attention between two sentences.