文章目录

- 写在前面

- 一、ReplicationController(RC)

- 1、官方解释

- 2、举个例子

- 3、小总结

- 二、ReplicaSet(RS)

- 1、官方解释

- 2、举个例子

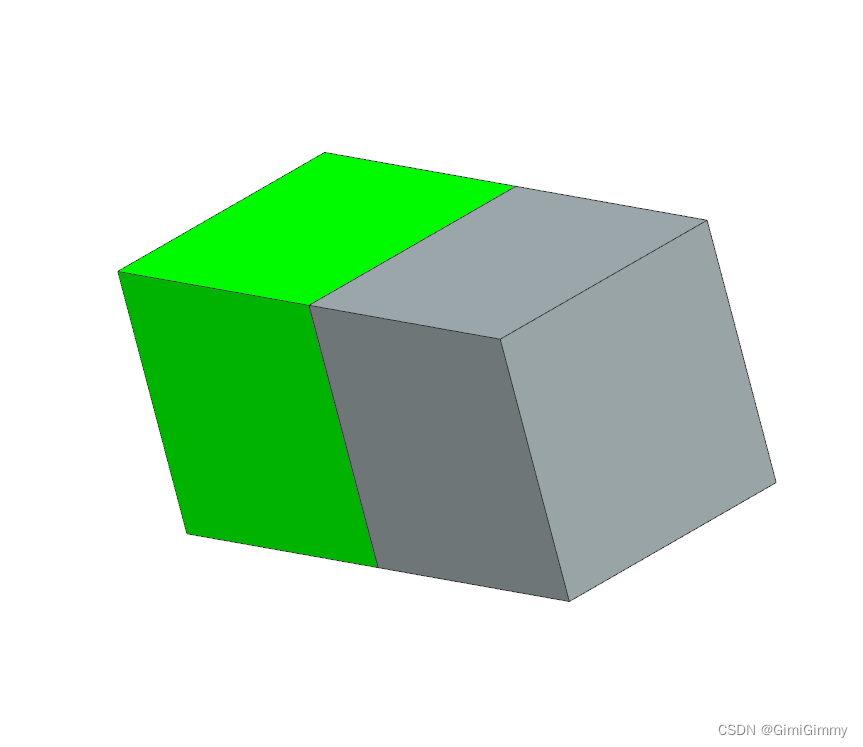

- 三、Deployment(用的最多)

- 1、官方解释

- 2、举个例子

- (1)创建nginx_deployment.yaml文件

- (2)版本滚动更新

- 3、注意事项

- 四、Labels and Selectors

- 1、官方解释

- 2、举个例子

- 五、Namespace

- 1、什么是Namespace

- 2、创建命名空间

- 3、创建指定命名空间下的pod

- 六、Network

- 1、回顾docker的网络

- (1)单机docker

- (2)docker-swarm 多机集群

- (3)k8s中pod网络

- 2、集群内Pod与Node之间的通信

- (1)案例分析

- (2)How to implement the Kubernetes Cluster networking model--Calico

- 3、集群内Service-Cluster IP

- (1)官方描述

- (2)举个例子:pod地址不稳定

- (3)创建service

- (4)查看service详细信息

- (5)使用yaml创建service

- (6)小总结

- 4、外部服务访问集群中的pod:Service-NodePort(不推荐)

- (1)举个例子

- (2)总结

- (3)使用yaml文件一键部署

- 5、外部服务访问集群中的pod:Service-LoadBalance(不推荐)

- 6、外部服务访问集群中的pod:Ingress(推荐)

- (1)官网解释

- (2)举个例子

- ① 先删除之前的tomcat及service

- ② 部署tomcat

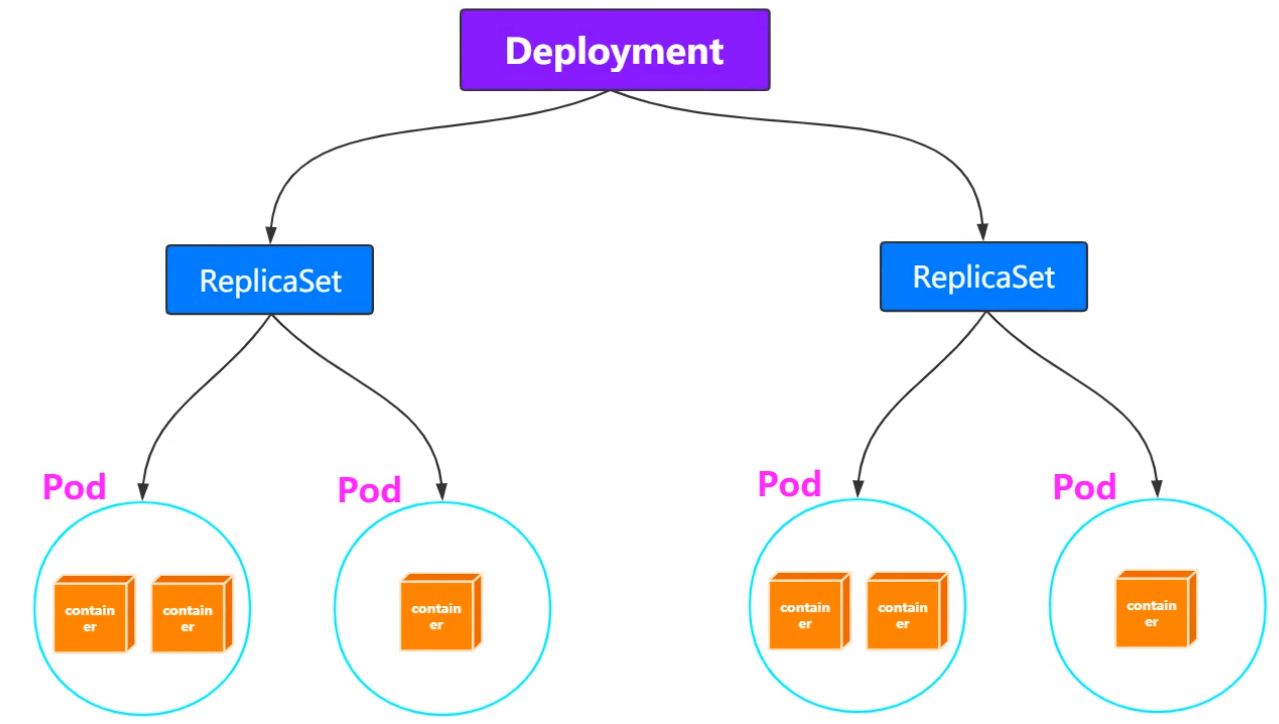

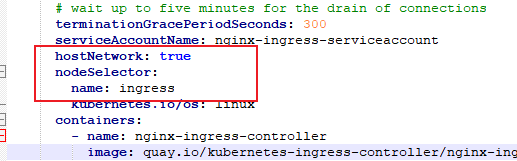

- ③ 部署ingress-controller

- ④ 创建ingress并定义转发规则

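Preface

Note: for setting up the cluster itself, see my earlier article:

Kubernetes (k8s) cluster setup: complete, pitfall-free, no need to get around network restrictions~

Controllers official documentation: https://kubernetes.io/docs/concepts/workloads/controllers/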

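I. ReplicationController (RC)

1. Official explanation

Official docs: https://kubernetes.io/docs/concepts/workloads/controllers/replicationcontroller/

Original text: A ReplicationController ensures that a specified number of pod replicas are running at any one time. In other words, a ReplicationController makes sure that a pod or a homogeneous set of pods is always up and available.

A ReplicationController describes a desired state: at any moment, the number of replicas of a certain kind of Pod should match an expected value. An RC definition therefore consists of the following parts:

- The desired number of Pod replicas (replicas)
- A Label Selector used to select the target Pods
- A Pod template (template) used to create new Pods whenever there are fewer replicas than desired

In other words, an RC provides high availability for the Pods in a cluster and removes much of the manual operations work of a traditional IT environment.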

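2. An example

(1) Create a file named nginx_replication.yaml

kind: the type of object to create.

spec.selector: the labels of the Pods to be managed; here, every Pod carrying the label app: nginx is managed by this RC.

spec.replicas: the number of replicas of the managed Pods that should be running; the RC always keeps the Pod count at this number.

spec.template: the template that defines the Pod, such as the Pod name, its labels, and the application running in it.

Changing the image version in the RC's Pod template lets you upgrade the Pods (Pods created after the change use the new image; the RC does not replace existing Pods on its own).

After kubectl apply -f nginx_replication.yaml, k8s creates 3 Pods in total, scheduled across the available Nodes. Each Pod carries the label app: nginx and runs one nginx container.

If a Pod runs into a problem, the Controller Manager notices it promptly and creates a new Pod according to the RC definition.

Scaling: kubectl scale rc nginx --replicas=5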

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx
spec:
  replicas: 3
  selector:
    app: nginx
  template:
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80

# Edit the yaml file
[root@m ~]# vi nginx_replication.yaml
# Create the RC (which creates the Pods)
[root@m ~]# kubectl apply -f nginx_replication.yaml
replicationcontroller/nginx created
# List the Pods
[root@m ~]# kubectl get pods
NAME          READY   STATUS              RESTARTS   AGE
nginx-2fw2t   0/1     ContainerCreating   0          15s
nginx-hqcwh   0/1     ContainerCreating   0          15s
nginx-sks62   0/1     ContainerCreating   0          15s
# Show more details
[root@m ~]# kubectl get pods -o wide
NAME          READY   STATUS    RESTARTS   AGE   IP               NODE   NOMINATED NODE   READINESS GATES
nginx-2fw2t   1/1     Running   0          75s   192.168.80.196   w2     <none>           <none>
nginx-hqcwh   1/1     Running   0          75s   192.168.190.68   w1     <none>           <none>
nginx-sks62   1/1     Running   0          75s   192.168.190.67   w1     <none>           <none>
# Delete a specific Pod: the RC immediately creates a replacement, so the replica count never changes (Pods lost to a crashed node are replaced too)
kubectl delete pods nginx-2fw2t
kubectl get pods
# Scale out to 5 replicas
kubectl scale rc nginx --replicas=5
kubectl get pods
nginx-8fctt   0/1     ContainerCreating   0          2s
nginx-9pgwk   0/1     ContainerCreating   0          2s
nginx-hksg8   1/1     Running             0          6m50s
nginx-q7bw5   1/1     Running             0          6m50s
nginx-wzqkf   1/1     Running             0          99s
# To get rid of the Pods for good, delete the RC itself, e.g. through its yaml file (deleting individual Pods only triggers replacements)
kubectl delete -f nginx_replication.yaml

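3. Summary

A ReplicationController manages its Pods (created from the template) through the selector. The key-value pairs in the selector must match the labels in the template; otherwise the RC cannot match its own Pods and the API server rejects the definition.

It also supports scaling out and in, and always keeps the replica count at the desired value.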

II. ReplicaSet (RS)

1. Official explanation

Official docs: https://kubernetes.io/docs/concepts/workloads/controllers/replicaset/

Original text: A ReplicaSet’s purpose is to maintain a stable set of replica Pods running at any given time. As such, it is often used to guarantee the availability of a specified number of identical Pods.

In Kubernetes v1.2, the RC was upgraded into a new concept, the ReplicaSet, which the project describes as the "next-generation RC".

There is no essential difference between a ReplicaSet and an RC; most kubectl commands that work on an RC also work on an RS.

The only difference between RS and RC is that an RS supports set-based label selectors, while an RC only supports equality-based label selectors, which makes the ReplicaSet more powerful.

2. An example

apiVersion: apps/v1 # extensions/v1beta1 is no longer served for ReplicaSets since Kubernetes 1.16
kind: ReplicaSet
metadata:
  name: frontend
spec:
  selector:
    matchLabels:
      tier: frontend
    matchExpressions:
      - {key: tier, operator: In, values: [frontend]}
  template:
    ...

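Operations are the same as for the ReplicationController (RC). Note: in practice we rarely use a ReplicaSet on its own. It is mainly used by the higher-level Deployment object, and together they form a complete orchestration mechanism for creating, deleting, and updating Pods. When we use a Deployment, we don't need to care how it creates and maintains its ReplicaSets; all of that happens automatically. We also don't have to worry about incompatibilities with other mechanisms (for example, a ReplicaSet does not support rolling updates, but a Deployment does).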
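For reference, a complete ReplicaSet might look like the sketch below. The apps/v1 API is real, but the nginx container on port 80 is an illustrative assumption, since the original example elides the template. Note that matchLabels and matchExpressions are combined with AND, and the template's labels must satisfy the whole selector.

```yaml
# Sketch of a complete ReplicaSet; the container image and port are
# illustrative assumptions, not part of the original example.
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend
spec:
  replicas: 3
  selector:
    matchLabels:                 # equality-based part
      tier: frontend
    matchExpressions:            # set-based part, ANDed with matchLabels
      - {key: tier, operator: In, values: [frontend]}
  template:
    metadata:
      labels:
        tier: frontend           # must satisfy the selector above
    spec:
      containers:
        - name: nginx
          image: nginx           # assumed image, for illustration only
          ports:
            - containerPort: 80
```

Other set-based operators are NotIn, Exists and DoesNotExist; Exists and DoesNotExist take no values list.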

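III. Deployment (the most widely used)

1. Official explanation

Official docs: https://kubernetes.io/docs/concepts/workloads/controllers/deployment/

A Deployment provides declarative updates for Pods and ReplicaSets.

You describe a desired state in a Deployment, and the Deployment Controller changes the actual state to the desired state at a controlled rate. You can define Deployments to create new ReplicaSets, or to remove existing Deployments and adopt all their resources with new Deployments.

The biggest improvement of a Deployment over an RC is that we can check the progress of a Pod "rollout" at any time.

2. An example

Create a Deployment object, which generates the corresponding ReplicaSet and creates the Pod replicas; then check the Deployment's status to see whether the rollout has finished (i.e. whether the number of Pod replicas has reached the expected value).

Always keep 3 Pods running, and be able to see the rollout progress at any time!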

(1) Create the nginx_deployment.yaml file

apiVersion: apps/v1 # API version
kind: Deployment # object type
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3 # number of replicas
  selector: # the selector matches the Pod labels
    matchLabels:
      app: nginx
  template: # Pod template
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80

# Create the Deployment (and its Pods) from nginx_deployment.yaml
[root@m ~]# kubectl apply -f nginx_deployment.yaml
deployment.apps/nginx-deployment created
# List the Pods
[root@m ~]# kubectl get pods -o wide
NAME                               READY   STATUS    RESTARTS   AGE   IP               NODE   NOMINATED NODE   READINESS GATES
nginx-deployment-6dd86d77d-6q66c   1/1     Running   0          75s   192.168.190.70   w1     <none>           <none>
nginx-deployment-6dd86d77d-f98jt   1/1     Running   0          75s   192.168.80.199   w2     <none>           <none>
nginx-deployment-6dd86d77d-wcxlf   1/1     Running   0          75s   192.168.80.198   w2     <none>           <none>
# Check the Deployment
[root@m ~]# kubectl get deployment
NAME               READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deployment   0/3     3            0           18s
# Check the ReplicaSet
[root@m ~]# kubectl get rs
NAME                         DESIRED   CURRENT   READY   AGE
nginx-deployment-6dd86d77d   3         3         0       23s
[root@m ~]# kubectl get deployment -o wide
NAME               READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES        SELECTOR
nginx-deployment   0/3     3            0           29s   nginx        nginx:1.7.9   app=nginx

(2) Rolling version updates

# Current nginx version
[root@m ~]# kubectl get deployment -o wide
NAME               READY   UP-TO-DATE   AVAILABLE   AGE     CONTAINERS   IMAGES        SELECTOR
nginx-deployment   3/3     3            3           2m36s   nginx        nginx:1.7.9   app=nginx
# Update the nginx image version
[root@m ~]# kubectl set image deployment nginx-deployment nginx=nginx:1.9.1
deployment.extensions/nginx-deployment image updated
# Check the version after the update
[root@m ~]# kubectl get deployment -o wide
NAME               READY   UP-TO-DATE   AVAILABLE   AGE     CONTAINERS   IMAGES        SELECTOR
nginx-deployment   3/3     1            3           3m21s   nginx        nginx:1.9.1   app=nginx
# The old ReplicaSet has been scaled down to 0 (it is kept for rollbacks), and the new ReplicaSet now runs all 3 Pods
[root@m ~]# kubectl get rs -o wide
NAME                          DESIRED   CURRENT   READY   AGE     CONTAINERS   IMAGES        SELECTOR
nginx-deployment-6dd86d77d    0         0         0       4m41s   nginx        nginx:1.7.9   app=nginx,pod-template-hash=6dd86d77d
nginx-deployment-784b7cc96d   3         3         3       96s     nginx        nginx:1.9.1   app=nginx,pod-template-hash=784b7cc96d
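How fast a rollout like the one above proceeds is governed by the Deployment's update strategy. Below is a sketch of the nginx-deployment manifest with the strategy spelled out; the field names are standard apps/v1 fields, but the maxSurge and maxUnavailable values are illustrative assumptions (when omitted, both default to 25%).

```yaml
# Sketch: nginx-deployment with an explicit rolling-update strategy.
# The maxSurge/maxUnavailable values are illustrative choices.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  strategy:
    type: RollingUpdate          # the default strategy for Deployments
    rollingUpdate:
      maxSurge: 1                # at most 1 Pod above the desired count during an update
      maxUnavailable: 0          # never drop below 3 available Pods
  revisionHistoryLimit: 10       # how many old ReplicaSets to keep for rollbacks (default 10)
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.9.1
          ports:
            - containerPort: 80
```

While an update is in progress, kubectl rollout status deployment nginx-deployment reports its progress, and kubectl rollout undo deployment nginx-deployment rolls back to the previous revision, which is why the old ReplicaSet is kept at 0 replicas instead of being deleted.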

3. Notes

Usually a Deployment manages only one kind of Pod (a single Pod template, possibly with many replicas), that is, one application.

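IV. Labels and Selectors

1. Official explanation

A label, as the name suggests, is a tag attached to a resource, made up of a key-value pair.

Official docs: https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/

Official explanation: Labels are key/value pairs that are attached to objects, such as pods.

2. An example

In the manifest below, the Deployment named nginx-deployment carries a label whose key is app and whose value is nginx, and so does every Pod created from its template.

Pods that carry the same label can then be handed over to a selector to manage.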

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector: # matches Pods that carry the same label
    matchLabels:
      app: nginx
  template: # Pod template
    metadata:
      labels:
        app: nginx # the Pod's label: key app, value nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80

# Show the Pod labels
[root@m ~]# kubectl get pods --show-labels
NAME                                READY   STATUS    RESTARTS   AGE     LABELS
nginx-deployment-784b7cc96d-25js4   1/1     Running   0          4m39s   app=nginx,pod-template-hash=784b7cc96d
nginx-deployment-784b7cc96d-792lj   1/1     Running   0          5m24s   app=nginx,pod-template-hash=784b7cc96d
nginx-deployment-784b7cc96d-h5x2k   1/1     Running   0          3m54s   app=nginx,pod-template-hash=784b7cc96d

V. Namespace

1. What is a Namespace

[root@m ~]# kubectl get pods
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-784b7cc96d-25js4   1/1     Running   0          8m19s
nginx-deployment-784b7cc96d-792lj   1/1     Running   0          9m4s
nginx-deployment-784b7cc96d-h5x2k   1/1     Running   0          7m34s
[root@m ~]# kubectl get pods -n kube-system
NAME                                      READY   STATUS    RESTARTS   AGE
calico-kube-controllers-f67d5b96f-7p9cg   1/1     Running   2          17h
calico-node-6pvpg                         1/1     Running   0          141m
calico-node-m9d5l                         1/1     Running   0          141m
calico-node-pvvt8                         1/1     Running   2          17h
coredns-fb8b8dccf-bbvtp                   1/1     Running   2          17h
coredns-fb8b8dccf-hhfb5                   1/1     Running   2          17h
etcd-m                                    1/1     Running   2          17h
kube-apiserver-m                          1/1     Running   2          17h
kube-controller-manager-m                 1/1     Running   2          17h
kube-proxy-5hmwn                          1/1     Running   0          141m
kube-proxy-bv4z4                          1/1     Running   0          141m
kube-proxy-rn8sq                          1/1     Running   2          17h
kube-scheduler-m                          1/1     Running   2          17h

The two commands above list different Pods because those Pods belong to different Namespaces.

# List the current namespaces
[root@m ~]# kubectl get namespaces
NAME              STATUS   AGE
default           Active   17h
kube-node-lease   Active   17h
kube-public       Active   17h
kube-system       Active   17h

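Put simply, namespaces isolate different resources, such as Pods, Services, and Deployments. You can pass a namespace with -n when running a command; if you don't, the default namespace, default, is used.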

2. Creating a namespace

Create myns-namespace.yaml:

apiVersion: v1
kind: Namespace
metadata:
  name: myns

# Create the namespace
kubectl apply -f myns-namespace.yaml
# List the namespaces (kubectl get ns also works)
[root@m ~]# kubectl get namespaces
NAME              STATUS   AGE
default           Active   17h
kube-node-lease   Active   17h
kube-public       Active   17h
kube-system       Active   17h
myns              Active   11s

3. Creating a Pod in a specific namespace

# For example, create a Pod that belongs to the myns namespace
vi nginx-pod.yaml
kubectl apply -f nginx-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  namespace: myns
spec:
  containers:
  - name: nginx-container
    image: nginx
    ports:
    - containerPort: 80

# View the Pods and other resources in the myns namespace
# Without -n, the default namespace is queried
kubectl get pods
# Specify the namespace
kubectl get pods -n myns
kubectl get all -n myns
kubectl get pods --all-namespaces # list Pods in all namespaces

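VI. Network

1. A look back at Docker networking

(1) Single-host Docker

On a single Docker host, containers talk to each other through a network bridge (docker0 by default).

(Related article: Docker networking explained, and custom Docker networks)

(2) docker-swarm multi-host cluster

How do containers communicate in a docker-swarm multi-host cluster? Through an overlay network, which encapsulates the containers' packets and carries them across the hosts' underlying network.

(3) Pod networking in k8s

k8s takes networking up another level of complexity.

We know the smallest unit k8s operates on is the Pod. First consider: can several containers in the same Pod communicate with each other?

This passage from the official docs shows that containers in the same Pod share the IP address and ports, so communication between them is clearly no problem:

Each Pod is assigned a unique IP address. Every container in a Pod shares the network namespace, including the IP address and network ports.

How is that sharing achieved? Each Pod has a special container, the pause container, which holds the Pod's network namespace; every other container in the Pod joins that namespace, so they can reach each other via localhost.

Indeed, on a worker node we can see that every Pod has a pause container, and the Pod's other containers are attached to it: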

[root@w1 ~]# docker ps
CONTAINER ID   IMAGE                  COMMAND                  CREATED       STATUS       PORTS   NAMES
559a6e5ab486   94ec7e53edfc           "nginx -g 'daemon of…"   2 hours ago   Up 2 hours           k8s_nginx_nginx-deployment-784b7cc96d-h5x2k_default_f730b118-1a17-11ee-ad40-5254004d77d3_0
60f048b660b1   k8s.gcr.io/pause:3.1   "/pause"                 2 hours ago   Up 2 hours
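To see the shared network namespace in action, here is a minimal sketch of a Pod with two containers in which the busybox container fetches the nginx page over localhost. The Pod name shared-net-demo is hypothetical, and the short sleep is only a crude way to give nginx time to start.

```yaml
# Hypothetical demo Pod: two containers sharing one network namespace.
apiVersion: v1
kind: Pod
metadata:
  name: shared-net-demo
spec:
  containers:
    - name: nginx
      image: nginx
      ports:
        - containerPort: 80
    - name: client
      image: busybox
      # No Service or Pod IP is needed: nginx listens on port 80 inside the
      # same network namespace, so localhost reaches it directly.
      command: ['sh', '-c', 'sleep 5; wget -qO- http://localhost:80; sleep 3600']
```

After applying it, kubectl logs shared-net-demo -c client should print the nginx welcome page.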

2. Communication between Pods and Nodes inside the cluster

(1) Case study

We prepare an nginx Pod and a busybox Pod:

# nginx_pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  containers:
  - name: nginx-container
    image: nginx
    ports:
    - containerPort: 80

# busybox_pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  labels:
    app: busybox
spec:
  containers:
  - name: busybox
    image: busybox
    command: ['sh', '-c', 'echo The app is running! && sleep 3600']

# Start both Pods and check their status
kubectl apply -f nginx_pod.yaml
kubectl apply -f busybox_pod.yaml
[root@m ~]# kubectl get pods -o wide
NAME        READY   STATUS    RESTARTS   AGE     IP               NODE   NOMINATED NODE   READINESS GATES
busybox     1/1     Running   0          2m1s    192.168.190.73   w1     <none>           <none>
nginx-pod   1/1     Running   0          2m25s   192.168.80.201   w2     <none>           <none>

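The two applications were deployed on nodes w1 and w2 respectively, and each got its own IP: 192.168.190.73 and 192.168.80.201.

Moreover, from the master node or any worker node, either Pod can be reached. This is the work of the network plugin (Calico, for example): it not only assigns the Pod IPs but also sets up the networking between the Pods.

However, these IPs are reachable only from inside the cluster.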

(2) How to implement the Kubernetes Cluster networking model: Calico

Official docs: https://kubernetes.io/docs/concepts/cluster-administration/networking/#the-kubernetes-network-model

- pods on a node can communicate with all pods on all nodes without NAT
- agents on a node (e.g. system daemons, kubelet) can communicate with all pods on that node
- pods in the host network of a node can communicate with all pods on all nodes without NAT

Thanks to the network plugin, Pod-to-Pod, Pod-to-Node, and Node-to-Pod traffic inside the cluster all work.

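3. In-cluster access: Service-Cluster IP

Although the Pods above can communicate with each other inside the cluster, Pods are not stable. When Pods are managed by a Deployment, for example, they may be scaled out or in at any time, and their IP addresses change.

We want a fixed IP that can be used inside the cluster. That is, we give related Pods the same label and group them into a Service. The Service has a stable IP, so no matter how Pods are created and destroyed, they can always be reached through the Service's IP.

(1) Official description

Service docs: https://kubernetes.io/docs/concepts/services-networking/service/

An abstract way to expose an application running on a set of Pods as a network service.

With Kubernetes you don’t need to modify your application to use an unfamiliar service discovery mechanism. Kubernetes gives Pods their own IP addresses and a single DNS name for a set of Pods, and can load-balance across them.

You can think of a Service roughly as an nginx reverse proxy sitting in front of a group of Pods and load-balancing requests across them.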

(2) Example: Pod addresses are not stable

Create the whoami-deployment.yaml file and apply it:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: whoami-deployment
  labels:
    app: whoami
spec:
  replicas: 3
  selector:
    matchLabels:
      app: whoami
  template:
    metadata:
      labels:
        app: whoami
    spec:
      containers:
      - name: whoami
        image: jwilder/whoami
        ports:
        - containerPort: 8000

# Create
kubectl apply -f whoami-deployment.yaml
# Show details
[root@m ~]# kubectl get pods -o wide
NAME                                 READY   STATUS    RESTARTS   AGE    IP               NODE   NOMINATED NODE   READINESS GATES
whoami-deployment-678b64444d-6wltg   1/1     Running   0          100s   192.168.80.202   w2     <none>           <none>
whoami-deployment-678b64444d-cjpzr   1/1     Running   0          100s   192.168.190.74   w1     <none>           <none>
whoami-deployment-678b64444d-v7zfg   1/1     Running   0          100s   192.168.80.203   w2     <none>           <none>
# Delete one Pod
[root@m ~]# kubectl delete pod whoami-deployment-678b64444d-6wltg
pod "whoami-deployment-678b64444d-6wltg" deleted
# A replacement Pod is created automatically, but its address has clearly changed
[root@m ~]# kubectl get pods -o wide
NAME                                 READY   STATUS    RESTARTS   AGE     IP               NODE   NOMINATED NODE   READINESS GATES
whoami-deployment-678b64444d-cjpzr   1/1     Running   0          2m59s   192.168.190.74   w1     <none>           <none>
whoami-deployment-678b64444d-l4dgz   1/1     Running   0          20s     192.168.190.75   w1     <none>           <none>
whoami-deployment-678b64444d-v7zfg   1/1     Running   0          2m59s   192.168.80.203   w2     <none>           <none>

This test confirms that Pod addresses keep changing and are not stable.

(3) Creating a Service

# List the current Services; by default there is only the kubernetes Service
[root@m ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   20h
# Create a Service
[root@m ~]# kubectl expose deployment whoami-deployment
service/whoami-deployment exposed
# List the Services again
[root@m ~]# kubectl get svc
NAME                TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
kubernetes          ClusterIP   10.96.0.1        <none>        443/TCP    20h
whoami-deployment   ClusterIP   10.109.104.247   <none>        8000/TCP   9s
# Delete the Service
#kubectl delete service whoami-deployment

The Service we just created also has a cluster-ip. Accessing this IP reaches the three whoami Pods, and the requests are load-balanced across them automatically:

[root@m ~]# curl 10.109.104.247:8000
I'm whoami-deployment-678b64444d-l4dgz
[root@m ~]# curl 10.109.104.247:8000
I'm whoami-deployment-678b64444d-cjpzr
[root@m ~]# curl 10.109.104.247:8000
I'm whoami-deployment-678b64444d-v7zfg

(4) Viewing Service details

[root@m ~]# kubectl describe svc whoami-deployment
Name:              whoami-deployment
Namespace:         default
Labels:            app=whoami
Annotations:       <none>
Selector:          app=whoami
Type:              ClusterIP
IP:                10.109.104.247
Port:              <unset>  8000/TCP
TargetPort:        8000/TCP
Endpoints:         192.168.190.74:8000,192.168.190.75:8000,192.168.80.203:8000
Session Affinity:  None
Events:            <none>

Three Pods are registered behind the Service as its endpoints. Now let's scale out:

# Scale out
kubectl scale deployment whoami-deployment --replicas=5
[root@m ~]# kubectl get pods -o wide
NAME                                 READY   STATUS    RESTARTS   AGE   IP               NODE   NOMINATED NODE   READINESS GATES
whoami-deployment-678b64444d-btvm4   1/1     Running   0          27s   192.168.80.204   w2     <none>           <none>
whoami-deployment-678b64444d-cjpzr   1/1     Running   0          14m   192.168.190.74   w1     <none>           <none>
whoami-deployment-678b64444d-l4dgz   1/1     Running   0          11m   192.168.190.75   w1     <none>           <none>
whoami-deployment-678b64444d-nfg4b   1/1     Running   0          27s   192.168.190.76   w1     <none>           <none>
whoami-deployment-678b64444d-v7zfg   1/1     Running   0          14m   192.168.80.203   w2     <none>           <none>

Now check the Service details again:

[root@m ~]# kubectl describe svc whoami-deployment
Name:              whoami-deployment
Namespace:         default
Labels:            app=whoami
Annotations:       <none>
Selector:          app=whoami
Type:              ClusterIP
IP:                10.109.104.247
Port:              <unset>  8000/TCP
TargetPort:        8000/TCP
Endpoints:         192.168.190.74:8000,192.168.190.75:8000,192.168.190.76:8000 + 2 more...
Session Affinity:  None
Events:            <none>

The new Pods were added to the Service's endpoints automatically. The Service IP can be reached from any node and any Pod in the cluster (but not from outside the cluster).
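Inside the cluster, a Service is usually addressed by its DNS name rather than its ClusterIP. The sketch below is a hypothetical one-off busybox Pod (the name whoami-client is made up) that calls the whoami Service by name. It assumes the Service lives in the default namespace and that the cluster uses the default domain cluster.local, resolved by the CoreDNS Pods running in kube-system.

```yaml
# Hypothetical client Pod that reaches the Service by DNS name.
apiVersion: v1
kind: Pod
metadata:
  name: whoami-client
spec:
  restartPolicy: Never           # run the loop once, then stop
  containers:
    - name: client
      image: busybox
      # <service>.<namespace>.svc.<cluster-domain>; assumes namespace
      # "default" and the default cluster domain "cluster.local".
      command:
        - sh
        - -c
        - for i in 1 2 3; do wget -qO- http://whoami-deployment.default.svc.cluster.local:8000; done
```

kubectl logs whoami-client should then show "I'm ..." lines similar to the curl test above. From a Pod in the same namespace, the short name whoami-deployment resolves as well.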

(5) Creating a Service with yaml

Besides kubectl expose, a Service can also be defined in a yaml file:

apiVersion: v1
kind: Service # object type
metadata:
  name: my-service # Service name
spec:
  selector:
    app: MyApp # must match the labels of the target Pods (the Deployment's selector/template labels)
  ports:
    - protocol: TCP
      port: 80 # the Service's own port
      targetPort: 9376 # target port: the containerPort of the Pods
  type: ClusterIP
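As a concrete counterpart to kubectl expose deployment whoami-deployment, a Service manifest for the whoami Pods could look like the sketch below. The port values mirror the 8000/TCP Service created earlier, and the label app: whoami is what kubectl expose copied from the Deployment, as the describe output shows; treat it as an approximation rather than the exact object kubectl generates.

```yaml
# Sketch of a Service equivalent to `kubectl expose deployment whoami-deployment`.
apiVersion: v1
kind: Service
metadata:
  name: whoami-deployment
  labels:
    app: whoami
spec:
  type: ClusterIP                # reachable only from inside the cluster
  selector:
    app: whoami                  # every Pod with this label becomes an endpoint
  ports:
    - protocol: TCP
      port: 8000                 # port on the ClusterIP
      targetPort: 8000           # containerPort of the whoami Pods
```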

(6) Summary

Services exist to cope with the instability of Pods. What we explored above is one type of Service, ClusterIP, which can only be accessed from inside the cluster.

4. External access to Pods in the cluster: Service-NodePort (not recommended)

This opens a port on every Node and binds that port to the Pods' Service.

(1) An example

Create the Pods from whoami-deployment.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: whoami-deployment
  labels:
    app: whoami
spec:
  replicas: 3
  selector:
    matchLabels:
      app: whoami
  template:
    metadata:
      labels:
        app: whoami
    spec:
      containers:
      - name: whoami
        image: jwilder/whoami
        ports:
        - containerPort: 8000

# Create
kubectl apply -f whoami-deployment.yaml
# Show details
[root@m ~]# kubectl get pods -o wide
NAME                                 READY   STATUS    RESTARTS   AGE   IP               NODE   NOMINATED NODE   READINESS GATES
whoami-deployment-678b64444d-cn462   1/1     Running   0          10s   192.168.80.206   w2     <none>           <none>
whoami-deployment-678b64444d-j8r7f   1/1     Running   0          10s   192.168.190.77   w1     <none>           <none>
whoami-deployment-678b64444d-wwl47   1/1     Running   0          10s   192.168.80.205   w2     <none>           <none>
# Create a NodePort Service
[root@m ~]# kubectl expose deployment whoami-deployment --type=NodePort
service/whoami-deployment exposed
# List the Services
[root@m ~]# kubectl get svc
NAME                TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
kubernetes          ClusterIP   10.96.0.1        <none>        443/TCP          21h
whoami-deployment   NodePort    10.100.197.231   <none>        8000:31222/TCP   6s

The Service is now of type NodePort. It still has a cluster-ip, and that cluster-ip still works inside the cluster:

[root@m ~]# curl 10.100.197.231:8000
I'm whoami-deployment-678b64444d-j8r7f
[root@m ~]# curl 10.100.197.231:8000
I'm whoami-deployment-678b64444d-wwl47
[root@m ~]# curl 10.100.197.231:8000
I'm whoami-deployment-678b64444d-cn462

The PORT(S) column also shows 8000:31222/TCP, which means the Service's port 8000 is additionally exposed on port 31222 of every node.

So the service can now be reached from outside the cluster through any node's IP on port 31222.
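The node port 31222 above was picked at random by Kubernetes. If you need a predictable port, a NodePort Service can pin it explicitly, as in the sketch below. The value 31222 is reused only for illustration; it must lie within the cluster's node port range (30000-32767 by default) and must not already be taken by another Service.

```yaml
# Sketch: NodePort Service for the whoami Pods with a fixed node port.
apiVersion: v1
kind: Service
metadata:
  name: whoami-deployment
spec:
  type: NodePort
  selector:
    app: whoami
  ports:
    - protocol: TCP
      port: 8000                 # ClusterIP port, still usable inside the cluster
      targetPort: 8000           # containerPort of the whoami Pods
      nodePort: 31222            # opened on every node; illustrative value
```

With this Service, curl <node-ip>:31222 works against any node in the cluster.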

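(2) Summary

NodePort lets external clients reach Pods in the cluster, but every such Service occupies a port on every node, taken from a limited range (30000-32767 by default), so it is not recommended in production.

[root@m ~]# kubectl delete -f whoami-deployment.yaml
deployment.apps "whoami-deployment" deleted
[root@m ~]# kubectl delete svc whoami-deployment
service "whoami-deployment" deleted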

(3) One-click deployment with a yaml file

apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-deployment
  labels:
    app: tomcat
spec:
  replicas: 3
  selector:
    matchLabels:
      app: tomcat
  template:
    metadata:
      labels:
        app: tomcat
    spec:
      containers:
      - name: tomcat
        image: tomcat
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: tomcat-service
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: tomcat
  type: NodePort

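vi my-tomcat.yaml
kubectl apply -f my-tomcat.yaml
kubectl get pods -o wide
kubectl get deployment
kubectl get svc

For a browser, i.e. a client outside the cluster, to reach this tomcat, the Service-NodePort approach above works: for example, if the Service is exposed on port 32008, simply visit 192.168.0.61:32008.

Even so, Service-NodePort is not recommended in production.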

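5. External access to Pods in the cluster: Service-LoadBalancer (not recommended)

Service-LoadBalancer usually requires support from a third-party cloud provider, which is restrictive, so we don't recommend it either.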
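For completeness, a LoadBalancer Service for the tomcat Pods would be declared as in the sketch below (the name tomcat-lb is hypothetical). On a cloud provider that supports it, EXTERNAL-IP is filled in with the address of the provisioned load balancer; on a bare-metal cluster like the one in this article it stays <pending> unless a load-balancer implementation such as MetalLB is installed, which is exactly the constraint mentioned above.

```yaml
# Sketch: LoadBalancer Service for the tomcat Pods (hypothetical name).
apiVersion: v1
kind: Service
metadata:
  name: tomcat-lb
spec:
  type: LoadBalancer             # needs a cloud provider or e.g. MetalLB
  selector:
    app: tomcat
  ports:
    - protocol: TCP
      port: 80                   # port exposed by the external load balancer
      targetPort: 8080           # tomcat's containerPort
```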

6. External access to Pods in the cluster: Ingress (recommended)

(1) Official explanation

Official docs: https://kubernetes.io/docs/concepts/services-networking/ingress/

An API object that manages external access to the services in a cluster, typically HTTP.

Ingress can provide load balancing, SSL termination and name-based virtual hosting.

In short, Ingress lets clients outside the cluster reach the Services inside it.

GitHub Ingress Nginx: https://github.com/kubernetes/ingress-nginx

Nginx Ingress Controller: https://kubernetes.github.io/ingress-nginx/

(2) An example

① First delete the previous tomcat and Service

# Delete the previous tomcat Deployment and its Service
kubectl delete -f my-tomcat.yaml

② Deploy tomcat

apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-deployment
  labels:
    app: tomcat
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tomcat
  template:
    metadata:
      labels:
        app: tomcat
    spec:
      containers:
      - name: tomcat
        image: tomcat
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: tomcat-service
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: tomcat

# Deploy tomcat
vi tomcat.yaml
kubectl apply -f tomcat.yaml
[root@m ~]# kubectl get pods -o wide
NAME                                 READY   STATUS    RESTARTS   AGE     IP               NODE   NOMINATED NODE   READINESS GATES
tomcat-deployment-6b9d6f8547-6d4z4   1/1     Running   0          2m22s   192.168.80.208   w2     <none>           <none>
[root@m ~]# kubectl get svc
NAME             TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
kubernetes       ClusterIP   10.96.0.1        <none>        443/TCP   22h
tomcat-service   ClusterIP   10.102.167.248   <none>        80/TCP    2m36s

At this point tomcat is reachable from inside the cluster, either through the Pod IP or through the Service's ClusterIP:

curl 192.168.80.208:8080
curl 10.102.167.248

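③ Deploy the ingress-controller

With NodePort, each Service occupies a host port on every node; with Ingress, we only need ports 80 and 443 on one designated host.

# Make sure the nginx-ingress-controller runs on node w1
kubectl label node w1 name=ingress
# Run the controller on the host network; this requires adding the following to the Deployment in mandatory.yaml
#hostNetwork: true
# Also search for nodeSelector and add name: ingress, and make sure ports 80 and 443 on w1 are not already in use. Pulling the image takes quite a while, so be patient
kubectl apply -f mandatory.yaml
kubectl get all -n ingress-nginx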

# mandatory.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update
---

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get
---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      hostNetwork: true # added: listen directly on the node's ports 80/443
      nodeSelector:
        name: ingress # added: matches the label set by kubectl label node w1 name=ingress
        kubernetes.io/os: linux
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.26.1
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 33
            runAsUser: 33
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
---

# Thanks to our configuration, the controller is always scheduled on node w1
[root@m ~]# kubectl get all -n ingress-nginx
NAME                                            READY   STATUS    RESTARTS   AGE
pod/nginx-ingress-controller-7c66dcdd6c-qrgpg   1/1     Running   0          113s

NAME                                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx-ingress-controller   1/1     1            1           113s

NAME                                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/nginx-ingress-controller-7c66dcdd6c   1         1         1       113s
[root@m ~]# kubectl get pods -o wide -n ingress-nginx
NAME                                        READY   STATUS    RESTARTS   AGE    IP               NODE   NOMINATED NODE   READINESS GATES
nginx-ingress-controller-7c66dcdd6c-qrgpg   1/1     Running   0          116s   192.168.56.101   w1     <none>           <none>

Ports 80 and 443 on node w1 are now open and served by the ingress controller. Because it uses hostNetwork, the Pod IP shown above is the IP of node w1 itself.

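④ Create an Ingress and define the forwarding rules

# nginx-ingress.yaml
# extensions/v1beta1 matches the controller version used here; Kubernetes 1.22+ only serves networking.k8s.io/v1
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nginx-ingress
spec:
  rules:
  - host: tomcat.cxf.com # domain name
    http:
      paths:
      - path: /
        backend:
          serviceName: tomcat-service
          servicePort: 80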

# Create the Ingress
kubectl apply -f nginx-ingress.yaml
[root@m ~]# kubectl get ingress
NAME            HOSTS            ADDRESS   PORTS   AGE
nginx-ingress   tomcat.cxf.com             80      18s
[root@m ~]# kubectl describe ingress nginx-ingress
Name:             nginx-ingress
Namespace:        default
Address:
Default backend:  default-http-backend:80 (<none>)
Rules:
  Host            Path  Backends
  ----            ----  --------
  tomcat.cxf.com
                  /   tomcat-service:80 (192.168.80.208:8080)
Annotations:
  kubectl.kubernetes.io/last-applied-configuration:  {"apiVersion":"extensions/v1beta1","kind":"Ingress","metadata":{"annotations":{},"name":"nginx-ingress","namespace":"default"},"spec":{"rules":[{"host":"tomcat.cxf.com","http":{"paths":[{"backend":{"serviceName":"tomcat-service","servicePort":80},"path":"/"}]}}]}}
Events:
  Type    Reason  Age   From                      Message
  ----    ------  ----  ----                      -------
  Normal  CREATE  29s   nginx-ingress-controller  Ingress default/nginx-ingress

Now visiting http://tomcat.cxf.com/ reaches tomcat. You must use the domain name, because the rule matches on the host name; since tomcat.cxf.com is not a real DNS record, first map it to w1's IP in the client's hosts file (e.g. 192.168.56.101 tomcat.cxf.com).
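The extensions/v1beta1 Ingress above matches the older controller used in this walkthrough, but that API (like networking.k8s.io/v1beta1) is no longer served from Kubernetes 1.22 on. On Kubernetes 1.19 or later, the same rule in networking.k8s.io/v1 would look roughly like the sketch below. It assumes a newer ingress-nginx controller that registers an IngressClass named nginx; the 0.26.1 controller deployed here does not read this API version.

```yaml
# Sketch: the tomcat rule in the networking.k8s.io/v1 Ingress API.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
spec:
  ingressClassName: nginx        # assumption: an IngressClass named "nginx" exists
  rules:
    - host: tomcat.cxf.com
      http:
        paths:
          - path: /
            pathType: Prefix     # required in v1
            backend:
              service:           # replaces serviceName/servicePort
                name: tomcat-service
                port:
                  number: 80
```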
