Firefly (流萤): a Chinese conversational large language model. In this article, the author introduces the work on the Firefly (流萤) model, a Chinese conversational large language model. https://mp.weixin.qq.com/s/TX7wj8IzD_EaMTvk0bjRtA

BLOOM, a 176B open-source foundation model that supports Chinese: a summary from data sources and training tasks to performance evaluation. https://mp.weixin.qq.com/s/4osRaxrbAGLHqO1mDz2kPQ

How to prune the vocab of an LLM (large language model)? (Zhihu). Part 1, Preface: multilingual large language models tend to have very large vocabularies. When using them downstream we may not need the other languages, for example only Chinese and English are needed; in that case the vocab can be pruned, which greatly reduces the parameter count and also… https://zhuanlan.zhihu.com/p/623691267

Questions about the LLaMA vocabulary · Issue #12 · Facico/Chinese-Vicuna · GitHub. "Hello! Thanks for open-sourcing such a great project. I have a question: many people say the LLaMA vocabulary contains only a few hundred Chinese characters, which is clearly incomplete. How did you approach this?" https://github.com/Facico/Chinese-Vicuna/issues/12

[Long read] Parameter-efficient fine-tuning in practice with LLaMA, ChatGLM, and BLOOM (Zhihu). 1. Comparison of open-source base models: training a large language model has two stages, (1) unsupervised pre-training on massive text corpora to learn general semantic representations and world knowledge, and (2) instruction tuning and reinforcement learning from human feedback on small-scale data, to better align with the final task and human… https://zhuanlan.zhihu.com/p/635710004

https://github.com/yangjianxin1/LLMPruner/issues/2

GitHub - yangjianxin1/Firefly: Firefly (流萤): a Chinese conversational large language model (full-parameter fine-tuning + QLoRA). https://github.com/yangjianxin1/Firefly

1. tokenizer

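Firefly's vocabulary is pruned from BLOOM's. BLOOM is a multilingual large model in which Chinese makes up 17.7% of the training corpus, second only to English. The pruned BLOOM vocabulary comes from the Mengzi model by Langboat (澜舟科技).

At the code level, each token in the new vocabulary is first mapped to the same token in the old vocabulary, and then the corresponding weights of the old model are copied into the new model, which updates the embedding layer and the head layer. These two steps shrink the overall parameter count noticeably.

Pruning reduces the BLOOM vocabulary from about 250,000 (25w) tokens to 46,145.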
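The mapping-then-copy procedure described above can be sketched in a few lines. This is a minimal illustration with plain Python lists, not the code used by Firefly or LLMPruner: the function name `prune_embedding`, the toy vocabulary, and the toy weights are all made up for the example. It also assumes the head layer shares its rows with the embedding matrix, so copying one set of rows covers both.

```python
def prune_embedding(old_vocab, old_weights, kept_tokens):
    """Build a smaller vocabulary and copy the matching embedding rows.

    old_vocab:   dict mapping token -> row index in old_weights
    old_weights: list of rows, one vector per old token id
    kept_tokens: tokens to keep, in the order of the new vocabulary
    """
    new_vocab = {}
    new_weights = []
    for new_id, token in enumerate(kept_tokens):
        old_id = old_vocab[token]  # map the new token to its old id
        new_vocab[token] = new_id
        new_weights.append(list(old_weights[old_id]))  # copy the old row
    return new_vocab, new_weights


# Toy example: a 5-token vocabulary with 2-dimensional embeddings.
old_vocab = {"<s>": 0, "hello": 1, "bonjour": 2, "你好": 3, "</s>": 4}
old_weights = [[0.0, 0.1], [1.0, 1.1], [2.0, 2.1], [3.0, 3.1], [4.0, 4.1]]

new_vocab, new_weights = prune_embedding(
    old_vocab, old_weights, kept_tokens=["<s>", "</s>", "hello", "你好"]
)
print(new_vocab)
print(new_weights)

# Rough saving for the sizes quoted above, assuming a hidden size of 2048.
hidden_size = 2048
saved = (250_000 - 46_145) * hidden_size
print(f"embedding parameters removed: about {saved / 1e6:.0f}M")
```

The new ids are simply the positions in `kept_tokens`, so the tokenizer files have to be rewritten with the same ordering for the pruned model to stay consistent.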

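2. Data

Instruction datasets

firefly-train-1.1M: data for common NLP tasks collected by the Firefly author. moss-003-sft-data: Chinese and English multi-turn dialogue data open-sourced by MOSS, over 1 million (100w+) records. ultrachat: English multi-turn dialogue data open-sourced by Tsinghua, over 1.4 million (140w+) records.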

{

"conversation_id":44,

"category":"Brainstorming",

"conversation":[

{

"human":"怎么能够有效地提升个人能力?",

"assistant":"提升个人能力可以通过以下几个方面实现:\n\n1. 学习新知识和技能:阅读相关书籍、参加课程和工作坊、观看教育视频等学习方式,可以帮助你掌握新的知识和技能,从而提升个人能力。\n\n2. 持续练习和实践:不断地练习和实践所学的知识和技能,可以帮助你更好地掌握和应用这些技能,提高你的表现和效率。\n\n3. 寻求反馈和改进:寻求他人的反馈和建议可以帮助你发现自己的不足之处,进一步完善和改进自己的能力。\n\n4. 建立良好的工作和生活习惯:建立良好的工作和生活习惯,如合理安排时间、注意健康、保持积极态度等,可以提高你的工作效率和生活品质,从而提升个人能力。\n\n5. 社交和交流:与他人交流和合作,可以帮助你更好地了解他人的观点和经验,从而提升自己的能力和见识。"

},

{

"human":"对于初学者来说,如何快速掌握编程技能?",

"assistant":"以下是几个建议,可以帮助初学者快速掌握编程技能:\n\n1. 学习基础知识:建议先学习编程语言的基础知识和语法,例如变量、数据类型、控制结构、函数等。可以通过在线课程、编程书籍、视频教程等方式学习。\n\n2. 实践编程:进行实际的编程练习是非常重要的,可以通过参加编程挑战、编写小项目等方式来进行实践。\n\n3. 寻求反馈:可以寻求他人的反馈和建议,例如在线编程社区、编程导师等,他们可以帮助你发现问题并及时纠正。\n\n4. 阅读优秀代码:阅读和分析优秀的代码可以帮助你学习其他程序员的编程思路和技巧,从而提高自己的编程能力。\n\n5. 不断学习和更新:编程技术和工具在不断更新和发展,因此需要不断学习新的知识和技能,例如学习新的编程语言、框架、工具等,以跟上技术的发展和变化。\n\n总之,学习编程需要耐心和坚持,并且需要不断地实践和学习。通过以上建议,可以帮助初学者快速掌握编程技能。"

},

{

"human":"除了参加编程挑战和阅读代码,还有哪些方式可以提升自己的编程能力呢?",

"assistant":"除了参加编程挑战和阅读代码,还有以下几种方式可以提升自己的编程能力:\n\n1. 参与开源项目:加入一个开源项目,可以让你了解到实际的软件开发流程和团队合作方式,并且可以与其他开发人员合作,学习和分享编程技巧和经验。\n\n2. 进行代码重构:对已经编写的代码进行重构,可以帮助你了解更好的编程实践和设计模式,从而提高代码的可读性和可维护性。\n\n3. 练习算法和数据结构:算法和数据结构是编程中的重要基础,练习算法和数据结构可以帮助你了解不同的数据结构和算法,从而更好地解决实际问题。\n\n4. 学习软件工程和架构:学习软件工程和架构的知识,可以帮助你了解如何设计和构建可靠、可扩展和可维护的软件系统。\n\n5. 参加编程社区和论坛:参加编程社区和论坛可以让你与其他开发人员分享和讨论编程经验和技巧,了解最新的技术趋势和解决方案。\n\n总之,提升编程能力需要不断地学习和实践,并与其他开发人员和编程专家进行交流和合作,以获取更多的经验和知识。"

},

{

"human":"你有没有推荐的编程挑战网站?",

"assistant":"当然,以下是一些值得推荐的编程挑战网站:\n\n1. HackerRank:HackerRank 是一个全球领先的技术招聘平台,提供数百种编程挑战和比赛,包括算法、数据结构、数学、SQL、AI 等多个技术领域。\n\n2. LeetCode:LeetCode 是一个在线编程学习和实践平台,提供数千道编程题目,主要涵盖算法和数据结构。\n\n3. Codeforces:Codeforces 是一个全球知名的编程竞赛网站,提供数百个比赛和练习题目,主要涵盖算法和数据结构。\n\n4. Codewars:Codewars 是一个在线编程学习和实践平台,提供数千道编程题目,主要涵盖算法、数据结构、面向对象编程等多个技术领域。\n\n5. Project Euler:Project Euler 是一个非营利性的在线编程挑战平台,提供一系列数学和计算机科学问题,需要用编程语言进行解答。\n\n以上这些编程挑战网站可以帮助你提高编程能力,并且可以与其他编程爱好者进行交流和竞争。"

},

{

"human":"在学习编程的过程中,如何避免陷入困境和放弃呢?",

"assistant":"学习编程是一个需要长期坚持和不断学习的过程,以下是一些避免陷入困境和放弃的方法:\n\n1. 制定合理的学习计划:制定合理的学习计划,包括学习时间、学习内容、目标等,可以帮助你更好地管理时间和精力,避免学习过程中的松懈和分心。\n\n2. 寻找合适的学习资源:选择适合自己的学习资源,例如在线课程、编程书籍、视频教程等,可以让你更好地了解和掌握编程知识和技能。\n\n3. 寻求帮助和支持:在学习过程中,遇到问题和困难是很正常的,可以寻求他人的帮助和支持,例如参加编程社区、找到编程导师等。\n\n4. 进行实践和项目:实践和项目是学习编程的重要组成部分,可以帮助你更好地了解和掌握编程技能,同时也可以提高学习的兴趣和动力。\n\n5. 坚持并保持兴趣:坚持学习和保持兴趣是学习编程的关键。可以通过参加编程社区、参加编程竞赛、与其他编程爱好者交流等方式来保持兴趣和动力。\n\n总之,学习编程需要耐心和坚持,并需要不断学习和实践。通过以上方法可以帮助你避免陷入困境和放弃。"

}

],

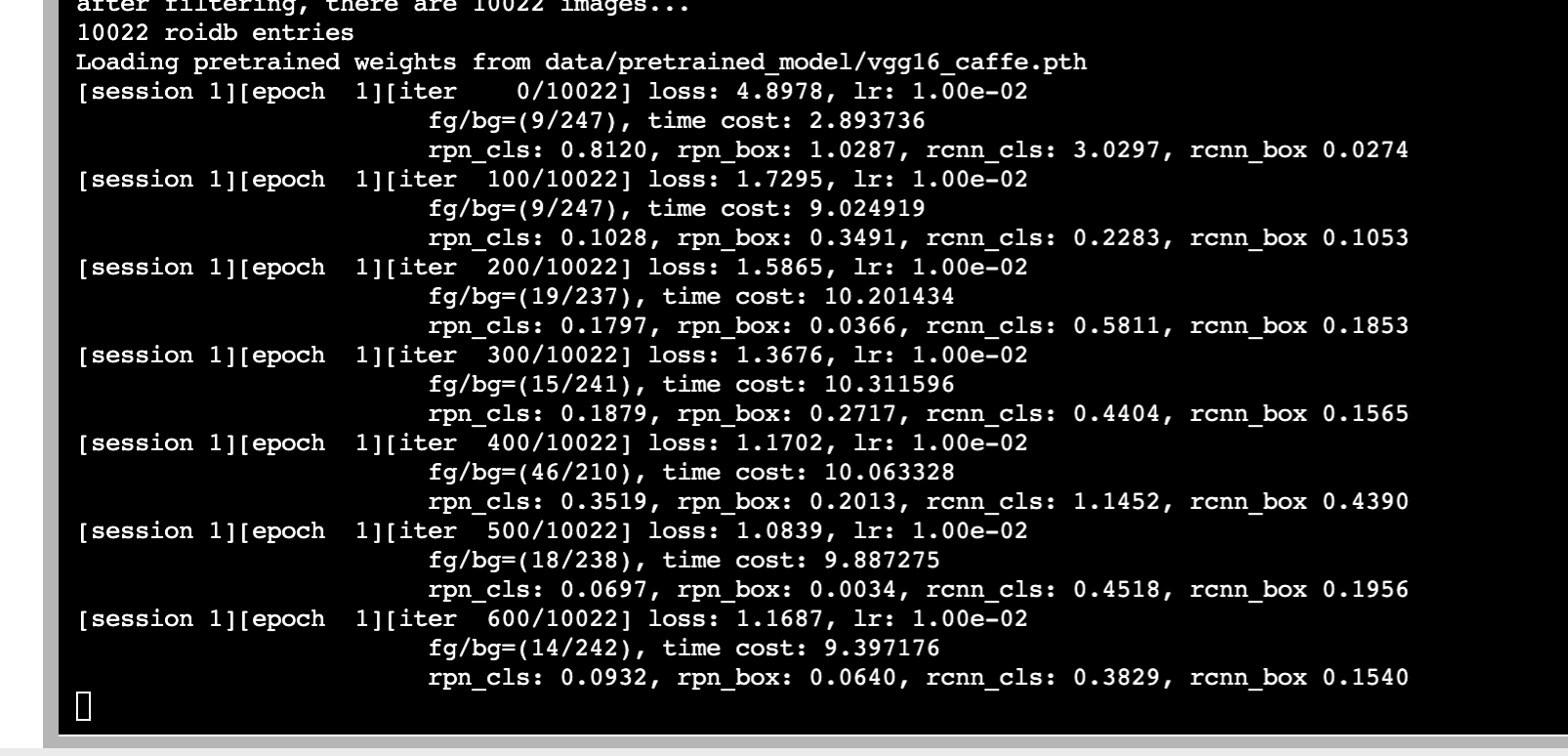

}3.模型训练:

Full-parameter instruction fine-tuning and QLoRA efficient instruction fine-tuning are currently provided; RLHF alignment tuning is not.

BloomForCausalLM(

(transformer): BloomModel(

(word_embeddings): Embedding(46145, 2048)

(word_embeddings_layernorm): LayerNorm((2048,), eps=1e-05, elementwise_affine=True)

(h): ModuleList(

(0-23): 24 x BloomBlock(

(input_layernorm): LayerNorm((2048,), eps=1e-05, elementwise_affine=True)

(self_attention): BloomAttention(

(query_key_value): Linear(in_features=2048, out_features=6144, bias=True)

(dense): Linear(in_features=2048, out_features=2048, bias=True)

(attention_dropout): Dropout(p=0.0, inplace=False)

)

(post_attention_layernorm): LayerNorm((2048,), eps=1e-05, elementwise_affine=True)

(mlp): BloomMLP(

(dense_h_to_4h): Linear(in_features=2048, out_features=8192, bias=True)

(gelu_impl): BloomGelu()

(dense_4h_to_h): Linear(in_features=8192, out_features=2048, bias=True)

)

)

)

(ln_f): LayerNorm((2048,), eps=1e-05, elementwise_affine=True)

)

(lm_head): Linear(in_features=2048, out_features=46145, bias=False)

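)

train.py: error: unrecognized arguments: --local_rank=0

This is probably a problem in the author's DeepSpeed code. I never got this path to run, and in the end launched training directly with PyTorch: python train.py.

Installing the dependencies from requirements.txt avoids the problem, so it is probably a torch version issue (1.13).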
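The error text itself is argparse's standard complaint about a flag the parser does not declare, and distributed launchers commonly append `--local_rank=<n>` to the script's arguments. Whether that is the actual cause in Firefly's train.py is not verified here. The sketch below only shows two generic ways for an argparse-based script to accept the flag; `--train_args_file` is a hypothetical stand-in for the script's real options.

```python
import argparse


def build_parser():
    parser = argparse.ArgumentParser()
    # Hypothetical option standing in for whatever the real script defines.
    parser.add_argument("--train_args_file", type=str, default="sft.json")
    # Declare the flag that a distributed launcher may append.
    parser.add_argument("--local_rank", type=int, default=-1)
    return parser


# Simulate the command line a launcher would hand to the script.
args = build_parser().parse_args(["--train_args_file", "sft.json", "--local_rank=0"])
print(args.local_rank)

# Alternative: tolerate undeclared flags instead of declaring each one.
loose = argparse.ArgumentParser()
loose.add_argument("--train_args_file", type=str, default="sft.json")
known, unknown = loose.parse_known_args(["--local_rank=0"])
print(unknown)
```

Declaring the flag is stricter and keeps typos visible; `parse_known_args` is more forgiving but silently ignores anything it does not recognize.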

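ValueError: Attempting to unscale fp16 gradients.

I could not resolve this, so for now training uses float32 and the model is switched to bloom-1b4.

With 2b6, fp16 runs on 16 GB of GPU memory but fp32 does not. With 1b4, fp32 runs on 16 GB if max_seq_length is reduced further.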
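The layer sizes in the BloomForCausalLM printout above are enough to estimate how many parameters the model has and how much memory the weights alone occupy. The sketch below does that arithmetic. It assumes that lm_head shares its weight with word_embeddings and is therefore not counted twice. It covers only the weights: gradients, optimizer state, and activations come on top and depend on the optimizer and on max_seq_length.

```python
def linear_params(n_in, n_out, bias=True):
    """Weights plus optional bias of a Linear layer."""
    return n_in * n_out + (n_out if bias else 0)


def layernorm_params(dim):
    """Scale and shift vectors of a LayerNorm."""
    return 2 * dim


# Sizes read off the printed model.
hidden, vocab, n_layer = 2048, 46145, 24

block = (
    layernorm_params(hidden)               # input_layernorm
    + linear_params(hidden, 3 * hidden)    # query_key_value
    + linear_params(hidden, hidden)        # dense
    + layernorm_params(hidden)             # post_attention_layernorm
    + linear_params(hidden, 4 * hidden)    # dense_h_to_4h
    + linear_params(4 * hidden, hidden)    # dense_4h_to_h
)

total = (
    vocab * hidden                # word_embeddings
    + layernorm_params(hidden)    # word_embeddings_layernorm
    + n_layer * block             # the 24 BloomBlock modules
    + layernorm_params(hidden)    # ln_f
)
# Assumption: lm_head reuses the word_embeddings matrix, so it adds nothing.

print(f"parameters per block: {block:,}")
print(f"total parameters:     {total:,}")
for name, nbytes in (("fp16", 2), ("fp32", 4)):
    print(f"{name}: {total * nbytes / 2**30:.2f} GiB for the weights alone")
```

The total comes out at roughly 1.3 billion parameters, which is in line with the "1b4" name, and switching from fp16 to fp32 doubles the weight memory.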

transformers integrates DeepSpeed. What follows is the full-parameter fine-tuning path.

==== BloomModel

# Embedding + LN Embedding

self.word_embeddings = nn.Embedding(config.vocab_size, self.embed_dim)

self.word_embeddings_layernorm = LayerNorm(self.embed_dim, eps=config.layer_norm_epsilon)

# Transformer blocks

self.h = nn.ModuleList([BloomBlock(config) for _ in range(config.num_hidden_layers)])

# Final Layer Norm

self.ln_f = LayerNorm(self.embed_dim, eps=config.layer_norm_epsilon)

==== BloomBlock:

self.input_layernorm = LayerNorm(hidden_size, eps=config.layer_norm_epsilon)

self.num_heads = config.n_head

self.self_attention = BloomAttention(config)

self.post_attention_layernorm = LayerNorm(hidden_size, eps=config.layer_norm_epsilon)

self.mlp = BloomMLP(config)

self.apply_residual_connection_post_layernorm = config.apply_residual_connection_post_layernorm

self.hidden_dropout = config.hidden_dropout

==== BloomAttention

self.query_key_value = nn.Linear(self.hidden_size, 3 * self.hidden_size, bias=True)

self.dense = nn.Linear(self.hidden_size, self.hidden_size)

self.attention_dropout = nn.Dropout(config.attention_dropout)

args,training_args=setup_everything()->

# Read the training arguments: the parameters defined in sft.json all belong to TrainingArguments, and CustomizedArgument holds the custom parameters

- parser=HfArgumentParser((CustomizedArgument,TrainingArguments))->

# Load the components

trainer=init_components(args,training_args)->

# Load the tokenizer and the model

- tokenizer= AutoTokenizer.from_pretrained(args.model_name_or_path,trust_remote_code=True)->

- model= AutoModelForCausalLM.from_pretrained(args.model_name_or_path,torch_dtype=torch.float16)->

# Initialize the loss function

- loss_func=TargetLMLoss()->

# Load the training set

- train_dataset=SFTDataset(train_file,tokenizer,max_seq_length)->

- data_collator = SFTDataCollator(tokenizer, args.max_seq_length)

# Build the trainer, which inherits from the Trainer class in transformers

- trainer=Trainer(model=model,args=training_args,train_dataset=train_dataset,

tokenizer=tokenizer,data_collator=data_collator,compute_loss=loss_func)->

-- transformers.trainer.Trainer.__init__

# Start training

train_result=trainer.train()->

- transformers.trainer.Trainer.train->

- inner_training_loop(args=args,resume_from_checkpoint=resume_from_checkpoint,

trial=trial,ignore_keys_for_eval=ignore_keys_for_eval,)->

-- train_dataloader=self.get_train_dataloader()->

-- train_dataset = self.train_dataset->SFTDataset

data_collator = self.data_collator->SFTDataCollator

-- train_sampler=self._get_train_sampler()->

-- DataLoader(train_dataset,batch_size=self._train_batch_size,sampler=train_sampler,

collate_fn=data_collator,drop_last=self.args.dataloader_drop_last,num_workers=self.args.dataloader_num_workers,pin_memory=self.args.dataloader_pin_memory,worker_init_fn=seed_worker,)->

-- model=self._wrap_model(self.model_wrapped)->

-- self._load_optimizer_and_scheduler(resume_from_checkpoint)->

# training

-- model.zero_grad()->

-- self.control=self.callback_handler.on_train_begin(args,state,self.control)->

-- transformers.trainer_callback.CallbackHandler.call_event:[<transformers.trainer_callback.DefaultFlowCallback object at 0x7f7ebc1008e0>, <transformers.integrations.TensorBoardCallback object at 0x7f7ebc103f10>, <transformers.trainer_callback.ProgressCallback object at 0x7f7ebc100820>]

-- transformers.integrations.TensorBoardCallback.on_train_begin->

-- transformers.trainer_callback.ProgressCallback.on_train_begin->

-- self.control=self.callback_handler.on_epoch_begin(args,self.state,self.control)->

[<transformers.trainer_callback.DefaultFlowCallback object at 0x7efeb4f40910>, <transformers.integrations.TensorBoardCallback object at 0x7efeb4f43f40>, <transformers.trainer_callback.ProgressCallback object at 0x7efeb4f40850>]

-- for step,inputs in enumerate(epoch_iterator):->

-- component.dataset.SFTDataset.__getitem__/component.collator.SFTDataCollator.__call__->

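-- inputs['input_ids','attention_mask','target_mask']: input_ids is the token-id sequence fed to the model after tokenization. attention_mask: sequences are padded to the same length so they can be batched, and attention_mask, which has the same length as input_ids, marks real input positions with 1 and padding positions with 0. target_mask tells the loss which positions to score: 1 marks a target position whose loss is computed, 0 marks an input (prompt) position whose loss is skipped.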
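A small sketch may make the three fields concrete. It is not the code of SFTDataset or SFTDataCollator. It assumes a `<s>human</s>assistant</s>...` layout for multi-turn records, uses a made-up character-level `toy_tokenize` in place of a real tokenizer, and takes the special-token ids 1, 2, and 3 from the BloomConfig printed in the inference section.

```python
BOS, EOS, PAD = 1, 2, 3  # bos_token_id, eos_token_id, pad_token_id


def toy_tokenize(text):
    # Hypothetical stand-in for a real tokenizer: one id per character.
    return [100 + ord(ch) % 50 for ch in text]


def encode_conversation(turns, max_seq_length):
    """turns: list of {"human": ..., "assistant": ...} dicts."""
    input_ids, target_mask = [BOS], [0]
    for turn in turns:
        human = toy_tokenize(turn["human"]) + [EOS]
        assistant = toy_tokenize(turn["assistant"]) + [EOS]
        input_ids += human + assistant
        # Only assistant tokens (and their closing EOS) count towards the loss.
        target_mask += [0] * len(human) + [1] * len(assistant)
    return input_ids[:max_seq_length], target_mask[:max_seq_length]


def collate(examples, max_seq_length):
    """Pad every example to the longest one in the batch."""
    width = min(max(len(ids) for ids, _ in examples), max_seq_length)
    out = {"input_ids": [], "attention_mask": [], "target_mask": []}
    for ids, mask in examples:
        ids, mask = ids[:width], mask[:width]
        pad = width - len(ids)
        out["input_ids"].append(ids + [PAD] * pad)
        out["attention_mask"].append([1] * len(ids) + [0] * pad)
        out["target_mask"].append(mask + [0] * pad)
    return out


batch = collate(
    [
        encode_conversation([{"human": "hi", "assistant": "hello"}], 100),
        encode_conversation([{"human": "a", "assistant": "b"}], 100),
    ],
    max_seq_length=100,
)
for key, rows in batch.items():
    print(key, rows)
```

Padding positions get 0 in both masks, so they are neither attended to nor scored.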

-- tr_loss_step=self.training_step(model,inputs)->

--- model.train()->

--- loss=self.compute_loss(model,inputs)->component.trainer.Trainer.compute_loss()->

--- self.loss_func(model,inputs,self.args,return_outputs)->component.loss.TargetLMLoss.__call__->

--- input_ids = inputs['input_ids'] shape 2x100: 2 is the batch size and 100 is max_seq_length, which is configurable

attention_mask = inputs['attention_mask']

target_mask = inputs['target_mask']

# Model forward pass

--- outputs = model(input_ids=input_ids, attention_mask=attention_mask)

---- transformers.models.bloom.modeling_bloom.BloomForCausalLM.forward->

---- self.transformer=BloomModel(config)->

---- transformer_outputs = self.transformer(

input_ids,

past_key_values=past_key_values,

attention_mask=attention_mask,

head_mask=head_mask,

inputs_embeds=inputs_embeds,

use_cache=use_cache,

output_attentions=output_attentions,

output_hidden_states=output_hidden_states,

return_dict=return_dict)->

---- BloomModel.forward->

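---- past_key_values: lets the model reuse the attention key/value pairs computed in earlier steps instead of recomputing them. head_mask: a mask over the heads of multi-head self-attention. The input is split across several heads that each attend to different information, and the mask lets the model selectively disable some of them.

---- head_mask=self.get_head_mask(head_mask,self.config.n_layer)->

---- inputs_embeds=self.word_embeddings(input_ids)->2x100x2048

---- inputs_embeds: passes already-embedded tensors into the model. Input tokens have to be converted into vectors, which are learned during pre-training; word_embeddings turns the input_ids indices into the corresponding word vectors->

---- hidden_states=self.word_embeddings_layernorm(inputs_embeds) 2x100x2048: the layer normalization applied to the word embeddings

---- alibi = build_alibi_tensor(attention_mask, self.num_heads, dtype=hidden_states.dtype) builds the ALiBi bias that is added to the attention scores: each head has its own slope, and the bias grows linearly with the key position, which is how BLOOM encodes position-> 32x1x100 [batch_size*num_heads,1,seq_length]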
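To show where the 32x1x100 shape comes from, here is a simplified pure-Python sketch of an ALiBi bias. It is not the transformers implementation: it only handles a power-of-two number of heads (16 in this model) and returns nested lists instead of a tensor. The helper names `alibi_slopes` and `build_alibi` are made up for the example.

```python
def alibi_slopes(num_heads):
    """Per-head slopes: a geometric sequence starting at 2 ** (-8 / num_heads)."""
    # Simplification: only power-of-two head counts are supported here.
    assert num_heads > 0 and num_heads & (num_heads - 1) == 0
    base = 2.0 ** (-8.0 / num_heads)
    return [base ** (i + 1) for i in range(num_heads)]


def build_alibi(attention_mask, num_heads):
    """attention_mask: rows of 0/1. Returns a [batch * num_heads][1][seq_length] list."""
    slopes = alibi_slopes(num_heads)
    alibi = []
    for row in attention_mask:
        positions, seen = [], 0
        for m in row:
            seen += m
            # 0-based index of each real token, and 0 on padding positions.
            positions.append((seen - 1) * m)
        for slope in slopes:
            alibi.append([[slope * p for p in positions]])
    return alibi


mask = [[1] * 100, [1] * 100]  # batch of 2, sequence length 100, no padding
alibi = build_alibi(mask, num_heads=16)
print(len(alibi), len(alibi[0]), len(alibi[0][0]))  # 32 1 100
print(alibi_slopes(16)[:4])
```

Heads with a small slope see distant tokens almost unpenalized, while heads with a large slope concentrate on nearby tokens.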

---- causal_mask = self._prepare_attn_mask(

attention_mask,

input_shape=(batch_size, seq_length),

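past_key_values_length=past_key_values_length, ) builds the mask used by self-attention from the padding mask and the input shape. During training the decoder needs a causal mask, so that later positions cannot influence the attention computed at the current position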
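The combination of causal masking and padding masking can be sketched as follows. This is an illustration rather than the body of `_prepare_attn_mask`: the function name is made up, and the convention that True means "hidden" is a choice made for this sketch.

```python
def causal_attention_mask(attention_mask):
    """Return a [batch][1][query][key] grid of booleans; True means hidden."""
    out = []
    for row in attention_mask:
        n = len(row)
        grid = [
            # Hide keys that lie in the future (k > q) and keys that are padding.
            [(k > q) or (row[k] == 0) for k in range(n)]
            for q in range(n)
        ]
        out.append([grid])
    return out


# One sequence of length 4 whose last position is padding.
mask = causal_attention_mask([[1, 1, 1, 0]])
for q_row in mask[0][0]:
    print("".join("x" if hidden else "." for hidden in q_row))
```

For a batch of 2 sequences of length 100 the result has the 2x1x100x100 layout that appears later in the trace, and the singleton second dimension is broadcast over the attention heads.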

---- for i, (block, layer_past) in enumerate(zip(self.h, past_key_values)) ->

BloomBlock(

(input_layernorm): LayerNorm((2048,), eps=1e-05, elementwise_affine=True)

(self_attention): BloomAttention(

(query_key_value): Linear(in_features=2048, out_features=6144, bias=True)

(dense): Linear(in_features=2048, out_features=2048, bias=True)

(attention_dropout): Dropout(p=0.0, inplace=False)

)

(post_attention_layernorm): LayerNorm((2048,), eps=1e-05, elementwise_affine=True)

(mlp): BloomMLP(

(dense_h_to_4h): Linear(in_features=2048, out_features=8192, bias=True)

(gelu_impl): BloomGelu()

(dense_4h_to_h): Linear(in_features=8192, out_features=2048, bias=True)

)

)

---- outputs = block(hidden_states,layer_past=layer_past, attention_mask=causal_mask,head_mask=head_mask[i], use_cache=use_cache,output_attentions=output_attentions,alibi=alibi)->

---- transformers.models.bloom.modeling_bloom.BloomBlock.forward->

---- hidden_states:2x100x2048,alibi:32x1x100,attention_mask:2x1x100x100,

---- layernorm_output = self.input_layernorm(hidden_states)-> 2x100x2048

---- residual=hidden_states->

---- attn_outputs = self.self_attention(

layernorm_output,

residual,

layer_past=layer_past,

attention_mask=attention_mask,

alibi=alibi,

head_mask=head_mask,

use_cache=use_cache,

output_attentions=output_attentions,)->

BloomAttention(

(query_key_value): Linear(in_features=2048, out_features=6144, bias=True)

(dense): Linear(in_features=2048, out_features=2048, bias=True)

(attention_dropout): Dropout(p=0.0, inplace=False)

)->

----- transformers.models.bloom.modeling_bloom.BloomAttention.forward->

----- fused_qkv=self.query_key_value(hidden_states):2x100x6144->

----- (query_layer, key_layer, value_layer) = self._split_heads(fused_qkv):2x100x16x128->

----- query_layer = query_layer.transpose(1, 2).reshape(batch_size * self.num_heads, q_length, self.head_dim) 32x100x128->

----- matmul_result:32x100x100,attention_score:2x16x100x100,attn_weights:2x16x100x100,

----- attention_probs = F.softmax(attn_weights, dim=-1, dtype=torch.float32).to(input_dtype) 2x16x100x100->

----- attention_probs = self.attention_dropout(attention_probs)->

----- attention_probs_reshaped = attention_probs.view(batch_size * self.num_heads, q_length, kv_length) 32x100x100->

----- context_layer = torch.bmm(attention_probs_reshaped, value_layer):32x100x128->

----- context_layer = self._merge_heads(context_layer):2x100x2048->

----- output_tensor = self.dense(context_layer):2x100x2048->

----- output_tensor = dropout_add(output_tensor, residual, self.hidden_dropout, self.training)->

----- attention_output=attn_outputs[0]:2x100x2048,outputs=attn_outputs[1:]

----- layernorm_output = self.post_attention_layernorm(attention_output):2x100x2048->

----- residual = attention_output->

----- output = self.mlp(layernorm_output, residual):2x100x2048->

---- hidden_states = self.ln_f(hidden_states)->

---- BaseModelOutputWithPastAndCrossAttentions()->

--- hidden_states=transformer_outputs[0]:2x100x2048->

--- lm_logits=self.lm_head()->Linear(2048,46145)->

--- CausalLMOutputWithCrossAttentions()->

--- logits=output.logits:2x100x46145->

--- labels=torch.where(target_mask == 1, input_ids, self.ignore_index):2x100->

--- shift_logits = logits[..., :-1, :].contiguous()->

--- shift_labels = labels[..., 1:].contiguous()->

--- loss = self.loss_fn(shift_logits.view(-1, shift_logits.size(-1)), shift_labels.view(-1))-> (198,46145) 198
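The three steps above (mask the labels, shift by one position, average the cross-entropy over the scored positions) can be written out without any framework. The sketch below is an illustration of that computation on nested lists, not the TargetLMLoss class itself. It assumes an ignore index of -100, which is the default of PyTorch's CrossEntropyLoss, and a mean over the positions that are not ignored.

```python
import math

IGNORE_INDEX = -100  # assumed value of self.ignore_index


def target_lm_loss(logits, input_ids, target_mask):
    """logits: [batch][seq][vocab]; input_ids and target_mask: [batch][seq]."""
    total, count = 0.0, 0
    for seq_logits, ids, mask in zip(logits, input_ids, target_mask):
        # Keep the token id where target_mask is 1, otherwise mark it as ignored.
        labels = [tok if m == 1 else IGNORE_INDEX for tok, m in zip(ids, mask)]
        # Position t predicts token t+1: drop the last logit and the first label.
        for step_logits, label in zip(seq_logits[:-1], labels[1:]):
            if label == IGNORE_INDEX:
                continue
            peak = max(step_logits)
            log_z = peak + math.log(sum(math.exp(x - peak) for x in step_logits))
            total += log_z - step_logits[label]  # negative log-likelihood
            count += 1
    return total / count if count else 0.0


# Toy batch: 1 sequence, 4 positions, vocabulary of 3, uniform logits.
logits = [[[0.0, 0.0, 0.0] for _ in range(4)]]
input_ids = [[0, 1, 2, 1]]
target_mask = [[0, 0, 1, 1]]
loss = target_lm_loss(logits, input_ids, target_mask)
print(loss, math.log(3))
```

With a batch of 2 and a sequence length of 100, the shift leaves 2 x 99 = 198 prediction positions, which matches the (198, 46145) shape in the trace.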

-- loss.backward()-> tensor(0.7256, device='cuda:0', dtype=torch.float16, grad_fn=<DivBackward0>)

-- tr_loss += tr_loss_step->

-- self.control = self.callback_handler.on_substep_end(args, self.state, self.control)

[<transformers.trainer_callback.DefaultFlowCallback object at 0x7f992c5cc8e0>, <transformers.integrations.TensorBoardCallback object at 0x7f992c5cff70>, <transformers.trainer_callback.ProgressCallback object at 0x7f992c5cc880>]

-- self.control = self.callback_handler.on_epoch_end(args, self.state, self.control)->

[<transformers.trainer_callback.DefaultFlowCallback object at 0x7f2e401ec940>, <transformers.integrations.TensorBoardCallback object at 0x7f2e401eff70>, <transformers.trainer_callback.ProgressCallback object at 0x7f2e401ec880>]

-- self._maybe_log_save_evaluate(tr_loss, model, trial, epoch, ignore_keys_for_eval)->

-- self._memory_tracker.stop_and_update_metrics(metrics)->

-- run_dir = self._get_output_dir(trial)->

-- checkpoints_sorted = self._sorted_checkpoints(use_mtime=False, output_dir=run_dir)->

-- self.control = self.callback_handler.on_train_end(args, self.state, self.control)->

trainer.save_model()->

metrics=train_result.metrics

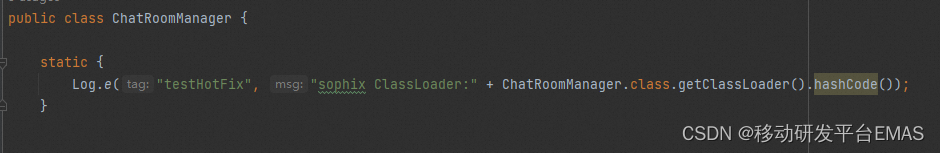

QLoRA fine-tuning: fine-tuning baichuan-7B with QLoRA needs a 32 GB V100.

4. Model inference

firefly-1b4, the full-parameter SFT model

tokenizer=BloomTokenizerFast.from_pretrained()->

model=BloomForCausalLM.from_pretrained()->

text = '<s>{}</s></s>'.format(text)->

input_ids=tokenizer(text,return_tensors='pt').input_ids->tensor([[ 1, 11273, 6979, 9843, 2889, 2, 2]]); the tokenizer converts the text into token ids

outputs = model.generate(input_ids, max_new_tokens=200, do_sample=True, top_p=0.8, temperature=0.35, repetition_penalty=1.2, eos_token_id=tokenizer.eos_token_id)->

- transformers.generation.utils.GenerationMixin.generate->

- 1. Handle generation_config and the keyword arguments that may update it, and validate the .generate() call

- new_generation_config = GenerationConfig.from_model_config(self.config)

BloomConfig {

"_name_or_path": "/root/autodl-tmp/Firefly/weights/models--YeungNLP--firefly-1b4/snapshots/7c463062d96826ee682e63e9e55de0056f62beb3",

"apply_residual_connection_post_layernorm": false,

"architectures": [

"BloomForCausalLM"

],

"attention_dropout": 0.0,

"attention_softmax_in_fp32": true,

"bias_dropout_fusion": true,

"bos_token_id": 1,

"eos_token_id": 2,

"hidden_dropout": 0.0,

"hidden_size": 2048,

"initializer_range": 0.02,

"layer_norm_epsilon": 1e-05,

"masked_softmax_fusion": true,

"model_type": "bloom",

"n_head": 16,

"n_inner": null,

"n_layer": 24,

"offset_alibi": 100,

"pad_token_id": 3,

"pretraining_tp": 2,

"seq_length": 4096,

"skip_bias_add": true,

"skip_bias_add_qkv": false,

"slow_but_exact": false,

"torch_dtype": "float16",

"transformers_version": "4.30.1",

"unk_token_id": 0,

"use_cache": true,

"vocab_size": 46145

}

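- 2. Set the generation parameters if they are not already defined

- 3. Define the model inputs

- 4. Define the other model kwargs

- 5. Prepare input_ids for auto-regressive generation

- 6. Prepare max_length depending on the other stopping criteria

- 7. Determine the generation mode

- is_sample_gen_mode=True

- 8. Prepare the distribution pre-processing samplers (logits processors)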

- logits_processor = self._get_logits_processor(

generation_config=generation_config,

input_ids_seq_length=input_ids_seq_length,

encoder_input_ids=inputs_tensor,

prefix_allowed_tokens_fn=prefix_allowed_tokens_fn,

logits_processor=logits_processor,

)

- 9. Prepare the stopping criteria

- 10. Go into the different generation modes

- 11. Prepare the logits warper

- logits_warper = self._get_logits_warper(generation_config)

- 12.expand input_ids with `num_return_sequences` additional sequences per batch

- 13.run sample

# auto-regressive generation

-- model_inputs=prepare_inputs_for_generation(input_ids)->

{'input_ids': tensor([[ 1, 11273, 6979, 9843, 2889, 2, 2]], device='cuda:0'), 'past_key_values': None, 'use_cache': True, 'attention_mask': tensor([[1, 1, 1, 1, 1, 1, 1]], device='cuda:0')}

# forward pass to get next token

-- transformers.models.bloom.modeling_bloom.BloomForCausalLM.forward()->

-- outputs:logits[1x7x46145],past_key_values->

# pre-process distribution

-- next_token_scores = logits_processor(input_ids, next_token_logits):1x46145

-- next_token_scores = logits_warper(input_ids, next_token_scores)

# sample

-- probs=nn.functional.softmax(next_token_scores,dim=-1)->

-- next_tokens=torch.multinomial(probs,num_samples=1).squeeze(1)->

-- next_tokens = next_tokens * unfinished_sequences + pad_token_id * (1 - unfinished_sequences) [6979]

-- input_ids = torch.cat([input_ids, next_tokens[:, None]], dim=-1)
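One iteration of the sampling loop can be sketched in pure Python. The sketch covers only temperature scaling, top-p (nucleus) filtering, and the multinomial draw, with the temperature=0.35 and top_p=0.8 values from the generate() call. The repetition penalty is left out, the 4-token vocabulary and its logits are invented, and the function is an illustration rather than the transformers implementation.

```python
import math
import random


def sample_next_token(logits, temperature=0.35, top_p=0.8, rng=random):
    # Temperature scaling followed by a numerically stable softmax.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    z = sum(exps)
    probs = [e / z for e in exps]

    # Keep the smallest set of most likely tokens whose mass reaches top_p.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break

    # Draw one token from the kept set, weighted by probability.
    weights = [probs[i] for i in kept]
    return rng.choices(kept, weights=weights, k=1)[0]


rng = random.Random(0)
input_ids = [1, 11273, 6979, 9843, 2889, 2, 2]
toy_logits = [0.1, 2.0, 1.5, -1.0]  # hypothetical 4-token vocabulary
next_token = sample_next_token(toy_logits, rng=rng)
input_ids = input_ids + [next_token]  # append, as torch.cat does in the trace
print(input_ids)
```

A low temperature sharpens the distribution before the top-p cut, so with these settings the kept set is often very small and the output is close to greedy decoding.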

After this step, input_ids is: 1, 11273, 6979, 9843, 2889, 2, 2, 6979

baichuan-qlora-sft: SFT fine-tuning with an adapter method such as QLoRA