1. Background

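Image-perception work such as SLAM and 3D reconstruction often has to deal with lens distortion. That requires understanding how common camera models form an image, and then using that imaging process to remove the distortion from captured images.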

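Note: this article does not go deeply into the theory behind the distortion parameters. It focuses on how to undistort images with each camera model.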

2. Pinhole camera model

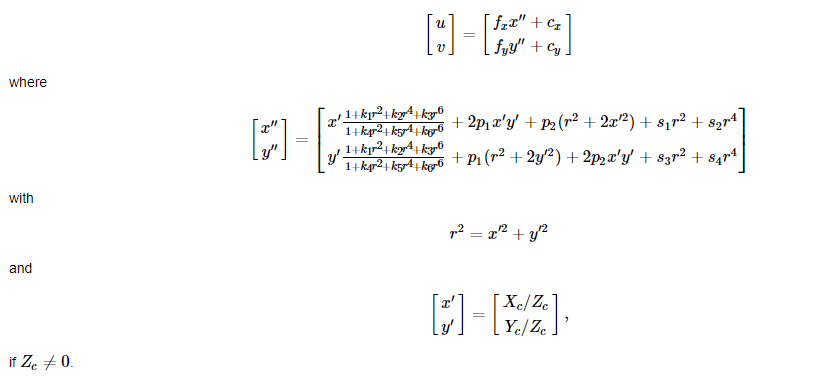

The pinhole model is the classic camera model, and its distortion model is fairly simple. It usually uses two kinds of distortion parameters: radial distortion and tangential distortion. Some references also include thin-prism distortion, although those parameters are rarely used. Here radial distortion is written as $[k_1, k_2, \dots, k_n]$, where $n$ is the order of the distortion (likewise below), tangential distortion as $[p_1, p_2]$, and prism distortion as $[s_1, s_2, s_3, s_4]$.
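To make the parameter layout concrete, here is a minimal NumPy sketch that applies all three kinds of terms to normalized image coordinates. It assumes OpenCV's coefficient ordering `(k1, k2, p1, p2, k3, k4, k5, k6, s1, s2, s3, s4)`; in OpenCV, `k4`, `k5`, `k6` form the denominator of a rational radial model, and the tilt terms are left out here. The function name `distort_normalized_points` is hypothetical.

```python
import numpy as np


def distort_normalized_points(x, y, dist):
    """Hypothetical helper: apply the OpenCV-style pinhole distortion model.

    `dist` is assumed to follow OpenCV's order
    (k1, k2, p1, p2[, k3[, k4, k5, k6[, s1, s2, s3, s4]]]);
    missing trailing coefficients are treated as zero. Tilt terms are not handled.
    """
    d = np.zeros(12)
    coeffs = np.asarray(dist, dtype=np.float64).ravel()[:12]
    d[:coeffs.size] = coeffs
    k1, k2, p1, p2, k3, k4, k5, k6, s1, s2, s3, s4 = d

    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    r2 = x * x + y * y
    r4 = r2 * r2
    r6 = r4 * r2

    # radial part (numerator k1..k3, rational denominator k4..k6)
    radial = (1 + k1 * r2 + k2 * r4 + k3 * r6) / (1 + k4 * r2 + k5 * r4 + k6 * r6)
    # tangential part
    xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    yd = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    # thin-prism part
    xd = xd + s1 * r2 + s2 * r4
    yd = yd + s3 * r2 + s4 * r4
    return xd, yd
```

For example, with only `k1 = 0.1`, the point `(0.5, 0.0)` has `r^2 = 0.25` and moves to `x_d = 0.5 * (1 + 0.1 * 0.25) = 0.5125`.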

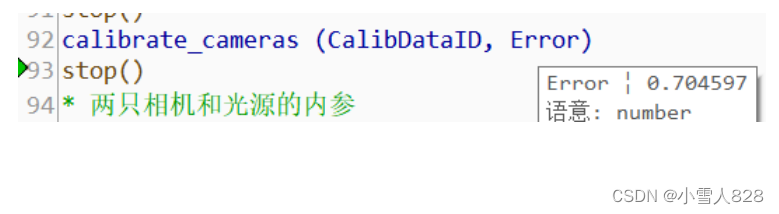

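Undistortion under the pinhole model works backwards from the output image: each output pixel is back-projected through the desired intrinsics onto the normalized plane $z = 1$, the distortion model is applied to these normalized coordinates, and the result is projected through the original intrinsics to find where to sample the distorted image (the sampling is done with `cv2.remap`).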
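Written out as formulas, this is a sketch in the notation of OpenCV's pinhole model, restricted to the $k_1, k_2, k_3, p_1, p_2$ terms (prism terms omitted). Primed quantities belong to the desired camera (`desire_cam_I`), $(u', v')$ is a pixel of the output image and $(u, v)$ is where it is sampled in the distorted image:

$$
\begin{aligned}
x &= \frac{u' - c'_x}{f'_x}, \qquad y = \frac{v' - c'_y}{f'_y}, \qquad r^2 = x^2 + y^2 \\
x_d &= x\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + 2 p_1 x y + p_2 (r^2 + 2x^2) \\
y_d &= y\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + p_1 (r^2 + 2y^2) + 2 p_2 x y \\
u &= f_x x_d + c_x, \qquad v = f_y y_d + c_y
\end{aligned}
$$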

ref:

- OpenCV documentation: Camera Calibration and 3D Reconstruction

Reference code for removing pinhole distortion:

```python
import cv2
import numpy as np


def undistort_pinhole_image(image_data: np.ndarray, cam_I: np.ndarray, cam_D: np.ndarray,
                            desire_cam_I: np.ndarray) -> np.ndarray:
    H, W = image_data.shape[:2]

    # camera intrinsics and distortion coefficients (OpenCV order: k1, k2, p1, p2, k3)
    fx, fy, cx, cy = cam_I[0, 0], cam_I[1, 1], cam_I[0, 2], cam_I[1, 2]
    k1, k2, p1, p2, k3 = np.asarray(cam_D).ravel()[:5]

    # back-project every output pixel through the desired intrinsics onto z = 1
    x, y = np.meshgrid(np.arange(W), np.arange(H))
    x = (x.reshape((-1, 1)) - desire_cam_I[0, 2]) / desire_cam_I[0, 0]
    y = (y.reshape((-1, 1)) - desire_cam_I[1, 2]) / desire_cam_I[1, 1]
    z = np.ones_like(x)

    # normalize (a no-op here since z == 1, kept for clarity)
    x = x / z
    y = y / z

    xx = x * x
    yy = y * y
    xy = x * y
    rr = xx + yy

    # radial distortion
    rd_x = x * (1. + k1*rr + k2*rr*rr + k3*rr*rr*rr)
    rd_y = y * (1. + k1*rr + k2*rr*rr + k3*rr*rr*rr)

    # tangential distortion
    td_x = 2 * p1 * xy + p2 * (rr + 2 * xx)
    td_y = 2 * p2 * xy + p1 * (rr + 2 * yy)

    # distorted normalized coordinates
    r_x = rd_x + td_x
    r_y = rd_y + td_y

    # project through the original intrinsics: sampling positions in the distorted image
    map_u = (r_x * fx + cx).reshape((H, W)).astype(np.float32)
    map_v = (r_y * fy + cy).reshape((H, W)).astype(np.float32)

    img_undistorted = cv2.remap(image_data, map_u, map_v, cv2.INTER_CUBIC)
    return img_undistorted
```
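All three functions in this article take `desire_cam_I`, the intrinsics of the virtual undistorted camera, without saying how to choose it. A simple option, sketched below under the assumption of zero skew and a centered principal point, is to pick a focal length and check the resulting horizontal field of view: a smaller focal length keeps more of a wide-angle image at the cost of resolution. Both helper names are hypothetical; OpenCV users may prefer `cv2.getOptimalNewCameraMatrix` or `cv2.fisheye.estimateNewCameraMatrixForUndistortRectify`.

```python
import numpy as np


def make_desired_camera_matrix(width, height, focal):
    """Hypothetical helper: zero-skew pinhole matrix for the output image.

    The principal point sits at the image center ((W - 1) / 2, (H - 1) / 2 in
    pixel-center coordinates) and both focal lengths equal `focal` (pixels).
    """
    return np.array([[focal, 0.0, (width - 1) / 2.0],
                     [0.0, focal, (height - 1) / 2.0],
                     [0.0, 0.0, 1.0]])


def horizontal_fov_deg(width, focal):
    """Horizontal field of view (degrees) of a pinhole image of this width and focal length.

    The outer pixel edges lie width / 2 pixels from the centered principal point.
    """
    return float(np.degrees(2.0 * np.arctan(width / (2.0 * focal))))
```

For a 640-pixel-wide output, `focal = 320` gives a 90° horizontal field of view; halving the focal length widens it.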

3. Omnidirectional camera model

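The omnidirectional (unified) camera model first projects a point onto the unit sphere and then applies distortion. The parameter $\xi$ controls this sphere projection: it is the distance along the optical axis between the sphere center and the center from which the sphere point is projected onto the image plane. Reference code for undistortion: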

```python
import cv2
import numpy as np


def undistort_omni_image(image_data: np.ndarray, cam_I: np.ndarray, cam_D: np.ndarray,
                         desire_cam_I: np.ndarray) -> np.ndarray:
    H, W = image_data.shape[:2]

    # camera intrinsics; this code stores xi in cam_I[0, 1] and assumes zero skew
    # (in OpenCV's cv::omnidir, K[0, 1] holds the skew and xi is a separate output)
    fx, fy, cx, cy, xi = cam_I[0, 0], cam_I[1, 1], cam_I[0, 2], cam_I[1, 2], cam_I[0, 1]
    # distortion coefficients (k1, k2, p1, p2)
    k1, k2, p1, p2 = np.asarray(cam_D).ravel()[:4]

    # back-project every output pixel through the desired intrinsics onto z = 1
    x, y = np.meshgrid(np.arange(W), np.arange(H))
    x = (x.reshape((-1, 1)) - desire_cam_I[0, 2]) / desire_cam_I[0, 0]
    y = (y.reshape((-1, 1)) - desire_cam_I[1, 2]) / desire_cam_I[1, 1]
    z = np.ones_like(x)

    # unified model: x / (z + xi * ||(x, y, z)||), i.e. project onto the unit sphere,
    # then onto the plane from a center shifted by xi
    rz = z + xi * np.sqrt(x*x + y*y + z*z)
    x = x / rz
    y = y / rz

    xx = x * x
    yy = y * y
    xy = x * y
    rr = xx + yy

    # radial distortion
    rd_x = x * (1. + k1*rr + k2*rr*rr)
    rd_y = y * (1. + k1*rr + k2*rr*rr)

    # tangential distortion
    td_x = 2 * p1 * xy + p2 * (rr + 2 * xx)
    td_y = 2 * p2 * xy + p1 * (rr + 2 * yy)

    # distorted normalized coordinates
    r_x = rd_x + td_x
    r_y = rd_y + td_y

    # project through the original intrinsics: sampling positions in the distorted image
    map_u = (r_x * fx + cx).reshape((H, W)).astype(np.float32)
    map_v = (r_y * fy + cy).reshape((H, W)).astype(np.float32)

    img_undistorted = cv2.remap(image_data, map_u, map_v, cv2.INTER_CUBIC)
    return img_undistorted
```

ref:

- Omnidirectional Camera Calibration

- cv::omnidir::projectPoints()
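To see what $\xi$ does, here is a minimal NumPy sketch of only the projection step of the unified model, with distortion omitted. The function name `unified_project` is hypothetical. With $\xi = 0$ it reduces to the ordinary pinhole projection $X/Z$; with $\xi = 1$ a ray perpendicular to the optical axis still lands at a finite position, which is why the model can cover very wide (fisheye or catadioptric) fields of view.

```python
import numpy as np


def unified_project(points, xi):
    """Hypothetical helper: unified (sphere) projection without distortion.

    Each 3D point is normalized onto the unit sphere, (Xs, Ys, Zs), and then
    projected as x = Xs / (Zs + xi), y = Ys / (Zs + xi). This equals
    X / (Z + xi * ||P||), the form used in undistort_omni_image.
    """
    P = np.asarray(points, dtype=np.float64).reshape(-1, 3)
    Ps = P / np.linalg.norm(P, axis=1, keepdims=True)  # points on the unit sphere
    denom = Ps[:, 2] + xi
    return Ps[:, 0] / denom, Ps[:, 1] / denom
```

For example, `unified_project([2.0, 1.0, 4.0], 0.0)` gives the pinhole result `(0.5, 0.25)`, while `unified_project([1.0, 0.0, 0.0], 1.0)` maps a ray at 90° off axis to `x = 1.0`.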

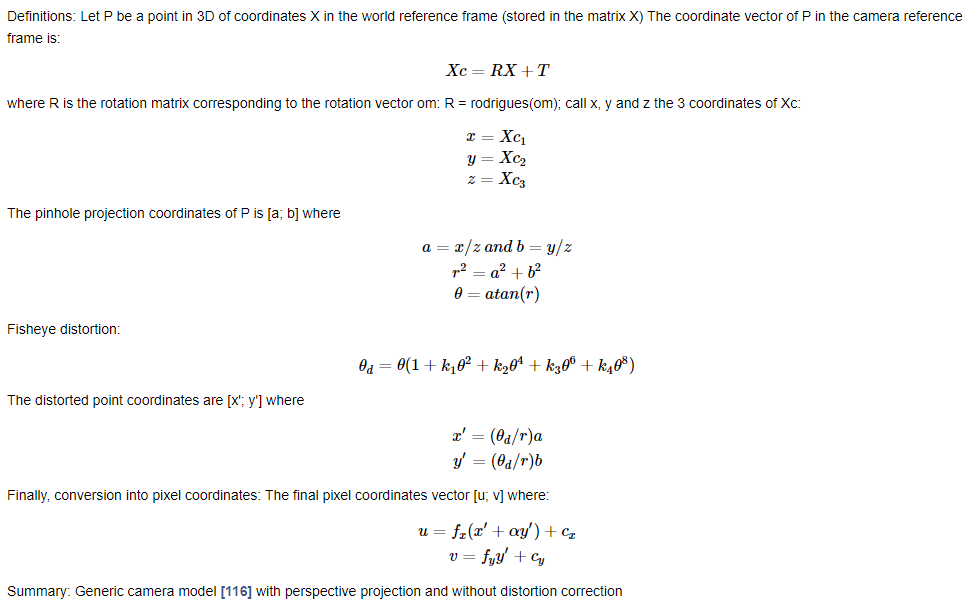

4. Kannala-Brandt camera model

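This camera model describes distortion through the angle $\theta$ between the incoming ray and the principal axis: the farther a ray deviates from the axis, the stronger the distortion. The distortion parameters $[k_1, k_2, k_3, k_4]$ together with the incidence angle $\theta$ are therefore enough to undistort a fisheye image. For each output pixel, compute the angle $\theta$ of its ray, map it to the distorted angle $\theta_d$ with the polynomial, scale the normalized coordinates by $\theta_d / r$, and project the result through the original intrinsics.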
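As formulas, assuming OpenCV's fisheye form with zero skew (primed quantities belong to the desired camera, $(u', v')$ is an output pixel and $(u, v)$ its sampling position in the distorted image):

$$
\begin{aligned}
x &= \frac{u' - c'_x}{f'_x}, \qquad y = \frac{v' - c'_y}{f'_y}, \qquad r = \sqrt{x^2 + y^2}, \qquad \theta = \arctan r \\
\theta_d &= \theta\,(1 + k_1\theta^2 + k_2\theta^4 + k_3\theta^6 + k_4\theta^8) \\
x_d &= \frac{\theta_d}{r}\,x, \qquad y_d = \frac{\theta_d}{r}\,y \\
u &= f_x x_d + c_x, \qquad v = f_y y_d + c_y
\end{aligned}
$$

With all $k_i = 0$ this is the equidistant projection $r_d = \theta$.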

ref:

- Fisheye camera model

Reference code for removing distortion:

```python
import cv2
import numpy as np


def undistort_kb_image(image_data: np.ndarray, cam_I: np.ndarray, cam_D: np.ndarray,
                       desire_cam_I: np.ndarray) -> np.ndarray:
    H, W = image_data.shape[:2]

    # camera intrinsics and fisheye distortion coefficients (k1, k2, k3, k4)
    fx, fy, cx, cy = cam_I[0, 0], cam_I[1, 1], cam_I[0, 2], cam_I[1, 2]
    k1, k2, k3, k4 = np.asarray(cam_D).ravel()[:4]

    # back-project every output pixel through the desired intrinsics onto z = 1
    x, y = np.meshgrid(np.arange(W), np.arange(H))
    x = (x.reshape((-1, 1)) - desire_cam_I[0, 2]) / desire_cam_I[0, 0]
    y = (y.reshape((-1, 1)) - desire_cam_I[1, 2]) / desire_cam_I[1, 1]
    z = np.ones_like(x)

    # normalize (a no-op here since z == 1, kept for clarity)
    x /= z
    y /= z

    # angle between the incoming ray and the principal axis
    r = np.sqrt(x*x + y*y)
    theta = np.arctan(r)
    theta2 = theta * theta

    # theta_d = theta * (1 + k1*theta^2 + k2*theta^4 + k3*theta^6 + k4*theta^8)
    theta_d = theta * (1 + k1*theta2 + k2*theta2**2 + k3*theta2**3 + k4*theta2**4)

    # scale by theta_d / r; its limit at the principal point (r -> 0) is 1
    scale = np.where(r > 1e-12, theta_d / np.maximum(r, 1e-12), 1.0)
    t_x = scale * x
    t_y = scale * y

    # project through the original intrinsics: sampling positions in the distorted image
    map_u = (t_x * fx + cx).reshape((H, W)).astype(np.float32)
    map_v = (t_y * fy + cy).reshape((H, W)).astype(np.float32)

    img_undistorted = cv2.remap(image_data, map_u, map_v, cv2.INTER_CUBIC)
    return img_undistorted
```
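The $\theta$ polynomial can also be evaluated in powers of $t = \theta^2$ with Horner's scheme, which is shorter and less error-prone than writing out long chains of products. Below is a minimal NumPy sketch of just the distortion step, assuming the OpenCV fisheye form with zero skew; the function name `kb_distort_normalized` is hypothetical. At the principal point it uses the limit $\theta_d / r \to 1$ rather than dividing by a value close to zero.

```python
import numpy as np


def kb_distort_normalized(x, y, k):
    """Hypothetical helper: Kannala-Brandt mapping of normalized coordinates.

    theta_d = theta * (1 + k1*t + k2*t^2 + k3*t^3 + k4*t^4) with t = theta^2,
    evaluated with Horner's scheme; skew is assumed to be zero.
    """
    k1, k2, k3, k4 = np.asarray(k, dtype=np.float64).ravel()[:4]
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    r = np.hypot(x, y)
    theta = np.arctan(r)
    t = theta * theta
    theta_d = theta * (1.0 + t * (k1 + t * (k2 + t * (k3 + t * k4))))
    # theta_d / r tends to 1 as r -> 0; avoid dividing by (nearly) zero there
    safe_r = np.where(r > 1e-12, r, 1.0)
    scale = np.where(r > 1e-12, theta_d / safe_r, 1.0)
    return scale * x, scale * y
```

With all coefficients zero, the normalized point `(1.0, 0.0)` (a ray at 45°) maps to `x_d = π/4`, the equidistant result.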