源码下载链接:ppt.rar - 蓝奏云

PPT下载链接:https://pan.baidu.com/s/1oOIO76xhSw283aHTDhBcPg?pwd=dydk

提取码:dydk

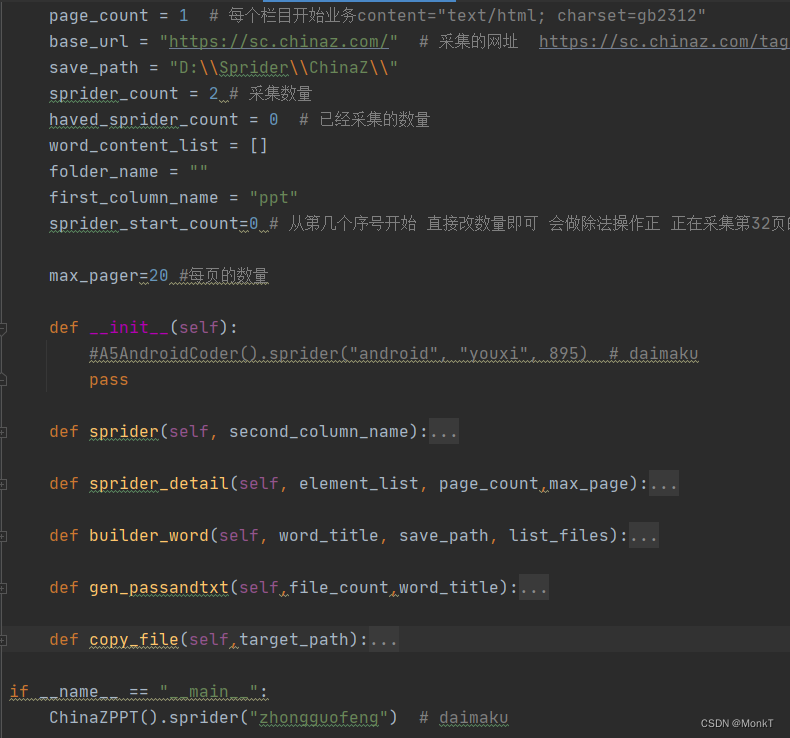

采集的参数

page_count = 1 # 每个栏目开始业务content="text/html; charset=gb2312"

base_url = "https://sc.chinaz.com/" # 采集的网址 https://sc.chinaz.com/tag_ppt/zhongguofeng.html

save_path = "D:\\Sprider\\ChinaZ\\"

sprider_count = 110 # 采集数量

haved_sprider_count = 0 # 已经采集的数量

word_content_list = []

folder_name = ""

first_column_name = "ppt"

sprider_start_count=800 # 从第几个序号开始 直接改数量即可 会做除法操作正 正在采集第32页的第16个资源 debug

max_pager=20 #每页的数量采集主体代码

def sprider(self, second_column_name):

"""

采集Coder代码

:return:

"""

if second_column_name == "zhongguofeng":

self.folder_name = "中国风"

self.first_column_name="tag_ppt"

elif second_column_name == "xiaoqingxin":

self.folder_name = "小清新"

self.first_column_name = "tag_ppt"

elif second_column_name == "kejian":

self.folder_name = "课件"

self.first_column_name = "ppt"

merchant = int(self.sprider_start_count) // int(self.max_pager) + 1

second_folder_name = str(self.sprider_count) + "个" + self.folder_name

self.save_path = self.save_path+ os.sep + "PPT" + os.sep + second_folder_name

BaseFrame().debug("开始采集ChinaZPPT...")

sprider_url = (self.base_url + "/" + self.first_column_name + "/" + second_column_name + ".html")

response = requests.get(sprider_url, timeout=10, headers=UserAgent().get_random_header(self.base_url))

response.encoding = 'UTF-8'

soup = BeautifulSoup(response.text, "html5lib")

#print(soup)

div_list = soup.find('div', attrs={"class": 'ppt-list'})

div_list =div_list.find_all('div', attrs={"class": 'item'})

#print(div_list)

laster_pager_url = soup.find('a', attrs={"class": 'nextpage'})

laster_pager_url = laster_pager_url.previous_sibling

#<a href="zhongguofeng_89.html"><b>89</b></a>

page_end_number = int(laster_pager_url.find('b').string)

#print(page_end_number)

self.page_count = merchant

while self.page_count <= int(page_end_number): # 翻完停止

try:

if self.page_count == 1:

self.sprider_detail(div_list,self.page_count,page_end_number)

else:

if self.haved_sprider_count == self.sprider_count:

BaseFrame().debug("采集到达数量采集停止...")

BaseFrame().debug("开始写文章...")

self.builder_word(self.folder_name, self.save_path, self.word_content_list)

BaseFrame().debug("文件编写完毕,请到对应的磁盘查看word文件和下载文件!")

break

#https://www.a5xiazai.com/android/youxi/qipaiyouxi/list_913_1.html

#https://www.a5xiazai.com/android/youxi/qipaiyouxi/list_913_2.html

#next_url = sprider_url + "/list_{0}_{1}.html".format(str(url_index), self.page_count)

# (self.base_url + "/" + first_column_name + "/" + second_column_name + "/"+three_column_name+"")

next_url =(self.base_url + "/" + self.first_column_name + "/" + second_column_name + "_{0}.html").format(self.page_count)

# (self.base_url + "/" + self.first_column_name + "/" + second_column_name + "")+"/list_{0}_{1}.html".format(str(self.url_index), self.page_count)

response = requests.get(next_url, timeout=10, headers=UserAgent().get_random_header(self.base_url))

response.encoding = 'UTF-8'

soup = BeautifulSoup(response.text, "html5lib")

div_list = soup.find('div', attrs={"class": 'ppt-list'})

div_list = div_list.find_all('div', attrs={"class": 'item'})

self.sprider_detail(div_list, self.page_count,page_end_number)

pass

except Exception as e:

print("sprider()执行过程出现错误" + str(e))

pass

self.page_count = self.page_count + 1 # 页码增加1

def sprider_detail(self, element_list, page_count,max_page):

try:

element_length = len(element_list)

self.sprider_start_index = int(self.sprider_start_count) % int(self.max_pager)

index = self.sprider_start_index

while index < element_length:

a=element_list[index]

if self.haved_sprider_count == self.sprider_count:

BaseFrame().debug("采集到达数量采集停止...")

break

index = index + 1

sprider_info = "正在采集第" + str(page_count) + "页的第" + str(index) + "个资源"

BaseFrame().debug(sprider_info)

title_image_obj = a.find('img', attrs={"class": 'lazy'})

url_A_obj=a.find('a', attrs={"class": 'name'})

next_url = self.base_url+url_A_obj.get("href")

coder_title = title_image_obj.get("alt")

response = requests.get(next_url, timeout=10, headers=UserAgent().get_random_header(self.base_url))

response.encoding = 'UTF-8'

soup = BeautifulSoup(response.text, "html5lib")

#print(next_url)

down_load_file_div = soup.find('div', attrs={"class": 'download-url'})

if down_load_file_div is None:

BaseFrame().debug("需要花钱无法下载因此跳过哦....")

continue

down_load_file_url = down_load_file_div.find('a').get("href")

#print(down_load_file_url)

image_obj = soup.find('div', attrs={"class": "one-img-box"}).find('img')

image_src = "https:"+ image_obj.get("data-original")

#print(image_src)

if (DownLoad(self.save_path).__down_load_file__(down_load_file_url, coder_title, self.folder_name)):

DownLoad(self.save_path).down_cover_image__(image_src, coder_title) # 资源的 封面

sprider_content = [coder_title,

self.save_path + os.sep + "image" + os.sep + coder_title + ".jpg"] # 采集成功的记录

self.word_content_list.append(sprider_content) # 增加到最终的数组

self.haved_sprider_count = self.haved_sprider_count + 1

BaseFrame().debug("已经采集完成第" + str(self.haved_sprider_count) + "个")

if (int(page_count) == int(max_page)):

self.builder_word(self.folder_name, self.save_path, self.word_content_list)

BaseFrame().debug("文件编写完毕,请到对应的磁盘查看word文件和下载文件!")

except Exception as e:

print("sprider_detail:" + str(e))

pass

采集的文件名

初中化学实验课件ppt模板

开学第一课开学季ppt模板设计

大学生情绪压力管理ppt模板课件

简约风格幼小衔接ppt课件免费下载

高考填报志愿课件免费ppt模板下载

岳阳楼记教学设计ppt课件

岳阳楼记ppt课件免费下载第3课时

岳阳楼记ppt课件免费下载第2课时

岳阳楼记ppt课件免费下载第1课时

岳阳楼记译文ppt课件