This issue's bilingual article comes from a leader in The Economist's weekly edition of May 13, 2023: "The economics of AI".

A stochastic parrot in every pot?

每口锅里都会有一只随机鹦鹉?

What does a leaked Google memo reveal about the future of AI?

一份泄露的谷歌备忘录揭示了人工智能怎样的未来?

Open-source AI is booming. That makes it less likely that a handful of firms will control the technology.

开源AI正在蓬勃发展,这降低了少数公司控制这项技术的可能性。

They have changed the world by writing software. But techy types are also known for composing lengthy memos in prose, the most famous of which have marked turning points in computing. Think of Bill Gates’s “Internet tidal wave” memo of 1995, which reoriented Microsoft towards the web; or Jeff Bezos’s “API mandate” memo of 2002, which opened up Amazon’s digital infrastructure, paving the way for modern cloud computing. Now techies are abuzz about another memo, this time leaked from within Google, titled “We have no moat”. Its unknown author details the astonishing progress being made in artificial intelligence (AI)—and challenges some long-held assumptions about the balance of power in this fast-moving industry.

他们通过编写软件改变了世界。但这些技术人士也以撰写长篇备忘录而闻名,其中最著名的几篇标志着计算领域的转折点。想想比尔·盖茨(Bill Gates)1995年的“互联网浪潮”备忘录,它使微软转向互联网;或者杰夫·贝佐斯(Jeff Bezos)2002年的“API指令”备忘录,它开放了亚马逊的数字基础设施,为现代云计算铺平了道路。现在,技术圈正在热议另一份备忘录,这次是从谷歌内部泄露的,标题为“我们没有护城河”。这位身份不明的作者详细介绍了人工智能(AI)取得的惊人进展,并对这个快速发展的行业中关于力量平衡的一些长期假设提出了挑战。

In 1995 Microsoft chief Bill Gates demonstrated Microsoft's Windows 95 program ahead of a press conference in Paris. Pictured below: Bill Gates's 1995 "Internet tidal wave" memo.

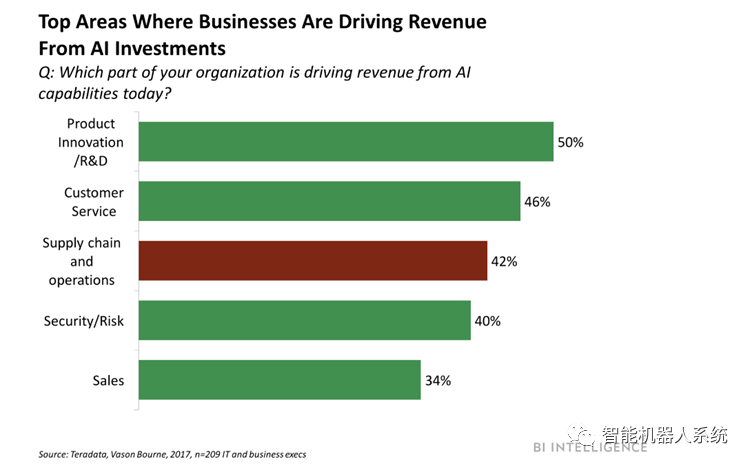

AI burst into the public consciousness with the launch in late 2022 of ChatGPT, a chatbot powered by a “large language model” (LLM) made by OpenAI, a startup closely linked to Microsoft. Its success prompted Google and other tech firms to release their own LLM-powered chatbots. Such systems can generate text and hold realistic conversations because they have been trained using trillions of words taken from the internet. Training a large LLM takes months and costs tens of millions of dollars. This led to concerns that AI would be dominated by a few deep-pocketed firms.

随着ChatGPT在2022年底推出,人工智能进入了公众视野。ChatGPT是一款由“大语言模型”(LLM)驱动的聊天机器人,该模型由与微软关系密切的初创公司OpenAI开发。它的成功促使谷歌和其他科技公司发布了各自由LLM驱动的聊天机器人。这类系统能够生成文本并进行逼真的对话,因为它们使用了取自互联网的数万亿个单词进行训练。训练一个大型LLM需要数月时间,耗资数千万美元。这使人们担心人工智能将由少数财力雄厚的公司主导。

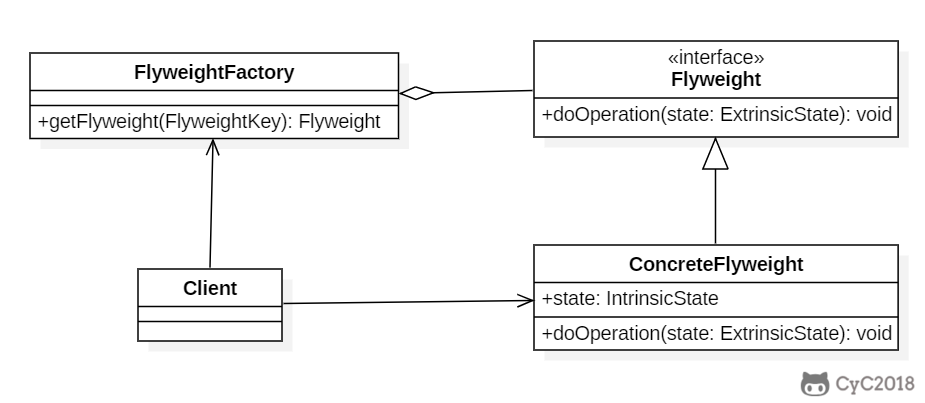

But that assumption is wrong, says the Google memo. It notes that researchers in the open-source community, using free, online resources, are now achieving results comparable to the biggest proprietary models. It turns out that LLMs can be “fine-tuned” using a technique called low-rank adaptation, or LoRA. This allows an existing LLM to be optimised for a particular task far more quickly and cheaply than training an LLM from scratch.

但谷歌备忘录说,这种假设是错误的。它指出,开源社区的研究人员利用免费的在线资源,如今取得的结果已可与最大的专有模型相媲美。事实证明,LLM可以使用一种称为低秩适应(low-rank adaptation,LoRA)的技术进行“微调”。这样,针对特定任务优化现有的LLM,要比从头训练一个LLM快得多,也便宜得多。

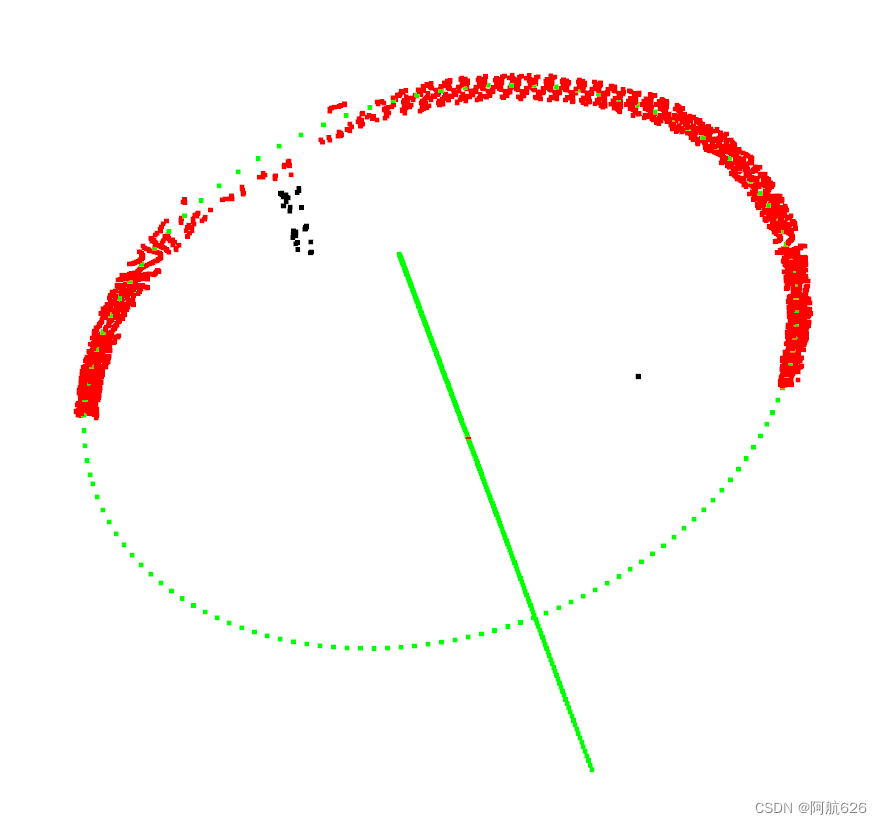

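The idea behind LoRA can be summarised simply: alongside the original PLM (pre-trained language model), add a bypass that projects down to a lower dimension and then back up, to model the so-called intrinsic rank. During training the PLM's parameters are frozen and only the down-projection matrix A and the up-projection matrix B are trained. The model's input and output dimensions stay the same; at output, the product BA is added to the PLM's parameters.

Building on the intrinsically low-rank nature of large models, LoRA adds bypass matrices to approximate full-parameter fine-tuning, a simple and effective way to achieve lightweight fine-tuning. Through such lightweight fine-tuning, today's large models can be turned into specialist models for different domains.

Consider OpenAI's view of GPT models: GPT is in essence an effective compression of its training data, one that uncovers the logic and connections within the data. LoRA's idea has something in common with this. The original model is large, but the parameters that do the core work (more precisely, the weight changes needed to adapt it) are low-rank, so adding a bypass achieves a great deal with very little effort.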
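The bypass described above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the memo's or any library's implementation. The names `lora_forward`, `merge_lora` and `trainable_fraction`, the `scale` factor, the layer size `d = 1024` and the rank `r = 8` are all assumptions chosen for the example. It shows a frozen weight matrix `W0`, a down-projection `A` and an up-projection `B`, with the output computed as `W0 x + B A x`.

```python
import numpy as np

def lora_forward(x, W0, A, B, scale=1.0):
    """Frozen path plus low-rank bypass: W0 @ x + scale * B @ (A @ x)."""
    return W0 @ x + scale * (B @ (A @ x))

def merge_lora(W0, A, B, scale=1.0):
    """Fold the trained bypass into the frozen weights: W0 + scale * B @ A."""
    return W0 + scale * (B @ A)

def trainable_fraction(d_out, d_in, r):
    """Share of parameters in A and B relative to the full weight matrix."""
    return r * (d_in + d_out) / (d_out * d_in)

rng = np.random.default_rng(0)
d, r = 1024, 8                            # hypothetical layer width and rank

W0 = rng.normal(size=(d, d))              # pre-trained weights, kept frozen
A = rng.normal(scale=0.01, size=(r, d))   # down-projection, trainable
B = np.zeros((d, r))                      # up-projection, starts at zero

x = rng.normal(size=d)

# With B initialised to zero, the bypass contributes nothing at first,
# so the adapted layer starts out identical to the pre-trained one.
y_start = lora_forward(x, W0, A, B)

# Stand-in for training: pretend an optimiser has updated B.
B_trained = rng.normal(scale=0.01, size=(d, r))
y_adapted = lora_forward(x, W0, A, B_trained)

# After training, B @ A can be added to W0 once, so inference uses a single matrix.
W_merged = merge_lora(W0, A, B_trained)

print(f"trainable share of this layer: {trainable_fraction(d, d, r):.4%}")
```

Under these assumed sizes the bypass holds only a small fraction of the layer's parameters, which is why this kind of fine-tuning is far cheaper than updating every weight. The input and output dimensions are unchanged, as the note above says.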

Activity in open-source AI exploded in March, when LLAMA, a model created by Meta, Facebook’s parent, was leaked online. Although it is smaller than the largest LLMs (its smallest version has 7bn parameters, compared with 540bn for Google’s PALM) it was quickly fine-tuned to produce results comparable to the original version of ChatGPT on some tasks. As open-source researchers built on each other’s work with LLAMA, “a tremendous outpouring of innovation followed,” the memo’s author writes.

开源人工智能的活动在三月份呈爆炸式增长,当时Facebook的母公司Meta创建的模型LLAMA在网上泄露。虽然它比最大的LLM小(它的最小版本有70亿个参数,而谷歌的PALM有5400亿个参数),但它很快就被微调,在某些任务上取得了与ChatGPT原始版本相当的结果。随着开源研究人员以LLAMA为基础、在彼此的成果之上不断推进,“随之而来的是巨大的创新涌现,”备忘录的作者写道。

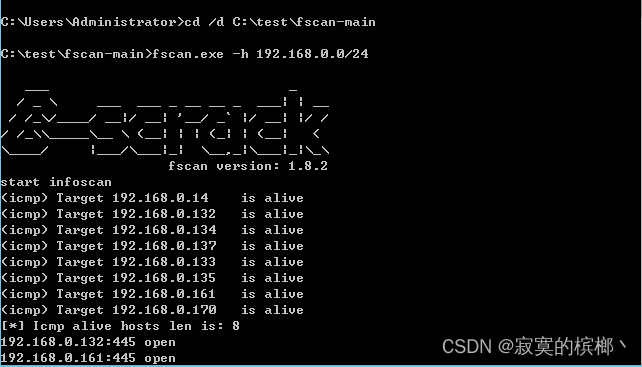

This could have seismic implications for the industry’s future. “The barrier to entry for training and experimentation has dropped from the total output of a major research organisation to one person, an evening, and a beefy laptop,” the Google memo claims. An LLM can now be fine-tuned for $100 in a few hours. With its fast-moving, collaborative and low-cost model, “open-source has some significant advantages that we cannot replicate.” Hence the memo’s title: this may mean Google has no defensive “moat” against open-source competitors. Nor, for that matter, does OpenAI.

这可能会对该行业的未来产生地震般的影响。“训练和实验的进入门槛已经从一个大型研究机构的全部产出,下降到一个人、一个晚上和一台性能强劲的笔记本电脑,”谷歌备忘录声称。现在只需100美元、几个小时就可以微调一个LLM。凭借其快速、协作和低成本的模式,“开源具有一些我们无法复制的显著优势。”备忘录的标题由此而来:这可能意味着谷歌面对开源竞争对手没有防御性的“护城河”。就此而言,OpenAI也没有。

Link to The Economist article of May 7, 2023: Your job is (probably) safe from artificial intelligence

(https://www.economist.com/finance-and-economics/2023/05/07/your-job-is-probably-safe-from-artificial-intelligence)

Not everyone agrees with this thesis. It is true that the internet runs on open-source software. But people use paid-for, proprietary software, from Adobe Photoshop to Microsoft Windows, as well. AI may find a similar balance. Moreover, benchmarking AI systems is notoriously hard. Yet even if the memo is partly right, the implication is that access to AI technology will be far more democratised than seemed possible even a year ago. Powerful LLMs can be run on a laptop; anyone who wants to can now fine-tune their own AI.

并非所有人都同意这个论点。的确,互联网运行在开源软件之上。但人们也使用付费的专有软件,从Adobe Photoshop到Microsoft Windows。人工智能可能会找到类似的平衡。此外,对人工智能系统进行基准测试是出了名的困难。然而,即使备忘录只说对了一部分,这也意味着人工智能技术的普及程度将远远超出一年前人们的想象。强大的LLM可以在笔记本电脑上运行;任何人只要愿意,现在都可以微调自己的AI。

This has both positive and negative implications. On the plus side, it makes monopolistic control of AI by a handful of companies far less likely. It will make access to AI much cheaper, accelerate innovation across the field and make it easier for researchers to analyse the behaviour of AI systems (their access to proprietary models was limited), boosting transparency and safety. But easier access to AI also means bad actors will be able to fine-tune systems for nefarious purposes, such as generating disinformation. It means Western attempts to prevent hostile regimes from gaining access to powerful AI technology will fail. And it makes AI harder to regulate, because the genie is out of the bottle.

这既有积极的影响,也有消极的影响。从好的方面来说,它使少数公司垄断控制人工智能的可能性大大降低。它将使人工智能的使用成本大大降低,加速整个领域的创新,并使研究人员更容易分析人工智能系统的行为(他们对专有模型的访问曾受到限制),从而提高透明度和安全性。但更容易获得人工智能也意味着不法分子能够出于邪恶目的微调系统,例如生成虚假信息。这意味着西方阻止敌对政权获得强大人工智能技术的努力将会失败。这也使人工智能更难监管,因为妖怪已经从瓶子里放出来了。

Link to The Economist article of April 20, 2023: How to worry wisely about artificial intelligence

(https://www.economist.com/leaders/2023/04/20/how-to-worry-wisely-about-artificial-intelligence)

Whether Google and its ilk really have lost their moat in AI will soon become apparent. But as with those previous memos, this feels like another turning point for computing.

谷歌之流是否真的在人工智能领域失去了护城河,很快就会变得显而易见。但与之前的备忘录一样,这感觉像是计算科学的另一个转折点。