How OpenShift Routes Work


In OpenShift, a Route meets the need to reach services from outside the cluster, much like an Ingress in Kubernetes.

A Route resource in the OpenShift API looks like this (an edge-terminated example):

```yaml
apiVersion: v1
kind: Route
metadata:
  name: route-edge-secured
spec:
  host: www.example.com
  to:
    kind: Service
    name: service-name
  tls:
    termination: edge
    key: |-
      -----BEGIN PRIVATE KEY-----
      [...]
      -----END PRIVATE KEY-----
    certificate: |-
      -----BEGIN CERTIFICATE-----
      [...]
      -----END CERTIFICATE-----
    caCertificate: |-
      -----BEGIN CERTIFICATE-----
      [...]
      -----END CERTIFICATE-----
```

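As with an Ingress, you specify:

- `host`: the domain name of the service, i.e. the hostname clients use to reach it
- `to`: the Service the route points at; the route uses that Service's selector to reach the endpoints behind it
- `tls` (optional): the TLS certificates to configure if you want to serve the service more securely over HTTPS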
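To make the three fields concrete, here is a minimal Python sketch that assembles the same kind of Route as a plain dictionary and prints it as JSON. The helper name `build_route` is hypothetical. Certificates are left out for brevity. The only validation is that `termination` is one of the three values the Route API uses.

```python
import json
from typing import Optional

# Values accepted by spec.tls.termination in the Route API.
VALID_TERMINATIONS = {"edge", "passthrough", "reencrypt"}


def build_route(name: str, host: str, service: str,
                termination: Optional[str] = None) -> dict:
    """Assemble a Route manifest as a plain dict (hypothetical helper)."""
    route = {
        "apiVersion": "v1",  # same apiVersion as the YAML example above
        "kind": "Route",
        "metadata": {"name": name},
        "spec": {
            "host": host,                                # domain clients use
            "to": {"kind": "Service", "name": service},  # Service whose endpoints back the route
        },
    }
    if termination is not None:
        if termination not in VALID_TERMINATIONS:
            raise ValueError(f"unsupported termination: {termination!r}")
        # key / certificate / caCertificate omitted for brevity
        route["spec"]["tls"] = {"termination": termination}
    return route


if __name__ == "__main__":
    manifest = build_route("route-edge-secured", "www.example.com", "service-name", "edge")
    print(json.dumps(manifest, indent=2))
```

The printed JSON could in principle be piped into `oc apply -f -`, assuming the Service `service-name` exists in the current project.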

How OpenShift uses HAProxy to implement the Router and Routes

Router app

First, look at the API definition of the DeploymentConfig that controls the Router Pod:

```yaml
apiVersion: apps.openshift.io/v1
kind: DeploymentConfig
metadata:
  creationTimestamp: null
  generation: 1
  labels:
    router: router
  name: router
  selfLink: /apis/apps.openshift.io/v1/namespaces/default/deploymentconfigs/router
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    router: router
```

Each Infra node in the OpenShift cluster runs a Pod named router-n-xxxxx:

```shell
$ oc get po -l deploymentconfig=router -n default
NAME             READY     STATUS    RESTARTS   AGE
router-1-8j2pp   1/1       Running   0          8d
```

Pick one router app container and open a shell in it:

```shell
$ oc exec -it router-1-8j2pp -n default -- /bin/bash
bash-4.2$ ps -ef
UID        PID  PPID  C STIME TTY          TIME CMD
1000000+     1     0  0 Mar07 ?        01:02:32 /usr/bin/openshift-router
1000000+  3294     1  0 04:26 ?        00:00:21 /usr/sbin/haproxy -f /var/lib/haproxy/conf/haproxy.config -p /var/lib/haproxy/run/haproxy.pid -x /var/lib/haproxy/run/haproxy.so
1000000+  3298     0  0 07:07 ?        00:00:00 /bin/bash
1000000+  3305  3298  0 07:07 ?        00:00:00 ps -ef
```

The Router app consists of two processes: openshift-router and haproxy.

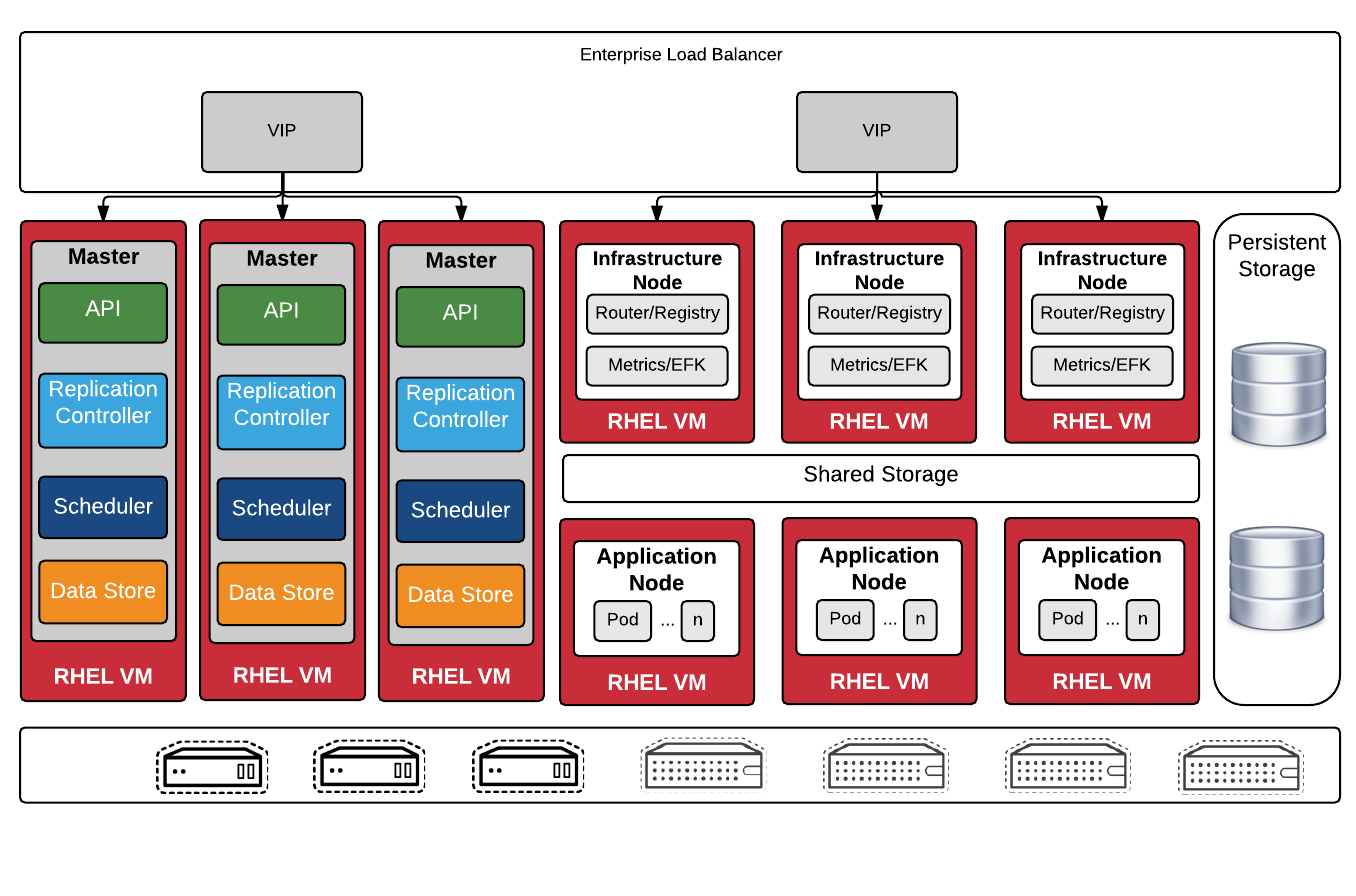

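The figure above shows a complete, highly available OpenShift cluster architecture. DNS points traffic for all workloads on the cluster (the business Pods running in it) at the load balancer IP on the right. The load balancer in front of the cluster then distributes that traffic to the cluster's Infra nodes. Every Infra node runs the HAProxy container with host networking (hostNetwork), so the haproxy process listens directly on ports 80 and 443 of the node: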

```shell
$ netstat -lntp | grep haproxy
tcp        0      0 127.0.0.1:10443         0.0.0.0:*               LISTEN      105481/haproxy
tcp        0      0 127.0.0.1:10444         0.0.0.0:*               LISTEN      105481/haproxy
tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN      105481/haproxy
tcp        0      0 0.0.0.0:443             0.0.0.0:*               LISTEN      105481/haproxy
```

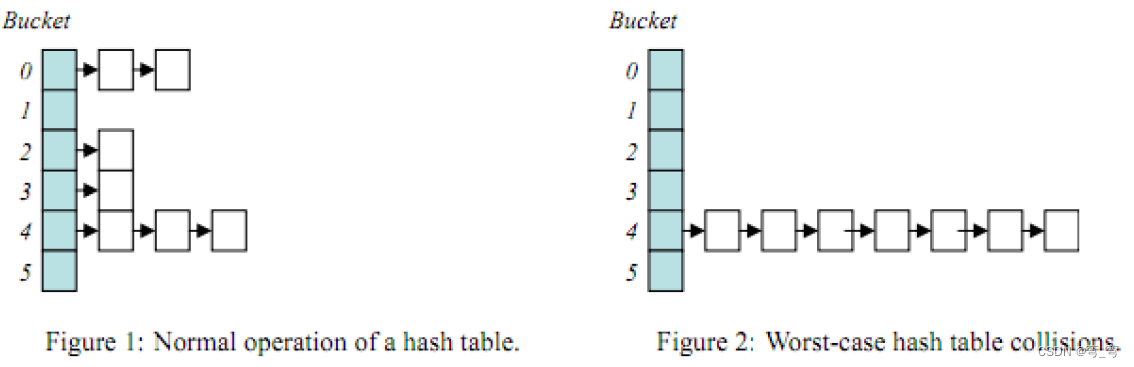

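Here HAProxy plays the role of the Ingress controller in Kubernetes. Business traffic passes the load balancer in front of the cluster and reaches HAProxy. HAProxy then matches the HTTP Host request header against its forwarding rules and dispatches the request to the corresponding backend. For example, you can send a request to the local router with an explicit Host header: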

```shell
$ curl -k https://127.0.0.1 -H 'Host: foo.bar.baz'
```

HAProxy configuration

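Here is the configuration file used by the haproxy process in the container (the file passed with -f, /var/lib/haproxy/conf/haproxy.config):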

```haproxy
global
  maxconn 20000
  daemon
  ca-base /etc/ssl
  crt-base /etc/ssl
  # TODO: Check if we can get reload to be faster by saving server state.
  # server-state-file /var/lib/haproxy/run/haproxy.state
  stats socket /var/lib/haproxy/run/haproxy.sock mode 600 level admin expose-fd listeners
  stats timeout 2m

  # Increase the default request size to be comparable to modern cloud load balancers (ALB: 64kb), affects
  # total memory use when large numbers of connections are open.
  tune.maxrewrite 8192
  tune.bufsize 32768

  # Prevent vulnerability to POODLE attacks
  ssl-default-bind-options no-sslv3

  # The default cipher suite can be selected from the three sets recommended by https://wiki.mozilla.org/Security/Server_Side_TLS,
  # or the user can provide one using the ROUTER_CIPHERS environment variable.
  # By default when a cipher set is not provided, intermediate is used.
  # Intermediate cipher suite (default) from https://wiki.mozilla.org/Security/Server_Side_TLS
  tune.ssl.default-dh-param 2048
  ssl-default-bind-ciphers ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA:ECDHE-RSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-RSA-AES256-SHA256:DHE-RSA-AES256-SHA:ECDHE-ECDSA-DES-CBC3-SHA:ECDHE-RSA-DES-CBC3-SHA:EDH-RSA-DES-CBC3-SHA:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:DES-CBC3-SHA:!DSS

defaults
  maxconn 20000

  # Add x-forwarded-for header.

  # To configure custom default errors, you can either uncomment the
  # line below (server ... 127.0.0.1:8080) and point it to your custom
  # backend service or alternatively, you can send a custom 503 error.
  #
  # server openshift_backend 127.0.0.1:8080
  errorfile 503 /var/lib/haproxy/conf/error-page-503.http

  timeout connect 5s
  timeout client 30s
  timeout client-fin 1s
  timeout server 30s
  timeout server-fin 1s
  timeout http-request 10s
  timeout http-keep-alive 300s

  # Long timeout for WebSocket connections.
  timeout tunnel 1h

frontend public
  bind :80
  mode http
  tcp-request inspect-delay 5s
  tcp-request content accept if HTTP
  monitor-uri /_______internal_router_healthz

  # Strip off Proxy headers to prevent HTTpoxy (https://httpoxy.org/)
  http-request del-header Proxy

  # DNS labels are case insensitive (RFC 4343), we need to convert the hostname into lowercase
  # before matching, or any requests containing uppercase characters will never match.
  http-request set-header Host %[req.hdr(Host),lower]

  # check if we need to redirect/force using https.
  acl secure_redirect base,map_reg(/var/lib/haproxy/conf/os_route_http_redirect.map) -m found
  redirect scheme https if secure_redirect

  use_backend %[base,map_reg(/var/lib/haproxy/conf/os_http_be.map)]

  default_backend openshift_default

# public ssl accepts all connections and isn't checking certificates yet certificates to use will be
# determined by the next backend in the chain which may be an app backend (passthrough termination) or a backend
# that terminates encryption in this router (edge)
frontend public_ssl
  bind :443
  tcp-request inspect-delay 5s
  tcp-request content accept if { req_ssl_hello_type 1 }

  # if the connection is SNI and the route is a passthrough don't use the termination backend, just use the tcp backend
  # for the SNI case, we also need to compare it in case-insensitive mode (by converting it to lowercase) as RFC 4343 says
  acl sni req.ssl_sni -m found
  acl sni_passthrough req.ssl_sni,lower,map_reg(/var/lib/haproxy/conf/os_sni_passthrough.map) -m found
  use_backend %[req.ssl_sni,lower,map_reg(/var/lib/haproxy/conf/os_tcp_be.map)] if sni sni_passthrough

  # if the route is SNI and NOT passthrough enter the termination flow
  use_backend be_sni if sni

  # non SNI requests should enter a default termination backend rather than the custom cert SNI backend since it
  # will not be able to match a cert to an SNI host
  default_backend be_no_sni

##########################################################################
# TLS SNI
#
# When using SNI we can terminate encryption with custom certificates.
# Certs will be stored in a directory and will be matched with the SNI host header
# which must exist in the CN of the certificate. Certificates must be concatenated
# as a single file (handled by the plugin writer) per the haproxy documentation.
#
# Finally, check re-encryption settings and re-encrypt or just pass along the unencrypted
# traffic
##########################################################################
backend be_sni
  server fe_sni 127.0.0.1:10444 weight 1 send-proxy

frontend fe_sni
  # terminate ssl on edge
  bind 127.0.0.1:10444 ssl no-sslv3 crt /etc/pki/tls/private/tls.crt crt-list /var/lib/haproxy/conf/cert_config.map accept-proxy
  mode http

  # Strip off Proxy headers to prevent HTTpoxy (https://httpoxy.org/)
  http-request del-header Proxy

  # DNS labels are case insensitive (RFC 4343), we need to convert the hostname into lowercase
  # before matching, or any requests containing uppercase characters will never match.
  http-request set-header Host %[req.hdr(Host),lower]

  # map to backend
  # Search from most specific to general path (host case).
  # Note: If no match, haproxy uses the default_backend, no other
  # use_backend directives below this will be processed.
  use_backend %[base,map_reg(/var/lib/haproxy/conf/os_edge_reencrypt_be.map)]

  default_backend openshift_default

##########################################################################
# END TLS SNI
##########################################################################

##########################################################################
# TLS NO SNI
#
# When we don't have SNI the only thing we can try to do is terminate the encryption
# using our wild card certificate. Once that is complete we can either re-encrypt
# the traffic or pass it on to the backends
##########################################################################
# backend for when sni does not exist, or ssl term needs to happen on the edge
backend be_no_sni
  server fe_no_sni 127.0.0.1:10443 weight 1 send-proxy

frontend fe_no_sni
  # terminate ssl on edge
  bind 127.0.0.1:10443 ssl no-sslv3 crt /etc/pki/tls/private/tls.crt accept-proxy
  mode http

  # Strip off Proxy headers to prevent HTTpoxy (https://httpoxy.org/)
  http-request del-header Proxy

  # DNS labels are case insensitive (RFC 4343), we need to convert the hostname into lowercase
  # before matching, or any requests containing uppercase characters will never match.
  http-request set-header Host %[req.hdr(Host),lower]

  # map to backend
  # Search from most specific to general path (host case).
  # Note: If no match, haproxy uses the default_backend, no other
  # use_backend directives below this will be processed.
  use_backend %[base,map_reg(/var/lib/haproxy/conf/os_edge_reencrypt_be.map)]

  default_backend openshift_default

##########################################################################
# END TLS NO SNI
##########################################################################

backend openshift_default
  mode http
  option forwardfor
  #option http-keep-alive
  option http-pretend-keepalive

##-------------- app level backends ----------------

# Secure backend, pass through
backend be_tcp:default:docker-registry
  balance source
  hash-type consistent
  timeout check 5000ms
  server pod:docker-registry-1-tvrjp:docker-registry:10.128.1.32:5000 10.128.1.32:5000 weight 256

# Secure backend, pass through
backend be_tcp:default:registry-console
  balance source
  hash-type consistent
  timeout check 5000ms
  server pod:registry-console-1-jspmx:registry-console:10.128.1.10:9090 10.128.1.10:9090 weight 256

# Plain http backend or backend with TLS terminated at the edge or a
# secure backend with re-encryption.
backend be_edge_http:default:route-origin-license-information-provider
  mode http
  option redispatch
  option forwardfor
  balance leastconn
  timeout check 5000ms
  http-request set-header X-Forwarded-Host %[req.hdr(host)]
  http-request set-header X-Forwarded-Port %[dst_port]
  http-request set-header X-Forwarded-Proto http if !{ ssl_fc }
  http-request set-header X-Forwarded-Proto https if { ssl_fc }
  http-request set-header X-Forwarded-Proto-Version h2 if { ssl_fc_alpn -i h2 }
  http-request add-header Forwarded for=%[src];host=%[req.hdr(host)];proto=%[req.hdr(X-Forwarded-Proto)];proto-version=%[req.hdr(X-Forwarded-Proto-Version)]
  cookie f68e01f4fd193411edcdbd2ed2123b1b insert indirect nocache httponly secure
  server pod:deployment-origin-license-information-provider-7b55689655-vcn2j:svc-origin-license-information-provider:10.128.1.1:80 10.128.1.1:80 cookie d000e3ba84d0860ea1f900879572c5fa weight 256

# Secure backend, pass through
backend be_tcp:kube-service-catalog:apiserver
  balance source
  hash-type consistent
  timeout check 5000ms
  server pod:apiserver-w4szj:apiserver:10.128.1.17:6443 10.128.1.17:6443 weight 256
```

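The HAProxy configuration has several parts:

- Global settings (`global` and `defaults`): maximum connections, timeouts, TLS defaults and so on
- Frontends: listen for HTTP requests on port 80 and HTTPS requests on port 443
- Backends: the protocol (`mode`), load-balancing algorithm, request-header rewrites, and the cluster-internal addresses of the Pods behind each service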
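One rough way to see this structure is to group the configuration lines by section header. The Python sketch below is a hypothetical helper, not part of OpenShift. It only recognises the `global`, `defaults`, `frontend` and `backend` keywords used in this file (HAProxy has other section types, such as `listen`), and it skips blank lines and comments.

```python
from typing import Dict, List, Optional

# Section keywords handled by this sketch (only the ones used in the file above).
SECTION_KEYWORDS = ("global", "defaults", "frontend", "backend")


def split_sections(config_text: str) -> Dict[str, List[str]]:
    """Group haproxy.config directives under their section header (hypothetical helper)."""
    sections: Dict[str, List[str]] = {}
    current: Optional[str] = None
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.split()[0] in SECTION_KEYWORDS:
            current = line  # e.g. "frontend public" or "backend be_sni"
            sections[current] = []
        elif current is not None:
            sections[current].append(line)  # directive belongs to the open section
    return sections
```

Directives such as `default_backend` and `use_backend` are not mistaken for section headers, because only the first token of each line is compared against the keyword list.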

Following this configuration file, we can roughly work out how haproxy handles business traffic:

1. HTTP

```haproxy
frontend public
  bind :80
  mode http
  tcp-request inspect-delay 5s
  tcp-request content accept if HTTP
  monitor-uri /_______internal_router_healthz

  # Strip off Proxy headers to prevent HTTpoxy (https://httpoxy.org/)
  http-request del-header Proxy

  # DNS labels are case insensitive (RFC 4343), we need to convert the hostname into lowercase
  # before matching, or any requests containing uppercase characters will never match.
  http-request set-header Host %[req.hdr(Host),lower]

  # check if we need to redirect/force using https.
  acl secure_redirect base,map_reg(/var/lib/haproxy/conf/os_route_http_redirect.map) -m found
  redirect scheme https if secure_redirect

  use_backend %[base,map_reg(/var/lib/haproxy/conf/os_http_be.map)]

  default_backend openshift_default
```

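When haproxy receives an HTTP request (port 80), it first checks os_route_http_redirect.map to see whether the route must be redirected to HTTPS. It then forwards the request to the matching backend according to the rules in /var/lib/haproxy/conf/os_http_be.map. Each rule maps a regular expression over the request's host and path to a backend name, for example: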

```text
^backend-3scale.caas-aio-apps.trystack.cn(:[0-9]+)?(/.*)?$ be_edge_http:mep-apigateway:backend
```
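The lookup can be modelled in a few lines of Python. This is a simplified sketch, not HAProxy's implementation, built on these assumptions:

- `base` approximates HAProxy's `base` sample fetch: the Host header followed by the path, without the query string.
- The Host is lowercased first, as `frontend public` does.
- `map_reg` returns the value of the first pattern that matches, in file order.
- A miss falls back to `openshift_default`.

The redirect check against os_route_http_redirect.map is left out.

```python
import re
from typing import List, Optional, Tuple

# (regex, backend) pairs in file order; this entry is copied from os_http_be.map above.
HTTP_BE_MAP: List[Tuple[str, str]] = [
    (r"^backend-3scale.caas-aio-apps.trystack.cn(:[0-9]+)?(/.*)?$",
     "be_edge_http:mep-apigateway:backend"),
]


def base(host_header: str, path: str) -> str:
    """Approximate HAProxy's `base` fetch: Host header + path, query string dropped."""
    # frontend public lowercases Host first (http-request set-header Host %[req.hdr(Host),lower])
    return host_header.lower() + path.split("?", 1)[0]


def map_reg(key: str, entries: List[Tuple[str, str]]) -> Optional[str]:
    """Return the value of the first pattern that matches key, or None on a miss."""
    for pattern, value in entries:
        if re.search(pattern, key):
            return value
    return None


def pick_backend(host_header: str, path: str) -> str:
    # use_backend %[base,map_reg(...)], falling back to default_backend openshift_default
    return map_reg(base(host_header, path), HTTP_BE_MAP) or "openshift_default"
```

The optional `(:[0-9]+)?` group is what lets a Host header carrying an explicit port (such as `...trystack.cn:80`) still match the route.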

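The backend then forwards the traffic to the business Pod using the cluster-internal Pod address in its configuration. From this point on, the traffic travels over the Kubernetes internal network.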

```haproxy
# Plain http backend or backend with TLS terminated at the edge or a
# secure backend with re-encryption.
backend be_edge_http:mep-apigateway:backend
  mode http
  option redispatch
  option forwardfor
  balance leastconn
  timeout check 5000ms
  http-request set-header X-Forwarded-Host %[req.hdr(host)]
  http-request set-header X-Forwarded-Port %[dst_port]
  http-request set-header X-Forwarded-Proto http if !{ ssl_fc }
  http-request set-header X-Forwarded-Proto https if { ssl_fc }
  http-request set-header X-Forwarded-Proto-Version h2 if { ssl_fc_alpn -i h2 }
  http-request add-header Forwarded for=%[src];host=%[req.hdr(host)];proto=%[req.hdr(X-Forwarded-Proto)];proto-version=%[req.hdr(X-Forwarded-Proto-Version)]
  cookie dcdcad015a918c4295da5aec7a0daf50 insert indirect nocache httponly
  server pod:backend-listener-1-mwgb6:backend-listener:10.128.1.67:3000 10.128.1.67:3000 cookie 4a624e547781196b55877322edeaec05 weight 256
```
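The two most visible knobs in this backend are `balance leastconn` and `cookie ... insert indirect nocache httponly`. The Python sketch below is a simplified model of how they interact, under these assumptions:

- A request carrying a known server cookie sticks to that server.
- Otherwise the server with the fewest active connections relative to its weight is chosen.
- With `indirect`, a Set-Cookie is only emitted when the client does not already hold that server's cookie.

Health checks, `nocache`/`httponly` and `option redispatch` are not modelled, and the second server below is hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Server:
    name: str
    cookie: str          # value inserted into the persistence cookie for this server
    weight: int = 256
    active_conns: int = 0


def choose_server(servers: List[Server], request_cookie: Optional[str]) -> Server:
    """Simplified model of `cookie ... insert` persistence plus `balance leastconn`."""
    # 1. Persistence: a cookie naming a known server pins the client to it.
    if request_cookie is not None:
        for srv in servers:
            if srv.cookie == request_cookie:
                return srv
    # 2. Otherwise leastconn: fewest active connections relative to weight.
    return min(servers, key=lambda s: s.active_conns / s.weight)


def handle(servers: List[Server], request_cookie: Optional[str]) -> Tuple[Server, Optional[str]]:
    """Return the chosen server and the cookie value to set in the response (None: no Set-Cookie)."""
    srv = choose_server(servers, request_cookie)
    # `indirect`: skip Set-Cookie when the client already holds this server's cookie.
    set_cookie = None if request_cookie == srv.cookie else srv.cookie
    return srv, set_cookie


servers = [
    # Server taken from the be_edge_http:mep-apigateway:backend excerpt above.
    Server("pod:backend-listener-1-mwgb6:backend-listener:10.128.1.67:3000",
           "4a624e547781196b55877322edeaec05", active_conns=5),
    # Hypothetical second replica, for illustration only.
    Server("pod:backend-listener-hypothetical:backend-listener:10.128.1.68:3000",
           "hypothetical-cookie", active_conns=1),
]
```

A stale or unknown cookie simply falls through to leastconn, and the response carries a fresh cookie for the newly chosen server.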

2. HTTPS

```haproxy
frontend public_ssl
  bind :443
  tcp-request inspect-delay 5s
  tcp-request content accept if { req_ssl_hello_type 1 }

  # if the connection is SNI and the route is a passthrough don't use the termination backend, just use the tcp backend
  # for the SNI case, we also need to compare it in case-insensitive mode (by converting it to lowercase) as RFC 4343 says
  acl sni req.ssl_sni -m found
  acl sni_passthrough req.ssl_sni,lower,map_reg(/var/lib/haproxy/conf/os_sni_passthrough.map) -m found
  use_backend %[req.ssl_sni,lower,map_reg(/var/lib/haproxy/conf/os_tcp_be.map)] if sni sni_passthrough

  # if the route is SNI and NOT passthrough enter the termination flow
  use_backend be_sni if sni

  # non SNI requests should enter a default termination backend rather than the custom cert SNI backend since it
  # will not be able to match a cert to an SNI host
  default_backend be_no_sni
```

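When haproxy receives an HTTPS request (port 443), it inspects the TLS ClientHello (waiting up to 5 seconds for it) and checks whether it carries SNI (TLS Server Name Indication). The request is then routed as follows:

- SNI whose lowercased host name appears in os_sni_passthrough.map: sent straight to a TCP backend looked up in os_tcp_be.map
- Any other SNI request: goes to be_sni
- No SNI: goes to be_no_sni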

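This branching corresponds to the ways the Router can terminate HTTPS traffic:

- edge: HTTPS traffic is decrypted in the Router and forwarded to the backend Pod as plain HTTP
- passthrough: the encrypted TLS stream is handed directly to the backend Pod; the Router performs no TLS termination and needs no TLS certificates
- reencrypt: a variant of edge; HTTPS traffic is decrypted in the Router, then encrypted again with another certificate before being forwarded to the backend Pod

These are the values of the termination field in the Route API.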
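The decision made by `frontend public_ssl` can be sketched in Python as follows. The map contents and the host name `docker-registry-default.apps.example.com` are hypothetical. Only the branch order mirrors the `acl`/`use_backend`/`default_backend` lines above. The sketch assumes the passthrough map and os_tcp_be.map list the same hosts, and returns `None` rather than guessing what HAProxy does if they disagree.

```python
import re
from typing import List, Optional, Tuple

MapEntries = List[Tuple[str, str]]


def map_reg(key: str, entries: MapEntries) -> Optional[str]:
    """First matching regex wins; None means the map lookup found nothing."""
    for pattern, value in entries:
        if re.search(pattern, key):
            return value
    return None


def route_tls(sni: Optional[str], passthrough_map: MapEntries, tcp_map: MapEntries) -> Optional[str]:
    """Mirror the use_backend/default_backend rules of `frontend public_ssl`."""
    if sni:  # acl sni req.ssl_sni -m found
        name = sni.lower()  # RFC 4343: compare host names case-insensitively
        if map_reg(name, passthrough_map) is not None:  # acl sni_passthrough
            # use_backend %[req.ssl_sni,lower,map_reg(os_tcp_be.map)] if sni sni_passthrough
            return map_reg(name, tcp_map)
        return "be_sni"  # edge / reencrypt: terminate TLS in fe_sni
    return "be_no_sni"  # no SNI: terminate with the default certificate in fe_no_sni


# Hypothetical map contents for a passthrough route.
PASSTHROUGH_MAP: MapEntries = [(r"^docker-registry-default\.apps\.example\.com$", "1")]
TCP_BE_MAP: MapEntries = [(r"^docker-registry-default\.apps\.example\.com$", "be_tcp:default:docker-registry")]
```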

When the TLS termination type is not passthrough, the be_sni backend is used:

```haproxy
backend be_sni
  server fe_sni 127.0.0.1:10444 weight 1 send-proxy
```
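`send-proxy` on the `be_sni` server and `accept-proxy` on the `fe_sni` bind line wrap this loopback hop in the PROXY protocol. As a result, `fe_sni` and the app backends (whose `Forwarded` header uses `%[src]`) still see the real client address rather than 127.0.0.1. Below is a minimal Python sketch of the version 1 text header that `send-proxy` emits, and of stripping it off on the receiving side. The addresses are made up, and the `PROXY UNKNOWN` form is not handled.

```python
import ipaddress
from typing import Tuple


def proxy_v1_header(src_ip: str, src_port: int, dst_ip: str, dst_port: int) -> bytes:
    """Build a PROXY protocol v1 header line, the format emitted by `send-proxy`."""
    version = ipaddress.ip_address(src_ip).version
    if ipaddress.ip_address(dst_ip).version != version:
        raise ValueError("source and destination must use the same IP family")
    family = "TCP4" if version == 4 else "TCP6"
    return f"PROXY {family} {src_ip} {dst_ip} {src_port} {dst_port}\r\n".encode("ascii")


def parse_proxy_v1(data: bytes) -> Tuple[Tuple[str, int], bytes]:
    """Split the header off the stream, as `accept-proxy` does: ((client_ip, port), rest)."""
    line, _, rest = data.partition(b"\r\n")
    parts = line.decode("ascii").split(" ")
    if len(parts) != 6 or parts[0] != "PROXY" or parts[1] not in ("TCP4", "TCP6"):
        raise ValueError("not a PROXY v1 TCP header")
    _, _family, src_ip, _dst_ip, src_port, _dst_port = parts
    return (src_ip, int(src_port)), rest  # rest: the untouched TLS ClientHello bytes
```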

A frontend named fe_sni is bound to 127.0.0.1:10444, so be_sni hands the traffic to fe_sni over the loopback interface:

```haproxy
frontend fe_sni
  # terminate ssl on edge
  bind 127.0.0.1:10444 ssl no-sslv3 crt /etc/pki/tls/private/tls.crt crt-list /var/lib/haproxy/conf/cert_config.map accept-proxy
  mode http

  # Strip off Proxy headers to prevent HTTpoxy (https://httpoxy.org/)
  http-request del-header Proxy

  # DNS labels are case insensitive (RFC 4343), we need to convert the hostname into lowercase
  # before matching, or any requests containing uppercase characters will never match.
  http-request set-header Host %[req.hdr(Host),lower]

  # map to backend
  # Search from most specific to general path (host case).
  # Note: If no match, haproxy uses the default_backend, no other
  # use_backend directives below this will be processed.
  use_backend %[base,map_reg(/var/lib/haproxy/conf/os_edge_reencrypt_be.map)]

  default_backend openshift_default
```

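Once HTTPS traffic arrives here, it is decrypted with the matching TLS certificate. The certificate is selected by SNI from cert_config.map via `crt-list`, falling back to /etc/pki/tls/private/tls.crt. The traffic is then forwarded to the corresponding backend according to the rules in the /var/lib/haproxy/conf/os_edge_reencrypt_be.map file: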

```text
^user-management.caas-aio-apps.trystack.cn(:[0-9]+)?(/.*)?$ be_edge_http:user-management:user-management
^test-groupcbc37148-3scale-apicast-staging.caas-aio-apps.trystack.cn(:[0-9]+)?(/.*)?$ be_edge_http:mep-apigateway:zync-3scale-api-sp8kr
^test-groupcbc37148-3scale-apicast-production.caas-aio-apps.trystack.cn(:[0-9]+)?(/.*)?$ be_edge_http:mep-apigateway:zync-3scale-api-twdpk
^test-group26a9a65d-3scale-apicast-staging.caas-aio-apps.trystack.cn(:[0-9]+)?(/.*)?$ be_edge_http:mep-apigateway:zync-3scale-api-r7rwm
^test-group26a9a65d-3scale-apicast-production.caas-aio-apps.trystack.cn(:[0-9]+)?(/.*)?$
```
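Certificate selection in `fe_sni` happens during the TLS handshake, before the map lookup. Below is a simplified Python model, assuming HAProxy's usual order:

1. An exact match on the SNI host name.
2. A wildcard certificate covering exactly one label.
3. The first `crt` on the bind line (/etc/pki/tls/private/tls.crt) as the default.

The name-to-certificate dictionary and its file paths are hypothetical stand-ins for the contents of cert_config.map.

```python
from typing import Dict, Optional

DEFAULT_CERT = "/etc/pki/tls/private/tls.crt"  # first `crt` on the fe_sni bind line


def select_cert(sni: Optional[str], certs: Dict[str, str]) -> str:
    """Pick a certificate for a TLS handshake (simplified model, hypothetical cert paths)."""
    if not sni:
        return DEFAULT_CERT
    name = sni.lower()
    if name in certs:                          # 1. exact host name
        return certs[name]
    _, dot, parent = name.partition(".")
    if dot and "*." + parent in certs:         # 2. wildcard covering exactly one label
        return certs["*." + parent]
    return DEFAULT_CERT                        # 3. fall back to the router's default certificate


# Hypothetical certificate table for illustration.
CERTS = {
    "user-management.caas-aio-apps.trystack.cn": "/hypothetical/user-management.pem",
    "*.caas-aio-apps.trystack.cn": "/hypothetical/wildcard.pem",
}
```

Because the wildcard only spans one label, a deeper name such as `a.b.caas-aio-apps.trystack.cn` falls back to the default certificate in this model.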

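Exactly as with HTTP, the backend then forwards the traffic to the business Pod using the cluster-internal Pod address in its configuration. From there the traffic travels over the Kubernetes internal network.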

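In essence, creating or deleting a Route API resource means modifying HAProxy's configuration to steer traffic. The layer-7 load-balancing capability of the OpenShift Router is provided by the haproxy running on the Infra nodes.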

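As with Ingress, the Service selector in an OpenShift Route is used only to add the relevant backend Pods to the HAProxy configuration. Actual traffic bypasses the Kubernetes Service layer entirely, which avoids the performance overhead of iptables.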
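To illustrate how a Route and its endpoints turn into configuration, the Python sketch below renders a map entry and backend `server` lines in the format seen in the excerpts above. The naming convention (`be_edge_http:<namespace>:<route>` and `pod:<pod>:<service>:<ip>:<port>`) is inferred from those excerpts rather than taken from the openshift-router source. The per-server `cookie` value is omitted, and the helper names are hypothetical. Note that the server addresses are Pod IPs taken from the endpoints, not the Service's ClusterIP.

```python
from typing import List, Tuple


def http_map_line(host: str, namespace: str, route: str) -> str:
    """One os_http_be.map-style entry, in the format of the map excerpt above."""
    # Dots are left unescaped to match that excerpt (so "." matches any character there).
    return f"^{host}(:[0-9]+)?(/.*)?$ be_edge_http:{namespace}:{route}"


def server_lines(service: str, endpoints: List[Tuple[str, str, int]]) -> List[str]:
    """Backend `server` lines built from endpoints (pod, ip, port), not from the Service's ClusterIP."""
    return [
        f"server pod:{pod}:{service}:{ip}:{port} {ip}:{port} weight 256"
        for pod, ip, port in endpoints
    ]
```

When endpoints change (for example, a Pod is rescheduled with a new IP), only the `server` lines differ. This is why openshift-router has to regenerate the configuration and reload haproxy even though the Route object itself is unchanged.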