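Contents

- 1. Clear the previous setup

- 1.1 Reset with kubeadm

- 1.2 Flush the iptables tables

- 2. List the images used by the Kubernetes cluster

- 3. Set up the Harbor registry

- 3.1 Deploy Docker

- 3.1.1 Prepare the package repository

- 3.1.2 Install Docker

- 3.1.3 Enable Docker at boot

- 3.1.4 Set kernel parameters to enable bridging

- 3.1.5 Verify the settings

- 3.2 Prepare the Harbor installer archive

- 3.3 Configure the Harbor registry

- 3.4 Prepare the Harbor registry certificate

- 3.5 Prepare name resolution

- 3.6 Deploy docker-compose

- 3.7 Install the Harbor registry

- 3.8 Verify the deployment

- 3.9 Push all Kubernetes images to the Harbor registry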
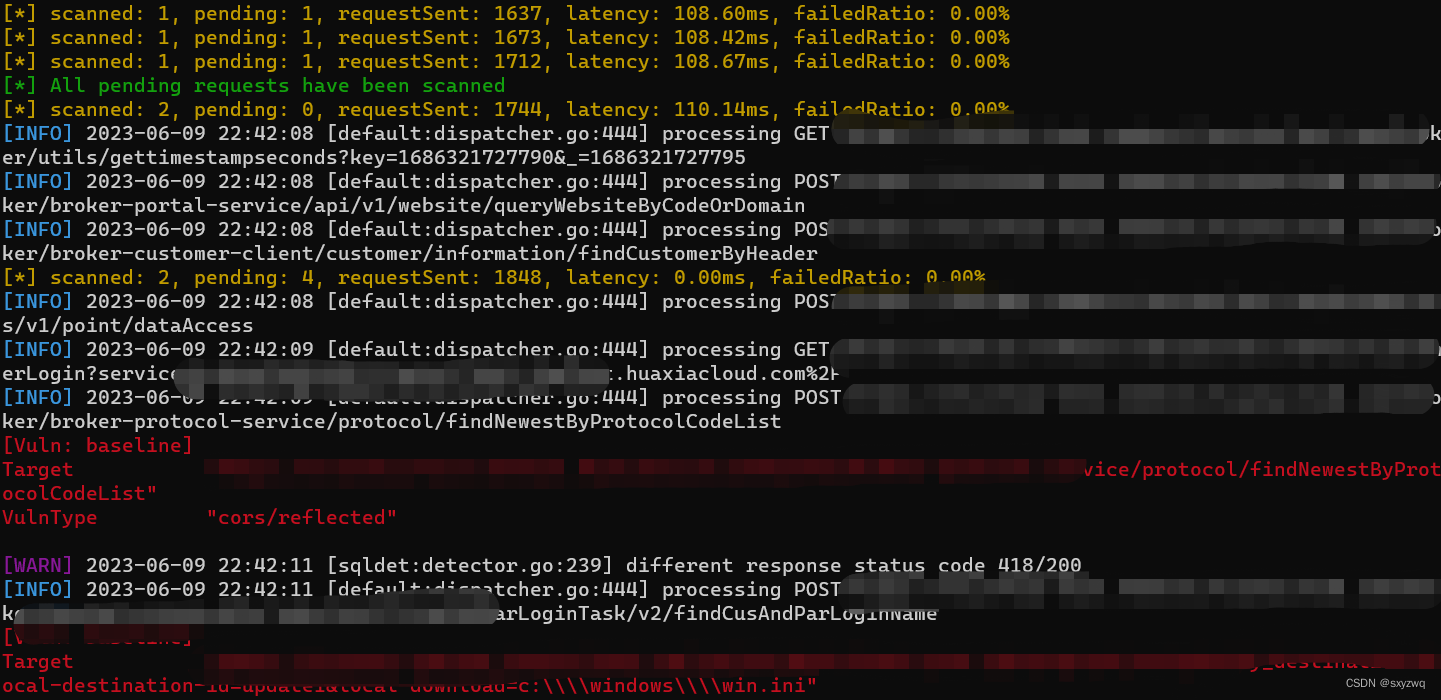

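- **Error 1**: **Get https://reg.westos.org/v2/: x509: certificate signed by unknown authority**

- **Fix**: copy the Harbor certificate into Docker's certificate directory

- **Error 2**: **denied: requested access to the resource is denied**

- **Fix**: log in to the Harbor registry with docker login

- 3.9 Push all Kubernetes images to the Harbor registry (continued)

- 4. Connect containerd to the Harbor registry

- 4.1 Edit the containerd configuration file on the Kubernetes nodes

- 4.2 Send the Harbor certificate to the Kubernetes nodes

- 4.3 Prepare name resolution on the cluster nodes

- 4.4 Restart containerd

- 4.5 Verify the connection

- 5. Bootstrap the cluster with kubeadm

- 5.1 Edit the init configuration file

- 5.2 Pull the required images with kubeadm

- **Error 3**: the private registry does not contain the required image version

- **Fix**

- 5.2 Pull the required images with kubeadm (continued)

- 5.3 Initialize again

- 5.4 Join the nodes to the cluster

- 5.5 Verify the cluster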

1. Clear the previous setup

1.1 Reset with kubeadm

[root@k8s1 ~]# kubeadm reset

[reset] Reading configuration from the cluster...

[reset] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

The 'update-cluster-status' phase is deprecated and will be removed in a future release. Currently it performs no operation

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

[reset] Deleting contents of stateful directories: [/var/lib/etcd /var/lib/kubelet /var/lib/dockershim /var/run/kubernetes /var/lib/cni]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

Please, check the contents of the $HOME/.kube/config file.

[root@k8s2 ~]# kubeadm reset

[root@k8s3 ~]# kubeadm reset

1.2 Flush the iptables tables

[root@k8s1 ~]# iptables -F

[root@k8s1 ~]# iptables -F -t nat

[root@k8s2 ~]# iptables -F

[root@k8s2 ~]# iptables -F -t nat

[root@k8s3 ~]# iptables -F

[root@k8s3 ~]# iptables -F -t nat
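The reset and flush steps are the same on all three nodes, so they can be scripted. The following is a minimal sketch, not the author's method. It assumes passwordless root SSH to k8s1, k8s2 and k8s3 (not shown in the original) and uses `kubeadm reset -f` to skip the confirmation prompt. The helper name `reset_node_cmd` is hypothetical. It only prints each command, so nothing runs until the output is reviewed and piped to `sh`.

```shell
# Hypothetical helper: prints (does not run) the cleanup command for one node.
# Assumes passwordless root SSH to each node, which the original does not show.
NODES="k8s1 k8s2 k8s3"

reset_node_cmd() {
    printf "ssh root@%s 'kubeadm reset -f && iptables -F && iptables -F -t nat'\n" "$1"
}

# Dry run: list the command for every node.
for node in $NODES; do
    reset_node_cmd "$node"
done
```

Printing first keeps a destructive step like `kubeadm reset` visible before it is executed.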

2. List the images used by the Kubernetes cluster

- First enable network access by starting firewalld on the host machine

[root@foundation21 ~]# systemctl start firewalld.service

[root@k8s1 ~]# crictl img

IMAGE TAG IMAGE ID SIZE

docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin v1.0.1 ac40ce6257406 3.82MB

docker.io/rancher/mirrored-flannelcni-flannel v0.17.0 9247abf086779 19.9MB

registry.aliyuncs.com/google_containers/coredns v1.8.4 8d147537fb7d1 13.7MB

registry.aliyuncs.com/google_containers/coredns v1.8.6 a4ca41631cc7a 13.6MB

registry.aliyuncs.com/google_containers/etcd 3.5.0-0 0048118155842 99.9MB

registry.aliyuncs.com/google_containers/etcd 3.5.1-0 25f8c7f3da61c 98.9MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.22.1 f30469a2491a5 31.3MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.22.2 e64579b7d8862 31.3MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.22.1 6e002eb89a881 29.8MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.22.2 5425bcbd23c54 29.8MB

registry.aliyuncs.com/google_containers/kube-proxy v1.22.1 36c4ebbc9d979 35.9MB

registry.aliyuncs.com/google_containers/kube-proxy v1.22.2 873127efbc8a7 35.9MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.22.1 aca5ededae9c8 15MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.22.2 b51ddc1014b04 15MB

registry.aliyuncs.com/google_containers/pause 3.5 ed210e3e4a5ba 301kB

registry.aliyuncs.com/google_containers/pause 3.6 6270bb605e12e 302kB
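The listing above has to be turned into `repository:tag` references before the images can be exported in section 3.9. A small sketch of that conversion, assuming the column layout shown above (image name in column 1, tag in column 2); the function name `image_refs` is hypothetical.

```shell
# Turn the tabular `crictl img` listing into repo:tag references.
# Skips the header row; expects IMAGE in column 1 and TAG in column 2.
image_refs() {
    awk 'NR > 1 && NF >= 2 { print $1 ":" $2 }'
}

# Example with two rows copied from the listing above.
printf '%s\n' \
    'IMAGE TAG IMAGE ID SIZE' \
    'registry.aliyuncs.com/google_containers/pause 3.6 6270bb605e12e 302kB' \
    'registry.aliyuncs.com/google_containers/etcd 3.5.1-0 25f8c7f3da61c 98.9MB' |
    image_refs
```

On a node, the same filter would be used as `crictl img | image_refs`.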

3. Set up the Harbor registry

3.1 Deploy Docker

3.1.1 Prepare the package repository

[root@k8s4 ~]# cd /etc/yum.repos.d/

[root@k8s4 yum.repos.d]# vim docker.repo

[docker]

name=docker-ce

baseurl=http://172.25.21.250/docker-ce

gpgcheck=0

3.1.2 Install Docker

[root@k8s4 yum.repos.d]# yum install -y docker-ce

3.1.3 Enable Docker at boot

[root@k8s4 yum.repos.d]# systemctl enable --now docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

3.1.4 Set kernel parameters to enable bridging

[root@k8s4 ~]# cd /etc/sysctl.d/

[root@k8s4 sysctl.d]# vim docker.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

[root@k8s4 sysctl.d]# sysctl --system
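The same file can be written without an editor. This is a sketch under assumptions: the function name `write_bridge_sysctl` is hypothetical, and the target path is passed as an argument so the function can be tried on a scratch file first. On some hosts the `net.bridge.*` keys may only appear once the `br_netfilter` kernel module is loaded.

```shell
# Hypothetical helper: write the three kernel parameters to the given file.
write_bridge_sysctl() {
    cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

On k8s4 this would be called as `write_bridge_sysctl /etc/sysctl.d/docker.conf`, followed by `sysctl --system` as above.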

3.1.5 Verify the settings

[root@k8s4 sysctl.d]# docker info

Client:

Debug Mode: false

Server:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 19.03.15

Storage Driver: overlay2

Backing Filesystem: xfs

Supports d_type: true

Native Overlay Diff: true

Logging Driver: json-file

Cgroup Driver: cgroupfs

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 05f951a3781f4f2c1911b05e61c160e9c30eaa8e

runc version: 12644e614e25b05da6fd08a38ffa0cfe1903fdec

init version: fec3683

Security Options:

seccomp

Profile: default

Kernel Version: 3.10.0-957.el7.x86_64

Operating System: Red Hat Enterprise Linux Server 7.6 (Maipo)

OSType: linux

Architecture: x86_64

CPUs: 2

Total Memory: 991MiB

Name: k8s4

ID: RMUV:W2WV:GCXQ:QCE7:IXW4:ZGEY:JT55:DEOM:VPY5:UIU3:MXBO:EZ2S

Docker Root Dir: /var/lib/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

3.2 Prepare the Harbor installer archive

Official site: Harbor registry official website

[root@k8s4 ~]# ls

harbor-offline-installer-v1.10.1.tgz

[root@k8s4 ~]# tar zxf harbor-offline-installer-v1.10.1.tgz

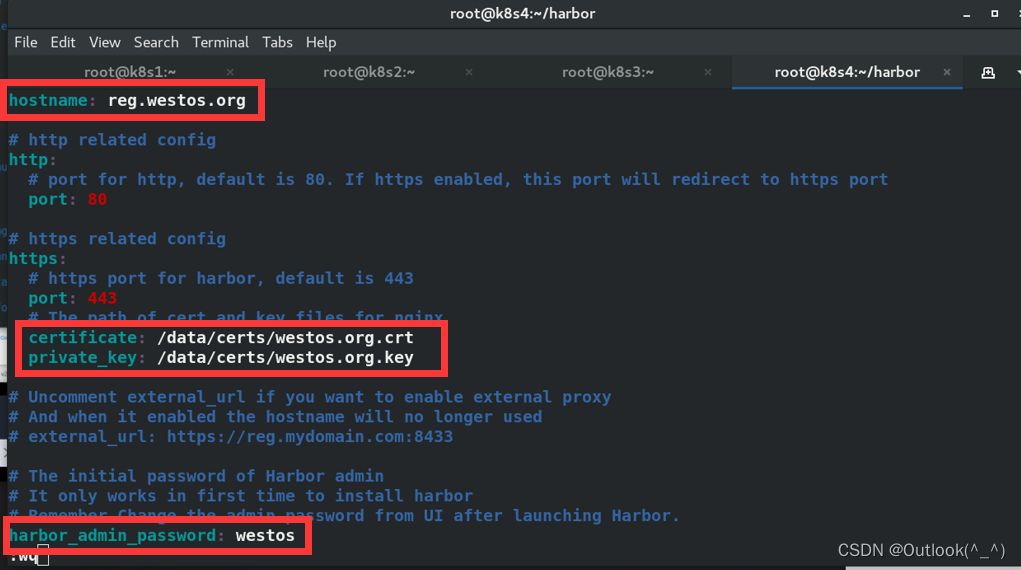

3.3 Configure the Harbor registry

- Set the Harbor registry hostname

- Set the certificate path

- Set the certificate private key path

- Set the Harbor admin login password

[root@k8s4 ~]# cd harbor/

[root@k8s4 harbor]# ls

common.sh harbor.v1.10.1.tar.gz harbor.yml install.sh LICENSE prepare

[root@k8s4 harbor]# vim harbor.yml

hostname: reg.westos.org

certificate: /data/certs/westos.org.crt

private_key: /data/certs/westos.org.key

harbor_admin_password: westos
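The four edits above can also be applied non-interactively. The sketch below assumes that `harbor.yml` already contains lines starting with `hostname:`, `certificate:`, `private_key:` and `harbor_admin_password:` (the latter two certificate keys possibly indented). The function name `configure_harbor` is hypothetical. It reads the file on stdin and writes the edited copy to stdout, so the original is never modified in place.

```shell
# Hypothetical filter: rewrites the four harbor.yml settings named above.
# Reads harbor.yml on stdin and writes the edited file to stdout.
configure_harbor() {
    sed -e 's|^hostname:.*|hostname: reg.westos.org|' \
        -e 's|^\( *\)certificate:.*|\1certificate: /data/certs/westos.org.crt|' \
        -e 's|^\( *\)private_key:.*|\1private_key: /data/certs/westos.org.key|' \
        -e 's|^harbor_admin_password:.*|harbor_admin_password: westos|'
}
```

A possible use is `configure_harbor < harbor.yml > harbor.yml.new`, then comparing the two files before replacing the original.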

3.4 Prepare the Harbor registry certificate

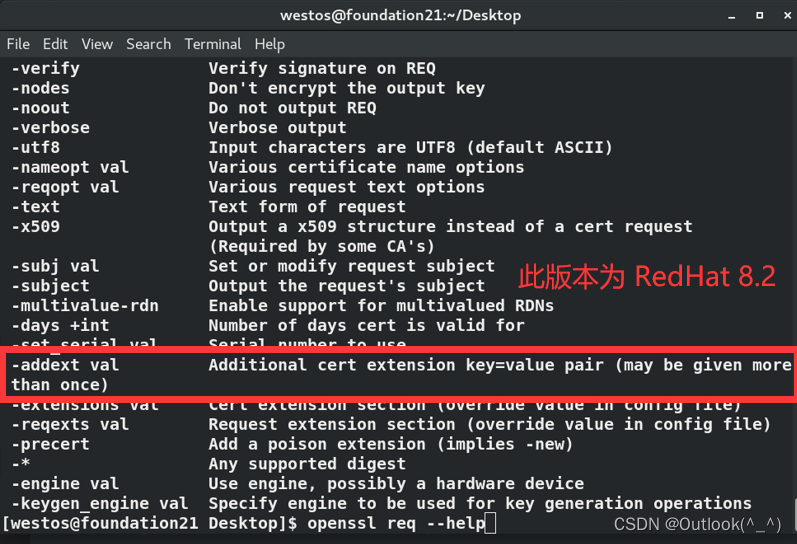

- The k8s4 host runs RedHat 7.6, whose openssl is too old to support the -addext option (the openssl shipped with RedHat 8.2 has it)

[westos@foundation21 Desktop]$ cat /etc/redhat-release

Red Hat Enterprise Linux release 8.2 (Ootpa)

[westos@foundation21 Desktop]$ openssl req --help

......

-addext val Additional cert extension key=value pair (may be given more than once)

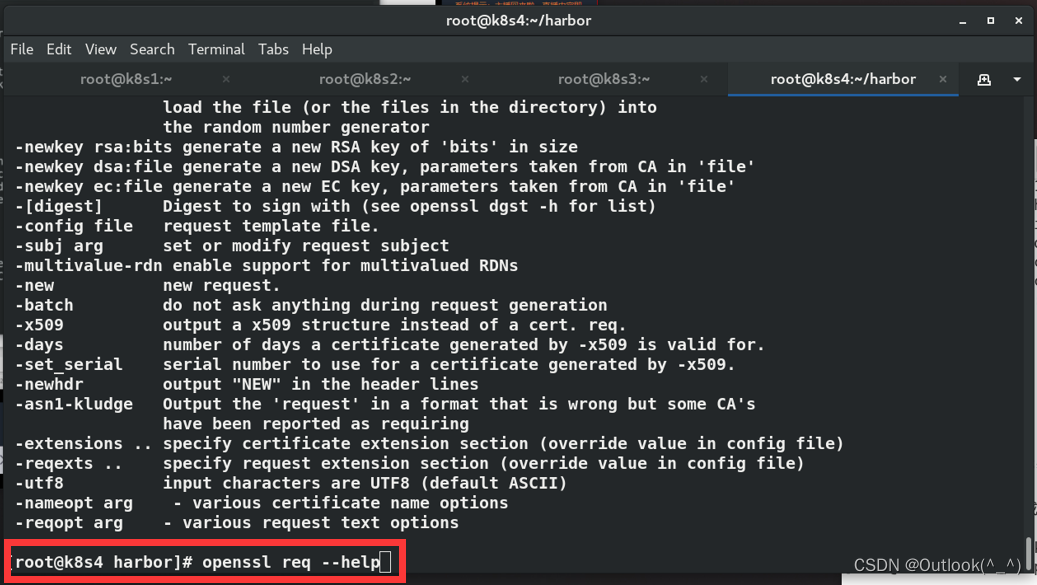

- RedHat 7.6 does not have it

[root@k8s4 harbor]# cat /etc/redhat-release

Red Hat Enterprise Linux Server release 7.6 (Maipo)

[root@k8s4 harbor]# openssl req --help

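- Download OpenSSL 1.1.1 from the official OpenSSL site (it is also available from the Alibaba Cloud mirror)

Download: OpenSSL official website

Alibaba Cloud mirror download: Alibaba Cloud | openssl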

[root@k8s4 ~]# ls

harbor openssl11-1.1.1k-3.el7.x86_64.rpm

harbor-offline-installer-v1.10.1.tgz openssl11-libs-1.1.1k-3.el7.x86_64.rpm

[root@k8s4 ~]# yum install -y openssl11*

- Generate the certificate

(The domain name in the certificate must match the Harbor registry hostname)

[root@k8s4 ~]# mkdir /data/certs -p

[root@k8s4 ~]# cd /data/

[root@k8s4 data]# ls

certs

[root@k8s4 data]# openssl11 req -newkey rsa:4096 -nodes -sha256 -keyout /data/certs/westos.org.key -addext "subjectAltName = DNS:reg.westos.org" -x509 -days 365 -out /data/certs/westos.org.crt

Can't load /root/.rnd into RNG

139809964558144:error:2406F079:random number generator:RAND_load_file:Cannot open file:crypto/rand/randfile.c:98:Filename=/root/.rnd

Generating a RSA private key

....................................................++++

.................................++++

writing new private key to '/data/certs/westos.org.key'

-----

You are about to be asked to enter information that will be incorporated

into your certificate request.

What you are about to enter is what is called a Distinguished Name or a DN.

There are quite a few fields but you can leave some blank

For some fields there will be a default value,

If you enter '.', the field will be left blank.

-----

Country Name (2 letter code) [XX]:cn

State or Province Name (full name) []:shannxi

Locality Name (eg, city) [Default City]:xi'an

Organization Name (eg, company) [Default Company Ltd]:westos

Organizational Unit Name (eg, section) []:cka

Common Name (eg, your name or your server's hostname) []:reg.westos.org

Email Address []:root@westos.org

[root@k8s4 data]# ls certs/

westos.org.crt westos.org.key
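The interactive prompts above can be avoided by passing the subject on the command line, and the result can be inspected to confirm that the subjectAltName made it into the certificate. This is a sketch only: the shortened `-subj` value, the variable names and both function names are assumptions for illustration, and `openssl11` is the binary installed earlier in this section.

```shell
# Sketch only: OPENSSL_BIN, CERT_DIR and the -subj value are illustrative assumptions.
OPENSSL_BIN="${OPENSSL_BIN:-openssl11}"
CERT_DIR="${CERT_DIR:-/data/certs}"
REG_HOST="reg.westos.org"
SUBJ="/O=westos/CN=${REG_HOST}"

# Generate the key and self-signed certificate without interactive prompts.
gen_harbor_cert() {
    "$OPENSSL_BIN" req -newkey rsa:4096 -nodes -sha256 -x509 -days 365 \
        -subj "$SUBJ" \
        -addext "subjectAltName = DNS:${REG_HOST}" \
        -keyout "$CERT_DIR/westos.org.key" \
        -out "$CERT_DIR/westos.org.crt"
}

# Print the certificate in text form and show the SAN extension lines.
show_san() {
    "$OPENSSL_BIN" x509 -in "$CERT_DIR/westos.org.crt" -noout -text |
        grep -A1 'Subject Alternative Name'
}
```

Neither function runs until it is called, so the block can be sourced safely before deciding whether to regenerate the certificate.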

3.5 Prepare name resolution

[root@k8s4 data]# vim /etc/hosts

172.25.21.4 k8s4 reg.westos.org
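Because the same entry is needed later on every cluster node (section 4.3), an idempotent helper avoids duplicate lines when the step is repeated. A minimal sketch; the function name `ensure_hosts_entry` is hypothetical, and the file path is a parameter so it can be tested on a scratch file.

```shell
# Hypothetical helper: append the registry entry only if the name is not already present.
ensure_hosts_entry() {
    hosts_file="${1:-/etc/hosts}"
    grep -q 'reg\.westos\.org' "$hosts_file" ||
        echo '172.25.21.4 k8s4 reg.westos.org' >> "$hosts_file"
}
```

Calling it twice on the same file leaves a single entry.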

3.6 Deploy docker-compose

[root@k8s4 ~]# mv docker-compose-Linux-x86_64-1.27.0 /usr/local/bin/docker-compose

[root@k8s4 ~]# chmod +x /usr/local/bin/docker-compose

[root@k8s4 ~]# docker-compose    # the command runs correctly

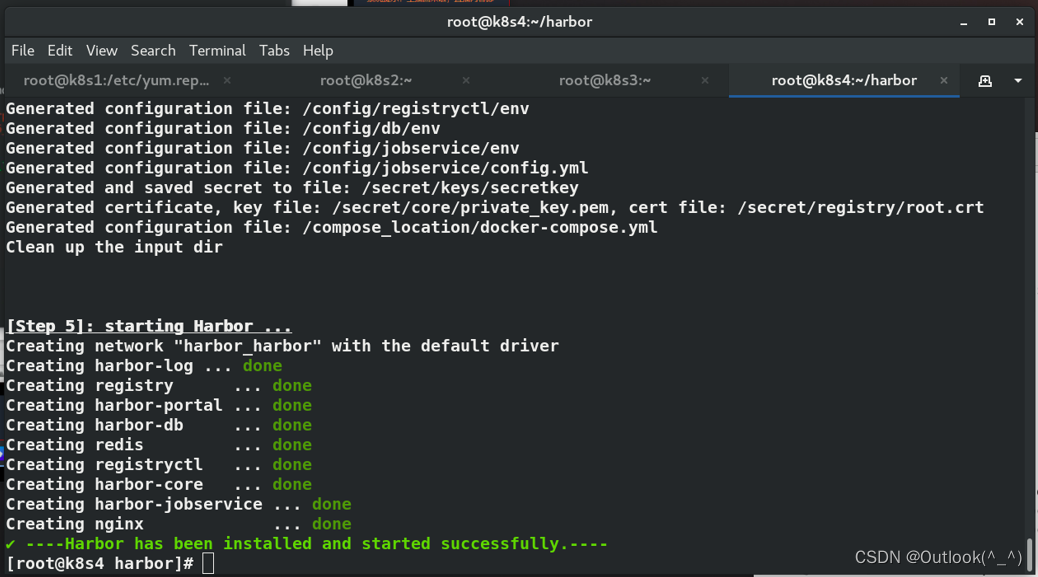

3.7 Install the Harbor registry

[root@k8s4 ~]# cd harbor/

[root@k8s4 harbor]# ./install.sh

[root@k8s4 harbor]# docker-compose ps

Name Command State Ports

-------------------------------------------------------------------------------------------------------

harbor-core /harbor/harbor_core Up (healthy)

harbor-db /docker-entrypoint.sh Up (healthy) 5432/tcp

harbor-jobservice /harbor/harbor_jobservice ... Up (healthy)

harbor-log /bin/sh -c /usr/local/bin/ ... Up (healthy) 127.0.0.1:1514->10514/tcp

harbor-portal nginx -g daemon off; Up (healthy) 8080/tcp

nginx nginx -g daemon off; Up (healthy) 0.0.0.0:80->8080/tcp,

0.0.0.0:443->8443/tcp

redis redis-server /etc/redis.conf Up (healthy) 6379/tcp

registry /home/harbor/entrypoint.sh Up (healthy) 5000/tcp

registryctl /home/harbor/start.sh Up (healthy)

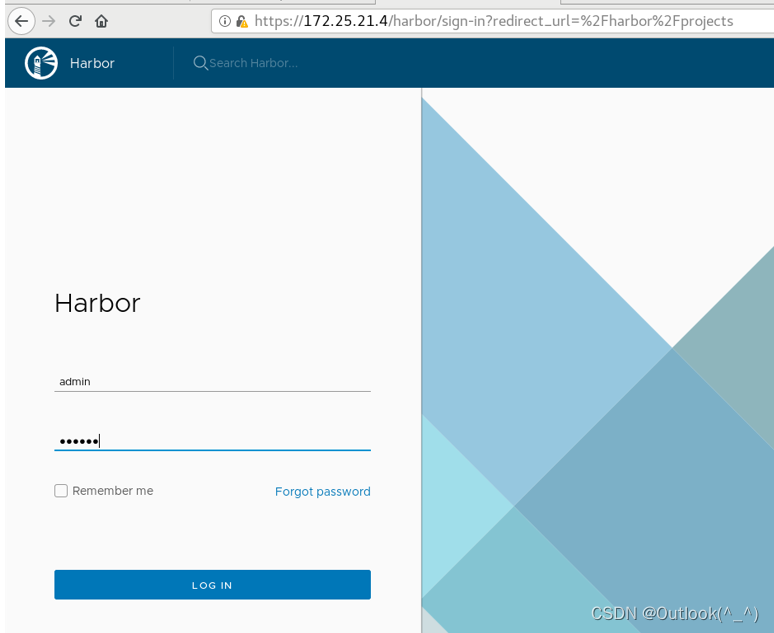

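3.8 Verify the deployment

To verify that the registry was deployed successfully, open the Harbor web console at https://reg.westos.org in a browser.

3.9 Push all Kubernetes images to the Harbor registry

- Export the images with the ctr command (the command is long)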

[root@k8s1 ~]# ctr ns ls

NAME LABELS

k8s.io

moby

[root@k8s1 ~]# ctr -n k8s.io i export k8s-1.22.2.tar registry.aliyuncs.com/google_containers/coredns:v1.8.6 registry.aliyuncs.com/google_containers/etcd:3.5.1-0 registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.2 registry.aliyuncs.com/google_containers/kube-controller-manager:v1.22.2 registry.aliyuncs.com/google_containers/kube-proxy:v1.22.2 registry.aliyuncs.com/google_containers/kube-scheduler:v1.22.2 registry.aliyuncs.com/google_containers/pause:3.6

- Send the image archive to the Harbor host

[root@k8s1 ~]# scp k8s-1.22.2.tar k8s4:

- Load the image archive into Docker with docker load

[root@k8s4 ~]# docker load -i k8s-1.22.2.tar

16679402dc20: Loading layer [==================================================>] 657.7kB/657.7kB

2d10b4260c78: Loading layer [==================================================>] 718.9kB/718.9kB

287b21f53b5a: Loading layer [==================================================>] 29.92MB/29.92MB

Loaded image: registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.2

529dc7750f69: Loading layer [==================================================>] 28.4MB/28.4MB

Loaded image: registry.aliyuncs.com/google_containers/kube-controller-manager:v1.22.2

48b90c7688a2: Loading layer [==================================================>] 23.8MB/23.8MB

9e94f35577fb: Loading layer [==================================================>] 12.14MB/12.14MB

Loaded image: registry.aliyuncs.com/google_containers/kube-proxy:v1.22.2

701b343e4ffd: Loading layer [==================================================>] 13.64MB/13.64MB

Loaded image: registry.aliyuncs.com/google_containers/kube-scheduler:v1.22.2

1021ef88c797: Loading layer [==================================================>] 296.5kB/296.5kB

Loaded image: registry.aliyuncs.com/google_containers/pause:3.6

256bc5c338a6: Loading layer [==================================================>] 122.2kB/122.2kB

80e4a2390030: Loading layer [==================================================>] 13.46MB/13.46MB

Loaded image: registry.aliyuncs.com/google_containers/coredns:v1.8.6

6d75f23be3dd: Loading layer [==================================================>] 803.8kB/803.8kB

b6e8c573c18d: Loading layer [==================================================>] 1.076MB/1.076MB

d80003ff5706: Loading layer [==================================================>] 87.39MB/87.39MB

664dd6f2834b: Loading layer [==================================================>] 1.188MB/1.188MB

62ae031121b1: Loading layer [==================================================>] 8.426MB/8.426MB

Loaded image: registry.aliyuncs.com/google_containers/etcd:3.5.1-0

[root@k8s4 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/etcd 3.5.1-0 25f8c7f3da61 5 months ago 293MB

registry.aliyuncs.com/google_containers/coredns v1.8.6 a4ca41631cc7 6 months ago 46.8MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.22.2 e64579b7d886 7 months ago 128MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.22.2 5425bcbd23c5 7 months ago 122MB

registry.aliyuncs.com/google_containers/kube-proxy v1.22.2 873127efbc8a 7 months ago 104MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.22.2 b51ddc1014b0 7 months ago 52.7MB

registry.aliyuncs.com/google_containers/pause 3.6 6270bb605e12 7 months ago 683kB

goharbor/chartmuseum-photon v0.9.0-v1.10.1 0245d66323de 2 years ago 128MB

goharbor/harbor-migrator v1.10.1 a4f99495e0b0 2 years ago 364MB

goharbor/redis-photon v1.10.1 550a58b0a311 2 years ago 111MB

goharbor/clair-adapter-photon v1.0.1-v1.10.1 2ec99537693f 2 years ago 61.6MB

goharbor/clair-photon v2.1.1-v1.10.1 622624e16994 2 years ago 171MB

goharbor/notary-server-photon v0.6.1-v1.10.1 e4ff6d1f71f9 2 years ago 143MB

goharbor/notary-signer-photon v0.6.1-v1.10.1 d3aae2fc17c6 2 years ago 140MB

goharbor/harbor-registryctl v1.10.1 ddef86de6480 2 years ago 104MB

goharbor/registry-photon v2.7.1-patch-2819-2553-v1.10.1 1a0c5f22cfa7 2 years ago 86.5MB

goharbor/nginx-photon v1.10.1 01276d086ad6 2 years ago 44MB

goharbor/harbor-log v1.10.1 1f5c9ea164bf 2 years ago 82.3MB

goharbor/harbor-jobservice v1.10.1 689368d30108 2 years ago 143MB

goharbor/harbor-core v1.10.1 14151d58ac3f 2 years ago 130MB

goharbor/harbor-portal v1.10.1 8a9856c37798 2 years ago 52.1MB

goharbor/harbor-db v1.10.1 18548720d8ad 2 years ago 148MB

goharbor/prepare v1.10.1 897a4d535ced 2 years ago 192MB

-

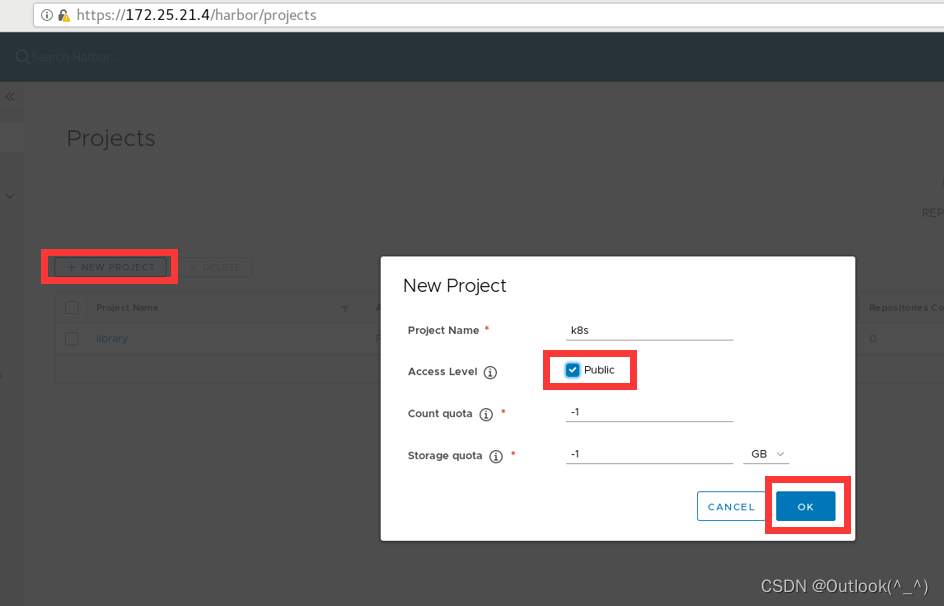

在 harbor 仓库中创建一个公开项目,存放 k8s 的镜像包

-

docker push 命令上传镜像到 harbor 仓库

[root@k8s4 ~]# docker images | grep google_containers | awk '{print $1":"$2}' | awk -F/ '{system("docker tag "$0" reg.westos.org/k8s/"$3"")}'

[root@k8s4 ~]# docker images | grep reg.westos.org/k8s/

reg.westos.org/k8s/etcd 3.5.1-0 25f8c7f3da61 5 months ago 293MB

reg.westos.org/k8s/coredns v1.8.6 a4ca41631cc7 6 months ago 46.8MB

reg.westos.org/k8s/kube-apiserver v1.22.2 e64579b7d886 7 months ago 128MB

reg.westos.org/k8s/kube-controller-manager v1.22.2 5425bcbd23c5 7 months ago 122MB

reg.westos.org/k8s/kube-scheduler v1.22.2 b51ddc1014b0 7 months ago 52.7MB

reg.westos.org/k8s/kube-proxy v1.22.2 873127efbc8a 7 months ago 104MB

reg.westos.org/k8s/pause 3.6 6270bb605e12 7 months ago 683kB
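The retagging one-liner above is dense: the first `awk` joins repository and tag into `repo:tag`, and the second splits on `/` so that `$3` is the bare `name:tag` while `$0` is the full upstream reference. The same mapping can be sketched with shell parameter expansion. The function names `harbor_ref` and `retag_cmd` are hypothetical, and `retag_cmd` only prints the `docker tag` command instead of running it.

```shell
# Map an upstream reference to its name in the Harbor k8s project.
# ${1##*/} strips everything up to the last "/", leaving "name:tag".
harbor_ref() {
    printf 'reg.westos.org/k8s/%s\n' "${1##*/}"
}

# Print the docker tag command for one upstream image (dry run).
retag_cmd() {
    printf 'docker tag %s %s\n' "$1" "$(harbor_ref "$1")"
}

retag_cmd registry.aliyuncs.com/google_containers/etcd:3.5.1-0
```

Stripping up to the last `/` does not depend on how many path components the upstream registry uses, whereas the `awk -F/` version relies on the name being the third field.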

Error 1: Get https://reg.westos.org/v2/: x509: certificate signed by unknown authority

- Docker does not have Harbor's self-signed certificate, so it does not trust the registry and refuses the connection

[root@k8s4 ~]# docker push reg.westos.org/k8s/etcd:3.5.1-0

The push refers to repository [reg.westos.org/k8s/etcd]

Get https://reg.westos.org/v2/: x509: certificate signed by unknown authority

Fix: copy the Harbor certificate into Docker's certificate directory

[root@k8s4 ~]# cd /etc/docker/

[root@k8s4 docker]# mkdir certs.d

[root@k8s4 docker]# cd certs.d/

[root@k8s4 certs.d]# mkdir reg.westos.org

[root@k8s4 certs.d]# cd reg.westos.org/

[root@k8s4 reg.westos.org]# cp /data/certs/westos.org.crt .

[root@k8s4 reg.westos.org]# ls

westos.org.crt

[root@k8s4 reg.westos.org]# pwd

/etc/docker/certs.d/reg.westos.org
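The directory layout matters here: Docker looks for CA certificates under `certs.d/<registry hostname>/`. A small sketch that creates the layout and copies the certificate in one step; the function name `install_registry_ca` is hypothetical, and the root directory is a parameter so the same helper could serve the containerd nodes in section 4.2.

```shell
# Hypothetical helper: copy a CA certificate into <certs_root>/<registry_host>/.
# Docker reads *.crt files in that directory as trusted CAs for the registry.
install_registry_ca() {
    certs_root="$1"
    registry_host="$2"
    cert_file="$3"
    mkdir -p "$certs_root/$registry_host" &&
        cp "$cert_file" "$certs_root/$registry_host/"
}
```

On k8s4 the call would be `install_registry_ca /etc/docker/certs.d reg.westos.org /data/certs/westos.org.crt`.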

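Error 2: denied: requested access to the resource is denied

- Pulling from a public project can be anonymous, but pushing requires authentication, so log in to the Harbor registry from the terminal first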

[root@k8s4 reg.westos.org]# docker push reg.westos.org/k8s/etcd:3.5.1-0

The push refers to repository [reg.westos.org/k8s/etcd]

62ae031121b1: Preparing

664dd6f2834b: Preparing

d80003ff5706: Preparing

b6e8c573c18d: Preparing

6d75f23be3dd: Preparing

denied: requested access to the resource is denied

Fix: log in to the Harbor registry with docker login

[root@k8s4 reg.westos.org]# docker login reg.westos.org

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@k8s4 reg.westos.org]# cat /root/.docker/config.json

{
    "auths": {
        "reg.westos.org": {
            "auth": "YWRtaW46d2VzdG9z"
        }
    },
    "HttpHeaders": {
        "User-Agent": "Docker-Client/19.03.15 (linux)"
    }
}
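The login warning about unencrypted storage is literal: the `auth` value in `config.json` is only the base64 encoding of `username:password`. A short sketch that reproduces it with the credentials used in this walkthrough; the function name `encode_auth` is hypothetical.

```shell
# The "auth" value is base64("username:password"), not an encrypted secret.
encode_auth() {
    printf '%s:%s' "$1" "$2" | base64
}

encode_auth admin westos   # prints YWRtaW46d2VzdG9z
```

Anyone who can read `/root/.docker/config.json` can therefore recover the password, which is why the warning suggests a credential helper.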

3.9 Push all Kubernetes images to the Harbor registry (continued)

[root@k8s4 ~]# docker images | grep westos | awk '{system("docker push "$1":"$2"")}'

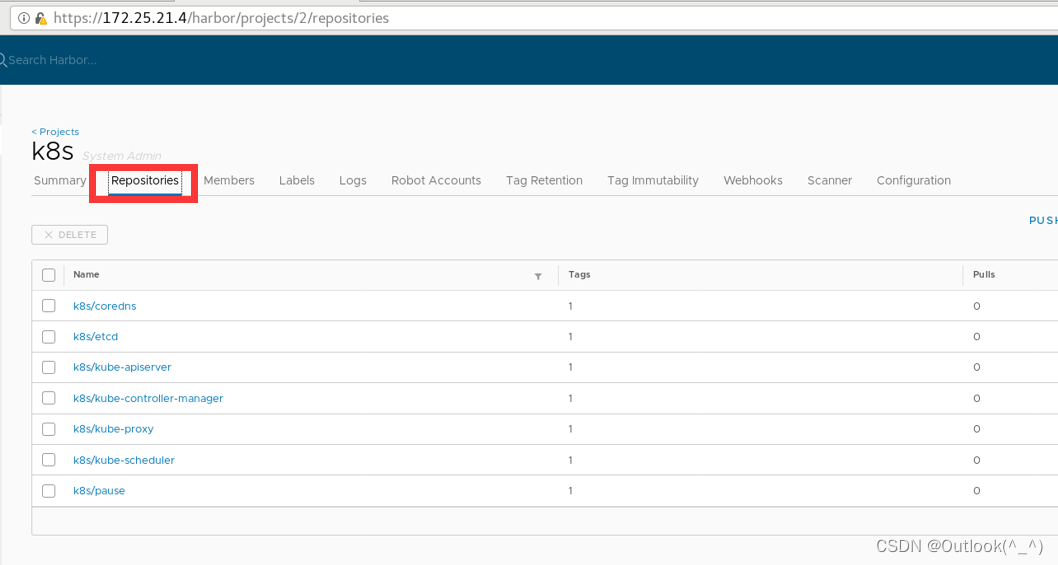

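- The upload succeeds.

4. Connect containerd to the Harbor registry

4.1 Edit the containerd configuration file on the Kubernetes nodes

- On every Kubernetes node, edit containerd's configuration file config.toml

- Change the address the sandbox (pause) image is pulled from

- Set the path to the registry certificates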

[root@k8s1 ~]# cd /etc/containerd/

[root@k8s1 containerd]# ls

config.toml

[root@k8s1 containerd]# vim config.toml

sandbox_image = "reg.westos.org/k8s/pause:3.6"

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = "/etc/containerd/certs.d"

[root@k8s2 ~]# cd /etc/containerd/

[root@k8s2 containerd]# ls

certs.d config.toml

[root@k8s2 containerd]# vim config.toml

sandbox_image = "reg.westos.org/k8s/pause:3.6"

config_path = "/etc/containerd/certs.d"

[root@k8s3 ~]# vim /etc/containerd/config.toml

sandbox_image = "reg.westos.org/k8s/pause:3.6"

config_path = "/etc/containerd/certs.d"

4.2 Send the Harbor certificate to the Kubernetes nodes

[root@k8s1 containerd]# pwd

/etc/containerd

[root@k8s1 containerd]# mkdir certs.d

[root@k8s1 containerd]# cd certs.d/

[root@k8s1 certs.d]# mkdir reg.westos.org

[root@k8s1 certs.d]# cd reg.westos.org/

[root@k8s1 reg.westos.org]# scp k8s4:/data/certs/westos.org.crt .

root@k8s4's password:

westos.org.crt 100% 2155 2.1MB/s 00:00

[root@k8s1 reg.westos.org]# ls

westos.org.crt

[root@k8s1 containerd]# pwd

/etc/containerd

[root@k8s1 containerd]# scp -r certs.d/ k8s2:/etc/containerd

westos.org.crt 100% 2155 2.8MB/s 00:00

[root@k8s1 containerd]# scp -r certs.d/ k8s3:/etc/containerd

westos.org.crt 100% 2155 2.4MB/s 00:00

4.3 Prepare name resolution on the cluster nodes

[root@k8s1 reg.westos.org]# vim /etc/hosts

172.25.21.4 k8s4 reg.westos.org

[root@k8s2 reg.westos.org]# vim /etc/hosts

172.25.21.4 k8s4 reg.westos.org

[root@k8s3 reg.westos.org]# vim /etc/hosts

172.25.21.4 k8s4 reg.westos.org

4.4 Restart containerd

[root@k8s1 ~]# systemctl restart containerd.service

[root@k8s2 ~]# systemctl restart containerd.service

[root@k8s3 ~]# systemctl restart containerd.service

4.5 Verify the connection

[root@k8s1 containerd]# crictl pull reg.westos.org/k8s/pause:3.6

Image is up to date for sha256:6270bb605e12e581514ada5fd5b3216f727db55dc87d5889c790e4c760683fee

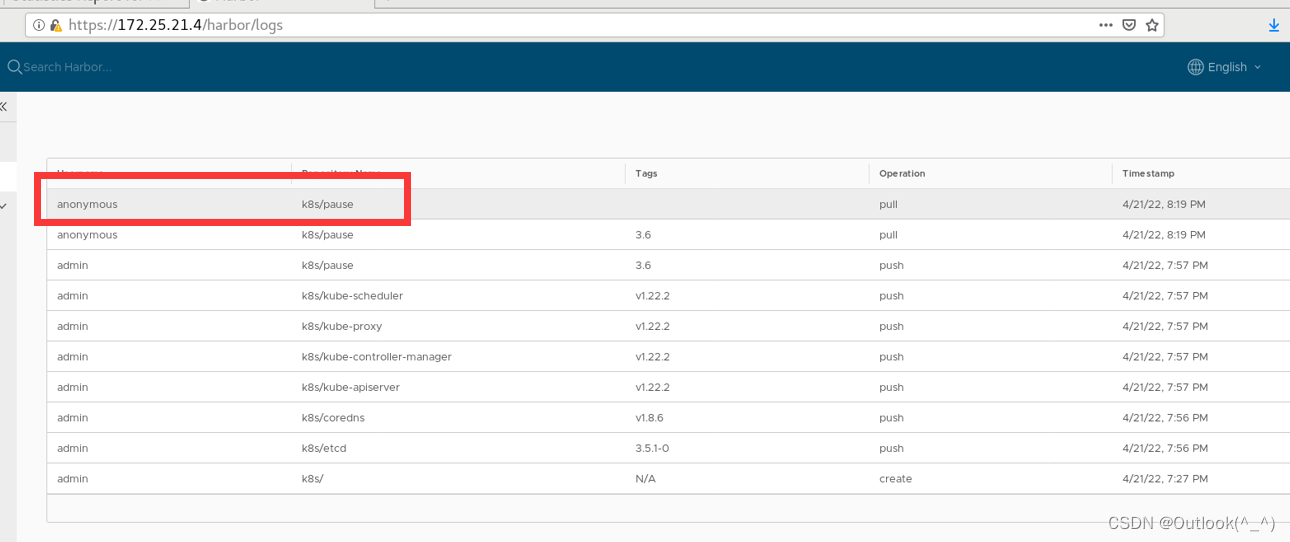

- The Harbor registry logs record the pull

5. Bootstrap the cluster with kubeadm

5.1 Edit the init configuration file

[root@k8s1 ~]# vim kubeadm-init.yaml

---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "172.25.21.100:6443"   # this setting can be ignored here
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: reg.westos.org/k8s   # points to the private registry
kind: ClusterConfiguration
kubernetesVersion: 1.22.1
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}

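5.2 Pull the required images with kubeadm

Error 3: the private registry does not contain the required image version (kubeadm-init.yaml sets kubernetesVersion to 1.22.1, but only the v1.22.2 images were pushed in section 3.9)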

[root@k8s1 ~]# kubeadm config images pull --config kubeadm-init.yaml

failed to pull image "reg.westos.org/k8s/kube-apiserver:v1.22.1": output: time="2022-04-21T20:24:15+08:00" level=fatal msg="pulling image: rpc error: code = NotFound desc = failed to pull and unpack image \"reg.westos.org/k8s/kube-apiserver:v1.22.1\": failed to resolve reference \"reg.westos.org/k8s/kube-apiserver:v1.22.1\": reg.westos.org/k8s/kube-apiserver:v1.22.1: not found"

, error: exit status 1

To see the stack trace of this error execute with --v=5 or higher

Fix

- On the Harbor host, use docker to pull the versions named in the error message from the Alibaba Cloud mirror

[root@k8s4 ~]# docker pull registry.aliyuncs.com/google_containers/pause:3.5

[root@k8s4 ~]# docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.1

[root@k8s4 ~]# docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.22.1

[root@k8s4 ~]# docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.22.1

[root@k8s4 ~]# docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.22.1

[root@k8s4 ~]# docker pull registry.aliyuncs.com/google_containers/etcd:3.5.0-0

[root@k8s4 ~]# docker pull registry.aliyuncs.com/google_containers/coredns:v1.8.4

- Then retag them into the k8s project of the private registry

[root@k8s4 ~]# docker tag registry.aliyuncs.com/google_containers/pause:3.5 reg.westos.org/k8s/pause:3.5

[root@k8s4 ~]# docker tag registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.1 reg.westos.org/k8s/kube-apiserver:v1.22.1

[root@k8s4 ~]# docker tag registry.aliyuncs.com/google_containers/kube-controller-manager:v1.22.1 reg.westos.org/k8s/kube-controller-manager:v1.22.1

[root@k8s4 ~]# docker tag registry.aliyuncs.com/google_containers/kube-scheduler:v1.22.1 reg.westos.org/k8s/kube-scheduler:v1.22.1

[root@k8s4 ~]# docker tag registry.aliyuncs.com/google_containers/kube-proxy:v1.22.1 reg.westos.org/k8s/kube-proxy:v1.22.1

[root@k8s4 ~]# docker tag registry.aliyuncs.com/google_containers/etcd:3.5.0-0 reg.westos.org/k8s/etcd:3.5.0-0

[root@k8s4 ~]# docker tag registry.aliyuncs.com/google_containers/coredns:v1.8.4 reg.westos.org/k8s/coredns:v1.8.4

- Push them to Harbor

[root@k8s4 ~]# docker push reg.westos.org/k8s/pause:3.5

The push refers to repository [reg.westos.org/k8s/pause]

dee215ffc666: Pushed

3.5: digest: sha256:2f4b437353f90e646504ec8317dacd6123e931152674628289c990a7a05790b0 size: 526

[root@k8s4 ~]# docker push reg.westos.org/k8s/kube-apiserver:v1.22.1

The push refers to repository [reg.westos.org/k8s/kube-apiserver]

09a0fcc34bc8: Pushed

71204d686e50: Pushed

07363fa84210: Pushed

v1.22.1: digest: sha256:d61567706f42ef70e6351e2fd5637e69bcef6d487442fbfa9d1fee15e694faa8 size: 949

[root@k8s4 ~]# docker push reg.westos.org/k8s/kube-controller-manager:v1.22.1

[root@k8s4 ~]# docker push reg.westos.org/k8s/kube-scheduler:v1.22.1

[root@k8s4 ~]# docker push reg.westos.org/k8s/kube-proxy:v1.22.1

[root@k8s4 ~]# docker push reg.westos.org/k8s/etcd:3.5.0-0

[root@k8s4 ~]# docker push reg.westos.org/k8s/coredns:v1.8.4
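The pull, tag and push commands above follow one pattern per image, so they can be generated from the reference that kubeadm failed to find. A sketch, not the author's method: the variable and function names are hypothetical, and the commands are only printed so they can be checked before running them on k8s4.

```shell
UPSTREAM="registry.aliyuncs.com/google_containers"
PRIVATE="reg.westos.org/k8s"

# Print the pull/tag/push commands needed to mirror one private reference.
mirror_cmds() {
    name_tag="${1##*/}"   # e.g. kube-apiserver:v1.22.1
    printf 'docker pull %s/%s\n' "$UPSTREAM" "$name_tag"
    printf 'docker tag %s/%s %s/%s\n' "$UPSTREAM" "$name_tag" "$PRIVATE" "$name_tag"
    printf 'docker push %s/%s\n' "$PRIVATE" "$name_tag"
}

mirror_cmds reg.westos.org/k8s/kube-apiserver:v1.22.1
```

The full list of references that kubeadm expects can be obtained on k8s1 with `kubeadm config images list --config kubeadm-init.yaml` and fed to `mirror_cmds` one line at a time.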

5.2 Pull the required images with kubeadm (continued)

This time the pull succeeds.

[root@k8s1 ~]# kubeadm config images pull --config kubeadm-init.yaml

[config/images] Pulled reg.westos.org/k8s/kube-apiserver:v1.22.1

[config/images] Pulled reg.westos.org/k8s/kube-controller-manager:v1.22.1

[config/images] Pulled reg.westos.org/k8s/kube-scheduler:v1.22.1

[config/images] Pulled reg.westos.org/k8s/kube-proxy:v1.22.1

[config/images] Pulled reg.westos.org/k8s/pause:3.5

[config/images] Pulled reg.westos.org/k8s/etcd:3.5.0-0

[config/images] Pulled reg.westos.org/k8s/coredns:v1.8.4

5.3 Initialize again

- Save the join command printed by kubeadm init; it is needed in the next step

[root@k8s1 ~]# kubeadm init --config kubeadm-init.yaml --upload-certs

5.4 Join the nodes to the cluster

[root@k8s2 ~]# kubeadm join 172.25.21.100:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:31b8b3c4fda3fb790bd7d6bb3cca2dd0e6b6870eec9842a201493b0a78e45aee --control-plane --certificate-key 03fc498033a4f8e66cc8a74dbfdac7d8f40908d3fa7dd6b8f3a3014ac5aee3c4

[root@k8s3 ~]# kubeadm join 172.25.21.100:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:31b8b3c4fda3fb790bd7d6bb3cca2dd0e6b6870eec9842a201493b0a78e45aee --control-plane --certificate-key 03fc498033a4f8e66cc8a74dbfdac7d8f40908d3fa7dd6b8f3a3014ac5aee3c4

5.5 Verify the cluster

[root@k8s1 ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-bdc44d9f-fgm8h 1/1 Running 0 8m15s

kube-system coredns-bdc44d9f-zr4mf 1/1 Running 0 8m15s

kube-system etcd-k8s1 1/1 Running 0 8m35s

kube-system etcd-k8s2 1/1 Running 0 5m49s

kube-system etcd-k8s3 1/1 Running 0 3m44s

kube-system kube-apiserver-k8s1 1/1 Running 0 8m21s

kube-system kube-apiserver-k8s2 1/1 Running 0 5m51s

kube-system kube-apiserver-k8s3 1/1 Running 0 3m45s

kube-system kube-controller-manager-k8s1 1/1 Running 1 (5m40s ago) 8m34s

kube-system kube-controller-manager-k8s2 1/1 Running 0 5m51s

kube-system kube-controller-manager-k8s3 1/1 Running 0 3m45s

kube-system kube-proxy-bnhvg 1/1 Running 0 5m51s

kube-system kube-proxy-gkgxt 1/1 Running 0 8m15s

kube-system kube-proxy-vnhjh 1/1 Running 0 3m45s

kube-system kube-scheduler-k8s1 1/1 Running 1 (5m40s ago) 8m32s

kube-system kube-scheduler-k8s2 1/1 Running 0 5m51s

kube-system kube-scheduler-k8s3 1/1 Running 0 3m45s
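With many pods, scanning the STATUS column by eye is error-prone. A small filter can list only the pods that are not Running. This is a sketch that assumes the column layout shown above (STATUS is the fourth field); the function name `not_running` is hypothetical, and pods in other healthy states such as Completed would also be listed.

```shell
# List pods whose STATUS column (4th field of `kubectl get pod -A`) is not Running.
# Prints "namespace/name status" for each such pod; prints nothing if all are Running.
not_running() {
    awk 'NR > 1 && $4 != "Running" { print $1 "/" $2 " " $4 }'
}
```

On k8s1 it would be used as `kubectl get pod -A | not_running`; empty output means every listed pod is Running.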